Language Experience Changes Audiovisual Perception

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Design

2.3. Materials

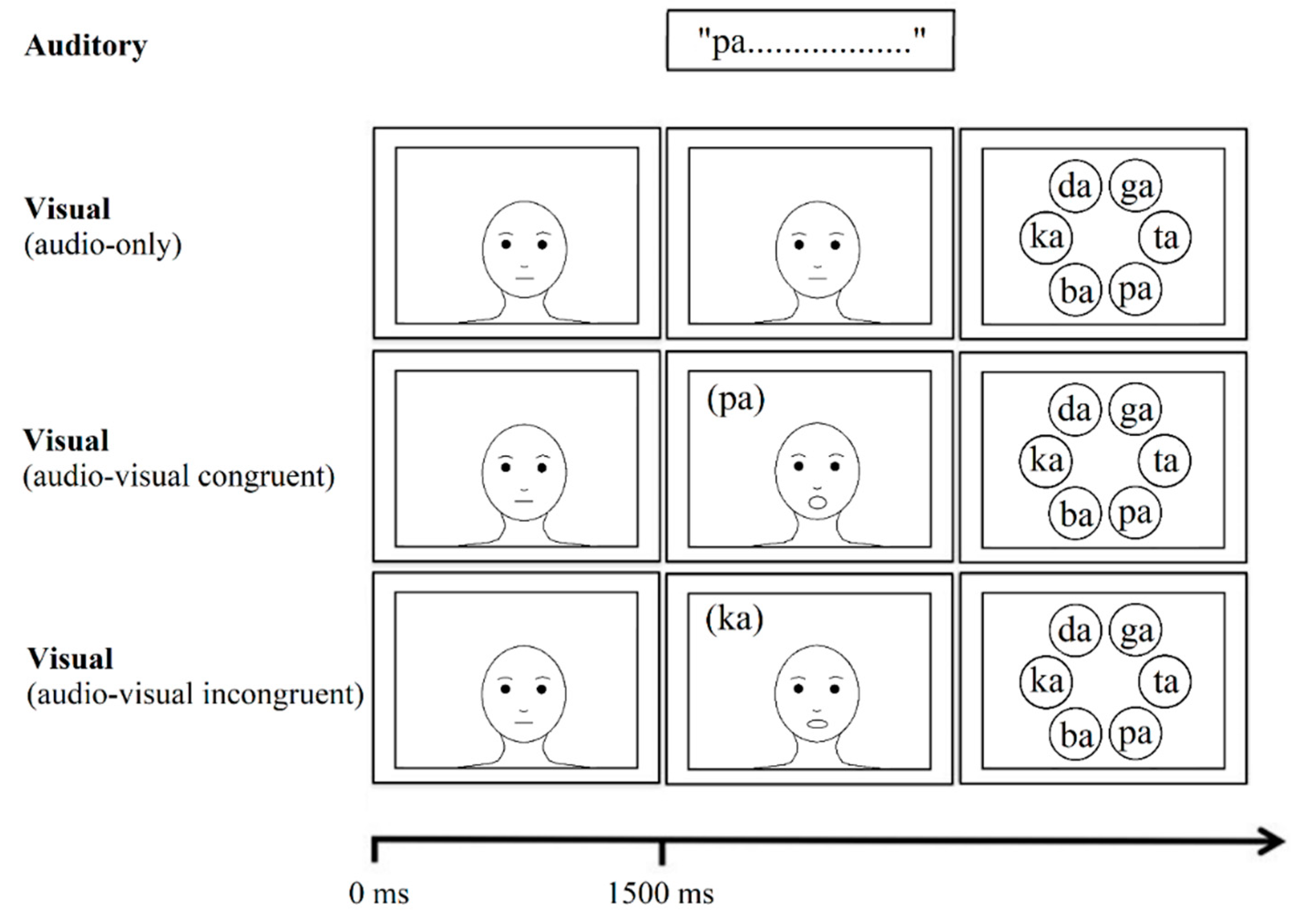

2.4. Procedure

2.5. Data Analysis

2.6. Data Availability

2.7. Use of Human Participants

3. Results

3.1. The McGurk Effect

3.1.1. Quiet Condition

3.1.2. Noise Condition

3.2. Speech Perception Ability

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Marks, L.E. On colored-hearing synesthesia: Cross-modal translations of sensory dimensions. Psychol. Bull. 1975, 82, 303–331.

- Ward, J.; Simner, J.; Auyeung, V. A comparison of lexical-gustatory and grapheme-colour synaesthesia. Cogn. Neuropsychol. 2005, 22, 28–41.

- Burnham, D.; Dodd, B. Auditory-visual speech integration by prelinguistic infants: Perception of an emergent consonant in the McGurk effect. Dev. Psychobiol. 2004, 45, 204–220.

- Maier, J.X.; Neuhoff, J.G.; Logothetis, N.K.; Ghazanfar, A.A. Multisensory integration of looming signals by rhesus monkeys. Neuron 2004, 43, 177–181.

- Frank, R.A.; Byram, J. Taste–smell interactions are tastant and odorant dependent. Chem. Senses 1988, 13, 445–455.

- Reinoso Carvalho, F.; Wang, Q.J.; van Ee, R.; Persoone, D.; Spence, C. “Smooth operator”: Music modulates the perceived creaminess, sweetness, and bitterness of chocolate. Appetite 2017, 108, 383–390.

- Ho, H.N.; Iwai, D.; Yoshikawa, Y.; Watanabe, J.; Nishida, S. Combining colour and temperature: A blue object is more likely to be judged as warm than a red object. Sci. Rep. 2014, 4, 5527.

- Saldaña, H.M.; Rosenblum, L.D. Visual influences on auditory pluck and bow judgments. Percept. Psychophys. 1993, 54, 406–416.

- Ross, L.A.; Saint-Amour, D.; Leavitt, V.M.; Javitt, D.C.; Foxe, J.J. Do You See What I Am Saying? Exploring Visual Enhancement of Speech Comprehension in Noisy Environments. Cereb. Cortex 2006, 17, 1147–1153.

- McGurk, H.; MacDonald, J. Hearing lips and seeing voices. Nature 1976, 264, 746–748.

- Grant, K.W.; Seitz, P.-F. The use of visible speech cues for improving auditory detection of spoken sentences. J. Acoust. Soc. Am. 2000, 108, 1197–1208.

- Fixmer, E.; Hawkins, S. The influence of quality of information on the McGurk effect. In Proceedings of the AVSP’98 International Conference on Auditory-Visual Speech Processing, Terrigal, Australia, 5 December 1998; pp. 27–32.

- Sekiyama, K.; Kanno, I.; Miura, S.; Sugita, Y. Auditory-visual speech perception examined by fMRI and PET. Neurosci. Res. 2003, 47, 277–287.

- Sekiyama, K.; Tohkura, Y. McGurk effect in non-English listeners: Few visual effects for Japanese subjects hearing Japanese syllables of high auditory intelligibility. J. Acoust. Soc. Am. 1991, 90, 1797–1805.

- Chen, Y.; Hazan, V. Language Effects on the Degree of Visual Influence in Audiovisual Speech Perception. In Proceedings of the 16th International Congress of Phonetic Sciences, Saarbrücken, Germany, 6–10 August 2007; pp. 2177–2180.

- Sekiyama, K.; Tohkura, Y. Inter-language differences in the influence of visual cues in speech perception. J. Phonetics 1993, 21, 427–444.

- Sekiyama, K. Differences in auditory-visual speech perception between Japanese and Americans: McGurk effect as a function of incompatibility. J. Acoust. Soc. Jpn. 1994, 15, 143–158.

- Wang, Y.; Behne, D.M.; Jiang, H. Influence of native language phonetic system on audio-visual speech perception. J. Phonetics 2009, 37, 344–356.

- Bialystok, E. Bilingualism in Development; Cambridge University Press: Cambridge, UK, 2001; ISBN 9780511605963.

- Costa, A.; Hernández, M.; Sebastián-Gallés, N. Bilingualism aids conflict resolution: Evidence from the ANT task. Cognition 2008, 106, 59–86.

- Cummins, J. Bilingualism and the development of metalinguistic awareness. J. Cross Cult. Psychol. 1978, 9, 131–149.

- Galambos, S.J.; Goldin-Meadow, S. The effects of learning two languages on levels of metalinguistic awareness. Cognition 1990, 34, 1–56.

- Bialystok, E.; Craik, F.I.M.; Freedman, M. Bilingualism as a protection against the onset of symptoms of dementia. Neuropsychologia 2007, 45, 459–464.

- Alladi, S.; Bak, T.H.; Duggirala, V.; Surampudi, B.; Shailaja, M.; Shukla, A.K.; Chaudhuri, J.R.; Kaul, S. Bilingualism delays age at onset of dementia, independent of education and immigration status. Neurology 2013, 81, 1938–1944.

- Goetz, P.J. The effects of bilingualism on theory of mind development. Biling. Lang. Cogn. 2003, 6, 1–15.

- Fan, S.P.; Liberman, Z.; Keysar, B.; Kinzler, K.D. The exposure advantage: Early exposure to a multilingual environment promotes effective communication. Psychol. Sci. 2015, 26, 1090–1097.

- Bradlow, A.R.; Bent, T. The clear speech effect for non-native listeners. J. Acoust. Soc. Am. 2002, 112, 272–284.

- Mayo, L.H.; Florentine, M.; Buus, S. Age of second-language acquisition and perception of speech in noise. J. Speech Lang. Hear. Res. 1997, 40, 686–693.

- Gollan, T.H.; Montoya, R.I.; Cera, C.; Sandoval, T.C. More use almost always means a smaller frequency effect: Aging, bilingualism, and the weaker links hypothesis. J. Mem. Lang. 2008, 58, 787–814.

- Gollan, T.H.; Slattery, T.J.; Goldenberg, D.; Van Assche, E.; Duyck, W.; Rayner, K. Frequency drives lexical access in reading but not in speaking: The frequency-lag hypothesis. J. Exp. Psychol. Gen. 2011, 140, 186–209.

- Marian, V.; Spivey, M. Competing activation in bilingual language processing: Within- and between-language competition. Biling. Lang. Cogn. 2003, 6, 97–115.

- Thierry, G.; Wu, Y.J. Brain potentials reveal unconscious translation during foreign-language comprehension. Proc. Natl. Acad. Sci. USA 2007, 104, 12530–12535.

- Best, C.T. The emergence of native-language phonological influences in infants: A perceptual assimilation model. In The Development of Speech Perception: The Transition from Speech Sounds to Spoken Words; The MIT Press: Cambridge, MA, USA, 1994; Volume 167, pp. 167–224.

- Kuhl, P.; Williams, K.; Lacerda, F.; Stevens, K.; Lindblom, B. Linguistic experience alters phonetic perception in infants by 6 months of age. Science 1992, 255, 606–608.

- Sebastián-Gallés, N.; Soto-Faraco, S. Online processing of native and non-native phonemic contrasts in early bilinguals. Cognition 1999, 72, 111–123.

- Fennell, C.T.; Byers-Heinlein, K.; Werker, J.F. Using speech sounds to guide word learning: The case of bilingual infants. Child Dev. 2007, 78, 1510–1525.

- Navarra, J.; Soto-Faraco, S. Hearing lips in a second language: Visual articulatory information enables the perception of second language sounds. Psychol. Res. 2007, 71, 4–12.

- Pons, F.; Bosch, L.; Lewkowicz, D.J. Bilingualism modulates infants’ selective attention to the mouth of a talking face. Psychol. Sci. 2015, 26, 490–498.

- Marian, V.; Blumenfeld, H.K.; Kaushanskaya, M. The language experience and proficiency questionnaire (LEAP-Q): Assessing language profiles in bilinguals and multilinguals. J. Speech Lang. Hear. Res. 2007, 50, 940–967.

- Van Wassenhove, V.; Grant, K.W.; Poeppel, D. Visual speech speeds up the neural processing of auditory speech. Proc. Natl. Acad. Sci. USA 2005, 102, 1181–1186.

- Van Wassenhove, V.; Grant, K.W.; Poeppel, D. Temporal window of integration in auditory-visual speech perception. Neuropsychologia 2007, 45, 598–607.

- Alsius, A.; Navarra, J.; Campbell, R.; Soto-Faraco, S. Audiovisual integration of speech falters under high attention demands. Curr. Biol. 2005, 15, 839–843.

- Bialystok, E. Coordination of executive functions in monolingual and bilingual children. J. Exp. Child Psychol. 2011, 110, 461–468.

- Magnotti, J.F.; Beauchamp, M.S. The noisy encoding of disparity model of the McGurk effect. Psychon. Bull. Rev. 2015, 22, 701–709.

- Basu Mallick, D.; Magnotti, J.F.; Beauchamp, M.S. Variability and stability in the McGurk effect: Contributions of participants, stimuli, time, and response type. Psychon. Bull. Rev. 2015, 22, 1299–1307.

- Nath, A.R.; Beauchamp, M.S. A neural basis for interindividual differences in the McGurk effect, a multisensory speech illusion. Neuroimage 2012, 59, 781–787.

- Stevenson, R.A.; Zemtsov, R.K.; Wallace, M.T. Individual differences in the multisensory temporal binding window predict susceptibility to audiovisual illusions. J. Exp. Psychol. Hum. Percept. Perform. 2012, 38, 1517–1529.

- Mongillo, E.A.; Irwin, J.R.; Whalen, D.H.; Klaiman, C.; Carter, A.S.; Schultz, R.T. Audiovisual processing in children with and without autism spectrum disorders. J. Autism Dev. Disord. 2008, 38, 1349–1358.

- Irwin, J.R.; Tornatore, L.A.; Brancazio, L.; Whalen, D.H. Can children with autism spectrum disorders “hear” a speaking face? Child Dev. 2011, 82, 1397–1403.

- Strand, J.; Cooperman, A.; Rowe, J.; Simenstad, A. Individual differences in susceptibility to the McGurk effect: Links with lipreading and detecting audiovisual incongruity. J. Speech Lang. Hear. Res. 2014, 57, 2322–2331.

- Sekiyama, K.; Soshi, T.; Sakamoto, S. Enhanced audiovisual integration with aging in speech perception: A heightened McGurk effect in older adults. Front. Psychol. 2014, 5, 323.

- Walker, S.; Bruce, V.; O’Malley, C. Facial identity and facial speech processing: Familiar faces and voices in the McGurk effect. Percept. Psychophys. 1995, 57, 1124–1133.

- Bovo, R.; Ciorba, A.; Prosser, S.; Martini, A. The McGurk phenomenon in Italian listeners. Acta Otorhinolaryngol. Ital. 2009, 29, 203–208.

- Fuster-Duran, A. Perception of conflicting audio-visual speech: An examination across Spanish and German. In Speechreading by Humans and Machines; Springer: Berlin/Heidelberg, Germany, 1996; pp. 135–143.

- Sekiyama, K. Cultural and linguistic factors in audiovisual speech processing: The McGurk effect in Chinese subjects. Percept. Psychophys. 1997, 59, 73–80.

- Aloufy, S.; Lapidot, M.; Myslobodsky, M. Differences in susceptibility to the “blending illusion” among native Hebrew and English speakers. Brain Lang. 1996, 53, 51–57.

- Price, C.; Thierry, G.; Griffiths, T. Speech-specific auditory processing: Where is it? Trends Cogn. Sci. 2005, 9, 271–276.

- Magnotti, J.F.; Beauchamp, M.S. A causal inference model explains perception of the McGurk effect and other incongruent audiovisual speech. PLoS Comput. Biol. 2017, 13, e1005229.

- Navarra, J.; Alsius, A.; Velasco, I.; Soto-Faraco, S.; Spence, C. Perception of audiovisual speech synchrony for native and non-native language. Brain Res. 2010, 1323, 84–93.

- Shams, L.; Kamitani, Y.; Shimojo, S. Visual illusion induced by sound. Cogn. Brain Res. 2002, 14, 147–152.

- Grosjean, F. Bilingual: Life and Reality; Harvard University Press: Cambridge, MA, USA, 2010; ISBN 9780674048874.

- Maguire, E.A.; Gadian, D.G.; Johnsrude, I.S.; Good, C.D.; Ashburner, J.; Frackowiak, R.S.J.; Frith, C.D. Navigation-related structural change in the hippocampi of taxi drivers. Proc. Natl. Acad. Sci. USA 2000, 97, 4398–4403.

- Green, C.S.; Bavelier, D. Action video game modifies visual selective attention. Nature 2003, 423, 534–537.

| | Monolinguals | Early Bilinguals | Late Bilinguals | Mono vs. Early | Mono vs. Late | Early vs. Late |
|---|---|---|---|---|---|---|
| N | 17 | 18 | 16 | - | - | - |
| Age | 21.71 (2.42) | 20.44 (2.20) | 21.38 (2.00) | n.s. | n.s. | n.s. |
| English AoA | 0.24 (0.44) | 3.44 (2.04) | 9.07 (1.83) | * | * | * |
| English Proficiency | 9.76 (0.44) | 9.31 (0.87) | 8.62 (0.93) | n.s. | * | * |
| Korean AoA | - | 0.94 (1.21) | 0.47 (1.06) | - | - | n.s. |
| Korean Proficiency | - | 8.39 (1.83) | 9.13 (0.85) | - | - | n.s. |

| | Monolinguals (N = 17) | Early Bilinguals (N = 18) | Late Bilinguals (N = 16) |
|---|---|---|---|
| Audio-Only | | | |
| Quiet | 0.99 (0.02) | 0.99 (0.02) | 0.97 (0.04) |
| Noise | 0.31 (0.19) | 0.22 (0.15) | 0.15 (0.14) |
| Audio-Visual | | | |
| Quiet | 1.00 (0.00) | 0.99 (0.03) | 0.99 (0.02) |
| Noise | 0.79 (0.17) | 0.75 (0.15) | 0.70 (0.14) |
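The table reports group-level scores for audio-only and audio-visual presentation in quiet and in noise. As a minimal sketch, and not the authors' analysis, the raw visual gain for each group can be read off the table as the audio-visual mean minus the audio-only mean. It assumes the cells are mean proportions correct with standard deviations in parentheses (the table carries no caption); the `MEANS` dictionary and the `visual_gain` helper are hypothetical names introduced here.

```python
# Group means transcribed from the table above; standard deviations are omitted.
# Assumption: each cell is a mean proportion correct (the table has no caption).
MEANS = {
    "Monolinguals": {
        "audio_only": {"quiet": 0.99, "noise": 0.31},
        "audio_visual": {"quiet": 1.00, "noise": 0.79},
    },
    "Early Bilinguals": {
        "audio_only": {"quiet": 0.99, "noise": 0.22},
        "audio_visual": {"quiet": 0.99, "noise": 0.75},
    },
    "Late Bilinguals": {
        "audio_only": {"quiet": 0.97, "noise": 0.15},
        "audio_visual": {"quiet": 0.99, "noise": 0.70},
    },
}


def visual_gain(group: str, condition: str) -> float:
    """Hypothetical helper: audio-visual minus audio-only mean, rounded to 2 decimals.

    Rounding removes floating-point noise such as 0.48000000000000004.
    """
    cells = MEANS[group]
    return round(cells["audio_visual"][condition] - cells["audio_only"][condition], 2)


if __name__ == "__main__":
    for group in MEANS:
        quiet = visual_gain(group, "quiet")
        noise = visual_gain(group, "noise")
        print(f"{group}: quiet {quiet:+.2f}, noise {noise:+.2f}")
```

A difference of group means says nothing about its reliability; testing whether gains differ between groups would need participant-level data. Because audio-only accuracy in noise differs across groups, a headroom-normalized gain, (AV − A)/(1 − A), is sometimes used instead, and it can rank groups differently from the raw difference computed here.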

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Marian, V.; Hayakawa, S.; Lam, T.Q.; Schroeder, S.R. Language Experience Changes Audiovisual Perception. Brain Sci. 2018, 8, 85. https://doi.org/10.3390/brainsci8050085
