How Scientists View Vaccine Hesitancy

Abstract

1. Introduction

2. Literature

2.1. Vaccine Hesitancy: Causes and Consequences

2.2. Overcoming Vaccine Hesitancy: Policy Approaches and Solutions

2.3. Scientists’ Roles at the Interface of Science and Society for Vaccine Hesitancy

3. Materials and Methods

4. Results

4.1. Part I: Controversy, Confusion and Vaccination Behavior

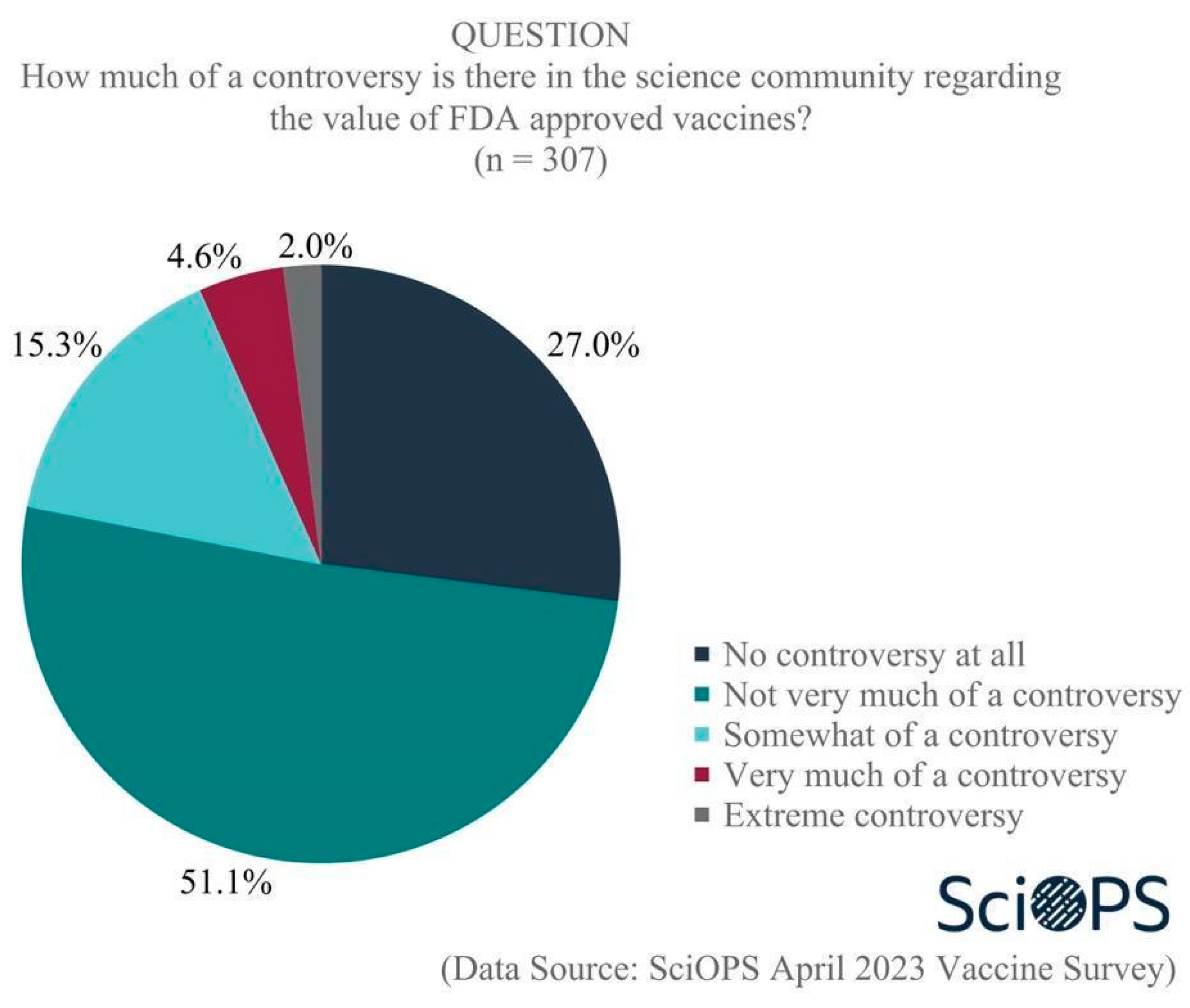

4.1.1. Science Controversy and Public Confusion

4.1.2. Causes of Public Confusion about Vaccines and Vaccination

4.1.3. In What Ways Do Scientists’ Behaviors Reflect Controversy and Confusion?

1. There was no evidence that the vaccine offered protection for me, as I had recently recovered from the virus it supposedly protected against.

2. The virus was not particularly dangerous.

3. There was no evidence that the vaccine did anything to slow the spread of infection (and some evidence that it somehow increased infection rates).

4. There was ample anecdotal evidence that the vaccine was much more harmful than health authorities admitted.

5. Given 4, there was ample reason to believe that everything else official sources said about the vaccine, and the virus, was misleading and potentially false.

4.2. Part II: Policy and Institutions: Trust, Vaccination Strategies and Regulation

4.2.1. US Public Trust in Institutions and Scientists’ Opinions

4.2.2. Scientists’ Beliefs about Vaccination Strategies and Approaches

- Almost two-thirds (63.8%) indicated that the statement “mandatory immunization laws are critical for protecting public health” was consistent with their personal beliefs, and

- More than three-quarters (77%) felt that the statement “compulsory immunization laws unnecessarily limit personal freedoms” was not consistent with their beliefs.

4.2.3. Risk Benefit Tradeoffs for Expediting FDA Approvals

4.3. Part III: Scientists’ Communication Roles and Ethics

4.3.1. Scientists’ Roles

4.3.2. Ethics and Vaccine Communication

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Construct | Variable | (n) | % |
|---|---|---|---|
| Age 1 | 31 to 45 | (75) | 23.8 |
| | 46 to 60 | (110) | 34.8 |
| | 61 to 75 | (91) | 28.7 |
| | Above 75 | (22) | 6.9 |
| Gender | Female | (126) | 39.8 |
| | Male | (190) | 60.2 |
| Field | Biology | (219) | 69.4 |
| | Public Health | (97) | 30.6 |
| Rank | Full Professor | (132) | 41.6 |
| | Associate Professor | (71) | 22.4 |
| | Assistant Professor | (68) | 21.6 |
| | Non-tenure Track faculty | (46) | 14.4 |
| Race 2 | White | (261) | 82.6 |
| | Non-white | (38) | 12.0 |
| | Not reported | (17) | 5.4 |
| University Region 3 | Northeast | (73) | 23.0 |
| | Midwest | (63) | 19.9 |
| | South | (119) | 37.8 |
| | West | (61) | 19.3 |
| State Politics 4 | Blue state | (175) | 55.3 |
| | Red state | (141) | 44.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Welch, E.W.; Johnson, T.P.; Chen, T.; Ma, J.; Islam, S.; Michalegko, L.F.; Caldarulo, M.; Frandell, A. How Scientists View Vaccine Hesitancy. Vaccines 2023, 11, 1208. https://doi.org/10.3390/vaccines11071208