Detection of Minor and Major Depression through Voice as a Biomarker Using Machine Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants and Study Design

2.2. Demographics and Antipsychotics

2.3. Questionnaires

2.4. Voice Feature Extraction

2.5. Statistical Analysis

3. Results

3.1. Comparison of Demographics and Clinical Characteristics According to Depressive Episodes

3.2. Clinical Characteristics

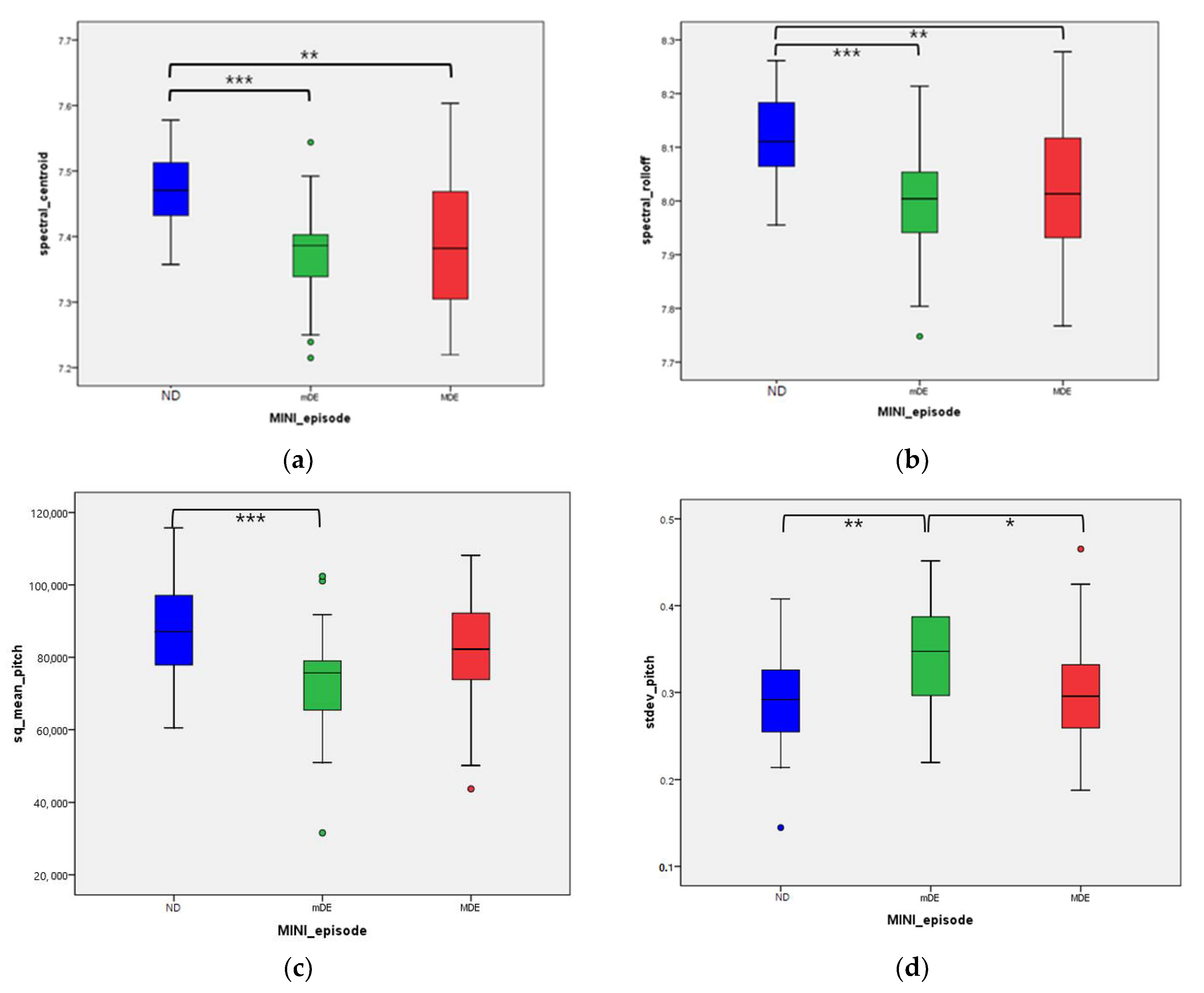

3.3. Voice Features

3.4. Prediction of Depressive Episode through Machine Learning

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

| | | ND ‡ | mDE | MDE | p Value | Post Hoc Test |
|---|---|---|---|---|---|---|
| N | | 33 | 26 | 34 | | |
| Sex | M | 8 (24.2%) | 8 (30.8%) | 7 (20.6%) | 0.689 | |
| | F | 25 (75.8%) | 18 (69.2%) | 27 (79.4%) | | |
| Age * | | 28.12 ± 4.827 | 34.58 ± 11.497 | 29.68 ± 9.914 | 0.022 | |
| SES | Very low | 0 (0%) | 2 (7.7%) | 1 (2.9%) | 0.397 | |
| | Low | 10 (30.3%) | 5 (19.2%) | 7 (20.6%) | | |
| | Middle | 18 (54.5%) | 15 (57.7%) | 18 (52.9%) | | |
| | High | 5 (15.2%) | 3 (11.5%) | 4 (11.8%) | | |
| | Very high | 0 (0%) | 1 (3.8%) | 4 (11.8%) | | |
| BMI *** | | 21.356 ± 1.861 | 23.470 ± 4.575 | 25.620 ± 5.396 | <0.001 | 1 < 3 ** |
| Drugs taken for non-psychiatric disorders * | Yes | 2 (6.1%) | 8 (30.8%) | 4 (11.8%) | 0.025 | 1 ≠ 2 * |
| | No | 31 (93.9%) | 18 (69.2%) | 30 (88.2%) | | |
| Antipsychotics *** | Yes | 0 (0%) | 23 (88.5%) | 29 (85.3%) | <0.001 | 1 ≠ 2 ***, 1 ≠ 3 *** |
| | No | 33 (100%) | 3 (11.5%) | 5 (14.7%) | | |
| Antipsychotics dose | | | 5.142 ± 4.589 | 8.254 ± 7.893 | 0.100 | |
| Diagnosis by MINI *** | No psychiatric disorder | 26 (78.8%) | 0 (0%) | 0 (0%) | 0.000 | |
| | Major depressive disorder | 5 (15.2%) | 9 (34.6%) | 1 (2.9%) | | |
| | Bipolar disorder | 2 (6.1%) | 17 (65.4%) | 33 (97.1%) | | |
| | Anxiety disorders † | 2 (6.1%) | 2 (7.7%) | 13 (38.2%) | | |
| | Obsessive compulsive disorder | 1 (3.0%) | 1 (3.8%) | 3 (8.8%) | | |
| | Alcohol use disorder | 2 (6.1%) | 1 (3.8%) | 3 (8.8%) | | |

| | | ND | mDE | MDE | p Value | Post Hoc Test |
|---|---|---|---|---|---|---|
| N | | 33 | 26 | 34 | | |
| HRDS *** | mean | 3.879 | 13.346 | 18.706 | <0.001 | 1 < 2 < 3 *** |
| | SD | 2.902 | 4.127 | 4.414 | | |
| PHQ *** | mean | 1.576 | 11.615 | 16.294 | <0.001 | 1 < 2, 3 ***, 2 < 3 * |
| | SD | 2.332 | 5.947 | 6.279 | | |
| BAI *** | mean | 1.394 | 20.385 | 25.206 | <0.001 | 1 < 2 ***, 1 < 3 *** |
| | SD | 2.609 | 16.346 | 17.562 | | |
| BIS | mean | 60.909 | 65.615 | 62.088 | 0.127 | |
| | SD | 5.598 | 8.750 | 8.155 | | |

| | | ND | mDE | MDE | p Value | M–W Test | B–H Test | Adjusted p Value # | J–T Test † |
|---|---|---|---|---|---|---|---|---|---|
| N | | 33 | 26 | 34 | | | | | |
| log_glottal_OP * | mean | 0.890 | 0.846 | 0.925 | 0.037 | 2 < 3 * | | 0.051 | 0.381 |
| | SD | 0.105 | 0.096 | 0.137 | | | | | |
| log_glottal_CP | mean | 0.710 | 0.692 | 0.759 | 0.094 | | | 0.097 | 0.069 |
| | SD | 0.124 | 0.110 | 0.132 | | | | | |
| log_glottal_C | mean | −0.675 | −0.694 | −0.627 | 0.094 | | | 0.097 | 0.068 |
| | SD | 0.124 | 0.110 | 0.132 | | | | | |
| log_spectral_time | mean | 2.345 | 2.583 | 2.457 | 0.191 | | | 0.461 | 0.343 |
| | SD | 0.463 | 0.529 | 0.497 | | | | | |
| spectral_centroid *** | mean | 7.471 | 7.375 | 7.398 | <0.001 | 1 > 2 ***, 1 > 3 ** | 1 > 2 ***, 1 > 3 ** | 0.008 ‡‡ | <0.001 ††† |
| | SD | 0.058 | 0.075 | 0.105 | | | | | |
| spectral_bandwidth | mean | 7.444 | 7.422 | 7.430 | 0.343 | | | 0.968 | 0.384 |
| | SD | 0.050 | 0.057 | 0.069 | | | | | |
| spectral_roll-off *** | mean | 8.118 | 7.994 | 8.026 | <0.001 | 1 > 2 ***, 1 > 3 ** | 1 > 2 ***, 1 > 3 ** | 0.012 ‡ | 0.001 †† |
| | SD | 0.082 | 0.110 | 0.132 | | | | | |
| spectral_rmse | mean | 4.358 | 4.058 | 4.329 | 0.180 | | | 0.468 | 0.794 |
| | SD | 0.540 | 0.668 | 0.760 | | | | | |
| log_spectral_tempo | mean | 4.771 | 4.779 | 4.772 | 0.093 | | | 0.327 | 0.286 |
| | SD | 0.012 | 0.019 | 0.012 | | | | | |
| formant1 | mean | 6.230 | 6.239 | 6.218 | 0.552 | | | 0.553 | 0.562 |
| | SD | 0.062 | 0.069 | 0.082 | | | | | |
| formant2 | mean | 7.374 | 7.349 | 7.349 | 0.490 | | | 0.304 | 0.221 |
| | SD | 0.085 | 0.095 | 0.101 | | | | | |
| formant3 | mean | 8.043 | 8.022 | 8.026 | 0.526 | | | 0.919 | 0.621 |
| | SD | 0.080 | 0.068 | 0.079 | | | | | |
| formant_BW1 | mean | 42.083 | 39.681 | 44.119 | 0.331 | | | 0.159 | 0.729 |
| | SD | 10.233 | 8.399 | 14.107 | | | | | |
| formant_BW2 * | mean | 180.213 | 201.080 | 198.655 | 0.200 | | | 0.040 ‡ | 0.094 |
| | SD | 53.886 | 50.843 | 45.060 | | | | | |
| sq_formant_BW3 | mean | 50622.267 | 52003.924 | 44566.728 | 0.133 | | | 0.094 | 0.292 |
| | SD | 13671.136 | 18982.013 | 14031.728 | | | | | |
| sq_mean_pitch ** | mean | 87561.420 | 73835.557 | 81997.509 | 0.002 | 1 > 2 ***, 2 < 3 * | 1 > 2 ** | 0.027 ‡ | 0.149 |
| | SD | 12409.867 | 15014.241 | 15820.365 | | | | | |
| stdev_pitch ** | mean | 0.287 | 0.344 | 0.300 | 0.003 | 1 < 2 **, 2 > 3 * | 1 < 2 **, 2 > 3 * | 0.020 ‡ | 0.520 |
| | SD | 0.057 | 0.065 | 0.067 | | | | | |
| mean_magnitude ** | mean | 69.894 | 61.002 | 65.060 | 0.009 | 1 > 2 ** | 1 > 2 * | 0.059 | 0.110 |
| | SD | 11.454 | 11.292 | 9.902 | | | | | |
| sq_stdev_magnitude | mean | 0.787 | 0.748 | 0.852 | 0.140 | | | 0.237 | 0.045 † |
| | SD | 0.146 | 0.252 | 0.215 | | | | | |
| ZCR *** | mean | 0.055 | 0.044 | 0.047 | <0.001 | 1 > 2 ***, 1 < 3 ** | 1 > 2 ***, 1 < 3 *** | <0.001 ‡‡‡ | <0.001 ††† |
| | SD | 0.007 | 0.006 | 0.010 | | | | | |
| voice portion ** | mean | 0.665 | 0.695 | 0.681 | 0.001 | 1 < 2 **, 1 < 3 * | 1 < 2 ** | 0.020 ‡ | 0.021 † |
| | SD | 0.023 | 0.031 | 0.033 | | | | | |

| | | 7:3 LR | 7:3 GNB | 7:3 SVM | 7:3 MLP | 8:2 LR | 8:2 GNB | 8:2 SVM | 8:2 MLP |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy Mean (max) | augmented | 45.2 (53.6) | 48.8 (57.1) | 46.4 (50) | 51.2 (53.6) | 43.2 (57.9) | 43.2 (57.9) | 45.3 (52.6) | 60 (68.4) |
| | selected-augmented | 47.6 (57.1) | 48.8 (57.1) | 40.5 (53.6) | 51.2 (57.1) | 43.2 (63.2) | 43.2 (57.9) | 35.8 (42.1) | 51.6 (57.9) |
| AUC Mean (max) | augmented | 63.4 (68) | 64.7 (70.2) | 60.3 (61) | 59.7 (65.1) | 64.5 (72.1) | 64.5 (73.9) | 61.1 (70.6) | 65.9 (72.1) |
| | selected-augmented | 63.6 (75.7) | 63.6 (72) | 58.8 (66.5) | 62.9 (70.7) | 62 (74.6) | 60.3 (67.5) | 56.8 (64.9) | 62.6 (69.9) |
| Precision (sensitivity) Mean (max) | augmented | 45.1 (55.6) | 49.1 (58) | 47.3 (55) | 51.4 (71.5) | 46.6 (70.9) | 41.6 (60) | 48.7 (68.7) | 65.6 (76.7) |
| | selected-augmented | 57.2 (64.6) | 54 (66.7) | 36.3 (56.3) | 60 (62.6) | 44 (61.3) | 42.3 (58.9) | 34.6 (42.1) | 62.6 (72.2) |
| Recall (specificity) Mean (max) | augmented | 44.8 (55.1) | 49.6 (60.2) | 45.1 (48.6) | 48.5 (55.8) | 43.3 (61.7) | 42.6 (64.2) | 45.6 (52.5) | 66.2 (69.7) |
| | selected-augmented | 46.1 (55.8) | 48.1 (56.7) | 48.4 (64.6) | 54.8 (62.5) | 43.8 (64.6) | 43 (58.1) | 37.7 (44.2) | 93.3 (100) |
| F1 Mean (max) | augmented | 43.1 (50.8) | 46.4 (55.5) | 43.5 (49.4) | 44.3 (52.3) | 42.1 (58.2) | 39.6 (55.6) | 43.4 (49.2) | 58.9 (69.7) |
| | selected-augmented | 45.4 (56.8) | 47.8 (57.5) | 40.7 (64.6) | 49.3 (57.9) | 41 (62.1) | 42.1 (58.3) | 33.1 (35.7) | 58.7 (71.4) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shin, D.; Cho, W.I.; Park, C.H.K.; Rhee, S.J.; Kim, M.J.; Lee, H.; Kim, N.S.; Ahn, Y.M. Detection of Minor and Major Depression through Voice as a Biomarker Using Machine Learning. J. Clin. Med. 2021, 10, 3046. https://doi.org/10.3390/jcm10143046