Comparison of Deep Learning Models for Cervical Vertebral Maturation Stage Classification on Lateral Cephalometric Radiographs

Abstract

1. Introduction

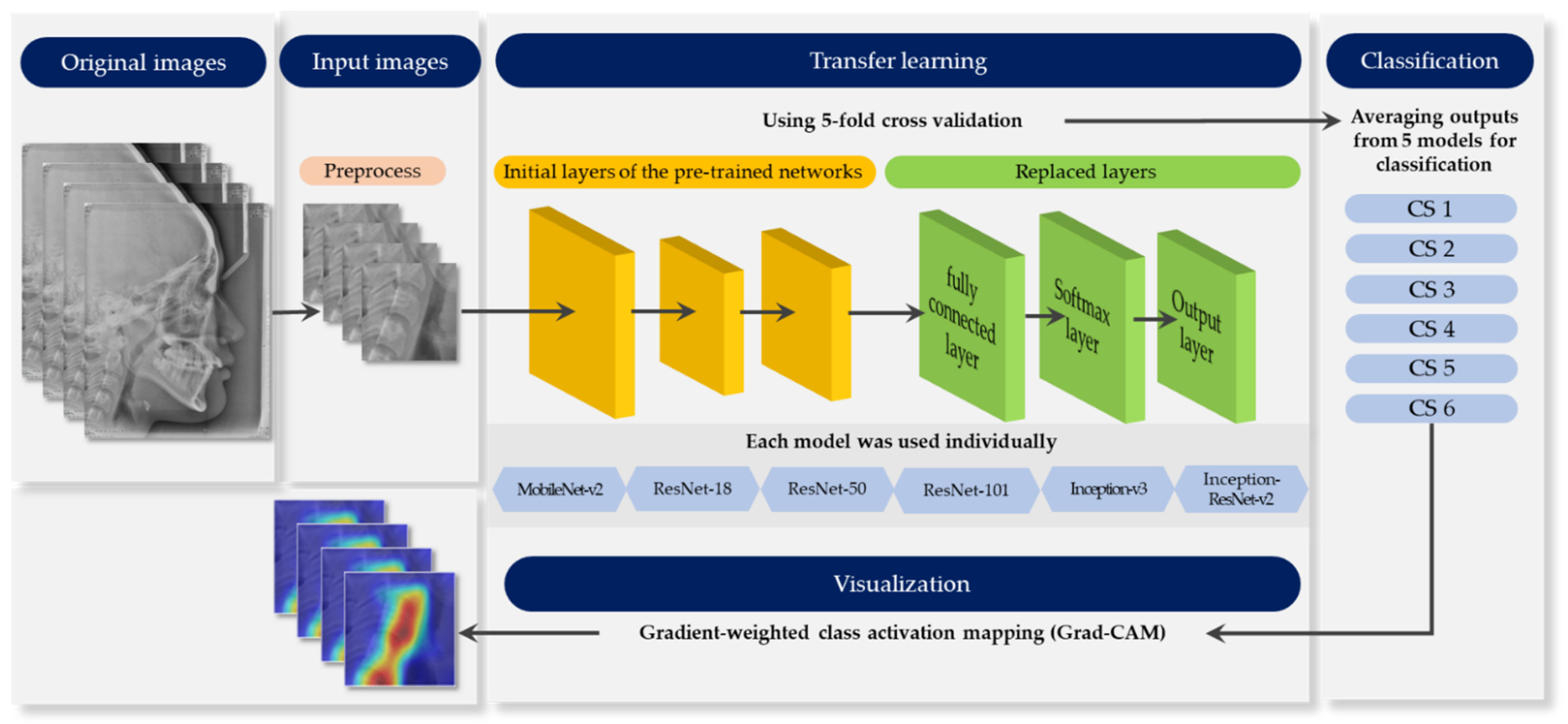

2. Materials and Methods

2.1. Ethics Statement

2.2. Subjects

2.3. Methods

2.3.1. Pre-Process

2.3.2. Pre-Trained Networks

2.3.3. Data Augmentation

2.3.4. Training Configuration

2.3.5. Performance Evaluation

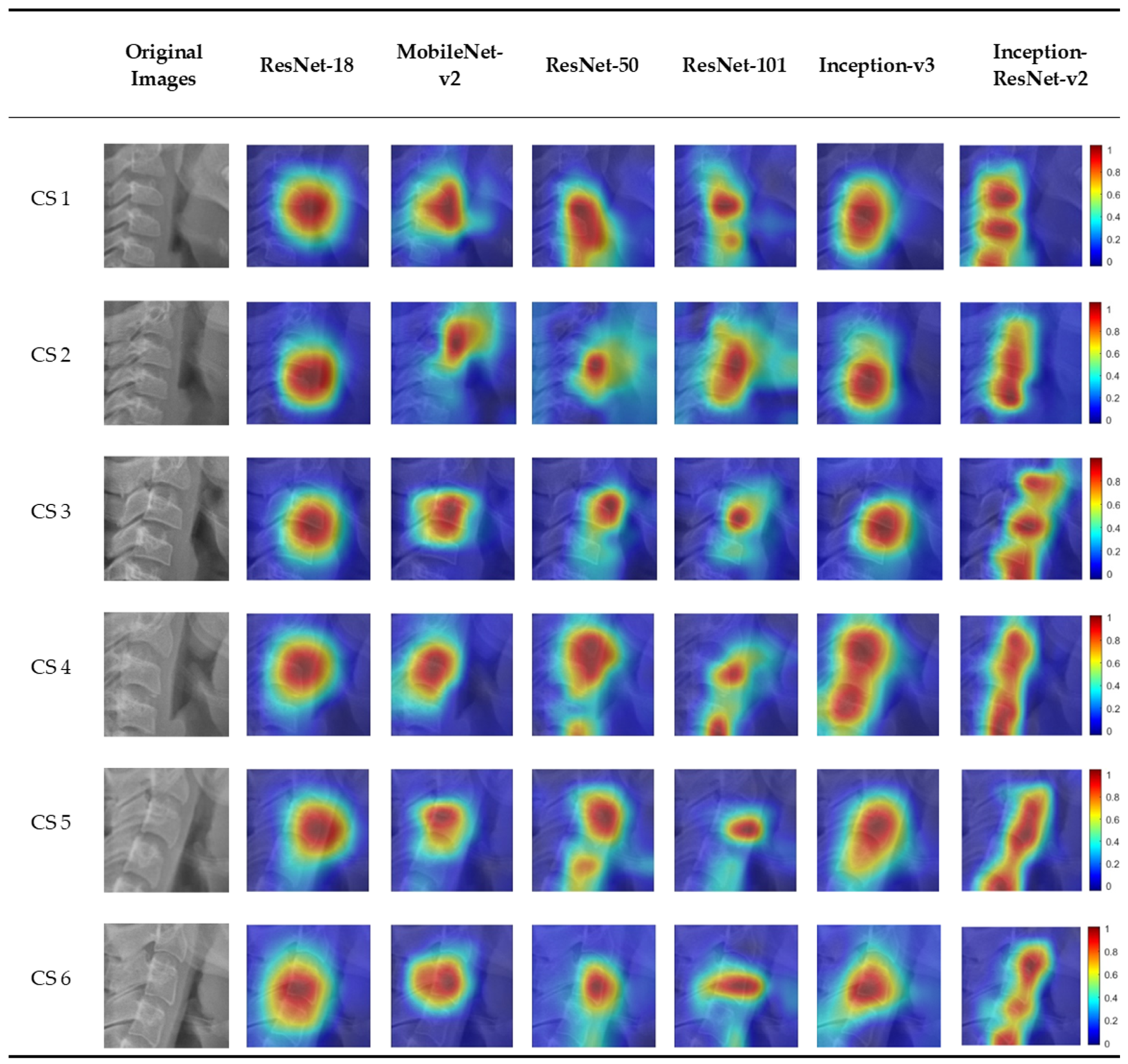

2.3.6. Model Visualization

3. Results

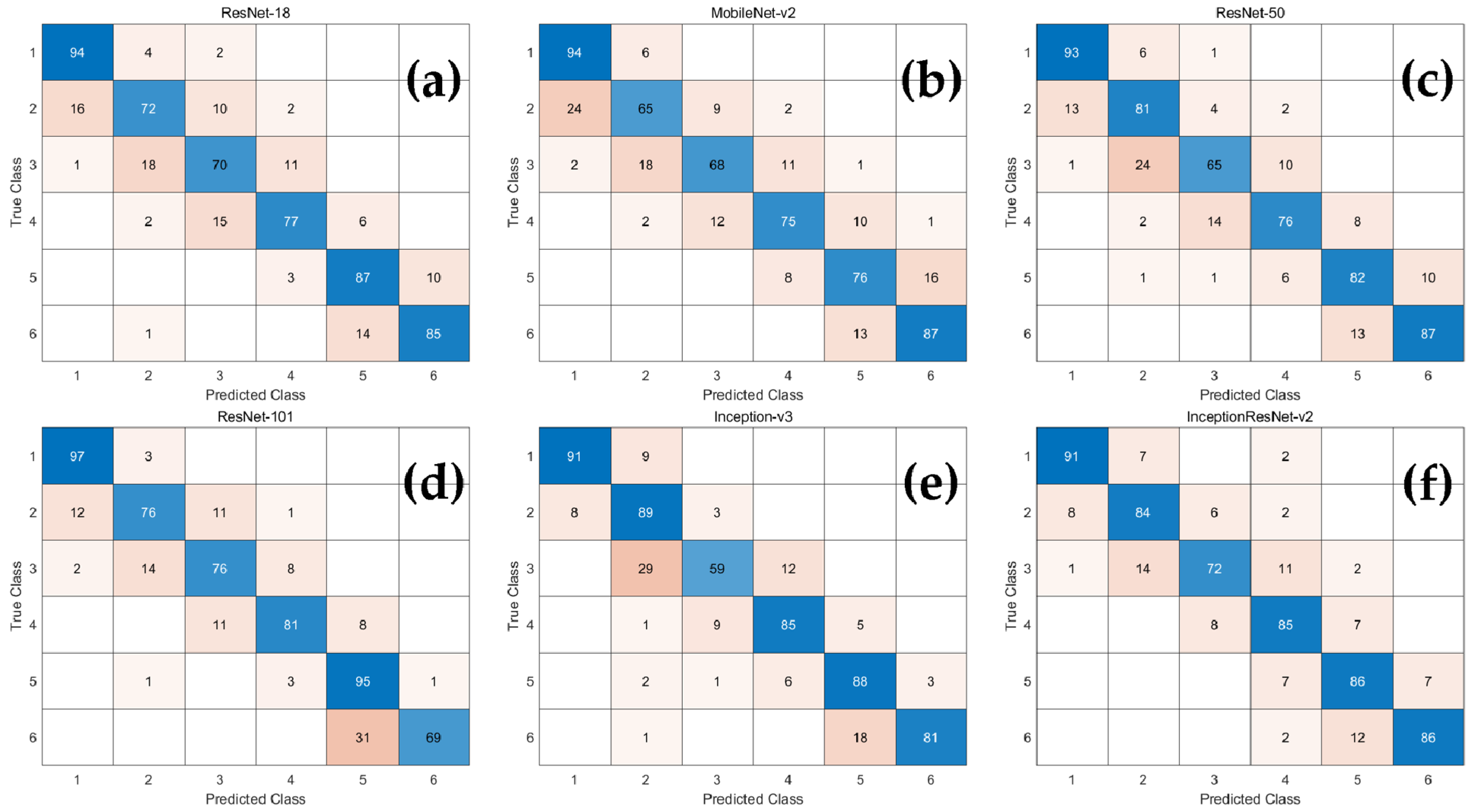

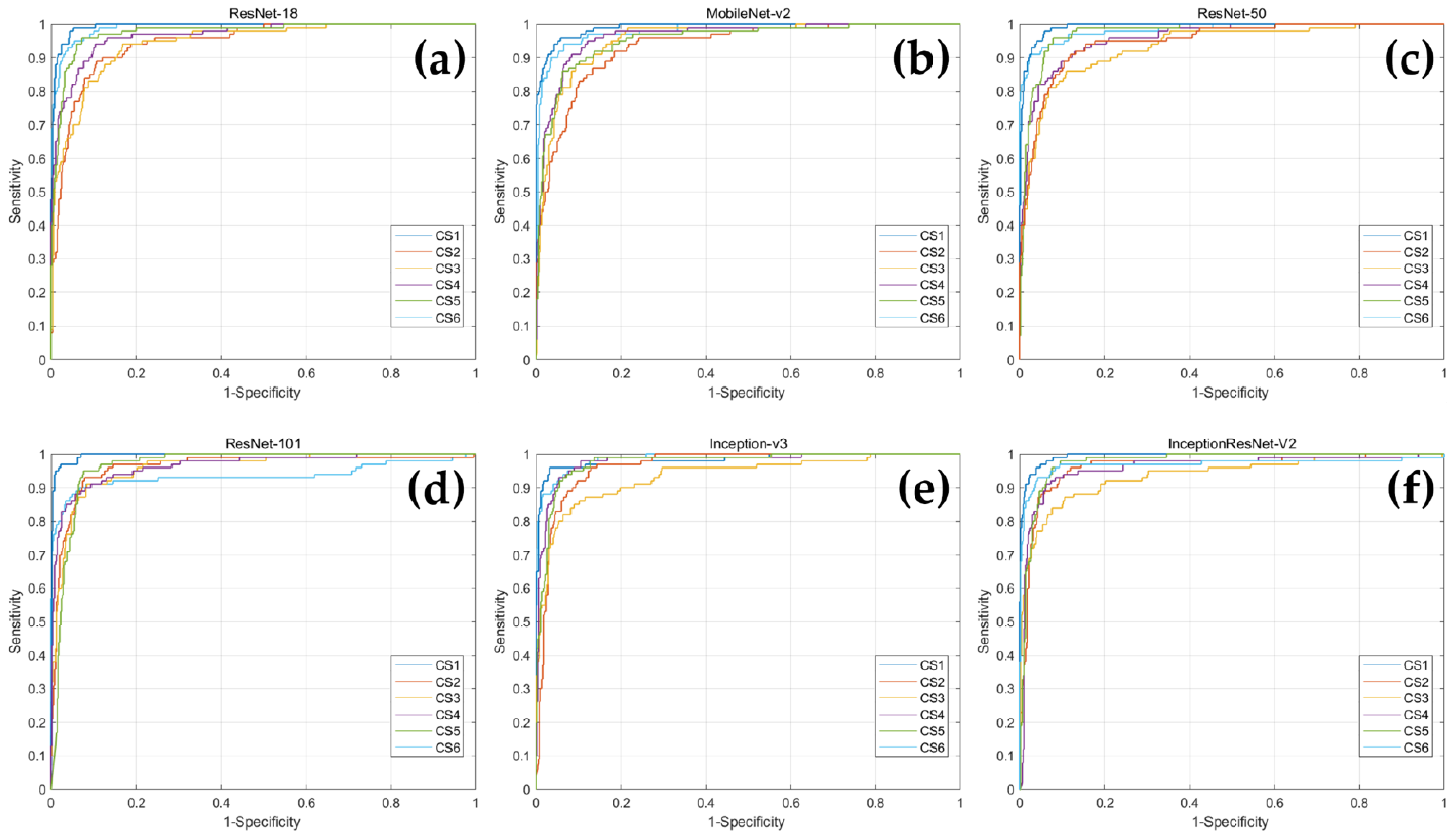

3.1. Classification Performance

3.2. Visualization of Model Classification

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| CVM Stage | No. of Subjects | Mean Age (Years) ± SD |
|---|---|---|
| CS 1 | 100 | 7.27 ± 1.17 |
| CS 2 | 100 | 9.41 ± 1.60 |
| CS 3 | 100 | 10.99 ± 1.28 |
| CS 4 | 100 | 12.54 ± 1.08 |
| CS 5 | 100 | 14.72 ± 1.58 |
| CS 6 | 100 | 17.65 ± 1.69 |
| Total | 600 | 12.10 ± 3.52 |

| Network Model | Depth | Size (MB) | Parameters (Millions) | Input Image Size |
|---|---|---|---|---|
| ResNet-18 | 18 | 44.0 | 11.7 | 224 × 224 × 3 |
| MobileNet-v2 | 53 | 13.0 | 3.5 | 224 × 224 × 3 |
| ResNet-50 | 50 | 96.0 | 25.6 | 224 × 224 × 3 |
| ResNet-101 | 101 | 167.0 | 44.6 | 224 × 224 × 3 |
| Inception-v3 | 48 | 89.0 | 23.9 | 299 × 299 × 3 |
| Inception-ResNet-v2 | 164 | 209.0 | 55.9 | 299 × 299 × 3 |
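The networks listed above expect fixed-size, three-channel inputs (224 × 224 × 3 or 299 × 299 × 3), whereas cephalometric radiographs are single-channel grayscale images. The sketch below shows one possible way to turn a grayscale crop into such an input. It is an illustration under stated assumptions, not the authors' pre-processing: the nearest-neighbour resize, the channel replication, and the helper names `to_network_input` and `INPUT_SIZE` are hypothetical; only the input sizes are taken from the table.

```python
import numpy as np

# Input side length per network, taken from the table above.
INPUT_SIZE = {
    "ResNet-18": 224, "MobileNet-v2": 224, "ResNet-50": 224,
    "ResNet-101": 224, "Inception-v3": 299, "Inception-ResNet-v2": 299,
}


def to_network_input(gray: np.ndarray, size: int) -> np.ndarray:
    """Hypothetical helper: resize a 2-D grayscale image to size x size with
    nearest-neighbour sampling and replicate it into 3 channels (H x W x 3)."""
    if gray.ndim != 2:
        raise ValueError("expected a 2-D grayscale array")
    h, w = gray.shape
    # Map each output pixel centre back to the nearest source row / column.
    rows = np.minimum((np.arange(size) + 0.5) * h / size, h - 1).astype(int)
    cols = np.minimum((np.arange(size) + 0.5) * w / size, w - 1).astype(int)
    resized = gray[np.ix_(rows, cols)]
    # Assumption: the gray plane is copied into all three channels because
    # ImageNet-pretrained networks expect RGB-shaped input.
    return np.repeat(resized[:, :, np.newaxis], 3, axis=2)
```

For example, `to_network_input(crop, INPUT_SIZE["Inception-v3"])` returns a 299 × 299 × 3 array. In practice a library resize with smoother interpolation, plus whatever intensity normalization each pretrained network expects, would normally replace the nearest-neighbour step.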

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| ResNet-18 | 0.927 ± 0.025 | 0.808 ± 0.094 | 0.808 ± 0.065 | 0.807 ± 0.074 |
| MobileNet-v2 | 0.912 ± 0.022 | 0.775 ± 0.111 | 0.773 ± 0.040 | 0.772 ± 0.070 |
| ResNet-50 | 0.927 ± 0.025 | 0.807 ± 0.096 | 0.808 ± 0.068 | 0.806 ± 0.075 |
| ResNet-101 | 0.934 ± 0.020 | 0.823 ± 0.113 | 0.837 ± 0.096 | 0.822 ± 0.054 |
| Inception-v3 | 0.933 ± 0.027 | 0.822 ± 0.119 | 0.833 ± 0.100 | 0.821 ± 0.082 |
| Inception-ResNet-v2 | 0.941 ± 0.018 | 0.840 ± 0.064 | 0.843 ± 0.061 | 0.840 ± 0.051 |
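Accuracy, precision, recall, and F1-score can all be derived from a multi-class confusion matrix. This excerpt does not state how the six-class results were averaged, so the sketch below assumes macro averaging of one-vs-rest metrics, with each CVM stage treated in turn as the positive class. The function name `macro_metrics` is hypothetical.

```python
import numpy as np


def macro_metrics(cm) -> dict:
    """Macro-averaged one-vs-rest metrics from a K x K confusion matrix
    (rows = true CVM stage, columns = predicted CVM stage)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as stage k, truly another stage
    fn = cm.sum(axis=1) - tp  # truly stage k, predicted as another stage
    tn = total - tp - fp - fn
    zeros = np.zeros_like(tp)
    # Guard against empty rows/columns: an undefined ratio is reported as 0.
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    accuracy = (tp + tn) / total  # per-stage one-vs-rest accuracy
    # Macro average: unweighted mean over stages (F1 is averaged per stage).
    return {
        "accuracy": float(accuracy.mean()),
        "precision": float(precision.mean()),
        "recall": float(recall.mean()),
        "f1": float(f1.mean()),
    }
```

Because one-vs-rest accuracy also credits the many true negatives contributed by the other five stages, it is typically higher than macro precision or recall on a six-class problem. This may be one reason the accuracy column exceeds the other three, although the excerpt does not confirm the averaging scheme.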

| Model | CS 1 | CS 2 | CS 3 | CS 4 | CS 5 | CS 6 |
|---|---|---|---|---|---|---|
| ResNet-18 | 0.993 | 0.945 | 0.944 | 0.967 | 0.976 | 0.989 |
| MobileNet-v2 | 0.990 | 0.934 | 0.954 | 0.964 | 0.953 | 0.980 |
| ResNet-50 | 0.992 | 0.949 | 0.934 | 0.959 | 0.975 | 0.983 |
| ResNet-101 | 0.996 | 0.962 | 0.959 | 0.965 | 0.965 | 0.935 |
| Inception-v3 | 0.983 | 0.964 | 0.935 | 0.978 | 0.974 | 0.987 |
| Inception-ResNet-v2 | 0.994 | 0.961 | 0.935 | 0.959 | 0.975 | 0.969 |

| Measure | ResNet-18 | MobileNet-v2 | ResNet-50 | ResNet-101 | Inception-v3 | Inception-ResNet-v2 |
|---|---|---|---|---|---|---|
| Training time | 9 min, 30 s | 21 min, 10 s | 22 min, 20 s | 47 min, 25 s | 41 min, 30 s | 119 min, 40 s |
| Single image testing time | 0.02 s | 0.02 s | 0.02 s | 0.03 s | 0.03 s | 0.07 s |
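Single-image testing times such as those above depend on the hardware and on how the clock is read. The excerpt does not describe the timing protocol, so the following is only a sketch of one common approach: average wall-clock time per prediction after a few untimed warm-up calls. The function `mean_latency_seconds`, the `predict` callable, and the default warm-up count are hypothetical.

```python
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def mean_latency_seconds(
    predict: Callable[[T], object], images: Sequence[T], warmup: int = 3
) -> float:
    """Hypothetical helper: average wall-clock seconds per single-image call."""
    if len(images) == 0:
        raise ValueError("need at least one image")
    # Untimed warm-up calls absorb one-off costs such as lazy initialization.
    for img in images[:warmup]:
        predict(img)
    timings = []
    for img in images:
        start = time.perf_counter()
        predict(img)  # one image per call, i.e. batch size 1
        timings.append(time.perf_counter() - start)
    return sum(timings) / len(timings)
```

If `predict` launches asynchronous GPU work, the device should be synchronized before the timer is stopped; otherwise the measured time may reflect little more than the kernel launch.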

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Seo, H.; Hwang, J.; Jeong, T.; Shin, J. Comparison of Deep Learning Models for Cervical Vertebral Maturation Stage Classification on Lateral Cephalometric Radiographs. J. Clin. Med. 2021, 10, 3591. https://doi.org/10.3390/jcm10163591
