Prediction of Long-Term Stroke Recurrence Using Machine Learning Models

Abstract

1. Introduction

2. Methods

2.1. Data Source

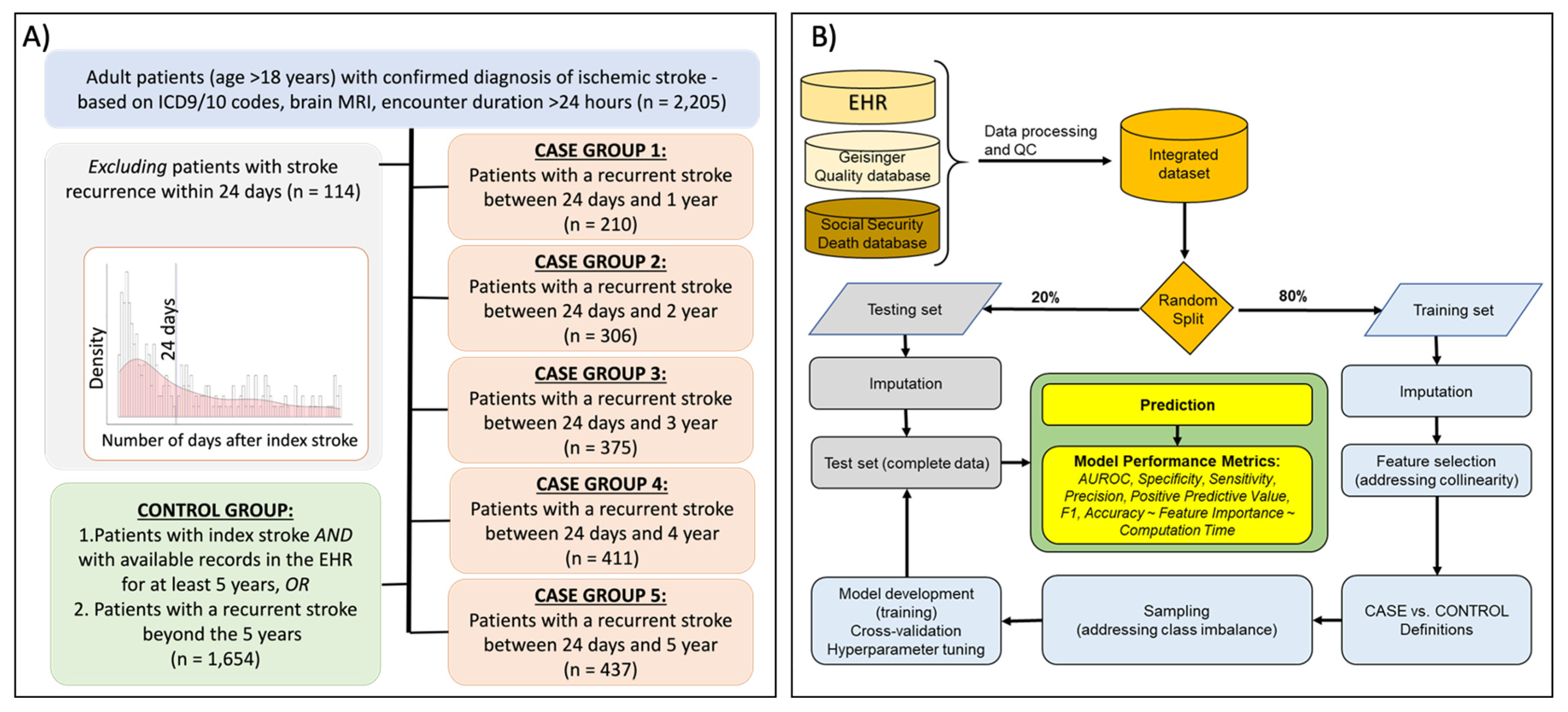

2.2. Study Population

2.3. Data Processing, Feature Extraction, and Sampling

2.4. Model Development and Testing

3. Results

3.1. Patient Population and Characteristics

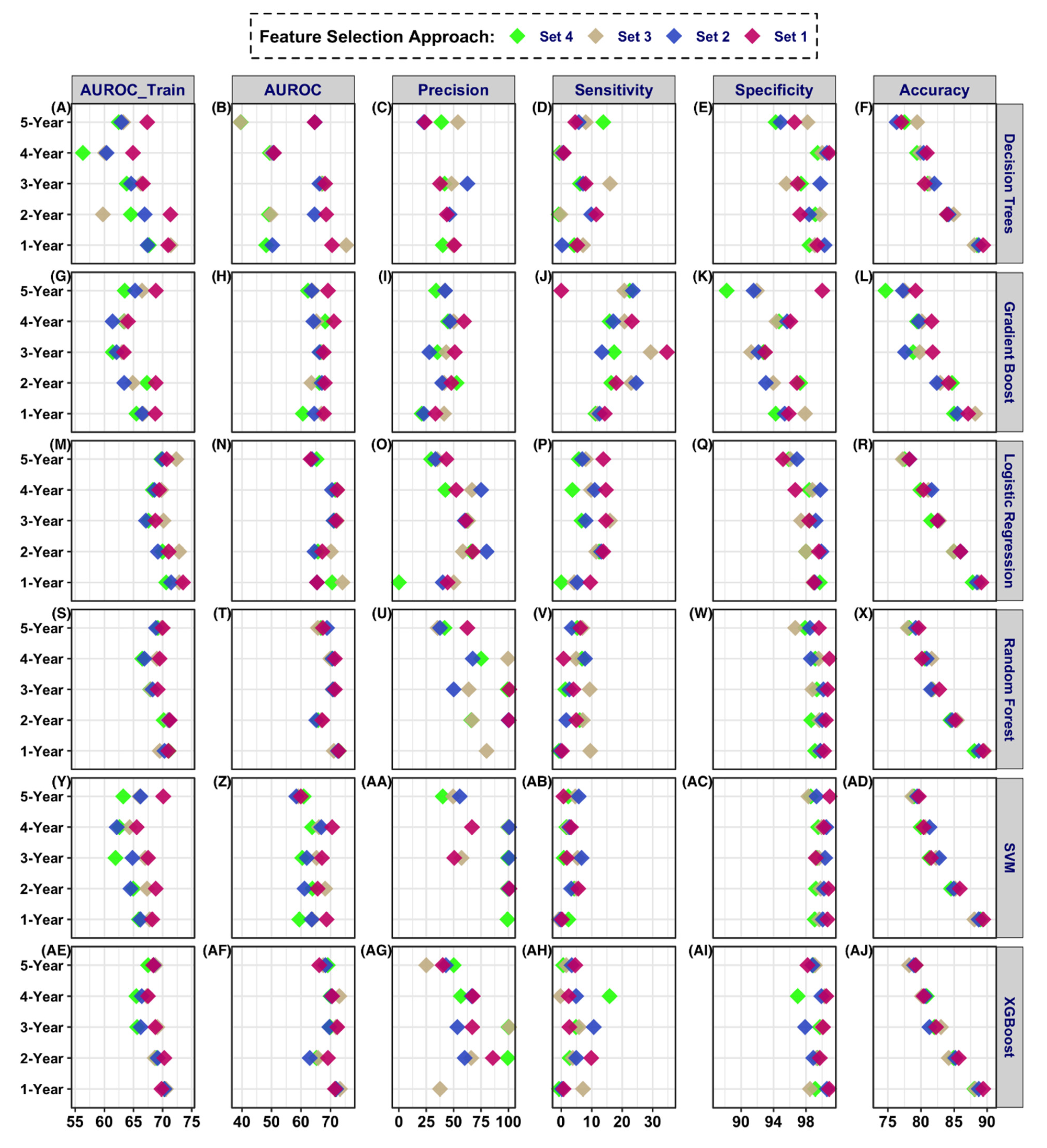

3.2. Models Can Be Trained to Predict the Long-Term Stroke Recurrence

3.3. Age, BMI, and Laboratory Values Highly Associated with Stroke Recurrence

3.4. Models’ Performance Metrics Improved through Sampling Strategies

4. Discussion

4.1. Models Could Be Trained to Predict the Long-Term Stroke Recurrence

4.2. Clinical Features Highly Associated with Stroke Recurrence

4.3. Model Performance Metrics Optimized Based on the Target Goals

4.4. Study Strengths, Limitations, and Future Directions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| CI | Confidence Interval |
| CV | Cross-Validation |
| DT | Decision Tree |
| EHR | Electronic Health Record |
| ESRS | Essen Stroke Risk Score |
| GBM | Gradient Boosting Machine |
| GNSIS | Geisinger Neuroscience Ischemic Stroke |
| HbA1c | Hemoglobin A1c |
| HDL | High-Density Lipoprotein |
| ICD-9/10-CM | International Classification of Diseases, Ninth/Tenth Revision, Clinical Modification |
| IQR | Interquartile Range |
| LDL | Low-Density Lipoprotein |
| LR | Logistic Regression |
| MICE | Multivariate Imputation by Chained Equations |
| ML | Machine Learning |
| MRI | Magnetic Resonance Imaging |
| NIHSS | National Institutes of Health Stroke Scale |
| OR | Odds Ratio |
| PPV | Positive Predictive Value |
| RF | Random Forest |
| SA | Sensitivity Analysis |
| SMOTE | Synthetic Minority Over-sampling Technique |
| SPI-II | Stroke Prognostic Instrument II |
| SVM | Support Vector Machine |
| XGBoost | Extreme Gradient Boosting |

| Patient Characteristics | % Missing | Statistics (All Patients) | Control Group | Case Group 1 | Case Group 2 | Case Group 3 | Case Group 4 | Case Group 5 |
|---|---|---|---|---|---|---|---|---|
| Total number of patients | - | 2091 | 1654 | 210 | 306 | 375 | 411 | 437 |
| Age in years, mean (SD) | - | 67 (13) | 66 (13) | 71 (14) | 71 (13) | 71 (13) | 71 (13) | 71 (13) |
| Age in years, median (IQR) | - | 68 (58–77) | 67 (57–76) | 73 (62–83) | 72 (63–81) | 73 (63–81) | 73 (63–81) | 73 (63–81) |
| Male, n (%) | - | 1079 (52%) | 53% | 47% | 46% | 46% | 47% | 47% |
| Body mass index (BMI) in kg/m², mean (SD) | 2.63% | 30 (7) | 30 (7) | 29 (6) | 29 (6) | 29 (6) | 29 (7) | 29 (6) |
| Body mass index (BMI) in kg/m², median (IQR) | 2.63% | 29 (26–33) | 29 (26–33) | 28 (24–32) | 28 (25–32) | 28 (25–32) | 28 (25–32) | 28 (25–32) |
| Diastolic Blood Pressure, mean (SD) | 31.90% | 76 (12) | 76 (12) | 75 (13) | 75 (12) | 75 (12) | 75 (12) | 74 (12) |
| Systolic Blood Pressure, mean (SD) | 31.90% | 137 (22) | 136 (22) | 139 (26) | 139 (25) | 140 (24) | 139 (24) | 139 (24) |
| Hemoglobin (Unit: g/dL), mean (SD) | 1.82% | 14 (2) | 14 (2) | 13 (2) | 14 (2) | 14 (2) | 14 (2) | 14 (2) |
| Hemoglobin A1c (Unit: %), mean (SD) | 25.11% | 7 (2) | 7 (2) | 7 (2) | 7 (2) | 7 (2) | 7 (2) | 7 (2) |
| HDL (Unit: mg/dL), mean (SD) | 5.40% | 47 (15) | 47 (15) | 45 (13) | 45 (14) | 45 (14) | 45 (14) | 45 (14) |
| LDL (Unit: mg/dL), mean (SD) | 5.79% | 102 (40) | 103 (40) | 103 (44) | 100 (43) | 101 (42) | 101 (41) | 100 (41) |
| Platelet (Unit: 10³/µL), mean (SD) | 1.82% | 232 (77) | 233 (76) | 227 (70) | 229 (73) | 231 (80) | 230 (78) | 229 (78) |
| White blood cell (Unit: 10³/µL), mean (SD) | 1.82% | 9 (3) | 9 (3) | 8 (3) | 8 (3) | 9 (3) | 9 (3) | 9 (3) |
| Creatinine (Unit: mg/dL), mean (SD) | 2.58% | 1 (1) | 1 (0.5) | 1 (1) | 1 (1) | 1 (1) | 1 (1) | 1 (1) |
| Current smoker, n (%) | - | 288 (14%) | 14 (1) | 12 (6) | 12 (4) | 13 (3) | 13 (3) | 13 (3) |
| Difference in days between last outpatient visit prior to index date and index date, mean (SD) | 26.16% | 347 (726) | 345 (691) | 371 (882) | 354 (846) | 369 (855) | 352 (826) | 354 (840) |
| MEDICAL HISTORY, n (%) | | | | | | | | |
| Atrial flutter | - | 41 (2%) | 28 (2%) | 4 (2%) | 9 (3%) | 11 (3%) | 13 (3%) | 13 (3%) |
| Atrial fibrillation | - | 319 (15%) | 230 (14%) | 35 (17%) | 55 (18%) | 72 (19%) | 82 (20%) | 89 (20%) |
| Atrial fibrillation/flutter | - | 324 (15%) | 233 (14%) | 36 (17%) | 56 (18%) | 74 (20%) | 84 (20%) | 91 (21%) |
| Chronic Heart failure (CHF) | - | 159 (8%) | 103 (6%) | 33 (16%) | 42 (14%) | 49 (13%) | 53 (13%) | 56 (13%) |
| Chronic kidney disease | - | 223 (11%) | 142 (9%) | 55 (26%) | 68 (22%) | 74 (20%) | 78 (19%) | 81 (19%) |
| Chronic liver disease | - | 35 (2%) | 23 (1%) | 2 (1%) | 7 (2%) | 10 (3%) | 11 (3%) | 12 (3%) |
| Chronic liver disease (mild) | - | 33 (2%) | 21 (1%) | 2 (1%) | 7 (2%) | 10 (3%) | 11 (3%) | 12 (3%) |
| Chronic liver disease (moderate to severe) | - | 7 (0.3%) | 5 (0.3%) | 0 (0%) | 1 (0.3%) | 1 (0.3%) | 2 (0.5%) | 2 (0.5%) |
| Chronic lung diseases | - | 391 (19%) | 296 (18%) | 51 (24%) | 70 (23%) | 83 (22%) | 92 (22%) | 95 (22%) |
| Diabetes | - | 615 (29%) | 439 (27%) | 86 (41%) | 122 (40%) | 151 (40%) | 165 (40%) | 176 (40%) |
| Dyslipidemia | - | 1298 (62%) | 994 (60%) | 142 (68%) | 211 (69%) | 258 (69%) | 285 (69%) | 304 (70%) |
| Hypertension | - | 1495 (72%) | 1150 (70%) | 168 (80%) | 240 (78%) | 293 (78%) | 327 (80%) | 345 (79%) |
| Myocardial infarction | - | 215 (10%) | 159 (10%) | 30 (14%) | 43 (14%) | 51 (14%) | 53 (13%) | 56 (13%) |
| Neoplasm | - | 284 (14%) | 211 (13%) | 35 (17%) | 49 (16%) | 61 (16%) | 65 (16%) | 73 (17%) |
| Hypercoagulable | - | 29 (1%) | 24 (1%) | 4 (2%) | 4 (1%) | 5 (1%) | 5 (1%) | 5 (1%) |
| Peripheral vascular disease | - | 313 (15%) | 219 (13%) | 46 (22%) | 65 (21%) | 75 (20%) | 88 (21%) | 94 (22%) |
| Patent Foramen Ovale | - | 241 (12%) | 184 (11%) | 30 (14%) | 41 (13%) | 47 (13%) | 53 (13%) | 57 (13%) |
| Rheumatic diseases | - | 76 (4%) | 53 (3%) | 11 (5%) | 14 (5%) | 18 (5%) | 21 (5%) | 23 (5%) |
| FAMILY HISTORY, n (%) | | | | | | | | |
| Heart disorder | - | 943 (45%) | 747 (45%) | 85 (40%) | 130 (42%) | 165 (44%) | 182 (44%) | 196 (45%) |
| Stroke | - | 361 (17%) | 279 (17%) | 39 (19%) | 60 (20%) | 72 (19%) | 77 (19%) | 82 (19%) |
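
The counts in the table above allow a quick, unadjusted comparison between groups. The following is a minimal sketch, not the authors' analysis: it computes a crude odds ratio with a Wald 95% confidence interval from a 2×2 table, using the diabetes counts for Case Group 5 (176 of 437) and the Control Group (439 of 1654). It assumes these two groups partition the cohort, which is consistent with their totals summing to 2091. The function name `crude_odds_ratio` is hypothetical, and the result is not adjusted for age or any other covariate.

```python
import math


def crude_odds_ratio(exposed_cases, total_cases, exposed_controls, total_controls):
    """Return (odds ratio, lower, upper) for a 2x2 table, with a Wald 95% CI.

    Hypothetical helper for illustration; it performs no covariate adjustment.
    """
    a = exposed_cases                      # cases with the condition
    b = total_cases - exposed_cases        # cases without the condition
    c = exposed_controls                   # controls with the condition
    d = total_controls - exposed_controls  # controls without the condition

    odds_ratio = (a * d) / (b * c)

    # Standard error of log(OR) from the four cell counts (Woolf's method).
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = 1.96  # normal quantile for a two-sided 95% interval
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Diabetes counts taken from the table: Case Group 5 vs. Control Group.
diabetes_or, diabetes_lower, diabetes_upper = crude_odds_ratio(176, 437, 439, 1654)
print(f"Crude OR for diabetes: {diabetes_or:.2f} "
      f"(95% CI {diabetes_lower:.2f} to {diabetes_upper:.2f})")
```

The same function can be applied to any other row of the medical or family history sections by substituting that row's counts; rows reported only as means or percentages do not carry enough information for this calculation.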

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Abedi, V.; Avula, V.; Chaudhary, D.; Shahjouei, S.; Khan, A.; Griessenauer, C.J.; Li, J.; Zand, R. Prediction of Long-Term Stroke Recurrence Using Machine Learning Models. J. Clin. Med. 2021, 10, 1286. https://doi.org/10.3390/jcm10061286