Abstract

This article provides a comprehensive and up-to-date overview of the repositories that contain color fundus images. We analyzed them regarding availability and legality, presented the datasets’ characteristics, and identified labeled and unlabeled image sets. This study aimed to compile all publicly available color fundus image datasets into a central catalog.

1. Introduction

Research and healthcare delivery are changing in the digital age. Digital health research and deep-learning-based applications promise to transform some of the ways we care for our patients and to expand access to healthcare in both developed and underprivileged regions of the world [1,2,3]. Automated screening for diabetic retinopathy (DR) is one facet of this transformation, with deep-learning algorithms already supplementing clinical practice in different parts of the world [4,5].

As the barrier to entry for creating deep-learning-based applications has diminished significantly over the last few years, many smaller companies and institutions now attempt to create their own algorithms for healthcare, particularly for image-based analysis [6]. Radiology and ophthalmology are the medical specialties to which deep learning is most applicable because of their reliance on images and visual analysis [7,8,9]. Color fundus photos, retinal and anterior chamber optical coherence tomography (OCT) scans, and visual field analyzer reports all lend themselves to automatic analysis for various possible pathologies [9,10].

Creating these algorithms requires sizable numbers of initial images, both with and without pathology, and data are needed at every step of developing a deep-learning application. In the modern world, with electronic healthcare records, centralized imaging storage, and the pervasiveness of digital solutions and storage, such data are generated worldwide in massive quantities. However, access to such data is often challenging because of cost, privacy and data governance laws, the lack of centralized databases, heterogeneity within particular datasets, missing or insufficient labeling, or the sheer volume of images required. This article provides an overview of the repositories containing color fundus photos. We analyze them in terms of availability and legality of use, present the characteristics of the datasets, identify labeled and unlabeled sets of images, and analyze the origin of the datasets. This review aims to compile all publicly available color fundus image datasets into a central catalog of what is currently available. We list the source of each dataset, its availability, and a summary of the populations represented.

Khan and colleagues have previously identified, described, listed, and jointly reviewed 94 ophthalmological imaging datasets [11]. However, this review is now outdated. We aim to provide an update on those datasets’ current state and accessibility.

Khan et al.’s [11] work includes 54 repositories of color fundus photos, of which only 47 were available at the time of writing this article. In this work, we add 73 repositories of color fundus photos and provide samples of the content of each repository (https://shorturl.at/hmyz3; accessed on 16 May 2023), a tool for automatic content inspection of current or future repositories, accurate access type information (form/registration/e-mail to the authors/no difficulties), the degree of difficulty of access to each repository, total file sizes, image sizes, photo descriptions, information about additional artifacts and legality of use, and any papers required to be cited.

In addition, we analyzed the datasets in the following four categories:

- The availability of the datasets;

- A breakdown of the legality of using the datasets;

- A classification of the image descriptions;

- Geographical distribution of the datasets that are available by continent and country.

2. Methods

We used the information presented in the review of publicly available ophthalmological imaging datasets [11]. For each dataset, that review details its accessibility, data access, file types, countries of origin, number of patients examined, total number of images taken, ocular diseases, types of eye examinations performed, and the device used. We extended the information on all datasets marked as available in that review and found 47 such color fundus image repositories.

We then used well-known tools to find other repositories not described in the papers mentioned above. The search for color fundus image repositories consisted of entering various terms into three search engines, including “fundus”, “retina”, and “retinal image”, combined with the words “dataset”, “database”, and “repositories”. The same search was performed in the Google search engine and in Google Dataset Search, a search engine designed to find online datasets. Google Dataset Search supports the discovery of tabular, graphical, and text datasets; publishers can have their datasets indexed by describing them with a structured metadata schema. Each search result describes the dataset’s contents and provides direct links and the file format. The Google searches likewise combined terms related to images of the retina with terms related to datasets. For both searches, we considered the first ten pages of results. We found 17 unique repositories: 5 using the Google search engine and 12 using Google Dataset Search.
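The text quotes three retina-related and three dataset-related terms but does not state exactly how they were combined, and the word “including” suggests the list may not be exhaustive. Assuming every retina term was paired with every dataset term, the resulting query set can be enumerated as in the minimal sketch below; the pairing rule and the name `build_queries` are our assumptions.

```python
from itertools import product

# Terms quoted in the Methods section (possibly not the complete list).
RETINA_TERMS = ["fundus", "retina", "retinal image"]
DATASET_TERMS = ["dataset", "database", "repositories"]


def build_queries(retina_terms, dataset_terms):
    """Pair every retina term with every dataset term (Cartesian product)."""
    return [f"{r} {d}" for r, d in product(retina_terms, dataset_terms)]


# Assumed full query set: 3 x 3 = 9 search strings.
QUERIES = build_queries(RETINA_TERMS, DATASET_TERMS)
```

Under this assumption, each of the nine strings would be submitted to every search engine, starting with "fundus dataset" and ending with "retinal image repositories".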

The third search engine we chose was Kaggle, a data science and artificial intelligence platform on which users can share their own datasets and examine those shared by others. Kaggle datasets are openly shared, but to determine the purposes for which they may be used, the license of each dataset must be checked. Most Kaggle datasets are reliable; a dataset’s reliability can be judged from its upvotes or by reviewing the notebooks shared using it. We used the same search terms as with the Google search engine and Google Dataset Search and found 61 unique repositories. For each repository, we investigated the actual condition of the files, their total size, the image sizes, information about image descriptions, additional artifacts found in the images, the legality of using the data in scientific applications, and visualizations of sample data. We did not exclude any color fundus image datasets based on the age, sex, or ethnicity of the patients from whom the data were collected, and we included datasets of all languages and geographic origins.

2.1. Dataset Checking Strategy

We noticed that the levels of access to the datasets varied, from fully accessible to available only after sending a request to the authors; some datasets were unavailable. In this article, we define the access levels as follows:

(1) Fully open;

(2) Available after completing a form;

(3) Available after account registration (and possible approval by the authors);

(4) Available after sending an email to authors and approval from them;

(5) Not available.

After gaining access, we manually checked each dataset described in Table 1 by downloading it and extracting information about file status, sizes, and additional artifacts found in the images. Most datasets were distributed as compressed files (ZIP/RAR/7Z), but some were available as separate files. For datasets delivered as separate files, we determined the total size by downloading all files and summing their sizes. We also prepared a tool, the Ophthalmic Repository Sample Generator (Section 4.1), that automatically generates this information on repository contents together with image samples. Finally, we manually inspected each randomly selected image for additional artifacts.
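The size and format bookkeeping described above can be sketched as follows. This is a minimal illustration assuming the downloaded files sit in a local directory; the function names are hypothetical, and this is not the code of the Ophthalmic Repository Sample Generator.

```python
import os
from pathlib import Path

# Compressed formats mentioned in the text, keyed by lower-case file extension.
ARCHIVE_EXTENSIONS = {".zip": "ZIP", ".rar": "RAR", ".7z": "7Z"}


def archive_format(filename):
    """Return 'ZIP', 'RAR' or '7Z' for a compressed file, or None otherwise."""
    return ARCHIVE_EXTENSIONS.get(Path(filename).suffix.lower())


def total_size_bytes(root):
    """Sum the sizes of all regular files below `root`, recursively.

    This mirrors the manual procedure for datasets delivered as separate
    files: download everything, then add up the individual file sizes.
    """
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path):
                total += os.path.getsize(path)
    return total
```

Matching on the extension alone is a simplification; a file with a wrong or missing extension (a problem reported for three archives in Section 5.2) would need its content inspected instead.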

2.2. Image Descriptions and Legality of Use

All the datasets we reviewed were described on dedicated web pages or in scientific publications. Based on these sources, we determined how the images in each dataset were described.

We noticed the following types of image descriptions:

(1) Manually assigned labels corresponding to diagnosed ocular diseases, image quality, or described areas of interest;

(2) Manual annotations on images indicating areas of interest;

(3) No descriptions.

We have also extracted information on the legality of data use from websites dedicated to the datasets. We noticed the following approaches to defining the legality of the use of data contained in the datasets:

(1) Notifying the authors of the datasets of the results and awaiting permission to publish the results;

(2) References to the indicated articles or the dataset in the case of publication of the results;

(3) No restrictions.

3. Data Availability

In the analysis, we used 121 publicly available datasets containing color fundus images [12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127].

4. Code Availability

4.1. Ophthalmic Repository Sample Generator

We developed a generator of pseudo-random samples from publicly available repositories containing color fundus photos. The generator is written in the Python 3 programming language and is available on GitHub (https://github.com/betacord/OphthalmicRepositorySampleGenerator; accessed on 16 May 2023).

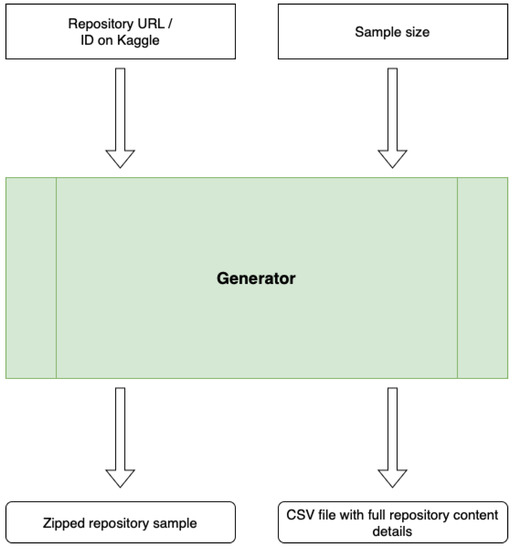

The tool facilitates manual inspection of repository contents. The program takes the URL of a given repository (or its ID on the Kaggle platform) and the sample size (n) as input. The result is a pseudo-random selection of n color fundus photos from the repository and a CSV file containing extracted attributes describing the entire repository. The tool can easily be run on a local computer or in a cloud environment. The general scheme of the generator is shown in Figure 1.
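A minimal sketch of the sampling and CSV steps, assuming the repository has already been extracted to a local directory. The image extension list, the `seed` parameter, the attribute names, and all function names are our assumptions; the generator’s actual implementation is in the GitHub repository cited above.

```python
import csv
import random
from pathlib import Path

# Common image extensions; an assumption, not taken from the original tool.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".tif", ".tiff", ".bmp"}


def list_images(root):
    """Return all image files below `root`, sorted so that sampling is reproducible."""
    return sorted(
        p for p in Path(root).rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )


def sample_images(paths, n, seed=0):
    """Pick n distinct items pseudo-randomly; if fewer than n exist, return all of them."""
    rng = random.Random(seed)  # private generator: the same seed gives the same sample
    paths = list(paths)
    return rng.sample(paths, min(n, len(paths)))


def write_summary_csv(csv_path, attributes):
    """Write repository attributes as a two-column, semicolon-separated CSV file."""
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f, delimiter=";")
        writer.writerow(["attribute", "value"])
        for key, value in attributes.items():
            writer.writerow([key, value])
```

Sorting the file list before sampling matters: directory traversal order can differ between machines, so without it the same seed could yield different samples.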

Figure 1.

General scheme of the Ophthalmic Repository Sample Generator.

As input, the generator takes the sample size, the repository URL, the data output file path, the temporary full data path, the repository sample output path, the repository type, and the output CSV file path.

- The sample size is an integer specifying the number of photos to be randomly sampled from the repository.

- The repository URL is a string containing a direct URL of the repository file; for a Kaggle dataset, the schema is [username]/[dataset_id], and for a Kaggle competition, it is the competition ID.

- The data output file path is a string specifying the file to which the downloaded repository content is saved.

- The temporary full data path is a string specifying the temporary path into which the repository will be extracted.

- The repository sample output path is a string specifying where the randomly selected repository sample will be placed.

- The repository type is an integer indicating the repository source: 0 for a classic URL, 1 for a Kaggle competition, and 2 for a Kaggle dataset.

- The output CSV file path is a string specifying where the semicolon-separated CSV file containing information about the repository will be saved.

All of these parameters, which define the size of the generated image sample, the data source, the temporary paths, the source type, and the output file paths, must therefore be supplied when running the tool.
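The seven inputs and the three repository type codes could be exposed through a command-line interface like the one sketched below. The flag names, `RepositoryType`, and `kaggle_download_command` are hypothetical and need not match the published tool; only the `kaggle competitions download -c` and `kaggle datasets download -d` commands belong to the official Kaggle CLI.

```python
import argparse
from enum import IntEnum


class RepositoryType(IntEnum):
    """Codes for the repository type parameter, as listed in Section 4.1."""
    URL = 0                 # classic direct URL
    KAGGLE_COMPETITION = 1  # value is a Kaggle competition ID
    KAGGLE_DATASET = 2      # value follows the [username]/[dataset_id] schema


def build_parser():
    """Build a parser for the seven inputs; the flag names are hypothetical."""
    parser = argparse.ArgumentParser(
        description="Draw a pseudo-random sample of color fundus photos from a repository."
    )
    parser.add_argument("--sample-size", type=int, required=True)
    parser.add_argument("--repository-url", required=True)
    parser.add_argument("--data-output-file", required=True)
    parser.add_argument("--temp-full-data-path", required=True)
    parser.add_argument("--sample-output-path", required=True)
    parser.add_argument("--repository-type", type=int, required=True,
                        choices=[t.value for t in RepositoryType])
    parser.add_argument("--output-csv", required=True)
    return parser


def kaggle_download_command(repo_type, source):
    """Return a Kaggle CLI command for Kaggle sources, or None for a plain URL.

    A plain URL could instead be fetched directly, e.g. with urllib.request.urlretrieve.
    """
    if repo_type == RepositoryType.KAGGLE_COMPETITION:
        return ["kaggle", "competitions", "download", "-c", source]
    if repo_type == RepositoryType.KAGGLE_DATASET:
        return ["kaggle", "datasets", "download", "-d", source]
    return None


# Example: parse an explicit (hypothetical) argument list instead of sys.argv.
example = build_parser().parse_args([
    "--sample-size", "5",
    "--repository-url", "user/fundus-dataset",
    "--data-output-file", "repo.zip",
    "--temp-full-data-path", "tmp_full",
    "--sample-output-path", "sample",
    "--repository-type", "2",
    "--output-csv", "repo.csv",
])
```

Restricting `--repository-type` to the three documented codes lets the parser reject an unsupported source type before any download starts.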

5. Results

In Table 1, we included the results of our review. In total, we checked 127 repositories containing color fundus images, of which 120 were currently available and seven were unavailable because of non-existent URLs. Downloading one dataset was prevented by a critical server error, and one dataset was delivered as a corrupt zipped file. We have described the characteristics only for the available datasets. We also generated a sample of their content and placed it in the cloud (https://shorturl.at/hmyz3; accessed on 16 May 2023).

Table 1.

Characteristics of the open access datasets.

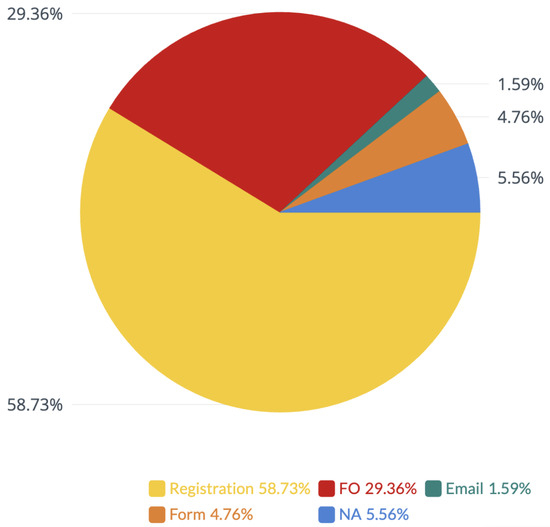

5.1. Data Access

Out of the 127 datasets checked, we marked 37 as fully open, 6 as available after completing a form, 75 as available after account registration, 2 as available after sending an email to authors and approval from them, and 7 as not available, as can be seen in Figure 2.
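As a consistency check on the counts above, the five access levels sum to 127 repositories, and the four accessible levels sum to the 120 available datasets reported in Section 5. The sketch below simply re-tabulates the reported numbers; the names `ACCESS_COUNTS` and `shares` are ours.

```python
# Access-level counts reported in Section 5.1 (Figure 2).
ACCESS_COUNTS = {
    "Fully open": 37,
    "Form": 6,
    "Registration": 75,
    "Email": 2,
    "Not available": 7,
}


def shares(counts):
    """Return each category's share of the total, in percent, rounded to one decimal."""
    total = sum(counts.values())
    return {k: round(100 * v / total, 1) for k, v in counts.items()}


PERCENTAGES = shares(ACCESS_COUNTS)
```

Registration-based access accounts for 59.1% of all checked repositories, and fully open datasets for 29.1%.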

Figure 2.

Availability of datasets. FO = fully open, FORM = available after completing a form, Registration = available after account registration (and possible approval by the authors), Email = available after sending an email to authors and approval from them, NA = not available.

5.2. Characteristics of Datasets

Almost all (122 out of 124) of the datasets could be downloaded as zipped files, and only 2 had to be downloaded as separate files. Three of the zipped files containing datasets had a problem with their file extension. In 59 datasets, all images had the same dimensions (in pixels), but there were 68 unique ones. Images from nine different datasets contained additional artifacts such as dates, numbers, digits, color scales, markers, icons, arteries, vessels, veins, and key points marked on the photographs.
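Checking whether a dataset uses a single image size reduces to comparing the (width, height) pairs of its images. The sketch below assumes the Pillow library for reading image sizes; the helper names are hypothetical and not taken from the authors’ tool.

```python
from collections import Counter


def dimension_summary(sizes):
    """Count how often each (width, height) pair occurs within one dataset."""
    return Counter(sizes)


def has_uniform_dimensions(sizes):
    """True if every image in the dataset has the same width and height."""
    return len(set(sizes)) <= 1


def read_sizes(paths):
    """Read (width, height) in pixels for each image file; requires Pillow."""
    from PIL import Image  # imported lazily so the helpers above work without Pillow

    sizes = []
    for path in paths:
        with Image.open(path) as img:  # opening reads the header; pixels load on demand
            sizes.append(img.size)     # Pillow reports size as (width, height)
    return sizes
```

In this sketch, a dataset would count toward the “same dimensions” group when `has_uniform_dimensions(read_sizes(paths))` is true.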

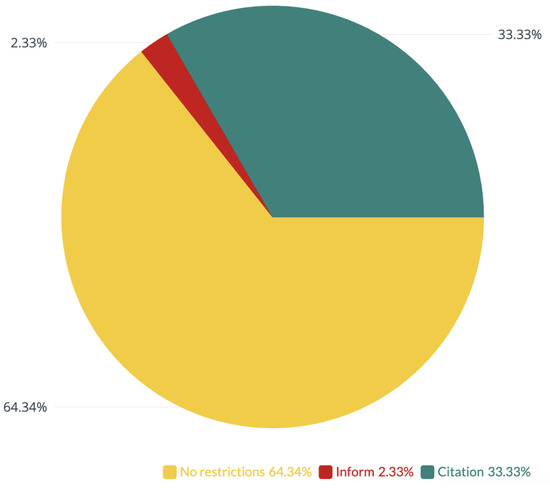

5.3. The Legality of Use and Image Descriptions

Out of the 127 datasets, the authors of 3 required that they be informed of the obtained results. The authors of 44 datasets required citation of the indicated works when using the provided data and publishing the results. Almost two-thirds of the datasets (80, or 63%) had no restrictions on use. Figure 3 shows the full breakdown of the legality of using data contained in the datasets.

Figure 3.

Breakdown of the legality of using data. Inform = notifying the authors of the datasets of the results and awaiting permission to publish the results, Citation = references to the indicated articles in the case of publication of the results.

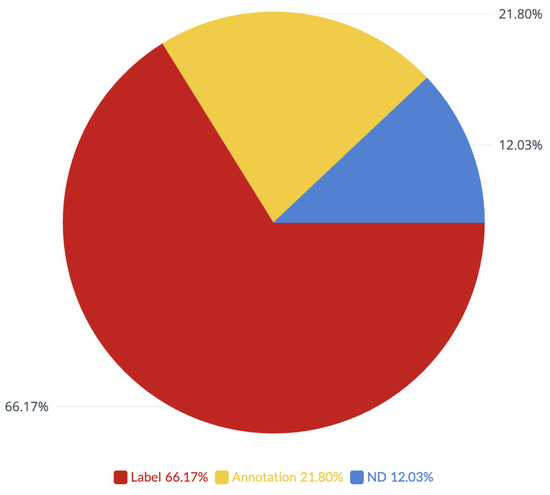

Eighty-nine datasets had labels assigned to their images. Thirty datasets had areas of interest annotated on the images. Sixteen datasets did not have any descriptions. A full breakdown of the image descriptions is shown in Figure 4.

Figure 4.

Breakdown of the image descriptions. Label = manually assigned labels corresponding to diagnosed ocular diseases, image quality or described areas of interest, Annotation = manual annotations on images indicating areas of interest, ND = no descriptions.

6. Discussion

Publicly available datasets remain important for digital health research and innovation in ophthalmology. Although the overall number of publicly available color fundus image datasets is growing, this is an ongoing process, with new datasets appearing and older datasets becoming inaccessible. The lack of a central repository or listing for ophthalmic datasets, coupled with the low discoverability of many of the datasets, constitutes a significant barrier to accessing high-quality, representative data suitable for a given purpose. This article provides an up-to-date review and discussion of the available color fundus image datasets.

Health data poverty, in this case the scarcity of color fundus image datasets originating from underprivileged regions, particularly Africa, is a cause for concern. Although the relationship between patients’ ethnicity, background, and other attributes and fundus features is not clearly documented, the lack of representative datasets might lead to ethnic or geographical bias and poor generalizability in deep-learning applications. The current relative lack of datasets might mean that underrepresented regions miss out on the benefits of future data-driven screening and healthcare solutions.

The study published by Khan and colleagues was the first comprehensive and systematic listing of public ophthalmological imaging databases. Out of the 121 datasets they identified through various search strategies, only 94 were deemed truly available, with 27 databases remaining inaccessible even after multiple attempts spaced weeks apart [11]. Thus, more than one-fifth (22%) of the datasets were already inaccessible at the time of their review. This is in line with our findings in preparing this update: of the 94 datasets marked as available by Khan and colleagues, only 74 were available at the time of writing this article, just over 1 year after the print publication of their paper and just 15 months after its first online publication. Access to over one-fifth (21%) of the datasets was therefore lost in less than 2 years, similar to the 22% found inaccessible in the original review. Almost all of the datasets that have become unavailable since the publication of Khan et al.’s study are offline, meaning that their dedicated websites are unreachable; two are unavailable due to errors.
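The two attrition rates compared above follow directly from the reported counts (27 of 121 datasets inaccessible in the original review; 94 − 74 = 20 of 94 lost since then):

```latex
\frac{27}{121} \approx 0.223 = 22.3\,\%, \qquad
\frac{94 - 74}{94} = \frac{20}{94} \approx 0.213 = 21.3\,\%
```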

Of the 127 color fundus image repositories initially identified for this analysis, 7 became unavailable during the identification, verification, and review of the newly discovered datasets. Although the typical time between a dataset’s publication and its becoming inaccessible is not yet known, it is clear that datasets going offline or otherwise becoming unreachable is an ongoing process and that information on availability can quickly become obsolete. It is important to note that while Khan et al. listed datasets containing OCT and other imaging modalities, this review focuses specifically on datasets of color fundus images [11].

Given adequate citation of sources, all but three of the datasets allowed unrestricted access and publication of results for scientific, non-commercial purposes. The three exceptions required approval from the dataset authors before any results could be published, which may limit their scientific usability by leaving potential publication opportunities at the discretion of the original dataset authors. More than half of the datasets impose no restrictions and do not explicitly require citation, although citing sources is one of the fundamental ethical principles of scientific work.

Most datasets contain additional information about individual images. About two-thirds (66%) of the datasets contained manually assigned text-based labels corresponding to diagnosed ocular diseases, image quality, or described areas of interest. About one-fifth (22%) contained annotations indicating areas of interest or pathology. Only 12% contained raw images without metadata for individual images.

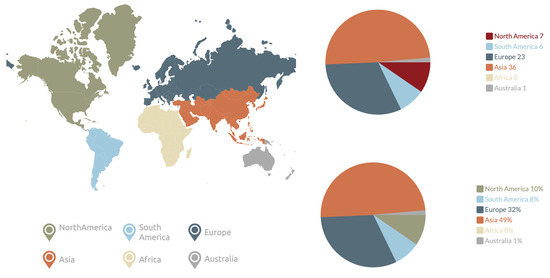

Figure 5.

Geographical distribution of the available datasets by continent (where origins of the dataset could be determined).

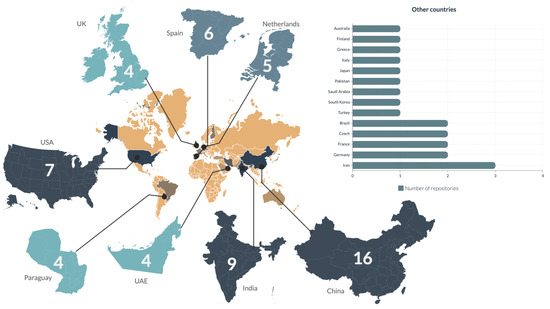

Figure 6.

Number of datasets originating from individual countries (where origins of the dataset could be determined).

Almost half of the datasets for which a region of origin could be established originated from Asia, with Europe making up another one-third (Figure 5). Overall, out of 73 datasets, 24 originated from outside Europe or Asia, with none of the datasets originating from Africa and a nearly equal split between North and South America. The distribution of datasets available from individual countries is shown in Figure 6. Although dataset origin relates to the location of the person or organization sharing the dataset and does not necessarily represent the origin of the patients’ images, the complete lack of images from Africa is concerning.

Africa, particularly sub-Saharan Africa, is a vastly underserved region with one of the lowest numbers of ophthalmologists per capita globally [167]. There are, on average, three ophthalmologists per million population in sub-Saharan Africa, compared with about 80 per million in developed countries [167]. Although this is likely one of the reasons for the lack of available datasets from the region, it is also the rationale for needing datasets from it. Digital healthcare solutions, including deep-learning software, may help alleviate some of the healthcare disparities in the region. However, such solutions require development, or at least validation, in the target population to avoid any potential racial or other population-specific bias [168]. This is especially relevant for color fundus images, where, apart from background fundus pigmentation, the influence of patient attributes such as age, sex, or race on fundus features and their variations is not well known; this question is also currently being tackled using deep-learning methods [169]. The suspicion of poor generalizability to populations outside the ethnic or geographical scope of the initial training image data, and hence the need for development and validation in multi-ethnic populations, is not a new concept in the automated analysis of color fundus images [11,149,170,171]. Serener et al. have shown that the performance of deep-learning algorithms for detecting diabetic retinopathy in color fundus images varies with the geographical or ethnic traits of the training and validation populations [171].

7. Conclusions

Open datasets are still crucial for digital health research and innovation in ophthalmology. Although the public has access to a growing number of color fundus image datasets, this is an ongoing process: new datasets keep being added while older ones become inaccessible. There are only a few places that store or list ophthalmic datasets, and many datasets are hard to find, which makes it difficult to obtain high-quality, representative data suitable for a given purpose. This paper provides an up-to-date review of the color fundus image datasets that are currently available.

Author Contributions

Conceptualization: A.E.G. and T.K.; methodology: T.K.; data collection: T.K.; project administration: A.E.G.; supervision: A.E.G.; software: T.K. and A.M.Z.; formal analysis and visualizations: A.M.Z.; writing (original draft): A.E.G., P.B., A.M.Z., and T.K.; writing (review and editing): A.M.Z. and T.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors thank Siamak Yousefi and his team from the University of Tennessee, Memphis, USA, and Paweł Borkowski from the Rzeszow University of Technology, Poland, for their help in identifying new repositories.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ibrahim, H.; Liu, X.; Zariffa, N.; Morris, A.D.; Denniston, A.K. Health data poverty: An assailable barrier to equitable digital health care. Lancet Digit. Health 2021, 3, e260–e265.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.

- Norgeot, B.; Glicksberg, B.S.; Butte, A.J. A call for deep-learning healthcare. Nat. Med. 2019, 25, 14–15.

- Grzybowski, A.; Brona, P.; Lim, G.; Ruamviboonsuk, P.; Tan, G.S.W.; Abramoff, M.; Ting, D.S.W. Artificial intelligence for diabetic retinopathy screening: A review. Eye 2019, 34, 451–460.

- Grzybowski, A.; Brona, P. A pilot study of autonomous artificial intelligence-based diabetic retinopathy screening in Poland. Acta Ophthalmol. 2019, 97, e1149–e1150.

- Miotto, R.; Wang, F.; Wang, S.; Jiang, X.; Dudley, J.T. Deep learning for healthcare: Review, opportunities and challenges. Briefings Bioinform. 2017, 19, 1236–1246.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Bluemke, D.A.; Moy, L.; Bredella, M.A.; Ertl-Wagner, B.B.; Fowler, K.J.; Goh, V.J.; Halpern, E.F.; Hess, C.P.; Schiebler, M.L.; Weiss, C.R. Assessing Radiology Research on Artificial Intelligence: A Brief Guide for Authors, Reviewers, and Readers from the Editorial Board. Radiology 2020, 294, 487–489.

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.W.; Karthikesalingam, A.; King, D.; Ashrafian, H.; Darzi, A. Diagnostic accuracy of deep learning in medical imaging: A systematic review and meta-analysis. NPJ Digit. Med. 2021, 4, 65.

- Ting, D.S.W.; Pasquale, L.R.; Peng, L.; Campbell, J.P.; Lee, A.Y.; Raman, R.; Tan, G.S.W.; Schmetterer, L.; Keane, P.A.; Wong, T.Y. Artificial intelligence and deep learning in ophthalmology. Br. J. Ophthalmol. 2018, 103, 167–175.

- Khan, S.M.; Liu, X.; Nath, S.; Korot, E.; Faes, L.; Wagner, S.K.; Keane, P.A.; Sebire, N.J.; Burton, M.J.; Denniston, A.K. A global review of publicly available datasets for ophthalmological imaging: Barriers to access, usability, and generalisability. Lancet Digit. Health 2021, 3, e51–e66.

- Available online: https://www.kaggle.com/datasets/linchundan/fundusimage1000 (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/dh2x8v6nf8/1 (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/trghs22fpg/1 (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/trghs22fpg/2 (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/trghs22fpg/3 (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/trghs22fpg/4 (accessed on 16 May 2023).

- Available online: https://figshare.com/s/c2d31f850af14c5b5232 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/c/aptos2019-blindness-detection/data (accessed on 16 May 2023).

- Available online: https://people.eng.unimelb.edu.au/thivun/projects/AV_nicking_quantification/ (accessed on 16 May 2023).

- Available online: https://blogs.kingston.ac.uk/retinal/chasedb1/ (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/gilescodes/cropped-train-diabetic-retinopathy-detection (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/andrewmvd/retinal-disease-classification (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/fareesamasroor/cardiacemboli (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/mariaherrerot/ddrdataset (accessed on 16 May 2023).

- Available online: https://medicine.uiowa.edu/eye/abramoff/ (accessed on 16 May 2023).

- Available online: https://zenodo.org/record/4532361#.Yrr5sOzP1W4 (accessed on 16 May 2023).

- Available online: https://zenodo.org/record/4647952#.YtRttC-plQI (accessed on 16 May 2023).

- Available online: https://zenodo.org/record/4891308#.YtRwDS-plQI (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/nikkich9/derbi-hackathon-retinal-fundus-image-dataset (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/3csr652p9y/1 (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/2rnnz5nz74/1 (accessed on 16 May 2023).

- Available online: https://data.mendeley.com/datasets/2rnnz5nz74/2 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/tanlikesmath/diabetic-retinopathy-resized (accessed on 16 May 2023).

- Available online: https://figshare.com/articles/dataset/Advancing_Bag_of_Visual_Words_Representations_for_Lesion_Classification_in_Retinal_Images/953671/2 (accessed on 16 May 2023).

- Available online: https://figshare.com/articles/dataset/Advancing_Bag_of_Visual_Words_Representations_for_Lesion_Classification_in_Retinal_Images/953671/3 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/mustaqimabrar/diabeticretinopathy (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/rutujachaudhari/diabetic-retinopathy (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/himanshuagarwal1998/diabetic-retinopathy (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/analaura000/diabetic-retinopathy (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/kameshwarandhayalan/diabetic-retinopathy (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/sovitrath/diabetic-retinopathy-2015-data-colored-resized (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/sovitrath/diabetic-retinopathy-224x224-2019-data (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/sovitrath/diabetic-retinopathy-224x224-gaussian-filtered (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/sovitrath/diabetic-retinopathy-224x224-grayscale-images (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/amanneo/diabetic-retinopathy-resized-arranged (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/kushagratandon12/diabetic-retinopathy-balanced (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/makrovh/diabetic-retinopathy-blindness-detection-c-data (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/dola1507108/diabetic-retinopathy-classified (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/competitions/diabetic-retinopathy-classification-2/data (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/competitions/retinopathy-classification-sai/data (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/sachinkumar413/diabetic-retinopathy-dataset (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/ahmedghazal54/diabetic-retinopathy-detection (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/poojita2305/diabetic-retinopathy-detection (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/mostafaeltalawy/diabetic-retinopathy-dataset (accessed on 16 May 2023).

- Available online: https://personalpages.manchester.ac.uk/staff/niall.p.mcloughlin/ (accessed on 16 May 2023).

- Available online: http://www.ia.uned.es/~ejcarmona/DRIONS-DB.html (accessed on 16 May 2023).

- Available online: https://drive.grand-challenge.org (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/dola1507108/diabetic-retinopathy-organized (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/sachinkumar413/diabetic-retinopathy-preprocessed-dataset (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/shuvokkr/diabeticresized300 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/makjn10/diabetic-retinopathy-small (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/dantealonso/diabeticretinopathytrainvalidation (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/saipavansaketh/diabetic-retinopathy-unziped (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/zhizhid/dr-2000 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/aviagarwal123/dr-201010 (accessed on 16 May 2023).

- Available online: https://github.com/deepdrdoc/DeepDRiD/blob/master/README.md (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/nguyenhung1903/diaretdb1-v21 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/muhamedahmed/diabetic (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/alisalen/diabetic-retinopathy-detection-processed (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/lokeshsaipureddi/drishtigs-retina-dataset-for-onh-segmentation (accessed on 16 May 2023).

- Available online: http://cvit.iiit.ac.in/projects/mip/drishti-gs/mip-dataset2/Home.php (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/diveshthakker/eoptha-diabetic-retinopathy (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/c/diabetic-retinopathy-detection (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/bishalbanerjee/eye-dataset (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/iamachal/fundus-image-dataset (accessed on 16 May 2023).

- Available online: https://projects.ics.forth.gr/cvrl/fire/ (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/izander/fundus (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/klmsathishkumar/fundus-images (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/spikeetech/fundus-dr (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/balnyaupane/gaussian-filtered-diabetic-retinopathy (accessed on 16 May 2023).

- Available online: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/1YRRAC (accessed on 16 May 2023).

- Available online: https://github.com/lgiancaUTH/HEI-MED (accessed on 16 May 2023).

- Available online: https://www5.cs.fau.de/research/data/fundus-images/ (accessed on 16 May 2023).

- Available online: http://ai.baidu.com/broad/introduction (accessed on 16 May 2023).

- Available online: https://idrid.grand-challenge.org/Rules/ (accessed on 16 May 2023).

- Available online: https://medicine.uiowa.edu/eye/inspire-datasets (accessed on 16 May 2023).

- Available online: http://ai.baidu.com/broad/subordinate?dataset=pm (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/dineswarreddy/indian-retina-classification (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/bachaboos/isbi-2021-retina-23 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/bachaboos/isbi-retina-test (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/linchundan/fundusimage1000 (accessed on 16 May 2023).

- Available online: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0179790#sec006 (accessed on 16 May 2023).

- Available online: https://github.com/smilell/AG-CNN (accessed on 16 May 2023).

- Available online: https://www.adcis.net/en/third-party/messidor2/ (accessed on 16 May 2023).

- Available online: https://odir2019.grand-challenge.org/Download/ (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/andrewmvd/ocular-disease-recognition-odir5k (accessed on 16 May 2023).

- Available online: https://aistudio.baidu.com/aistudio/datasetdetail/122940 (accessed on 16 May 2023).

- Available online: https://figshare.com/articles/dataset/PAPILA/14798004/1 (accessed on 16 May 2023).

- Available online: https://figshare.com/articles/dataset/PAPILA/14798004 (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/benjaminwarner/resized-2015-2019-blindness-detection-images (accessed on 16 May 2023).

- Available online: https://refuge.grand-challenge.org/ (accessed on 16 May 2023).

- Available online: https://ai.baidu.com/broad/download?dataset=gon (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/kssanjaynithish03/retinal-fundus-images (accessed on 16 May 2023).

- Available online: https://deepblue.lib.umich.edu/data/concern/data_sets/3b591905z?locale=en (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/andrewmvd/fundus-image-registration (accessed on 16 May 2023).

- Available online: http://medimrg.webs.ull.es/research/downloads/ (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/priyanagda/ritedataset (accessed on 16 May 2023).

- Available online: http://www.rodrep.com/longitudinal-diabetic-retinopathy-screening--description.html (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/aifahim/retinal-vassel-combine-same-format (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/jr2ngb/cataractdataset (accessed on 16 May 2023).

- Available online: http://webeye.ophth.uiowa.edu/ROC/ (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/beatrizsimoes/retina-quality (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/hebamohamed/retinagen (accessed on 16 May 2023).

- Available online: http://bioimlab.dei.unipd.it/Retinal%20Vessel%20Tortuosity.htm (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/ustinianw/retinal-tiny (accessed on 16 May 2023).

- Available online: http://cecas.clemson.edu/~ahoover/stare/ (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/balnyaupane/small-diabetic-retinopathy-dataset (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/mariaherrerot/the-sustechsysu-dataset (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/competitions/innovation-challenge-2019/data (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/competitions/vietai-advance-retinal-disease-detection-2020/data (accessed on 16 May 2023).

- Available online: http://www.varpa.es/research/ophtalmology.html#vicavr (accessed on 16 May 2023).

- Available online: http://people.duke.edu/~sf59/Estrada_TMI_2015_dataset.htm (accessed on 16 May 2023).

- Available online: https://novel.utah.edu/Hoyt/ (accessed on 16 May 2023).

- Available online: https://zenodo.org/record/3393265#.XazZaOgzbIV (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/nawa393/dr15_test (accessed on 16 May 2023).

- Available online: https://www.kaggle.com/datasets/makorromanuel/merged-retina-datasets (accessed on 16 May 2023).

- Cen, L.P.; Ji, J.; Lin, J.W.; Ju, S.T.; Lin, H.J.; Li, T.P.; Wang, Y.; Yang, J.F.; Liu, Y.F.; Tan, S.; et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. Nat. Commun. 2021, 12, 4828. [Google Scholar] [CrossRef]

- Yoo, T.K. A CycleGAN Deep Learning Technique for Artifact Reduction in Fundus Photography. Graefe’s Arch. Clin. Exp. Ophthalmol. 2020, 258, 1631–1637. [Google Scholar] [CrossRef]

- Hassan, T. A Composite Retinal Fundus and OCT Dataset along with Detailed Clinical Markings for Extracting Retinal Layers, Retinal Lesions and Screening Macular and Glaucomatous Disorders (dataset 1). 2021. [Google Scholar] [CrossRef]

- Hassan, T. A Composite Retinal Fundus and OCT Dataset along with Detailed Clinical Markings for Extracting Retinal Layers, Retinal Lesions and Screening Macular and Glaucomatous Disorders (dataset 2). 2021. [Google Scholar] [CrossRef]

- Hassan, T. A Composite Retinal Fundus and OCT Dataset along with Detailed Clinical Markings for Extracting Retinal Layers, Retinal Lesions and Screening Macular and Glaucomatous Disorders (dataset 3). 2021. [Google Scholar] [CrossRef]

- Hassan, T.; Akram, M.U.; Masood, M.F.; Yasin, U. Deep structure tensor graph search framework for automated extraction and characterization of retinal layers and fluid pathology in retinal SD-OCT scans. Comput. Biol. Med. 2019, 105, 112–124. [Google Scholar] [CrossRef] [PubMed]

- Hassan, T.; Akram, M.U.; Werghi, N.; Nazir, M.N. RAG-FW: A Hybrid Convolutional Framework for the Automated Extraction of Retinal Lesions and Lesion-Influenced Grading of Human Retinal Pathology. IEEE J. Biomed. Health Inform. 2021, 25, 108–120. [Google Scholar] [CrossRef] [PubMed]

- Diaz-Pinto, A.; Morales, S.; Naranjo, V.; Köhler, T.; Mossi, J.M.; Navea, A. CNNs for automatic glaucoma assessment using fundus images: An extensive validation. Biomed. Eng. Online 2019, 18, 29. [Google Scholar] [CrossRef] [PubMed]

- Pachade, S.; Porwal, P.; Thulkar, D.; Kokare, M.; Deshmukh, G.; Sahasrabuddhe, V.; Giancardo, L.; Quellec, G.; Mériaudeau, F. Retinal Fundus Multi-Disease Image Dataset (RFMiD): A Dataset for Multi-Disease Detection Research. Data 2021, 6, 14. [Google Scholar] [CrossRef]

- Li, T.; Gao, Y.; Wang, K.; Guo, S.; Liu, H.; Kang, H. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 2019, 501, 511–522. [Google Scholar] [CrossRef]

- Benítez, V.E.C.; Matto, I.C.; Román, J.C.M.; Noguera, J.L.V.; García-Torres, M.; Ayala, J.; Pinto-Roa, D.P.; Gardel-Sotomayor, P.E.; Facon, J.; Grillo, S.A. Dataset from fundus images for the study of diabetic retinopathy. Data Brief 2021, 36, 107068. [Google Scholar] [CrossRef]

- Akbar, S.; Sharif, M.; Akram, M.U.; Saba, T.; Mahmood, T.; Kolivand, M. Automated techniques for blood vessels segmentation through fundus retinal images: A review. Microsc. Res. Tech. 2019, 82, 153–170. [Google Scholar] [CrossRef]

- Raja, H. Data on OCT and Fundus Images. 2020. [Google Scholar] [CrossRef]

- Pires, R.; Jelinek, H.F.; Wainer, J.; Valle, E.; Rocha, A. Advancing Bag-of-Visual-Words Representations for Lesion Classification in Retinal Images. PLoS ONE 2014, 9, e96814. [Google Scholar] [CrossRef] [PubMed]

- Holm, S.; Russell, G.; Nourrit, V.; McLoughlin, N. DR HAGIS—a fundus image database for the automatic extraction of retinal surface vessels from diabetic patients. J. Med. Imaging 2017, 4, 014503. [Google Scholar] [CrossRef] [PubMed]

- Carmona, E.J.; Rincón, M.; García-Feijoó, J.; de-la Casa, J.M.M. Identification of the optic nerve head with genetic algorithms. Artif. Intell. Med. 2008, 43, 243–259. [Google Scholar] [CrossRef]

- Kauppi, T.; Kalesnykiene, V.; Kamarainen, J.K.; Lensu, L.; Sorri, I.; Raninen, A.; Voutilainen, R.; Uusitalo, H.; Kalviainen, H.; Pietila, J. The DIARETDB1 diabetic retinopathy database and evaluation protocol. In Proceedings of the British Machine Vision Conference 2007, Warwick, UK, 10–13 September 2007. [Google Scholar] [CrossRef]

- Sivaswamy, J.; Chakravarty, A.; Joshi, G.D.; Syed, T.A. A Comprehensive Retinal Image Dataset for the Assessment of Glaucoma from the Optic Nerve Head Analysis. JSM Biomed. Imaging Data Pap. 2015, 2, 1004. [Google Scholar]

- Sivaswamy, J.; Krishnadas, S.R.; Datt Joshi, G.; Jain, M.; Syed Tabish, A.U. Drishti-GS: Retinal image dataset for optic nerve head (ONH) segmentation. In Proceedings of the 2014 IEEE 11th International Symposium on Biomedical Imaging (ISBI), Beijing, China, 29 April–2 May 2014; pp. 53–56. [Google Scholar] [CrossRef]

- Hernandez-Matas, C.; Zabulis, X.; Triantafyllou, A.; Anyfanti, P.; Douma, S.; Argyros, A.A. FIRE: Fundus Image Registration dataset. Model. Artif. Intell. Ophthalmol. 2017, 1, 16–28. [Google Scholar] [CrossRef]

- Akbar, S.; Hassan, T.; Akram, M.U.; Yasin, U.; Basit, I. AVRDB: Annotated Dataset for Vessel Segmentation and Calculation of Arteriovenous Ratio. 2017. [Google Scholar]

- Giancardo, L.; Meriaudeau, F.; Karnowski, T.P.; Li, Y.; Garg, S.; Tobin, K.W.; Chaum, E. Exudate-based diabetic macular edema detection in fundus images using publicly available datasets. Med. Image Anal. 2012, 16, 216–226. [Google Scholar] [CrossRef] [PubMed]

- Köhler, T.; Budai, A.; Kraus, M.F.; Odstrcilik, J.; Michelson, G.; Hornegger, J. Automatic no-reference quality assessment for retinal fundus images using vessel segmentation. In Proceedings of the 26th IEEE International Symposium on Computer-Based Medical Systems, Porto, Portugal, 20–22 June 2013. [Google Scholar] [CrossRef]

- Budai, A.; Bock, R.; Maier, A.; Hornegger, J.; Michelson, G. Robust Vessel Segmentation in Fundus Images. Int. J. Biomed. Imaging 2013, 2013, 1–11. [Google Scholar] [CrossRef]

- Porwal, P.; Pachade, S. Indian Diabetic Retinopathy Image Dataset (IDRiD). 2018. [Google Scholar] [CrossRef]

- Niemeijer, M.; Xu, X.; Dumitrescu, A.V.; Gupta, P.; van Ginneken, B.; Folk, J.C.; Abramoff, M.D. Automated Measurement of the Arteriolar-to-Venular Width Ratio in Digital Color Fundus Photographs. IEEE Trans. Med. Imaging 2011, 30, 1941–1950. [Google Scholar] [CrossRef]

- Tang, L.; Garvin, M.K.; Lee, K.; Alward, W.L.W.; Kwon, Y.H.; Abramoff, M.D. Robust Multiscale Stereo Matching from Fundus Images with Radiometric Differences. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2245–2258. [Google Scholar] [CrossRef]

- Fu, H.; Li, F.; Orlando, J.I.; Bogunović, H.; Sun, X.; Liao, J.; Xu, Y.; Zhang, S.; Zhang, X. ADAM: Automatic Detection Challenge on Age-Related Macular Degeneration. 2020. [Google Scholar] [CrossRef]

- Li, L.; Xu, M.; Wang, X.; Jiang, L.; Liu, H. Attention Based Glaucoma Detection: A Large-scale Database and CNN Model. arXiv 2019, arXiv:1903.10831. [Google Scholar]

- Decencière, E.; Zhang, X.; Cazuguel, G.; Lay, B.; Cochener, B.; Trone, C.; Gain, P.; Ordonez, R.; Massin, P.; Erginay, A.; et al. Feedback on a publicly distributed image database: The Messidor database. Image Anal. Stereol. 2014, 33, 231. [Google Scholar] [CrossRef]

- Kovalyk, O.; Morales-Sánchez, J.; Verdú-Monedero, R.; Sellés-Navarro, I.; Palazón-Cabanes, A.; Sancho-Gómez, J.L. PAPILA. 2022. [Google Scholar] [CrossRef]

- Orlando, J.I.; Fu, H.; Breda, J.B.; van Keer, K.; Bathula, D.R.; Diaz-Pinto, A.; Fang, R.; Heng, P.A.; Kim, J.; Lee, J.; et al. REFUGE Challenge: A unified framework for evaluating automated methods for glaucoma assessment from fundus photographs. Med. Image Anal. 2020, 59, 101570. [Google Scholar] [CrossRef]

- Almazroa, A. Retinal Fundus Images for Glaucoma Analysis: The Riga Dataset. 2018. [Google Scholar] [CrossRef]

- Hu, Q.; Abràmoff, M.D.; Garvin, M.K. Automated Separation of Binary Overlapping Trees in Low-Contrast Color Retinal Images. In Medical Image Computing and Computer-Assisted Intervention (MICCAI 2013); Springer: Berlin/Heidelberg, Germany, 2013; pp. 436–443. [Google Scholar] [CrossRef]

- Adal, K.M.; van Etten, P.G.; Martinez, J.P.; van Vliet, L.J.; Vermeer, K.A. Accuracy Assessment of Intra- and Intervisit Fundus Image Registration for Diabetic Retinopathy Screening. Investig. Ophthalmol. Vis. Sci. 2015, 56, 1805–1812. [Google Scholar] [CrossRef] [PubMed]

- Grisan, E.; Foracchia, M.; Ruggeri, A. A Novel Method for the Automatic Grading of Retinal Vessel Tortuosity. IEEE Trans. Med. Imaging 2008, 27, 310–319. [Google Scholar] [CrossRef] [PubMed]

- Lin, L.; Li, M.; Huang, Y.; Cheng, P.; Xia, H.; Wang, K.; Yuan, J.; Tang, X. The SUSTech-SYSU dataset for automated exudate detection and diabetic retinopathy grading. Sci. Data 2020, 7, 409. [Google Scholar] [CrossRef] [PubMed]

- Vázquez, S.G.; Cancela, B.; Barreira, N.; Penedo, M.G.; Rodríguez-Blanco, M.; Seijo, M.P.; de Tuero, G.C.; Barceló, M.A.; Saez, M. Improving retinal artery and vein classification by means of a minimal path approach. Mach. Vis. Appl. 2012, 24, 919–930. [Google Scholar] [CrossRef]

- Estrada, R.; Allingham, M.J.; Mettu, P.S.; Cousins, S.W.; Tomasi, C.; Farsiu, S. Retinal Artery-Vein Classification via Topology Estimation. IEEE Trans. Med. Imaging 2015, 34, 2518–2534. [Google Scholar] [CrossRef] [PubMed]

- Resnikoff, S.; Felch, W.; Gauthier, T.M.; Spivey, B. The number of ophthalmologists in practice and training worldwide: A growing gap despite more than 200,000 practitioners. Br. J. Ophthalmol. 2012, 96, 783–787. [Google Scholar] [CrossRef]

- Holst, C.; Sukums, F.; Radovanovic, D.; Ngowi, B.; Noll, J.; Winkler, A.S. Sub-Saharan Africa—the new breeding ground for global digital health. Lancet Digit. Health 2020, 2, e160–e162. [Google Scholar] [CrossRef]

- Müller, S.; Koch, L.M.; Lensch, H.; Berens, P. A Generative Model Reveals the Influence of Patient Attributes on Fundus Images. In Proceedings of the Medical Imaging with Deep Learning, Zurich, Switzerland, 6–8 July 2022. [Google Scholar]

- Ting, D.S.W.; Cheung, C.Y.L.; Lim, G.; Tan, G.S.W.; Quang, N.D.; Gan, A.; Hamzah, H.; Garcia-Franco, R.; Yeo, I.Y.S.; Lee, S.Y.; et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA 2017, 318, 2211. [Google Scholar] [CrossRef] [PubMed]

- Serener, A.; Serte, S. Geographic variation and ethnicity in diabetic retinopathy detection via deep learning. Turk. J. Electr. Eng. Comput. Sci. 2020, 28, 664–678. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).