1. Introduction

The number of people with diabetes mellitus (DM) is rapidly increasing, with up to 642 million cases expected by 2040 [1,2]. More than 40% of these diagnosed persons will develop retinopathy. Diabetic retinopathy (DR) and diabetic macular edema (DME) are the main ophthalmological complications of DM, with DR being the leading cause of blindness and visual disability in the working-age population. The risk of such vision loss can be reduced by annual retinal screening and early retinopathy detection to refer cases for follow-up and treatment. The necessary fundus photographs for such screening can be easily obtained non-invasively in an outpatient setting. Implementing a nationwide screening program based on fundus photography resulted in DR no longer being the leading cause of blindness certification in the United Kingdom [3,4,5].

However, as long as an ophthalmologist interprets retinal images manually, this screening procedure will always be labor-intensive and expensive, thereby complicating large-scale accessible implementation in many countries. New technologies facilitate the development of care solutions that keep our health system manageable and affordable, especially for diseases of affluence such as DM and associated eye health complications. To realize this ambition, experts in technology and medicine collaborate on solutions to reduce the workload caused by manual grading, a task for which artificial intelligence (AI) is well suited [4,5].

AI research in healthcare is accelerating, with applications achieving human-level performance across various fields of medicine. The use of AI can range from organizational help to surgical applications, with image classification for diagnostic support being one of the main areas of interest [6,7]. IB Neuro™ (Imaging Biometrics, Elm Grove, WI, USA), an application for detecting brain tumors on MRI images, became the first FDA-approved AI application in 2008. Multiple applications have been approved since then, many in medical imaging domains such as radiology. Some applications go beyond diagnosis and enter therapeutic fields such as radiotherapy [7].

Deep learning, a subtype of AI, was introduced relatively recently for the automated analysis and classification of images. In 2016, Gulshan et al. published a landmark paper on a deep learning algorithm with high sensitivity and specificity to classify referable DR [8]. Later papers showed that the diagnostic accuracy of deep learning algorithms is at least comparable to the assessments done by clinicians [9,10,11,12]. In 2018, Abràmoff and colleagues published their paper on an autonomous AI-based diagnostic system for detecting diabetic retinopathy, IDx-DR (Digital Diagnostics, Coralville, IA, USA). This work led to the first FDA-permitted marketing of an AI-based medical device for automated DR referral [13]. Since then, multiple AI devices have been developed around the world [14].

These developments are exciting, but the clinical community is not yet widely adopting the new tools. Several bottlenecks underlie this hesitation. First, most algorithms are reported by the scientific community and have not been developed into easy-to-use software for primary or secondary care. Second, algorithms mostly report on DR performance, but when considering diabetic eye screening, both DR and DME are relevant. Third, the performance evaluation of the algorithms is done under limited test conditions. Finally, discussions are ongoing at different levels in the healthcare sector about the medico-legal position of AI-based screening and its integration into the patient care path.

AI accomplishes a specific task on previously curated data, typically from one setting. Ideally, datasets to develop an algorithm are sufficiently diverse to represent the population, with metadata such as age, ethnicity, and sex to allow for performance analysis. In reality, health data lack standardization and contain a bias due to variance in human grading. The actual patient populations are more diverse than those in commonly used datasets [15,16]. Medical data with high-quality labels are challenging to collect, and the General Data Protection Regulation (GDPR) and other privacy-preserving regulations restrict medical data usage. Therefore, most AI models are trained with datasets that have limited heterogeneity, and their predictions often do not generalize to different populations or settings. Analyses on subpopulations (e.g., ethnicity) are seldom done, leaving uncertainty about whether model performance can be reliably extrapolated to new, unseen patient populations [17]. As a result, the performance promised in scientific publications is often not reached in clinical practice, and existing inequalities and biases in healthcare might be exacerbated [17]. Some of these problems can be overcome by executing a prospective clinical trial incorporating pre-chosen metadata and ensuring a relevant distribution amongst specific subpopulations [13]. However, this is a time-consuming and expensive solution, and this approach only allows model evaluation in a limited number of clinical centers.

International organizations such as the International Diabetes Federation, the International Council of Ophthalmology, the World Council of Optometry, and the International Agency for the Prevention of Blindness support the vast clinical need for widespread and convenient eye health screening tools for persons with diabetes as part of integrated diabetes care [18]. From this perspective, we present an evaluation of a diabetic eye screening software available as a certified medical device for automated DR and DME detection. We report the performance of the deep learning model underlying the software using private and publicly available datasets. Using stratification analyses, we studied the performance in predefined subgroups based on clinically relevant parameters, thereby taking an essential step toward improving the model evaluation process and its robustness during deployment.

4. Discussion

We report a systematic retrospective evaluation of the MONA.health diabetic eye screening software, which analyzes fundus images using artificial intelligence and summarizes DR and DME classification outputs as a single result to assess the patient referral status. Our investigations were performed on a large, multi-center, private test set from a US-based screening network and on publicly available datasets regularly used to benchmark diabetic eye detection algorithms. On the private test set, the software achieved 90.91% sensitivity and 94.24% specificity for referring a person because of DR or DME. These values are higher than the pre-specified superiority endpoints of 85% sensitivity and 82.5% specificity proposed in the work of Abràmoff and coworkers [13]. Note that the latter values apply to a prospective study, whereas we performed a retrospective study. Nevertheless, our performance is comparable to previously published work [13,34,35,36,37,38,39,40,41]. Our study adds value to the research field by reporting the results of data stratification to study differences in model performance in subpopulations. Such an analysis is essential to assess the usability of the software in clinical practice, thereby providing a starting point for better insights into potential hurdles when incorporating AI-based decision support software in clinical practice.
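A point estimate that lies above an endpoint can still be compatible with it once sampling error is taken into account. One common way to check this is to compare the lower bound of a confidence interval with the endpoint. The following is a minimal sketch using the Wilson score interval; it is not the statistical procedure used in this study, and the function names and the counts in the example are hypothetical, chosen only for illustration.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, total: int, z: float = 1.96) -> Tuple[float, float]:
    """Two-sided Wilson score interval for a binomial proportion.

    z = 1.96 corresponds to an approximate 95% confidence level.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half_width, centre + half_width


def exceeds_endpoint(successes: int, total: int, endpoint: float) -> bool:
    """True when the lower confidence bound lies above the endpoint."""
    lower, _ = wilson_interval(successes, total)
    return lower > endpoint


# Hypothetical counts (NOT taken from the study): 900 of 990 referable
# patients correctly referred, compared against an 85% sensitivity endpoint.
print(wilson_interval(900, 990))
print(exceeds_endpoint(900, 990, 0.85))
```

The same check applies to specificity by passing the number of correctly non-referred patients and the number of non-referable patients.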

All DR grades beyond mild DR are considered referable and justify a physical examination by an ophthalmologist. However, the higher the retinopathy grade, the higher the risk of vision loss and the more urgent the need for referral. Therefore, high sensitivities are even more critical for detecting severe non-proliferative DR and proliferative DR. Sensitivities of 99.39 and 99.54% were obtained for these cases of vision-threatening DR, indicating that the vast majority of cases will be accurately referred by the software. A substantial difference in PPV, the probability that subjects with a positive screening test truly have the disease, is noted when considering the diagnosis of DME (68.75%) compared to DR (94.14%). This difference is likely attributed to the lower disease prevalence of DME (11.76%) in the test set.
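The dependence of PPV on prevalence follows directly from Bayes' rule and can be sketched in a few lines. The sensitivity and specificity used below are hypothetical placeholders, not the DME values of this study; only the 11.76% prevalence is taken from the text, and the 50% prevalence is an arbitrary comparison point.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value from sensitivity, specificity, and prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    if true_pos + false_pos == 0.0:
        raise ValueError("no positive test results are possible with these inputs")
    return true_pos / (true_pos + false_pos)


# Hypothetical operating point (assumption, for illustration only).
SENS, SPEC = 0.90, 0.94

# Same test characteristics, two different prevalences.
print(ppv(SENS, SPEC, 0.1176))  # prevalence of DME reported for the test set
print(ppv(SENS, SPEC, 0.50))    # arbitrary higher prevalence for comparison
```

With sensitivity and specificity held fixed, the PPV falls as prevalence falls, because false positives from the larger disease-free group make up a growing share of all positive results.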

The performance was analyzed on the publicly available Kaggle, Messidor-2, and Messidor-2 Iowa reference datasets. The algorithm has a robust performance, with only slight decreases in AUC and sensitivity for DR on the publicly available Kaggle test set. This observation may be attributed to the fact that the Kaggle test set only contains images dating before 2015 [29]. We observed a comparable decrease in sensitivity in our test set for older images (Figure 2C). For the Messidor-2 dataset, AUC values are comparable to those reported on the test set for the regular and Iowa reference. However, a decrease in specificity and an increase in sensitivity are noted for DR. This rebalance between sensitivity and specificity indicates that the chosen threshold is suboptimal for this specific dataset. These findings are consistent with those of Gulshan et al. [8]. A possible explanation for this shift in operating point is the homogeneity of the dataset (one camera type and only patients from France with a less diverse ethnic mix) [30,31]. However, the chosen threshold might still result in a performance closer to the 90% sensitivity operating point in a more variable real-life setting than shown on the Messidor-2 dataset. This hypothesis is supported by the analysis results on the more extensive test set. A decreased sensitivity for DME is observed on the Messidor-2 data compared to our own test set. A shift in operating point is the most likely explanation for this observation. This effect is larger in the Iowa reference labeling. This might be attributed to a difference in labeling between these two references. For the same images, the patient level prevalence is 21.7% in the Iowa labeling [32] compared to 30.9% in the standard labeling (calculated based on [8]).

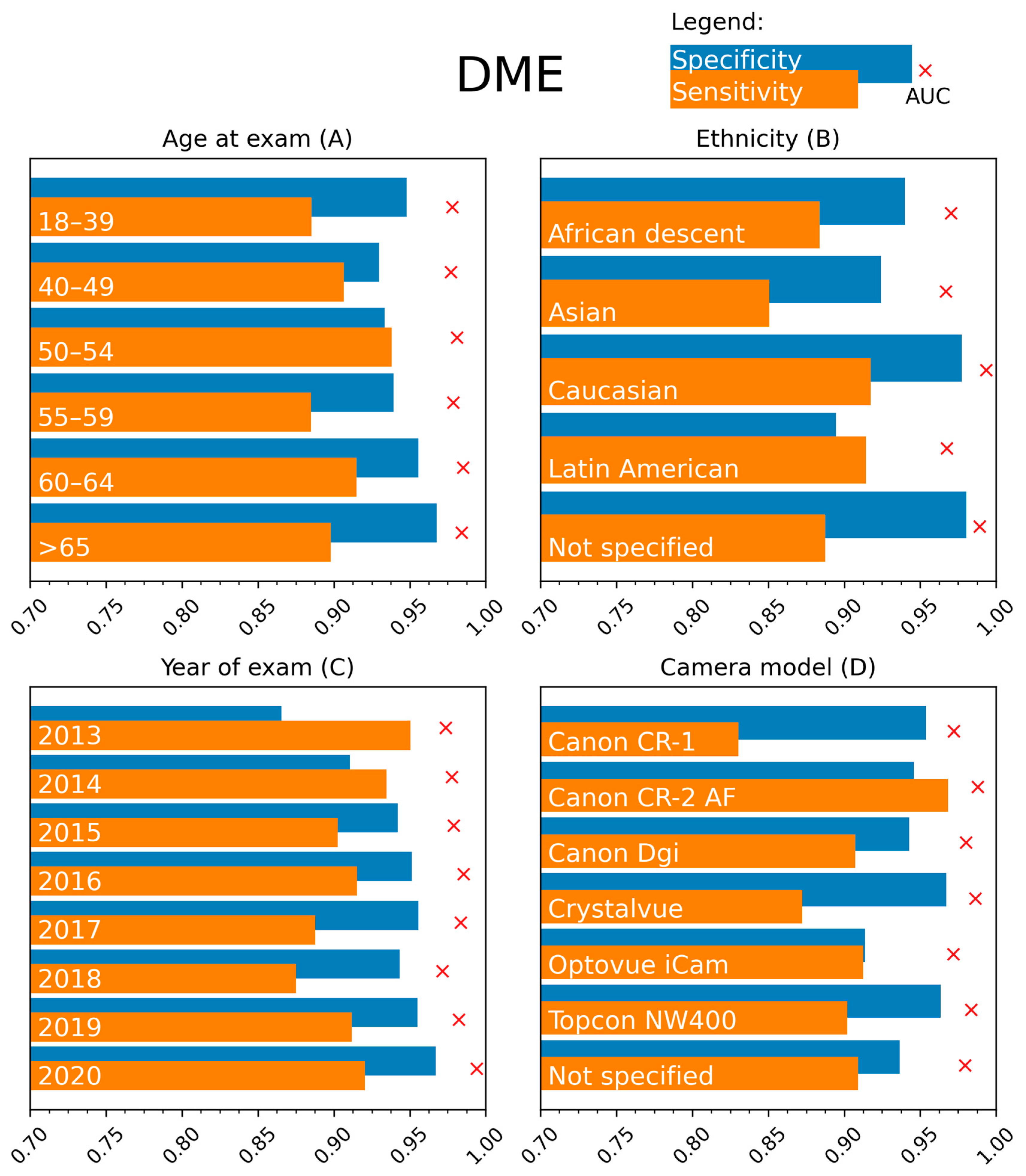

The performance evaluations of AI algorithms detecting DR and DME can yield good results, but guaranteeing high model performance for all relevant subpopulations is still a significant challenge. We performed an extensive stratification analysis in the current study to investigate possible differences in performance. To our knowledge, such an evaluation has not been reported previously.

The algorithm’s performance for DR and DME classification was stable in the different age categories up to 65 years. Beyond the patient age of 65 years, a decrease in sensitivity for DR detection to 82.51% was recorded. Acquiring high-quality fundus images can be more challenging in the elderly due to patient-related factors such as corneal changes, vitreous floaters, and cataract formation. However, the lower sensitivity in DR detection cannot be solely attributed to this factor since no remarkable differences were noted in the stratification analysis based on image quality. No alternative explanations could be found based on the performed stratifications.

The MONA.health software is registered as diabetic eye screening software in Europe. Of note, ethnicity distribution is different between the European and USA-based populations of the private test set. An ethnicity stratification was performed to aid the software performance evaluation. The biggest relevant deviations were found for sensitivity in the Caucasian subgroup (84.03%) and specificity in the Latin American subgroup (91.33%). A detailed analysis of the positive cases in this Caucasian group was done, showing that for Caucasians, 33.3% of the referable cases are based on the presence of retinal hemorrhages with/without micro-aneurysms without any other lesion types (such as cotton wool spots, hard exudates, IRMA, venous beading, new vessels, fibrous proliferation, preretinal, or vitreous hemorrhage). By comparison, this is only the case in 23.5% of all non-Caucasian cases and 22.2% of Latin American cases (the largest subgroup amongst positive cases). We assume DR detection is more difficult in the Caucasian population due to a lower prevalence of other signs besides hemorrhages. Our medical retina experts’ analysis of all Caucasian false negatives revealed that dust spots and shadows had been mislabeled as hemorrhages. Previous research showed that artifacts might be an important reason for intra- and interobserver variability and mislabeling [15]. Nevertheless, the achieved performances remain above the non-inferiority hypothesis [13].

The prevalence of referable DR is higher in the Latin American population than in the Caucasian population [42]. Increased prevalence may be associated with a higher likelihood of more severe disease, which is more easily detected [43,44]. This might contribute to the observed differences. Furthermore, 30% of patients are of “unspecified” ethnic origin, making many images unavailable for the stratification analysis. A drop in specificity for the Latin American subgroup is observed. Considering that the AUC remains high in this group, this observation may indicate that there is a more optimal threshold for this subgroup. The high disease prevalence might reinforce this effect in this subgroup. Sensitivity and specificity metrics for the Asian and African subgroups should be interpreted cautiously. The sample size of these two groups was under the minimal sample size of 541, making it hard to draw any meaningful conclusion.

Multiple parameters were explored to stratify the analysis for disease severity. Due to the low quality of specific labels such as HbA1c values and years since diagnosis (missing data, impossible values), these parameters were not kept for analysis. Therefore, insulin dependency was selected as a surrogate parameter for disease severity. This stratification showed a difference in the sensitivity/specificity balance for DR (93.94/88.55% vs. 87.81/96.22%), meaning that the ideal operating point for 90% sensitivity differs between the two groups. In real life, only one threshold can be used, and a mix of insulin-dependent and non-insulin-dependent patients is expected, balancing the differences between both groups.

Considering the year of examination, intuitively, one would expect a lower performance when analyzing older images since image quality, resolution, and ease of use have increased over the years due to technological improvements. This statement appears to hold for DR. However, for DME, increased sensitivity and decreased specificity are seen for the older images. At the same time, the AUC remained high, indicating that older images might also benefit from a different threshold. No notable discrepancies between results were recorded when considering camera type. Regarding DME, a lower sensitivity was observed for the Canon CR-1 camera (Canon, Tokyo, Japan).

This study comes with strengths and limitations. We report the performance of the MONA.health software that uses one fundus image of the left eye and one of the right eye to generate a report about the patient’s referral status for DR and DME. One fundus image per eye results in higher patient comfort and lower operational costs, making the software easy to use. This software was developed explicitly for diabetic eye screening, and its operational settings balance sensitivity, specificity, and cost-effectiveness [45]. The referral threshold was computed and then fixed for subsequent use in the software [45]. An additional study strength is the evaluation of the software using a sizable private test set and publicly available datasets. Furthermore, the stratification analysis investigated the diagnostic performance of such an AI-based algorithm for the first time. Overall, we report stable high-performance results using widely used metrics such as AUC, sensitivity, and specificity. We highlight the importance of stratification from a research and clinical perspective by illustrating potential hurdles to overcome before implementing AI in daily practice. The stratification illustrates that comparisons based on AUC can be deceiving since most strata have a very high AUC, but the resulting performances for a predefined threshold may shift. In a production setting, one cannot tailor this threshold to the specific needs of the context since this would require a new and elaborate validation study to prove effectiveness [45].
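The observation that strata can share a high AUC while differing in sensitivity and specificity at one fixed threshold can be illustrated with a small sketch. The code below is not the evaluation pipeline of this study; the function names, the toy scores, and the threshold of 0.5 are assumptions made purely for illustration.

```python
from collections import defaultdict


def auc(scores, labels):
    """AUC as the probability that a positive outranks a negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        return float("nan")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when scores >= threshold are called positive."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec


def stratified_report(records, threshold):
    """records: iterable of (stratum, score, label); one fixed threshold for all strata."""
    grouped = defaultdict(lambda: ([], []))
    for stratum, score, label in records:
        grouped[stratum][0].append(score)
        grouped[stratum][1].append(label)
    report = {}
    for stratum, (scores, labels) in grouped.items():
        sens, spec = sens_spec(scores, labels, threshold)
        report[stratum] = {"auc": auc(scores, labels),
                           "sensitivity": sens, "specificity": spec}
    return report


# Toy data (hypothetical): both strata separate positives from negatives
# perfectly, but the scores in stratum "B" are shifted downward.
records = (
    [("A", s, 1) for s in (0.6, 0.7, 0.8, 0.9)]
    + [("A", s, 0) for s in (0.1, 0.2, 0.3, 0.4)]
    + [("B", s, 1) for s in (0.45, 0.55, 0.65, 0.75)]
    + [("B", s, 0) for s in (0.05, 0.15, 0.25, 0.35)]
)
report = stratified_report(records, threshold=0.5)
for stratum, metrics in sorted(report.items()):
    print(stratum, metrics)
```

In this toy example both strata reach the same AUC, yet the fixed threshold misses one positive case in the stratum with shifted scores, which is the kind of discrepancy that only a per-stratum analysis at the deployed threshold reveals.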

The most critical limitation of stratification is that results depend on the quality of the initial labels, both for the ground truth of the diagnosis and for the metadata. Our research team obtained the private test set from the well-established EyePACS telemedicine platform. The EyePACS protocols for collecting fundus images and diabetic eye screening are reliable. However, the protocols were initially not designed to organize metadata for later use in a stratification analysis to assess AI-based image analysis. We noted several metadata quality problems during our study, such as impossible numerical values and missing data. A more robust, higher-quality dataset would be necessary to further improve research on this subject. Nonetheless, patient consent and privacy issues limit obtaining such a dataset, and a post hoc curation of an existing dataset is extremely difficult. A second limitation is the difference between prevalence in the dataset (48.8%) and real-life prevalence, of which reports vary but are considerably lower [46,47,48,49,50,51]. We considered correcting for this difference in our study but decided not to rebalance the dataset in order to maintain a sufficient number of images. Finally, prospective studies and post-market clinical evaluations are needed to evaluate the performance of the MONA.health software further and support our conclusions. Such studies are currently underway and indexed as clinical trials NCT05260281 and NCT05391659.