Automated Speech Analysis in Bipolar Disorder: The CALIBER Study Protocol and Preliminary Results

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Sample

2.2.1. Hospital Clinic of Barcelona

2.2.2. Mayo Clinic

2.2.3. Both Recruiting Centers

2.3. Assessment

2.3.1. Sociodemographic and Clinical Assessment

Hospital Clinic of Barcelona

Mayo Clinic

2.3.2. Symptoms and Functional Assessment

Hospital Clinic of Barcelona

Mayo Clinic

2.4. Speech Recording

2.4.1. Recording Method

Hospital Clinic of Barcelona

Mayo Clinic

2.4.2. Language

2.4.3. Setting

2.4.4. Interview Format

- (i)

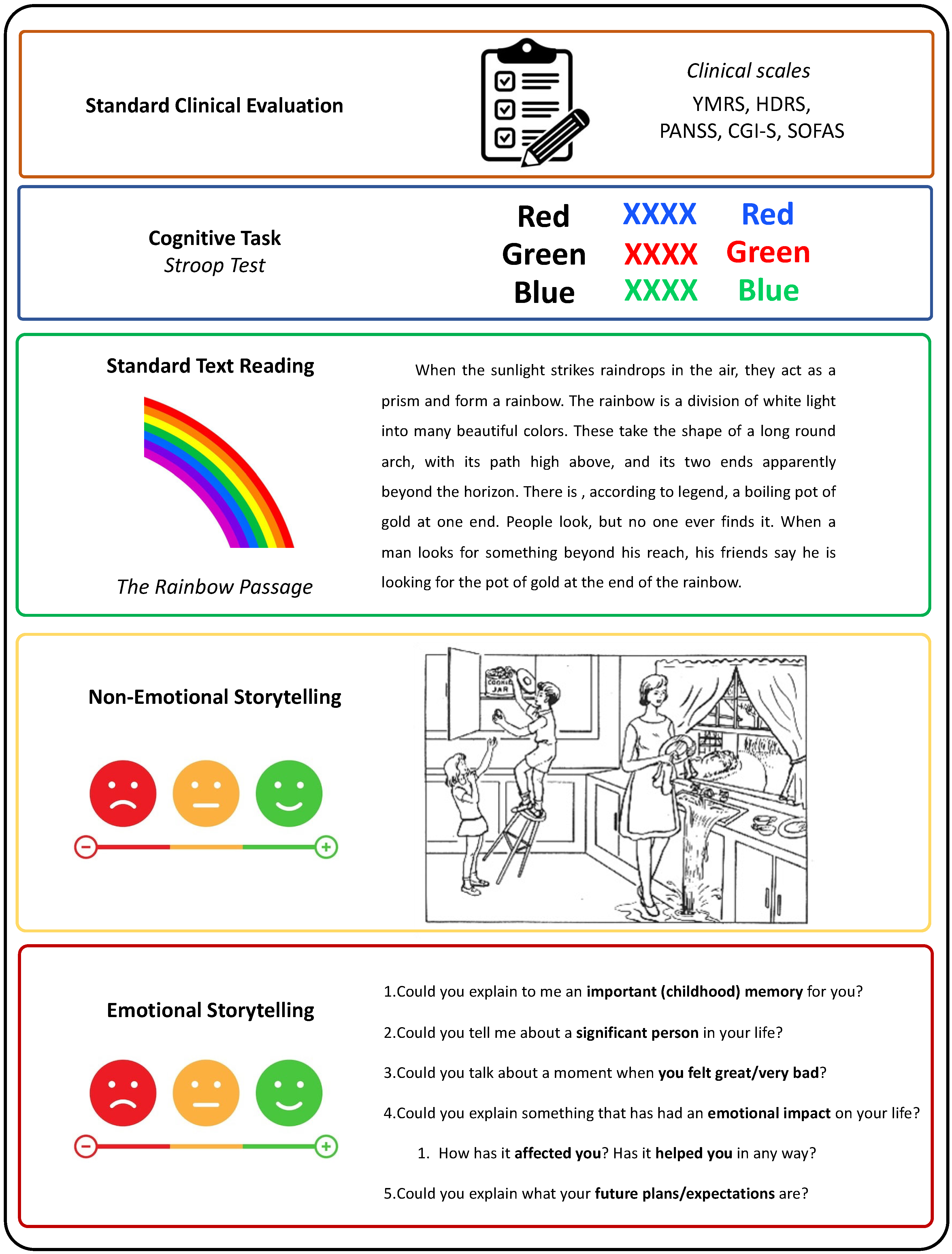

- Standard clinical evaluation—Participants were asked a variety of questions to complete the clinical scales for assessing psychopathological and functional states. Some of these scales include straightforward questions, such as item 16 from the HDRS, which asks about weight. Other items, like item 17 from the HDRS, require interpretation of responses to open-ended questions, similar to analyzing spontaneous speech. Clinical evaluations incorporating spontaneous speech have proven effective in detecting depression [51], identifying autism through acoustic feature analysis [52], and detecting manic states in BD [53];

- (ii) Cognitive task (Stroop test, approximately 3 min): Participants completed the Stroop test, which comprises three tasks. First, participants read aloud the names of colors printed in black ink. Second, they named the colors of the ink. Third, they performed the interference task, in which they named the ink color of a printed color word while ignoring the word itself (e.g., saying “red” when the word “blue” is printed in red ink). This test assesses executive function, specifically inhibition [54]. Mayo Clinic patients did not complete the Stroop test. The Stroop test has been used in previous studies of prosodic features in depression [55], of verbal task switching and unique sound-based associations in BD, ADHD, and HC [28], and of formal aspects of speech for discriminating between euthymia, mania, depression, and mixed episodes in BD [29];

- (iii) Standard text reading (approximately 2 min): Patients read aloud “The Rainbow Passage” [56], a 100-word excerpt commonly used by speech therapists to assess vocal ability. The Rainbow Passage has been used to evaluate voice acoustics as predictors of clinical depression scores [13] and to measure fundamental frequency before and after a stressful activity [57];

- (iv) Non-emotional storytelling (approximately 3 min): Patients described the Cookie Theft picture, a visual scene from the Boston Diagnostic Aphasia Examination (BDAE) [58]. This image was chosen because it evokes minimal emotional response. Patients were instructed to describe the image in as much detail as they could for at least one minute. If their response lacked sufficient content, supplementary prompts were given (e.g., “Please detail the steps for frying an egg, buttoning a button, putting on a shirt, or smoking a cigarette”). Non-emotional storytelling has been used to quantify [23] and automatically detect [22] incoherent speech in schizophrenia, and to measure formal thought disorder in schizophrenia through picture description [59];

- (v) Emotional storytelling (approximately 3 min): Patients were encouraged to recount emotionally significant autobiographical experiences, such as important childhood memories, significant people in their lives, moments of intense happiness or distress, and future plans and expectations, and to reflect on how these experiences had affected them. Emotional storytelling has been used to distinguish patients with schizophrenia from HC [60]. Furthermore, Mota et al. (2014) [26] showed that dream reports, which carry emotional content, differentiated effectively between patients with BD, patients with schizophrenia, and HC, and that speech with emotional content was more valuable for this discrimination than speech without an emotional component.

2.5. Data Analysis

2.5.1. Preprocessing

2.5.2. Feature Extraction

2.5.3. Statistical Analysis

- Continuous Quantification of Psychopathology: Correlation of speech features with clinical scales assessing symptom severity for mania (YMRS), depression (HDRS-17), and psychosis (PANSS), including both global scores and specific items/symptoms;

- Categorical Classification: Developing predictive models from the speech features for diagnostic outcomes (i.e., manic, depressive, and euthymic phases in BD) and treatment outcomes (i.e., acute phases of mania/depression vs. response phases). For these classification tasks, we will employ lasso logistic regression;

- Feature and Task Relevance Identification: The relevance of specific speech tasks and features (or combinations thereof) will be determined for each diagnostic and treatment outcome task. Variable relevance methods will be used to identify the most pertinent features, and the magnitudes of the correlations and the prediction accuracies obtained with each speech task will be compared to identify the most informative tasks for the analyses above.
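The protocol specifies lasso logistic regression for classification and variable relevance for feature selection, but does not describe an implementation. The following is a minimal, self-contained sketch under stated assumptions: a binary outcome (e.g., acute episode vs. euthymia), z-scored features, a fixed penalty `lam` instead of a cross-validated one, and purely synthetic data. It fits an L1-penalized logistic regression by proximal gradient descent (ISTA) and treats features with nonzero coefficients as the relevant ones. All function and feature names are hypothetical. In practice a library implementation, such as scikit-learn's `LogisticRegression(penalty="l1", solver="saga")` with its regularization strength `C` tuned by cross-validation, would be the more usual choice. The three-class diagnostic task (manic, depressive, euthymic) would also need a multinomial or one-vs-rest extension.

```python
import math
from typing import List, Sequence, Tuple


def standardize(X: Sequence[Sequence[float]]) -> List[List[float]]:
    """Z-score each column so the L1 penalty acts on all features on a common scale."""
    n, p = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(p)]
    # Population SD; a constant column gets SD 1.0 to avoid division by zero.
    sds = [
        math.sqrt(sum((row[j] - means[j]) ** 2 for row in X) / n) or 1.0
        for j in range(p)
    ]
    return [[(row[j] - means[j]) / sds[j] for j in range(p)] for row in X]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_threshold(v: float, t: float) -> float:
    """Proximal operator of t*|v|; this is what sets weak coefficients exactly to zero."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def fit_lasso_logistic(
    X: Sequence[Sequence[float]], y: Sequence[int],
    lam: float = 0.05, lr: float = 0.1, n_iter: int = 2000,
) -> Tuple[List[float], float]:
    """Minimize mean log-loss + lam * ||w||_1 by proximal gradient descent (ISTA).

    The intercept is not penalized. lr must not exceed 1/L, where L is the
    Lipschitz constant of the log-loss gradient.
    """
    n, p = len(X), len(X[0])
    w, b = [0.0] * p, 0.0
    for _ in range(n_iter):
        # Residuals: predicted probability minus observed label.
        r = [sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi for xi, yi in zip(X, y)]
        grad_w = [sum(r[i] * X[i][j] for i in range(n)) / n for j in range(p)]
        grad_b = sum(r) / n
        # Gradient step on the smooth loss, then shrink each weight toward zero.
        w = [soft_threshold(w[j] - lr * grad_w[j], lr * lam) for j in range(p)]
        b -= lr * grad_b
    return w, b


# Synthetic, hypothetical data: one informative feature and two pure-noise features.
feature_names = ["f_informative", "f_noise_1", "f_noise_2"]
X_raw, y = [], []
for level in [-1.5, -0.5, 0.5, 1.5]:
    for noise_1, noise_2 in [(1.0, 1.0), (-1.0, 1.0), (1.0, -1.0), (-1.0, -1.0)]:
        X_raw.append([level, noise_1, noise_2])
        y.append(1 if level > 0 else 0)  # e.g., 1 = acute episode, 0 = euthymia

X = standardize(X_raw)
weights, intercept = fit_lasso_logistic(X, y)
# Feature relevance: the features whose coefficient survived the L1 penalty.
selected = [name for name, w in zip(feature_names, weights) if w != 0.0]
print(selected)  # → ['f_informative']
```

The noise features in this toy design are exactly uncorrelated with the outcome, so the penalty shrinks their coefficients to exactly zero. With real speech features, the selected set depends strongly on `lam`, which is why the penalty is normally chosen by cross-validation.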

2.5.4. Code and Data Availability

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- APA. Diagnostic and Statistical Manual of Mental Disorders: DSM-5, 5th ed.; APA: Arlington, VA, USA, 2013.

- Freedman, R.; Lewis, D.A.; Michels, R.; Pine, D.S.; Schultz, S.K.; Tamminga, C.A.; Gabbard, G.O.; Gau, S.S.-F.; Javitt, D.C.; Oquendo, M.A.; et al. The initial field trials of DSM-5: New blooms and old thorns. Am. J. Psychiatry 2013, 170, 1–5.

- Hidalgo-Mazzei, D.; Young, A.H. Psychiatry foretold. Aust. N. Z. J. Psychiatry 2019, 53, 365–366.

- Nierenberg, A.A.; Agustini, B.; Köhler-Forsberg, O.; Cusin, C.; Katz, D.; Sylvia, L.G.; Peters, A.; Berk, M. Diagnosis and Treatment of Bipolar Disorder: A Review. JAMA 2023, 330, 1370–1380.

- Dikaios, K.; Rempel, S.; Dumpala, S.H.; Oore, S.; Kiefte, M.; Uher, R. Applications of Speech Analysis in Psychiatry. In Harvard Review of Psychiatry; Lippincott Williams and Wilkins: Philadelphia, PA, USA, 2023; pp. 1–13.

- Vanello, N.; Guidi, A.; Gentili, C.; Werner, S.; Bertschy, G.; Valenza, G.; Lanatá, A.; Scilingo, E.P. Speech analysis for mood state characterization in bipolar patients. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, San Diego, CA, USA, 28 August–1 September 2012; pp. 2104–2107.

- Guidi, A.; Schoentgen, J.; Bertschy, G.; Gentili, C.; Landini, L.; Scilingo, E.P.; Vanello, N. Voice quality in patients suffering from bipolar disease. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6106–6109.

- Low, D.M.; Bentley, K.H.; Ghosh, S.S. Automated assessment of psychiatric disorders using speech: A systematic review. Laryngoscope Investig. Otolaryngol. 2020, 5, 96–116.

- Carrillo, F.; Mota, N.; Copelli, M.; Ribeiro, S.; Sigman, M.; Cecchi, G.; Slezak, D.F. Emotional intensity analysis in bipolar subjects. arXiv 2016, arXiv:1606.02231.

- Young, R.C.; Biggs, J.T.; Ziegler, V.E.; Meyer, D.A. A rating scale for mania: Reliability, validity and sensitivity. Br. J. Psychiatry 1978, 133, 429–435.

- Hamilton, M. A rating scale for depression. J. Neurol. Neurosurg. Psychiatry 1960, 23, 56–62.

- DeSouza, D.D.; Robin, J.; Gumus, M.; Yeung, A. Natural Language Processing as an Emerging Tool to Detect Late-Life Depression. Front. Psychiatry 2021, 12, 719125.

- Hashim, N.W.; Wilkes, M.; Salomon, R.; Meggs, J.; France, D.J. Evaluation of Voice Acoustics as Predictors of Clinical Depression Scores. J. Voice 2017, 31, 256.e1–256.e6.

- Mundt, J.C.; Snyder, P.J.; Cannizzaro, M.S.; Chappie, K.; Geralts, D.S. Voice acoustic measures of depression severity and treatment response collected via interactive voice response (IVR) technology. J. Neurolinguist. 2007, 20, 50–64.

- Zhang, J.; Pan, Z.; Gui, C.; Xue, T.; Lin, Y.; Zhu, J.; Cui, D. Analysis on speech signal features of manic patients. J. Psychiatr. Res. 2018, 98, 59–63.

- Covington, M.A.; Lunden, S.L.A.; Cristofaro, S.L.; Wan, C.R.; Bailey, C.T.; Broussard, B.; Fogarty, R.; Johnson, S.; Zhang, S.; Compton, M.T. Phonetic measures of reduced tongue movement correlate with negative symptom severity in hospitalized patients with first-episode schizophrenia-spectrum disorders. Schizophr. Res. 2012, 142, 93–95.

- Moore, E., II; Clements, M.A.; Peifer, J.W.; Weisser, L. Critical analysis of the impact of glottal features in the classification of clinical depression in speech. IEEE Trans. Biomed. Eng. 2008, 55, 96–107.

- Ozdas, A.; Shiavi, R.G.; Silverman, S.E.; Silverman, M.K.; Wilkes, D.M. Investigation of vocal jitter and glottal flow spectrum as possible cues for depression and near-term suicidal risk. IEEE Trans. Biomed. Eng. 2004, 51, 1530–1540.

- Ooi, K.E.B.; Lech, M.; Allen, N.B. Multichannel weighted speech classification system for prediction of major depression in adolescents. IEEE Trans. Biomed. Eng. 2013, 60, 497–506.

- Pan, W.; Deng, F.; Wang, X.; Hang, B.; Zhou, W.; Zhu, T. Exploring the ability of vocal biomarkers in distinguishing depression from bipolar disorder, schizophrenia, and healthy controls. Front. Psychiatry 2023, 14, 1079448.

- Faurholt-Jepsen, M.; Vinberg, M.; Christensen, E.M.; Kessing, L.V.; Busk, J.; Winther, O.; Bardram, J.E.; Frost, M. Voice analysis as an objective state marker in bipolar disorder. Transl. Psychiatry 2016, 6, e856.

- Iter, D.; Yoon, J.; Jurafsky, D. Automatic Detection of Incoherent Speech for Diagnosing Schizophrenia; Association for Computational Linguistics (ACL): Stroudsburg, PA, USA, 2018; pp. 136–146.

- Elvevåg, B.; Foltz, P.W.; Weinberger, D.R.; Goldberg, T.E. Quantifying incoherence in speech: An automated methodology and novel application to schizophrenia. Schizophr. Res. 2007, 93, 304–316.

- Bedi, G.; Carrillo, F.; Cecchi, G.A.; Slezak, D.F.; Sigman, M.; Mota, N.B.; Ribeiro, S.; Javitt, D.C.; Copelli, M.; Corcoran, C.M. Automated analysis of free speech predicts psychosis onset in high-risk youths. NPJ Schizophr. 2015, 1, 15030.

- Corcoran, C.M.; Carrillo, F.; Fernández-Slezak, D.; Bedi, G.; Klim, C.; Javitt, D.C.; Bearden, C.E.; Cecchi, G.A. Prediction of psychosis across protocols and risk cohorts using automated language analysis. World Psychiatry 2018, 17, 67–75.

- Mota, N.B.; Furtado, R.; Maia, P.P.C.; Copelli, M.; Ribeiro, S. Graph analysis of dream reports is especially informative about psychosis. Sci. Rep. 2014, 4, 3691.

- Elvevåg, B.; Foltz, P.W.; Rosenstein, M.; DeLisi, L.E. An automated method to analyze language use in patients with schizophrenia and their first-degree relatives. J. Neurolinguist. 2010, 23, 270–284.

- Martz, E.; Weibel, S.; Weiner, L. An overactive mind: Investigating racing thoughts in ADHD, hypomania and comorbid ADHD and bipolar disorder via verbal fluency tasks. J. Affect. Disord. 2022, 300, 226–234.

- Weiner, L.; Doignon-Camus, N.; Bertschy, G.; Giersch, A. Thought and language disturbance in bipolar disorder quantified via process-oriented verbal fluency measures. Sci. Rep. 2019, 9, 14282.

- Teixeira, A.S.; Talaga, S.; Swanson, T.J.; Stella, M. Revealing semantic and emotional structure of suicide notes with cognitive network science. Sci. Rep. 2021, 11, 19423.

- Swain, M.; Routray, A.; Kabisatpathy, P. Databases, features and classifiers for speech emotion recognition: A review. Int. J. Speech Technol. 2018, 21, 93–120.

- Khorram, S.; Jaiswal, M.; Gideon, J.; McInnis, M.; Provost, E.M. The PRIORI Emotion Dataset: Linking Mood to Emotion Detected In-the-Wild. arXiv 2018, arXiv:1806.10658.

- Carrillo, F.; Sigman, M.; Slezak, D.F.; Ashton, P.; Fitzgerald, L.; Stroud, J.; Nutt, D.J.; Carhart-Harris, R.L. Natural speech algorithm applied to baseline interview data can predict which patients will respond to psilocybin for treatment-resistant depression. J. Affect. Disord. 2018, 230, 84–86.

- Vieta, E. Personalised medicine applied to mental health: Precision psychiatry. Rev. Psiquiatr. Salud Ment. 2015, 8, 117–118.

- Lorenzon, N.; Dierssen, M. Diving into the precision psychiatry debate: How deep can we go? In European Neuropsychopharmacology; Elsevier B.V.: Amsterdam, The Netherlands, 2024; pp. 57–58.

- Provost, E.M.; Sperry, S.H.; Tavernor, J.; Anderau, S.; Yocum, A.; McInnis, M.G. Emotion Recognition in the Real-World: Passively Collecting and Estimating Emotions from Natural Speech Data of Individuals with Bipolar Disorder. IEEE Trans. Affect. Comput. 2024, preprint.

- Wadle, L.M.; Ebner-Priemer, U.W.; Foo, J.C.; Yamamoto, Y.; Streit, F.; Witt, S.H.; Frank, J.; Zillich, L.; Limberger, M.F.; Ablimit, A.; et al. Speech Features as Predictors of Momentary Depression Severity in Patients With Depressive Disorder Undergoing Sleep Deprivation Therapy: Ambulatory Assessment Pilot Study. JMIR Ment. Health 2024, 11, e49222.

- Aldeneh, Z.; Jaiswal, M.; Picheny, M.; McInnis, M.G.; Provost, E.M. Identifying Mood Episodes Using Dialogue Features from Clinical Interviews. Proc. Interspeech 2019, 1926–1930.

- Voleti, R.; Woolridge, S.M.; Liss, J.M.; Milanovic, M.; Stegmann, G.; Hahn, S.; Harvey, P.D.; Patterson, T.L.; Bowie, C.R.; Berisha, V. Language Analytics for Assessment of Mental Health Status and Functional Competency. Schizophr. Bull. 2023, 49, S183–S195.

- Anmella, G.; Corponi, F.; Li, B.M.; Mas, A.; Garriga, M.; Sanabra, M.; Pacchiarotti, I.; Valentí, M.; Grande, I.; Benabarre, A.; et al. Identifying digital biomarkers of illness activity and treatment response in bipolar disorder with a novel wearable device (TIMEBASE): Protocol for a pragmatic observational clinical study. BJPsych Open 2024, preprint.

- Corponi, F.; Li, B.M.; Anmella, G.; Mas, A.; Pacchiarotti, I.; Valentí, M.; Grande, I.; Benabarre, A.; Garriga, M.; Vieta, E.; et al. Automated mood disorder symptoms monitoring from multivariate time-series sensory data: Getting the full picture beyond a single number. Transl. Psychiatry 2024, 14, 161.

- Valenzuela-Pascual, C.; Mas, A.; Borràs, R.; Anmella, G.; Sanabra, M.; González-Campos, M.; Valentí, M.; Pacchiarotti, I.; Benabarre, A.; Grande, I.; et al. Sleep–wake variations of electrodermal activity in bipolar disorder. Acta Psychiatr. Scand. 2024, preprint.

- World Medical Association. World Medical Association Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Subjects. JAMA 2013, 310, 2191–2194.

- Fusar-Poli, P.; Manchia, M.; Koutsouleris, N.; Leslie, D.; Woopen, C.; Calkins, M.E.; Dunn, M.; Tourneau, C.L.; Mannikko, M.; Mollema, T.; et al. Ethical considerations for precision psychiatry: A roadmap for research and clinical practice. Eur. Neuropsychopharmacol. 2022, 63, 17–34.

- First, M.; Spitzer, R.; Gibbon, M.; Williams, J. Structured Clinical Interview for DSM-IV Axis I Disorders-Clinician (SCID-I); American Psychiatric Press: Washington, DC, USA, 1997.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders: DSM-5-TR, 5th ed.; American Psychiatric Association Publishing: Washington, DC, USA, 2022.

- Tohen, M.; Frank, E.; Bowden, C.L.; Colom, F.; Ghaemi, S.N.; Yatham, L.N.; Malhi, G.S.; Calabrese, J.R.; Nolen, W.A.; Vieta, E.; et al. The International Society for Bipolar Disorders (ISBD) Task Force report on the nomenclature of course and outcome in bipolar disorders. Bipolar Disord. 2009, 11, 453–473.

- Guy, W. ECDEU Assessment Manual for Psychopharmacology; US Department of Health, Education, and Welfare Publication (ADM); National Institute of Mental Health: Rockville, MD, USA, 1976. Available online: https://www.scirp.org/(S(351jmbntvnsjt1aadkposzje))/reference/ReferencesPapers.aspx?ReferenceID=1265746 (accessed on 12 April 2022).

- Morosini, P.L.; Magliano, L.; Brambilla, L.; Ugolini, S.; Pioli, R. Development, reliability and acceptability of a new version of the DSM-IV Social and Occupational Functioning Assessment Scale (SOFAS) to assess routine social funtioning. Acta Psychiatr. Scand. 2000, 101, 323–329.

- Wireless GO II | Dual Wireless Mic System | RØDE (No Date). Available online: https://rode.com/es/microphones/wireless/wirelessgoii (accessed on 26 May 2024).

- Alghowinem, S.; Goecke, R.; Wagner, M.; Epps, J.; Gedeon, T.; Breakspear, M.; Parker, G. A comparative study of different classifiers for detecting depression from spontaneous speech. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8022–8026.

- Bone, D.; Black, M.P.; Lee, C.-C.; Williams, M.E.; Levitt, P.; Lee, S.; Narayanan, S. Spontaneous-speech acoustic-prosodic features of children with autism and the interacting psychologist. Proc. Interspeech 2012, 1043–1046.

- Pan, Z.; Gui, C.; Zhang, J.; Zhu, J.; Cui, D. Detecting Manic State of Bipolar Disorder Based on Support Vector Machine and Gaussian Mixture Model Using Spontaneous Speech. Psychiatry Investig. 2018, 15, 695–700.

- Stroop, J.R. Studies of interference in serial verbal reactions. J. Exp. Psychol. 1935, 18, 643–662.

- Martínez, C.; Kontaxis, S.; Miguel, M.P.; García, E.; Siddi, S.; Aguiló, J.; Haro, J.M.; de la Cámara, C.; Bailón, R.; Ortega, A. Analysis of Prosodic Features During Cognitive Load in Patients with Depression. In Conversational Dialogue Systems for the Next Decade; Lecture Notes in Electrical Engineering; D'Haro, L.F., Callejas, Z., Nakamura, S., Eds.; Springer: Singapore, 2021; Volume 704.

- Fairbanks, G. Voice and Articulation Drillbook, 2nd ed.; Harper & Row: New York, NY, USA, 1960.

- Perrine, B.L.; Scherer, R.C. Aerodynamic and Acoustic Voice Measures Before and After an Acute Public Speaking Stressor. J. Speech Lang. Hear. Res. 2020, 63, 3311–3325.

- Fong, M.W.M.; Van Patten, R.; Fucetola, R.P. The Factor Structure of the Boston Diagnostic Aphasia Examination. J. Int. Neuropsychol. Soc. JINS 2019, 25, 772–776.

- Çokal, D.; Zimmerer, V.; Turkington, D.; Ferrier, N.; Varley, R.; Watson, S.; Hinzen, W. Disturbing the rhythm of thought: Speech pausing patterns in schizophrenia, with and without formal thought disorder. PLoS ONE 2019, 14, e0217404.

- Hong, K.; Nenkova, A.; March, M.E.; Parker, A.P.; Verma, R.; Kohler, C.G. Lexical use in emotional autobiographical narratives of persons with schizophrenia and healthy controls. Psychiatry Res. 2015, 225, 40–49.

- Bedi, G.; Cecchi, G.; Slezak, D.; Carrillo, F.; Sigman, M.; de Wit, H. A window into the intoxicated mind? Speech as an index of psychoactive drug effects. Neuropsychopharmacology 2014, 39, 2340–2348.

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. Proc. Mach. Learn. Res. 2022, 202, 28492–28518.

- Bredin, H. pyannote.audio 2.1 speaker diarization pipeline: Principle, benchmark, and recipe. In Proceedings of the 24th INTERSPEECH Conference (INTERSPEECH 2023), Dublin, Ireland, 20–24 August 2023; pp. 1983–1987.

- Yeung, A.; Iaboni, A.; Rochon, E.; Lavoie, M.; Santiago, C.; Yancheva, M.; Novikova, J.; Xu, M.; Robin, J.; Kaufman, L.D.; et al. Correlating natural language processing and automated speech analysis with clinician assessment to quantify speech-language changes in mild cognitive impairment and Alzheimer’s dementia. Alzheimer’s Res. Ther. 2021, 13, 109.

- Belouali, A.; Gupta, S.; Sourirajan, V.; Yu, J.; Allen, N.; Alaoui, A.; Dutton, M.A.; Reinhard, M.J. Acoustic and language analysis of speech for suicidal ideation among US veterans. BioData Min. 2021, 14, 11.

- de la Torre-Luque, A.; Pemau, A.; Ayad-Ahmed, W.; Borges, G.; Fernandez-Sevillano, J.; Garrido-Torres, N.; Garrido-Sanchez, L.; Garriga, M.; Gonzalez-Ortega, I.; Gonzalez-Pinto, A.; et al. Risk of suicide attempt repetition after an index attempt: A systematic review and meta-analysis. In General Hospital Psychiatry; Elsevier Inc.: Amsterdam, The Netherlands, 2023; pp. 51–56.

- Pemau, A.; Marin-Martin, C.; Diaz-Marsa, M.; de la Torre-Luque, A.; Ayad-Ahmed, W.; Gonzalez-Pinto, A.; Garrido-Torres, N.; Garrido-Sanchez, L.; Roberto, N.; Lopez-Peña, P.; et al. Risk factors for suicide reattempt: A systematic review and meta-analysis. Psychol. Med. 2024, preprint.

- Llach, C.D.; Vieta, E. Therapeutic inertia in psychiatry: Focus on practice-evidence gaps. Eur. Neuropsychopharmacol. 2023, 66, 64–65.

- Beltrami, D.; Gagliardi, G.; Rossini Favretti, R.; Ghidoni, E.; Tamburini, F.; Calzà, L. Speech analysis by natural language processing techniques: A possible tool for very early detection of cognitive decline? Front. Aging Neurosci. 2018, 10, 414837.

- Espinola, C.W. Detection of major depressive disorder, bipolar disorder, schizophrenia and generalized anxiety disorder using vocal acoustic analysis and machine learning: An exploratory study. Res. Biomed. Eng. 2022, 38, 813–829.

- Ryan, K.A.; Babu, P.; Easter, R.; Saunders, E.; Lee, A.J.; Klasnja, P.; Verchinina, L.; Micol, V.; Doil, B.; McInnis, M.G. A Smartphone App to Monitor Mood Symptoms in Bipolar Disorder: Development and Usability Study. JMIR Ment. Health 2020, 7, e19476.

- Gideon, J.; Matton, K.; Anderau, S.; McInnis, M.; Provost, E.M. When to Intervene: Detecting Abnormal Mood using Everyday Smartphone Conversations. arXiv 2019, arXiv:1909.11248.

- Oliva, V.; Roberto, N.; Andreo-Jover, J.; Bobes, T.; Rivero, M.C.; Cebriá, A.; Crespo-Facorro, B.; de la Torre-Luque, A.; Díaz-Marsá, M.; Elices, M.; et al. Anxious and depressive symptoms and health-related quality of life in a cohort of people who recently attempted suicide: A network analysis. J. Affect. Disord. 2024, 355, 210–219.

- Fiaidhi, J. Envisioning Insight-Driven Learning Based on Thick Data Analytics with Focus on Healthcare. IEEE Access 2020, 8, 114998–115004.

- De Prisco, M.; Vieta, E. The never-ending problem: Sample size matters. Eur. Neuropsychopharmacol. 2024, 79, 17–18.

- Tanha, J.; Abdi, Y.; Samadi, N.; Razzaghi, N.; Asadpour, M. Boosting methods for multi-class imbalanced data classification: An experimental review. J. Big Data 2020, 7, 70.

- Ilzarbe, L.; Vieta, E. The elephant in the room: Medication as confounder. Eur. Neuropsychopharmacol. 2023, 71, 6–8.

- Burdick, K.E.; Millett, C.E.; Yocum, A.K.; Altimus, C.M.; Andreassen, O.A.; Aubin, V.; Belzeaux, R.; Berk, M.; Biernacka, J.M.; Blumberg, H.P.; et al. Predictors of functional impairment in bipolar disorder: Results from 13 cohorts from seven countries by the global bipolar cohort collaborative. Bipolar Disord. 2022, 24, 709–719.

| Feature | Description |
|---|---|
| Source Features | |
| Jitter [%] | Deviations in individual consecutive f0 period lengths, indicating irregular closure and asymmetric vocal-fold vibrations. |
| Shimmer [%] | Difference in the peak amplitudes of consecutive f0 periods, indicating irregularities in voice intensity. |
| Tremor [Hz] | Frequency of the most intense low-frequency f0-modulating component in a specified analysis range. |
| Harmonics-to-noise ratio (HNR) [dB] | Ratio between the harmonic (periodic) and noise components of the voice signal; lower values are associated with more perceived aspiration. |
| Frequency disturbance ratio (FDR) [%] | Relative mean value of the frequency disturbance, computed over groups of five consecutive periods (five-point average). |
| Amplitude disturbance ratio (ADR) [%] | Relative mean value of the amplitude disturbance, computed over a set of windows. |
| Quasi-open quotient (QOQ) | Ratio of the vocal folds' quasi-open time to the duration of the glottal cycle; often reduced in functional dysphonia. |
| Normalized amplitude quotient (NAQ) | Ratio between the peak-to-peak pulse amplitude and the negative peak of the differentiated flow glottogram, normalized with respect to the period time. |
| Peak slope | Slope of the regression line fitted to the log10 of the maxima of each frame. |
| Filter Features | |
| F1 mean [Hz] | Mean frequency of the first formant, the first peak in the spectrum of voiced utterances, resulting from a resonance of the vocal tract. |
| F2 mean [Hz] | Mean frequency of the second formant, the second peak in the spectrum of voiced utterances, resulting from a resonance of the vocal tract. |
| F1 variability [Hz] | Measures of dispersion of F1 (variance, standard deviation). |
| F2 variability [Hz] | Measures of dispersion of F2 (variance, standard deviation). |
| F1 range [Hz] | Difference between the lowest and highest F1 values. |
| Vowel space | Two-dimensional F1–F2 space defined by the vowels /a/, /i/, and /u/. |
| Linear predictive coding (LPC) coefficients | Coefficients that predict each sample of the audio signal as a linear combination of previous samples. |
| Spectral Features | |
| Mel-frequency cepstral coefficients (MFCCs) | Coefficients derived by computing a spectrum (typically a discrete cosine transform) of the log-magnitude Mel spectrum of the audio segment. |
| Prosodic Features | |
| f0 mean [Hz] | Central tendency (mean, median) of the fundamental frequency, perceived as pitch. |
| f0 variability [Hz] | Measures of dispersion of f0 (variance, standard deviation). |
| f0 range [Hz] | Difference between the lowest and highest f0 values. |
| Intensity [dB] | Acoustic intensity in decibels relative to a reference value. |
| Intensity variability [dB] | Measures of dispersion of intensity (variance, standard deviation). |
| Energy velocity | Mean-squared central difference across frames, possibly correlating with motor coordination. |
| Maximum phonation time [s] | Maximum time for which phonation of a vowel can be sustained. |
| Speech rate | Number of speech units per second over the duration of the speech sample (including pauses). |
| Articulation rate | Number of speech units per second over the duration of the speech sample (excluding pauses). |
| Time talking [s] | Sum of the durations of all speech segments. |
| Utterance duration mean [s] | Mean utterance duration. |
| Pause duration mean [s] | Mean pause duration. |
| Pause variability [s] | Measures of dispersion of pause duration (variance, standard deviation). |
| Pause total [s] | Total duration of pauses. |
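Several of the source and prosodic features above reduce to simple arithmetic once per-cycle measurements are available. The following sketch assumes that f0 period lengths and per-cycle peak amplitudes have already been extracted by a pitch tracker (that step is not shown). It computes local jitter and local shimmer as the mean absolute difference between consecutive cycles divided by the mean value, which matches how Praat defines "Jitter (local)" and "Shimmer (local)", along with basic f0 statistics. The function names and sample values are illustrative only. Tools such as Praat also discard implausible periods using period-length and period-ratio limits, which this sketch omits.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence


def _relative_mean_abs_diff_percent(values: Sequence[float]) -> float:
    """Mean absolute difference between consecutive values divided by their mean, in %."""
    if len(values) < 2:
        raise ValueError("need at least two consecutive cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return 100.0 * mean(diffs) / mean(values)


def local_jitter_percent(periods_s: Sequence[float]) -> float:
    """Cycle-to-cycle variation of f0 period length (local jitter)."""
    return _relative_mean_abs_diff_percent(periods_s)


def local_shimmer_percent(peak_amplitudes: Sequence[float]) -> float:
    """Cycle-to-cycle variation of peak amplitude (local shimmer)."""
    return _relative_mean_abs_diff_percent(peak_amplitudes)


def f0_summary(periods_s: Sequence[float]) -> Dict[str, float]:
    """f0 mean, standard deviation, and range in Hz, using f0 = 1 / period per cycle."""
    f0 = [1.0 / t for t in periods_s]
    return {"mean": mean(f0), "sd": pstdev(f0), "range": max(f0) - min(f0)}


# Hypothetical per-cycle measurements from a short stretch of sustained voicing.
periods = [0.0100, 0.0102, 0.0099, 0.0101]  # seconds (f0 of roughly 100 Hz)
amplitudes = [1.00, 0.90, 1.00, 0.90]       # arbitrary linear units

print(round(local_jitter_percent(periods), 2))      # → 2.32
print(round(local_shimmer_percent(amplitudes), 2))  # → 10.53
print(f0_summary(periods))
```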

| Feature | Description |
|---|---|
| Syntactic Features | |
| Syntactic Complexity | Degree of complexity in sentence structures, including the use of subordination and coordination. |
| Sentence Length | Average number of words per sentence. |
| Clause Density | Number of clauses per sentence. |
| Use of Grammatical Constructions | Frequency and variety of specific grammatical forms. |
| Part-of-Speech Distribution | Relative frequency of different parts of speech. |
| Semantic Features | |
| Semantic Coherence | Logical consistency and relevance of ideas within and across sentences. |
| Semantic Density | Amount of meaningful content per unit of speech. |
| Lexical Diversity | Variety of words used, measured by metrics such as type–token ratio. |
| Use of Abstract vs. Concrete Language | Proportion of abstract terms versus concrete terms. |
| Referential Clarity | Clarity with which entities are referred to and tracked throughout the discourse. |
| Thematic Consistency | Maintenance of a central theme or topic throughout a discourse. |
| Propositional Density | Number of propositions or ideas expressed per clause or sentence. |
| Use of Figurative Language | Frequency and types of non-literal language used. |
| Word Concreteness | Degree to which words refer to tangible, perceptible objects or experiences. |
| Sentiment and Emotion | Emotional tone conveyed through word choice. |
| Lexical-Semantic Relationships | |
| Synonymy | Use of different words with similar meanings. |
| Antonymy | Use of opposites to create contrast. |
| Hyponymy and Hypernymy | Use of specific terms and their general categories. |
| Collocations | Common pairings or groupings of words. |
| Semantic Fields | Grouping of related words that belong to the same domain of meaning. |
| Discourse Features | |
| Narrative Structure | Organization of content into a coherent story with elements such as setting, characters, plot, and resolution. |
| Argumentation and Reasoning | Use of logical arguments, evidence, and reasoning to support claims and ideas. |
| Topic Introduction and Maintenance | Ability to introduce new topics and maintain focus on them throughout the discourse. |
| Conclusion and Summarization | Effective wrapping up of discourse with a summary or conclusion. |
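Two features from this table, sentence length and lexical diversity measured by type–token ratio, can be computed directly from a transcript. This sketch assumes a punctuated transcript and uses a simple regular-expression tokenizer. That tokenizer is a hypothetical simplification; a production pipeline would more likely use a language-specific tokenizer and sentence splitter. The example text is a made-up description in the style of the Cookie Theft task.

```python
import re
from typing import List


def words(text: str) -> List[str]:
    """Lower-cased word tokens: runs of letters, allowing one internal apostrophe."""
    return re.findall(r"[^\W\d_]+(?:'[^\W\d_]+)?", text.lower())


def type_token_ratio(text: str) -> float:
    """Number of distinct word types divided by the total number of word tokens."""
    tokens = words(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def mean_sentence_length(text: str) -> float:
    """Average number of words per sentence, splitting sentences on '.', '!' and '?'."""
    sentences = [s for s in re.split(r"[.!?]+", text) if words(s)]
    if not sentences:
        return 0.0
    return sum(len(words(s)) for s in sentences) / len(sentences)


sample = "The boy takes a cookie. The stool is falling! The mother dries the dishes."
print(round(type_token_ratio(sample), 3))      # → 0.786
print(round(mean_sentence_length(sample), 2))  # → 4.67
```

Raw type–token ratio falls as samples get longer, so comparisons across participants or tasks of different lengths usually rely on length-corrected variants such as a moving-average type–token ratio.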

| Feature | Description |
|---|---|
| Speech Organization | |
| Coherence | Logical arrangement of ideas in speech, ensuring it is easy to follow and understand. |
| Cohesion | Use of linguistic devices to link sentences and parts of discourse together. |
| Topicality | Relevance of the content to the topic at hand, maintaining focus without unnecessary digressions. |
| Flow and Fluency | |
| Speech Rate | Number of speech units per second, including pauses. Note: Also listed under Acoustic Features. |
| Articulation Rate | Number of speech units per second, excluding pauses. Note: Also listed under Acoustic Features. |
| Disfluencies | Interruptions in the flow of speech, such as filled pauses, repetitions, and self-corrections. |
| Smoothness | Degree to which speech is uninterrupted and flows naturally. |
| Rhythm | |
| Stress Patterns | Distribution of emphasis on syllables within words and across phrases. |
| Intonation | Variation in pitch across an utterance. |
| Pacing | Timing and spacing of speech sounds and silences. |
| Quantity | |
| Verbose vs. Concise | Amount of speech produced relative to what is necessary. |
| Word Count | Total number of words spoken in a given time frame or speech segment. |
| Information Density | Amount of information conveyed per unit of speech. |
| Latency | |
| Response Latency | Time taken to respond to a question or prompt. |
| Onset Time | Time from the beginning of an utterance to the start of the first spoken word. |
| Pause Length | Duration of pauses within speech. |
| Additional Speech Features | |
| Lexical Richness | Variety and sophistication of vocabulary used. |
| Pronunciation Accuracy | Correctness of phoneme production. |
| Speech Intelligibility | Clarity of speech, making it understandable to listeners. |
| Turn-Taking | Ability to appropriately manage and transition between speaker and listener roles. |
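
Several of the timing and quantity features above (speech rate, articulation rate, pause length, response latency, word count) reduce to simple arithmetic once word-level timestamps are available, and a type-token ratio gives a crude proxy for lexical richness. The sketch below assumes hypothetical ASR output in the form of `Word(text, start, end)` records, uses words rather than syllables as the speech unit, and treats any inter-word gap of at least 0.25 s as a pause; these are illustrative assumptions, not the study's settings.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """One transcribed word with start/end times in seconds (hypothetical ASR output)."""
    text: str
    start: float
    end: float


def timing_features(words, prompt_end, min_pause=0.25):
    """Compute simple fluency, latency and lexical measures from timed words.

    Assumptions: words are the speech unit (syllables are also common),
    a pause is any inter-word gap of at least `min_pause` seconds, and
    response latency is measured from the end of the interviewer's prompt.
    """
    if not words:
        raise ValueError("at least one word is required")
    words = sorted(words, key=lambda w: w.start)

    total_time = words[-1].end - words[0].start              # includes pauses
    gaps = [nxt.start - cur.end for cur, nxt in zip(words, words[1:])]
    pauses = [gap for gap in gaps if gap >= min_pause]
    speaking_time = total_time - sum(pauses)                 # excludes pauses
    n_words = len(words)
    types = {w.text.lower() for w in words}

    return {
        "word_count": n_words,
        "speech_rate": n_words / total_time,             # words/s incl. pauses
        "articulation_rate": n_words / speaking_time,    # words/s excl. pauses
        "pause_lengths": pauses,
        "response_latency": words[0].start - prompt_end,
        "type_token_ratio": len(types) / n_words,        # crude lexical richness
    }


if __name__ == "__main__":
    answer = [Word("I", 1.0, 1.2), Word("feel", 1.3, 1.6),
              Word("fine", 2.6, 3.0), Word("I", 3.1, 3.2)]
    print(timing_features(answer, prompt_end=0.4))
```

Speech rate is lower than articulation rate whenever pauses are present, because only the articulation rate removes pause time from the denominator. The plain type-token ratio also falls as samples get longer, so length-corrected variants are usually preferred when comparing tasks of different duration.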

| Feature | Description |
|---|---|
| Emotion Words | Use of specific words that convey emotions (e.g., joy, sadness, anger, fear, surprise, trust, etc.). |
| Sentiment Analysis | Overall positive or negative sentiment of the speech content. |
| Intensity of Emotion Words | Degree of emotional intensity conveyed through word choice (e.g., “furious” vs. “angry”). |
| Metaphors and Figurative Language | Use of metaphors or similes to convey emotions (e.g., “I feel like I’m walking on air” to express happiness). |
| Prosodic Features | Variations in pitch, loudness, and duration that convey meaning and emotion. Note: Also listed under Acoustic Features. |
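
A lexicon-based count is one simple way to operationalize emotion words, their intensity, and overall sentiment. The sketch below uses a tiny, hypothetical English lexicon (`LEXICON`) with made-up intensity weights and a hypothetical valence map (`VALENCE`); a real analysis would rely on a validated lexicon for the language of each recording.

```python
import re
from collections import Counter

# Hypothetical toy lexicon: word -> (emotion category, intensity in [0, 1]).
# The weights are illustrative only; "furious" is scored above "angry".
LEXICON = {
    "happy": ("joy", 0.6), "ecstatic": ("joy", 0.9),
    "sad": ("sadness", 0.6), "hopeless": ("sadness", 0.9),
    "angry": ("anger", 0.6), "furious": ("anger", 0.9),
    "afraid": ("fear", 0.6), "terrified": ("fear", 0.9),
}
# Hypothetical polarity of each emotion category for a crude sentiment score.
VALENCE = {"joy": 1, "sadness": -1, "anger": -1, "fear": -1}


def emotion_profile(text):
    """Count emotion words, average their intensity, and score sentiment."""
    # Unicode letter runs, so accented characters are kept inside words.
    tokens = re.findall(r"[^\W\d_]+", text.lower())
    hits = [LEXICON[t] for t in tokens if t in LEXICON]

    counts = Counter(emotion for emotion, _ in hits)
    mean_intensity = sum(i for _, i in hits) / len(hits) if hits else 0.0
    # Intensity-weighted polarity, normalized by the number of tokens.
    sentiment = sum(VALENCE.get(e, 0) * i for e, i in hits) / max(len(tokens), 1)
    return {"counts": dict(counts), "mean_intensity": mean_intensity,
            "sentiment": sentiment}


if __name__ == "__main__":
    print(emotion_profile("I was angry, then furious, but now I am happy"))
```

Word matching of this kind cannot capture metaphors or figurative language, and prosodic cues to emotion require acoustic analysis rather than transcripts.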

| | Hospital Clinic of Barcelona: Acute Phase | Hospital Clinic of Barcelona: Response | Mayo Clinic: Acute Phase | Mayo Clinic: Response |
|---|---|---|---|---|
| Total patients recruited | | | | |
| Manic Episode (acute phase) N (%) | 13 (20) | 9 (69.2) | 11 (100) | 8 (73) |
| Major Depressive Episode (acute phase) N (%) | 21 (32.3) | 9 (42.9) | | |
| Euthymia N (%) | 31 (47.7) | | | |
| Total N (%) | 65 (100) | | 11 (100) | |
| Symptoms and functional variables | | | | |
| Patients with acute episodes | | | | |
| YMRS score (manic patients only) (M ± SD) | 24 ± 8.5 | 5.9 ± 6.2 | 21.7 ± 5 | 4.6 ± 4.3 |
| HDRS-17 score (depressed patients only) (M ± SD) | 17.1 ± 4.4 | 3.3 ± 2.8 | | |
| PANSS positive symptoms score (M ± SD) | 11.0 ± 7.3 | 8.5 ± 3.0 | | |
| PANSS negative symptoms score (M ± SD) | 12.1 ± 5.1 | 9.7 ± 4.5 | | |
| PANSS general symptoms score (M ± SD) | 27.3 ± 5.5 | 21.2 ± 4.2 | | |
| PANSS total symptoms score (M ± SD) | 50.4 ± 10.6 | 39.4 ± 7.8 | | |
| CGI-S score (M ± SD) | 4.3 ± 0.9 | 2.4 ± 1.3 | | |
| SOFAS score (M ± SD) | 50.0 ± 13.0 | 69.1 ± 23.9 | | |
| Euthymic patients | | | | |
| YMRS score (M ± SD) | 0.97 ± 1.4 | | | |
| HDRS-17 score (M ± SD) | 3.9 ± 2.9 | | | |
| PANSS positive symptoms score (M ± SD) | 7.0 ± 0.2 | | | |
| PANSS negative symptoms score (M ± SD) | 8.7 ± 3.1 | | | |
| PANSS general symptoms score (M ± SD) | 19.6 ± 3.2 | | | |
| PANSS total symptoms score (M ± SD) | 35.3 ± 5.2 | | | |
| CGI-S score (M ± SD) | 1.7 ± 0.7 | | | |
| SOFAS score (M ± SD) | 78.8 ± 9.9 | | | |
| Sociodemographic and clinical variables | | | | |
| Age (M ± SD) | 48.1 ± 13.3 | | 33.6 ± 14.5 | |
| Sex: Females N (%) | 42 (64.6) | | 6 (54.5) | |
| Age of Onset (M ± SD) | 32.9 ± 10.9 | | 26.8 ± 12.2 | |
| Illness Duration (years) (M ± SD) | 14.9 ± 12.6 | | 7.8 ± 7.3 | |
| Number of Previous Affective Episodes (Median, IQR) | 1, 1–2 | | 4, 2–6 | |
| Psychotic Features (patients in acute episodes only) N (%) | 9 (24.3) | | 0 (0.0) | |
| Anxious Features (patients in acute episodes only) N (%) | 29 (78.4) | | 11 (100.0) | |
| Mixed Features (patients in acute episodes only) N (%) | 9 (24.3) | | 6 (54.5) | |
| Active Suicidality (patients in acute episodes only) N (%) | 12 (32.4) | | 3 (27.3) | |
| Non-Psychiatric Medical Comorbidities N (%) | 44 (67.7) | | 10 (90.9) | |
| Psychiatric Comorbidities N (%) | 10 (15.4) | | 9 (81.8) | |
| Past Drug Use N (%) | 14 (21.5) | | 6 (54.5) | |
| Current Drug Use N (%) | 15 (23.1) | | 7 (63.6) | |
| Setting | | | | |
| Outpatient N (%) | 52 (80) | | 4 (13) | |
| Psychopharmacological Treatment | | | | |
| Antipsychotics N (%) | 46 (70.8) | | 11 (100) | |
| Lithium N (%) | 41 (63.1) | | 7 (63.6) | |
| Other Mood Stabilizers N (%) | 29 (44.6) | | 5 (45.4) | |
| Antidepressants N (%) | 23 (38.5) | | 1 (9.0) | |
| Benzodiazepines N (%) | 32 (49.2) | | 8 (72.7) | |
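
The N (%) entries in the recruitment rows use two different denominators: acute-phase percentages are relative to the center's full sample, whereas response percentages are relative to the number of patients in the corresponding acute episode (e.g., 9 of 13 manic patients at Hospital Clinic of Barcelona). A small sketch using counts taken from the table makes this explicit; the helper `pct` and the constant names are hypothetical.

```python
def pct(n, total, digits=1):
    """Express n as a percentage of total, rounded to `digits` decimals."""
    return round(100 * n / total, digits)


# Hospital Clinic of Barcelona, acute phase: denominator is the full sample.
HCB_TOTAL = 65
HCB_ACUTE = {"manic": 13, "depressive": 21, "euthymic": 31}
# Response column: denominator is the number of patients in that acute episode.
HCB_RESPONDERS = {"manic": 9, "depressive": 9}

if __name__ == "__main__":
    assert sum(HCB_ACUTE.values()) == HCB_TOTAL
    for group, n in HCB_ACUTE.items():
        print(group, "acute", n, pct(n, HCB_TOTAL))
    for group, n in HCB_RESPONDERS.items():
        print(group, "response", n, pct(n, HCB_ACUTE[group]))
    # Mayo Clinic manic patients: 8 responders out of 11 in the acute phase.
    print("Mayo manic response", 8, pct(8, 11))
```

For the Mayo Clinic manic group, 8 of 11 corresponds to 72.7%, which the table reports rounded to 73%.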

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Anmella, G.; De Prisco, M.; Joyce, J.B.; Valenzuela-Pascual, C.; Mas-Musons, A.; Oliva, V.; Fico, G.; Chatzisofroniou, G.; Mishra, S.; Al-Soleiti, M.; et al. Automated Speech Analysis in Bipolar Disorder: The CALIBER Study Protocol and Preliminary Results. J. Clin. Med. 2024, 13, 4997. https://doi.org/10.3390/jcm13174997

Anmella G, De Prisco M, Joyce JB, Valenzuela-Pascual C, Mas-Musons A, Oliva V, Fico G, Chatzisofroniou G, Mishra S, Al-Soleiti M, et al. Automated Speech Analysis in Bipolar Disorder: The CALIBER Study Protocol and Preliminary Results. Journal of Clinical Medicine. 2024; 13(17):4997. https://doi.org/10.3390/jcm13174997

Chicago/Turabian Style: Anmella, Gerard, Michele De Prisco, Jeremiah B. Joyce, Claudia Valenzuela-Pascual, Ariadna Mas-Musons, Vincenzo Oliva, Giovanna Fico, George Chatzisofroniou, Sanjeev Mishra, Majd Al-Soleiti, et al. 2024. "Automated Speech Analysis in Bipolar Disorder: The CALIBER Study Protocol and Preliminary Results" Journal of Clinical Medicine 13, no. 17: 4997. https://doi.org/10.3390/jcm13174997

APA Style: Anmella, G., De Prisco, M., Joyce, J. B., Valenzuela-Pascual, C., Mas-Musons, A., Oliva, V., Fico, G., Chatzisofroniou, G., Mishra, S., Al-Soleiti, M., Corponi, F., Giménez-Palomo, A., Montejo, L., González-Campos, M., Popovic, D., Pacchiarotti, I., Valentí, M., Cavero, M., Colomer, L., ... Vieta, E. (2024). Automated Speech Analysis in Bipolar Disorder: The CALIBER Study Protocol and Preliminary Results. Journal of Clinical Medicine, 13(17), 4997. https://doi.org/10.3390/jcm13174997