Impact of Reverberation on Speech Perception in Noise in Bimodal/Bilateral Cochlear Implant Users with and without Residual Hearing

Abstract

1. Introduction

2. Materials and Methods

2.1. Subject Demographics

2.2. Speech Perception Test in Reverberation

2.3. Statistics

3. Results

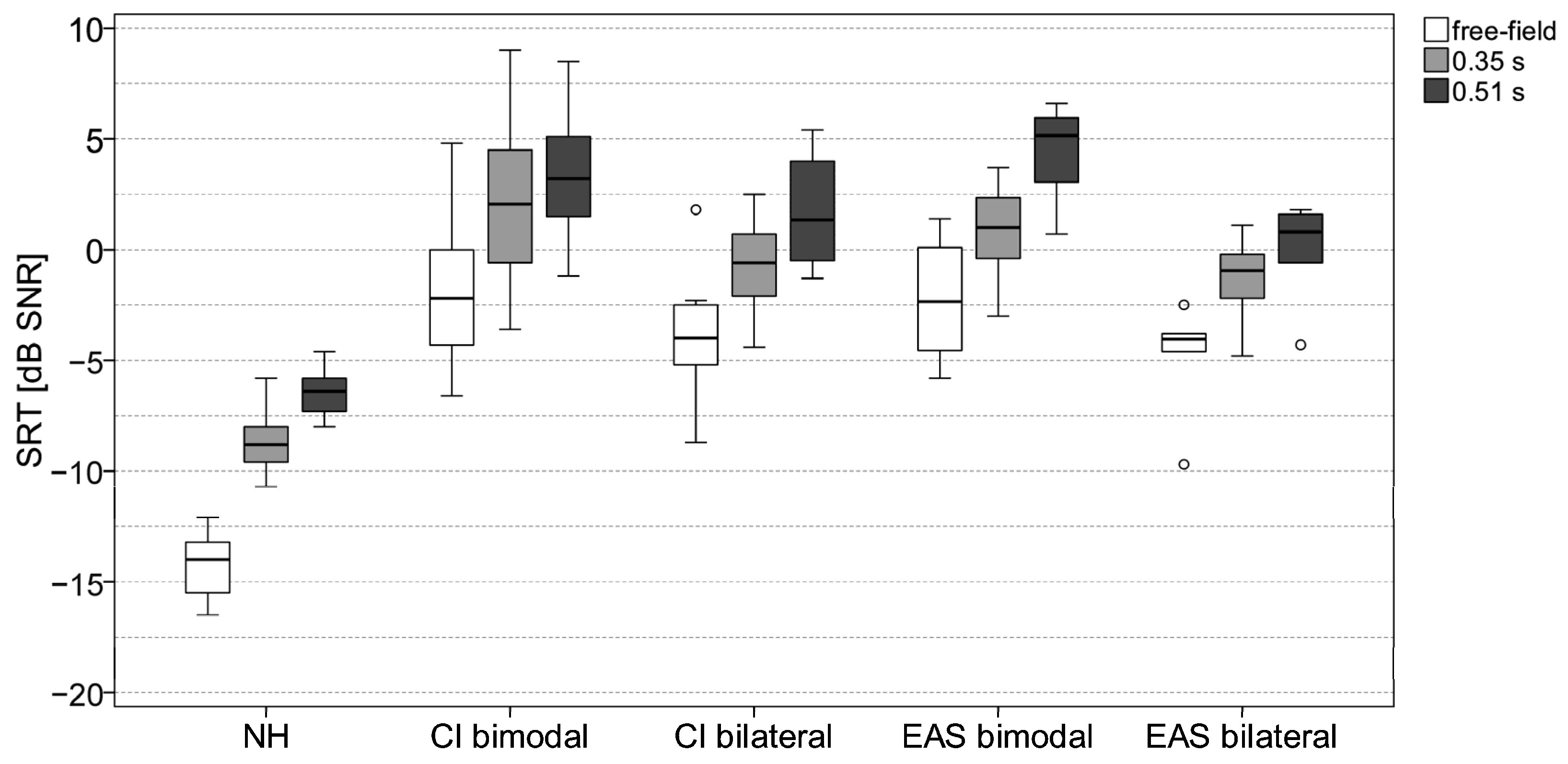

3.1. Impact of Reverberation on SRTs

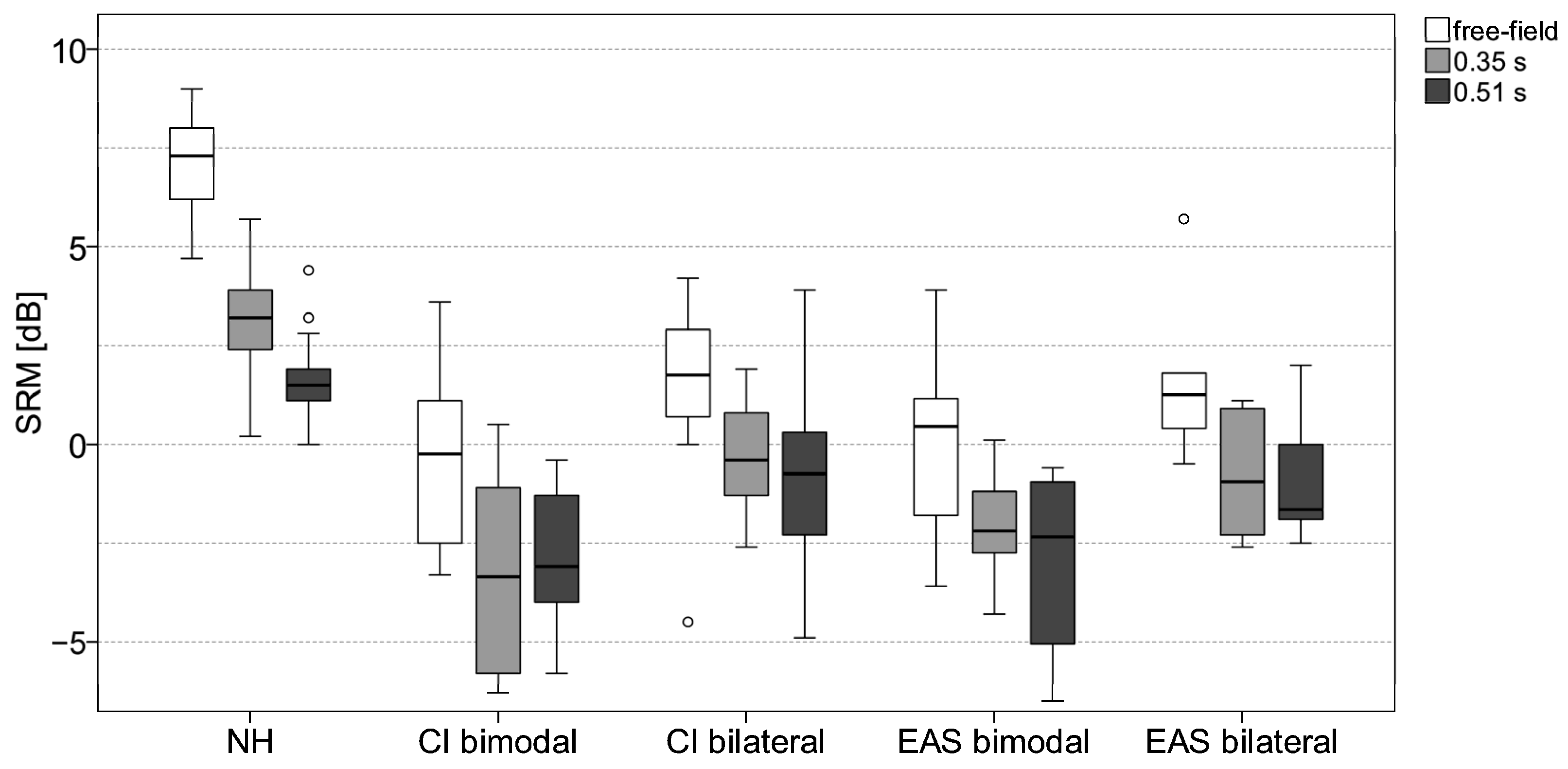

3.2. Impact of Reverberation on Spatial Release from Masking

3.3. Impact of Acoustic Hearing on SRT and SRM

4. Discussion

4.1. Impact of Reverberation on SRTs

4.2. Impact of Reverberation on Spatial Release from Masking

4.3. Impact of Subject Group

4.4. Limitation of the Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | Implant Type (Ear) | Age [yrs] | Sound Processor | CI Listening Experience [yrs] | Hearing Aid Type | FMS CI [%] | FMS HA [%] | PTA (Contralateral) [dB HL] |
|---|---|---|---|---|---|---|---|---|
| BM 1 | Concerto FLEX24 (right) | 57.3 | OPUS 2 | 4.2 | Unitron Moda 2 | 95 | 95 | 35.0 |
| BM 2 | Concerto FLEX24 (right) | 73.6 | OPUS 2 | 3.6 | Phonak Dalia SC | 75 | 25 | 88.8 |
| BM 3 | Pulsar Standard (left) | 23.6 | OPUS 2 | 16.3 | Phonak Naida S | 90 | 0 | 106.3 |
| BM 4 | Concerto FLEX28 (right) | 71.9 | OPUS 2 | 4.3 | Phonak Naida S3 | 55 | 85 | 47.5 |
| BM 5 | Synchrony FLEX28 (left) | 67.6 | SONNET | 2.2 | Audio Service Mezzo Duo | 70 | 0 | 77.5 |
| BM 6 | Synchrony FLEX28 (left) | 34.7 | SONNET | 2.0 | ReSound Preza | 80 | 40 | 103.8 |
| BM 7 | Concerto FLEX28 (left) | 73.0 | OPUS 2 | 5.0 | Phonak Naida S V UP | 85 | 55 | 77.5 |
| BM 8 | Synchrony FLEX28 (left) | 33.0 | OPUS 2 | 2.1 | Phonak Naida 5 S1 | 65 | 60 | 61.3 |
| BM 9 | Sonata FLEX24 (right) | 55.2 | OPUS 2 | 7.1 | Widex Inteo | 60 | 30 | 101.3 |
| BM 10 | Synchrony FLEX28 (left) | 35.7 | SONNET | 1.6 | Phonak Naida Q50-SP | 80 | 80 | 43.8 |

| ID | Implant Type (Left/Right) | Age [yrs] | Sound Processor | CI Listening Experience Left [yrs] | CI Listening Experience Right [yrs] | FMS Left [%] | FMS Right [%] |
|---|---|---|---|---|---|---|---|
| BL 1 | Concerto FLEX28/FLEXsoft | 63.3 | OPUS 2 | 4.1 | 5.2 | 70 | 85 |
| BL 2 | Concerto FLEXsoft/FLEX28 | 57.6 | OPUS 2 | 5.0 | 4.0 | 95 | 95 |
| BL 3 | Concerto FLEXsoft/FLEX28 | 70.1 | OPUS 2 | 4.2 | 5.4 | 85 | 85 |
| BL 4 | Sonata FLEXsoft/Pulsar Standard | 50.1 | OPUS 2 | 6.3 | 9.9 | 90 | 80 |
| BL 5 | Concerto FLEX24 | 59.5 | OPUS 2 | 3.7 | 2.9 | 75 | 80 |
| BL 6 | Concerto FLEX28/C40+ Standard | 38.1 | OPUS 2 | 4.4 | 13.6 | 85 | 90 |
| BL 7 | Sonata Standard/Sonata FLEXsoft | 65.7 | OPUS 2 | 8.7 | 6.2 | 80 | 55 |
| BL 8 | Concerto FLEX28 | 47.1 | OPUS 2 | 3.4 | 3.4 | 75 | 65 |
| BL 9 | Concerto FLEX28 | 27.3 | OPUS 2 | 3.6 | 2.3 | 90 | 35 |
| BL 10 | C40+ Standard | 30.0 | SONNET | 14.4 | 14.6 | 100 | 100 |
| BL 11 | Synchrony FLEX28/Concerto FLEX28 | 63.2 | OPUS 2/SONNET | 1.0 | 3.6 | 60 | 80 |
| BL 12 | C40+ Standard | 19.2 | OPUS 2 | 15.3 | 17.4 | 95 | 85 |
| BL 13 | Concerto FLEX28 | 41.5 | OPUS 2 | 3.9 | 3.9 | 80 | 70 |
| BL 14 | Concerto FLEX28 | 66.3 | OPUS 2/SONNET | 2.0 | 3.6 | 80 | 95 |

| ID | Implant Type (Ear) | Age [yrs] | Sound Processor | EAS Listening Experience [yrs] | Hearing Aid Type | FMS Score EAS/Contra [%] | PTA EAS/Contra [dB HL] | PTAlow EAS [dB HL] |
|---|---|---|---|---|---|---|---|---|
| EAS_BM1 | Sonata FLEX24 (left) | 55.8 | SONNET EAS | 5.3 | Phonak Naida S IX UP | 70/70 | 117.5/81.3 | 73.3 |
| EAS_BM2 | Sonata FLEX24 (right) | 53.5 | SONNET EAS | 5.8 | Phonak Piconet 2 P2 AZ | 85/20 | 102.5/101.3 | 43.3 |
| EAS_BM3 | Sonata FLEX20 (right) | 73.8 | SONNET EAS | 5.9 | SONNET (CI) | 65/55 | 86.3/94 | 61.7 |
| EAS_BM4 | Synchrony FLEX24 (left) | 70.8 | SONNET EAS | 2.0 | Oticon Chili SP9 | 70/30 | 82.5/76 | 71.7 |
| EAS_BM5 | Synchrony FLEX24 (right) | 59.7 | SONNET EAS | 4.1 | SONNET (CI) | 85/80 | 107.5/120 | 53.3 |
| EAS_BM6 | Concerto FLEX24 (right) | 63.4 | SONNET EAS | 4.4 | Siemens Nitro | 95/35 | 98.8/84 | 38.3 |
| EAS_BM7 | Sonata FLEX24 (right) | 52.7 | SONNET EAS | 6.8 | - | 85/25 | 101.3/110 | 31.7 |
| EAS_BM8 | Concerto FLEX24 (right) | 51.3 | OPUS 2 EAS | 4.1 | Siemens Motion P | 50/30 | 98.8/98 | 51.7 |

| ID | Implant Type (Left/Right) | Age [yrs] | Sound Processor (Left/Right) | EAS Listening Experience Left [yrs] | EAS Listening Experience Right [yrs] | FMS Score Left/Right [%] | PTA Left/Right [dB HL] | PTAlow Left/Right [dB HL] |
|---|---|---|---|---|---|---|---|---|
| EAS_BL1 | Synchrony FLEX24 | 72.9 | SONNET EAS | 1.1 | 1.9 | 100/80 | 106.3/93.8 | 66.7/46.7 |
| EAS_BL2 | Sonata FLEX20/FLEX24 | 73.9 | OPUS 2 EAS | 6.2 | 7.6 | 70/80 | 106.3/100 | 85/73.3 |
| EAS_BL3 | Sonata FLEX24/Synchrony FLEX24 | 60.5 | DUET 2/SONNET EAS | 5.1 | 1.1 | 85/70 | 120/108.8 | 81.7/48.3 |
| EAS_BL4 | Concerto FLEX24 | 47.8 | SONNET EAS | 2.6 | 4.2 | 100/70 | 77.5/106.3 | 16.7/53.3 |
| EAS_BL5 | Concerto FLEX24/Sonata FLEX24 | 68.1 | DUET 2/SONNET EAS | 3.6 | 5.0 | 75/65 | 112.5/111.3 | 68.3/56.6 |
| EAS_BL6 | Synchrony FLEX24 | 45.6 | SONNET EAS | 0.2 | 0.3 | 90/80 | 106.3/88.8 | 23.3/21.7 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


König, C.; Baumann, U.; Stöver, T.; Weissgerber, T. Impact of Reverberation on Speech Perception in Noise in Bimodal/Bilateral Cochlear Implant Users with and without Residual Hearing. J. Clin. Med. 2024, 13, 5269. https://doi.org/10.3390/jcm13175269
