Innovative COVID-19 Point-of-Care Diagnostics Suitable for Tuberculosis Diagnosis: A Scoping Review

Abstract

1. Introduction

2. Methods

2.1. Definitions and Eligibility Criteria

2.2. Information Sources

2.3. Search

2.4. Selection of Sources of Evidence

2.5. Data Charting

2.6. Variables

2.7. Synthesis of Results

| Scoring Category | Scoring Criteria | Scoring Variables |
|---|---|---|
| Category 1: POC features of equipment | Technical specifications | 1 Instrument size |
| | | 2 Instrument weight |
| | | 3 Power requirements |
| | | 4 Instrument-free |
| | | 5 Connectivity (data export options) |
| | Data analysis | 6 Integrated data analysis |
| | | 7 Integrated electronics and software |
| | Testing capacity | 8 Time-to-result |
| | | 9 Hands-on-time |
| | | 10 Throughput capacity |
| Category 2: POC features of test consumables | Operating conditions | 11 Operating Temperature |
| | | 12 Operating Humidity |
| | Storage conditions | 13 Shelf life |
| Category 3: Ease of use | End user requirements | 14 Potential end-user |
| | | 15 Number of Manual Sample Processing Steps |
| Category 4: Performance * | Analytical and clinical performance (COVID-19) | 16 Limit of detection (LoD) |
| | | 17 Clinical sensitivity |
| | | 18 Clinical specificity |
| Category 5: Cost | Upfront and user costs | 19 Capital cost of equipment |
| | | 20 Consumable cost |
| Category 6: Platform versatility | Multi-use ability | 21 Applicability of platform to pathogens other than SARS-CoV-2 |
| Category 7: Parameters | Test parameters | 22 Number of test parameters available for scoring |
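
The scorecard layout can be written down as a small data structure, which makes the link between the 22 variables and the category maxima explicit. The sketch below is illustrative only. The variable groupings are transcribed from the scoring table, but the value of 5 points per variable is an assumption: it is inferred from the category maxima (50, 15, 10, 15, 10 and 5) and the overall maximum of 110 reported in the results table, not stated in the scoring table itself. The names `VARIABLES_BY_CATEGORY`, `category_maximum` and `overall_maximum` are hypothetical.

```python
# Variable numbers per scoring category, transcribed from the scoring table.
VARIABLES_BY_CATEGORY = {
    "POC features of equipment": list(range(1, 11)),  # variables 1-10
    "POC features of test consumables": [11, 12, 13],
    "Ease of use": [14, 15],
    "Performance": [16, 17, 18],
    "Cost": [19, 20],
    "Platform versatility": [21],
    "Parameters": [22],
}

# ASSUMPTION: each variable contributes at most 5 points. This is inferred
# from the reported maxima (e.g. 10 equipment variables -> 50 points) and is
# not stated explicitly in the scoring table.
ASSUMED_MAX_POINTS_PER_VARIABLE = 5


def category_maximum(category: str) -> int:
    """Maximum attainable score for one category under the assumption above."""
    return len(VARIABLES_BY_CATEGORY[category]) * ASSUMED_MAX_POINTS_PER_VARIABLE


def overall_maximum() -> int:
    """Maximum attainable overall score across all categories."""
    return sum(category_maximum(name) for name in VARIABLES_BY_CATEGORY)


if __name__ == "__main__":
    for name in VARIABLES_BY_CATEGORY:
        print(f"{name}: {category_maximum(name)}")
    print(f"Overall: {overall_maximum()}")
```

Under this assumption the seven categories sum to the overall maximum of 110 used in the results, which suggests the per-variable weighting is uniform; a non-uniform weighting would need a per-variable table instead of a single constant.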

3. Results

3.1. Selection of Sources of Evidence

3.2. Characteristics of Sources of Evidence

3.3. Synthesis of Results

| Technology Classification | | | Antigen Tests | Molecular Tests | Molecular Tests | Molecular Tests |
|---|---|---|---|---|---|---|
| Test Classification | | | Near-POC | POC | Near-POC | Low-Complexity |
| Developer, Product Name | | | LumiraDx, LumiraDx | Pfizer, Lucira Health | Visby, COVID-19 Test | Biocartis, Idylla™ |
| Overall Score *,† | | | 73/110 (66%) | 83/110 (75%) | 78/110 (71%) | 66/110 (60%) |
| Test summary | Platform: Specifications | Dimensions (cm) | 21 × 9.7 × 7.3 | 19.1 × 8.0 × 5.2 | 13.8 × 6.7 × 4.4 | 30.5 × 19 × 50.5 |
| | | Weight (g) | 1100 | 150 | NR | 18,600 |
| | | Power-supply | Integrated battery (20 tests) | AA batteries | Mains electricity (power adapter) | Standard electricity |
| | | Connectivity | LumiraDx Connect cloud-based services; 2× USB ports; RFID reader; Bluetooth connectivity | None | None | USB port; Direct RJ45 Ethernet cable; Idylla Visualizer (PDF viewer); Idylla Explore (cloud) |
| | | Max. operating temperature (°C)/humidity (%) | 30/90 | 45/95 | 30/80 | 30/80 |
| | | Multi-use ‡ | Yes | Yes | Yes | Yes |
| | | Throughput capacity | 1 | 1 | 1 | 8 |
| | | Costs (USD) | NR | Not applicable (instrument-free) | Not applicable (instrument-free) | NR |
| | COVID-19 Assay: Specifications | Sample type | NS, NPS | NS | NS, NPS | NPS |
| | | Hands-on time (min) | 1 | 1 | <2 | <2 |
| | | Running time (min) | 12 | 30 | 30 | 90 |
| | | Shelf-life (months) | NR | 18 | NR | NR |
| | | Costs per test (USD) | NR | >10/test | NR | NR |
| | | LOD (copies/mL) | NR | 900 | 100–1112 | 500 |
| | | Sensitivity (%)/Specificity (%) | 82.7/96.9 | 93.1/100.0 | 100.0/98.7 | 100.0/100.0 |
| POC features of equipment | Score | | 39/50 (78%) | 36/50 (72%) | 36/50 (72%) | 32/50 (64%) |
| | Pros | | | | | |
| | Cons | | | | | |
| POC features of consumables | Score | | 9/15 (60%) | 14/15 (93%) | 7/15 (47%) | 7/15 (47%) |
| | Pros | | | | None | None |
| | Cons | | | None | | |
| Ease of use | Score | | 10/10 (100%) | 10/10 (100%) | 8/10 (80%) | 6/10 (60%) |
| | Pros | | | | | |
| | Cons | | None | None | None | |
| Performance | Score | | 5/15 (33%) | 7/15 (47%) | 11/15 (73%) | 11/15 (73%) |
| | Pros | | None | | | |
| | Cons | | | | | |
| Cost | Score | | 2/10 (20%) | 6/10 (60%) | 6/10 (60%) | 2/10 (20%) |
| | Pros | | None | | | None |
| | Cons | | | | | |
| Platform versatility | Score | | 5/5 (100%) | 5/5 (100%) | 5/5 (100%) | 5/5 (100%) |
| | Pros | | | | | |
| | Cons | | None | None | None | None |
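
The score rows above can be checked arithmetically. The following is a minimal sketch, not the authors' scoring procedure: it recomputes the rounded percentages from the points and maxima in the table and compares the sum of the six listed category scores with the reported overall score. The listed categories sum to a maximum of 105, so each product has a remainder out of 5 points that has no row of its own; reading that remainder as the Category 7 (Parameters) score is an inference, not something the table states. All function and variable names are hypothetical.

```python
# (points, maximum) per listed category, transcribed from the "Score" rows.
CATEGORY_SCORES = {
    "LumiraDx, LumiraDx": {
        "POC features of equipment": (39, 50),
        "POC features of consumables": (9, 15),
        "Ease of use": (10, 10),
        "Performance": (5, 15),
        "Cost": (2, 10),
        "Platform versatility": (5, 5),
    },
    "Pfizer, Lucira Health": {
        "POC features of equipment": (36, 50),
        "POC features of consumables": (14, 15),
        "Ease of use": (10, 10),
        "Performance": (7, 15),
        "Cost": (6, 10),
        "Platform versatility": (5, 5),
    },
    "Visby, COVID-19 Test": {
        "POC features of equipment": (36, 50),
        "POC features of consumables": (7, 15),
        "Ease of use": (8, 10),
        "Performance": (11, 15),
        "Cost": (6, 10),
        "Platform versatility": (5, 5),
    },
    "Biocartis, Idylla": {
        "POC features of equipment": (32, 50),
        "POC features of consumables": (7, 15),
        "Ease of use": (6, 10),
        "Performance": (11, 15),
        "Cost": (2, 10),
        "Platform versatility": (5, 5),
    },
}

# Overall (points, maximum) as reported in the "Overall Score" row.
REPORTED_OVERALL = {
    "LumiraDx, LumiraDx": (73, 110),
    "Pfizer, Lucira Health": (83, 110),
    "Visby, COVID-19 Test": (78, 110),
    "Biocartis, Idylla": (66, 110),
}


def percent(points: int, maximum: int) -> int:
    """Score as a whole-number percentage, rounded to the nearest integer."""
    return round(100 * points / maximum)


def listed_total(product: str) -> tuple[int, int]:
    """Sum of points and maxima over the six categories listed in the table."""
    scores = CATEGORY_SCORES[product].values()
    return sum(p for p, _ in scores), sum(m for _, m in scores)


def unlisted_remainder(product: str) -> tuple[int, int]:
    """Overall score minus the listed categories.

    ASSUMPTION: this remainder corresponds to Category 7 (Parameters).
    """
    overall_points, overall_max = REPORTED_OVERALL[product]
    points, maximum = listed_total(product)
    return overall_points - points, overall_max - maximum


if __name__ == "__main__":
    for product, (points, maximum) in REPORTED_OVERALL.items():
        rest_points, rest_max = unlisted_remainder(product)
        print(f"{product}: {percent(points, maximum)}% overall, "
              f"{rest_points}/{rest_max} not attributed to a listed category")
```

With the values as transcribed, every recomputed percentage matches the table, and the unattributed remainders are 3, 5, 5 and 3 points out of 5 for the four products in column order.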

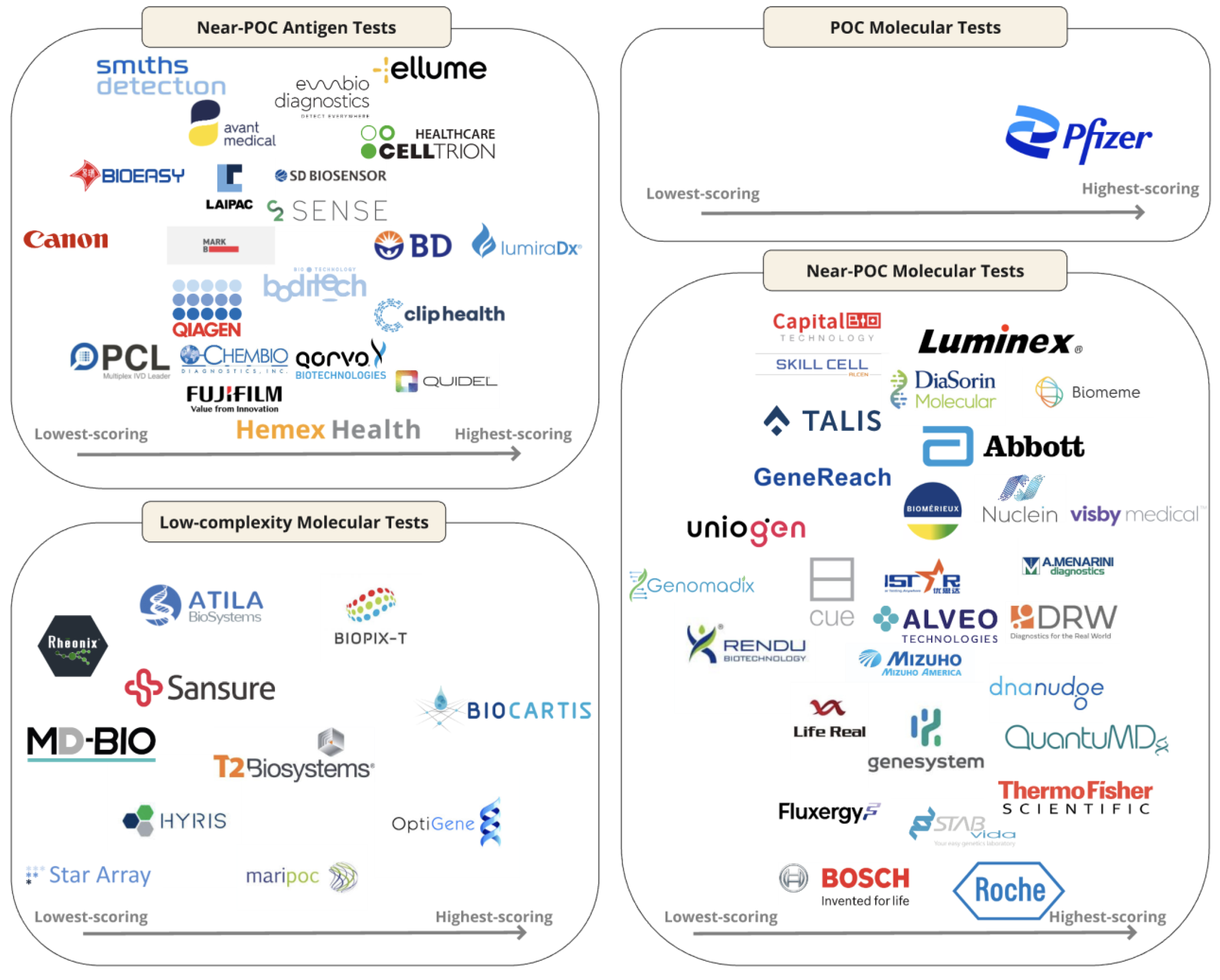

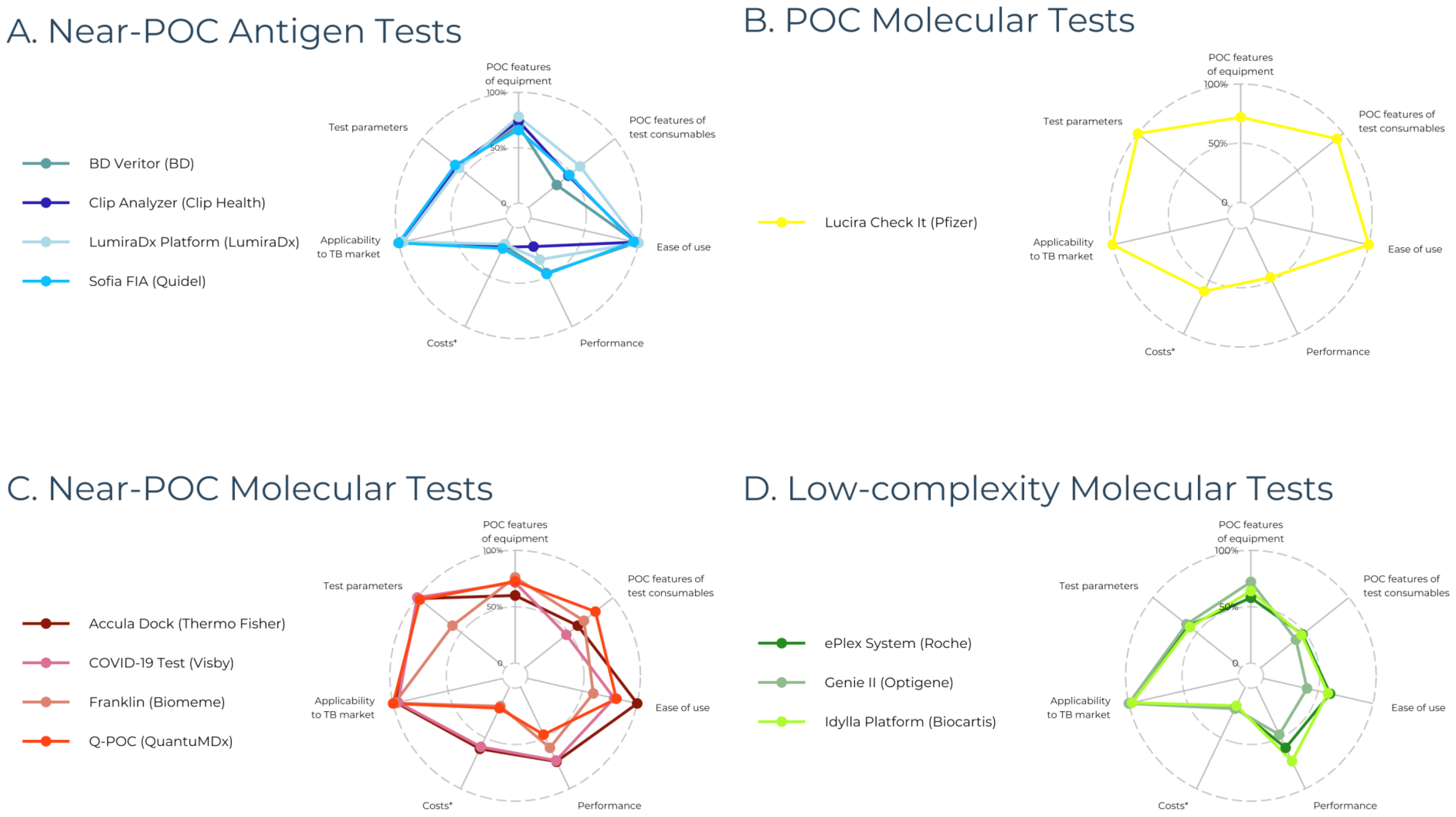

3.4. Near-POC Antigen Tests

3.5. POC Molecular Tests

3.6. Near-POC Molecular Tests

3.7. Low-Complexity Molecular Tests

4. Discussion

4.1. Antigen Tests

4.2. Molecular Tests

4.3. General Findings

4.4. Strengths

4.5. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Holtgrewe, L.M.L.; Jain, S.; Dekova, R.; Broger, T.; Isaacs, C.; Theron, G.; Nahid, P.; Cattamanchi, A.; Denkinger, C.M.; Yerlikaya, S. Innovative COVID-19 Point-of-Care Diagnostics Suitable for Tuberculosis Diagnosis: A Scoping Review. J. Clin. Med. 2024, 13, 5894. https://doi.org/10.3390/jcm13195894
