Discrepancies in ChatGPT’s Hip Fracture Recommendations in Older Adults for 2021 AAOS Evidence-Based Guidelines

Abstract

1. Introduction

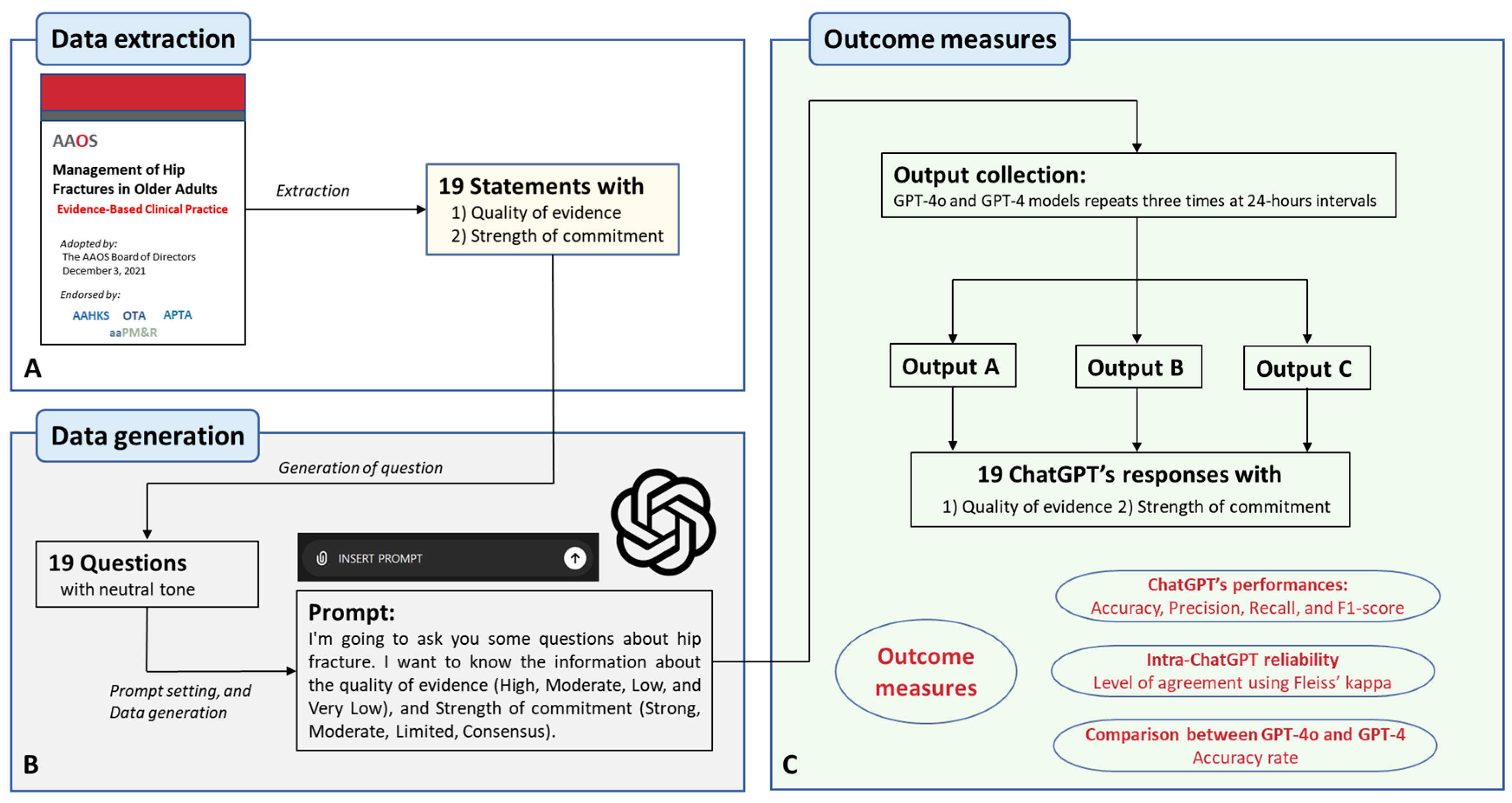

2. Materials and Methods

2.1. 2021 AAOS Guidelines for Management of Hip Fractures in Older Adults

2.2. Data Extraction and Generation

2.3. Outcome Measures

2.4. Statistical Analysis and Visualization

3. Results

3.1. Performance and Reliability of ChatGPT-Generated Responses (GPT-4o Version)

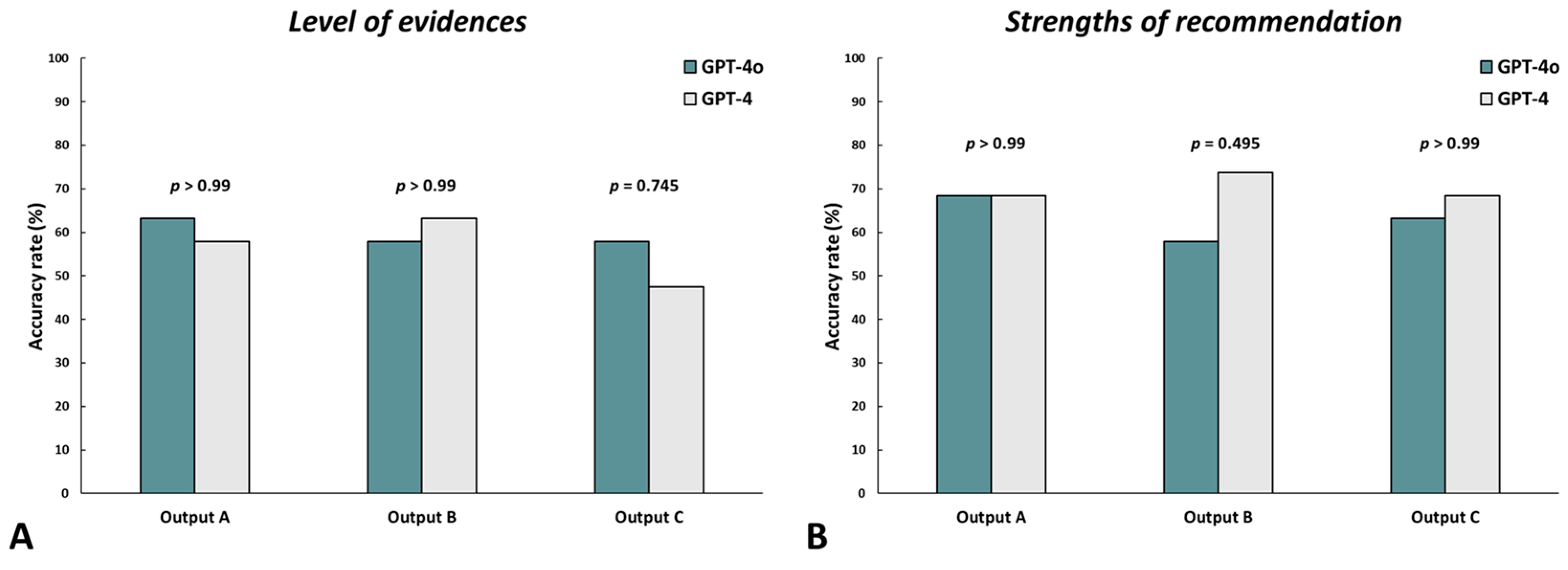

3.2. Comparison of ChatGPT’s Responses between the GPT-4o and GPT-4 Versions

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Content | Guideline | Quality of evidence (QE) | Strength of recommendation (SR) |
|---|---|---|---|
| Preoperative traction | Preoperative traction should not routinely be used for patients with a hip fracture. | High | Strong |
| Surgical timing | Hip fracture surgery within 24–48 h of admission may be associated with better outcomes. | Low | Moderate |
| VTE prophylaxis | VTE prophylaxis should be used in hip fracture patients. | Moderate | Strong |
| Anesthesia | Either spinal or general anesthesia is appropriate for patients with a hip fracture. | High | Strong |
| Unstable femoral neck fractures: arthroplasty vs. fixation | In patients with unstable (displaced) femoral neck fractures, arthroplasty is recommended over fixation. | High | Strong |
| Unipolar/bipolar hemiarthroplasty | In patients with unstable (displaced) femoral neck fractures, unipolar or bipolar hemiarthroplasty can be equally beneficial. | Moderate | Moderate |
| Unstable femoral neck fractures: total arthroplasty vs. hemiarthroplasty | In properly selected patients with unstable (displaced) femoral neck fractures, there may be a functional benefit to total hip arthroplasty over hemiarthroplasty at the risk of increasing complications. | High | Moderate |
| Cemented femoral stems | In patients undergoing arthroplasty for femoral neck fractures, the use of cemented femoral stems is recommended. | High | Strong |
| Surgical approach | In patients undergoing treatment for femoral neck fractures with hip arthroplasty, evidence does not show a favored surgical approach. | Moderate | Moderate |
| Cephalomedullary device: stable intertrochanteric fractures | In patients with stable intertrochanteric fractures, the use of either a sliding hip screw or a cephalomedullary device is recommended. | High | Strong |
| Cephalomedullary device: subtrochanteric/reverse obliquity fractures | In patients with subtrochanteric or reverse obliquity fractures, a cephalomedullary device is recommended. | High | Strong |
| Cephalomedullary device: unstable intertrochanteric fractures | Patients with unstable intertrochanteric fractures should be treated with a cephalomedullary device. | High | Strong |
| Transfusion | A blood transfusion threshold of no higher than 8 g/dL is suggested in asymptomatic postoperative hip fracture patients. | Moderate | Moderate |
| Multimodal analgesia | Multimodal analgesia incorporating a preoperative nerve block is recommended to treat pain after hip fractures. | High | Strong |
| TXA | TXA should be administered to reduce blood loss and blood transfusion in patients with hip fractures. | High | Strong |
| Interdisciplinary care programs | Interdisciplinary care programs should be used in the care of hip fracture patients to decrease complications and improve outcomes. | High | Strong |
| Stable femoral neck fractures | In patients with stable (impacted/non-displaced) femoral neck fractures, hemiarthroplasty, internal fixation, or non-operative care may be considered. | Moderate | Limited |
| Cephalomedullary device: pertrochanteric fractures | In patients with pertrochanteric femur fractures, a short or long cephalomedullary nail may be considered. | Low | Limited |
| Weight bearing | Following the surgical treatment of hip fractures, immediate, full weight bearing to tolerance may be considered. | Low | Limited |

| Content | Questions for the Prompt | Output A: QE | Output A: SR | Output B: QE | Output B: SR | Output C: QE | Output C: SR |
|---|---|---|---|---|---|---|---|
| Preoperative traction | Is preoperative traction not routinely used for patients with a hip fracture? | High | Strong | High | Strong | Moderate | Strong |
| Surgical timing | Is hip fracture surgery within 24–48 h of admission associated with better outcomes? | High | Strong | Moderate | Strong | Moderate | Strong |
| VTE prophylaxis | Is venous thromboembolism (VTE) prophylaxis used in hip fracture patients? | High | Strong | Moderate | Strong | High | Strong |
| Anesthesia | Is either spinal or general anesthesia appropriate for patients with a hip fracture? | Moderate | Strong | Moderate | Consensus | High | Strong |
| Unstable femoral neck fractures: arthroplasty vs. fixation | In patients with unstable (displaced) femoral neck fractures, is arthroplasty recommended over fixation? | High | Strong | High | Strong | High | Strong |
| Unipolar/bipolar hemiarthroplasty | In patients with unstable (displaced) femoral neck fractures, are unipolar and bipolar hemiarthroplasty equally beneficial? | Moderate | Limited | Moderate | Consensus | Moderate | Limited |
| Unstable femoral neck fractures: total arthroplasty vs. hemiarthroplasty | In properly selected patients with unstable (displaced) femoral neck fractures, is there a functional benefit to total hip arthroplasty over hemiarthroplasty despite the risk of increasing complications? | High | Strong | Moderate | Moderate | Moderate | Moderate |
| Cemented femoral stems | In patients undergoing arthroplasty for femoral neck fractures, is the use of cemented femoral stems recommended? | Moderate | Strong | High | Strong | High | Strong |
| Surgical approach | In patients undergoing treatment of femoral neck fractures with hip arthroplasty, does evidence show a favored surgical approach? | Moderate | Limited | Low | Limited | Low | Consensus |
| Cephalomedullary device: stable intertrochanteric fractures | In patients with stable intertrochanteric fractures, is the use of either a sliding hip screw or a cephalomedullary device recommended? | High | Strong | Moderate | Consensus | High | Strong |
| Cephalomedullary device: subtrochanteric/reverse obliquity fractures | In patients with subtrochanteric or reverse obliquity fractures, is a cephalomedullary device recommended? | High | Strong | Moderate | Strong | High | Strong |
| Cephalomedullary device: unstable intertrochanteric fractures | In patients with unstable intertrochanteric fractures, is a cephalomedullary device used? | High | Strong | High | Strong | High | Strong |
| Transfusion | Is a blood transfusion threshold of no higher than 8 g/dL suggested in asymptomatic postoperative hip fracture patients? | Moderate | Moderate | Moderate | Moderate | Moderate | Strong |
| Multimodal analgesia | Is multimodal analgesia incorporating preoperative nerve block recommended to treat pain after a hip fracture? | High | Strong | High | Strong | High | Strong |
| TXA | Is TXA administered to reduce blood loss and blood transfusion in patients with hip fractures? | High | Strong | High | Strong | High | Strong |
| Interdisciplinary care programs | Are interdisciplinary care programs used in the care of hip fracture patients to decrease complications and improve outcomes? | High | Strong | High | Strong | High | Strong |
| Stable femoral neck fractures | In patients with stable (impacted/non-displaced) femoral neck fractures, are hemiarthroplasty, internal fixation, or non-operative care considered? | Moderate | Strong | Moderate | Consensus | High | Consensus |
| Cephalomedullary device: pertrochanteric fractures | In patients with pertrochanteric femur fractures, is a short or long cephalomedullary nail considered? | Moderate | Limited | Moderate | Consensus | Moderate | Consensus |
| Weight bearing | Following surgical treatment of hip fractures, is immediate, full weight bearing to tolerance considered? | High | Strong | Moderate | Strong | High | Strong |

ChatGPT’s Performance (GPT-4o Version)

| Model | Accuracy | Precision (=PPV) | Recall (=Sn) | F1-Score |
|---|---|---|---|---|
| Output A | 0.684 | 0.585 | 0.684 | 0.629 |
| Output B | 0.579 | 0.675 | 0.579 | 0.581 |
| Output C | 0.579 | 0.522 | 0.579 | 0.549 |

Reliability Test (GPT-4o Version)

| Variables | Fleiss’ Kappa | Level of Agreement | 95% CI | p |
|---|---|---|---|---|
| Reliability | 0.266 | Fair | 0.248–0.340 | <0.001 |
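The Output A performance values above can be checked against the tabulated ratings. The following is a minimal sketch, not the authors' analysis code. It assumes that the metrics are support-weighted averages over the quality-of-evidence (QE) levels, that a level which is never predicted contributes zero precision, and that the label strings are a letter-coded transcription of the QE columns for the guideline and for Output A. The names `GUIDELINE_QE`, `OUTPUT_A_QE`, and `weighted_metrics` are hypothetical and introduced only for illustration.

```python
from collections import Counter

# Assumption: one letter per recommendation, in table order, transcribed from
# the QE columns (H = High, M = Moderate, L = Low).
GUIDELINE_QE = "HLMHHMHHMHHHMHHHMLL"
OUTPUT_A_QE = "HHHMHMHMMHHHMHHHMMH"


def weighted_metrics(truth, pred):
    """Return accuracy and support-weighted precision, recall, and F1."""
    n = len(truth)
    support = Counter(truth)      # how often each level occurs in the guideline
    predicted = Counter(pred)     # how often each level was given by the model
    hits = Counter(t for t, p in zip(truth, pred) if t == p)

    precision = recall = f1 = 0.0
    for label, count in support.items():
        # A level that is never predicted gets precision 0 (assumed convention).
        p = hits[label] / predicted[label] if predicted[label] else 0.0
        r = hits[label] / count
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = count / n
        precision += weight * p
        recall += weight * r
        f1 += weight * f

    accuracy = sum(hits.values()) / n
    return accuracy, precision, recall, f1


acc, prec, rec, f1 = weighted_metrics(GUIDELINE_QE, OUTPUT_A_QE)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} F1={f1:.3f}")
```

Under these assumptions the sketch prints accuracy 0.684, precision 0.585, recall 0.684, and F1 0.629, which agree with the Output A row. Support-weighted recall equals accuracy by construction, which is why the two columns coincide in every row.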

ChatGPT’s Performance (GPT-4o Version)

| Model | Accuracy | Precision (=PPV) | Recall (=Sn) | F1-Score |
|---|---|---|---|---|
| Output A | 0.684 | 0.740 | 0.684 | 0.630 |
| Output B | 0.579 | 0.737 | 0.579 | 0.624 |
| Output C | 0.632 | 0.718 | 0.632 | 0.597 |

Reliability Test (GPT-4o Version)

| Variables | Fleiss’ Kappa | Level of Agreement | 95% CI | p |
|---|---|---|---|---|
| Reliability | 0.409 | Moderate | 0.119–0.699 | 0.006 |
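The Fleiss' kappa of 0.409 can be illustrated with the strength-of-recommendation (SR) ratings listed for Outputs A, B, and C. This is a hedged sketch rather than the authors' procedure. It assumes that the three outputs are treated as three raters over the 19 recommendations and that the reliability value is computed on the SR labels. The label strings and the function name `fleiss_kappa` are hypothetical, and the letter coding is a transcription of the SR columns.

```python
from collections import Counter

# Assumption: SR labels per recommendation, in table order, for the three outputs
# (S = Strong, M = Moderate, L = Limited, C = Consensus).
OUTPUT_A_SR = "SSSSSLSSLSSSMSSSSLS"
OUTPUT_B_SR = "SSSCSCMSLCSSMSSSCCS"
OUTPUT_C_SR = "SSSSSLMSCSSSSSSSCCS"


def fleiss_kappa(*raters):
    """Fleiss' kappa for equally long label sequences, one sequence per rater."""
    n_raters = len(raters)
    items = list(zip(*raters))
    n_items = len(items)

    category_totals = Counter()
    observed = 0.0
    for item in items:
        counts = Counter(item)
        category_totals.update(counts)
        # Share of agreeing rater pairs for this item.
        agreeing = sum(c * c for c in counts.values()) - n_raters
        observed += agreeing / (n_raters * (n_raters - 1))
    observed /= n_items

    # Chance agreement from the overall share of each category.
    total = n_items * n_raters
    expected = sum((c / total) ** 2 for c in category_totals.values())
    return (observed - expected) / (1 - expected)


kappa = fleiss_kappa(OUTPUT_A_SR, OUTPUT_B_SR, OUTPUT_C_SR)
print(f"Fleiss' kappa = {kappa:.3f}")
```

With these inputs the sketch prints 0.409, in line with the reliability row above. The confidence interval and p-value are not reproduced here, since the source does not state how they were derived.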

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, H.J.; Yoon, P.W.; Yoon, J.Y.; Kim, H.; Choi, Y.J.; Park, S.; Moon, J.-K. Discrepancies in ChatGPT’s Hip Fracture Recommendations in Older Adults for 2021 AAOS Evidence-Based Guidelines. J. Clin. Med. 2024, 13, 5971. https://doi.org/10.3390/jcm13195971

Chicago/Turabian Style: Kim, Hong Jin, Pil Whan Yoon, Jae Youn Yoon, Hyungtae Kim, Young Jin Choi, Sangyoon Park, and Jun-Ki Moon. 2024. "Discrepancies in ChatGPT’s Hip Fracture Recommendations in Older Adults for 2021 AAOS Evidence-Based Guidelines" Journal of Clinical Medicine 13, no. 19: 5971. https://doi.org/10.3390/jcm13195971