Abstract

Background/Objectives: Aortic dissection (AD) and aortic intramural hematoma (IMH) are fatal diseases with similar clinical characteristics. Immediate computed tomography (CT) with a contrast medium is required to confirm the presence of AD or IMH. This retrospective study aimed to use CT images to differentiate AD and IMH from normal aorta (NA) using a deep learning algorithm. Methods: A 6-year retrospective study of non-contrast chest CT images was conducted at a university hospital in Seoul, Republic of Korea, from January 2016 to July 2021. The position of the aorta was analyzed in each CT image and categorized as NA, AD, or IMH. The images were divided into training, validation, and test sets in an 8:1:1 ratio. A deep learning model that can differentiate between AD and IMH from NA using non-contrast CT images alone, called YOLO (You Only Look Once) v4, was developed. The YOLOv4 model was used to analyze 8881 non-contrast CT images from 121 patients. Results: The YOLOv4 model can distinguish AD, IMH, and NA from each other simultaneously with a probability of over 92% using non-contrast CT images. Conclusions: This model can help distinguish AD and IMH from NA when applying a contrast agent is challenging.

1. Introduction

Aortic dissection (AD) and aortic intramural hematoma (IMH) are life-threatening conditions that require prompt diagnosis and treatment. Their clinical characteristics and therapeutic strategies are similar, and contrast-enhanced computed tomography (CT) is crucial for confirmation. AD and IMH have incidences of 2.6–3.5 cases per 100,000 person-years. Therefore, patients with these conditions are not commonly seen in emergency departments but do occasionally seek treatment [1,2,3]. Endovascular treatment for aortic disorders, particularly acute aortic syndrome, has become increasingly popular in recent years, and survival rates have increased [4]. However, the mortality rate of untreated type A AD and IMH increases by 1–2% per hour after symptom onset, and the acute-phase mortality rate is high [5]. Therefore, emergency physicians must consider AD and IMH in the initial differential diagnosis for patients who visit the emergency department with severe chest pain.

Contrast-enhanced CT is considered to be the best diagnostic tool because of its affordability, speed, and high specificity and sensitivity. According to data from the International Registry of Acute Aortic Dissection, 63% of respondents selected CT as their first diagnostic modality [5]. If there is a possibility of AD or IMH, an urgent contrast-enhanced CT is required. However, if there is a history of anaphylactic reaction to contrast agents or a high risk of renal failure, deciding whether to use contrast media can be challenging [6,7].

Non-contrast CT is less time-consuming than contrast-enhanced CT and can be performed in most emergency departments without waiting for serum creatinine levels to be confirmed, which often takes over an hour. However, this method may not be clinically relevant unless the diagnostic accuracy of non-contrast chest CT for AD and IMH is high.

Differentiating AD and IMH from the normal aorta (NA) using only non-contrast CT images in critical situations could enable faster treatment, increasing patient survival rates. To overcome these issues, artificial intelligence (AI) and deep learning have been used to detect AD on CT without contrast enhancement [8], but simultaneous detection of AD and IMH has not yet been studied. Since IMH and AD share common symptoms and require rapid diagnosis and treatment, the ability to simultaneously differentiate AD and IMH in non-contrast CT would be clinically significant for emergency physicians. Deep learning using non-contrast CT images would allow the development of an algorithm that accurately distinguishes AD and IMH from NA without a contrast agent.

2. Methods

2.1. Study Design and Setting

This retrospective study used CT images to differentiate AD and IMH from NA using a deep learning algorithm. From January 2016 to July 2021, CT images were obtained from a university hospital in Seoul in the Republic of Korea. The Institutional Review Board (IRB) of our hospital approved this study (IRB no. 2021-08-013); since this was a retrospective study, informed consent was not required. Identifying information was removed from each image before the onset of the study. All methods and procedures were performed in accordance with the Declaration of Helsinki.

2.2. Selection of Participants

CT images were categorized into three classes: AD, IMH, and NA. Patients in the AD and IMH groups were identified from the hospital’s electronic medical records using the International Classification of Diseases, 10th Revision (ICD-10) codes I71.01 (dissection of the thoracic aorta) and I71.01 (dissection of the aorta, unspecified). Four experts in emergency medicine (EM), one in cardiothoracic surgery (CS), and one in cardiovascular medicine examined patients’ medical records and chest angiography CT images. Two radiology specialists included patients identified as having AD or IMH. In this study, we obtained and included CT images from the aortic root to the iliac artery bifurcation. The NA group comprised 50 randomly selected cases imaged using the same modality (chest angiography CT) in which no abnormalities in the aorta or major arteries were observed. Patients aged <18 years, those with AD or IMH caused by trauma, and those with significant motion artifacts on CT images were excluded. Cases in which the position and morphology of the aorta differed from normal cases, such as those with prior aortic surgery, lobectomy, aortic aneurysm, or dextrocardia, were also excluded. CT images were acquired using Somatom Definition Flash 256 (Siemens, Munich, Germany) and Somatom Sensation 64 (Siemens, Munich, Germany). The selected images were extracted from the patients’ images in jpg format using the INFINITT M6 Picture Archiving and Communication System (PACS) (INFINITT Healthcare, Seoul, Republic of Korea), which is linked to the electronic medical record. The images underwent resizing, normalization, and augmentation. Windowing, which is the process of modifying the CT scan grayscale via the CT numbers (Hounsfield Units), was performed on the images to highlight key anatomical features for easier recognition. Specifically, the Window Width and Window Center metadata values of the images were adjusted to enhance the contrast of these features.
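As a minimal sketch of the windowing step, the snippet below clips Hounsfield Unit values to a display window and rescales them to [0, 1]. The function name `apply_window` and the window values (center 40, width 400) are illustrative assumptions; the window settings actually used in the study are not reported here.

```python
import numpy as np


def apply_window(hu, center, width):
    """Clip Hounsfield Units to a display window and rescale to [0, 1]."""
    lo = center - width / 2.0
    hi = center + width / 2.0
    clipped = np.clip(hu.astype(np.float32), lo, hi)
    return (clipped - lo) / (hi - lo)


# Hypothetical HU values and window settings, for illustration only.
hu_slice = np.array([[-1000.0, -160.0], [40.0, 240.0], [1000.0, 0.0]])
windowed = apply_window(hu_slice, center=40.0, width=400.0)
print(windowed)
```

Values below the lower window bound map to 0 and values above the upper bound map to 1, so a narrower window spreads the soft-tissue range over more of the grayscale.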

Furthermore, the images were resized to 512 × 512 pixels to estimate anchor boxes, and the pixel values were normalized between 0 and 1 to maximize data integrity. The mosaic method, which combines four images into one in certain ratios, was used for augmentation. This allows the model to identify objects at smaller scales, thus improving the generalization of the object detection task.
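The mosaic idea can be illustrated with a simplified sketch that tiles crops of four equally sized grayscale images around a random center point. This is not the augmentation code used in the study: the function name `mosaic`, the cropping strategy, and the range of the center point are assumptions, and the remapping of bounding boxes that a real pipeline needs is omitted.

```python
import numpy as np


def mosaic(images, out_size=512, rng=None):
    """Tile crops of four (out_size x out_size) images around a random centre."""
    if rng is None:
        rng = np.random.default_rng()
    if len(images) != 4:
        raise ValueError("mosaic expects exactly four images")
    # Random split point, kept away from the borders so all four tiles exist.
    cx = int(rng.integers(out_size // 4, 3 * out_size // 4))
    cy = int(rng.integers(out_size // 4, 3 * out_size // 4))
    canvas = np.zeros((out_size, out_size), dtype=np.float32)
    regions = [
        (0, cy, 0, cx),                # top-left tile
        (0, cy, cx, out_size),         # top-right tile
        (cy, out_size, 0, cx),         # bottom-left tile
        (cy, out_size, cx, out_size),  # bottom-right tile
    ]
    for img, (y0, y1, x0, x1) in zip(images, regions):
        # Simplification: take the top-left crop of each source image.
        canvas[y0:y1, x0:x1] = img[: y1 - y0, : x1 - x0]
    return canvas


# Four constant images stand in for normalized CT slices.
demo = [np.full((512, 512), v, dtype=np.float32) for v in (0.1, 0.2, 0.3, 0.4)]
combined = mosaic(demo, rng=np.random.default_rng(0))
print(combined.shape)
```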

2.3. Model Development for CT Image-Based AD and an IMH Detection Algorithm

Using the included 8881 images (AD, n = 2965; IMH, n = 1688; and NA, n = 4228) from 121 patients, we first located the aorta on CT images. The You Only Look Once version 4 (YOLOv4) framework was used to determine whether the located aorta contained AD or IMH. NA, AD, and IMH are the class types of the aorta detection data, which were labeled using the MakeSense tool “https://www.makesense.ai (accessed on 15 September 2024)” by entering the location coordinates and labels of the bounding box (Figure S1). Images assigned to the test set were not included in the training set, to avoid overfitting and to allow generalizability to be assessed.
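For illustration, a bounding box and class label can be written in the plain-text annotation format commonly used by YOLO implementations, where each line holds a class index followed by the box center and size normalized by the image dimensions. The class-index ordering in `CLASS_IDS`, the helper name `to_yolo_line`, and the example coordinates are hypothetical and are not taken from the study.

```python
# Hypothetical class-index ordering; the study does not report one.
CLASS_IDS = {"NA": 0, "AD": 1, "IMH": 2}


def to_yolo_line(label, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box to 'class x_center y_center width height'."""
    x_c = (x_min + x_max) / 2.0 / img_w
    y_c = (y_min + y_max) / 2.0 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{CLASS_IDS[label]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# Example: a 64 x 64 pixel box on a 512 x 512 image, labeled AD.
line = to_yolo_line("AD", 192, 192, 256, 256, 512, 512)
print(line)  # 1 0.437500 0.437500 0.125000 0.125000
```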

After all independent images were collected, the images were divided into training, validation, and test sets in an 8:1:1 ratio. Training, validation, and testing were performed to determine whether AD or IMH was present on images.
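A generic image-level 8:1:1 split can be sketched as follows. The function `split_indices` and the random seed are assumptions made for illustration. An exact 8:1:1 partition of 8881 images yields slightly different set sizes from those reported in Section 3.2, so the authors' procedure evidently differed in detail.

```python
import random


def split_indices(n, ratios=(8, 1, 1), seed=0):
    """Shuffle indices 0..n-1 and split them by the given integer ratios."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    total = sum(ratios)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


train, val, test = split_indices(8881)
print(len(train), len(val), len(test))  # 7104 888 889
```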

The machine learning process had three stages. (1) The aorta was tagged on the CT images used in the first step of the study using the YOLO program. (2) The images and labeled data values were used in the training process to locate the aorta on the CT images. (3) Training was performed to confirm the presence or absence of lesions and the type of lesions in the aorta located in the previous step.

2.3.1. Aorta Labeling on Chest CT Images and Data Processing

For aorta segmentation, one researcher masked the entire aortic area on the chest CT images in agreement with a second researcher. Non-contrast and post-contrast CT images from the AD and IMH groups were extracted separately and then paired at the same anatomical level. Because AD and IMH are difficult to identify in non-contrast images, each non-contrast image was classified as AD, IMH, or NA by assessing the presence of AD or IMH lesions on the paired post-contrast image. All this work was performed by EM specialists. In case of disagreement between the two EM reviewers, a third reviewer (EM or CS) was consulted; differences were resolved through discussion until a consensus was reached.

2.3.2. Object Detection Model

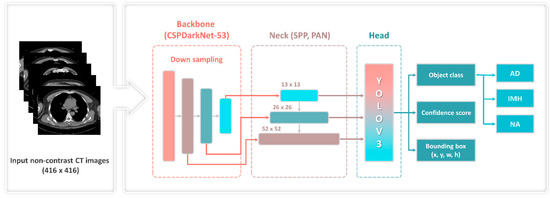

The one-stage detector YOLOv4, which comprises a backbone, neck, and head and simultaneously predicts classes and bounding boxes, was used as the object detection model (Figure 1). The backbone converts the input image into a feature map. The head receives the feature map and performs the localization; that is, it predicts the class and bounding boxes of the image. The neck, which connects the backbone to the head, reconstructs and refines the feature map. Additionally, a bag of freebies (BoF) and a bag of specials (BoS) were applied to improve the model’s performance. The BoF comprises data augmentation techniques, regularization, and loss functions that improve performance without increasing inference cost. The BoS includes skip connections, a feature pyramid network, and activation functions, which slightly increase the inference cost while improving performance.

Figure 1.

YOLOv4 framework with CSP-Darknet53. AD—aortic dissection; CT—computed tomography; CSP—cross-stage partial; IMH—aortic intramural hematoma; NA—normal aorta; PAN—path aggregation network; SPP—spatial pyramid pooling; YOLOv3—You Only Look Once version 3.

2.3.3. Main Characteristics of the YOLOv4 Framework

Figure 1 illustrates the overall structure of the YOLOv4 object detection model. The backbone generated feature maps using a down-sampling technique and a pretrained cross-stage partial (CSP)-Darknet53 neural network with an input image of 416 × 416 pixels. CSP decreased (by ~20%) the computation required by a convolutional neural network. Subsequently, the neck, comprising the spatial pyramid pooling and path aggregation network, connected feature maps of various scales (13 × 13, 26 × 26, and 52 × 52) and refined the data. The existing YOLOv3 head was used to predict the bounding box location, object class, and confidence score.
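The three feature-map scales follow from the input size and the down-sampling factors of the detection heads. The short calculation below assumes the strides of 32, 16, and 8 that are standard for YOLOv3-style heads; the strides are not stated explicitly in the text.

```python
INPUT_SIZE = 416        # input resolution stated for the backbone
STRIDES = (32, 16, 8)   # assumed down-sampling factors of the three heads

# Each head sees a grid of INPUT_SIZE / stride cells per side.
grid_sizes = [INPUT_SIZE // s for s in STRIDES]
print(grid_sizes)  # [13, 26, 52]
```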

BoF used CutMix, mosaic, and label smoothing in the model as data augmentation techniques, whereas DropBlock and label smoothing were used as regularization techniques. Moreover, the Complete Intersection over Union (CIOU) loss, which is based on the similarity between predicted and ground-truth bounding boxes, was applied as the loss function for bounding box regression. CIOU loss aims to provide a more accurate localization loss by accounting for box overlap, center distance, and aspect ratio [9].
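A minimal sketch of the CIOU loss for a single pair of boxes is given below, following the commonly stated formulation: one minus the IoU, plus the squared center distance normalized by the squared diagonal of the smallest enclosing box, plus a weighted aspect-ratio term. The function name `ciou_loss` and the corner-coordinate box convention are assumptions; this is not the training code used in the study.

```python
import math


def ciou_loss(box_p, box_g):
    """CIoU loss for boxes given as (x_min, y_min, x_max, y_max)."""
    px0, py0, px1, py1 = box_p
    gx0, gy0, gx1, gy1 = box_g
    # Intersection over union of the two boxes.
    iw = max(0.0, min(px1, gx1) - max(px0, gx0))
    ih = max(0.0, min(py1, gy1) - max(py0, gy0))
    inter = iw * ih
    union = (px1 - px0) * (py1 - py0) + (gx1 - gx0) * (gy1 - gy0) - inter
    iou = inter / union
    # Squared distance between the box centres.
    rho2 = ((px0 + px1) / 2 - (gx0 + gx1) / 2) ** 2 \
        + ((py0 + py1) / 2 - (gy0 + gy1) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(px1, gx1) - min(px0, gx0)) ** 2 \
        + (max(py1, gy1) - min(py0, gy0)) ** 2
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4 / math.pi ** 2) * (
        math.atan((gx1 - gx0) / (gy1 - gy0))
        - math.atan((px1 - px0) / (py1 - py0))
    ) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0
    return 1 - iou + rho2 / c2 + alpha * v


print(ciou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes give zero loss
print(ciou_loss((0, 0, 2, 2), (1, 1, 3, 3)))  # partial overlap
```

Unlike a plain IoU loss, the center-distance term still provides a gradient when the boxes do not overlap at all.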

In this study, 8881 CT images were used to implement the YOLOv4 object detection model as training (80%), validation (10%) and testing (10%) datasets. We classified the images not at the patient level, but at the image level for AD, IMH, and NA. Non-contrast chest CT images obtained from a single patient comprise approximately 70–100 images, depending on the patient’s physique. Even if a patient is diagnosed with AD or IMH, only some of these images contain the lesion, while the rest show an NA. If images were labeled as AD, IMH, or NA at the patient level instead of the image level during training, it could lead to incorrect learning. Images without AD or IMH could be misclassified due to the diagnosis of AD or IMH. Therefore, to prevent such issues, we labeled whether AD or IMH was present and conducted training and testing at the image level. Each image was assigned three class names (AD, IMH, and NA) and the aortic bounding box locations. The gradient optimization function used was the momentum stochastic gradient descent; the batch size was 64 during network training. The number of epochs was set to 2000 for each class and 6000 in total.

2.3.4. Main Metrics for the Detection Algorithm of AD and IMH

The most popular metrics for object detection models were used: mean average precision (mAP), Intersection Over Union (IoU), and precision–recall (P-R) curve. In object detection frameworks, true negatives (TNs) are not used [10]. TN represents a correctly undetected nonexistent object; in object detection, there are an infinite number of bounding boxes that should not be detected within the image [11]. Therefore, any TN-based metric, such as receiver operating characteristic curve, accuracy, or specificity, was avoided.

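In object detection tasks, mAP represents the mean of the average precision (AP) values across all categories (Equation (1)):

$$\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{AP}_i \tag{1}$$

where $\mathrm{AP}_i$ represents the AP value of the $i$th class and $N$ represents the total number of classes. AP and mAP have values within the range [0, 1].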

AP is a comprehensive index that considers precision and recall. Since precision and recall have values between 0 and 1, mAP also has a value between 0 and 1.

In classification tasks with localization and object detection, the IoU (Equation (2)) is frequently used to determine the reliability of the bounding box location:

$$\mathrm{IoU} = \frac{\mathrm{area}(B_{p} \cap B_{gt})}{\mathrm{area}(B_{p} \cup B_{gt})} \tag{2}$$

where $B_{p}$ and $B_{gt}$ are the predicted and ground truth bounding boxes, respectively [12]. IoU equals zero for non-overlapping bounding boxes and one for perfect overlap.
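The IoU of two axis-aligned boxes can be computed as in the following sketch. The function name `iou` and the corner-coordinate box convention `(x_min, y_min, x_max, y_max)` are assumptions made for illustration.

```python
def iou(box_p, box_gt):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Width and height of the overlap region (zero if the boxes are disjoint).
    iw = max(0.0, min(box_p[2], box_gt[2]) - max(box_p[0], box_gt[0]))
    ih = max(0.0, min(box_p[3], box_gt[3]) - max(box_p[1], box_gt[1]))
    inter = iw * ih
    area_p = (box_p[2] - box_p[0]) * (box_p[3] - box_p[1])
    area_gt = (box_gt[2] - box_gt[0]) * (box_gt[3] - box_gt[1])
    return inter / (area_p + area_gt - inter)


print(iou((0, 0, 2, 2), (0, 0, 2, 2)))  # 1.0 for perfect overlap
print(iou((0, 0, 1, 1), (2, 2, 3, 3)))  # 0.0 for non-overlapping boxes
```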

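The IoU threshold was set to 0.5. A detection with an IoU > 0.5 was considered accurate, and precision and recall values were determined on this basis. As shown in Equations (3) and (4), the AP for a specific class is calculated by interpolating all points:

$$\mathrm{AP} = \sum_{n} (r_{n+1} - r_{n})\, p_{\mathrm{interp}}(r_{n+1}) \tag{3}$$

$$p_{\mathrm{interp}}(r_{n+1}) = \max_{\tilde{r} \ge r_{n+1}} p(\tilde{r}) \tag{4}$$

where $r_{n}$ are the recall values in increasing order and $p(\tilde{r})$ is the precision measured at recall $\tilde{r}$. Consequently, a P-R curve was developed to determine the AP over all recall values.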

The P-R curve plots precision against recall as the confidence score threshold is varied. It is used to assess the object detection model’s performance, which is typically summarized as the area under the P-R curve.
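The all-point interpolation used to turn a P-R curve into an AP value can be sketched as follows: precision is first replaced by its running maximum from the right, and the area under the resulting step curve is then summed. The function name `average_precision` and the example P-R points are hypothetical; they are not results from this study.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP; inputs must be sorted by increasing recall."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Interpolate: each precision becomes the maximum precision to its right.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangular areas between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


# Hypothetical P-R points, for illustration only.
print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```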

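The mathematical definitions of precision, recall, and F1-score are as follows:

$$\mathrm{Precision} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}$$

$$\mathrm{Recall} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where TP, FP, and FN denote the numbers of true positives, false positives, and false negatives (ground truth objects that were not detected), respectively.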
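The standard definitions of these three metrics can be expressed directly in terms of the numbers of true positives (TP), false positives (FP), and false negatives (FN). In the sketch below, the function name `precision_recall_f1` and the example counts are hypothetical and do not correspond to the study's results.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts, for illustration only.
precision, recall, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(precision, recall, round(f1, 4))
```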

2.4. Analysis

Data were compiled using a standard spreadsheet application (Excel 2016; Microsoft, Redmond, WA, USA) and analyzed using SPSS 20 (SPSS Inc., Chicago, IL, USA). The Kolmogorov–Smirnov test was performed to evaluate the distribution normality of all datasets. Descriptive statistics are presented as frequencies and percentages for categorical data and either a median and interquartile range (nonnormal distribution) or a mean and standard deviation (normal distribution) for continuous data. We used Student’s t test or the Mann–Whitney U test to compare the characteristics of segmented and non-segmented data. p-values of <0.05 were considered statistically significant. All statistical calculations were performed using Python and R software (version 3.4.1; “https://www.r-project.org (accessed on 15 September 2024)”).

3. Results

3.1. Characteristics of the Study Subjects

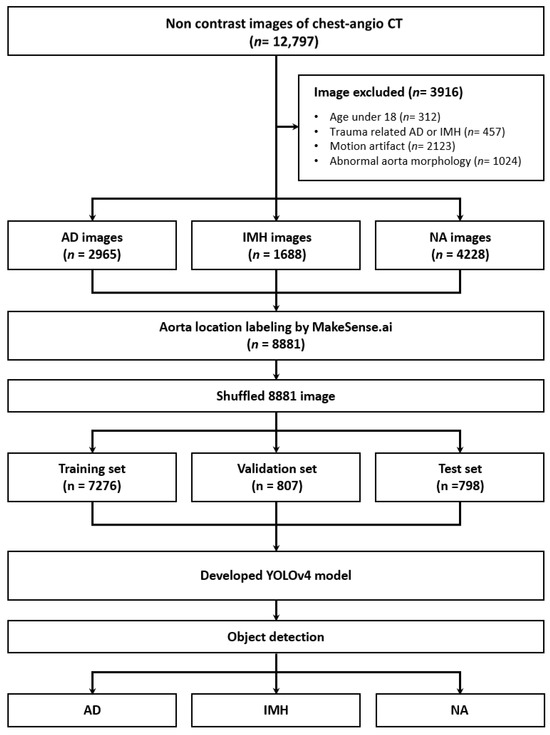

In total, 12,797 images were obtained from 121 patients, 72 of whom were male (59.5%), with a median age of 63.0 years (interquartile range, 50.0–73.0). Based on the exclusion criteria, 3916 images were excluded. Finally, 8881 images, including 2965 of AD and 1688 of IMH, were included (Figure 2). Among the variables, except for hypertension, no significant differences were observed between the groups (all p > 0.05). Although there were substantial differences in BMI among groups, the retrospective study’s limitations resulted in numerous missing values (55/121, 45.5%) (Table 1).

Figure 2.

Flow diagram of the study. CT—computed tomography; AD—aortic dissection; IMH—aortic intramural hematoma; NA—normal aorta; YOLOv4—You Only Look Once version 4.

Table 1.

Basic patient characteristics.

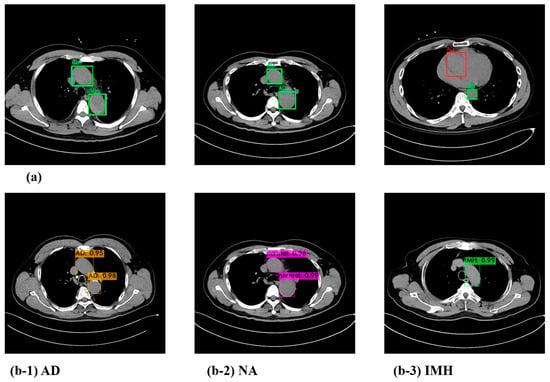

The YOLOv4 model outputs the predicted object class, confidence score, and bounding box coordinates when making the final prediction at the head. The confidence score is calculated as the “probability of an object class × IoU”. An example test output of the model from the experiment to detect the presence or absence of a dissection in the aorta is shown in Figure 3b, where the bounding box and confidence score for the detected location and class can be confirmed.

Figure 3.

Visualization of the prediction result (a) and test results with the confidence score of the YOLOv4 model (b). (b-1) AD; (b-2) NA; (b-3) IMH. AD—aortic dissection; IMH—aortic intramural hematoma; NA—normal aorta.

Furthermore, we compared the predicted results of the model with the actual ground truth class and the bounding box. Figure 3a depicts cases wherein the model made accurate predictions as well as those in which it did not. The blue box represents the ground truth, the green box represents the predicted result, and the red box represents the box that the detector misidentified. Since the CT images for each case used in the test comprised sequential data, it was possible to detect and correct such sparse errors.

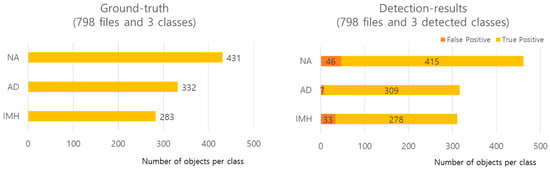

3.2. Model Performance of the Algorithm of AD and IMH Detection

In the experiment aimed at detecting NA, AD, and IMH on CT images, of the 8881 images extracted from 121 patients, 7276 were used for the training set, 807 were used for the validation set, and 798 were used for the test set. The three class distributions and detailed detection results (false positive [FP] and true positive [TP]) of the model using the test data are shown in Figure 4. TP indicates correct detection when the IoU is greater than the threshold value; FP indicates positive detection when the IoU is smaller than the threshold value. Cases without detection were excluded, resulting in a difference between ground truth and predicted results.

Figure 4.

Distribution of each class and detailed detection results. AD—aortic dissection; IMH—aortic intramural hematoma; NA—normal aorta.

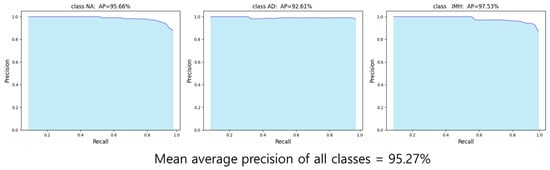

The final test results of the YOLOv4 model for AD and IMH detection, based on the evaluation metrics for object detection, showed an AP of 95.66%, 92.61%, and 97.53% for NA, AD, and IMH, respectively (Figure 5). The final mAP for all classes was 95.27%.
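The reported mAP can be checked as the arithmetic mean of the three per-class AP values given above; the variable names are illustrative.

```python
# Per-class AP values (%) as reported for the test set.
ap_percent = {"NA": 95.66, "AD": 92.61, "IMH": 97.53}

# mAP is the unweighted mean over the classes.
map_percent = sum(ap_percent.values()) / len(ap_percent)
print(round(map_percent, 2))  # 95.27
```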

Figure 5.

Average precision graph (P-R curve) for each class. AD—aortic dissection; AP—average precision; IMH—aortic intramural hematoma; NA—normal aorta.

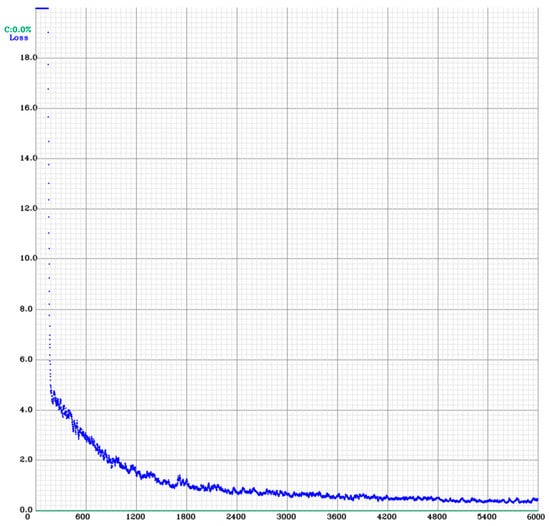

Figure 6 shows a descending trend in the CIOU loss curve, indicating that the model is learning and improving its ability to predict the bounding boxes accurately.

Figure 6.

Results of the YOLOv4 training: CIOU loss curve. CIOU—Complete Intersection over Union; YOLOv4—You Only Look Once version 4.

4. Discussion

We developed a deep learning-based algorithm that can accurately detect AD and IMH using non-contrast-enhanced CT images. The proposed YOLOv4 model demonstrated an AP of 95.66% for NA, 92.61% for AD, and 97.53% for IMH.

The diagnosis of AD and IMH is generally based on imaging tests [13]. In addition to CT with contrast agents, magnetic resonance (MR) imaging and transesophageal echocardiography (TEE) are also commonly used as imaging examination methods. Compared to CT with contrast agents, MR offers the advantages of fewer side effects and lower radiation exposure and is the best alternative examination for patients with CT contraindications [14]. TEE is significant in intraprocedural decision-making, as it helps to decrease complications of thoracic endovascular aortic repair (TEVAR) and improve survival rates. However, in emergency settings, contrast-enhanced CT scans are still commonly used [15].

AD and IMH treatment involves aggressive blood pressure control, and surgical intervention may be necessary to repair or replace affected aortic segments. Owing to rapid progression of AD and IMH, early and accurate diagnosis is crucial [16,17]. Recent studies have shown that an AI-based algorithm for detecting AD is more effective than human interpretation of chest CT scans [8]. Nevertheless, this AI study was limited in its ability to detect IMH on CT scans, since it did not include IMH in the training of AI-based algorithms. Although AD and IMH have different underlying pathophysiology, they share many similar symptoms and require prompt medical attention.

Clinicians, especially emergency physicians, may have a reduced diagnostic ability in scenarios where patients present with atypical symptoms or when patient influx to the emergency room is high, leading to time constraints. However, the YOLOv4 model could provide consistent and stable performance even in asymptomatic patients without limiting reading time, potentially improving the accuracy and efficiency of AD and IMH diagnoses [18,19,20]. The YOLOv4 model used in this study may also aid clinical decision-making by alerting nonspecialist clinicians to cases with suspected AD or IMH. It can also indicate specific slice levels suspicious of AD or IMH, enabling clinicians to review the identified images in more detail.

Contrast-enhanced CT for the diagnosis of AD and IMH can lead to complications such as anaphylactic reaction to the contrast agent, contrast-induced nephropathy (CIN), and skin necrosis or vasculitis resulting from contrast agent leakage into the extravascular area [6,7]. Since contrast agents are excreted through the kidneys, emergency physicians confirm adequate renal function in patients before conducting contrast-enhanced CT. However, in urgent cases where prompt use of contrast is imperative to differentiate AD or IMH from NA, physicians must weigh the benefits and risks of using contrast before verifying renal function. Using the YOLOv4 model, emergency physicians could reduce delays in CT scan time and unnecessary complications, such as anaphylactic reactions or CIN caused by contrast exposure from enhanced CT.

The YOLOv4 model is a deep learning framework that is widely used for real-time object detection in images and videos. Its advantages include speed, accuracy, and versatility, making it useful for various object detection applications such as autonomous vehicles, surveillance systems, and robotics [21]. Overall, YOLOv4 is a powerful object detection model that can achieve state-of-the-art accuracy while maintaining real-time speed [21,22,23].

In AD or IMH, accurate imaging interpretation is crucial in diagnostic and exclusion processes, as observed symptoms can also be present in other conditions, such as acute coronary syndrome [24,25]. Contrast-enhanced CT not only confirms AD or IMH diagnosis but also provides information on the type of dissection and the extent of involvement in the vessels that supply blood to the brain, heart, kidneys, and other organs, helping determine the appropriate treatment approach [24,25]. Therefore, although the AI algorithm cannot replace contrast-enhanced CT in AD and IMH diagnosis, the proposed YOLOv4 model minimizes the unnecessary use of contrast media and helps diagnose patients with suspected AD or IMH using only non-contrast images, even before verifying the serum creatinine level results, which can take more than an hour. Moreover, the overall AP calculated for the images was >95%. When serial non-contrast CT images from a single patient are sequentially applied to the YOLOv4 model, and if AD or IMH is detected in multiple consecutive images, the accuracy of diagnoses by emergency physicians will improve.
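One way to use the serial slices of a single patient is to flag the case only when a lesion class is predicted on several consecutive images, which also suppresses isolated false detections. The sketch below illustrates this idea. The function name `flag_patient`, the run length of three slices, and the choice to count AD and IMH together as one run are assumptions; the study does not specify such a rule.

```python
def flag_patient(slice_labels, min_run=3):
    """Return True if AD or IMH is predicted on min_run consecutive slices."""
    run = 0
    for label in slice_labels:
        # Extend the current run on a lesion prediction, otherwise reset it.
        run = run + 1 if label in ("AD", "IMH") else 0
        if run >= min_run:
            return True
    return False


# Hypothetical per-slice predictions for two patients.
isolated_error = ["NA", "NA", "AD", "NA", "NA", "NA"]
consistent_lesion = ["NA", "AD", "AD", "AD", "AD", "NA"]
print(flag_patient(isolated_error))     # False
print(flag_patient(consistent_lesion))  # True
```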

This study has some limitations. First, chest CT images and patient data were obtained from a single center, and our proposed model has not been validated in other hospital settings. Therefore, further investigation using non-contrast chest CT images from various hospitals is needed. Second, we did not assess how different aortic conditions influenced our model’s results. Third, we did not compare the performance of the model and physicians with respect to key factors, such as clinical outcomes and the equipment required to use the model as a screening tool. Fourth, this study did not perform an analysis concerning BMI, which could influence image quality [26]. Fifth, we excluded images that had significant motion artifacts from our study. Because of their instability, critically ill patients with AD and IMH may have more motion artifacts than ordinary patients. As a result, we believe that additional study is required to determine the accuracy of AD and IMH diagnosis using images of patients with motion artifacts, as well as to develop algorithms that can substantially increase the accuracy. Finally, we did not include penetrating aortic ulcers (PAUs). Acute aortic syndrome includes PAUs, along with AD and IMH. However, we excluded PAUs from our study because of insufficient imaging data.

5. Conclusions

Deep learning can improve the detection accuracy for AD and IMH in non-contrast CT images. Our results can help rapidly screen unstable patients with suspected AD or IMH in the emergency department and those with a history of anaphylaxis or CIN risk.

Supplementary Materials

The following supporting information can be downloaded at https://www.mdpi.com/article/10.3390/jcm13226868/s1, Figure S1: Depiction of a masked aortic CT image using MakeSense. CT, computed tomography.

Author Contributions

Y.-S.K. and H.Y.C. conceived the study. Y.-S.K., D.L. and J.-W.K. designed the experiments. D.L. and J.-W.K. wrote the code and performed the experiments. J.K., D.G.S., J.K.P., G.L. and B.K. collected and validated the data. G.H.K., Y.S.J., W.K., Y.L., B.K. and J.G.K. analyzed and interpreted the results. Y.-S.K., J.G.K. and H.Y.C. wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. NRF-2020R1G1A1101903 and No. RS-2023-00214006).

Institutional Review Board Statement

The Institutional Review Board (IRB) of our hospital approved this study (IRB no. 2021-08-013, approval date: 25 August 2021); since this was a retrospective study, informed consent was not required. All methods and procedures were performed in accordance with the Declaration of Helsinki.

Informed Consent Statement

The requirement for informed patient consent was waived, owing to the retrospective nature of the study and the use of de-identified clinical data.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alter, S.M.; Eskin, B.; Allegra, J.R. Diagnosis of Aortic Dissection in Emergency Department Patients is Rare. West. J. Emerg. Med. 2015, 16, 629–631.

- Stevens, L.M.; Madsen, J.C.; Isselbacher, E.M.; Khairy, P.; MacGillivray, T.E.; Hilgenberg, A.D.; Agnihotri, A.K. Surgical management and long-term outcomes for acute ascending aortic dissection. J. Thorac. Cardiovasc. Surg. 2009, 138, 1349–1357.e1341.

- Writing Group Members; Hiratzka, L.F.; Bakris, G.L.; Beckman, J.A.; Bersin, R.M.; Carr, V.F.; Casey, D.E.; Eagle, K.A.; Hermann, L.K.; Isselbacher, E.M.; et al. 2010 ACCF/AHA/AATS/ACR/ASA/SCA/SCAI/SIR/STS/SVM Guidelines for the Diagnosis and Management of Patients With Thoracic Aortic Disease. Circulation 2010, 121, e266–e369.

- Shi, R.; Wooster, M. Hybrid and Endovascular Management of Aortic Arch Pathology. J. Clin. Med. 2024, 13, 6248.

- Harris, K.M.; Nienaber, C.A.; Peterson, M.D.; Woznicki, E.M.; Braverman, A.C.; Trimarchi, S.; Myrmel, T.; Pyeritz, R.; Hutchison, S.; Strauss, C.; et al. Early Mortality in Type A Acute Aortic Dissection: Insights From the International Registry of Acute Aortic Dissection. JAMA Cardiol. 2022, 7, 1009–1015.

- Rose, T.A., Jr.; Choi, J.W. Intravenous Imaging Contrast Media Complications: The Basics That Every Clinician Needs to Know. Am. J. Med. 2015, 128, 943–949.

- Roditi, G.; Khan, N.; van der Molen, A.J.; Bellin, M.-F.; Bertolotto, M.; Brismar, T.; Correas, J.-M.; Dekkers, I.A.; Geenen, R.W.F.; Heinz-Peer, G.; et al. Intravenous contrast medium extravasation: Systematic review and updated ESUR Contrast Media Safety Committee Guidelines. Eur. Radiol. 2022, 32, 3056–3066.

- Hata, A.; Yanagawa, M.; Yamagata, K.; Suzuki, Y.; Kido, S.; Kawata, A.; Doi, S.; Yoshida, Y.; Miyata, T.; Tsubamoto, M.; et al. Deep learning algorithm for detection of aortic dissection on non-contrast-enhanced CT. Eur. Radiol. 2021, 31, 1151–1159.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.

- Padilla, R.; Netto, S.L.; Da Silva, E.A. A survey on performance metrics for object-detection algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

- Padilla, R.; Passos, W.L.; Dias, T.L.; Netto, S.L.; Da Silva, E.A. A comparative analysis of object detection metrics with a companion open-source toolkit. Electronics 2021, 10, 279.

- Kolchev, A.; Pasynkov, D.; Egoshin, I.; Kliouchkin, I.; Pasynkova, O.; Tumakov, D. YOLOv4-Based CNN Model versus Nested Contours Algorithm in the Suspicious Lesion Detection on the Mammography Image: A Direct Comparison in the Real Clinical Settings. J. Imaging 2022, 8, 88.

- Isselbacher, E.M.; Preventza, O.; Hamilton Black, J.; Augoustides, J.G.; Beck, A.W.; Bolen, M.A.; Braverman, A.C.; Bray, B.E.; Brown-Zimmerman, M.M.; Chen, E.P.; et al. 2022 ACC/AHA Guideline for the Diagnosis and Management of Aortic Disease: A Report of the American Heart Association/American College of Cardiology Joint Committee on Clinical Practice Guidelines. Circulation 2022, 146, e334–e482.

- Garg, I.; Grist, T.M.; Nagpal, P. MR Angiography for Aortic Diseases. Magn. Reson. Imaging Clin. N. Am. 2023, 31, 373–394.

- Eissa, M.; Mir-Ghassemi, A.; Nagpal, S.; Talab, H.F. Detection of inadvertent passage of guide wire into the false lumen during thoracic endovascular aortic repair of Type B aortic dissection by transesophageal echocardiography. JA Clin. Rep. 2022, 8, 50.

- Mussa, F.F.; Horton, J.D.; Moridzadeh, R.; Nicholson, J.; Trimarchi, S.; Eagle, K.A. Acute Aortic Dissection and Intramural Hematoma: A Systematic Review. JAMA 2016, 316, 754–763.

- Sorber, R.; Hicks, C.W. Diagnosis and Management of Acute Aortic Syndromes: Dissection, Penetrating Aortic Ulcer, and Intramural Hematoma. Curr. Cardiol. Rep. 2022, 24, 209–216.

- Richens, J.G.; Lee, C.M.; Johri, S. Improving the accuracy of medical diagnosis with causal machine learning. Nat. Commun. 2020, 11, 3923.

- Mirbabaie, M.; Stieglitz, S.; Frick, N.R.J. Artificial intelligence in disease diagnostics: A critical review and classification on the current state of research guiding future direction. Health Technol. 2021, 11, 693–731.

- Henry, K.E.; Kornfield, R.; Sridharan, A.; Linton, R.C.; Groh, C.; Wang, T.; Wu, A.; Mutlu, B.; Saria, S. Human–machine teaming is key to AI adoption: Clinicians’ experiences with a deployed machine learning system. npj Digit. Med. 2022, 5, 97.

- Guo, C.; Lv, X.-L.; Zhang, Y.; Zhang, M.-L. Improved YOLOv4-tiny network for real-time electronic component detection. Sci. Rep. 2021, 11, 22744.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Jiang, Y.; Li, W.; Zhang, J.; Li, F.; Wu, Z. YOLOv4-dense: A smaller and faster YOLOv4 for real-time edge-device based object detection in traffic scene. IET Image Process. 2023, 17, 570–580.

- Sayed, A.; Munir, M.; Bahbah, E.I. Aortic Dissection: A Review of the Pathophysiology, Management and Prospective Advances. Curr. Cardiol. Rev. 2021, 17, e230421186875.

- Carrel, T.; Sundt, T.M., 3rd; von Kodolitsch, Y.; Czerny, M. Acute aortic dissection. Lancet 2023, 401, 773–788.

- Bouchareb, Y.; Tag, N.; Sulaiman, H.; Al-Riyami, K.; Jawa, Z.; Al-Dhuhli, H. Optimization of BMI-Based Images for Overweight and Obese Patients—Implications on Image Quality, Quantification, and Radiation Dose in Whole Body (18)F-FDG PET/CT Imaging. Nucl. Med. Mol. Imaging 2023, 57, 180–193.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).