A Novel Artificial Intelligence-Based Mobile Application for Pediatric Weight Estimation

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Population and Design

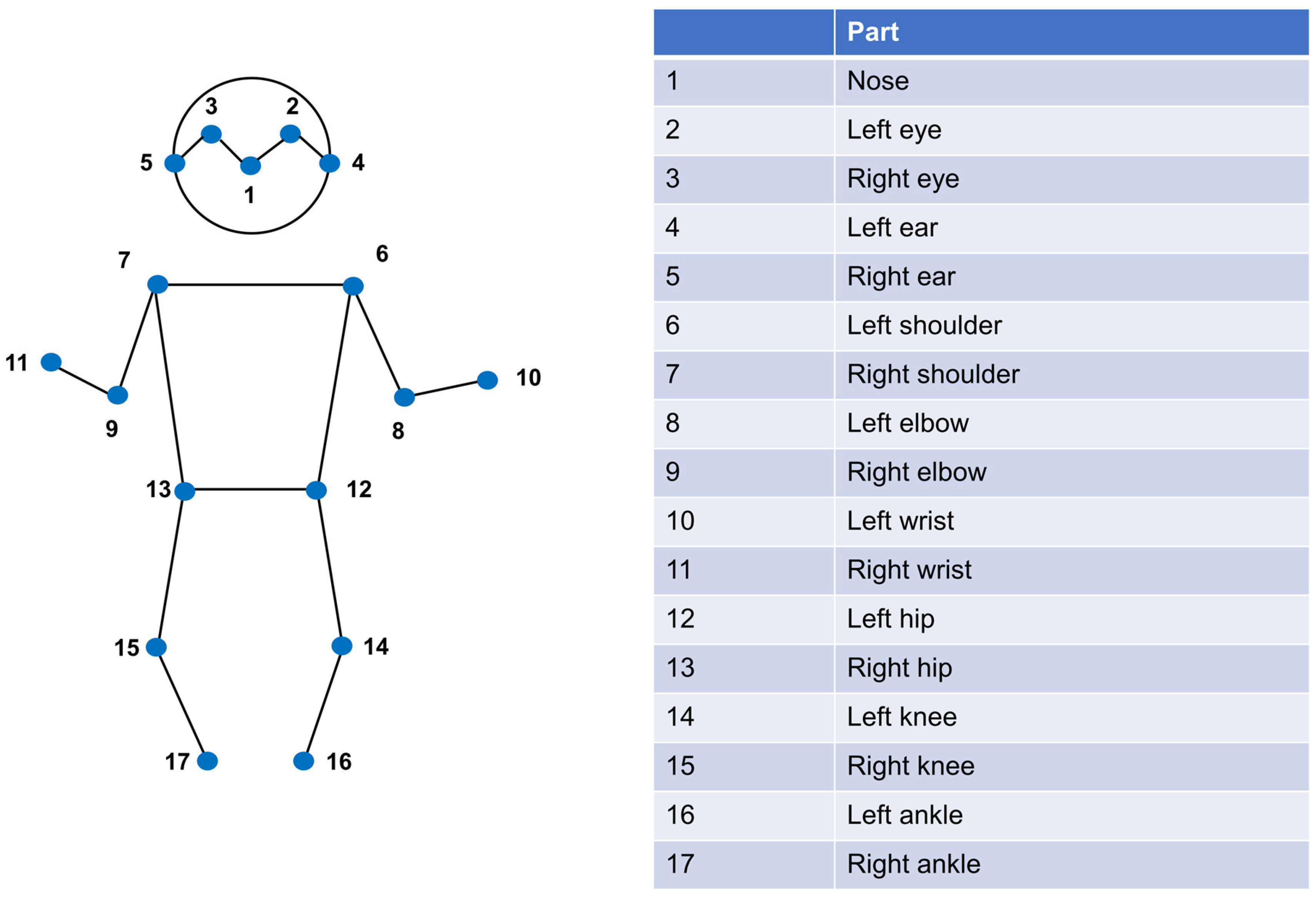

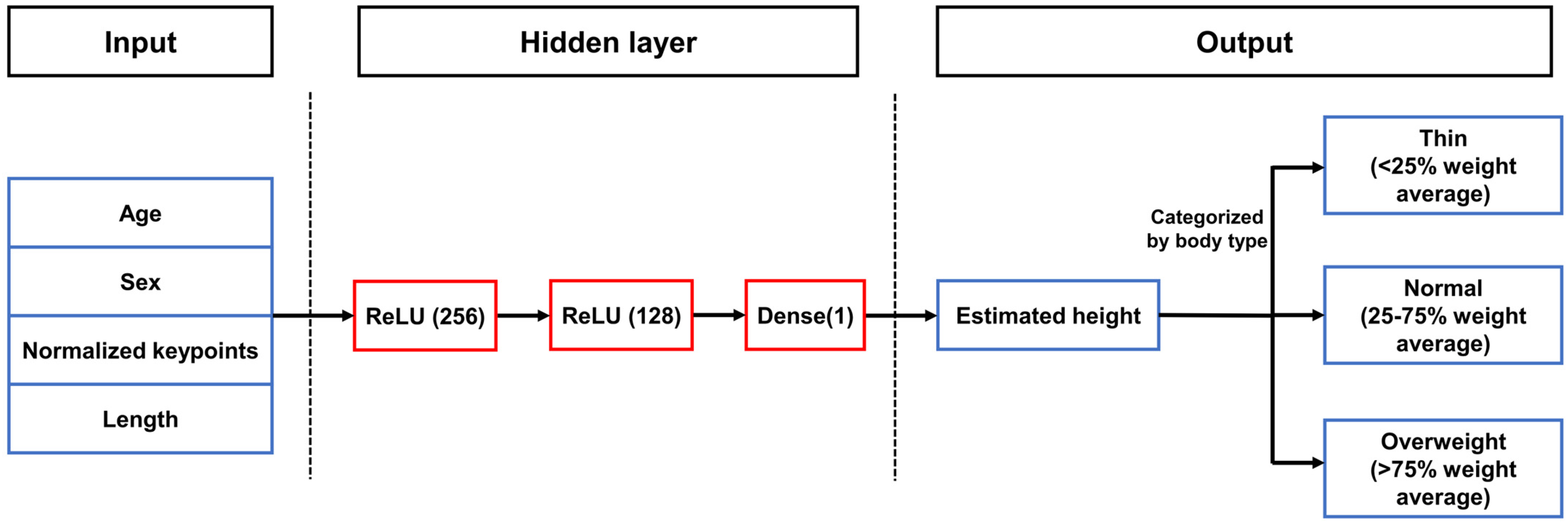

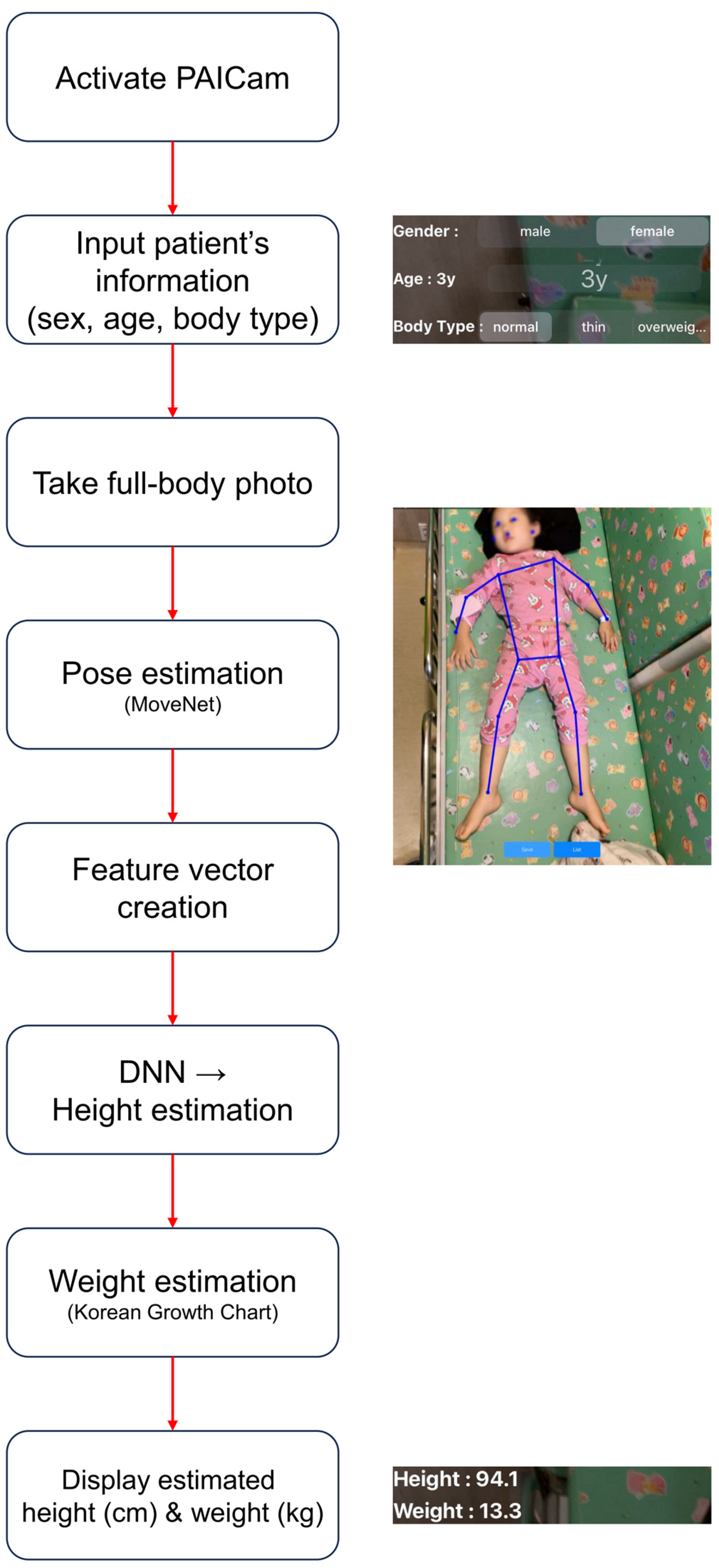

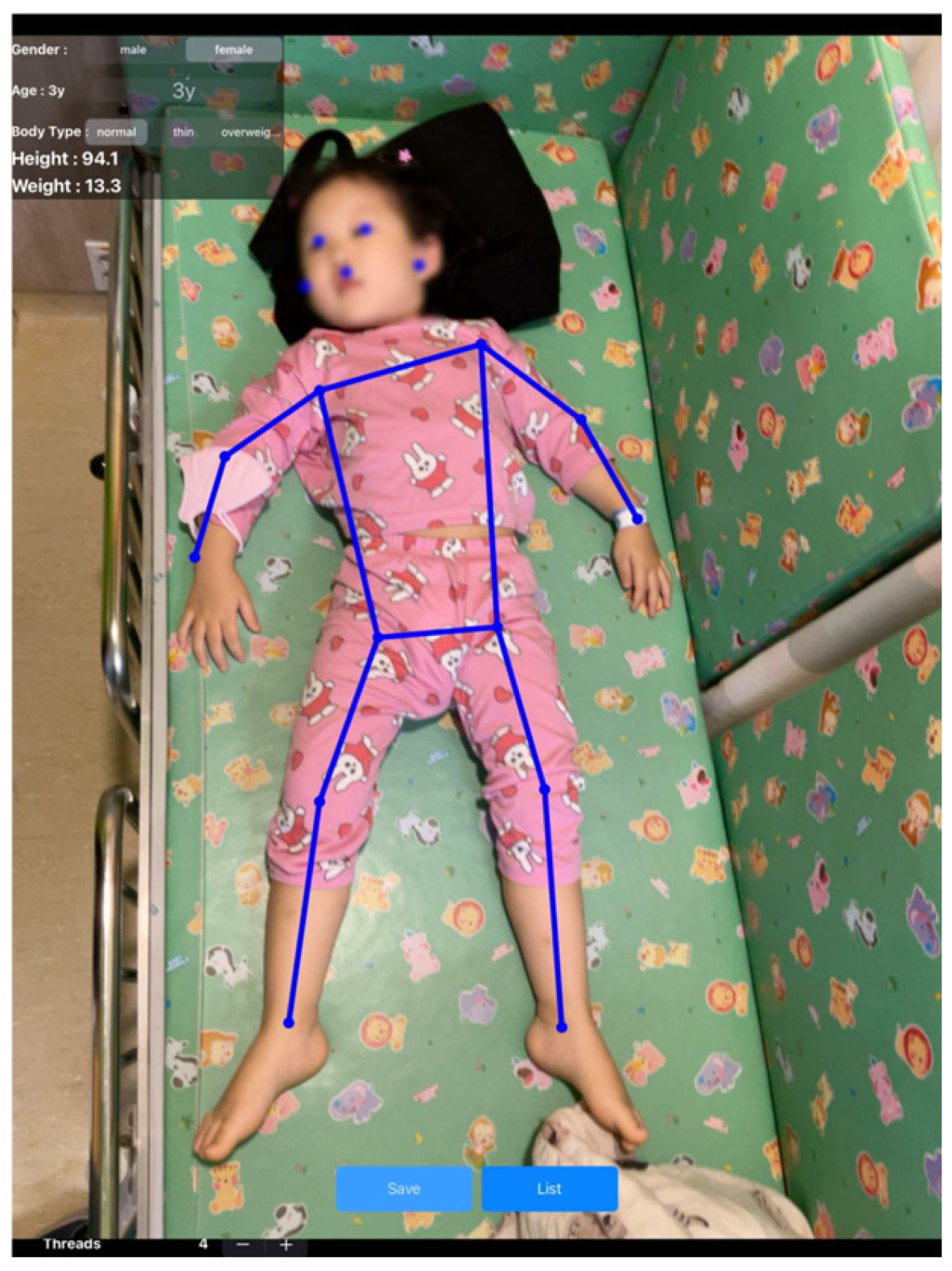

2.2. Pediatric Artificial Intelligence Weight-Estimating Camera (PAICam) and DNN

2.3. Data Collection and Sample Size

2.4. Data Analysis

3. Results

3.1. General Characteristics

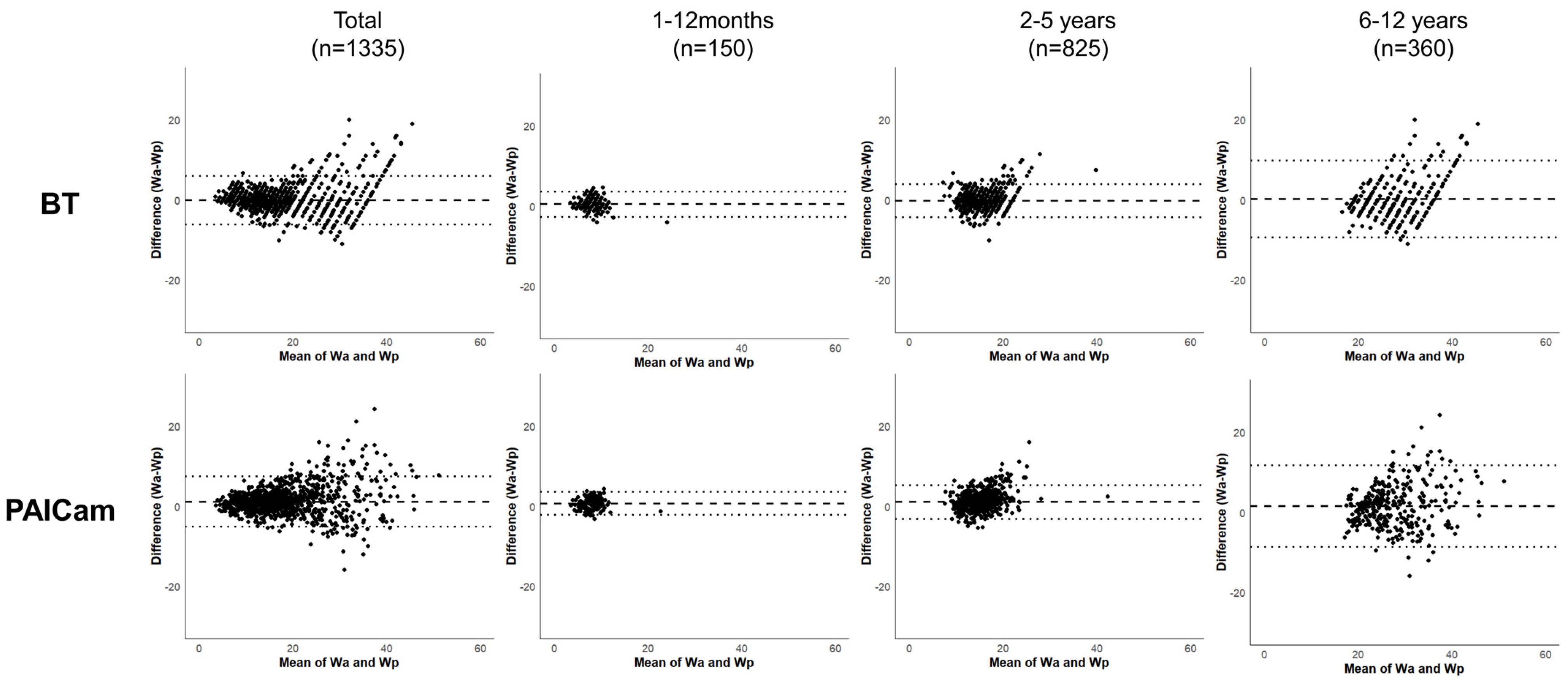

3.2. Performance of Each Weight Estimation Method

3.3. Correlation Between Actual and Predicted Weight

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| BMI | Body Mass Index |
| BT | Broselow Tape |
| CI | Confidence Interval |
| DNN | Deep Neural Network |
| ICC | Intraclass Correlation Coefficient |
| IRB | Institutional Review Board |
| LOA | Limits of Agreement |
| MAPE | Mean Absolute Percentage Error |
| MPE | Mean Percentage Error |
| PAICam | Pediatric Artificial Intelligence Weight-Estimating Camera |
| PW10 | Percentage of Weight Estimations within 10% of Actual Weight |
| PW20 | Percentage of Weight Estimations within 20% of Actual Weight |
| RMSPE | Root Mean Square Percentage Error |
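The accuracy metrics abbreviated above can be sketched in a few lines of Python. This is a minimal sketch, assuming the conventional definitions from the pediatric weight-estimation literature, with each estimate's percentage error taken as (predicted − actual) / actual × 100. The study's own formulas are not reproduced in this section, and the function names and example weights are hypothetical.

```python
import math
from typing import Sequence


def percentage_errors(actual: Sequence[float], predicted: Sequence[float]) -> list[float]:
    """Signed percentage error of each estimate: (predicted - actual) / actual * 100."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and of equal length")
    return [(p - a) / a * 100.0 for a, p in zip(actual, predicted)]


def accuracy_metrics(actual: Sequence[float], predicted: Sequence[float]) -> dict[str, float]:
    """Hypothetical helper returning MPE, MAPE, RMSPE, PW10 and PW20 (all in %)."""
    pe = percentage_errors(actual, predicted)
    n = len(pe)
    abs_pe = [abs(e) for e in pe]
    return {
        "MPE": sum(pe) / n,                              # bias: positive = overestimation
        "MAPE": sum(abs_pe) / n,                         # average magnitude of the error
        "RMSPE": math.sqrt(sum(e * e for e in pe) / n),  # weights large errors more heavily
        "PW10": 100.0 * sum(e <= 10.0 for e in abs_pe) / n,  # share within 10% of actual
        "PW20": 100.0 * sum(e <= 20.0 for e in abs_pe) / n,  # share within 20% of actual
    }


# Four hypothetical children: actual vs. estimated weight in kg.
metrics = accuracy_metrics([10.0, 20.0, 15.0, 8.0], [10.5, 17.0, 15.0, 10.0])
print({name: round(value, 2) for name, value in metrics.items()})
# {'MPE': 3.75, 'MAPE': 11.25, 'RMSPE': 14.79, 'PW10': 50.0, 'PW20': 75.0}
```

Because the root mean square of a set of numbers is never smaller than the mean of their absolute values, RMSPE computed this way is always at least as large as MAPE for the same set of estimates.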

Appendix A


| Characteristic | Total (N = 1335) | 1–12 Months (n = 150) | 2–5 Years (n = 825) | 6–12 Years (n = 360) |
|---|---|---|---|---|
| Age, years | 4 [2–6] | 0.6 [0.3–0.8] | 3 [2–4] | 7 [6–9] |
| Sex | | | | |
| Male | 767 (57.4) | 77 (51.3) | 480 (58.2) | 210 (58.3) |
| Female | 568 (42.6) | 73 (48.7) | 345 (41.8) | 150 (41.7) |
| Weight, kg | 16 [12.5–22] | 8.6 [7.0–9.7] | 15 [12.8–17.2] | 26.4 [23.0–32.0] |
| Height, cm | 102 [88–117] | 68.8 [32.1–73.5] | 97.2 [88.9–105.1] | 127.0 [120.0–134.6] |
| BMI, kg/m² | 16.1 [15.0–17.8] | 17.5 [15.6–19.6] | 15.9 [14.9–17.2] | 16.3 [15.3–18.6] |
| Body type | | | | |
| Thin | 58 (4.4) | 0 (0.0) | 22 (2.7) | 36 (10.0) |
| Normal | 1171 (87.7) | 141 (94.0) | 749 (90.8) | 281 (78.1) |
| Overweight | 106 (7.9) | 9 (6.0) | 54 (6.5) | 43 (11.9) |

| Metric | BT | PAICam |
|---|---|---|
| All participants (N = 1335) | | |
| MPE, % | −1.44 | 5.29 |
| MAPE, % | 11.28 | 12.41 |
| RMSPE, % | 3.09 | 3.42 |
| LOA, kg | −6.05 to 6.06 | −5.12 to 7.48 |
| PW10, % | 52.6 | 51.2 |
| PW20, % | 79.1 | 77.7 |
| 1–12 months of age (n = 150) | | |
| MPE, % | 5.08 | 6.79 |
| MAPE, % | 14.55 | 16.1 |
| RMSPE, % | 1.67 | 1.62 |
| LOA, kg | −2.64 to 3.63 | −2.17 to 3.57 |
| PW10, % | 43.3 | 42 |
| PW20, % | 68 | 66.7 |
| 2–5 years of age (n = 825) | | |
| MPE, % | −2.57 | 5.74 |
| MAPE, % | 10.22 | 10.91 |
| RMSPE, % | 2.14 | 2.41 |
| LOA, kg | −4.37 to 3.99 | −3.09 to 5.31 |
| PW10, % | 61.33 | 60.61 |
| PW20, % | 87.88 | 86.67 |
| 6–12 years of age (n = 360) | | |
| MPE, % | −1.57 | 3.65 |
| MAPE, % | 12.3 | 14.3 |
| RMSPE, % | 4.87 | 5.39 |
| LOA, kg | −9.3 to 9.79 | −8.58 to 11.69 |
| PW10, % | 46.4 | 43.3 |
| PW20, % | 78.6 | 76.4 |
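The LOA rows give limits of agreement in kilograms. Assuming these are Bland-Altman 95% limits (mean paired difference ± 1.96 SD of the differences, with difference = predicted − actual, so that positive values mean overestimation), a minimal sketch is shown below; the function name and example weights are hypothetical.

```python
import statistics
from typing import Sequence


def limits_of_agreement(
    actual: Sequence[float], predicted: Sequence[float], z: float = 1.96
) -> tuple[float, float, float]:
    """Bland-Altman bias and limits of agreement, in the units of the inputs (kg here)."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    diffs = [p - a for a, p in zip(actual, predicted)]  # positive = overestimation
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    return bias, bias - z * sd, bias + z * sd


bias, lower, upper = limits_of_agreement([10.0, 12.0, 14.0, 16.0], [11.0, 12.0, 15.0, 18.0])
print(f"bias {bias:.2f} kg, LOA {lower:.2f} to {upper:.2f} kg")
# bias 1.00 kg, LOA -0.60 to 2.60 kg
```

Working on the kilogram scale (rather than percentages) means the limits widen naturally in older, heavier children, which matches the broader ranges reported for the 6–12-year group.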

| Group | BT ICC | BT 95% CI | BT p-Value | PAICam ICC | PAICam 95% CI | PAICam p-Value |
|---|---|---|---|---|---|---|
| All participants (N = 1335) | 0.959 | 0.955–0.963 | <0.001 | 0.955 | 0.950–0.960 | <0.001 |
| 1–12 months (n = 150) | 0.870 | 0.821–0.906 | <0.001 | 0.872 | 0.823–0.907 | <0.001 |
| 2–5 years (n = 825) | 0.900 | 0.886–0.913 | <0.001 | 0.889 | 0.872–0.903 | <0.001 |
| 6–12 years (n = 360) | 0.829 | 0.790–0.861 | <0.001 | 0.830 | 0.791–0.862 | <0.001 |
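The ICC form used for the agreement table is not stated in this section. As an illustration only, the sketch below computes one common choice, the two-way, absolute-agreement, single-measure ICC (ICC(2,1) in Shrout-Fleiss notation), treating actual and estimated weight as two "raters" of each child. The function name and data are hypothetical, and the 95% CIs and p-values, which require the F distribution, are not reproduced.

```python
from typing import Sequence


def icc_absolute_agreement(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Two-way, absolute-agreement, single-measure ICC from ANOVA mean squares."""
    if len(actual) != len(predicted) or len(actual) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    rows = list(zip(actual, predicted))  # one row per child, one column per method
    n, k = len(rows), 2
    grand = sum(sum(r) for r in rows) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(r[j] for r in rows) / n for j in range(k)]

    # Partition the total sum of squares into subjects, methods, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    # The ms_cols term penalizes a systematic offset between the two methods.
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)


print(round(icc_absolute_agreement([10.0, 12.0, 14.0, 16.0], [11.0, 12.0, 15.0, 18.0]), 3))
# 0.914
```

Dropping the k(MS_cols − MS_error)/n term from the denominator gives the consistency form, ICC(3,1), which ignores a constant offset between the two methods; the choice matters when one method systematically over- or underestimates.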

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

MDPI and ACS Style: Choi, S.; Nah, S.; Moon, J.E.; Han, S. A Novel Artificial Intelligence-Based Mobile Application for Pediatric Weight Estimation. J. Clin. Med. 2025, 14, 2873. https://doi.org/10.3390/jcm14092873

AMA Style: Choi S, Nah S, Moon JE, Han S. A Novel Artificial Intelligence-Based Mobile Application for Pediatric Weight Estimation. Journal of Clinical Medicine. 2025; 14(9):2873. https://doi.org/10.3390/jcm14092873

Chicago/Turabian Style: Choi, Sungwoo, Sangun Nah, Ji Eun Moon, and Sangsoo Han. 2025. "A Novel Artificial Intelligence-Based Mobile Application for Pediatric Weight Estimation" Journal of Clinical Medicine 14, no. 9: 2873. https://doi.org/10.3390/jcm14092873

APA Style: Choi, S., Nah, S., Moon, J. E., & Han, S. (2025). A Novel Artificial Intelligence-Based Mobile Application for Pediatric Weight Estimation. Journal of Clinical Medicine, 14(9), 2873. https://doi.org/10.3390/jcm14092873