Musical Training for Auditory Rehabilitation in Hearing Loss

Abstract

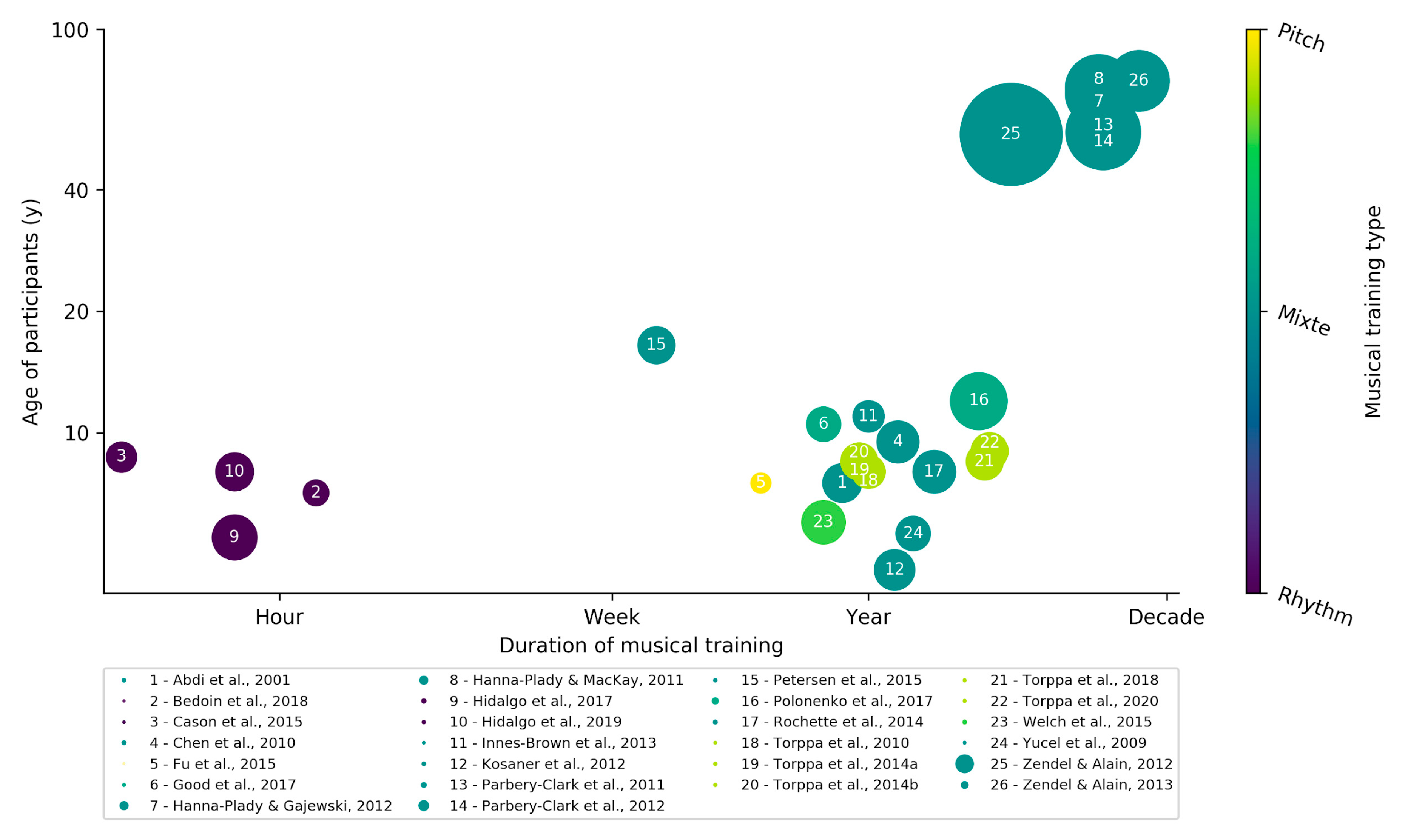

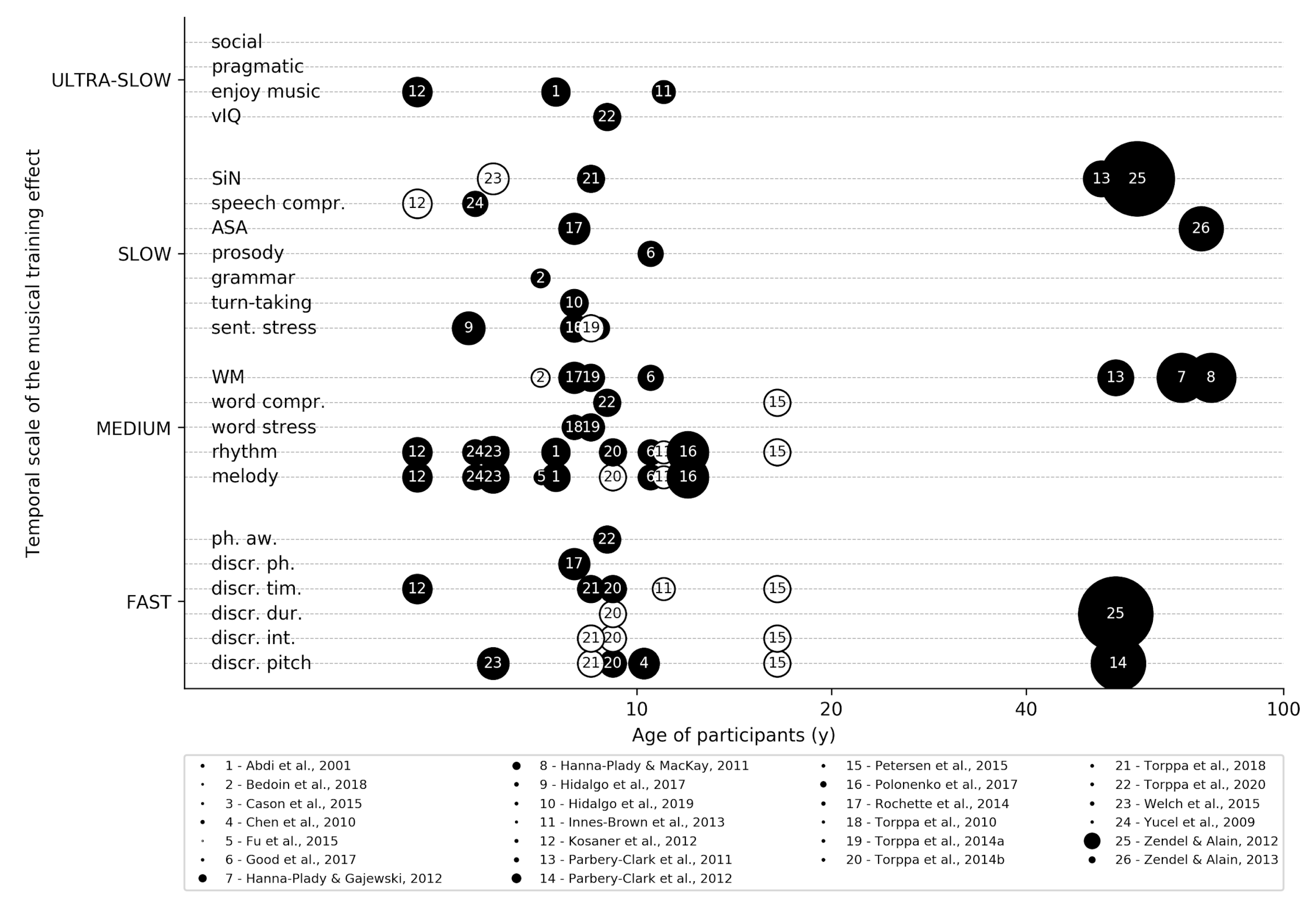

1. Introduction

2. Discriminate Sounds Based on Pitch, Duration, and Timbre

2.1. Pitch

2.2. Duration

2.3. Timbre

3. Exploit the Temporal Structure and Group Sounds Together

3.1. Temporal Structure

3.2. Working Memory

4. Perceive Speech in Noise, Prosody, and Syntax

4.1. Speech in Noise

4.2. Prosody

4.3. Syntax

5. Toward a Remediation of Dialogue by Musical Training

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pesnot Lerousseau, J.; Hidalgo, C.; Schön, D. Musical Training for Auditory Rehabilitation in Hearing Loss. J. Clin. Med. 2020, 9, 1058. https://doi.org/10.3390/jcm9041058
