Robust Cherry Tomatoes Detection Algorithm in Greenhouse Scene Based on SSD

Abstract

1. Introduction

2. Data Material

2.1. Image Acquisition

2.2. Sample Data Set

3. Theoretical Background

3.1. Classical SSD Deep Learning Model

3.2. Improved SSD Deep Learning Model

3.3. Overview of Detection Algorithm

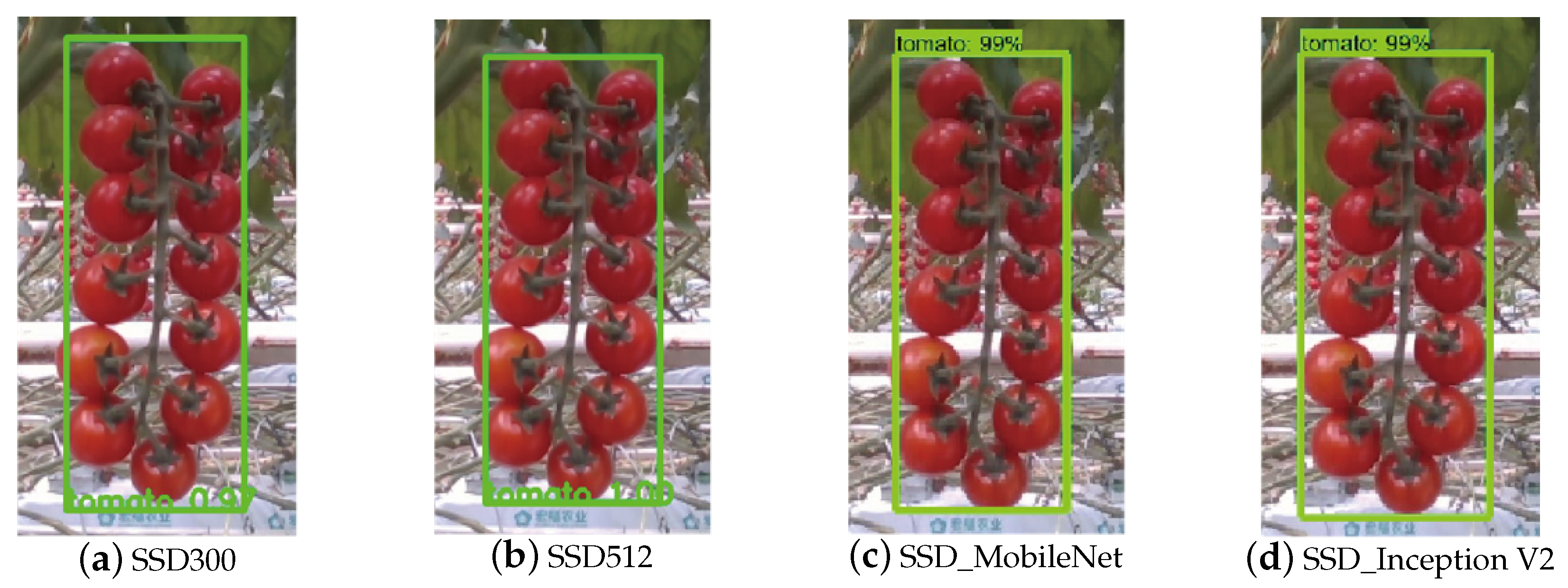

4. Results and Discussion

4.1. Experimental Setup

4.2. Experiment Design

4.2.1. Experiment Parameters

4.2.2. Evaluation Standard

4.3. Experiment Results Analysis

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Research Object | Training Set | Test Set | Validation Set | Total Quantity |
|---|---|---|---|---|
| Cherry tomato | 2768 | 346 | 346 | 3460 |

| Conditions | Methods | Tomato Amount | Correctly Identified Amount | P | Missed Amount | F |
|---|---|---|---|---|---|---|
| Separated | SSD300 | 50 | 44 | 88% | 6 | 12% |
| Separated | SSD512 | 50 | 46 | 92% | 4 | 8% |
| Separated | SSD_MobileNet | 50 | 46 | 92% | 4 | 8% |
| Separated | SSD_Inception V2 | 50 | 47 | 94% | 3 | 6% |
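The P and F columns in the table above appear to be the correctly identified and missed counts, each divided by the number of tomatoes. The following is a minimal sketch under that assumption; the function name `detection_rates` and the dictionary layout are hypothetical and are not taken from the paper. It reproduces the percentages for the "Separated" condition from the raw counts.

```python
def detection_rates(total, correct):
    """Return (P, F) in percent, assuming P = correct / total and
    F = missed / total, with missed = total - correct."""
    if total <= 0 or not 0 <= correct <= total:
        raise ValueError("need total > 0 and 0 <= correct <= total")
    missed = total - correct
    return 100.0 * correct / total, 100.0 * missed / total


# Correctly identified counts for the "Separated" condition (50 tomatoes each).
correct_counts = {
    "SSD300": 44,
    "SSD512": 46,
    "SSD_MobileNet": 46,
    "SSD_Inception V2": 47,
}

results = {name: detection_rates(50, c) for name, c in correct_counts.items()}

for name, (p, f) in results.items():
    print(f"{name}: P = {p:.0f}%, F = {f:.0f}%")
```

Under this reading P and F always sum to 100%, which holds for every row reported in the result tables.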

| Conditions | Methods | Amount | Correctly Identified Amount | P | Missed Amount | F |
|---|---|---|---|---|---|---|
| Separated | SSD300 | 50 | 30 | 60% | 20 | 40% |
| Separated | SSD512 | 50 | 45 | 90% | 5 | 10% |
| Separated | SSD_MobileNet | 50 | 47 | 94% | 3 | 6% |
| Separated | SSD_Inception V2 | 50 | 46 | 92% | 4 | 8% |

| Conditions | Methods | Amount | Correctly Identified Amount | P | Missed Amount | F |
|---|---|---|---|---|---|---|
| Sunny or Uneven Illumination | SSD300 | 50 | 20 | 40% | 30 | 60% |
| Sunny or Uneven Illumination | SSD512 | 50 | 45 | 90% | 5 | 10% |
| Sunny or Uneven Illumination | SSD_MobileNet | 50 | 46 | 92% | 4 | 8% |
| Sunny or Uneven Illumination | SSD_Inception V2 | 50 | 48 | 96% | 2 | 4% |
| Shadow | SSD300 | 50 | 40 | 80% | 10 | 20% |
| Shadow | SSD512 | 50 | 48 | 96% | 2 | 4% |
| Shadow | SSD_MobileNet | 50 | 48 | 96% | 2 | 4% |
| Shadow | SSD_Inception V2 | 50 | 49 | 98% | 1 | 2% |

| Conditions | Methods | Amount | Correctly Identified Amount | P | Missed Amount | F |
|---|---|---|---|---|---|---|
| Side-grown | SSD300 | 50 | 15 | 30% | 35 | 70% |
| Side-grown | SSD512 | 50 | 43 | 86% | 7 | 14% |
| Side-grown | SSD_MobileNet | 50 | 38 | 76% | 12 | 24% |
| Side-grown | SSD_Inception V2 | 50 | 37 | 74% | 13 | 26% |

| Evaluation Index | SSD300 | SSD512 | SSD_MobileNet | SSD_Inception V2 |
|---|---|---|---|---|
| AP (%) | 92.73 | 93.87 | 97.98 | 98.85 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yuan, T.; Lv, L.; Zhang, F.; Fu, J.; Gao, J.; Zhang, J.; Li, W.; Zhang, C.; Zhang, W. Robust Cherry Tomatoes Detection Algorithm in Greenhouse Scene Based on SSD. Agriculture 2020, 10, 160. https://doi.org/10.3390/agriculture10050160
