Application of Machine Learning Algorithms to Predict Body Condition Score from Liveweight Records of Mature Romney Ewes

Abstract

:1. Introduction

2. Materials and Methods

2.1. Farms and Animals Used and Data Collection

2.2. Statistical Analyses

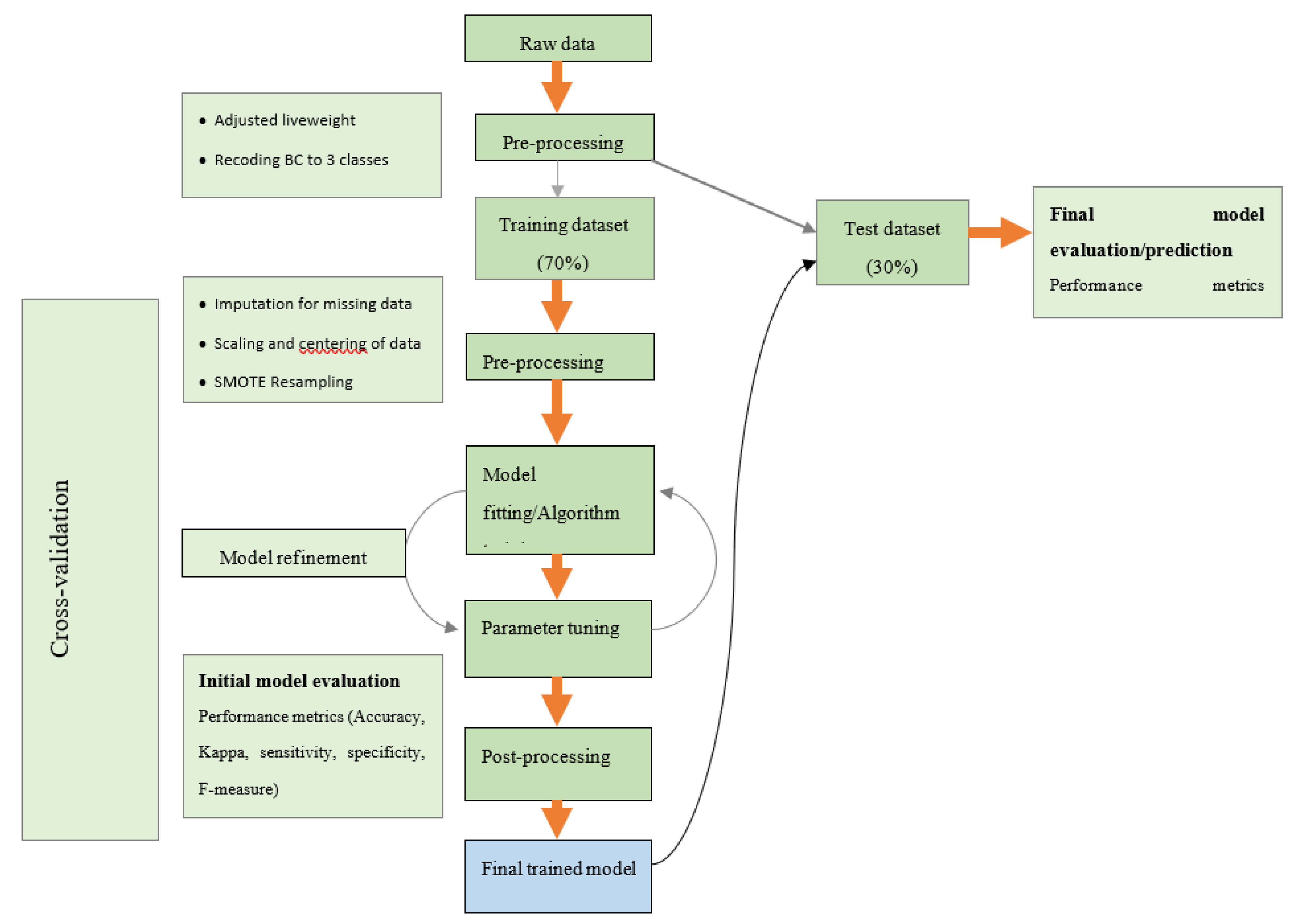

2.2.1. Variable Selection and Model Building

2.2.2. Model Performance Evaluation

3. Results

3.1. Overall Performance of Machine Learning Models

3.1.1. Pre-Breeding

3.1.2. Pregnancy Diagnosis

3.1.3. Pre-Lambing

3.1.4. Weaning

3.1.5. The Balance between Sensitivity and Specificity

3.1.6. Overall Model Ranking

4. Discussion

4.1. Overall Accuracy

4.2. Class-Level Accuracy

4.3. Class-Level Model Authenticity

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Ethics Statement

Appendix A

| Model 1 | Concept 2 | Parameter and Processes Required 3 | Sample Size and Data Dimensionality | Assumptions and Data Requirements | Covariate Pools 4 | Computational Time | Interpretability 5 | Prone to Overfitting | References |

|---|---|---|---|---|---|---|---|---|---|

| Ordinal | Probabilistic regression | No hyperparameters | Affected by small sample sizes | proportional odds, linearity | No | Fast | White box | Yes | [32,56,58] |

| Multinom | Probabilistic regression | No hyperparameters | Yes | proportional odds, linearity | No | Fast | White box | Yes | [32,58,61] |

| LDA | Dimension reduction + separability between classes | No hyperparameters | Affected by small sample sizes, Good for high dimension data | Normality, linearity & continuous independent variables | No | Fast | White box | Yes | [62,63,64] |

| CART | Decision trees and regression | Hyperparameters | Performs well with large datasets | numerical or categorical outcome | can remove redundant covariates | Fast | Low-level black box | No | [65,66] |

| RF | Decision trees, regression and bugging | Up to three hyperparameters | Performs well on small & high dimensionality data | numerical or categorical outcome | can remove redundant covariates | Decreases with sample size | Low-level black box | No | [17,67] |

| XGB | Regression trees + gradient boosting | Hyperparameter | Require large datasets | numerical or categorical outcome | can remove redundant covariates | Very fast | High-level black box | Yes, if large number of trees | [68,69] |

| K-NN | Regression curve + hyperparameter (k) | One hyperparameter | Not good for large & high dimensionality data | No assumptions but requires scaled data | No | Decreases with sample size | Fairly interpretable | Yes | [17,70] |

| SVM | Maximal margins + kennel functions | Two hyperparameters | Not good for high dimension data | No assumptions | No | Decreases with sample size | High-level black box | Yes | [71,72] |

| ANN | Nodes (artificial neurons) | Up to seven hyperparameters | Sensitive to sample size and data dimensionality | numerical or categorical outcome | No | computationally very expensive and time consuming | High-level black box | Yes | [73] |

| Model A | Model B | PB | PD | PL | W |

|---|---|---|---|---|---|

| XGB | K-NN | 0.011 | 0.000 | 0.000 | 0.000 |

| RF | 1.000 | 0.000 | 0.245 | 0.007 | |

| SVM | 0.010 | 0.000 | 0.000 | 0.000 | |

| ANN | 0.000 | 0.000 | 0.001 | 0.000 | |

| Multinorm | 0.000 | 0.000 | 0.000 | 0.000 | |

| LDA | 0.000 | 0.000 | 0.000 | 0.000 | |

| Ordinal | 0.000 | 0.000 | 0.000 | 0.000 | |

| CART | 0.000 | 0.000 | 0.000 | 0.000 | |

| K-NN | RF | 0.003 | 0.281 | 0.000 | 0.041 |

| SVM | 1.000 | 1.000 | 0.000 | 1.000 | |

| ANN | 0.231 | 0.000 | 1.000 | 0.000 | |

| Multinorm | 0.000 | 0.000 | 0.779 | 0.000 | |

| LDA | 0.000 | 0.000 | 1.000 | 0.000 | |

| Ordinal | 0.000 | 0.000 | 0.000 | 0.000 | |

| CART | 0.000 | 0.000 | 0.004 | 0.000 | |

| RF | SVM | 0.203 | 0.014 | 0.008 | 0.002 |

| ANN | 0.002 | 0.000 | 0.002 | 0.000 | |

| Multinorm | 0.000 | 0.000 | 0.000 | 0.000 | |

| LDA | 0.000 | 0.000 | 0.000 | 0.000 | |

| Ordinal | 0.000 | 0.000 | 0.000 | 0.000 | |

| CART | 0.000 | 0.000 | 0.000 | 0.000 | |

| SVM | ANN | 0.563 | 0.000 | 0.021 | 0.000 |

| Multinorm | 0.000 | 0.000 | 0.000 | 0.000 | |

| LDA | 0.000 | 0.000 | 0.000 | 0.000 | |

| Ordinal | 0.000 | 0.000 | 0.000 | 0.000 | |

| CART | 0.000 | 0.000 | 0.000 | 0.000 | |

| ANN | Multinorm | 0.002 | 0.000 | 1.000 | 0.000 |

| LDA | 0.000 | 0.000 | 1.000 | 0.000 | |

| Ordinal | 0.002 | 0.000 | 0.000 | 0.000 | |

| CART | 0.000 | 0.000 | 0.903 | 0.000 | |

| Multinorm | LDA | 0.019 | 1.000 | 1.000 | 1.000 |

| Ordinal | 0.004 | 0.000 | 0.000 | 0.000 | |

| CART | 0.000 | 0.000 | 0.023 | 0.000 | |

| LDA | Ordinal | 0.019 | 0.000 | 1.000 | 0.006 |

| CART | 0.000 | 0.000 | 0.032 | 0.000 | |

| Ordinal | CART | 0.000 | 0.002 | 0.047 | 0.008 |

| PB | PD | PL | W | |||||

|---|---|---|---|---|---|---|---|---|

| Model | Precision % | F-Measure % | Precision % | F-Measure % | Precision % | F-Measure % | Precision % | F-Measure % |

| XGB | 86.1(78.2–97.7) | 86.0(80.1–96.9) | 87.9(80.8–94.5) | 87.6(84.1–90.0) | 87.9(80.8–94.5) | 87.6(84.1–90.0) | 89.1(84.2–92.8) | 89.0(87.5–91.3) |

| RF | 85.3(78.1–95.9) | 85.3(79.0–95.6) | 86.9(83.2–91.1) | 86.7(83.6–90.7) | 86.1(77.0–91.7) | 85.7(81.0–89.0) | 84.9(79.3–88.8) | 84.7(83.2–86.4) |

| SVM | 82.7(74.1–95.1) | 82.7(74.5–94.4) | 83.4(74.6–90.3) | 82.6(80.0–87.2) | 83.5(68.7–95.0) | 81.8(76.0–86.4) | 82.8(71.6–89.4) | 82.0(78.0–85.7) |

| K-NN | 82.3(75.0–94.4) | 82.0(71.8–95.3) | 84.7(77.5–89.5) | 84.5(80.9–90.6) | 64.5(58.1–68.6) | 64.1(61.8–65.5) | 84.9(79.3–88.8) | 85.1(80.5–88.1) |

| ANN | 80.3(71.9–93.4) | 80.3(71.6–92.6) | 76.3(72.1–83.7) | 76.1(73.2–80.7) | 73.5(64.5–83.3) | 71.5(67.4–76.2) | 79.5(70.0–85.0) | 78.7(76.4–82.6) |

| Multinom | 76.8(67.7–89.3) | 76.8(68.1–89.1) | 70.2(65.6–76.4) | 70.0(64.1–73.8) | 64.8(62.8–65.9) | 64.6(62.1–67.1) | 68.1(65.0–70.7) | 67.7(65.7–70.2) |

| LDA | 75.0(64.3–89.0) | 74.9(64.5–88.3) | 70.5(65.1–79.0) | 69.3(61.8–73.3) | 65.3(61.9–67.9) | 64.9(61.5–67.7) | 68.3(63.4–70.8) | 67.6(65.8–70.7) |

| Ordinal | 73.2(59.2–88.5) | 72.9(60.4–85.3) | 64.9(55.0–77.4) | 63.8(58.4–68.1) | 57.3(45.8–64.2) | 57.5(43.5–66.7) | 64.2(52.9–70.9) | 63.4(57.4–68.7) |

| CART | 62.1(47.3–77.7) | 62.3(41.5–80.0) | 61.1(55.5–68.9) | 59.2(48.5–64.6) | 57.3(55.1–60.5) | 55.7(46.6–62.5) | 55.4(53.4–59.0) | 54.8(45.3–60.9) |

References

- Jefferies, B. Body condition scoring and its use in management. Tasman. J. Agr. 1961, 32, 19–21. [Google Scholar]

- Kenyon, P.R.; Maloney, S.K.; Blache, D. Review of sheep body condition score in relation to production characteristics. NZJ Agric. Res. 2014, 57, 38–64. [Google Scholar] [CrossRef]

- Coates, D.B.; Penning, P. Measuring animal performance. In Field and Laboratory Methods for Grassland and Animal Production Research; Jones, L., Ed.; CABI Publishing: Wallingford, UK, 2000; pp. 353–402. [Google Scholar]

- Morel, P.C.H.; Schreurs, N.M.; Corner-Thomas, R.A.; Greer, A.W.; Jenkinson, C.M.C.; Ridler, A.L.; Kenyon, P.R. Live weight and body composition associated with an increase in body condition score of mature ewes and the relationship to dietary energy requirements. Small Ruminant Res. 2016, 143, 8–14. [Google Scholar] [CrossRef]

- Jones, A.; van Burgel, A.J.; Behrendt, R.; Curnow, M.; Gordon, D.J.; Oldham, C.M.; Rose, I.J.; Thompson, A.N. Evaluation of the impact of Lifetimewool on sheep producers. Anim. Prod. Sci. 2011, 51, 857–865. [Google Scholar] [CrossRef]

- Corner-Thomas, R.A.; Kenyon, P.R.; Morris, S.T.; Ridler, A.L.; Hickson, R.E.; Greer, A.W.; Logan, C.M.; Blair, H.T. Brief communication: The use of farm-management tools by New Zealand sheep farmers: Changes with time. Proc. NZ Soc. Anim. Prod. 2016, 76, 78–80. [Google Scholar]

- Besier, R.B.; Hopkins, D. Farmers’ estimations of sheep weights to calculate drench dose. J. Dept. Agr. West. Aust., Series 4 1989, 30, 120–121. [Google Scholar]

- McHugh, N.; McGovern, F.M.; Creighton, P.; Pabiou, T.; McDermott, K.; Wall, E.; Berry, D.P. Mean difference in live-weight per incremental difference in body condition score estimated in multiple sheep breeds and crossbreds. Animal 2019, 13, 1–5. [Google Scholar] [CrossRef]

- Semakula, J.; Corner-Thomas, R.A.; Morris, S.T.; Blair, H.T.; Kenyon, P.R. The Effect of Age, Stage of the Annual Production Cycle and Pregnancy-Rank on the Relationship between Liveweight and Body Condition Score in Extensively Managed Romney Ewes. Animals 2020, 10, 784. [Google Scholar] [CrossRef]

- Semakula, J.; Corner-Thomas, R.A.; Morris, S.T.; Blair, H.T.; Kenyon, P.R. Predicting Ewe Body Condition Score Using Lifetime Liveweight and Liveweight Change, and Previous Body Condition Score Record. Animals 2020, 10, 1182. [Google Scholar] [CrossRef]

- Bishop, P.A.; Herron, R.L. Use and misuse of the Likert item responses and other ordinal measures. Int. J. Exerc. Sci. 2015, 8, 297. [Google Scholar]

- Blaikie, N. Analyzing Quantitative Data: From Description to Explanation; Sage: New York, NY, USA, 2003. [Google Scholar] [CrossRef] [Green Version]

- Sullivan, G.M.; Artino, A.R. Analyzing and interpreting data from Likert-type scales. J. Grad. Med. Educ. 2013, 5, 541–542. [Google Scholar] [CrossRef] [Green Version]

- Wicker, J.E. Applications of modern statistical methods to analysis of data in physical science. Ph.D. Thesis, University of Tennessee, Knoxville, TN, USA, May 2006. [Google Scholar]

- Shahinfar, S.; Kahn, L. Machine learning approaches for early prediction of adult wool growth and quality in Australian Merino sheep. Comput. Electron. Agric. 2018, 148, 72–81. [Google Scholar] [CrossRef]

- Shahinfar, S.; Kelman, K.; Kahn, L. Prediction of sheep carcass traits from early-life records using machine learning. Comput. Electron. Agric. 2019, 156, 159–177. [Google Scholar] [CrossRef]

- Khaledian, Y.; Miller, B.A. Selecting appropriate machine learning methods for digital soil mapping. Appl. Math. Model. 2020, 81, 401–418. [Google Scholar] [CrossRef]

- Morota, G.; Ventura, R.V.; Silva, F.F.; Koyama, M.; Fernando, S.C. Big data analytics and precision animal agriculture symposium: Machine learning and data mining advance predictive big data analysis in precision animal agriculture. Big data analysis in Animal Science 2018, 96, 1540–1550. [Google Scholar] [CrossRef] [PubMed]

- Bakoev, S.; Getmantseva, L.; Kolosova, M.; Kostyunina, O.; Chartier, D.R.; Tatarinova, T.V. PigLeg: Prediction of swine phenotype using machine learning. PeerJ 2020, 8, e8764. [Google Scholar] [CrossRef]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2016; R version 3.4.4 (2018-03-15) ed2016; Available online: https://cran.r-project.org (accessed on 15 March 2018).

- Kuhn, M. Building predictive models in R using the caret package. J. Stat. Softw. 2008, 28, 1–26. [Google Scholar] [CrossRef] [Green Version]

- Triguero, I.; del Río, S.; López, V.; Bacardit, J.; Benítez, J.M.; Herrera, F. ROSEFW-RF: The winner algorithm for the ECBDL’14 big data competition: An extremely imbalanced big data bioinformatics problem. Knowl-Based. Syst. 2015, 87, 69–89. [Google Scholar] [CrossRef] [Green Version]

- Leevy, J.L.; Khoshgoftaar, T.M.; Bauder, R.A.; Seliya, N. A survey on addressing high-class imbalance in big data. J. Big Data 2018, 5, 42. [Google Scholar] [CrossRef]

- Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2020. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Branco, P.; Ribeiro, R.P.; Torgo, L. UBL: An R package for utility-based learning. arXiv 2016, arXiv:160408079. [Google Scholar]

- Friedman, J.; Hastie, T.; Tibshirani, R. Regularization Paths for Generalized Linear Models via Coordinate Descent. J. Stat. Soft. 2010, 33, 1–22. [Google Scholar] [CrossRef] [Green Version]

- Archer, K.J.; Williams, A.A. L 1 penalized continuation ratio models for ordinal response prediction using high-dimensional datasets. Stat. Med. 2012, 31, 1464–1474. [Google Scholar] [CrossRef] [Green Version]

- Tropsha, A.; Gramatica, P.; Gombar, V.K. The Importance of Being Earnest: Validation is the Absolute Essential for Successful Application and Interpretation of QSPR Models. QSAR & Comb. Sci. 2003, 22, 69–77. [Google Scholar] [CrossRef]

- Valletta, J.J.; Torney, C.; Kings, M.; Thornton, A.; Madden, J. Applications of machine learning in animal behaviour studies. Anim. Behav. 2017, 124, 203–220. [Google Scholar] [CrossRef]

- Torgo, L. Data Mining with R: Learning with Case Studies; Chapman and Hall/CRC: Boca Raton, FL, USA, 2016; p. 426. [Google Scholar]

- Agresti, A.; Kateri, M. Categorical Data Analysis. In International Encyclopedia of Statistical Science; Lovric, M., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 206–208. [Google Scholar]

- Zhao, H.; Wang, Z.; Nie, F. A new formulation of linear discriminant analysis for robust dimensionality reduction. Trans. Knowl. Data Eng. 2018, 31, 629–640. [Google Scholar] [CrossRef]

- Rennie, J.D.; Shih, L.; Teevan, J.; Karger, D.R. Tackling the poor assumptions of naive bayes text classifiers. In Proceedings of the 20th International Conference on Machine Learning (ICML-03), Washington, DC, USA, 21–24 August 2003. [Google Scholar] [CrossRef]

- Zhu, F.; Tang, M.; Xie, L.; Zhu, H. A Classification Algorithm of CART Decision Tree based on MapReduce Attribute Weights. Int. J.Performability Eng. 2018, 14. [Google Scholar] [CrossRef]

- Zeng, Z.Q.; Yu, H.B.; Xu, H.R.; Xie, Y.Q.; Gao, J. Fast training support vector machines using parallel sequential minimal optimization. In Proceedings of the 3rd International Conference on Intelligent System and Knowledge Engineering, Xiamen, China, 17–19 November 2008. [Google Scholar] [CrossRef]

- Breiman, L. Arcing classifier (with discussion and a rejoinder by the author). The ann. Stat. 1998, 26, 801–849. [Google Scholar] [CrossRef]

- Sun, S.; Huang, R. An adaptive k-nearest neighbor algorithm. In Proceedings of the 2010 Seventh International Conference on Fuzzy Systems and Knowledge Discovery, Yantai, China, 10–12 August 2010. [Google Scholar] [CrossRef]

- Ebrahimi, M.; Mohammadi-Dehcheshmeh, M.; Ebrahimie, E.; Petrovski, K.R. Comprehensive analysis of machine learning models for prediction of sub-clinical mastitis: Deep learning and gradient-boosted trees outperform other models. Comput. Biol. Med. 2019, 114, 103456. [Google Scholar] [CrossRef]

- Fisher, D.H. Knowledge acquisition via incremental conceptual clustering. Mach. Learn. 1987, 2, 139–172. [Google Scholar] [CrossRef]

- Cohen, J. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46. [Google Scholar] [CrossRef]

- McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Med. 2012, 22, 276–282. [Google Scholar] [CrossRef] [PubMed]

- Botchkarev, A. Performance Metrics (Error Measures) in Machine Learning Regression, Forecasting and Prognostics: Properties and Typology. IJIKM 2019, 14, 45–79. [Google Scholar] [CrossRef] [Green Version]

- Lan, Y.; Zhou, D.; Zhang, H.; Lai, S. Development of Early Warning Models. In Early Warning for Infectious Disease Outbreak; Yang, W., Ed.; Academic Press: Cambridge, MA, USA, 2017; pp. 35–74. [Google Scholar]

- Glorfeld, L.W. An improvement on Horn’s parallel analysis methodology for selecting the correct number of factors to retain. Educ. Psychol. Meas. 1995, 55, 377–393. [Google Scholar] [CrossRef]

- Horn, J.L. A rationale and test for the number of factors in factor analysis. Psychometrika 1965, 30, 179–185. [Google Scholar] [CrossRef] [PubMed]

- Lê, S.; Josse, J.; Husson, F. FactoMineR: An R package for multivariate analysis. J. Stat. Softw 2008, 25, 1–18. [Google Scholar] [CrossRef] [Green Version]

- Dinga, R.; Penninx, B.W.; Veltman, D.J.; Schmaal, L.; Marquand, A.F. Beyond accuracy: Measures for assessing machine learning models, pitfalls and guidelines. bioRxiv 2019, 743138. [Google Scholar] [CrossRef]

- Hossin, M.; Sulaiman, M. A review on evaluation metrics for data classification evaluations. IJDKP 2015, 5, 1. [Google Scholar] [CrossRef]

- Galdi, P.; Tagliaferri, R. Data mining: Accuracy and error measures for classification and prediction. Encycl. Bioinform. Comput. Biol. 2018, 416–431. [Google Scholar] [CrossRef]

- Dietterich, T.G. Ensemble Methods in Machine Learning. In Multiple Classifier Systems. MCS 2000. Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2000; Volume 1857. [Google Scholar] [CrossRef]

- Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 159–174. [Google Scholar] [CrossRef] [Green Version]

- Fleiss, J.L. The measurement of interrater agreement. In Statistical Methods for Rates and Proportions, 2nd ed.; John Wiley & Sons: New York, NY, USA, 1981; pp. 212–236. [Google Scholar]

- Kenyon, P.R.; Pain, S.J.; Hutton, P.G.; Jenkinson, C.M.C.; Morris, S.T.; Peterson, S.W.; Blair, H.T. Effects of twin-bearing ewe nutritional treatments on ewe and lamb performance to weaning. Anim. Prod. Sci. 2011, 51, 406–415. [Google Scholar] [CrossRef]

- Obuchowski, N.A.; Bullen, J.A. Receiver operating characteristic (ROC) curves: Review of methods with applications in diagnostic medicine. Phys. Med. Biol. 2018, 63, 07TR1. [Google Scholar] [CrossRef] [PubMed]

- Agresti, A. Modelling ordered categorical data: Recent advances and future challenges. Stat. Med. 1999, 18, 2191–2207. [Google Scholar] [CrossRef]

- Kenyon, P.R.; Morris, S.T.; Burnham, D.L.; West, D.M. Effect of nutrition during pregnancy on hogget pregnancy outcome and birthweight and liveweight of lambs. N. Z. J. Agric. Res. 2008, 51, 77–83. [Google Scholar] [CrossRef]

- Liao, T.F. Interpreting Probability Models: Logit, Probit, and other Generalized Linear Models; Sage: New York, NY, USA, 1994. [Google Scholar]

- Naeger, D.M.; Kohi, M.P.; Webb, E.M.; Phelps, A.; Ordovas, K.G.; Newman, T.B. Correctly using sensitivity, specificity, and predictive values in clinical practice: How to avoid three common pitfalls. Am. J. Roentgenol 2013, 200, W566–W570. [Google Scholar] [CrossRef] [Green Version]

- Parikh, R.; Mathai, A.; Parikh, S.; Sekhar, G.C.; Thomas, R. Understanding and using sensitivity, specificity and predictive values. Indian J. Ophthalmol 2008, 56, 45. [Google Scholar] [CrossRef] [PubMed]

- Böhning, D. Multinomial logistic regression algorithm. Annals of the Institute of Statistical Mathematics 1992, 44, 197–200. [Google Scholar] [CrossRef]

- Chen, L.F.; Liao, H.Y.M.; Ko, M.T.; Lin, J.C.; Yu, G.-J. A new LDA-based face recognition system which can solve the small sample size problem. Pattern recognition 2000, 33, 1713–1726. [Google Scholar] [CrossRef]

- Yu, H.; Yang, J. A direct LDA algorithm for high-dimensional data—with application to face recognition. Pattern recognition 2001, 34, 2067–2070. [Google Scholar] [CrossRef] [Green Version]

- Zheng, W.; Zhao, L.; Zou, C. An efficient algorithm to solve the small sample size problem for LDA. Pattern Recognition 2004, 37, 1077–1079. [Google Scholar] [CrossRef]

- Quinlan, J.R. Simplifying decision trees. Int. J. Man. Mach. Stud. 1987, 27, 221–234. [Google Scholar] [CrossRef] [Green Version]

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106. [Google Scholar] [CrossRef] [Green Version]

- Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd Acm Sigkdd International Conference On Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef] [Green Version]

- Zhang, L.; Zhan, C. Machine learning in rock facies classification: An application of XGBoost. In Proceedings of the International Geophysical Conference, Qingdao, China, 17–20 April 2017. Society of Exploration Geophysicists and Chinese Petroleum Society. [Google Scholar] [CrossRef]

- Imandoust, S.B.; Bolandraftar, M. Application of k-nearest neighbor (knn) approach for predicting economic events: Theoretical background. IJERA 2013, 3, 605–610. [Google Scholar]

- Gunn, S.R. Support vector machines for classification and regression. ISIS Tech. Rep. 1998, 14, 5–16. [Google Scholar]

- Durgesh, K.S.; Lekha, B. Data classification using support vector machine. J. Theor. Appl. Inf. Technol. 2010, 12, 1–7. [Google Scholar]

- Tu, J.V. Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. J. Clin. Epidemiol. 1996, 49, 1225–1231. [Google Scholar] [CrossRef]

| Model | Definition | Formula |

|---|---|---|

| Balanced accuracy | The proportion of correctly classified subjects for each class. Useful especially when there is class imbalance. | |

| Precision | The proportion of correctly classified subjects for a given class given that they truly belonged to that class | |

| F-measure | The harmonic mean of the precision and sensitivity best if there is some sort of balance between precision and sensitivity. | |

| Sensitivity | The proportion of correctly classified subjects for a given class to those who truly belong to that class. | |

| Specificity | The proportion of subjects correctly classified as not belonging to a given class to those that truly do not belong to that class. | |

| Positive likelihood rate (PLR) | The ratio between the true positive and the false positive rates for “positive” events that are detected by a model. | |

| Negative likelihood rate (NLR) | The ratio between the false negative and true negative rates and mirrors the probability for “negative” events to be detected by a model. | |

| Youden’s index (YI) | The sum of sensitivity and specificity minus one | |

| Cohen’s kappa (κ) | Measures the degree of agreement between two raters or ratings (inter-rater or interrater reliability) | κ |

| Pre-Breeding | Pregnancy Diagnosis | Pre-Lambing | Weaning | |||||

|---|---|---|---|---|---|---|---|---|

| Model | Accuracy | Kappa (κ) | Accuracy | Kappa (κ) | Accuracy | Kappa (κ) | Accuracy | Kappa (κ) |

| XGB | 89.5(85.6–97.5) 3,1 | 79.6 | 91.2(88.5–93.4) 3,1 | 82.3 | 90.6(88.8–91.4) 2,1 | 82.9 | 91.7(90.1–93.2) 1,3 | 83.4 |

| RF | 89.0(84.7–96.6) 2,1 | 78.0 | 90.0(87.5–92.9) 3,1 | 78.0 | 89.2(86.6–91.6) 2,3 | 78.5 | 88.6(88.2–89.6) 1,3 | 77.1 |

| K-NN | 87.0(81.2–95.7) 2,1 | 75.5 | 86.8(84.7–89.8) 3,1 | 75.5 | 86.2(83.0–89.7) 2,3 | 66.0 | 86.4(84.6–88.8) 2,3 | 77.7 |

| SVM | 86.7(78.8–96.6) 2,1 | 75.9 | 88.5(84.8–93.1) 2,1 | 73.7 | 73.8(72.0–74.7) 2,1 | 71.7 | 88.8(85.3–91.2) 2,3 | 72.7 |

| ANN | 85.2(79.0–94.2) 2,1 | 72.2 | 82.0(80.5–85.1) 2,1 | 65.6 | 78.9(75.5–82.4) 1,3 | 69.5 | 84.0(82.0–86.9) 1,3 | 68.0 |

| Multinorm | 82.7(76.4–91.7) 2,1 | 66.8 | 77.6(73.8–80.0) 3,1 | 56.1 | 73.5(71.8–75.1) 1,3 | 48.8 | 75.9(74.4–78.1) 3,2 | 51.8 |

| LDA | 81.2(73.8–91.1) 2,1 | 63.6 | 77.1(72.2–79.6) 3,1 | 54.6 | 73.8(71.5–75.5) 1,3 | 49.5 | 75.9(74.4–78.7) 1,2 | 51.7 |

| Ordinal | 79.6(70.7–88.4) 2,1 | 48.4 | 72.7(67.6–75.8) 2,1 | 47.7 | 68.4(58.7–74.8) 2,3 | 37.0 | 72.4(67.8–76.2) 2,1 | 44.9 |

| CART | 72.6(58.6–85.1) 2,1 | 47.3 | 69.8(64.0–73.3) 3,1 | 40.5 | 67.5(62.8–71.1) 1,2 | 41.8 | 66.6(61.4–70.1) 2,1 | 33.2 |

| Pre-Breeding | Pregnancy Diagnosis | Pre-Lambing | Weaning | |||||

|---|---|---|---|---|---|---|---|---|

| Model | Sensitivity | Specificity | Sensitivity | Specificity | Sensitivity | Specificity | Sensitivity | Specificity |

| XGB | 86.0(79.7–96.3) 3,1 | 93.1(89.1–98.9) 2,1 | 88.2(83.7–90.4) 3,1 | 94.2(93.1–96.3) 2,1 | 87.5(85.9–88.8) 1,3 | 93.8(89.7–97.5) 2,1 | 89.0(84.8–92.3) 1,2 | 94.5(91.6–96.5) 2,3 |

| RF | 85.3(80.0–95.3) 2,1 | 92.8(89.3–97.9) 2,1 | 86.7(80.9–90.3) 3,1 | 93.4(90.5–95.5) 2,1 | 85.6(82.6–88.6) 1,3 | 92.8(87.5–96.4) 2,1 | 84.8(82.5–87.6) 1,2 | 92.4(88.9–93.4) 2,3 |

| SVM | 82.6(74.8–93.8) 2,1 | 91.4(87.5–97.5) 2,3 | 82.3(75.3–84.2) 3,2 | 91.2(84.2–95.4) 2,1 | 81.5(73.5–86.1) 1,3 | 90.8(81.1–98.1) 2,1 | 81.9(77.6–85.6) 3,2 | 90.9(83.5–95.1) 2,3 |

| K-NN | 82.2(66.8–96.2) 2,1 | 91.2(85.9–97.0) 3,1 | 84.7(75.5–91.8) 2,1 | 92.3(88.4–94.5) 3,1 | 65.0(63.0–67.3) 1,2 | 82.5(76.8–86.4) 2,1 | 85.1(78.6–88.9) 2,3 | 92.6(91.9–93.6) 2,3 |

| ANN | 80.2(71.3–91.7) 2,1 | 90.2(86.7–96.7) 2,1 | 76.0(73.2–78.0) 3,1 | 88.0(84.3–92.2) 2,1 | 71.8(56.5–80.2) 1,3 | 85.9(78.8–94.4) 2,1 | 78.7(70.5–84.1) 1,2 | 89.3(82.4–93.5) 2,1 |

| Multinom | 76.8(68.5–89.0) 2,1 | 88.5(84.4–94.5) 2,1 | 70.0(62.7–71.4) 3,2 | 85.1(81.8–88.7) 2,1 | 64.7(58.6–68.7) 1,3 | 82.4(80.6–84.9) 2,1 | 67.9(63.3–76.2) 3,1 | 83.9(80.1–86.2) 2,1 |

| LDA | 74.9(64.7–87.7) 2,1 | 87.6(82.8–94.4) 2,1 | 69.4(57.1–82.7) 3,2 | 84.8(76.6–90.7) 2,1 | 65.0(56.3–69.4) 1,3 | 82.5(79.2–86.8) 2,1 | 67.8(61.5–79.8) 3,2 | 83.9(77.6–87.4) 2,3 |

| Ordinal | 72.7(61.6–82.4) 2,1 | 86.5(79.7–94.5) 2,1 | 63.6(60.7–67.9) 2,3 | 81.7(73.1–90.9) 2,1 | 57.9(41.4–69.3) 2,3 | 79.0(76.1–80.8) 2,1 | 63.2(58.3–68.5) 3,1 | 81.6(72.8–88.2) 2,3 |

| CART | 63.3(37.0–82.5) 2,1 | 81.9(77.6–87.8) 3,1 | 59.7(41.1–77.3) 3,2 | 80.0(67.1–86.0) 2,3 | 56.7(37.9–72.3) 1,2 | 78.3(71.2–87.7) 2,1 | 55.4(39.2–62.9) 2,1 | 77.7(72.4–83.6) 3,2 |

| Pre-Breeding | Pregnancy Diagnosis | Pre-Lambing | Weaning | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Model | PLR | NLR | YI | PLR | NLR | YI | PLR | NLR | YI | PLR | NLR | YI |

| XGB | 33.41 | 0.15 | 0.79 | 16.48 | 0.13 | 0.82 | 19.39 | 0.13 | 0.81 | 18.32 | 0.12 | 0.83 |

| RF | 20.49 | 0.16 | 0.78 | 14.45 | 0.14 | 0.80 | 15.33 | 0.16 | 0.78 | 12.25 | 0.16 | 0.77 |

| SVM | 16.88 | 0.19 | 0.74 | 12.13 | 0.19 | 0.74 | 18.48 | 0.20 | 0.72 | 11.79 | 0.20 | 0.73 |

| K-NN | 15.21 | 0.20 | 0.73 | 12.3 | 0.17 | 0.77 | 3.90 | 0.42 | 0.48 | 11.64 | 0.16 | 0.78 |

| ANN | 13.04 | 0.22 | 0.70 | 6.94 | 0.27 | 0.64 | 6.32 | 0.32 | 0.58 | 8.66 | 0.24 | 0.68 |

| Multinom | 8.65 | 0.27 | 0.65 | 4.87 | 0.35 | 0.55 | 3.69 | 0.43 | 0.47 | 4.28 | 0.38 | 0.52 |

| LDA | 8.16 | 0.29 | 0.62 | 5.12 | 0.36 | 0.54 | 3.78 | 0.42 | 0.48 | 4.37 | 0.38 | 0.52 |

| Ordinal | 7.66 | 0.32 | 0.59 | 4.20 | 0.45 | 0.45 | 2.83 | 0.54 | 0.37 | 3.83 | 0.45 | 0.45 |

| CART | 3.92 | 0.46 | 0.45 | 3.27 | 0.49 | 0.40 | 2.70 | 0.54 | 0.35 | 2.49 | 0.57 | 0.33 |

| Model | Pre-Breeding | Pregnancy Diagnosis | Pre-Lambing | Weaning | Overall |

|---|---|---|---|---|---|

| XGB | 1 | 1 | 1 | 1 | 1(1.0) |

| RF | 3 | 2 | 2 | 2 | 2(2.3) |

| SVM | 4 | 3 | 4 | 3 | 3(3.5) |

| K-NN | 2 | 6 | 3 | 4 | 4(3.8) |

| ANN | 5 | 4 | 5 | 5 | 5(4.8) |

| Miltinom | 6 | 5 | 6 | 6 | 6(5.8) |

| LDA | 7 | 7 | 7 | 7 | 7(7.0) |

| Ordinal | 8 | 8 | 8 | 8 | 8(8.0) |

| CART | 9 | 9 | 9 | 9 | 9(9.0) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Semakula, J.; Corner-Thomas, R.A.; Morris, S.T.; Blair, H.T.; Kenyon, P.R. Application of Machine Learning Algorithms to Predict Body Condition Score from Liveweight Records of Mature Romney Ewes. Agriculture 2021, 11, 162. https://doi.org/10.3390/agriculture11020162

Semakula J, Corner-Thomas RA, Morris ST, Blair HT, Kenyon PR. Application of Machine Learning Algorithms to Predict Body Condition Score from Liveweight Records of Mature Romney Ewes. Agriculture. 2021; 11(2):162. https://doi.org/10.3390/agriculture11020162

Chicago/Turabian StyleSemakula, Jimmy, Rene A. Corner-Thomas, Stephen T. Morris, Hugh T. Blair, and Paul R. Kenyon. 2021. "Application of Machine Learning Algorithms to Predict Body Condition Score from Liveweight Records of Mature Romney Ewes" Agriculture 11, no. 2: 162. https://doi.org/10.3390/agriculture11020162