Plant and Weed Identifier Robot as an Agroecological Tool Using Artificial Neural Networks for Image Identification

Abstract

1. Introduction

2. Literature Review

2.1. Studies on Weed-Killing Herbicides and Their Effects

2.2. Deep Machine Learning in Agriculture

2.2.1. Disease Identification

2.2.2. Crop Yield Forecasting

2.2.3. Plant Leaf Classification and Identification

2.2.4. Weed Classification and Detection

2.3. Artificial Neural Networks

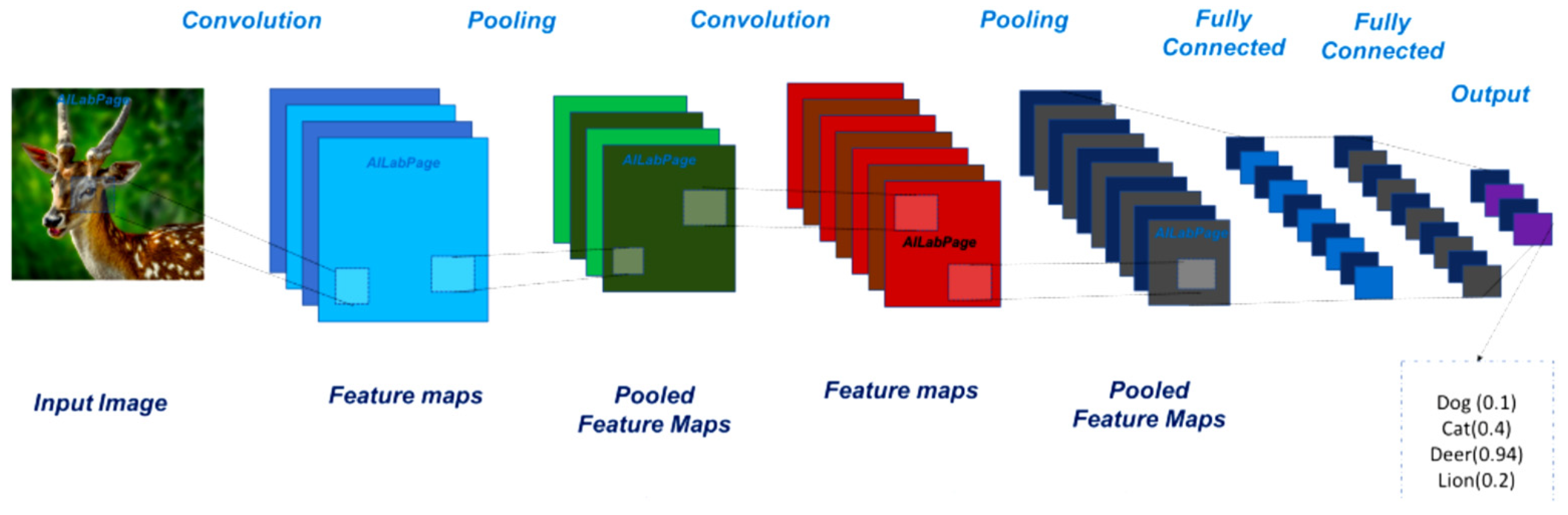

2.4. Convolutional Neural Networks
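
A convolutional layer slides a small kernel across the image and computes a weighted sum at each position. This produces a feature map, which is then passed through a nonlinearity. The stdlib-only sketch below illustrates that operation with stride 1 and no padding. It is a generic illustration, not the network used in this study. The 4×4 patch and the 2×2 vertical-edge kernel are hypothetical values. As in most deep-learning libraries, the kernel is not flipped, so the operation is strictly a cross-correlation.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` with stride 1 and no padding ("valid" mode).

    As in most deep-learning libraries, the kernel is not flipped, so this is
    strictly a cross-correlation.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    feature_map: Matrix = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Weighted sum of the kh x kw patch currently under the kernel.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu(feature_map: Matrix) -> Matrix:
    """Element-wise ReLU nonlinearity applied after the convolution."""
    return [[max(0.0, v) for v in row] for row in feature_map]


if __name__ == "__main__":
    # Hypothetical 4x4 grayscale patch: dark left half, bright right half.
    patch = [[0, 0, 1, 1] for _ in range(4)]
    # Hypothetical 2x2 kernel that responds to dark-to-bright vertical edges.
    edge_kernel = [[-1, 1], [-1, 1]]
    print(relu(conv2d_valid(patch, edge_kernel)))
    # -> [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

In a trained CNN, the kernel weights are learned rather than set by hand. Many such kernels run in parallel over multi-channel (for example, RGB) inputs, and their feature maps are stacked to form the input to the next layer.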

2.5. State-of-the-Art Object Detection Methods

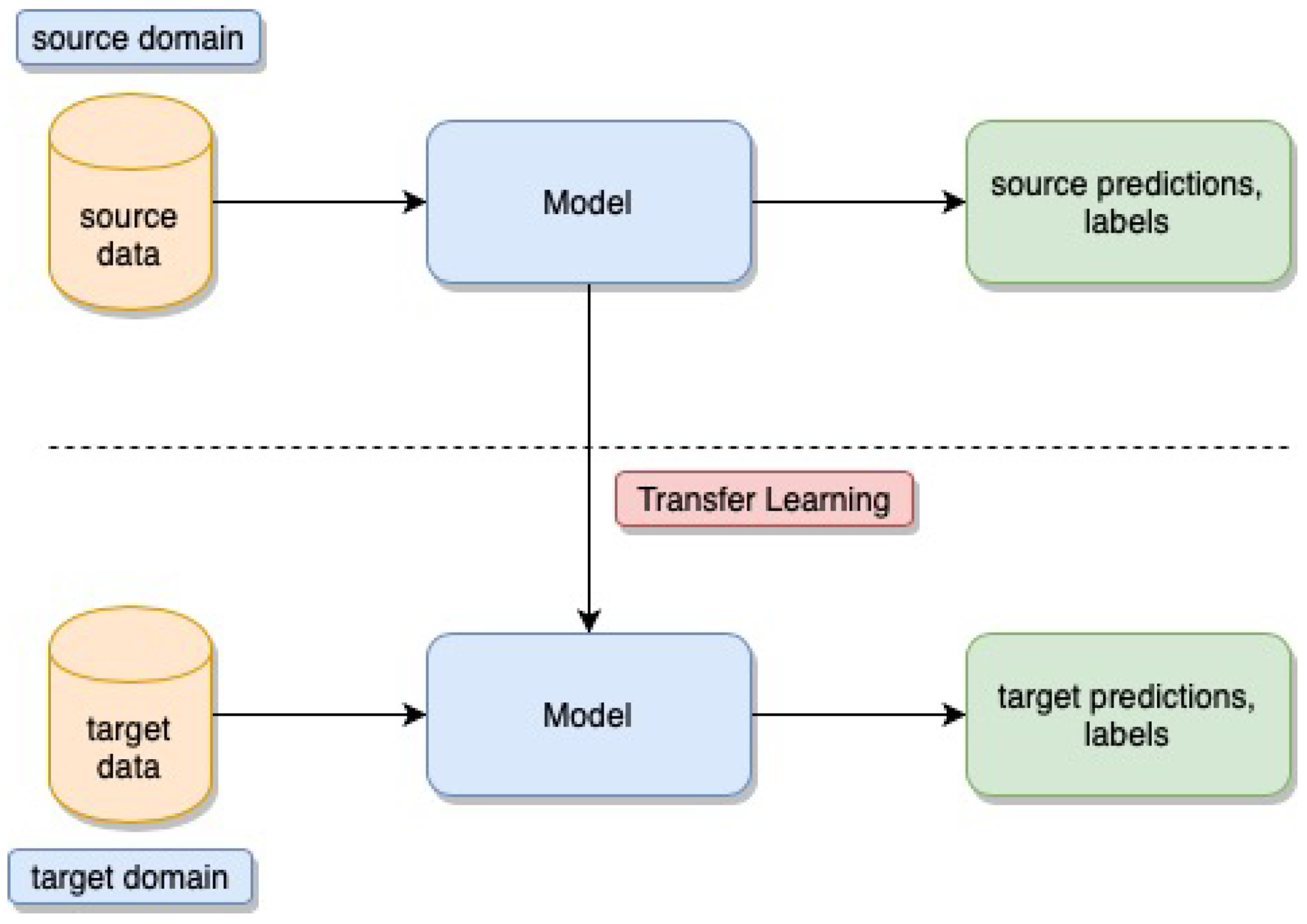

2.6. Transfer Learning Technique

3. Materials and Methods

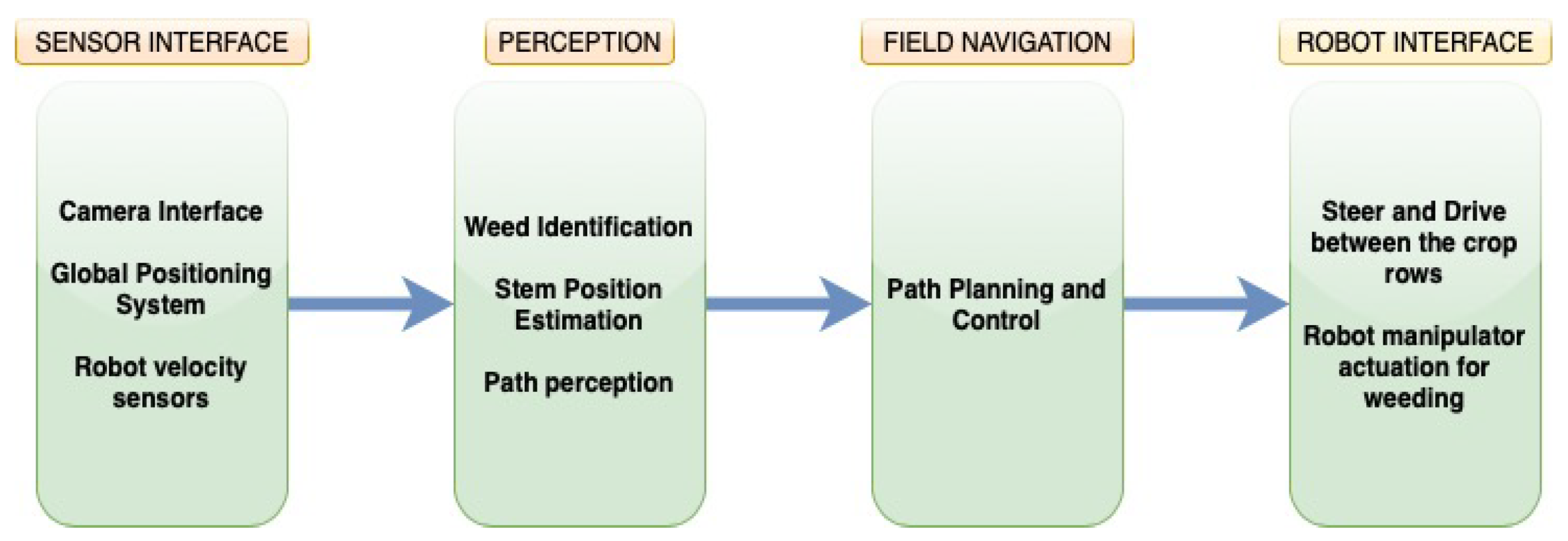

3.1. Conceptualisation and High-Level Design of the Robot

- Articulated arm

- Cartesian robot

- Parallel manipulator

3.2. Hardware Design Approach of the Weeding Robot

3.3. Software Design Approach of the Weeding Robot

3.4. Training and Implementation

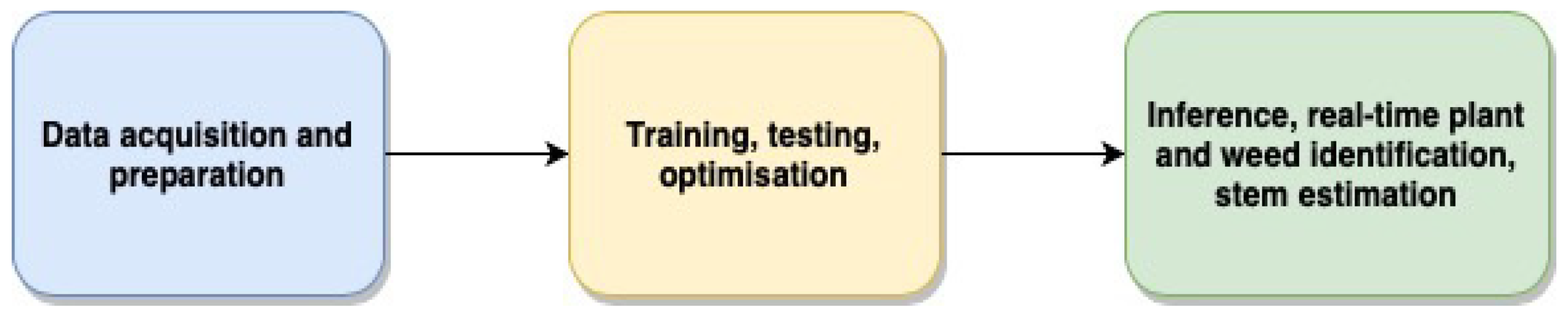

3.4.1. Plant and Weed Identification Pipeline

3.4.2. Experimental Setup

3.4.3. Data Acquisition and Pre-Processing

3.4.4. Training and Analysis of the Neural Network Model

Evaluation Metrics
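
Object-detection quality is commonly assessed with the intersection over union (IoU) between a predicted and a ground-truth bounding box. A prediction counts as a true positive when its IoU exceeds a chosen threshold, and the resulting counts are then summarised as precision, recall and F1. The plain-Python sketch below implements these standard definitions as an illustration; it is not the authors' evaluation code. The (x_min, y_min, x_max, y_max) pixel box convention and the function names are assumptions.

```python
from typing import Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned bounding boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Two 10x10 boxes overlapping by half their width: IoU = 50 / 150, about 0.333.
    print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
    # Hypothetical counts: 8 correct detections, 2 false alarms, 2 missed weeds.
    print(precision_recall_f1(tp=8, fp=2, fn=2))
```

An IoU threshold of 0.5 is a common choice. However, the threshold is a parameter of the evaluation protocol, not a property of the model, so results are only comparable when they use the same threshold.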

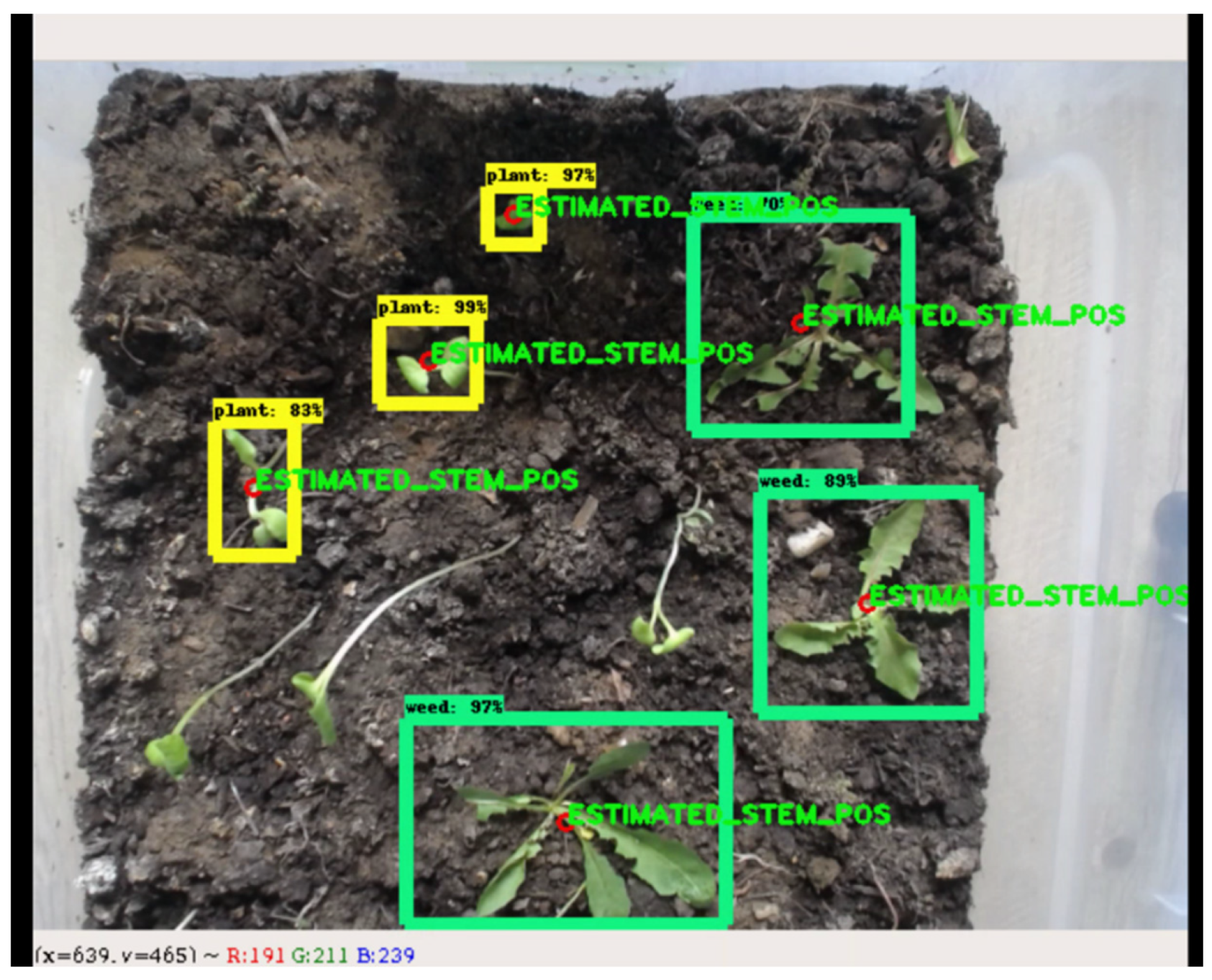

3.4.5. Stem Position Extraction

4. Results and Discussion

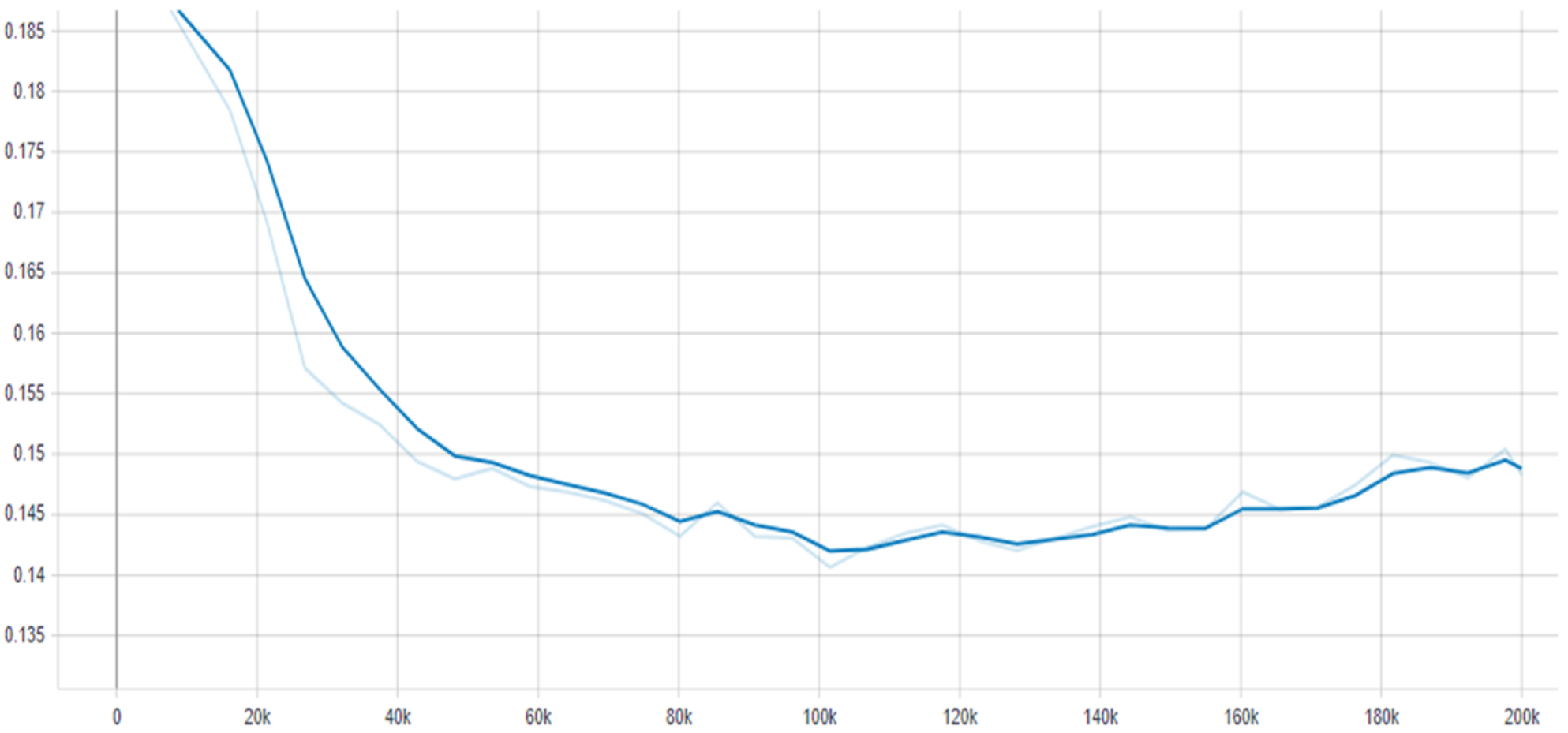

4.1. Training

4.1.1. Case 1: Configuration 1

4.1.2. Case 2: Configuration 2

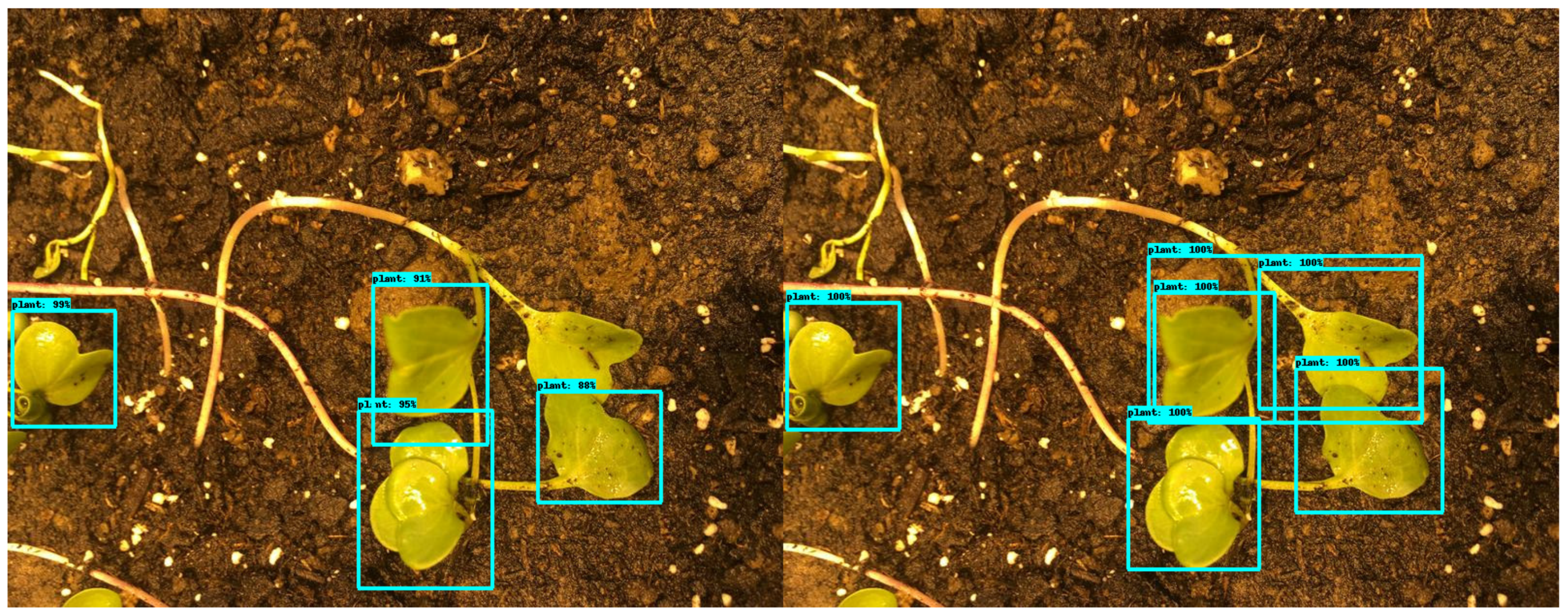

4.2. Plant and Weed Identification

4.3. Extracted Stem Positions

4.4. Discussion of Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Varma, P. Adoption of System of Rice Intensification under Information Constraints: An Analysis for India. J. Dev. Stud. 2018, 54, 1838–1857.

- Delmotte, S.; Tittonell, P.; Mouret, J.-C.; Hammond, R.; Lopez-Ridaura, S. On farm assessment of rice yield variability and productivity gaps between organic and conventional cropping systems under Mediterranean climate. Eur. J. Agron. 2011, 35, 223–236.

- Shennan, C.; Krupnik, T.J.; Baird, G.; Cohen, H.; Forbush, K.; Lovell, R.J.; Olimpi, E.M. Organic and Conventional Agriculture: A Useful Framing? Annu. Rev. Environ. Resour. 2017, 42, 317–346.

- John, A.; Fielding, M. Rice production constraints and “new” challenges for South Asian smallholders: Insights into de facto research priorities. Agric. Food Secur. 2014, 3, 1–16.

- Hazra, K.K.; Swain, D.K.; Bohra, A.; Singh, S.S.; Kumar, N.; Nath, C.P. Organic rice: Potential production strategies, challenges and prospects. Org. Agric. 2018, 8, 39–56.

- Sujaritha, M.; Annadurai, S.; Satheeshkumar, J.; Kowshik Sharan, S.; Mahesh, L. Weed detecting robot in sugarcane fields using fuzzy real time classifier. Comput. Electron. Agric. 2017, 134, 160–171.

- Zahm, S.H.; Ward, M.H. Pesticides and childhood cancer. Environ. Health Perspect. 1998, 106, 893–908.

- Chitra, G.A.; Muraleedharan, V.R.; Swaminathan, T.; Veeraraghavan, D. Use of pesticides and its impact on health of farmers in south India. Int. J. Occup. Environ. Health 2006, 12, 228–233.

- Wilson, C. Environmental and human costs of commercial agricultural production in South Asia. Int. J. Soc. Econ. 2000, 27, 816–846.

- Uphoff, N. SRI: An agroecological strategy to meet multiple objectives with reduced reliance on inputs. Agroecol. Sustain. Food Syst. 2017, 41, 825–854.

- Wayayok, A.; Soom, M.A.M.; Abdan, K.; Mohammed, U. Impact of Mulch on Weed Infestation in System of Rice Intensification (SRI) Farming. Agric. Agric. Sci. Procedia 2014, 2, 353–360.

- Krupnik, T.J.; Rodenburg, J.; Haden, V.R.; Mbaye, D.; Shennan, C. Genotypic trade-offs between water productivity and weed competition under the System of Rice Intensification in the Sahel. Agric. Water Manag. 2012, 115, 156–166.

- RCEP. Royal Commission for Environmental Pollution 1979 Seventh Report. Agriculture and Pollution; RCEP: London, UK, 1979.

- Moss, B. Water pollution by agriculture. Philos. Trans. R. Soc. B Biol. Sci. 2008, 363, 659–666.

- James, C.; Fisher, J.; Russell, V.; Collings, S.; Moss, B. Nitrate availability and hydrophyte species richness in shallow lakes. Freshw. Biol. 2005, 50, 1049–1063.

- Mehaffey, M.H.; Nash, M.S.; Wade, T.G.; Ebert, D.W.; Jones, K.B.; Rager, A. Linking land cover and water quality in New York City’s water supply watersheds. Environ. Monit. Assess. 2005, 107, 29–44.

- Sala, O.E.; Chapin, F.S.; Armesto, J.J.; Berlow, E.; Bloomfield, J.; Dirzo, R.; Huber-Sanwald, E.; Huenneke, L.F.; Jackson, R.B.; Kinzig, A.; et al. Global biodiversity scenarios for the year 2100. Science 2000, 287, 1770–1774.

- Pimentel, D.; Pimentel, M. Comment: Adverse environmental consequences of the Green Revolution. In Resources, Environment and Population-Present Knowledge, Future Options, Population and Development Review; Davis, K., Bernstam, M., Eds.; Oxford University Press: Oxford, UK, 1991.

- Pimentel, D.; Acquay, H.; Biltonen, M.; Rice, P.; Silva, M.; Nelson, J.; Lipner, V.; Horowitz, A.; Amore, M.D. Environmental and Economic Costs of Pesticide Use. Am. Inst. Biol. Sci. 1992, 42, 750–760.

- Orlando, F.; Alali, S.; Vaglia, V.; Pagliarino, E.; Bacenetti, J.; Bocchi, S. Participatory approach for developing knowledge on organic rice farming: Management strategies and productive performance. Agric. Syst. 2020, 178, 102739.

- Barker, R.; Herdt, R.W.; Rose, H. The Rice Economy of Asia; The Johns Hopkins University Press: Baltimore, MD, USA, 1985.

- FAO. FAO Production Yearbooks (1961–1988); FAO Statistics Series; FAO: Rome, Italy, 1988.

- Chen, Z.; Shah, T.M. An Introduction to the Global Soil Status; RUVIVAL Publication Series; Schaldach, R., Otterpohl, R., Eds.; Hamburg University of Technology: Hamburg, Germany, 2019; Volume 5, pp. 7–17.

- Kopittke, P.M.; Menzies, N.W.; Wang, P.; McKenna, B.A.; Lombi, E. Soil and the intensification of agriculture for global food security. Environ. Int. 2019, 132, 105078.

- Nawaz, A.; Farooq, M. Weed management in resource conservation production systems in Pakistan. Crop Prot. 2016, 85, 89–103.

- Sreekanth, M.; Hakeem, A.H.; Peer, Q.J.A.; Rashid, I.; Farooq, F. Adoption of Recommended Package of Practices by Rice Growers in District Baramulla. J. Appl. Nat. Sci. 2019, 11, 188–192.

- Holt-Giménez, E.; Altieri, M.A. Agroecology, food sovereignty, and the new green revolution. Agroecol. Sustain. Food Syst. 2013, 37, 90–102.

- Wezel, A.; Bellon, S.; Doré, T.; Francis, C.; Vallod, D.; David, C. Agroecology as a science, a movement and a practice. A review. Agron. Sustain. Dev. 2009, 29, 503–515.

- Bajwa, A.A.; Mahajan, G.; Chauhan, B.S. Nonconventional weed management strategies for modern agriculture. Weed Sci. 2015, 63, 723–747.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Lamb, D.W.; Weedon, M. Evaluating the accuracy of mapping weeds in fallow fields using airborne digital imaging: Panicum effusum in oilseed rape stubble. Weed Res. 1998, 38, 443–451.

- Medlin, C.R.; Shaw, D.R. Economic comparison of broadcast and site-specific herbicide applications in nontransgenic and glyphosate-tolerant Glycine max. Weed Sci. 2000, 48, 653–661.

- Huang, Y.; Reddy, K.N.; Fletcher, R.S.; Pennington, D. UAV low-altitude remote sensing for precision weed management. Weed Technol. 2018, 32, 2–6.

- Freeman, P.K.; Freeland, R.S. Agricultural UAVs in the US: Potential, policy, and hype. Remote Sens. Appl. Soc. Environ. 2015, 2, 35–43.

- Pérez-Pérez, B.D.; García Vázquez, J.P.; Salomón-Torres, R. Evaluation of Convolutional Neural Networks’ Hyperparameters with Transfer Learning to Determine Sorting of Ripe Medjool Dates. Agriculture 2021, 11, 115.

- Graeub, B.E.; Chappell, M.J.; Wittman, H.; Ledermann, S.; Kerr, R.B.; Gemmill-Herren, B. The State of Family Farms in the World. World Dev. 2016, 87, 1–15.

- Wezel, A.; Casagrande, M.; Celette, F.; Vian, J.F.; Ferrer, A.; Peigné, J. Agroecological practices for sustainable agriculture. A review. Agron. Sustain. Dev. 2014, 34, 1–20.

- Harker, K.N.; O’Donovan, J.T. Recent Weed Control, Weed Management, and Integrated Weed Management. Weed Technol. 2013, 27, 1–11.

- Melander, B.; Rasmussen, I.A.; Bàrberi, P. Integrating physical and cultural methods of weed control—Examples from European research. Weed Sci. 2005, 53, 369–381.

- Benbrook, C.M. Trends in glyphosate herbicide use in the United States and globally. Environ. Sci. Eur. 2016, 28, 3.

- Helander, M.; Saloniemi, I.; Saikkonen, K. Glyphosate in northern ecosystems. Trends Plant Sci. 2012, 17, 569–574.

- IARC. IARC Monographs Volume 112: Evaluation of Five Organophosphate Insecticides and Herbicides; IARC: Lyon, France, 2017.

- Jayasumana, C.; Paranagama, P.; Agampodi, S.; Wijewardane, C.; Gunatilake, S.; Siribaddana, S. Drinking well water and occupational exposure to Herbicides is associated with chronic kidney disease, in Padavi-Sripura, Sri Lanka. Environ. Health 2015, 14, 6.

- Monarch Butterflies: The Problem with Herbicides. Available online: https://www.sciencedaily.com/releases/2017/05/170517143600.htm (accessed on 23 January 2021).

- Motta, E.V.S.; Raymann, K.; Moran, N.A. Glyphosate perturbs the gut microbiota of honey bees. Proc. Natl. Acad. Sci. USA 2018, 115, 10305–10310.

- Kanissery, R.; Gairhe, B.; Kadyampakeni, D.; Batuman, O.; Alferez, F. Glyphosate: Its environmental persistence and impact on crop health and nutrition. Plants 2019, 8, 499.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012; ISBN 978-0-262-01802-9.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; ISBN 978-0262035613.

- Brownlee, J. What Is Deep Learning? Available online: https://machinelearningmastery.com/what-is-deep-learning/ (accessed on 23 January 2021).

- Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

- Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1–10.

- Narayanasamy, P. Biological Management of Diseases of Crops; Springer: Dordrecht, The Netherlands, 2013; ISBN 978-94-007-6379-1.

- Saleem, M.H.; Potgieter, J.; Arif, K.M. Plant Disease Detection and Classification by Deep Learning. Plants 2019, 8, 468.

- Fuentes, A.; Yoon, S.; Kim, S.; Park, D. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022.

- Raun, W.R.; Solie, J.B.; Johnson, G.V.; Stone, M.L.; Lukina, E.V.; Thomason, W.E.; Schepers, J.S. In-season prediction of potential grain yield in winter wheat using canopy reflectance. Agron. J. 2001, 93, 131–138.

- Filippi, P.; Jones, E.J.; Wimalathunge, N.S.; Somarathna, P.D.S.N.; Pozza, L.E.; Ugbaje, S.U.; Jephcott, T.G.; Paterson, S.E.; Whelan, B.M.; Bishop, T.F.A. An approach to forecast grain crop yield using multi-layered, multi-farm data sets and machine learning. Precis. Agric. 2019, 20, 1015–1029.

- Ashapure, A.; Oh, S.; Marconi, T.G.; Chang, A.; Jung, J.; Landivar, J.; Enciso, J. Unmanned aerial system based tomato yield estimation using machine learning. In Proceedings Volume 11008, Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IV; SPIE: Baltimore, MD, USA, 2019; p. 22.

- Khaki, S.; Wang, L.; Archontoulis, S.V. A CNN-RNN Framework for Crop Yield Prediction. Front. Plant Sci. 2020, 10, 1–14.

- Russello, H. Convolutional Neural Networks for Crop Yield Prediction Using Satellite Images. Master’s Thesis, University of Amsterdam, Amsterdam, The Netherlands, 2018.

- Sun, Y.; Liu, Y.; Wang, G.; Zhang, H. Deep Learning for Plant Identification in Natural Environment. Comput. Intell. Neurosci. 2017, 2017, 1–6.

- Du, J.-X.; Wang, X.-F.; Zhang, G.-J. Leaf shape based plant species recognition. Appl. Math. Comput. 2007, 185, 883–893.

- Grinblat, G.L.; Uzal, L.C.; Larese, M.G.; Granitto, P.M. Deep learning for plant identification using vein morphological patterns. Comput. Electron. Agric. 2016, 127, 418–424.

- Wu, S.G.; Bao, F.S.; Xu, E.Y.; Wang, Y.-X.; Chang, Y.-F.; Xiang, Q.-L. A Leaf Recognition Algorithm for Plant Classification Using Probabilistic Neural Network. In Proceedings of the 2007 IEEE International Symposium on Signal Processing and Information Technology, Giza, Egypt, 15–18 December 2007; pp. 11–16.

- Goëau, H.; Bonnet, P.; Baki, V.; Barbe, J.; Amap, U.M.R.; Carré, J.; Barthélémy, D. Pl@ntNet Mobile App. In Proceedings of the 21st ACM international conference on Multimedia, Barcelona, Spain, 21–25 October 2013; pp. 423–424.

- Wäldchen, J.; Mäder, P. Machine learning for image based species identification. Methods Ecol. Evol. 2018, 9, 2216–2225.

- Liebman, M.; Baraibar, B.; Buckley, Y.; Childs, D.; Christensen, S.; Cousens, R.; Eizenberg, H.; Heijting, S.; Loddo, D.; Merotto, A.; et al. Ecologically sustainable weed management: How do we get from proof-of-concept to adoption? Ecol. Appl. 2016, 26, 1352–1369.

- Farooq, A.; Jia, X.; Hu, J.; Zhou, J. Knowledge Transfer via Convolution Neural Networks for Multi-Resolution Lawn Weed Classification. In Proceedings of the 2019 10th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 September 2019; Volume 2019.

- Dadashzadeh, M.; Abbaspour, G.Y.; Mesri, G.T.; Sabzi, S.; Hernandez-Hernandez, J.L.; Hernandez-Hernandez, M.; Arribas, J.I. Weed Classification for Site-Specific Weed. Plants 2020, 9, 559.

- Ashqar, B.A.; Abu-Nasser, B.S.; Abu-Naser, S.S. Plant Seedlings Classification Using Deep Learning. Int. J. Acad. Inf. Syst. Res. 2019, 46, 745–749.

- Smith, L.N.; Byrne, A.; Hansen, M.F.; Zhang, W.; Smith, M.L. Weed classification in grasslands using convolutional neural networks. Int. Soc. Opt. Photonics 2019, 11139, 1113919.

- Simon, H. Neural Networks: A Comprehensive Foundation; McMaster University: Hamilton, ON, Canada, 2005; p. 823.

- Park, S.H. Artificial Intelligence in Medicine: Beginner’s Guide. J. Korean Soc. Radiol. 2018, 78, 301–308.

- Chollet, F. Deep Learning with Python; Manning: New York, NY, USA, 2018; Volume 361.

- Convolutional Neural Networks (CNNs/ConvNets). Available online: https://cs231n.github.io/convolutional-networks/ (accessed on 23 January 2021).

- Brownlee, J. A Gentle Introduction to Object Recognition with Deep Learning. Available online: https://machinelearningmastery.com/object-recognition-with-deep-learning/ (accessed on 23 January 2021).

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 1440–1448.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In ECCV 2016: Computer Vision–ECCV 2016; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2016; Volume 9905, pp. 21–37. ISBN 9783319464473.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; Volume 2016, pp. 779–788.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Weng, L. Object Detection for Dummies Part 3: R-CNN Family. Available online: https://lilianweng.github.io/lil-log/2017/12/31/object-recognition-for-dummies-part-3.html (accessed on 23 January 2021).

- Olivas, E.S.; Guerrero, J.D.M.; Martinez-Sober, M.; Magdalena-Benedito, J.R.; Serrano, L. Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques; IGI Global: Hershey, PA, USA, 2009; ISBN 1605667676.

- Kaya, A.; Keceli, A.S.; Catal, C.; Yalic, H.Y.; Temucin, H.; Tekinerdogan, B. Analysis of transfer learning for deep neural network based plant classification models. Comput. Electron. Agric. 2019, 158, 20–29.

- Williams, J.; Tadesse, A.; Sam, T.; Sun, H.; Montanez, G.D. Limits of Transfer Learning. In Proceedings of the International Conference on Machine Learning, Optimization, and Data Science, Siena, Italy, 19–23 July 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 382–393.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Zhao, W. Research on the deep learning of the small sample data based on transfer learning. AIP Conf. Proc. 2017, 1864, 20018.

- Onshape. Available online: https://www.onshape.com/en/ (accessed on 23 January 2021).

- Pandilov, Z.; Dukovski, V. Comparison of the characteristics between serial and parallel robots. Acta Tech. Corviniensis-Bull. Eng. 2014, 7, 143.

- Wu, L.; Zhao, R.; Li, Y.; Chen, Y.-H. Optimal Design of Adaptive Robust Control for the Delta Robot with Uncertainty: Fuzzy Set-Based Approach. Appl. Sci. 2020, 10, 3472.

- Pimentel, D. Environmental and Economic Costs of the Application of Pesticides Primarily in the United States. Environ. Dev. Sustain. 2005, 7, 229–252.

- Siemens, M.C. Robotic weed control. In Proceedings of the California Weed Science Society, Monterey, CA, USA, 23 June 2014; Volume 66, pp. 76–80.

- Ecorobotix. Available online: https://www.ecorobotix.com/en/autonomous-robot-weeder/ (accessed on 9 February 2021).

- Pilz, K.H.; Feichter, S. How robots will revolutionize agriculture. In Proceedings of the 2017 European Conference on Educational Robotics, Sofia, Bulgaria, 24–28 April 2017.

- Schnitkey, G. Historic Fertilizer, Seed, and Chemical Costs with 2019 Projections. Farmdoc Daily, 5 June 2018; 102.

- ROS Documentation. Available online: http://wiki.ros.org/Documentation (accessed on 23 January 2021).

- Raspberry Pi. Available online: https://www.raspberrypi.org/products/ (accessed on 23 January 2021).

- Coral Dev Board. Available online: https://coral.ai/products/dev-board/ (accessed on 23 January 2021).

- Embedded Systems for Next-Generation Autonomous Machines. Available online: https://www.nvidia.com/de-de/autonomous-machines/embedded-systems/ (accessed on 23 January 2021).

- Lottes, P.; Behley, J.; Chebrolu, N.; Milioto, A.; Stachniss, C. Robust joint stem detection and crop-weed classification using image sequences for plant-specific treatment in precision farming. J. Field Robot. 2020, 37, 20–34.

- Dyrmann, M.; Karstoft, H.; Midtiby, H.S. Plant species classification using deep convolutional neural network. Biosyst. Eng. 2016, 151, 72–80.

- Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Comput. Intell. Neurosci. 2016, 2016, 3289801.

- Jeon, W.-S.; Rhee, S.-Y. Plant Leaf Recognition Using a Convolution Neural Network. Int. J. Fuzzy Log. Intell. Syst. 2017, 17, 26–34.

- Abdullahi, H.S.; Sheriff, R.; Mahieddine, F. Convolution neural network in precision agriculture for plant image recognition and classification. In Proceedings of the 2017 Seventh International Conference on Innovative Computing Technology (INTECH), Luton, UK, 16–18 August 2017; Volume 10.

- Asad, M.H.; Bais, A. Weed detection in canola fields using maximum likelihood classification and deep convolutional neural network. Inf. Process. Agric. 2020, 7, 535–545.

| Component | Specification |
|---|---|
| CPU | AMD Ryzen 7 2700X, 8 cores, 3.70 GHz |
| Memory | 16 GB DDR4 RAM, 3000 MHz |
| GPU | NVIDIA, 8 GB RAM |
| OS | Ubuntu 18.04 LTS 64-bit |

| Reference | Number of Species | Growth Stages | Number of Images (Dataset) | Highest Classification Accuracy | Object Detection: Mean Average Precision (mAP) |
|---|---|---|---|---|---|
| Perez-Perez et al. (2021) | 1 | Ripe and unripe | 1002 | 99.32% | n.a. |
| Dyrmann et al. (2016) | 22 | Seedling | 10,413 | 86.2% | n.a. |
| Asad and Bais (2020) | 2 | Two | 906 | 99.48% | n.a. |
| Current study | 3 | Multiple | 200 | Plant: 95%; weed: 99% | 31% |
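
The last column of the comparison table reports object-detection mean average precision (mAP). The table does not state which AP convention was used. As an illustration only, the sketch below computes per-class average precision from confidence-ranked detections using all-point interpolation (the PASCAL VOC 2010+ style), then averages it over classes. It assumes that each detection has already been matched to a ground-truth box at some IoU threshold and flagged as a true or false positive. The function names are hypothetical, and this is not the authors' evaluation code.

```python
from typing import List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_ground_truth: int) -> float:
    """All-point interpolated AP for a single class (PASCAL VOC 2010+ style).

    detections: (confidence, is_true_positive) pairs. Matching each detection
    to an unmatched ground-truth box at an IoU threshold is assumed to have
    been done already.
    """
    if num_ground_truth <= 0:
        return 0.0
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Precision envelope: replace each value by the best precision at this or any later rank.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for recall, precision in zip(recalls, precisions):
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


def mean_average_precision(per_class_ap: List[float]) -> float:
    """Unweighted mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


if __name__ == "__main__":
    # Hypothetical detections for one class with 2 ground-truth objects.
    weed_ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_ground_truth=2)
    print(weed_ap)  # 0.5 * 1.0 + 0.5 * (2/3), about 0.833
```

COCO-style mAP additionally averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05. This is stricter than a single 0.5 threshold, so mAP values reported under different conventions are not directly comparable.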

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shah, T.M.; Nasika, D.P.B.; Otterpohl, R. Plant and Weed Identifier Robot as an Agroecological Tool Using Artificial Neural Networks for Image Identification. Agriculture 2021, 11, 222. https://doi.org/10.3390/agriculture11030222

Shah TM, Nasika DPB, Otterpohl R. Plant and Weed Identifier Robot as an Agroecological Tool Using Artificial Neural Networks for Image Identification. Agriculture. 2021; 11(3):222. https://doi.org/10.3390/agriculture11030222

Chicago/Turabian Style: Shah, Tavseef Mairaj, Durga Prasad Babu Nasika, and Ralf Otterpohl. 2021. "Plant and Weed Identifier Robot as an Agroecological Tool Using Artificial Neural Networks for Image Identification" Agriculture 11, no. 3: 222. https://doi.org/10.3390/agriculture11030222

APA Style: Shah, T. M., Nasika, D. P. B., & Otterpohl, R. (2021). Plant and Weed Identifier Robot as an Agroecological Tool Using Artificial Neural Networks for Image Identification. Agriculture, 11(3), 222. https://doi.org/10.3390/agriculture11030222