Compact Convolutional Transformer (CCT)-Based Approach for Whitefly Attack Detection in Cotton Crops

Abstract

1. Introduction

- A dataset for whitefly attacks in cotton crops comprising 5135 images was developed and published to help future researchers.

- A multi-class dataset with ground truth annotation was prepared for our model.

- A Compact Convolutional Transformer (CCT)-based approach is proposed for the classification.

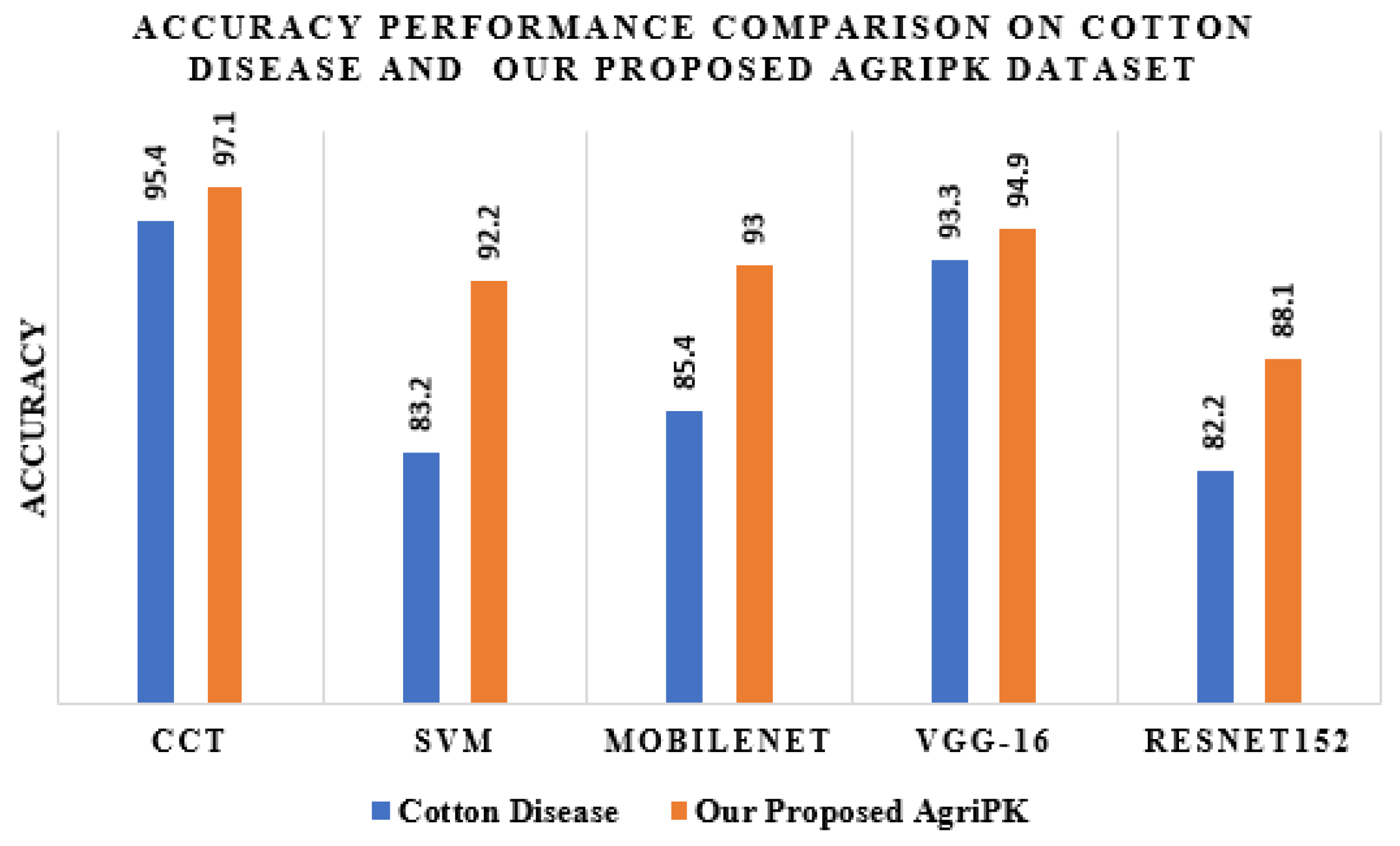

- The performance of the proposed CCT-based approach is compared with that of several state-of-the-art models, such as MobileNet, ResNet152v2, VGG-16, and SVM. Experimental results show that the CCT approach outperforms the compared approaches.

2. Related Work

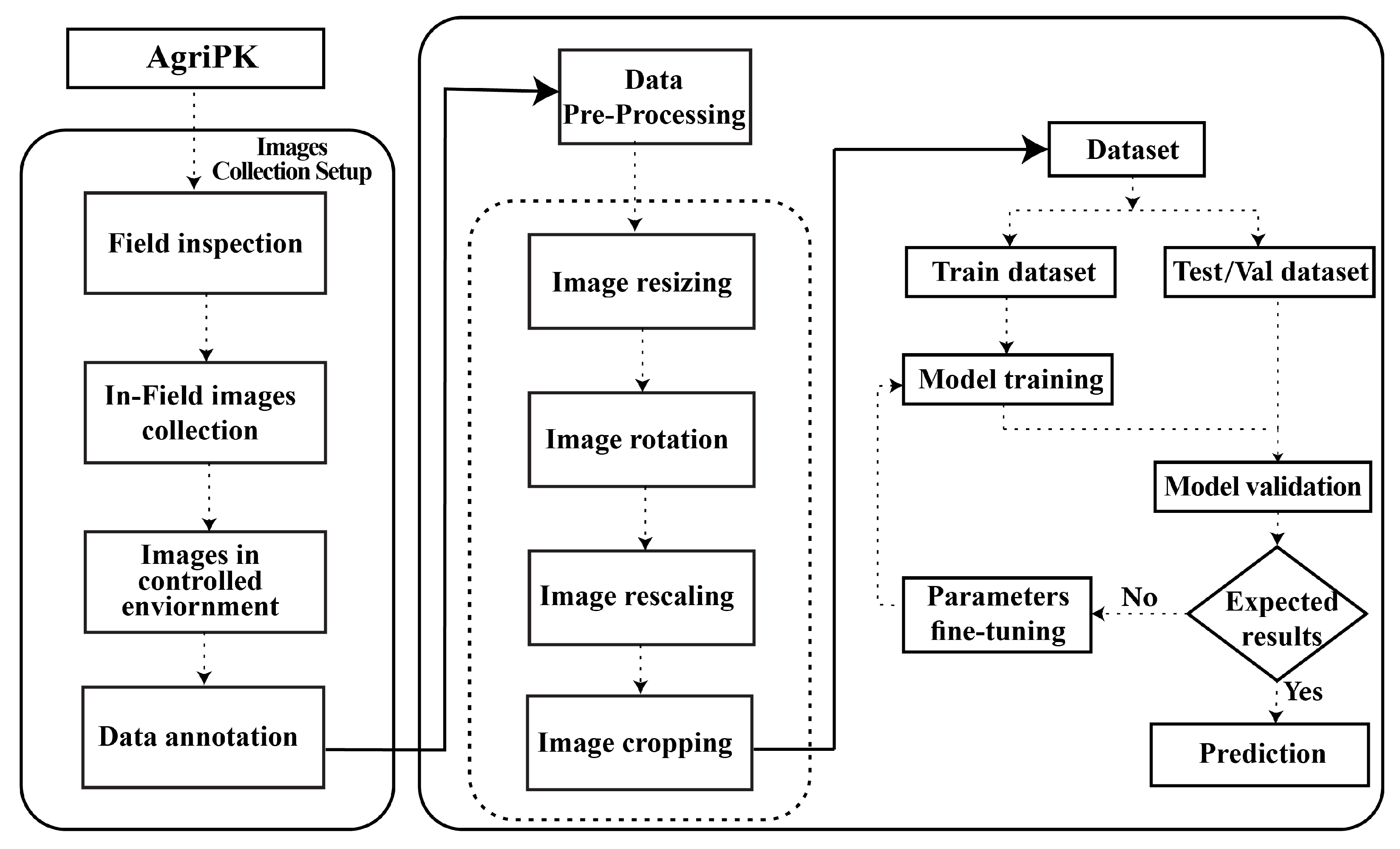

3. Materials

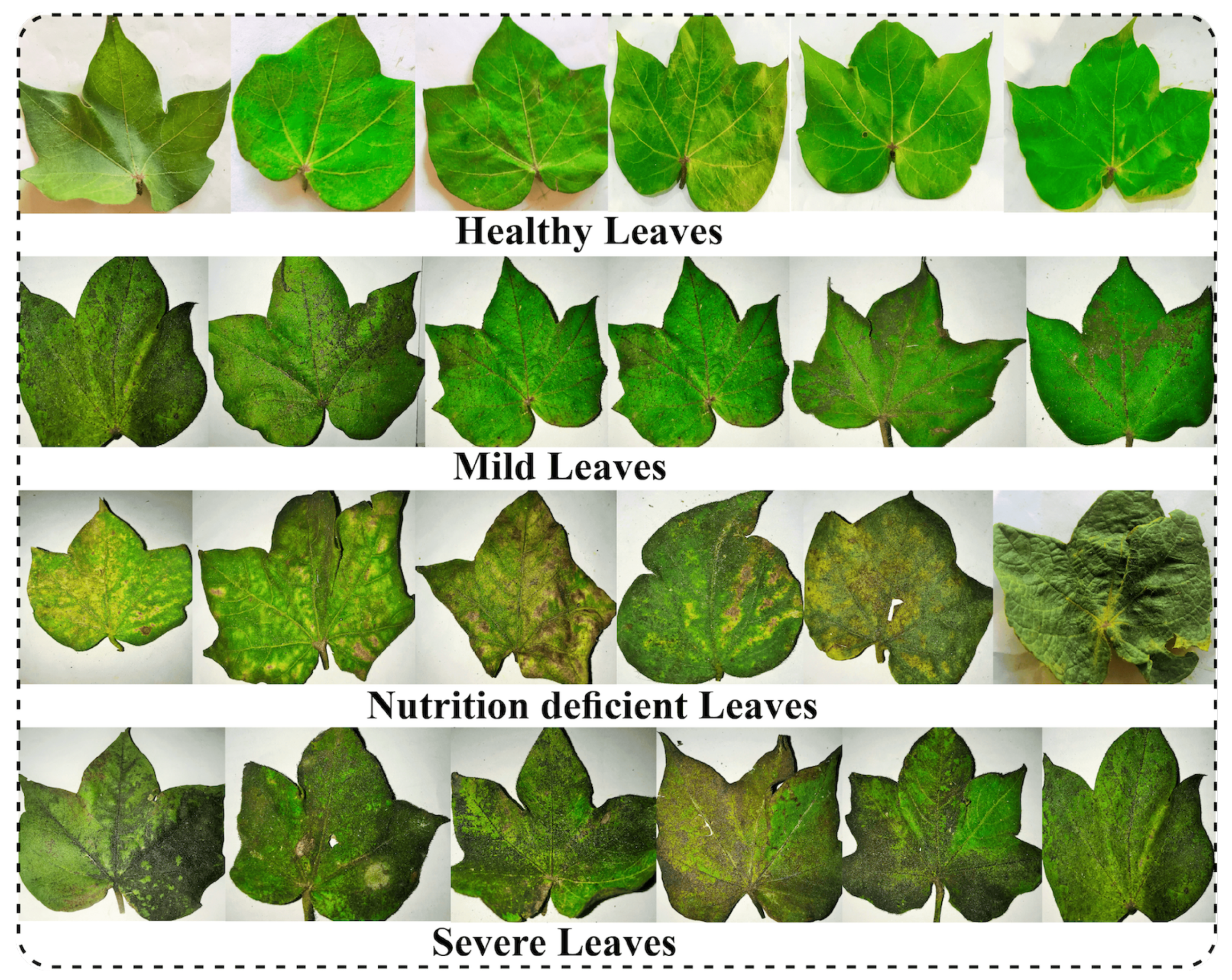

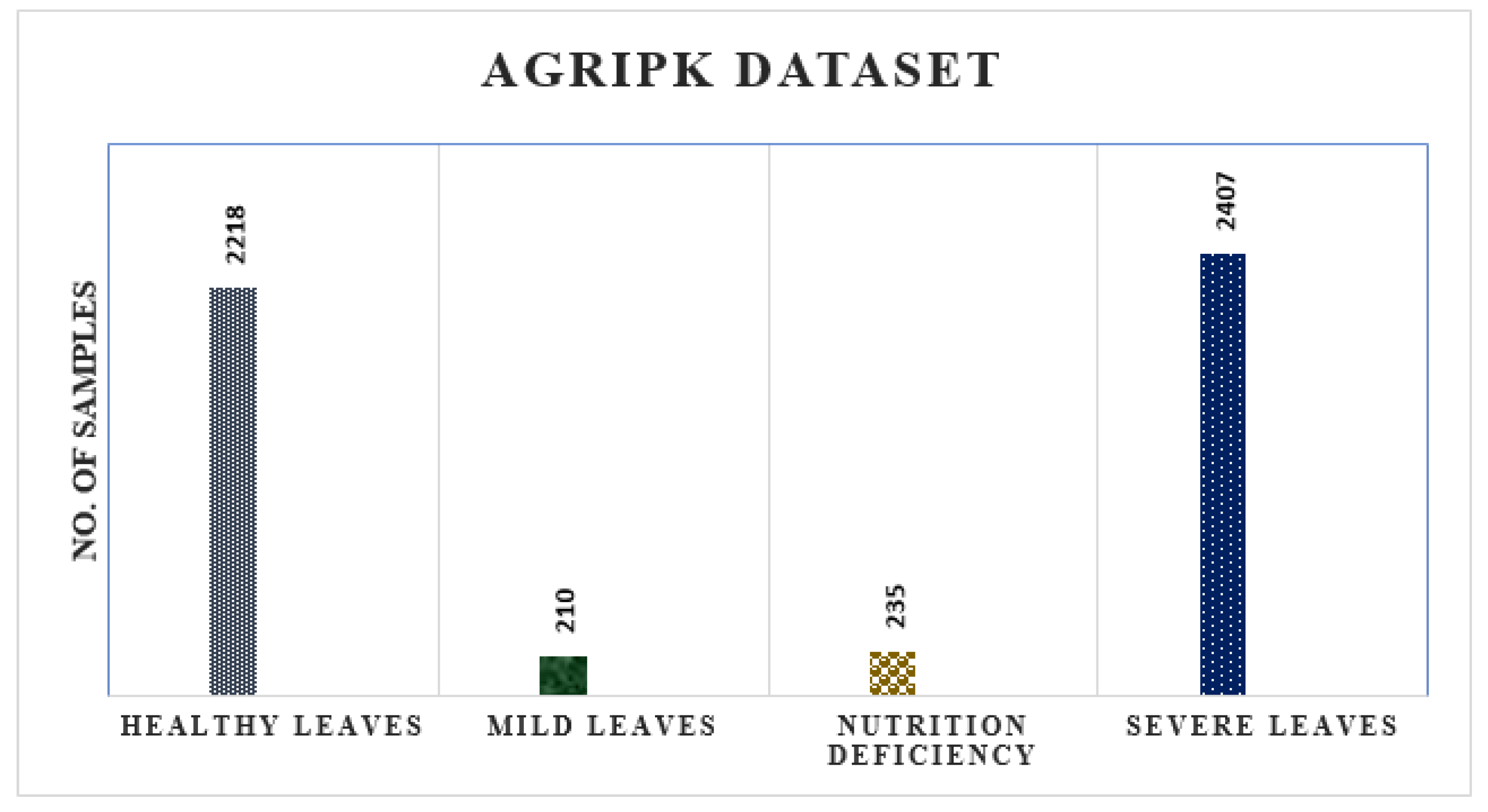

3.1. AgriPK Dataset

3.1.1. Image Collection

3.1.2. Professional Annotation

3.2. Existing Cotton Disease Dataset

4. Methods

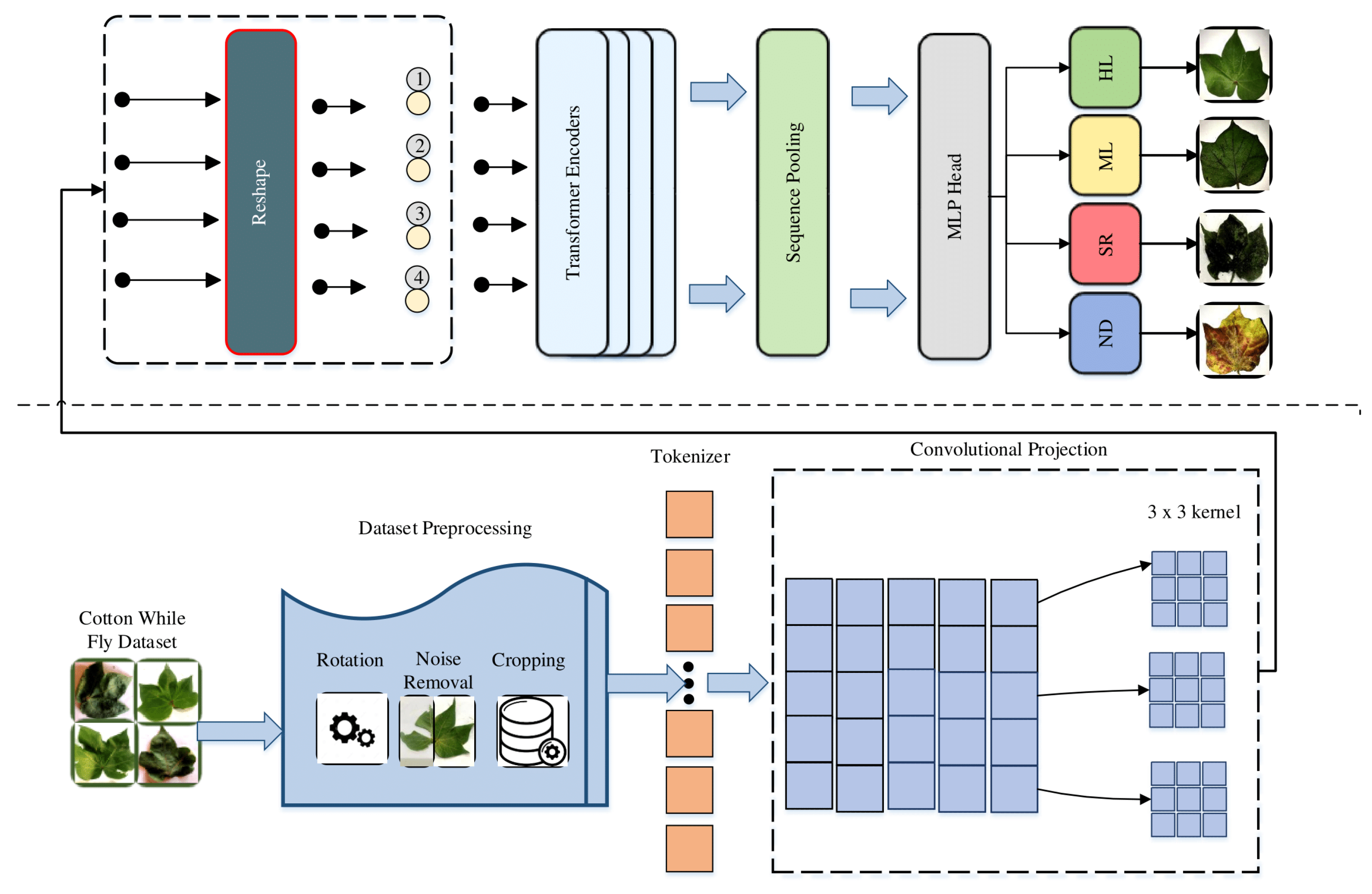

4.1. Data Pre-Processing Module

4.2. Classification Model

Compact Convolutional Transformer
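
As a rough illustration of how the convolutional tokenizer of a CCT turns an image into a token sequence, the sketch below computes the sequence length for a square input. The 224-pixel input size matches the hyperparameter table of this article; the kernel, stride, padding, and pooling values and the two-block depth are assumptions borrowed from commonly used CCT configurations, not settings confirmed for this work.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial size after one convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def tokenizer_sequence_length(
    input_size: int = 224,   # from the hyperparameter table
    n_blocks: int = 2,       # assumed number of conv + pool blocks
    conv_kernel: int = 7,    # assumed
    conv_stride: int = 2,    # assumed
    conv_padding: int = 3,   # assumed
    pool_kernel: int = 3,    # assumed
    pool_stride: int = 2,    # assumed
    pool_padding: int = 1,   # assumed
) -> int:
    """Number of tokens the transformer encoder would receive."""
    size = input_size
    for _ in range(n_blocks):
        size = conv_out(size, conv_kernel, conv_stride, conv_padding)
        size = conv_out(size, pool_kernel, pool_stride, pool_padding)
    return size * size  # one token per spatial position


print(tokenizer_sequence_length())  # 196 under the assumed settings
```

Under these assumed settings the side length shrinks from 224 to 112, 56, 28, and finally 14, so the encoder would attend over 196 tokens rather than over individual pixels.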

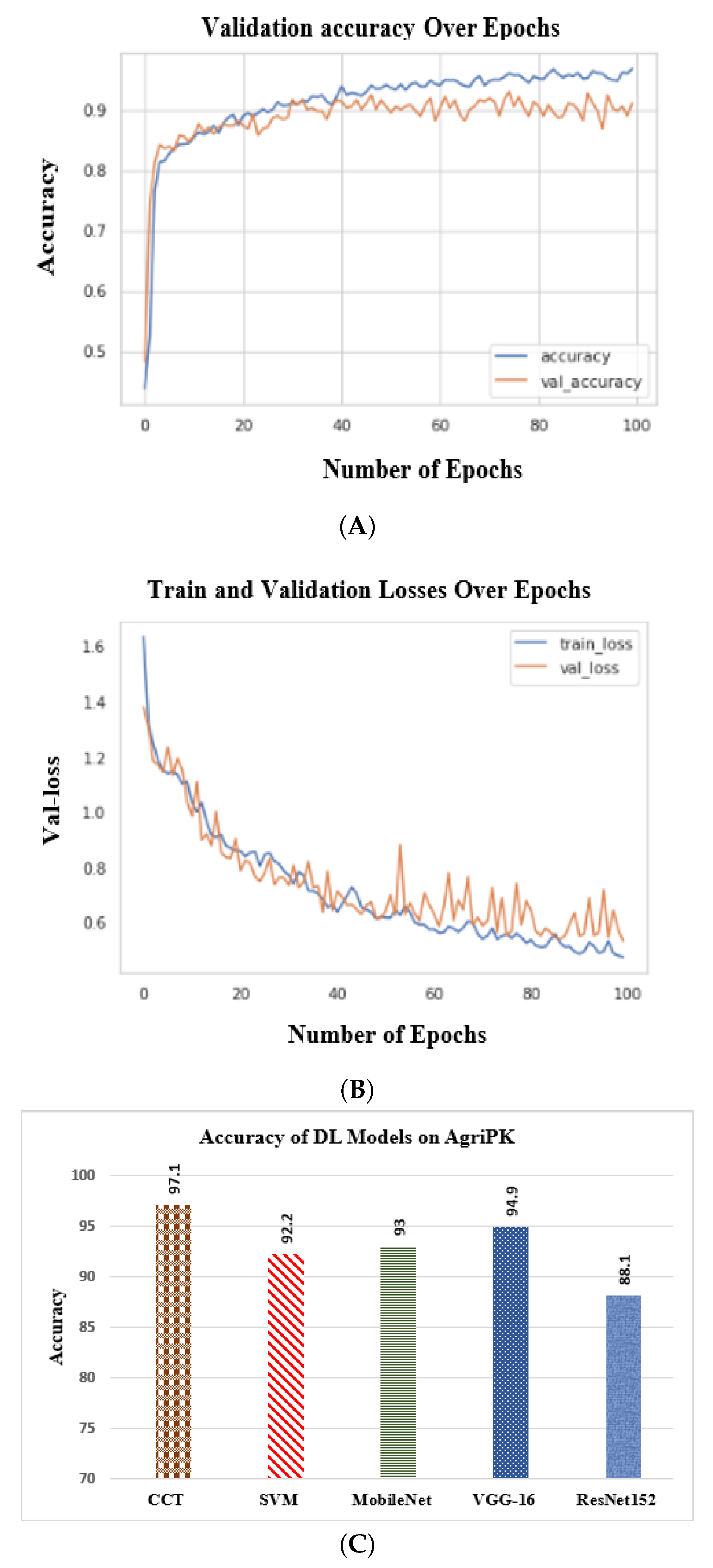

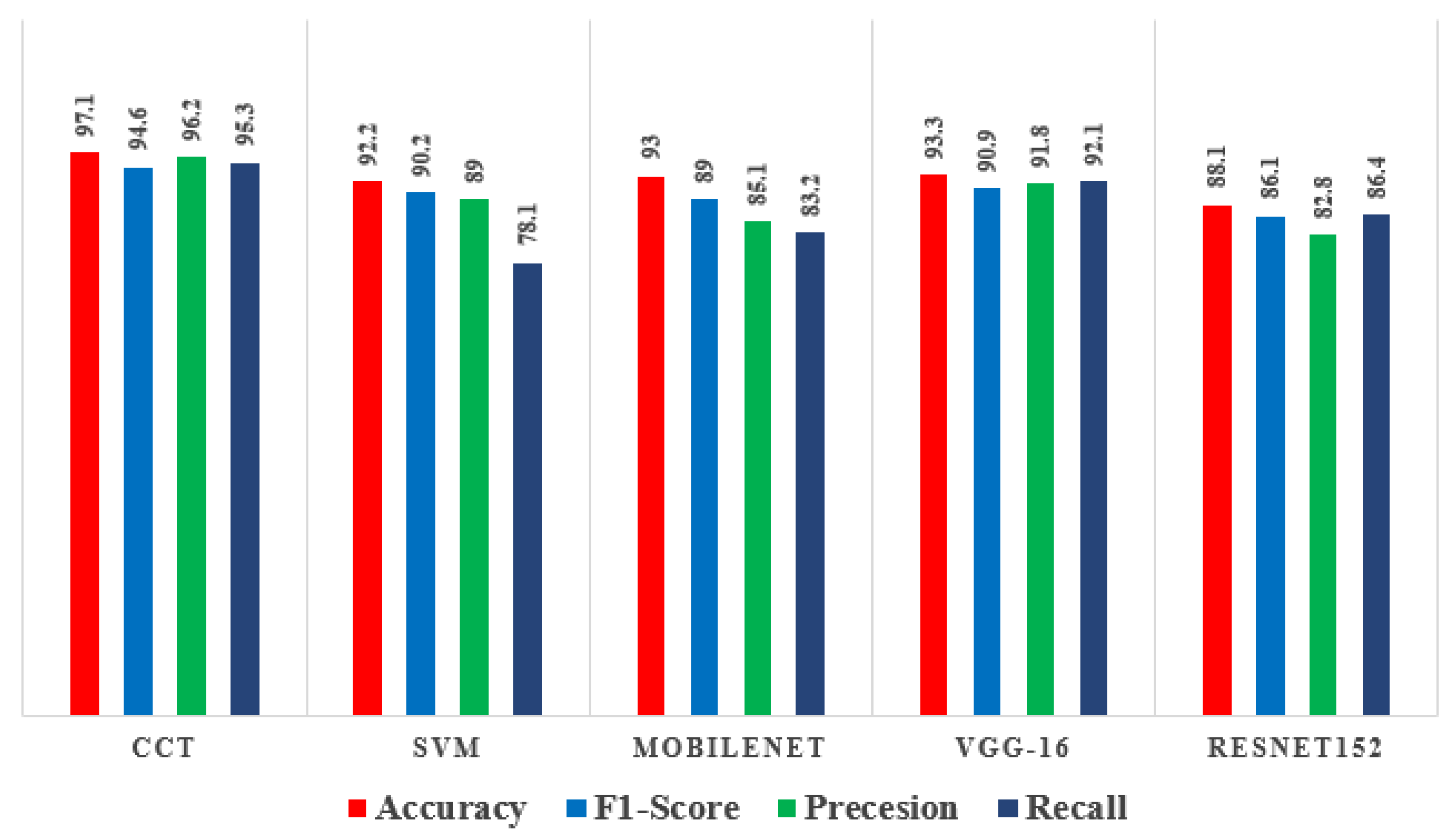

5. Results and Discussion

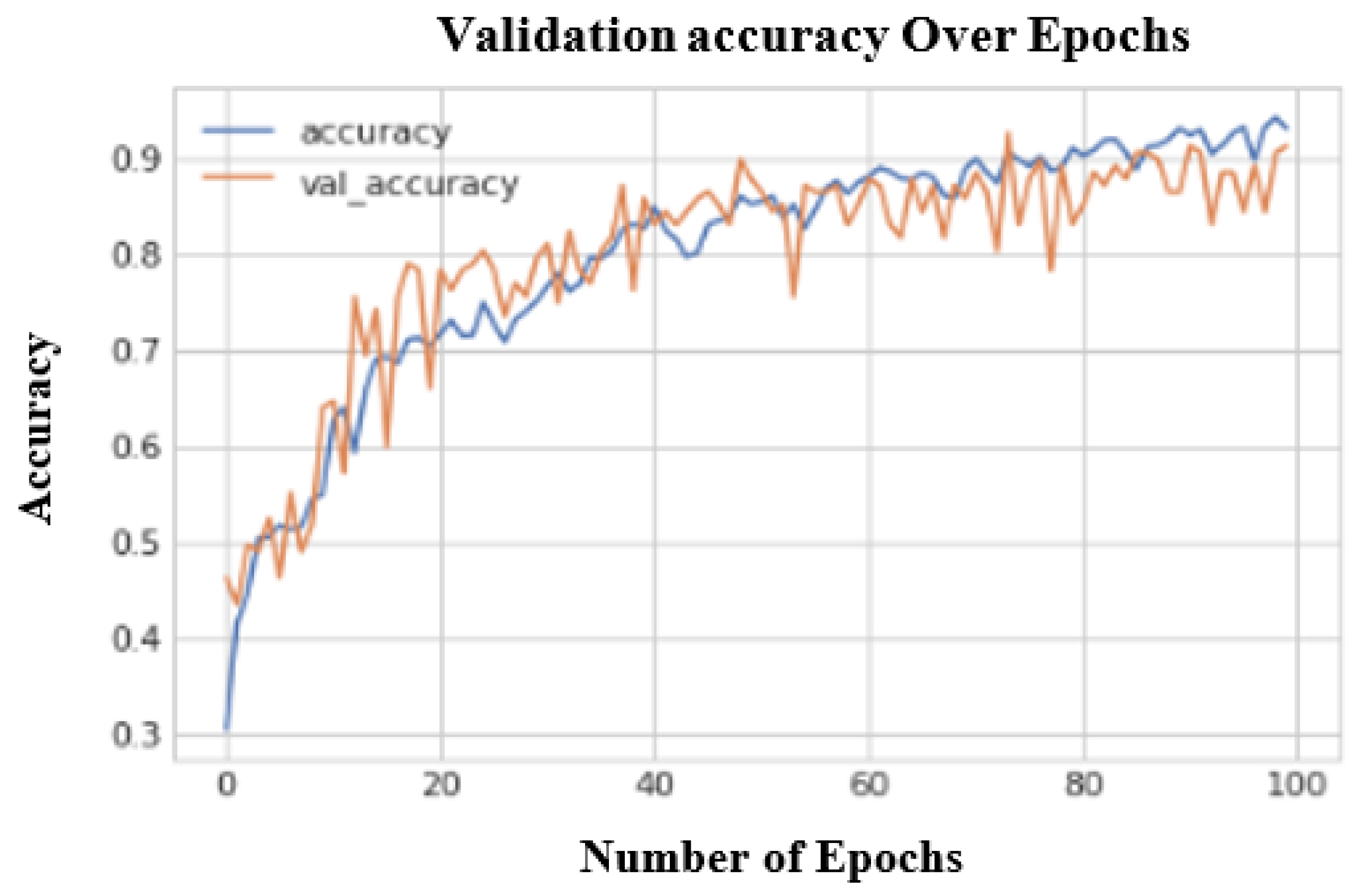

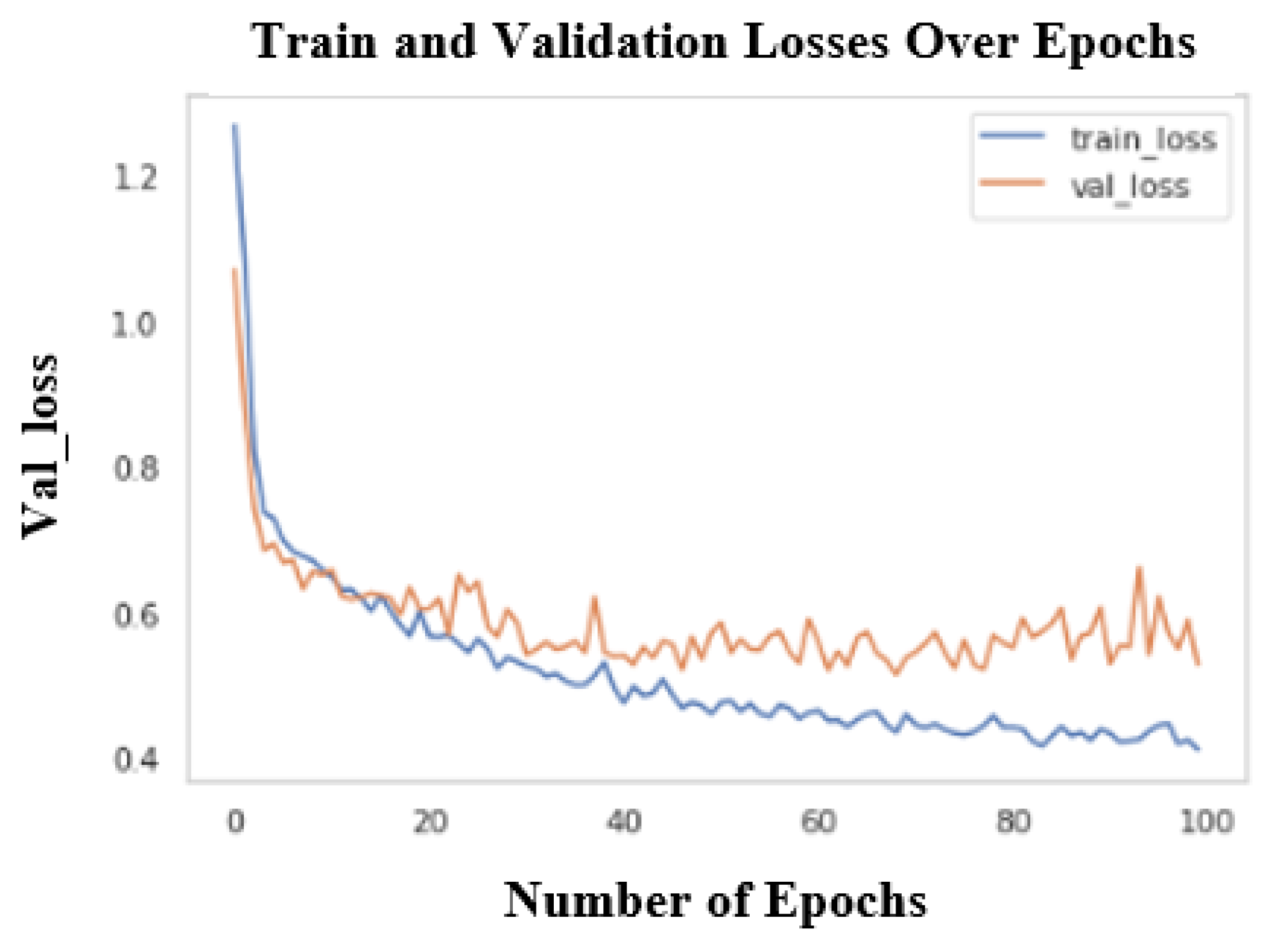

5.1. MobileNet

5.2. VGG-16

5.3. ResNet152v2

5.4. YOLOv5

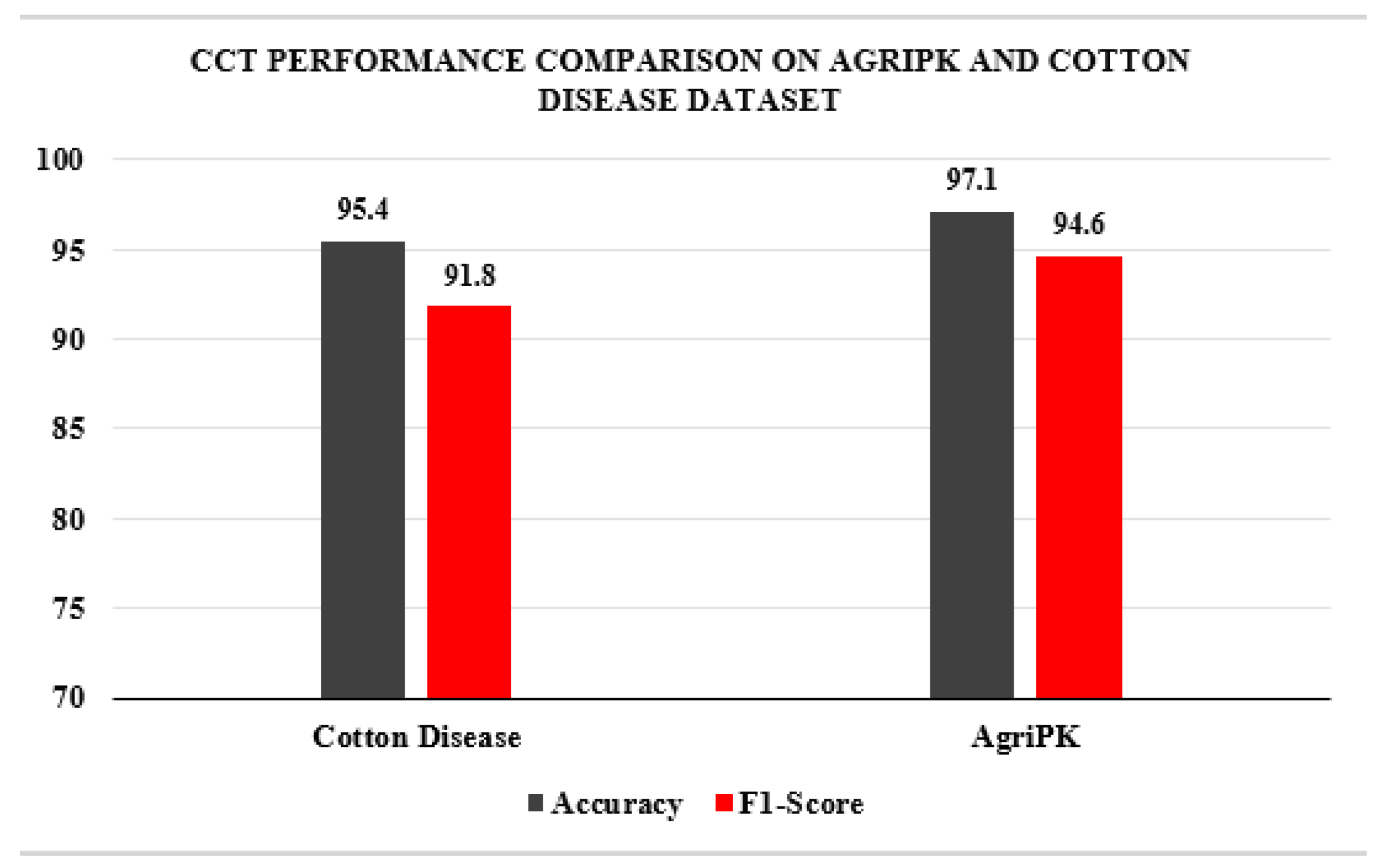

5.5. Performance on AgriPK

5.6. Performance on Cotton Disease Dataset

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. of Classes | Categories | No. of Images | Test/Train | Total No. of Images |
|---|---|---|---|---|
| AgriPK Dataset | | | | |
| 1 | Healthy | 2213 | 1600/713 | 5137 |
| 2 | Unhealthy | 2852 | 2110/741 | |
| 3 | Mild | 210 | 152/58 | |
| 4 | Nutrition Deficiency | 235 | 160/75 | |
| 5 | Severe | 2407 | 1801/675 | |
| Cotton Disease Dataset | | | | |
| 1 | Diseased Cotton Leaves | 288 | 235/53 | 1951 |
| 2 | Diseased Cotton Plant | 815 | 602/203 | |
| 3 | Fresh Cotton Leaves | 427 | 324/104 | |
| 4 | Fresh Cotton Plant | 421 | 321/101 | |

| Parameter | Value |
|---|---|
| Learning rate | 0.06 |
| Batch size | 64 |
| Input size | 224 |
| No. of epochs | 100 |
| Weight decay | 0.006 |
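
The hyperparameters in the table above can be collected in a small configuration object, as in the minimal sketch below. The class name `TrainingConfig`, the field names, and the `steps_per_epoch` helper are hypothetical, and the input size is assumed to mean 224 × 224 pixels; the optimizer and learning-rate schedule are not stated in the table and are therefore left out.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container for the values in the hyperparameter table."""
    learning_rate: float = 0.06
    batch_size: int = 64
    input_size: int = 224      # assumed to mean 224 x 224 pixels
    epochs: int = 100
    weight_decay: float = 0.006

    def steps_per_epoch(self, n_train_images: int) -> int:
        """Mini-batches per epoch, counting a final partial batch."""
        return -(-n_train_images // self.batch_size)  # ceiling division


config = TrainingConfig()
print(asdict(config))
print(config.steps_per_epoch(1000))  # 16 batches for a hypothetical 1000 images
```

Keeping the values in a frozen dataclass makes accidental changes during an experiment harder and gives one place to log the settings alongside the results.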

| Metric | MobileNet | VGG-16 | ResNet152v2 | YOLOv5 | SVM | CCT |
|---|---|---|---|---|---|---|
| Accuracy (%) | 93 | 93.3 | 88.1 | 95.1 | 92.2 | 97.1 |
| F1-Score (%) | 89 | 90.9 | 86.1 | 93.2 | 90.2 | 94.6 |
| Precision (%) | 85.1 | 91.8 | 82.8 | 91.1 | 89 | 96.2 |
| Recall (%) | 83.2 | 92.1 | 86.4 | 85.7 | 78.1 | 95.3 |
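
For reference, the four metrics reported above can be computed from a confusion matrix as in the sketch below. The function names and the example matrix are hypothetical, and macro averaging over classes is an assumption; the table does not state which averaging scheme was used.

```python
def per_class_metrics(confusion: list[list[int]]) -> list[tuple[float, float, float]]:
    """Return (precision, recall, F1) for each class.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, truly other
        fn = sum(confusion[k]) - tp                       # truly k, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append((precision, recall, f1))
    return results


def accuracy(confusion: list[list[int]]) -> float:
    """Fraction of all samples on the diagonal of the confusion matrix."""
    correct = sum(confusion[k][k] for k in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def macro_f1(confusion: list[list[int]]) -> float:
    """Unweighted mean of the per-class F1 scores (assumed averaging)."""
    scores = [f1 for _, _, f1 in per_class_metrics(confusion)]
    return sum(scores) / len(scores)


# Hypothetical two-class confusion matrix, not taken from the paper.
example = [[8, 2],
           [1, 9]]
print(accuracy(example), macro_f1(example))
```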

| Model | No. of Params. | Training Time |
|---|---|---|
| CCT | 897,413 | 7200 ms |
| VGG-16 | 750,567 | 6600 ms |
| MobileNet | 699,156 | 6300 ms |
| ResNet152v2 | 950,567 | 8900 ms |

| Model | F1-Score on Cotton Disease Dataset (%) | F1-Score on AgriPK Dataset (%) | F1-Score Increment |
|---|---|---|---|
| CCT | 91.8 | 94.6 | 2.8% |
| SVM | 80.2 | 90.2 | 9.6% |
| MobileNet | 80.4 | 89 | 8.6% |
| VGG-16 | 89.8 | 90.6 | 0.8% |
| ResNet152v2 | 80.7 | 86.1 | 5.7% |
| YOLOv5 | 92.6 | 93.2 | 0.5% |
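
The increment column appears to be the difference between the two F1-scores, expressed in percentage points. A minimal sketch of that arithmetic, assuming a simple subtraction rounded to one decimal place (the helper name `f1_increment` is hypothetical):

```python
def f1_increment(cotton_disease_f1: float, agripk_f1: float) -> float:
    """Difference in F1-score (percentage points) between the two datasets."""
    return round(agripk_f1 - cotton_disease_f1, 1)


print(f1_increment(91.8, 94.6))  # CCT row: 2.8
print(f1_increment(80.4, 89.0))  # MobileNet row: 8.6
```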

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jajja, A.I.; Abbas, A.; Khattak, H.A.; Niedbała, G.; Khalid, A.; Rauf, H.T.; Kujawa, S. Compact Convolutional Transformer (CCT)-Based Approach for Whitefly Attack Detection in Cotton Crops. Agriculture 2022, 12, 1529. https://doi.org/10.3390/agriculture12101529
