An Enhanced YOLOv5 Model for Greenhouse Cucumber Fruit Recognition Based on Color Space Features

Abstract

1. Introduction

2. Materials and Methods

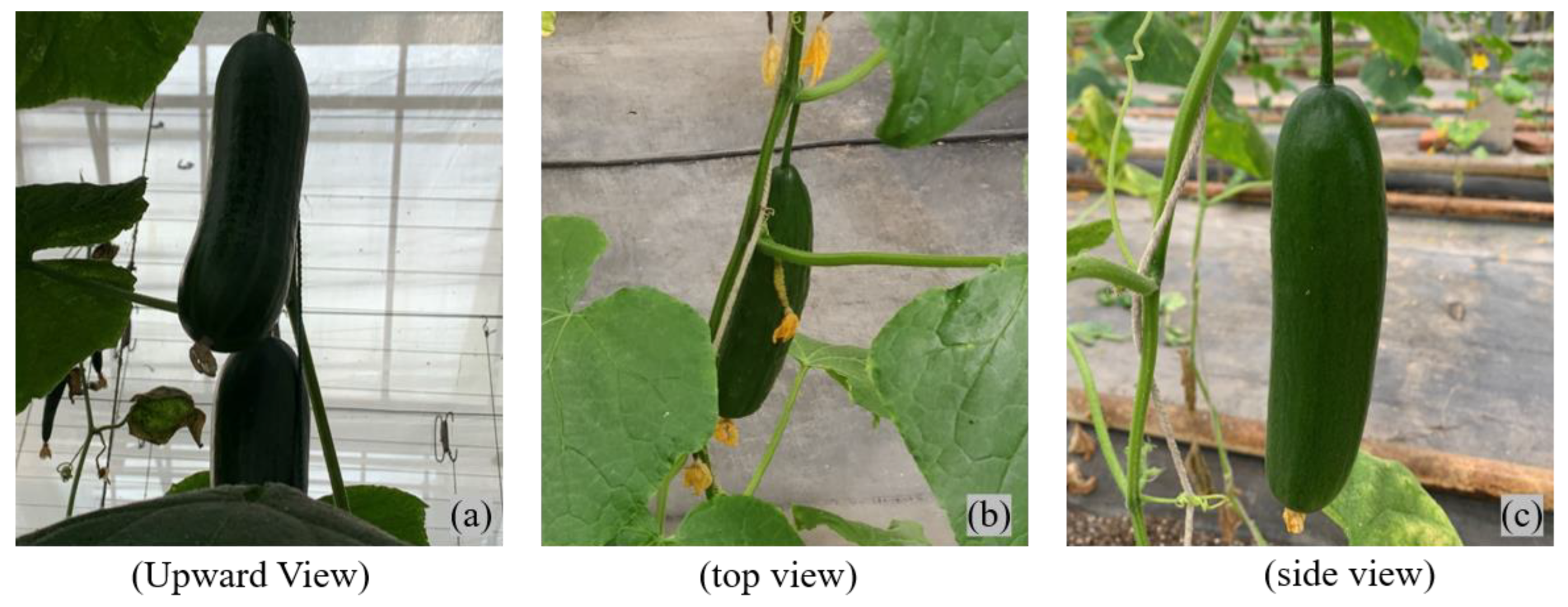

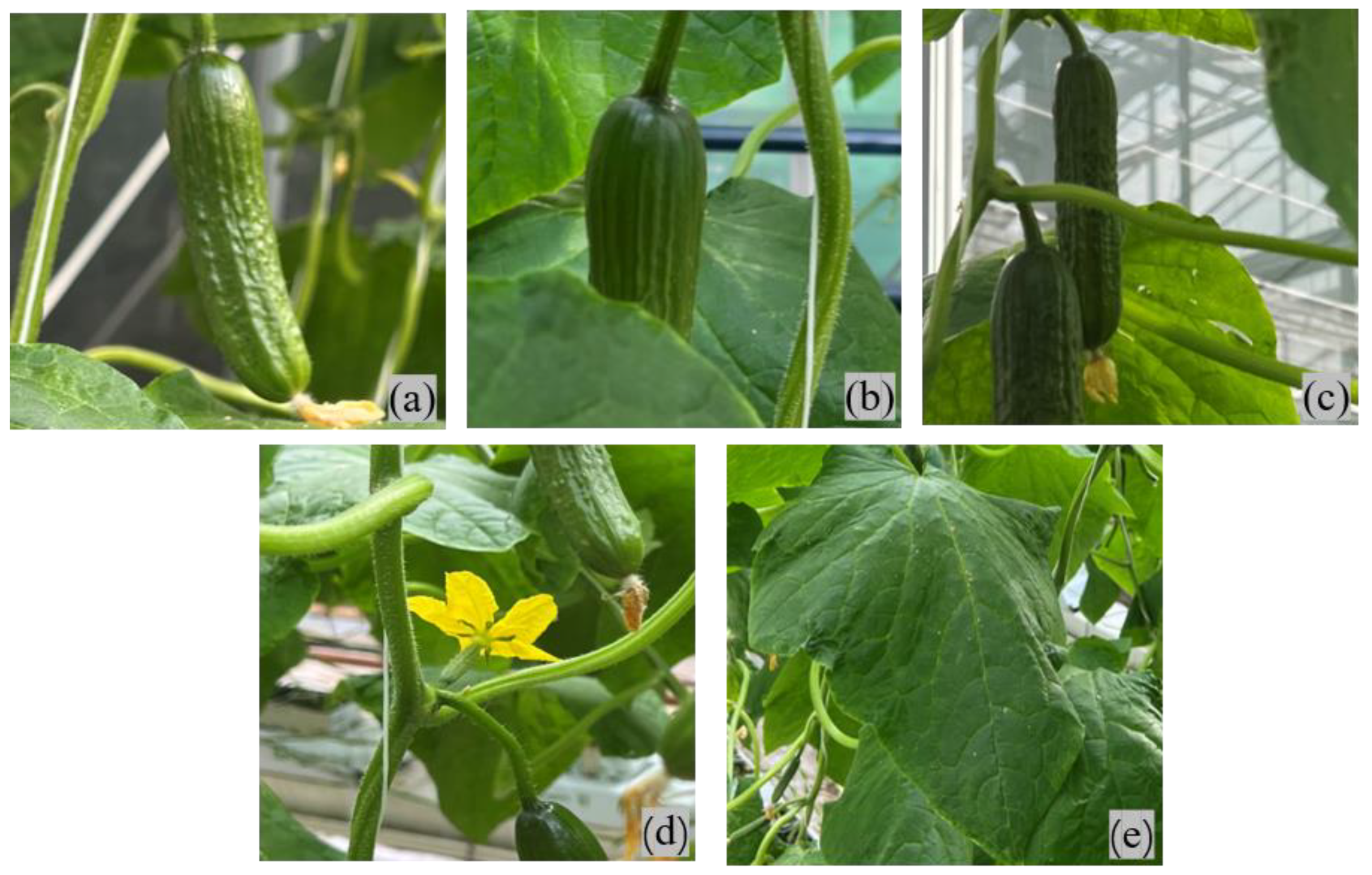

2.1. Acquisition and Processing of Datasets

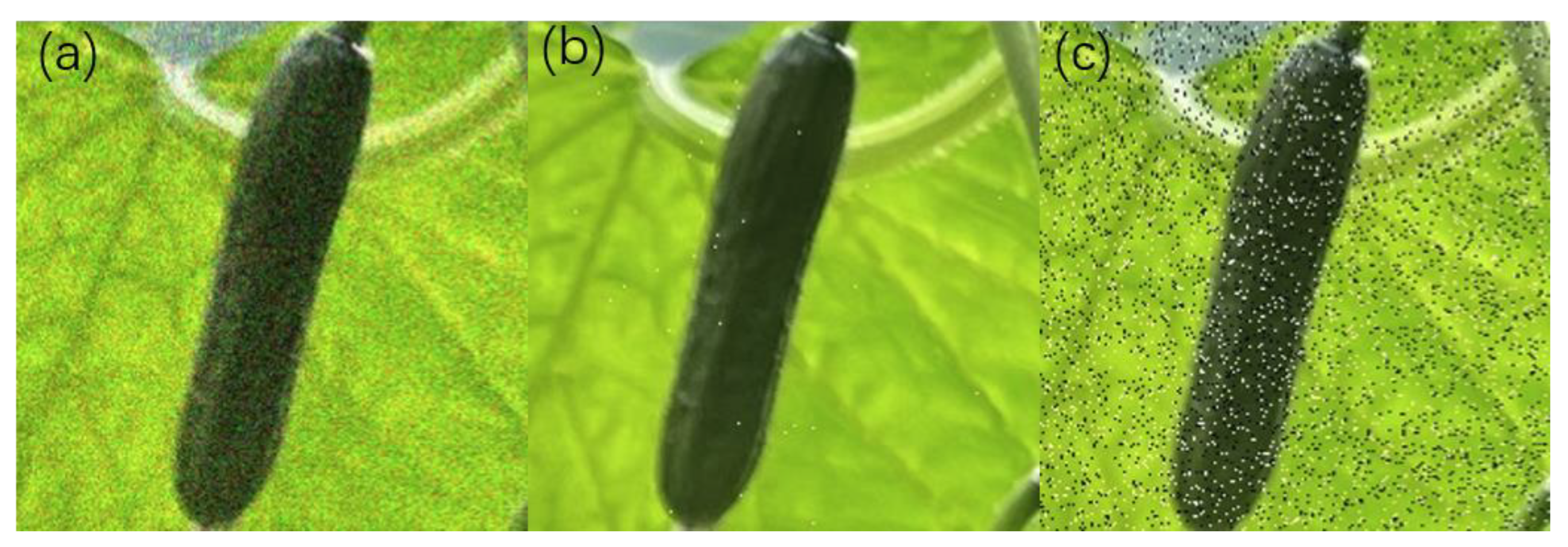

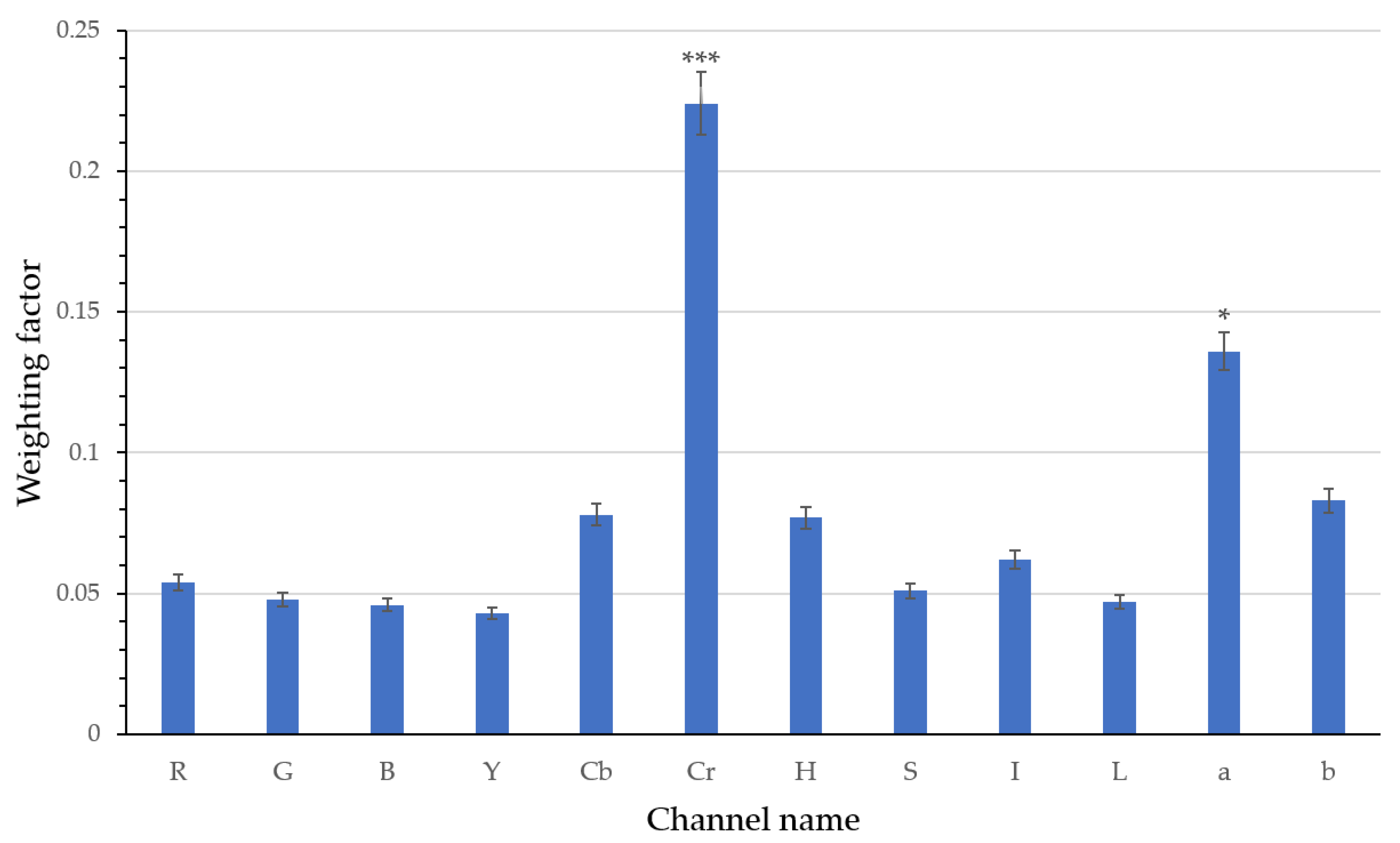

2.2. Color Space Conversion and Weight Analysis

2.2.1. Conversion Principles

2.2.2. ReliefF Weight Analysis Method

2.3. Model Selection and Experimental Environment

2.3.1. YOLOv5

2.3.2. Operating Environment

2.4. Experimental Process

2.5. Evaluation Indicators

3. Results

3.1. Comparative Analysis of Different Color Spaces

3.2. Cr Channel Enhances YOLOv5 Recognition Ability

3.3. Ablation Experiments of Different Object Detection Models

3.4. Comparison of Detection Effects

4. Discussion

4.1. Result Analysis

4.2. The Future Research Focus

4.2.1. Multi-Channel Parallel Convolution Neural Network

4.2.2. Modify Model Operation Dimension

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


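| Item | Group | Category | Count |
|---|---|---|---|
| Images | Exposure | Under-exposed | 336 |
| Images | Exposure | Over-exposed | 102 |
| Images | Orientation | Upward view | 102 |
| Images | Orientation | Top view | 109 |
| Images | Orientation | Side view | 227 |
| Labels | Positive | Intact fruits | 1841 |
| Labels | Positive | Occluded fruits | 326 |
| Labels | Positive | Segmented fruits | 170 |
| Labels | Negative | Female flowers | 237 |
| Labels | Negative | Leaves and stems | 1515 |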

| Model | F1 | Precision | Recall | mAP |
|---|---|---|---|---|
| without Cr | 0.58 | 83.70% | 44.40% | 50.00% |
| with Cr | 0.65 | 85.19% | 53.10% | 58.00% |

| Model | Variant | F1 | Precision | Recall | mAP | FPS |
|---|---|---|---|---|---|---|
| SSD | original | 0.41 | 53.65% | 26.11% | 42% | 28.3 |
| SSD | enhanced | 0.41 | 55.16% | 26.11% | 42% | 28.9 |
| Faster R-CNN | original | 0.50 | 51.66% | 48.23% | 46% | 21.7 |
| Faster R-CNN | enhanced | 0.54 | 54.75% | 53.54% | 51% | 22.5 |
| YOLOv5s | original | 0.63 | 83.70% | 52.21% | 53% | 53.2 |
| YOLOv5s | enhanced | 0.67 | 85.19% | 54.03% | 58% | 53.3 |
| YOLOv5m | original | 0.64 | 80.54% | 52.21% | 53% | 38.6 |
| YOLOv5m | enhanced | 0.65 | 83.69% | 53.10% | 56% | 39.1 |
| YOLOv5l | original | 0.64 | 80.54% | 53.10% | 54% | 24.4 |
| YOLOv5l | enhanced | 0.66 | 81.17% | 55.31% | 57% | 24.5 |
| YOLOv5x | original | 0.61 | 78.95% | 52.21% | 55% | 13.2 |
| YOLOv5x | enhanced | 0.64 | 81.38% | 53.10% | 55% | 14.7 |
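To read the comparison above at a glance, the change in mAP between the original and enhanced variant of each model can be tabulated. The following is a minimal sketch that only restates the table's own numbers; the names `map_percent` and `map_gain` are hypothetical and not part of the original work.

```python
# mAP (%) per model as (original, enhanced), copied from the table above.
map_percent = {
    "SSD": (42, 42),
    "Faster R-CNN": (46, 51),
    "YOLOv5s": (53, 58),
    "YOLOv5m": (53, 56),
    "YOLOv5l": (54, 57),
    "YOLOv5x": (55, 55),
}


def map_gain(table):
    """Return enhanced-minus-original mAP per model, in percentage points."""
    return {
        model: enhanced - original
        for model, (original, enhanced) in table.items()
    }


gains = map_gain(map_percent)
for model, gain in gains.items():
    print(f"{model}: {gain:+d} points")
```

Under these numbers, the largest mAP gain is 5 percentage points (Faster R-CNN and YOLOv5s), while SSD and YOLOv5x show no change in mAP.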

| Group | Manual statistics: complete fruit | Manual statistics: incomplete fruit | Model detected: complete fruit | Model detected: incomplete fruit | P |
|---|---|---|---|---|---|
| Group 1 | 35 | 11 | 28 | 9 | 80.4% |
| Group 2 | 25 | 9 | 21 | 5 | 76.4% |
| Group 3 | 33 | 12 | 27 | 8 | 77.8% |
| Group 4 | 24 | 10 | 19 | 7 | 76.5% |
| Group 5 | 25 | 11 | 22 | 7 | 80.6% |
| Group 6 | 32 | 6 | 29 | 3 | 84.2% |
| Group 7 | 34 | 5 | 31 | 2 | 84.6% |
| Group 8 | 35 | 9 | 29 | 6 | 79.5% |
| Group 9 | 32 | 7 | 28 | 5 | 84.6% |
| Group 10 | 26 | 11 | 21 | 7 | 78.4% |
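The P column is not defined in this excerpt. One plausible reading, assumed here, is the number of fruits the model detected (complete plus incomplete) divided by the number counted manually. The sketch below applies that assumed formula to three rows copied from the table; the function name `detection_rate` is hypothetical. This reading reproduces most of the reported values (for example Groups 1, 6, and 7), though not every row.

```python
# Rows copied from the table above:
# (manual complete, manual incomplete, detected complete, detected incomplete)
counts = {
    "Group 1": (35, 11, 28, 9),
    "Group 6": (32, 6, 29, 3),
    "Group 7": (34, 5, 31, 2),
}


def detection_rate(manual_complete, manual_incomplete,
                   detected_complete, detected_incomplete):
    """Assumed definition of P: detected fruits / manually counted fruits, in %."""
    manual_total = manual_complete + manual_incomplete
    detected_total = detected_complete + detected_incomplete
    return 100.0 * detected_total / manual_total


for group, row in counts.items():
    print(f"{group}: {detection_rate(*row):.1f}%")
```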

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, N.; Qian, T.; Yang, J.; Li, L.; Zhang, Y.; Zheng, X.; Xu, Y.; Zhao, H.; Zhao, J. An Enhanced YOLOv5 Model for Greenhouse Cucumber Fruit Recognition Based on Color Space Features. Agriculture 2022, 12, 1556. https://doi.org/10.3390/agriculture12101556
