Lightweight Detection Algorithm of Kiwifruit Based on Improved YOLOX-S

Abstract

1. Introduction

2. Experimental Platform and Materials

2.1. Vision Platform System

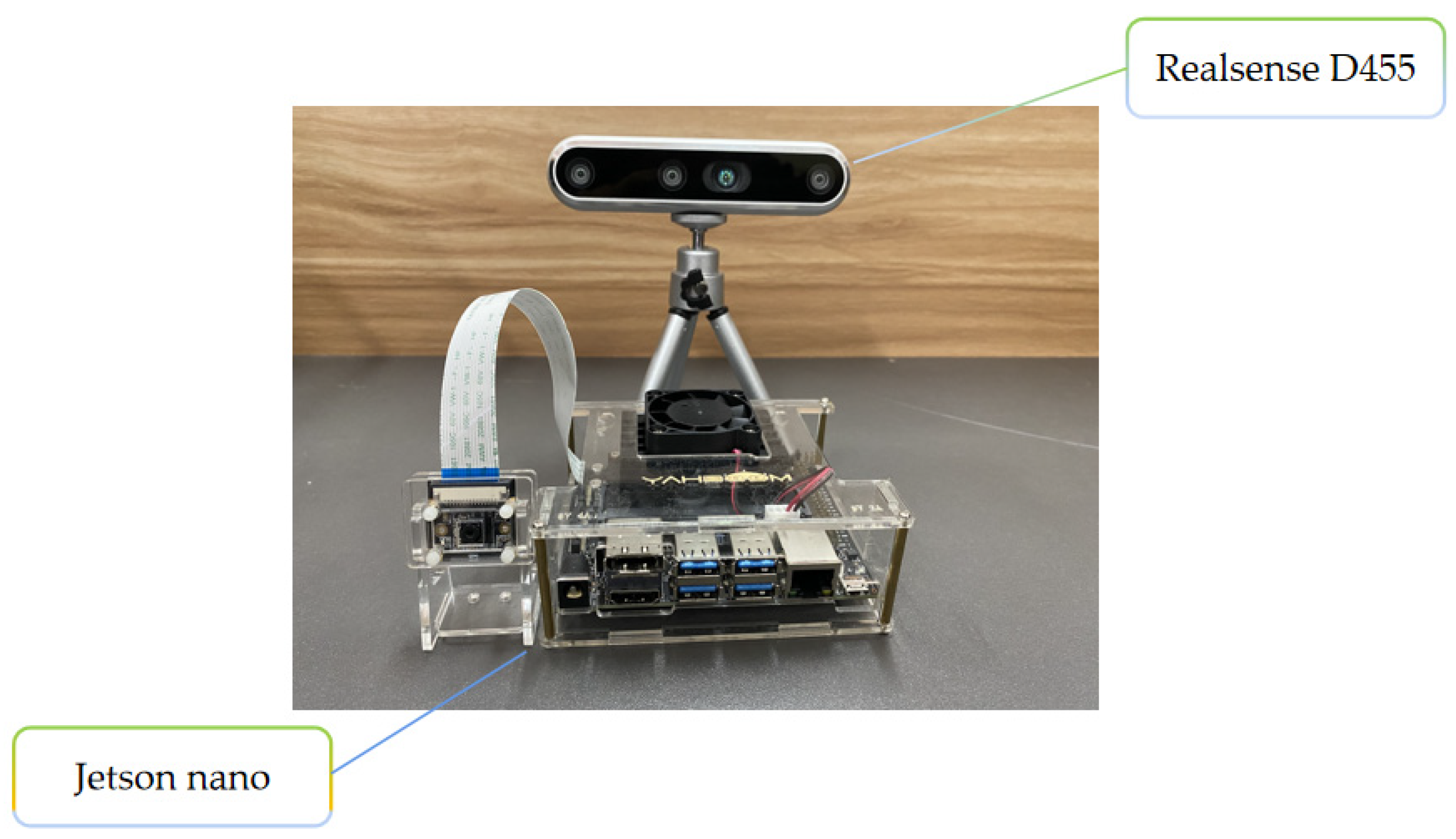

2.2. Hardware Platform

2.3. Experimental Configuration and Environment

2.4. Experimental Sample Dataset

3. Principles and Methods

3.1. Pre-Processing of the Data Set

3.2. Improved YOLOX-S Network

3.2.1. Perturbing the Activation Function Gradient

3.2.2. Nearest Neighbor Interpolation Up-Sampling of 80 × 80 Feature Map
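
As a reminder of what nearest-neighbor interpolation does, the following is a minimal pure-Python sketch, not the network's actual up-sampling layer. The function name `upsample_nearest` and the scale factor of 2 are assumptions made for illustration; with that factor, an 80 × 80 grid becomes 160 × 160.

```python
def upsample_nearest(feature_map, scale=2):
    """Nearest-neighbor up-sampling of a 2D grid given as a list of rows.

    Hypothetical helper for illustration; `scale` is an assumed integer factor.
    """
    if scale < 1:
        raise ValueError("scale must be a positive integer")
    out = []
    for row in feature_map:
        # Repeat every value `scale` times along the width ...
        wide_row = [value for value in row for _ in range(scale)]
        # ... then repeat the widened row `scale` times along the height.
        out.extend(list(wide_row) for _ in range(scale))
    return out


small = [[1, 2],
         [3, 4]]
print(upsample_nearest(small))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]

grid_80 = [[0.0] * 80 for _ in range(80)]
grid_160 = upsample_nearest(grid_80)
print(len(grid_160), len(grid_160[0]))  # 160 160
```

Each output cell simply copies the value of its nearest source cell, so the operation adds no learnable parameters, which suits a lightweight model.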

3.2.3. Transfer of Shallow Features

3.2.4. Enhancing the Loss Function

4. Results and Discussion

4.1. Evaluation of Model Performance

4.2. Analysis and Comparison of the Enhanced Model

5. Conclusions

- (a) At present, the AP of the model has not reached an ideal level. Next, the data set will be enriched to further improve the performance and accuracy of the model.

- (b) We will pre-process the depth and color image data using the camera's extrinsic and intrinsic parameters, triangulation principles, and the conversion of pixel coordinates to 3D spatial coordinates in order to localize the fruit.

- (c) The proposed algorithm meets the basic requirements for fruit picking with a large-end actuator. However, because a large number of kiwifruit must be picked, the picking sequence allocation needs further research to improve the efficiency of the manipulator.

- (d) We will analyze the correlation between data, identify other types of fruit through transfer learning, and design a general multi-class picking model for orchards.
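
The conversion of pixel coordinates to 3D coordinates mentioned in item (b) can be sketched with the standard pinhole camera model. This is a minimal illustration rather than the planned implementation: the names `Intrinsics`, `bbox_center`, and `pixel_to_camera`, as well as the numeric intrinsic values, are hypothetical, and the extrinsic transform from the camera frame to the manipulator frame is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics in pixels (values used below are made up)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def bbox_center(x_min, y_min, x_max, y_max):
    """Center pixel of a detection box."""
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def pixel_to_camera(u, v, depth, k):
    """Back-project pixel (u, v) with a metric depth into the camera frame."""
    if depth <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
u, v = bbox_center(400, 200, 480, 280)   # (440.0, 240.0)
print(pixel_to_camera(u, v, 0.5, k))     # (0.1, 0.0, 0.5)
```

In this sketch the depth value would come from the depth image at the box center; in practice a median over a small window is often more robust to missing depth pixels.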

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Junaid, M.; Shaikh, A.; Hassan, M.U.; Alghamdi, A.; Rajab, K.; Al Reshan, M.S.; Alkinani, M. Smart Agriculture Cloud Using AI Based Techniques. Energies 2021, 14, 5129.

- Liu, C.; Feng, Q.; Tang, Z.; Wang, X.; Geng, J.; Xu, L. Motion Planning of the Citrus-Picking Manipulator Based on the TO-RRT Algorithm. Agriculture 2022, 12, 581.

- Kong, J.; Wang, H.; Yang, C.; Jin, X.; Zuo, M.; Zhang, X. A Spatial Feature-Enhanced Attention Neural Network with High-Order Pooling Representation for Application in Pest and Disease Recognition. Agriculture 2022, 12, 500.

- Jiang, H.; Zhang, C.; Qiao, Y.; Zhang, Z.; Zhang, W.; Song, C. CNN feature based graph convolutional network for weed and crop recognition in smart farming. Comput. Electron. Agric. 2020, 174, 105450.

- Mesa, A.R.; Chiang, J.Y. Multi-Input Deep Learning Model with RGB and Hyperspectral Imaging for Banana Grading. Agriculture 2021, 11, 687.

- Jia, W.; Tian, Y.; Luo, R.; Zhang, Z.; Lian, J.; Zheng, Y. Detection and segmentation of overlapped fruits based on optimized mask R-CNN application in apple harvesting robot. Comput. Electron. Agric. 2020, 172, 105380.

- Fu, L.; Feng, Y.; Wu, J.; Liu, Z.; Gao, F.; Majeed, Y.; Al-Mallahi, A.; Zhang, Q.; Li, R.; Cui, Y. Fast and accurate detection of kiwifruit in orchard using improved YOLOv3-tiny model. Precis. Agric. 2020, 13, 754–776.

- Jia, W.; Zhang, Y.; Lian, J.; Zheng, Y.; Zhao, D.; Li, C. Apple harvesting robot under information technology: A review. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420925310.

- Kang, H.; Chen, C. Fruit detection and segmentation for apple harvesting using visual sensor in orchards. Sensors 2019, 19, 4599.

- Yang, L.; Luo, J.; Song, X.; Li, M.; Wen, P.; Xiong, Z. Robust Vehicle Speed Measurement Based on Feature Information Fusion for Vehicle Multi-Characteristic Detection. Entropy 2021, 23, 910.

- Zhou, J.; Jiang, P.; Zou, A.; Chen, X.; Hu, W. Ship Target Detection Algorithm Based on Improved YOLOv5. J. Mar. Sci. Eng. 2021, 9, 908.

- Liu, T.; Ma, Y.J.; Yang, W.; Li, W.; Wang, R.; Jiang, P. Spatial-temporal interaction learning based two-stream network for action recognition. Inform. Sci. 2022, 606, 864–876.

- Liu, C.; Su, J.; Wang, L.; Lu, S.; Li, L. LA-DeepLab V3+: A Novel Counting Network for Pigs. Agriculture 2022, 12, 284.

- Arefi, A.; Motlagh, A.; Mollazade, K.; Teimourlou, R. Recognition and Localization of Ripen Tomato Based on Machine Vision. Aust. J. Crop. Sci. 2011, 5, 1144–1149.

- Xiang, R.; Ying, Y.; Jiang, H.; Rao, X.; Peng, Y. Recognition of Overlapping Tomatoes Based on Edge Curvature Analysis. Trans. Chin. Soc. Agric. Mach. 2012, 43, 157–162.

- Si, Y.; Liu, G.; Gao, R. Segmentation Algorithm for Green Apples Recognition Based on K-means Algorithm. In Proceedings of the 3rd Asian Conference on Precision Agriculture, Beijing, China, 14–17 October 2009; pp. 100–105.

- Zulkifley, M.A.; Moubark, A.M.; Saputro, A.H.; Abdani, S.R. Automated Apple Recognition System Using Semantic Segmentation Networks with Group and Shuffle Operators. Agriculture 2022, 12, 756.

- Jing, W.; Leqi, W.; Yanling, H.; Yun, Z.; Ruyan, Z. On Combining DeepSnake and Global Saliency for Detection of Orchard Apples. Appl. Sci. 2021, 11, 6269.

- Henten, E.; Tuijl, B.; Hoogakker, G.J.; Weerd, M.; Hemming, J.; Kornet, J.G.; Bontsema, J. An autonomous robot for de-leafing cucumber plants in a high-wire cultivation system. Biosyst. Eng. 2006, 94, 317–323.

- Liu, C.; Zhao, C.; Wu, H.; Han, X.; Li, S. ADDLight: An Energy-Saving Adder Neural Network for Cucumber Disease Classification. Agriculture 2022, 12, 452.

- Xie, Z.; Zhang, T.; Zhao, J. Ripened Strawberry Recognition Based on Hough Transform. Trans. Chin. Soc. Agric. Mach. 2007, 38, 106–109.

- Lu, S.; Wen, Y.; Ge, W.; Peng, H. Recognition and Features Extraction of Sugarcane Nodes Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2010, 41, 190–194.

- Li, B.; Wang, M.; Li, L. In-field pineapple recognition based on monocular vision. Trans. Chin. Soc. Agric. Eng. 2010, 26, 345–349.

- Cui, Y.; Su, S.; Lyu, Z.; Li, P.; Ding, X. A Method for Separation of Kiwifruit Adjacent Fruits Based on Hough Transformation. J. Agric. Mech. Res. 2012, 34, 166–169.

- Fu, L.; Wang, B.; Cui, Y.; Gejima, Y.; Taiichi, K. Kiwifruit recognition at nighttime using artificial lighting based on machine vision. Int. J. Agric. Biol. Eng. 2015, 8, 52–59.

- Lowe, D.G. Object Recognition from Local Scale-Invariant Features. In Proceedings of the Seventh IEEE International Conference on Computer Vision (ICCV), Kerkyra, Greece, 20–27 September 1999; pp. 1150–1157.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 July 2005; pp. 886–893.

- Lin, C.; Xu, G.L.; Cao, Y.J.; Liang, C.H.; Li, Y. Improved contour detection model with spatial summation properties based on nonclassical receptive field. J. Electron. Imaging 2016, 25, 043018.

- Suh, H.K.; Hofstee, J.W.; De Ijsselmui, N.J.; Henten, E.V. Sugar beet and volunteer potato classification using Bag-of-Visual Words model, Scale-Invariant Feature Transform, or Speeded Up Robust Feature descriptors and crop row information. Biosyst. Eng. 2018, 166, 210–226.

- Mukherjee, P.; Lall, B. Saliency and KAZE features assisted object segmentation. Image Vis. Comput. 2017, 65, 82–97.

- Song, Z.; Fu, L.; Wu, J.; Liu, Z.; Li, R.; Cui, Y. Kiwifruit detection in field images using Faster R-CNN with VGG16. IFAC-PapersOnLine 2019, 52, 76–81.

- Fu, L.; Feng, Y.; Elkamil, T.; Liu, Z.; Li, R.; Cui, Y. Image recognition method of multi-cluster kiwifruit in field based on convolutional neural networks. Trans. Chin. Soc. Agric. Eng. 2018, 34, 205–211.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding Yolo Series in 2021. arXiv 2021, arXiv:2107.08430.

- Leng, H.; Tan, M.; Liu, C.; Cubuk, E.; Shi, X.; Cheng, S.; Anguelov, D. PolyLoss: A Polynomial Expansion Perspective of Classification Loss Functions. In Proceedings of the Tenth International Conference on Learning Representations (ICLR), Virtual, 25–29 April 2022.

- Chen, L. The Multi-Object Recognition Method of Cluster Kiwifruits Based on Machine Vision. Master’s Thesis, Northwest A&F University, Yangling, China, 2018.

- Cui, Y.; Su, S.; Wang, X.; Tian, Y.; Li, P.; Zhang, F. Recognition and Feature Extraction of Kiwifruit in Natural Environment Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2013, 44, 247–252.

- Fu, L.; Tola, E.; Al-Mallahi, A.; Li, R.; Cui, Y. A novel image processing algorithm to separate linearly clustered kiwifruits. Biosyst. Eng. 2019, 183, 184–195.

| Parameter | Value |
|---|---|
| Momentum | 0.937 |
| Weight_decay | 0.0005 |
| Batch_size | 45 |
| Learning_rate | 0.0001 |
| Epochs | 500 |

| Model | AP/% | Parameters | Time/ms | FPS |
|---|---|---|---|---|
| IMPROVED | 82.62 | 5,483,590 | 15.6 | 101 |
| ORI | 76.10 | 9,937,682 | 43.2 | 88 |
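
The size of the improvement in the table above is easier to read as relative changes. The short sketch below derives them from the tabulated values only; the dictionary keys and the helper `relative_reduction` are illustrative names, and ORI is taken here to be the unmodified baseline model.

```python
# Values copied from the table above (IMPROVED vs. ORI).
improved = {"ap": 82.62, "params": 5_483_590, "time_ms": 15.6}
original = {"ap": 76.10, "params": 9_937_682, "time_ms": 43.2}


def relative_reduction(before, after):
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100.0


ap_gain = improved["ap"] - original["ap"]
param_cut = relative_reduction(original["params"], improved["params"])
time_cut = relative_reduction(original["time_ms"], improved["time_ms"])

print(f"AP gain: {ap_gain:.2f} percentage points")  # AP gain: 6.52 percentage points
print(f"Parameter reduction: {param_cut:.1f}%")     # Parameter reduction: 44.8%
print(f"Time reduction: {time_cut:.1f}%")           # Time reduction: 63.9%
```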

| Model | mAP@0.5/% | FPS |
|---|---|---|
| Ours | 82.62 | 101 |
| YOLOv5s | 74.12 | 83 |
| YOLOv3 | 69.46 | 68 |
| YOLOv2 | 67.83 | 63 |
| Fast R-CNN | 80.15 | 52 |

| Reference | Description | Advantages | Disadvantages |
|---|---|---|---|
| [24] | Uses the a* channel of the L*a*b* color space for kiwifruit image segmentation | Accurate segmentation of a single fruit | Susceptible to external changes |
| [25] | Combines the least circumscribed rectangle method and the elliptic Hough transform | Accurate segmentation of a single fruit | Not ideal for fruit cluster identification |
| [35] | Improved K-means algorithm | Multi-target detection possible | Easily disturbed by shape and texture |
| [36] | Builds a color classifier | Low hardware requirements | Slow detection |
| [37] | Constructs the positional relationship between fruit and calyx in linear clusters | High speed | Does not support multi-robot collaborative operation |
| [31] | Merges a Region Proposal Network (RPN) and a Fast R-CNN network | High speed | Poor adaptability in complex situations |
| [32] | Convolutional neural network based on LeNet | Multiple fruit clusters can be recognized | High equipment requirements and a large number of parameters |
| Ours | Removes the large-object detection layer; concatenates shallow features with final features | High speed; few parameters; suitable for embedded mobile devices | AP can be further improved |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, J.; Hu, W.; Zou, A.; Zhai, S.; Liu, T.; Yang, W.; Jiang, P. Lightweight Detection Algorithm of Kiwifruit Based on Improved YOLOX-S. Agriculture 2022, 12, 993. https://doi.org/10.3390/agriculture12070993