1. Introduction

Eggplant (Solanum melongena) is a popular vegetable crop. Plant health is important for the yield of nutrient-rich eggplants. However, eggplant crops can be attacked by various diseases and pests, such as Cercospora melongenae, bacterial wilt, aphids, anthracnose fruit rot, Alternaria rot, collar rot, damping off, phytophthora blight, Phomopsis blight and fruit rot, leaf spot, and mosaic [1]. Despite continuous efforts to combat diseases in different ways, e.g., disease-tolerant varieties and targeted pest and disease control using selective chemicals, many diseases still cause significant crop losses.

An automated eggplant disease diagnostic system could provide information for the prevention and control of eggplant diseases. Several deep learning and imaging-based techniques have been proposed in the literature. In recent years, deep convolutional neural networks (CNNs) have been applied extensively in image detection and classification tasks [2,3,4,5]. High-performing deep learning-based object detection and classification models include R-CNN [6], fast R-CNN [7], the YOLO family [8], faster R-CNN [9], SSD (single-shot multi-box detector) [10], and R-FCN (region-based fully convolutional network) [11]. Owing to its strong classification performance, the use of deep learning in agriculture, especially in agricultural image processing, has increased tremendously over the years, e.g., for weed detection [12], frost detection [13], pest detection [14], agricultural robots [15], and crop disease [16,17,18,19]. However, large datasets with reliable ground truth, which are needed to build a model with high predictive performance, are often unavailable [20,21]. The same holds for plant disease datasets.

Table 1 shows an overview of different approaches used in eggplant disease recognition. Aravind et al. (2019) worked on disease classification in Solanum melongena using transfer learning (VGG16 and AlexNet) and achieved an accuracy of 96.7%. However, their accuracy dropped sharply with illumination and color variations [22]. Maggay et al. (2020) proposed a mobile-based eggplant disease recognition system using image processing techniques. However, their accuracy was only around 80% to 85% [23], which is rather low. Xie [24] worked on early blight disease of eggplant leaves with spectral and texture features using KNN (K-nearest neighbor) and AdaBoost methods. This work demonstrated that spectral and texture features are effective for early blight disease detection on eggplant leaves and achieved an accuracy above 88.46%. However, they reported that HLS images performed slightly worse, while other types of images achieved better results.

Wei et al. [25] used infrared spectroscopy to identify young eggplant seedlings infected by root-knot nematodes, using multiplicative scatter correction (MSC) and Savitzky–Golay (SG) smoothing as pretreatment methods. Their method gave 90% accuracy but worked well only in normal conditions. Sabrol et al. used a GLCM to compute the features and then an ANFIS algorithm to classify the diseases of eggplant, achieving 98.0% accuracy, but their image dataset was too small and covered only four categories of diseases [26]. Wu et al. proposed visible and near-infrared reflectance spectroscopy for early detection of disease on eggplant leaves before the symptoms appeared. They used principal component analysis along with backpropagation neural networks and achieved an accuracy of 85% [27]. Krishnaswamy et al. applied pretrained VGG16 and multiclass SVM to predict different eggplant diseases. They used different color spaces (RGB, HSV, YCbCr, and grayscale) and obtained the highest accuracy (99.4%) using RGB images [28]. However, there has been limited work on eggplant disease recognition from images. Hence, the present paper tries to fill this gap by developing an automated system based on deep CNNs with transfer learning. In addition, there is no publicly available benchmark dataset for eggplant disease classification.

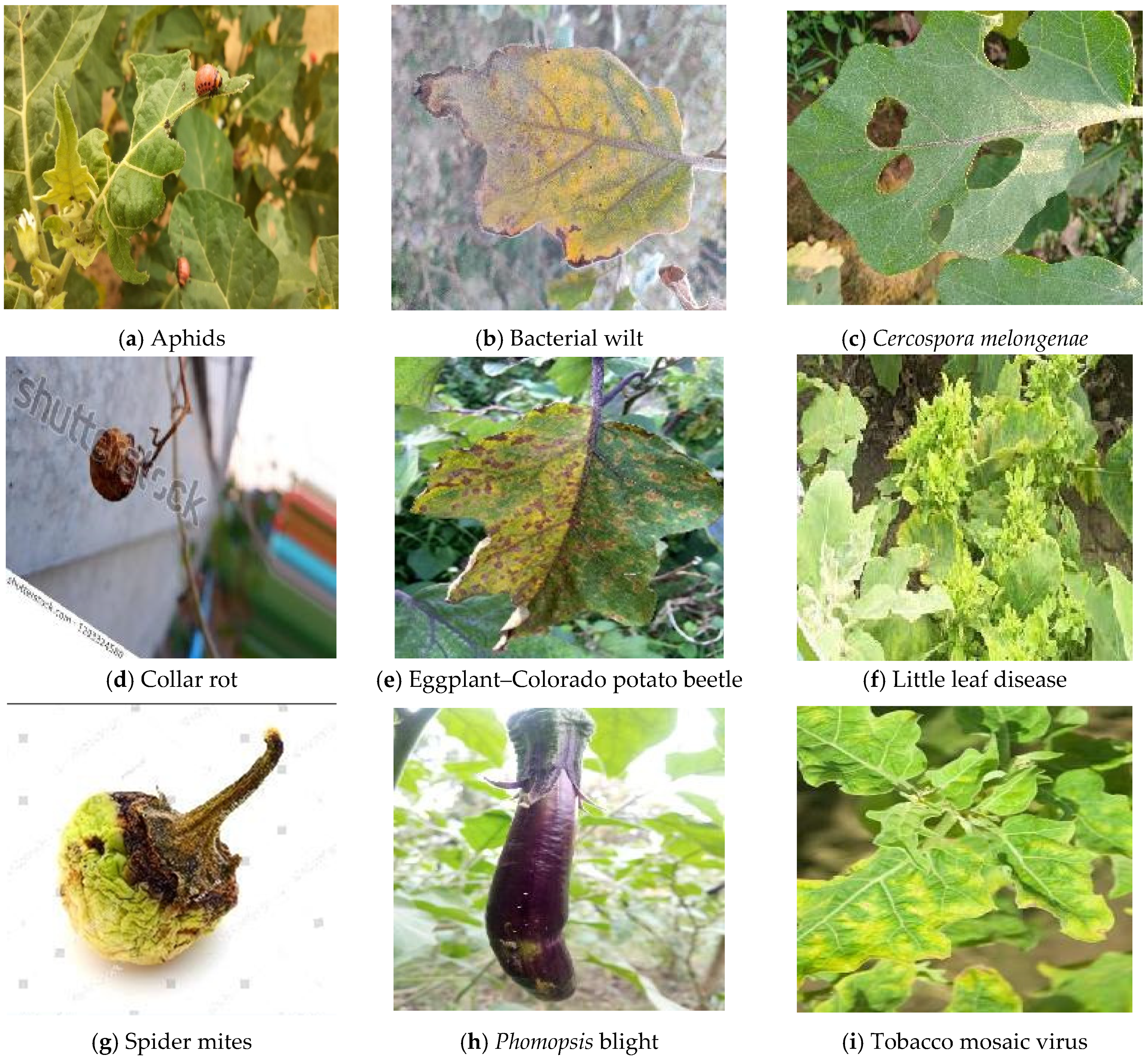

To fill this gap, an eggplant disease dataset with nine disease classes was prepared in this work (existing works cover a maximum of five classes). Transfer learning with pretrained CNN models (with fine-tuning of the structure and hyperparameter optimization) was applied to recognize the diseases. In addition, we employed a fusion of CNN-SVM and CNN-Softmax strategies with inference over the best three deep CNN models. In this study, nine diseases of eggplant (namely, aphids, bacterial wilt, Cercospora melongenae, collar rot, eggplant–Colorado potato beetle, little leaf disease, spider mites, Phomopsis blight, and tobacco mosaic virus) were considered, and we verified the effectiveness of the method. The key contributions of this paper are summarized as follows:

The (L, n) transfer feature learning method is applied before retraining with six pretrained transfer learning networks with diverse settings.

A deep CNN based on adaptive learning rate is implemented using the trend of the loss function.

Score level fusion using the CNN-SVM and CNN-Softmax methods, as well as an inference model, is developed from the best three pretrained CNN models.

A dataset containing the nine most frequent eggplant diseases in Bangladesh, which has a mostly tropical climate, is developed.
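The second contribution above, an adaptive learning rate driven by the trend of the loss function, can be illustrated with a minimal sketch. This section does not give the exact update rule, so the plateau test below and the names `adapt_learning_rate`, `patience`, `factor`, `tol`, and `min_lr` are assumptions chosen for illustration, not the authors' implementation.

```python
def adapt_learning_rate(lr, loss_history, patience=3, factor=0.5,
                        tol=1e-4, min_lr=1e-6):
    """Return a (possibly reduced) learning rate from the recent loss trend.

    Assumed rule: if the best loss of the last `patience` epochs is not at
    least `tol` better than the best loss seen before them, the loss is
    treated as having plateaued and the rate is multiplied by `factor`.
    """
    if len(loss_history) <= patience:
        return lr  # not enough history to judge a trend
    best_before = min(loss_history[:-patience])
    recent_best = min(loss_history[-patience:])
    if recent_best > best_before - tol:
        # No meaningful improvement: shrink the step, but not below min_lr.
        return max(lr * factor, min_lr)
    return lr  # loss is still decreasing: keep the current rate


# Usage: call once per epoch with the validation (or training) loss so far.
plateaued = [1.0, 0.8, 0.7, 0.7, 0.7, 0.7]
improving = [1.0, 0.9, 0.8, 0.7, 0.6, 0.5]
lr_after_plateau = adapt_learning_rate(0.01, plateaued)
lr_while_improving = adapt_learning_rate(0.01, improving)
```

With these inputs the rate is halved for the plateaued history and left unchanged for the improving one.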

3. Results

To investigate the performance of the six feature extraction methods after applying transfer feature learning (L, n) and an adaptive learning rate, the t-SNE method was used to visualize the feature maps. Figure 3 presents the maps of the features. The maps in Figure 3a–c indicate that the classes were almost separable in the two-dimensional space, in contrast to the other methods. The results suggest that the features extracted using Inception V3, VGG19, and MobileNet were more discriminative than those of the other models. Hence, these three models were selected for fusion and inference to generate the final output.

To explore the effect of score level fusion by CNN-Softmax and CNN-SVM from the features extracted by deep CNNs, we conducted a comparative experiment and quantitative analysis in terms of accuracy. The accuracy of each of the top three models (Inception V3, MobileNet, and VGG19) after applying score level fusion is shown in Table 3, Table 4 and Table 5. We obtained 96.11%, 93.74%, and 92.11% accuracy for Inception V3, MobileNet, and VGG19, respectively. In addition, the confusion matrices in Table 3, Table 4 and Table 5 (generated after the fusion of CNN-Softmax and CNN-SVM) show the interclass variability in disease recognition accuracy of the three selected CNNs. In all cases, the three least accurately classified diseases were Cercospora melongenae, eggplant–Colorado potato beetle, and little leaf disease. The accuracy for these three classes was approximately 5–10% lower than the mean accuracy. The recognition accuracy of Cercospora melongenae and eggplant–Colorado potato beetle was lower due to their similar structure and features. Furthermore, the size and shape of the leaves, variation in illumination, and poor resolution may have been the reasons for the lower accuracy when classifying little leaf disease.
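As a rough illustration of score level fusion, the sketch below combines per-class softmax probabilities with per-class SVM decision scores for one image. The min-max normalization, the weighted-sum rule, and the names `min_max`, `fuse_scores`, `predict`, and `weight` are assumptions made for this example; the section does not state which normalization or combination rule was used.

```python
def min_max(scores):
    """Rescale a list of scores to [0, 1] so two classifiers are comparable."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]  # all scores equal: no class preferred
    return [(s - lo) / (hi - lo) for s in scores]


def fuse_scores(softmax_scores, svm_scores, weight=0.5):
    """Weighted-sum fusion of two per-class score vectors (assumed rule)."""
    if len(softmax_scores) != len(svm_scores):
        raise ValueError("both classifiers must score the same classes")
    a = min_max(softmax_scores)
    b = min_max(svm_scores)
    return [weight * x + (1.0 - weight) * y for x, y in zip(a, b)]


def predict(fused):
    """Index of the class with the highest fused score."""
    return max(range(len(fused)), key=fused.__getitem__)


# Usage with three hypothetical classes: softmax slightly prefers class 0,
# while the SVM strongly prefers class 1.
softmax_scores = [0.5, 0.4, 0.1]
svm_scores = [-1.0, 2.0, 0.0]
fused = fuse_scores(softmax_scores, svm_scores)
label = predict(fused)
```

Here the fused decision is class 1: the SVM's strong preference outweighs the narrow softmax margin, which is the kind of disagreement fusion is meant to resolve.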

To examine whether the model was overfitting, we plotted the training and validation accuracy and loss curves. Figure 4, Figure 5 and Figure 6 show the accuracy and loss during training for the three well-known transfer learning models using CNN-Softmax and CNN-SVM. Initially, the accuracy was low; however, it gradually increased and finally reached 99% on the training samples in most cases, while the loss gradually decreased. Furthermore, the graphs show that the training and validation accuracy remained close to each other and increased gradually with the number of epochs, and the loss followed a correspondingly decreasing trend. In these graphs, it can be observed that CNN-Softmax performed better than CNN-SVM. In addition, Inception V3 performed better than the other CNNs.

Moreover, the result was further improved when the fusion method was applied. Table 6 describes the evaluation results of the proposed framework and several other state-of-the-art transfer learning models using CNN-Softmax and CNN-SVM. Individual accuracy improved slightly when the fusion method was applied, and the result improved further after applying the inference method. In addition, Table 7 presents the F1-score, specificity, and sensitivity values for the nine diseases after applying the fusion model using Inception V3, which performed best in this work.
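For reference, the per-class sensitivity, specificity, and F1-score can be derived from a confusion matrix in a one-vs-rest fashion. The sketch below uses the standard definitions and assumes rows are true classes and columns are predicted classes; the function name `per_class_metrics` and the two-class matrix are illustrative only and are not taken from the paper's tables.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics for each class of a square confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k missed
        fp = sum(cm[r][k] for r in range(n)) - tp  # others labelled as k
        tn = total - tp - fn - fp
        sensitivity = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        denom = precision + sensitivity
        f1 = 2 * precision * sensitivity / denom if denom else 0.0
        results.append({"sensitivity": sensitivity,
                        "specificity": specificity,
                        "f1": f1})
    return results


# Usage with a made-up two-class matrix (not data from this study).
example_cm = [[8, 2],
              [1, 9]]
metrics = per_class_metrics(example_cm)
```

For class 0 of this toy matrix, sensitivity is 8/10, specificity is 9/10, and the F1-score is 16/19.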

As shown in Figure 7, some images of infected eggplants were not correctly classified by some methods due to similarities in shape or blur. When the inference rule was applied, such misclassifications could be avoided, which improved the accuracy. Unsurprisingly, the methods were confused between categories with similar visual characteristics. Such images were likely to be correctly classified by only two or three CNNs and misclassified by the others; the inference rule was well suited to these scenarios. As shown in Figure 7f,g, the deep learning techniques failed to correctly detect the disease due to low resolution and high noise in the data. This was mainly due to the limitations of deep networks against adversarial variations. While preprocessing of the data can address these problems to some extent, a full investigation is needed, which will be performed in a future study.
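The inference rule is not spelled out in this section. One plausible reading, given that it helps when only two of the three CNNs are correct, is a majority vote over the three selected models. The sketch below implements that reading and should be taken as an assumption: the function name `infer_by_majority` and the fallback to the most confident model when all three disagree are hypothetical choices, not the authors' stated rule.

```python
from collections import Counter


def infer_by_majority(predictions, confidences):
    """Combine the labels predicted by several models for one image.

    predictions: one predicted label per model.
    confidences: the matching top score of each model, used only as an
                 assumed tie-breaker when no label has a strict majority.
    """
    if len(predictions) != len(confidences) or not predictions:
        raise ValueError("need one confidence per prediction")
    label, votes = Counter(predictions).most_common(1)[0]
    if votes * 2 > len(predictions):
        return label  # strict majority, e.g. two of three models agree
    # No majority: fall back to the single most confident model.
    best = max(range(len(predictions)), key=lambda i: confidences[i])
    return predictions[best]


# Usage: two models agree, so their label wins despite the third model's
# higher confidence.
agreed = infer_by_majority(["aphids", "aphids", "spider mites"],
                           [0.60, 0.70, 0.90])
# All three disagree, so the most confident prediction is returned.
split = infer_by_majority(["aphids", "collar rot", "spider mites"],
                          [0.20, 0.90, 0.50])
```

A vote of this kind cannot recover cases such as those attributed to low resolution and noise, where every model is wrong.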

Moreover, to check the robustness of our system, we examined the comparative performance of the VGG16, Inception V3, VGG19, MobileNet, ResNet50, and NasNetMobile methods on rotated (both clockwise and anticlockwise) images. Here, Inception V3 (9°) and MobileNet (8.5°) performed better than the other transfer learning methods. The computation time of the investigated deep CNNs is shown in Table 8. The computation time of the fusion method was slightly longer than that of the others; however, it was deemed acceptable when considering accuracy.

4. Discussion

The identification and recognition of plant diseases using deep learning techniques have recently made tremendous progress. These techniques have been widely used due to their high speed and accuracy. However, few studies have been conducted on eggplant disease recognition, and these works mostly focused on the detection or recognition of a small number of disease categories [24,25,27]. To fill this gap, this paper proposed deep learning-based identification of leaf blight and fruit rot diseases of eggplant according to nine distinct categories through two steps of improvement. In the first step, feature extraction, transfer feature learning with an adaptive learning rate was applied to improve the accuracy, as well as to reduce overfitting. In the second step, score level fusion and inference strategies were implemented to minimize the false-positive rate and increase the genuine acceptance rate.

To check the robustness of our system, we tested it under challenging conditions such as different image rotations. The model showed substantial improvement over other state-of-the-art deep learning methods.

The application of the proposed system has many benefits. Firstly, rural farmers can apply the treatment appropriate to the specific disease rather than a generic one. Secondly, the production rate can be increased, thus economically benefitting farmers. Moreover, human effort and time can be reduced. Although the method achieved high success rates in the recognition of eggplant diseases, it has some limitations. The system is relatively slow. Novel network architectures need to be investigated for time-efficient and low-resource systems (e.g., for handheld consumer devices).