Weed Identification in Soybean Seedling Stage Based on Optimized Faster R-CNN Algorithm

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

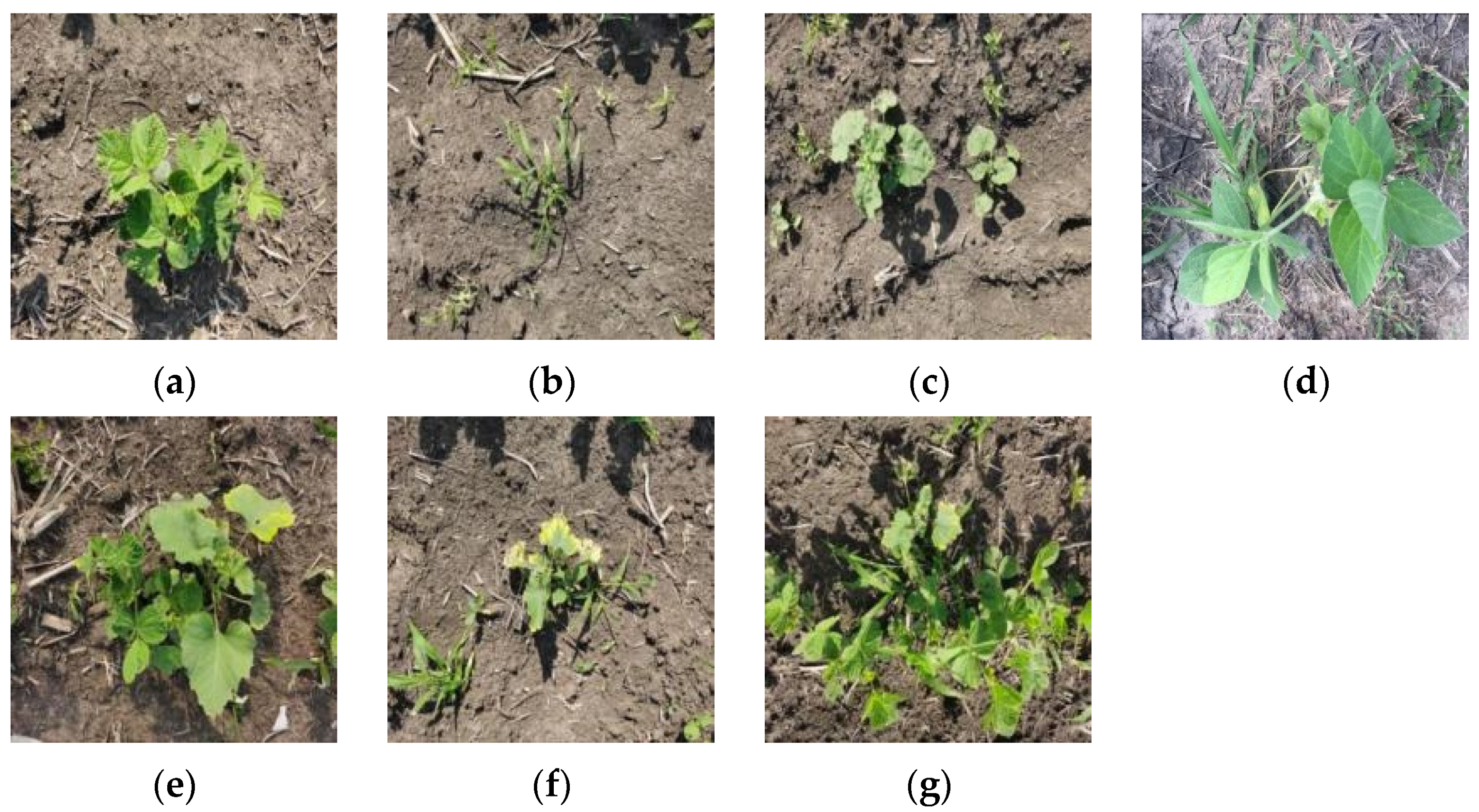

2.1.1. Data Acquisition

2.1.2. Data Pre-Processing

2.2. Methods

2.2.1. Introduction to the Faster R-CNN Target Detection Algorithm

2.2.2. Faster R-CNN Target Detection Algorithm Optimization

Feature Extraction Network Selection

Embedded Attention Mechanism Module

Dropout Algorithm Optimization

2.2.3. Comparison of Weed Detection Methods at the Seedling Stage

2.2.4. Test Platform

2.2.5. Network Model Training

2.2.6. Trial Evaluation Indicators

3. Results

3.1. Impact of Three Feature Extraction Networks (ResNet50, VGG16, VGG19) on the Model

3.2. Effect of Adding CBAM on Model Accuracy

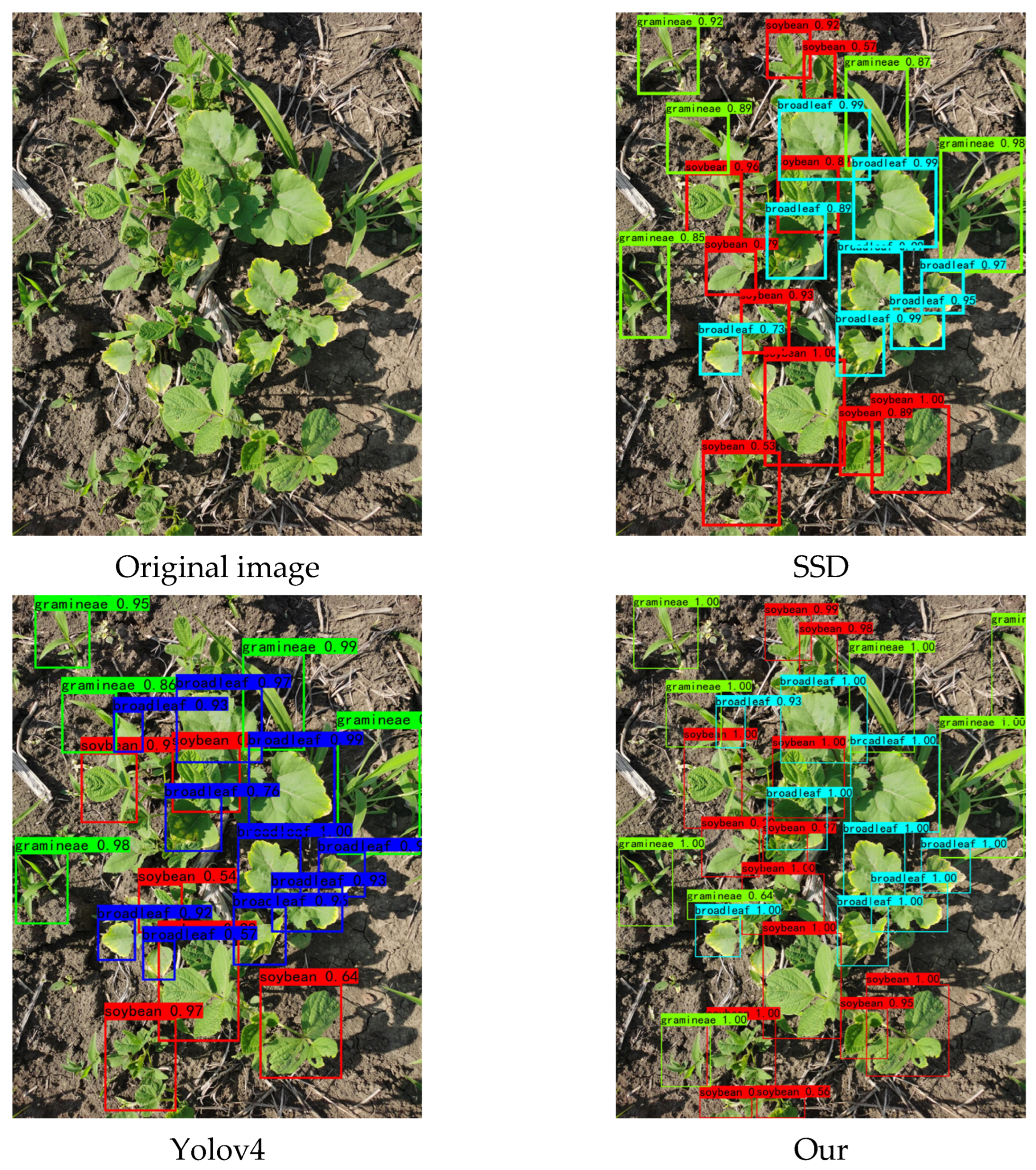

3.3. Comparison with Other Detection Model Algorithms

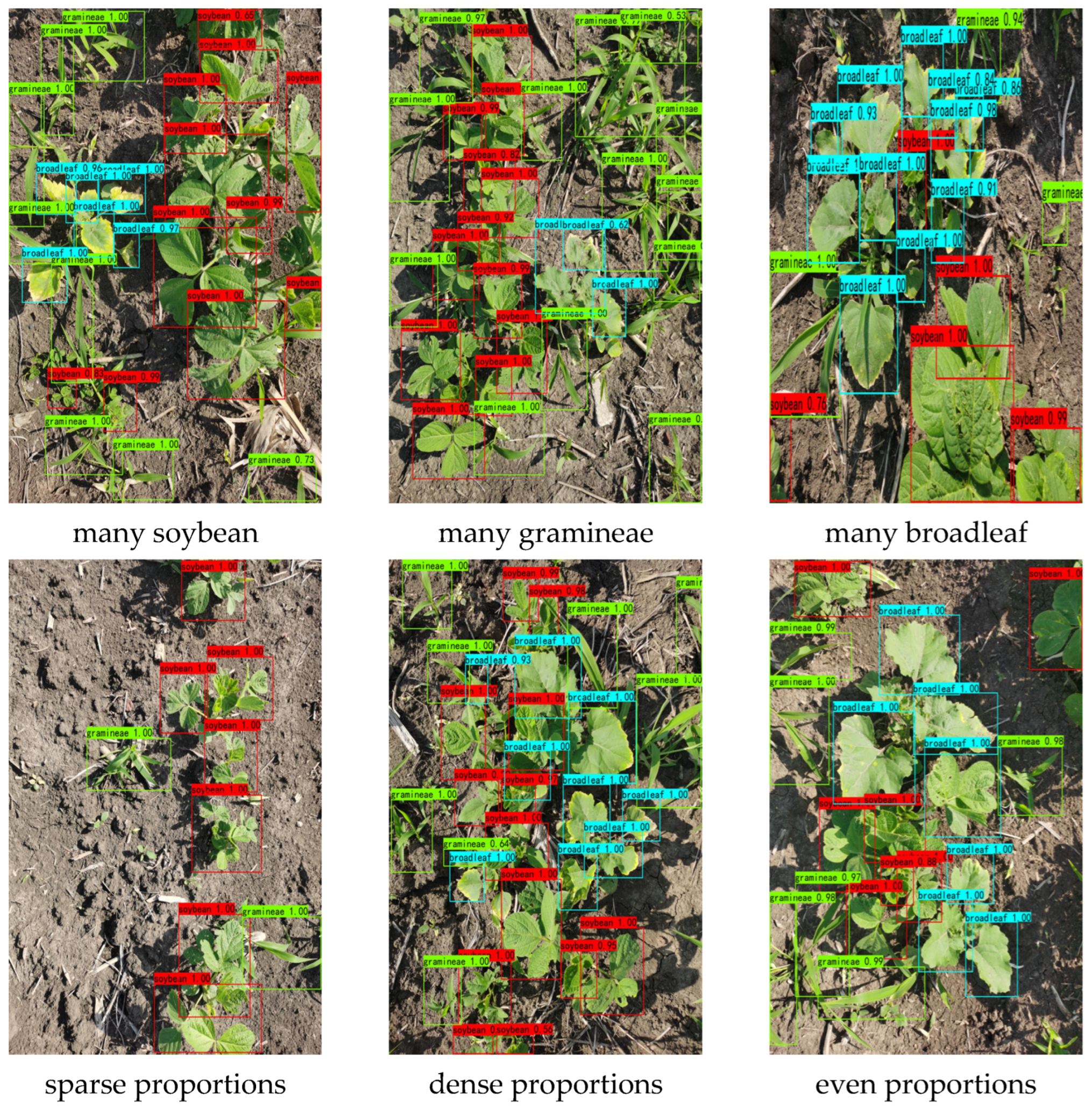

3.4. Application of Weed Detection at Seedling Stage in the Natural Environment

4. Discussion

4.1. Deep Learning-Based Target Detection

4.2. Disadvantages and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Configuration | Parameter |
|---|---|
| Operating System | Windows 10 Professional Workstation Edition |
| CPU | Xeon Gold 6248R × 2 (48 cores, 3 GHz) |
| GPU | NVIDIA RTX4000 |
| Acceleration Environment | CUDA 10.0, cuDNN 7.4.1.5 |
| TensorFlow | 1.13.2 |
| Python | 3.7 |
| Data Annotation Tool | LabelImg |

| Feature Extraction Network | Soybean AP/% | Grass Weed AP/% | Broadleaf Weed AP/% | mAP/% | Average Recognition Time/ms |
|---|---|---|---|---|---|
| ResNet50 | 95.68 | 89.38 | 95.29 | 93.45 | 338 |
| VGG16 | 95.46 | 89.46 | 95.62 | 93.51 | 330 |
| VGG19 | 95.34 | 89.58 | 95.71 | 93.55 | 316 |
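The mAP column in the table above appears to be the unweighted mean of the three per-class AP values. The table itself does not state the definition, so the sketch below treats that as an assumption and simply checks it against the reported numbers; the function name `mean_average_precision` is illustrative, not taken from the paper.

```python
# Per-class AP values (%) copied from the table above,
# in the order: soybean, grass weed, broadleaf weed.
PER_CLASS_AP = {
    "ResNet50": (95.68, 89.38, 95.29),
    "VGG16": (95.46, 89.46, 95.62),
    "VGG19": (95.34, 89.58, 95.71),
}

# mAP values (%) as reported in the same table.
REPORTED_MAP = {"ResNet50": 93.45, "VGG16": 93.51, "VGG19": 93.55}


def mean_average_precision(per_class_ap):
    """Unweighted mean of per-class AP values (assumed definition of mAP)."""
    return sum(per_class_ap) / len(per_class_ap)


for backbone, aps in PER_CLASS_AP.items():
    computed = mean_average_precision(aps)
    reported = REPORTED_MAP[backbone]
    print(f"{backbone}: computed mAP = {computed:.2f}, reported = {reported:.2f}")
```

Under this assumption the computed means agree with the reported mAP values to within about 0.01 percentage points, which is consistent with rounding.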

| Methods | F1 | R/% | P/% | mAP/% | Average Recognition Time/ms |
|---|---|---|---|---|---|
| Faster R-CNN (VGG19) | 0.86 | 93.22 | 80.66 | 93.55 | 316 |
| Faster R-CNN (VGG19) + CBAM (Block4) | 0.92 | 97.57 | 87.26 | 98.94 | 350 |
| Faster R-CNN (VGG19) + CBAM (Block5) | 0.94 | 98.20 | 89.40 | 99.05 | 347 |
| Faster R-CNN (VGG19) + CBAM (Block4,5) | 0.95 | 98.96 | 90.20 | 99.16 | 336 |
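The F1 column can be related to the P and R columns through the standard harmonic-mean definition, F1 = 2PR / (P + R). The sketch below recomputes F1 from the precision and recall values in the table above; it assumes the paper uses this standard definition, and the helper name `f1_score` and the shortened row labels are illustrative only.

```python
# (precision %, recall %, reported F1) copied from the table above.
ROWS = {
    "Faster R-CNN (VGG19)": (80.66, 93.22, 0.86),
    "+ CBAM (Block4)": (87.26, 97.57, 0.92),
    "+ CBAM (Block5)": (89.40, 98.20, 0.94),
    "+ CBAM (Block4,5)": (90.20, 98.96, 0.95),
}


def f1_score(precision_pct, recall_pct):
    """Harmonic mean of precision and recall, both given in percent."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    if p + r == 0:
        return 0.0  # avoid division by zero when both are zero
    return 2 * p * r / (p + r)


for method, (precision, recall, reported) in ROWS.items():
    computed = f1_score(precision, recall)
    print(f"{method}: computed F1 = {computed:.3f}, reported = {reported:.2f}")
```

With these inputs the recomputed F1 values fall within about 0.01 of the reported ones; small differences may come from rounding of P and R in the table.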

| Indicator | Faster R-CNN + CBAM (VGG19) | SSD (VGG16) | YOLOv4 (CSPDarkNet53) |
|---|---|---|---|
| Soybean identification accuracy/% | 99.02 | 96.99 | 97.83 |
| Grass weed identification accuracy/% | 99.30 | 95.39 | 97.00 |
| Broadleaf weed identification accuracy/% | 99.16 | 97.90 | 98.21 |
| F1 | 0.95 | 0.91 | 0.94 |
| R/% | 98.96 | 94.28 | 91.94 |
| P/% | 90.20 | 87.79 | 96.64 |
| mAP/% | 99.16 | 96.92 | 97.92 |
| Average recognition time/ms | 336 | 450 | 386 |
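To make the speed and accuracy trade-off in the table above easier to read, the average recognition time can be converted into images per second and the mAP gap expressed relative to the optimized Faster R-CNN. This is a small arithmetic sketch over the table values only; the dictionary layout and the helper `images_per_second` are assumptions made for illustration.

```python
# mAP (%) and average recognition time (ms) copied from the table above.
MODELS = {
    "Faster R-CNN + CBAM (VGG19)": {"map": 99.16, "time_ms": 336},
    "SSD (VGG16)": {"map": 96.92, "time_ms": 450},
    "YOLOv4 (CSPDarkNet53)": {"map": 97.92, "time_ms": 386},
}

BASELINE = "Faster R-CNN + CBAM (VGG19)"


def images_per_second(time_ms):
    """Convert an average per-image time in milliseconds to throughput."""
    return 1000.0 / time_ms


for name, metrics in MODELS.items():
    # Differences are expressed as baseline minus the compared model.
    map_gap = MODELS[BASELINE]["map"] - metrics["map"]
    time_gap = metrics["time_ms"] - MODELS[BASELINE]["time_ms"]
    print(
        f"{name}: {images_per_second(metrics['time_ms']):.2f} images/s, "
        f"mAP lower than baseline by {map_gap:.2f} points, "
        f"{time_gap} ms slower per image"
    )
```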

| Category | Accuracy Rate/% | Average Accuracy Rate/% | Average Recognition Time/ms |
|---|---|---|---|
| Soybean | 90.06 | 92.69 | 590 |
| Grass weed | 87.96 | | |
| Broadleaf weed | 92.69 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, X.; Cui, J.; Liu, H.; Han, Y.; Ai, H.; Dong, C.; Zhang, J.; Chu, Y. Weed Identification in Soybean Seedling Stage Based on Optimized Faster R-CNN Algorithm. Agriculture 2023, 13, 175. https://doi.org/10.3390/agriculture13010175
