1. Introduction

The pear leaf blister moth (Leucoptera malifoliella (O. Costa, 1836)) (Lepidoptera: Lyonetiidae) is one of the most economically important pests in apple production [1]. It occurs in orchards in Europe and Asia [2]. It is a multivoltine species [3], and due to climate change, it is becoming more common and its populations are growing [4].

L. malifoliella is a typical physiological pest: its larvae penetrate the leaf and feed on the mesophyll, leaving the epidermis untouched and forming circular mines. A single mine averages 0.88–1.04 cm² and represents a loss of 3.4–4.6% of the leaf surface. A high number of mines (more than 40 per leaf) causes premature defoliation in August or early September [1]. Early defoliation has a negative effect on bud differentiation, while a severe leaf infestation directly reduces the size, yield, and quality of apple fruits [5]. Because the pest is small and hides, the overwintering population is usually noticed too late, which is the main reason why damage in apple orchards is not detected in time [6]. Chemical treatments applied too late give only poor results [5].

For successful control of L. malifoliella, the first-generation flight and the onset of oviposition must be monitored. The embryonic development of the larvae, their penetration into the leaf, and the initial development of mines up to 2 mm in diameter must also be monitored [5]. Synthetic pheromones have proven useful for monitoring L. malifoliella [4]. The most favorable time for insecticide treatment is the appearance of first-generation mines. The critical threshold is considered to be two to three mines per leaf; such an infestation enables the further development of L. malifoliella, leading to economically significant damage [7,8]. Because mines are difficult to notice due to their small size, more precise and faster monitoring methods are needed to react in time and prevent significant damage.
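The two-to-three-mines-per-leaf threshold can be expressed as a simple decision rule. The sketch below is a minimal illustration that assumes mines are counted on a sample of leaves and averaged. The sampling scheme, the function names, and the choice of 2.0 as the threshold (the lower end of the cited range) are illustrative assumptions, not the authors' procedure.

```python
from statistics import mean

def mines_per_leaf(counts):
    """Mean number of first-generation mines per sampled leaf."""
    if not counts:
        raise ValueError("at least one leaf must be sampled")
    return mean(counts)

def treatment_warranted(counts, threshold=2.0):
    """True when the mean mine density reaches the action threshold.

    The default of 2.0 mines per leaf is an illustrative choice at the lower
    end of the two-to-three range cited in the text.
    """
    return mines_per_leaf(counts) >= threshold

# Hypothetical field sample: mines counted on ten leaves.
sample = [0, 1, 3, 2, 4, 0, 2, 3, 1, 5]
print(mines_per_leaf(sample), treatment_warranted(sample))
```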

Decision thresholds based on pest catches are the basis of integrated pest management (IPM) and are used to optimize the timing of insecticide treatments. However, IPM requires frequent visits to often hard-to-reach orchards, and checking traps only weekly can lead to late interventions and ineffective control [9]. Due to climate change, agricultural systems are exposed to increasing pressures, and significant changes have been recorded in pest phenology [10]. Changes in air temperature directly affect population dynamics and the relationship with natural enemies. They also increase pest reproduction, which results in more generations and, consequently, greater damage. Given the unpredictability of pest occurrence and the impracticality of existing monitoring methods, it is crucial to develop more sophisticated monitoring methods [11]. Therefore, automatic pest monitoring systems have recently been developed intensively [12,13]. Automatic pest monitoring is particularly important in crops grown over large areas, such as apples.

Apple (Malus domestica Borkh., 1803) is one of the most economically important fruit crops worldwide, and its fruits are consumed fresh or processed throughout the year [14]. It is grown on 4.6 million hectares, and global production in 2020 was 86.4 million tons [15]. The apple is the most commonly consumed fruit in Croatia and accounts for 36% of the country's total fruit production [16]. Despite its importance, pest management and early pest monitoring methods in apple production are mostly outdated and unreliable. Therefore, automatic systems for monitoring apple pests have been developed [17,18], mostly for the most dangerous apple pest, the codling moth (Cydia pomonella (Linnaeus, 1758)) (Lepidoptera: Tortricidae) [19,20].

L. malifoliella is also a significant apple pest, and because all of its developmental stages are small, it is difficult to detect with classical monitoring methods. Therefore, automatic systems for monitoring this pest also need to be developed and deployed.

Deep learning is increasingly used for the timely automatic detection of insect pests from photos [21]. These works can serve as a basis for developing automatic systems to monitor other important pests and their damage. For example, Sabanci et al. [22] used two different deep learning architectures to detect sunn pest-damaged wheat grains and achieved classification accuracies of 98.50% and 99.50%. Zhong et al. [23] used neural networks to detect flying insects, with a detection accuracy above 92%. Moreover, Sütő [24] developed a smart trap using a deep learning-based method for insect counting and proposed solutions to problems such as “lack of data” and “false insect detection”. El Massi et al. [25] used a neural network and a support vector machine as classifiers to detect and classify damage caused by leaf miners, with more than 91% accuracy. Grünig et al. [26] presented a deep learning-based system for monitoring damage caused by L. malifoliella. Their neural network performed well in categorizing different damage classes from 52,322 leaf photos taken both under standardized conditions and in the field. However, for such a system to be fully effective in the timely detection of pests, it must also monitor the number of adults in addition to leaf damage, as this allows an earlier response and damage prevention.

In addition, Ding and Taylor [19] used deep learning techniques to develop a fully automated codling moth monitoring system. Their artificial neural networks were trained to recognize adult codling moths from 177 collected red-green-blue (RGB) photos. The model was effective, but a larger data set is needed to make the system more reliable and successful in detection. Albanese et al. [27] developed a smart trap for monitoring codling moth using various deep learning algorithms. The advantage of this system is that photos are processed inside the trap, so the large image data are reduced to a small message. Only the detection results are sent to the external server, and the limited energy available in the field is used optimally. However, such systems also need to be developed for monitoring L. malifoliella so that they can be used in practice under field conditions.

Most deep learning models are based on artificial neural networks (ANNs) [28,29], which have recently been applied in various fields, including agriculture [30]. Accordingly, many researchers have adopted ANN-based detection methods for pest monitoring in agriculture [21,31]. Beyond insect detection, artificial neural networks and their variants have been shown to be the most effective methods for object detection and recognition [32].

There are no particularly effective environmentally friendly control measures for L. malifoliella, and its management relies mostly on chemical control [2]. It is therefore extremely important to use monitoring methods that are as precise as possible, so that chemical insecticides are limited to targeted and thus effective applications.

Therefore, this paper makes two main contributions. First, a model based on artificial neural networks was developed for the early detection of L. malifoliella and its damage on apple leaves; the model is accurate, precise, fast, and requires minimal data preprocessing. Second, a Pest Monitoring Device (PMD) for monitoring L. malifoliella individuals and a Vegetation Monitoring Device (VMD) for detecting its damage were developed. The system detects pests and their damage from photos taken by the monitoring devices in the field. In these devices, data are processed on the node, which lowers energy consumption, extends the lifetime of the entire system, and reduces the need for human intervention. Automatic pest monitoring is still in its infancy, and this system is an innovative solution for faster and more reliable pest monitoring.

The hypothesis of this study is that artificial neural networks and the proposed monitoring devices are a reliable tool for monitoring pear leaf blister moth individuals and their damage. They are considered reliable if the detection accuracy exceeds 90% compared with visual inspection by an expert entomologist.

2. Materials and Methods

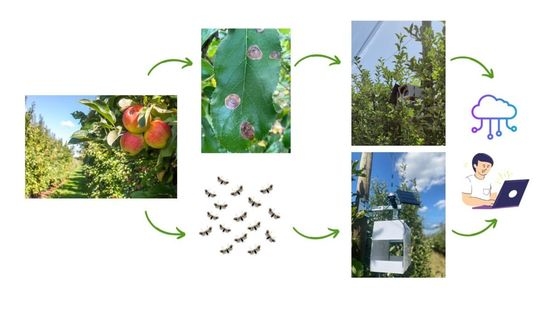

The study is divided into four phases, which are shown in Figure 1. The first phase involves photo acquisition for training the ANNs. In the second phase, the data were processed and the photos labelled. In the third phase, the ANNs were trained to build analytical models for the automatic detection of L. malifoliella and its damage. In the fourth and final phase, monitoring devices were built to implement the developed models for monitoring pests and damage (Figure 1).

2.1. Data Acquisition

Photo collection was set up and structured to obtain enough photos for all defined observation classes, so that two EfficientDet object detection (ANN) models could be trained [33]. Only two ANN models were used in this work, each identifying a separate class. The two classes were: 1. the pest, L. malifoliella; and 2. the damage caused by this pest (leaf mines). Which classes can be observed depends on the quality of the photos, the accuracy of the detection methods, and the economic significance of the classes. Before a class was selected for observation, the visibility of its characteristics under different weather conditions was examined.

Between April and September 2022, 400 photos were taken for each model in apple orchards in Zagreb County, Croatia (Petrovina Turopoljska, Mičevec, and Staro Čiče). These were photos of adhesive pads with L. malifoliella adults and photos of mines on apple leaves. Petrovina Turopoljska and Mičevec are orchards that use IPM strategies, whereas Staro Čiče is an organic orchard. L. malifoliella adults were caught on adhesive pads in traditional Delta traps baited with species-specific pheromone lures (Csalomon®). Traps were inspected, adhesive pads were changed, and photos were taken weekly.

For data acquisition, an RGB camera was mounted in a polycarbonate housing that mimics the housing of the Pest Monitoring Device (PMD). This ensured that shooting conditions were similar to those the PMD would encounter later. The adhesive pads were transferred from the Delta traps to this housing. Photos of L. malifoliella adults and apple leaves were taken manually in the field, in a real production setting and under different lighting conditions (sunny, cloudy, etc.), in order to create the models for automatic pest detection. The RGB cameras for both models were connected to a Raspberry Pi 4 single-board computer (SBC), which controlled the camera. Photos of L. malifoliella adults from the Delta pheromone traps were taken weekly, in parallel with the vegetation photos. To detect mines on the leaves, the central part of the apple tree was photographed from a distance of 50 cm.

2.2. Data Preparation

The collected photos, both of adhesive pads with pests and of vegetation, were processed with the LabelImg program. Entomology experts identified L. malifoliella adults (Figure 2) and their damage on apple leaves (Figure 3) and marked them manually with bounding boxes.

To build a model for detecting L. malifoliella adults in the PMD, three classes were defined and annotated:

- MINER, i.e., L. malifoliella adults;
- INSECT, i.e., other insects;
- OTHER, i.e., other objects (e.g., remains of leaves, branches, etc.).

The model for detecting mines on apple leaves uses two classes taken from a 32-class model that is still under development:

- MINES, i.e., damage caused by L. malifoliella;
- OTHER, i.e., other objects (e.g., healthy leaves, nutrient deficiency on leaves, fruit coloration, etc.).

Several less important subclasses in both models were merged into the class OTHER to better distinguish them from the important classes listed above. In total, 1880 annotations of the class MINES (damage) and 4700 annotations of the class MINER (pests) were used for training the ANNs. The annotations are stored in the PascalVOC format, an XML structure. Thus, the images from the PMD consist of the segments shown in Figure 4, while the images from the VMD consist of the segments shown in Figure 5.
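Because the annotations are stored as PascalVOC XML, per-class totals such as the 1880 MINES and 4700 MINER boxes can be tallied with Python's standard library. This is a minimal sketch that assumes one XML file per image, with the object and name elements that LabelImg writes in PascalVOC mode. The directory layout, the function names, and the example annotation are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from pathlib import Path

def count_voc_objects(xml_text):
    """Count bounding boxes per class name in one PascalVOC annotation."""
    root = ET.fromstring(xml_text)
    return Counter(obj.findtext("name") for obj in root.iter("object"))

def count_directory(annotation_dir):
    """Aggregate class counts over every *.xml file in a directory."""
    total = Counter()
    for path in Path(annotation_dir).glob("*.xml"):
        total += count_voc_objects(path.read_text(encoding="utf-8"))
    return total

# Hypothetical annotation of one adhesive-pad image.
EXAMPLE = """<annotation>
  <filename>pad_001.jpg</filename>
  <object><name>MINER</name>
    <bndbox><xmin>10</xmin><ymin>12</ymin><xmax>40</xmax><ymax>35</ymax></bndbox>
  </object>
  <object><name>INSECT</name>
    <bndbox><xmin>100</xmin><ymin>80</ymin><xmax>150</xmax><ymax>130</ymax></bndbox>
  </object>
  <object><name>MINER</name>
    <bndbox><xmin>200</xmin><ymin>210</ymin><xmax>228</xmax><ymax>236</ymax></bndbox>
  </object>
</annotation>"""

print(count_voc_objects(EXAMPLE))
```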

2.3. Creating Analytical Models for Automatic Detection of L. malifoliella and Its Damage

Automatic object detection was performed by an artificial intelligence algorithm. The annotated images were used to create two ANNs. ANNs are computing systems inspired by biological nervous systems. They consist of a large number of interconnected computing nodes called neurons, which work together in a distributed way to learn from inputs and improve the final output [32].

Training ANNs to build an analytical model starts with raw images. Important features, such as edges and blobs, are extracted from them together with the bounding boxes of the objects in the data set (L. malifoliella adults and their damage). During image processing, the images were adapted to this purpose by applying annotations and modeling concepts. A data set of 400 images was used to train each ANN. The images were rotated by 0, 90, 180, and 270°, and mirrored copies were made on the horizontal and vertical axes, giving 12 images from each original image. Each of these 12 images (original size 4000 × 3900) was then cut into 30 smaller images (size 640 × 640). This gave 144,000 images for training the ANN for pest detection and 144,000 images for training the ANN for damage detection.
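The augmentation and tiling steps can be sketched with NumPy. This illustration rests on stated assumptions and is not the authors' code. `augment_12` applies the four rotations and, for each, one mirror across the vertical axis (`np.fliplr`) and one across the horizontal axis (`np.flipud`). `tile` cuts an image into 640 × 640 patches on a regular grid. Its stride and edge handling are hypothetical, because the text does not specify how the 30 tiles per image were laid out.

```python
import numpy as np

def augment_12(image):
    """Return 12 variants of an H x W (x C) image.

    For each rotation by 0, 90, 180 and 270 degrees, the rotated image is
    kept, plus one copy mirrored across the vertical axis (left-right) and
    one mirrored across the horizontal axis (top-bottom).
    """
    variants = []
    for k in range(4):
        rotated = np.rot90(image, k)  # rotates the first two axes counter-clockwise
        variants.extend([rotated, np.fliplr(rotated), np.flipud(rotated)])
    return variants


def tile(image, size=640, stride=640):
    """Cut an image into size x size tiles on a regular grid.

    Partial tiles at the right and bottom edges are discarded; the default
    stride (no overlap) is a hypothetical choice.
    """
    height, width = image.shape[:2]
    return [
        image[y:y + size, x:x + size]
        for y in range(0, height - size + 1, stride)
        for x in range(0, width - size + 1, stride)
    ]


# Data-set size reported in the text: 400 photos x 12 variants x 30 tiles.
IMAGES_PER_MODEL = 400 * 12 * 30  # 144,000

if __name__ == "__main__":
    photo = np.zeros((3900, 4000, 3), dtype=np.uint8)  # placeholder RGB photo
    print(len(augment_12(photo)), len(tile(photo)), IMAGES_PER_MODEL)
```

With a non-overlapping grid, a 4000 × 3900 image yields 6 × 6 = 36 full tiles, so the stride used here is only a placeholder for the authors' scheme. In addition, mirroring across the horizontal axis after a rotation by k·90° gives the same image as mirroring across the vertical axis after a rotation by (k + 2)·90°. As a result, at most 8 of the 12 variants produced by this sketch are distinct.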

The images were randomly divided into three sets: the training set, the validation set, and the test set. Between 80% and 95% of the images were used for training, and the remaining 20% to 5% for validation. Validation is the first step in examining the model's quality and is carried out while the model is being created [34]. The validation phase used basic statistical measures of object detection precision, namely Average Recall (AR) and Average Precision (AP). It also used statistical indicators recorded at the end of each epoch during model creation and validation, and final statistical indicators for the entire model and for each class it includes.
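A random training/validation split like the one described can be sketched with the standard library. The function name, the default fraction of 0.8 (the lower end of the 80–95% range), and the fixed seed are illustrative assumptions. The separate test set of difficult, unseen images is assumed to be held out beforehand and is not drawn here.

```python
import random

def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle items reproducibly and split them into training and validation lists.

    train_fraction is expected to lie in the 0.80-0.95 range given in the text;
    the default value and the seed are illustrative.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

photos = [f"photo_{i:03d}.jpg" for i in range(400)]  # hypothetical file names
train, validation = split_dataset(photos, train_fraction=0.9)
print(len(train), len(validation))  # 360 40
```

In this sketch, `items` can be the original photos rather than the derived tiles. Splitting before augmentation and tiling keeps rotated or mirrored copies of the same photo from ending up in both sets.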

Monitoring validation accuracy during training allows for early termination and helps avoid overfitting [35]. The training (learning) loss measures how well a deep learning model fits the training data; that is, it evaluates the model's error on the training set, the portion of the data set used to train the model. The training loss was calculated as the sum of the errors over all training samples [36]. The validation loss measures how well the model performs on the validation set, a subset of the data set used to evaluate the model's performance. Like the training loss, the validation loss was calculated by accumulating the errors over the validation set [36].
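Early termination based on validation loss is commonly implemented as patience-based early stopping. The sketch below applies the rule to a recorded list of per-epoch validation losses. The `patience` and `min_delta` parameters and the example loss values are hypothetical, and the text does not say which stopping rule the authors applied.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for patience-based early stopping.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs; the weights saved at
    best_epoch are the ones to keep. Epochs are 0-indexed.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Hypothetical per-epoch validation losses.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.49]
print(early_stopping_epoch(losses, patience=3))  # (3, 6): stop at epoch 6, keep epoch 3
```

In Keras, the built-in `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=..., restore_best_weights=True)` callback applies the same idea during `fit`.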

For the test data set, the most complicated images, which the model had never seen before, were selected, and the model was tested on them. The test results were evaluated statistically using the confusion matrix, which tabulates predicted against actual values and indicates the accuracy of the model. The confusion matrix consists of four categories: TP (“true positive”), TN (“true negative”), FP (“false positive”), and FN (“false negative”) [37]. It was used to calculate accuracy, precision, sensitivity (recall), and the F1 score. The F1 score is the harmonic mean of precision and recall. Recall is the number of miners correctly identified as positive relative to the total number of miners in the image [38]. All of these metrics were calculated using the equations of Aslan et al. [39], given as Equations (1)–(4). All metrics were calculated from correspondences pooled across the entire data set and are not averages across individual images [19].
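The metrics named above can be computed from pooled confusion-matrix counts. The sketch assumes the standard definitions:

- precision = TP/(TP + FP)
- recall = TP/(TP + FN)
- F1 = 2·precision·recall/(precision + recall)
- accuracy = (TP + TN)/(TP + TN + FP + FN)

These are assumed here to correspond to Equations (1)–(4). The `Counts` type and the example counts are hypothetical. Counts are summed over all test images before the ratios are taken, matching the statement that the metrics are not per-image averages.

```python
from dataclasses import dataclass

@dataclass
class Counts:
    tp: int = 0
    fp: int = 0
    fn: int = 0
    tn: int = 0  # usually left at 0 for object detection

def pooled(per_image):
    """Sum confusion-matrix counts over all test images."""
    return Counts(
        tp=sum(c.tp for c in per_image),
        fp=sum(c.fp for c in per_image),
        fn=sum(c.fn for c in per_image),
        tn=sum(c.tn for c in per_image),
    )

def _ratio(numerator, denominator):
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0

def metrics(c):
    """Precision, recall, F1 and accuracy from a single set of counts."""
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)
    f1 = _ratio(2 * precision * recall, precision + recall)
    accuracy = _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}

# Two hypothetical test images.
images = [Counts(tp=8, fp=0, fn=2), Counts(tp=1, fp=0, fn=1)]
print(metrics(pooled(images)))
```

For the two hypothetical images, pooled recall is 9/12 = 0.75, whereas averaging the per-image recalls (0.8 and 0.5) would give 0.65. In object detection, TN is usually undefined because there is no countable set of correctly ignored background objects. It is therefore left at zero here, which makes accuracy equal to TP/(TP + FP + FN).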

The analytical models themselves were developed through a series of algorithm tests and parameter changes to obtain the best performance for their intended use. The Python 3.6 programming language was used together with the TensorFlow artificial intelligence library. Python makes it easy to write scripts quickly [40], and TensorFlow already provides all the functions needed to build the models. For the production application, the TensorFlow Lite platform was used, so that the analytical models can run on low-power “end” devices such as SBCs [41]. Because the models were intended to run on “end” devices with TensorFlow Lite, detection quality was tested on several detector families in different versions: SSD (Single-Shot Detector) MobileNet, SSD ResNet, and EfficientDet-Lite. The EfficientDet-Lite 4 network structure ultimately showed the best detection quality. EfficientDet is a family of object detectors that are more accurate and use fewer resources than the previous state of the art [42].

Emerging technologies, such as computer vision, require accurate object identification but often have limited processing resources, and many high-accuracy detectors do not meet these requirements. Real-world object detection solutions run on different platforms with different resources. The authors of [33] developed “Scalable and Efficient Object Detection” (EfficientDet), an accurate object detector that uses few resources. EfficientDet is nine times smaller and uses less computation than previous state-of-the-art detectors. It uses EfficientNet as its backbone for image feature extraction (Figure 6) [33]. Its weighted bi-directional feature pyramid network (BiFPN) assigns learnable weights to the input features, because features at different resolutions contribute unequally to the output. This lets the network learn how important each input is. The feature network takes multiple levels of features from the backbone and outputs fused features that capture the most important aspects of the image. All regular convolutions in the feature network are replaced with depth-wise separable convolutions. Finally, class and box networks use the fused features to predict the class and location of each object. The number of BiFPN layers and of layers in the class and box networks can be scaled according to the available processing power [43].
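The weighting idea behind BiFPN can be illustrated with the "fast normalized fusion" rule from the EfficientDet paper [33]: O = Σ_i w_i·I_i / (ε + Σ_j w_j), where a ReLU keeps each weight w_i non-negative. The NumPy sketch below assumes the input feature maps have already been resized to a common resolution and uses fixed example weights. In the real network the weights are learned, and a depth-wise separable convolution, omitted here, further processes the fused output.

```python
import numpy as np

def fast_normalized_fusion(features, raw_weights, eps=1e-4):
    """Fuse same-shape feature maps: sum_i w_i * I_i / (eps + sum_j w_j).

    raw_weights pass through a ReLU so that every w_i >= 0; each normalized
    weight then lies between 0 and 1 without the cost of a softmax.
    """
    if len(features) != len(raw_weights):
        raise ValueError("need exactly one weight per input feature map")
    weights = np.maximum(np.asarray(raw_weights, dtype=np.float64), 0.0)  # ReLU
    stacked = np.stack([np.asarray(f, dtype=np.float64) for f in features])
    # Contract the weight vector with the leading "input" axis of the stack.
    return np.tensordot(weights, stacked, axes=1) / (eps + weights.sum())

# Two hypothetical 2 x 2 feature maps already resized to the same resolution.
p4 = np.ones((2, 2))
p5_upsampled = np.full((2, 2), 3.0)
print(fast_normalized_fusion([p4, p5_upsampled], [1.0, 1.0]))
```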

Two separate ANNs were trained to identify L. malifoliella (MINER) and the mines caused by this pest (MINES). Objects in RGB images (L. malifoliella adults and mines on leaves) were used to create the analytical models, following the observations and the structure of the corresponding object detection classes. The analytical models are grouped by purpose: the PMD uses an analytical model for insects (L. malifoliella), and the VMD uses an analytical model for mines. Different algorithm concepts were used to develop analytical models depending on their intended use (e.g., the required detection quality, detection speed, and energy consumption).

Once created, each model must be tested and tuned, and its production parameters adjusted. The analytical models are continuously improved, and a mechanism was implemented to update them automatically regardless of the purpose or version of the camera control server. Dedicated computers were used to store data and to create the analytical models, including a computer with a special hardware configuration for models that require more processing power. A separate virtual server was set up to store the image material.

2.4. The Pest Monitoring Device (PMD) and Vegetation Monitoring Device (VMD)

The Pest Monitoring Device (PMD) for pest monitoring (Figure 7a) was housed in a milky-white polycarbonate shell. The housing contains an RGB camera, a temperature sensor for the battery and electronics, an adhesive pad, a pheromone lure, and a power supply system with a battery and a solar panel. In the Vegetation Monitoring Device (VMD), by contrast, the RGB camera was placed in a separate housing (Figure 7b). The software for both cameras supports a remote monitoring service with image transmission and processing. One PMD is sufficient for 1 to 3 ha of orchard. The devices (including the associated models) were designed for use throughout the growing season, and their external structure makes them weather-resistant.

2.4.1. Trap Housing

During construction, particular attention was paid to protecting the housing from rain and sunlight. Because the devices were attached to stakes in the orchard, special mounting stands had to be made. The trap, designed for several kinds of insects, also protects the camera inside. The rectangular trap has two entrances on opposite sides. The openings are 16 cm wide and 10 cm high, and the housing is 25 cm long, 24 cm high, and 16 cm wide. The antenna for the 4G and 5G networks is on top of the housing, together with the solar panel.

2.4.2. Camera

The camera design took into account the characteristics of the orchard, especially during the growing season, as well as the camera's intended use and operating conditions. The cameras were built around single-board computers (SBCs) from the Raspberry Pi Foundation. They use the Raspberry Pi HQ camera sensor, which accepts different M12 lenses depending on the camera's purpose. The PMD lens has a 75° HFOV, a 1/2.3″ format, and a 3.9 mm focal length. The VMD lens has a 28° HFOV, a 1/2.5″ format, and a 12 mm focal length. The resolution used was 4056 × 3040 pixels (about 12.3 megapixels). Each camera had a SIM card for 4G network communication. The temperature sensors monitor the temperature of the battery and electronics.
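The lens specifications determine how large a target appears in the image. This is a minimal pinhole-camera sketch that ignores lens distortion and assumes the VMD photographs the canopy from the same 50 cm distance used during data acquisition. The horizontal field width is 2·d·tan(HFOV/2), and dividing it by the 4056-pixel image width gives the size of one pixel on the leaf surface. The function names are illustrative.

```python
import math

IMAGE_WIDTH_PX = 4056  # horizontal resolution given in the text

def field_width_mm(distance_mm, hfov_deg):
    """Horizontal extent imaged at distance_mm by a pinhole camera."""
    return 2.0 * distance_mm * math.tan(math.radians(hfov_deg) / 2.0)

def mm_per_pixel(distance_mm, hfov_deg, width_px=IMAGE_WIDTH_PX):
    """Size on the target surface covered by one image pixel."""
    return field_width_mm(distance_mm, hfov_deg) / width_px

# VMD lens (28 degrees HFOV), assuming the 50 cm distance used for data acquisition.
width = field_width_mm(500, 28)
scale = mm_per_pixel(500, 28)
mine_px = 2.0 / scale  # pixels spanned by a 2 mm mine
print(f"{width:.0f} mm wide, {scale:.3f} mm/px, 2 mm mine = {mine_px:.0f} px")
```

Under these assumptions, a 28° lens covers about 25 cm of canopy width at 50 cm, so a newly formed 2 mm mine spans roughly 32–33 pixels in the full-resolution image.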

2.4.3. Battery

The power supply system consisted of three Panasonic NCR18650B rechargeable lithium-ion batteries connected in series. Each cell has a capacity of 3350 mAh and a maximum current of 5 A, and the pack operates in a voltage range of 10.8 to 12.6 V. A Battery Management System (BMS) controls charging and discharging to maximize battery life. During the day, a solar panel recharges the batteries, so the system runs autonomously and has a long lifespan without human assistance.
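The pack figures follow from the cell data. Three cells in series give 3 × 3.6 V = 10.8 V nominal and 3 × 4.2 V = 12.6 V fully charged, while the capacity stays at 3.35 Ah, or about 36 Wh. The sketch below estimates runtime without solar input for a device that sleeps most of the time. The active and sleep power draws, the active time per hour, and the usable fraction of capacity are hypothetical values, not measurements from this study.

```python
CELLS_IN_SERIES = 3
CELL_NOMINAL_V = 3.6     # NCR18650B nominal cell voltage
CELL_FULL_V = 4.2        # NCR18650B full-charge cell voltage
CELL_CAPACITY_AH = 3.35  # 3350 mAh; a series connection does not add capacity

def pack_voltage_range():
    """Nominal and full-charge voltage of the series pack."""
    return CELLS_IN_SERIES * CELL_NOMINAL_V, CELLS_IN_SERIES * CELL_FULL_V

def pack_energy_wh():
    """Nominal stored energy of the series pack in watt-hours."""
    return CELLS_IN_SERIES * CELL_NOMINAL_V * CELL_CAPACITY_AH

def average_power_w(active_w, sleep_w, active_s_per_hour):
    """Average draw of a device that wakes for active_s_per_hour seconds each hour."""
    duty = active_s_per_hour / 3600.0
    return duty * active_w + (1.0 - duty) * sleep_w

def runtime_hours(avg_w, usable_fraction=0.8):
    """Hours of operation from a full pack with no solar charging (hypothetical usable fraction)."""
    return pack_energy_wh() * usable_fraction / avg_w

# Hypothetical duty cycle: 5 W for 2 minutes per hour, 0.05 W while asleep.
avg = average_power_w(active_w=5.0, sleep_w=0.05, active_s_per_hour=120)
print(pack_voltage_range(), round(pack_energy_wh(), 2), round(avg, 3), round(runtime_hours(avg), 1))
```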

4. Discussion

In this study, testing the model for the PMD produced no false positive (FP) detections, which points to the high precision of the model. In contrast, Preti et al. [20] developed a smart trap for monitoring the codling moth (C. pomonella) (Lepidoptera: Tortricidae) that produced a high number of FP detections, accounting for 90.7% of the automatic counts. Most FP detections were shadows on the adhesive pads, lures, and flies. This high number of FP detections caused the low precision and suggests that the detection algorithm needs adjustment. The authors attribute this to a small data set, and Du et al. [46] showed with a theoretical calculation that the error of ANN-based algorithms is correlated with data set size.

Moreover, several objects in the PMD model test were false negatives (FN), mostly because the insects changed color and decomposed over time. Ding and Taylor [19] analyzed errors caused by different factors and emphasized that many errors are related to time: the same insect pest can show different wing poses and stages of decay over time. In addition, decaying insects can make the originally transparent adhesive pad dirty and reduce the contrast between the insects and the background. In real production systems, time-related errors could largely be avoided by changing the adhesive pads in the smart trap approximately every ten days. This prevents insect decomposition and dirt accumulation and thus reduces misdetections.

Detection accuracy compares the number of objects counted by the algorithm with the number counted manually by an entomologist, expressed as a percentage of the manual count. An accuracy of 100% means that the number of objects marked and counted automatically by the ANN matches the number of L. malifoliella adults on the adhesive pad. An accuracy below 100% means that some L. malifoliella adults were not recognized by the model. An accuracy above 100% means that non-target insects or other items were counted as miners, so the automatic identification overestimated the true occurrence of miners, as in Preti et al. [20]. In our case, the PMD model produced no FP detections. The accuracy of the VMD model was not inflated by its FP detections, because they were offset by a similar number of FN detections (Table 3). Precision ranges from 0 to 1. Values close to 1 mean that false positives are very rare, so the automatic detections correspond to correct detections of L. malifoliella adults. This shows that the algorithm is precise and does not mark non-target insects or other items [20].
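The model reports TP + FP objects, while the entomologist counts TP + FN. The count-based accuracy therefore equals (TP + FP)/(TP + FN) × 100, so FP and FN errors can offset each other. The sketch below makes this explicit and checks the study's criterion of more than 90% accuracy. The function names and the example counts are hypothetical and do not reproduce Table 3.

```python
def count_accuracy(automatic, manual):
    """Automatic count as a percentage of the entomologist's manual count."""
    if manual <= 0:
        raise ValueError("manual count must be positive")
    return 100.0 * automatic / manual

def counts_from_confusion(tp, fp, fn):
    """Automatic count is TP + FP; the true (manual) count is TP + FN."""
    return tp + fp, tp + fn

def meets_criterion(accuracy_pct, threshold_pct=90.0):
    """Hypothesis criterion from the text: accuracy above 90%."""
    return accuracy_pct > threshold_pct

# Hypothetical trap with no false positives: 47 of 50 moths found.
print(count_accuracy(*counts_from_confusion(tp=47, fp=0, fn=3)))  # 94.0
# Hypothetical image where 6 FP and 5 FN nearly cancel out.
auto, manual = counts_from_confusion(tp=90, fp=6, fn=5)
print(round(count_accuracy(auto, manual), 1), 90 / (90 + 6))
```

In the second example, the count-based accuracy is close to 100% even though about 6% of the detections are false, which is why precision and recall are reported alongside it.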

In the VMD model, several FP and FN detections were recorded (Figure 12). FP detections occurred mainly where parts of branches or dry leaves were misidentified as mines. Most of the missed detections occurred at the edges of the image, where the mines were only partly visible and therefore went undetected. The VMD model had more FP and FN detections than the PMD model, and its overall accuracy and other parameters (Table 3) were slightly lower. Nevertheless, the results are satisfactory and the model is usable in practice, owing to the high number of annotations and TP detections.

Compared with other successful work on detecting Lepidopteran apple pests with ANNs, both models show the potential to work in practice. The accuracy of the models in those studies exceeded 90% [27,47], as did the accuracy in this work, so the models proposed here can be considered efficient for practical use. Suárez et al. [47] used the TensorFlow library and the Python programming language to build a model for detecting codling moths, with an overall accuracy of 94.8%. Albanese et al. [27] obtained similar results: using different deep learning algorithms in their trap, they achieved an accuracy of 95.1–97.9% in detecting codling moths. Grünig et al. [26] used neural networks to create an image classification model for detecting damage on apple leaves. Their model categorized different damage classes from leaf photos taken under standardized and field conditions. It was 93.1% accurate at detecting damage caused by L. malifoliella, which makes it suitable for automatic damage detection. However, no model is available for the automatic detection of this pest in a trap, nor is there an automated system; the works by Grünig et al. [26] and El Massi et al. [25] only classify the damage caused by this pest. For such a system to control pests effectively and in time, it must monitor the number of adults in addition to leaf damage. This allows earlier intervention and prevents damage (mines) from occurring.

The proposed model is accurate, precise, fast, and requires minimal data preprocessing. In addition, the Pest Monitoring Device (PMD) for monitoring L. malifoliella adults and the Vegetation Monitoring Device (VMD) for detecting its damage on apple leaves are both portable, self-contained devices that require no additional infrastructure. They operate in sleep mode most of the time, so they use less energy, last longer, and need less human intervention. A distinctive feature of this system is that each device outputs the number of detected objects of a certain class rather than the whole image. Because this output is a small amount of data, it is sent quickly over a mobile network. The detection results are sent to a web portal, where all further analysis and reporting are performed. Several smart traps for monitoring apple pests are available on the market [48,49], but no trap or comprehensive system is available for the automatic monitoring of L. malifoliella or its damage.
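Reducing each image to per-class counts is what keeps the transmitted message small. This is a minimal sketch that assumes the on-node detector yields (class name, confidence score) pairs. The device identifier, the JSON field names, and the 0.5 score threshold are hypothetical, and the text does not describe the actual message format used by the PMD and VMD.

```python
import json
from collections import Counter

def build_payload(device_id, timestamp, detections, min_score=0.5):
    """Reduce raw detections to per-class counts in a compact JSON message.

    detections: iterable of (class_name, score) pairs from the on-node detector.
    Detections below min_score are discarded before counting.
    """
    counts = Counter(name for name, score in detections if score >= min_score)
    message = {"device": device_id, "time": timestamp, "counts": dict(sorted(counts.items()))}
    return json.dumps(message, separators=(",", ":"))  # no whitespace, to save bytes

# Hypothetical detections from one adhesive-pad image.
dets = [("MINER", 0.91), ("MINER", 0.78), ("INSECT", 0.66), ("MINER", 0.31), ("OTHER", 0.55)]
payload = build_payload("PMD-01", "2022-06-15T06:00:00Z", dets)
print(payload, len(payload.encode("utf-8")), "bytes")
```

A few dozen bytes per image replace a multi-megabyte photograph, which is what makes transmission over a mobile network fast and cheap in energy terms.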

Therefore, in this work, a comprehensive system consisting of two object detection models and accompanying devices for pest and vegetation damage monitoring (the PMD and VMD) was developed to obtain complete information in the orchard about L. malifoliella occurrence, to react in a timely manner, and prevent the occurrence of economically important damage. This proposed system contributes to the improvement of automatic pest monitoring and, thus, to its wider application. The use of this system allows for targeted pest control, thus reducing the use of pesticides, decreasing the negative impact on the environment, and ultimately allowing for higher quality and more profitable apple production.