Applying RGB-Based Vegetation Indices Obtained from UAS Imagery for Monitoring the Rice Crop at the Field Scale: A Case Study in Portugal

Abstract

1. Introduction

2. Material and Methods

2.1. Study Area

2.2. UAS Data Acquisition and Processing

2.3. Vegetation Indices Calculation

2.4. Vegetation Indices Analysis

3. Results and Discussion

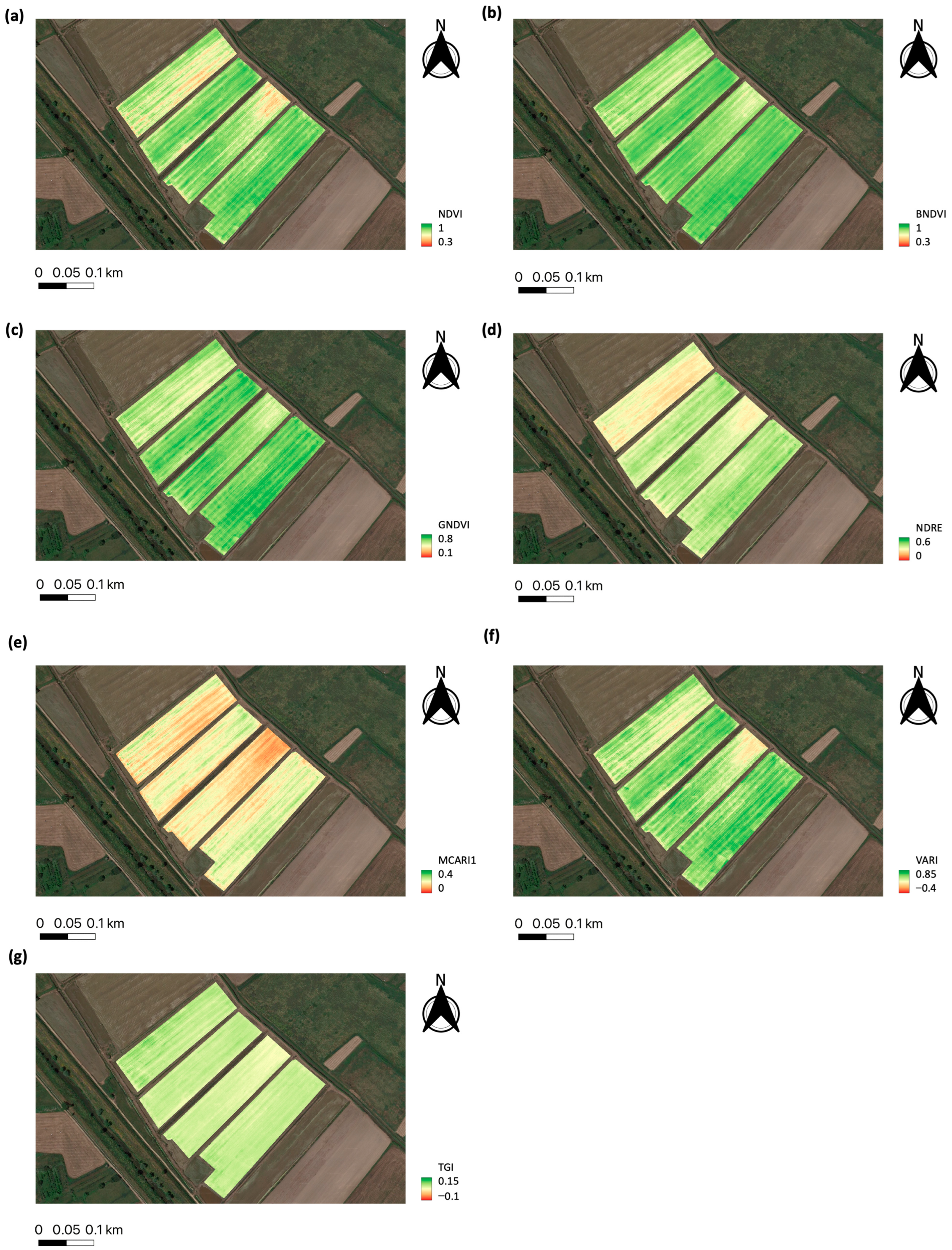

3.1. Rice Monitoring at the Field Plot Scale

3.2. Basic Descriptive Statistics

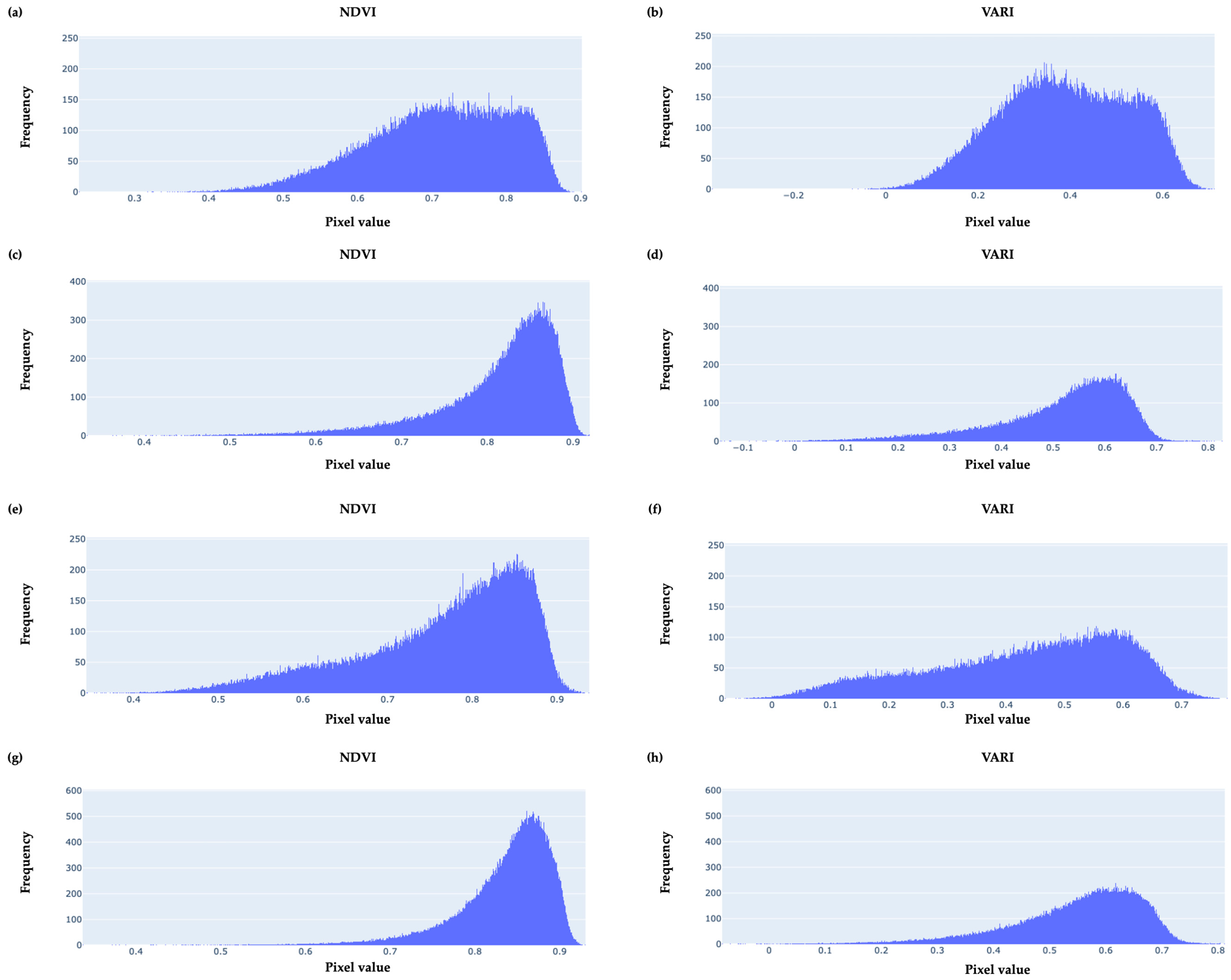

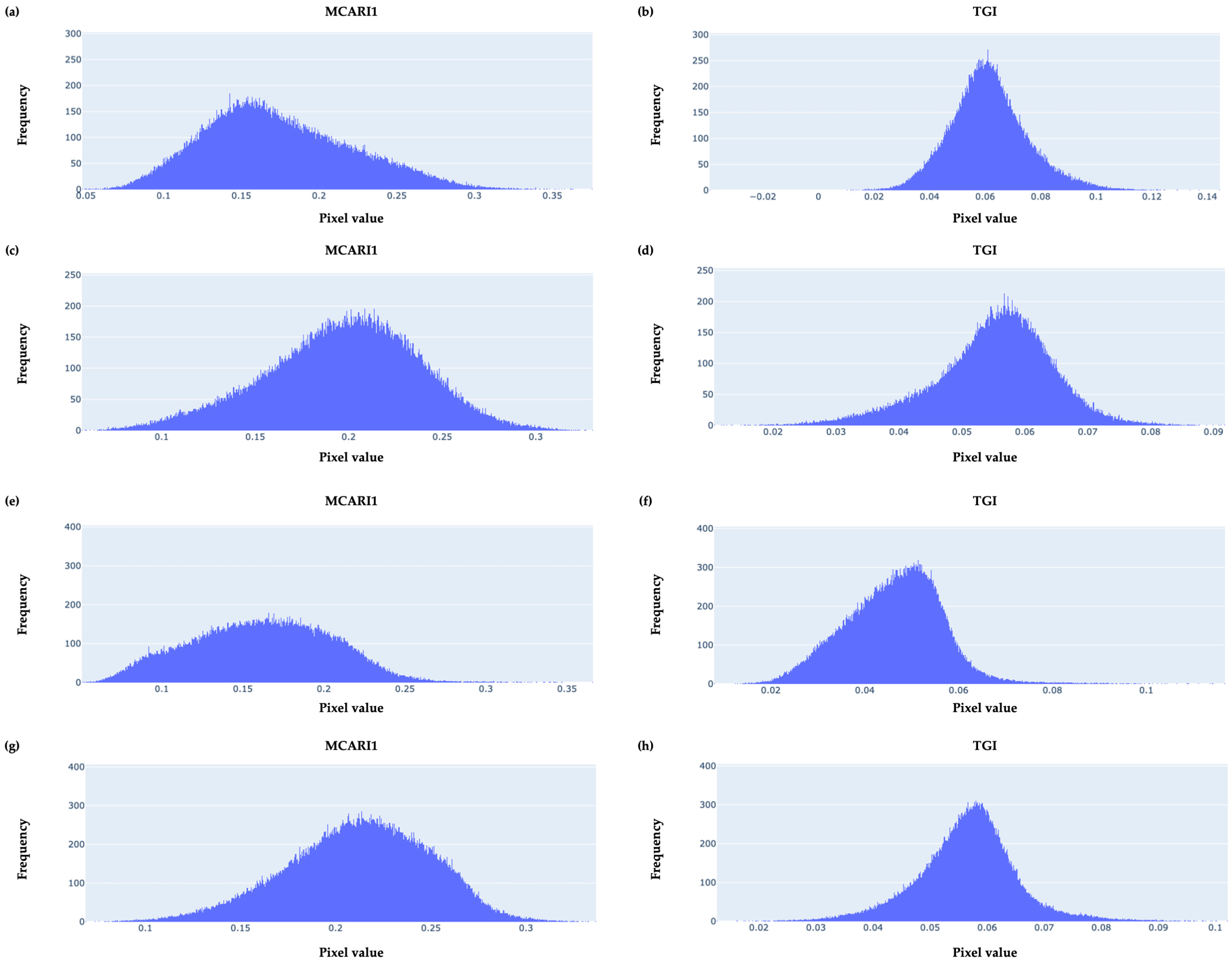

3.3. Empirical Frequency Distributions of Pixel-Value Data

3.4. Cross-Correlation Analysis

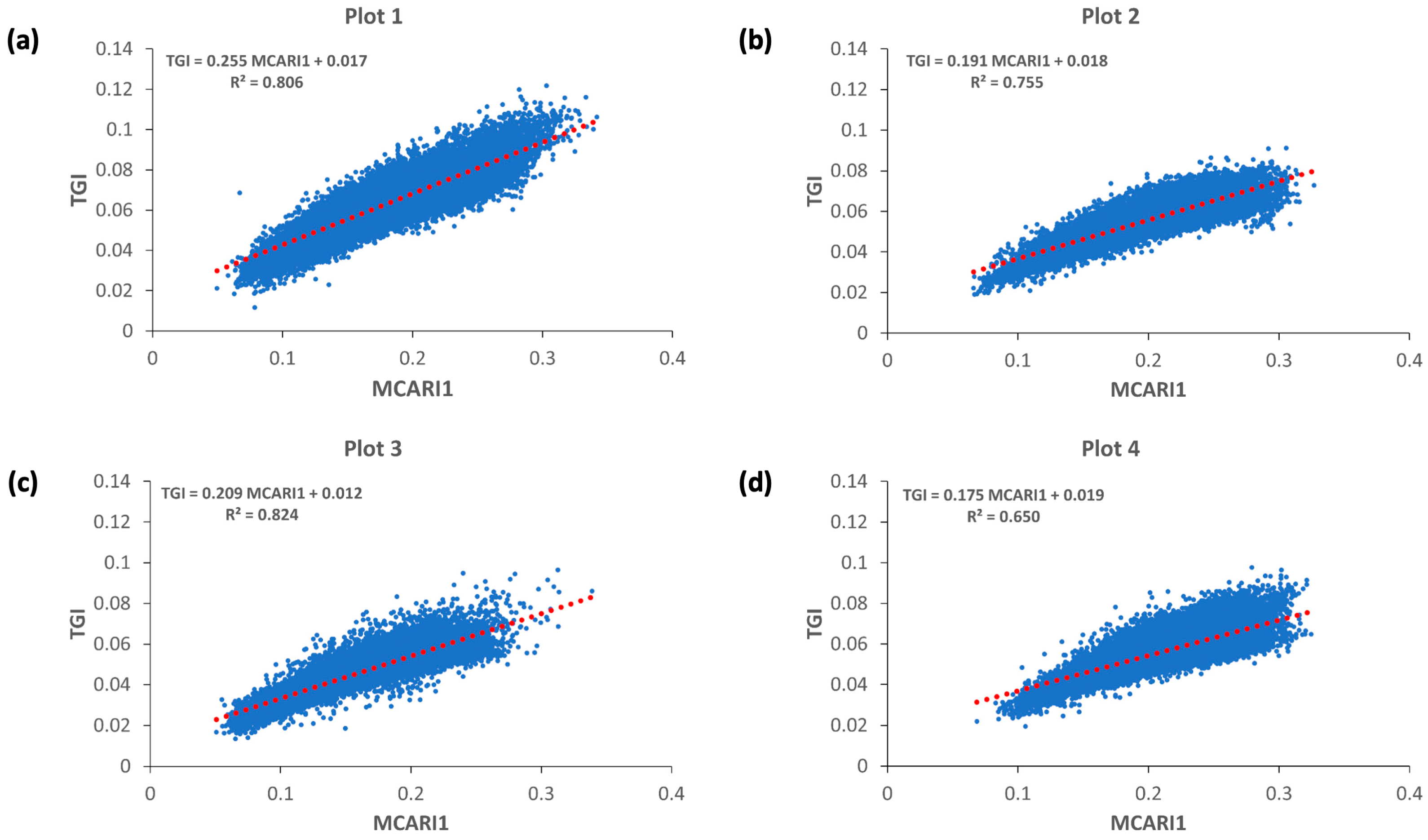

3.5. MS and RGB Indices Relationships

4. Conclusions

- (i) High-resolution UAS sensors and the photogrammetric techniques commonly applied to collect MS imagery can generate data for creating VI maps that provide useful information for agriculture, namely for rice farming.

- (ii) RGB VIs, namely VARI and TGI, which can be calculated from the visible RGB bands alone, could provide valuable assistance for monitoring and managing rice field plots.

- (iii) Mapping VIs of rice fields (such as VARI and TGI) with digital cameras mounted on UASs, which collect RS imagery at a lower cost than MS cameras, may give a larger number of farmers the opportunity to use RS products to monitor paddy fields quickly and cost-effectively and, therefore, to improve rice crop management, moving towards increasingly sustainable rice agriculture and protection of the environment.
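Conclusion (ii) rests on the fact that VARI and TGI need only the red, green and blue bands. The following is a minimal NumPy sketch of both indices, assuming co-registered per-band arrays (reflectance, or digital numbers converted to float). The function names `vari` and `tgi` are illustrative, and the TGI band-centre defaults (670, 550 and 480 nm, the wavelengths of the original TGI definition) are assumptions to be replaced with the actual band centres of the camera used.

```python
import numpy as np


def vari(r, g, b, eps=1e-9):
    """Visible Atmospherically Resistant Index: (G - R) / (G + R - B)."""
    r, g, b = (np.asarray(x, dtype=float) for x in (r, g, b))
    denom = g + r - b
    # Suppress divide-by-zero warnings; such pixels are set to NaN below.
    with np.errstate(divide="ignore", invalid="ignore"):
        out = (g - r) / denom
    return np.where(np.abs(denom) < eps, np.nan, out)


def tgi(r, g, b, lam_r=670.0, lam_g=550.0, lam_b=480.0):
    """Triangular Greenness Index in its general form:
    -0.5 * [(lam_r - lam_b) * (R - G) - (lam_r - lam_g) * (R - B)].

    The band centres (nm) are assumed defaults, not the camera's values.
    """
    r, g, b = (np.asarray(x, dtype=float) for x in (r, g, b))
    return -0.5 * ((lam_r - lam_b) * (r - g) - (lam_r - lam_g) * (r - b))
```

Both functions work element-wise, so passing whole orthomosaic bands yields VARI and TGI maps directly. Published TGI variants scale the result differently (some divide by a constant or use camera-specific coefficients), so absolute TGI values from this sketch are not directly comparable with those reported in the statistics table.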

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


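| Type of Index | VI | Equation |
|---|---|---|
| RGB | VARI | $\frac{G - R}{G + R - B}$ |
| | TGI | $-0.5\left[(\lambda_R - \lambda_B)(R - G) - (\lambda_R - \lambda_G)(R - B)\right]$ |
| Multispectral | NDVI | $\frac{NIR - R}{NIR + R}$ |
| | BNDVI | $\frac{NIR - B}{NIR + B}$ |
| | GNDVI | $\frac{NIR - G}{NIR + G}$ |
| | NDRE | $\frac{NIR - RE}{NIR + RE}$ |
| | MCARI1 | $1.2\left[2.5(NIR - R) - 1.3(NIR - G)\right]$ |

where $R$, $G$, $B$, $RE$ and $NIR$ denote the red, green, blue, red-edge and near-infrared band values, and $\lambda_R$, $\lambda_G$ and $\lambda_B$ the centre wavelengths of the red, green and blue bands.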

| Rice Plot | Index | Min | Max | Range | Mean | CV |
|---|---|---|---|---|---|---|
| Plot 1 (n = 311,507) | NDVI | 0.23 | 0.90 | 0.68 | 0.71 | 0.13 |
| | BNDVI | 0.40 | 0.91 | 0.51 | 0.78 | 0.07 |
| | GNDVI | 0.15 | 0.74 | 0.59 | 0.55 | 0.10 |
| | NDRE | 0.09 | 0.48 | 0.38 | 0.29 | 0.15 |
| | MCARI1 | 0.05 | 0.38 | 0.33 | 0.17 | 0.26 |
| | VARI | −0.38 | 0.71 | 1.09 | 0.39 | 0.34 |
| | TGI | −0.04 | 0.14 | 0.18 | 0.06 | 0.20 |
| Plot 2 (n = 306,347) | NDVI | 0.33 | 0.92 | 0.59 | 0.82 | 0.08 |
| | BNDVI | 0.37 | 0.92 | 0.55 | 0.82 | 0.05 |
| | GNDVI | 0.31 | 0.77 | 0.46 | 0.64 | 0.07 |
| | NDRE | 0.14 | 0.52 | 0.38 | 0.38 | 0.10 |
| | MCARI1 | 0.06 | 0.33 | 0.27 | 0.20 | 0.19 |
| | VARI | −0.14 | 0.83 | 0.97 | 0.53 | 0.21 |
| | TGI | 0.01 | 0.09 | 0.08 | 0.06 | 0.14 |
| Plot 3 (n = 322,544) | NDVI | 0.34 | 0.94 | 0.59 | 0.77 | 0.12 |
| | BNDVI | 0.49 | 0.93 | 0.44 | 0.81 | 0.07 |
| | GNDVI | 0.32 | 0.79 | 0.47 | 0.61 | 0.10 |
| | NDRE | 0.17 | 0.54 | 0.37 | 0.36 | 0.13 |
| | MCARI1 | 0.05 | 0.37 | 0.32 | 0.16 | 0.25 |
| | VARI | −0.08 | 0.78 | 0.86 | 0.44 | 0.36 |
| | TGI | 0.01 | 0.12 | 0.11 | 0.05 | 0.20 |
| Plot 4 (n = 436,216) | NDVI | 0.34 | 0.93 | 0.59 | 0.84 | 0.06 |
| | BNDVI | 0.51 | 0.93 | 0.42 | 0.86 | 0.04 |
| | GNDVI | 0.33 | 0.79 | 0.46 | 0.66 | 0.06 |
| | NDRE | 0.17 | 0.56 | 0.38 | 0.40 | 0.09 |
| | MCARI1 | 0.07 | 0.34 | 0.27 | 0.21 | 0.16 |
| | VARI | −0.08 | 0.81 | 0.90 | 0.56 | 0.19 |
| | TGI | 0.01 | 0.10 | 0.09 | 0.06 | 0.12 |
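The per-plot statistics in this table can be derived from the pixel values inside each plot. The sketch below assumes those values have already been extracted (for example, by clipping each index map to the plot boundary) and that CV is the standard deviation divided by the mean; `describe` is a hypothetical name. The Range column is consistent with Max minus Min, and small differences such as 0.68 rather than 0.67 for Plot 1 NDVI presumably reflect rounding of the tabulated Min and Max.

```python
import numpy as np


def describe(values):
    """Min, max, range, mean and coefficient of variation (CV = std / mean)
    of the finite pixel values of one index over one plot."""
    v = np.asarray(values, dtype=float).ravel()
    v = v[np.isfinite(v)]  # drop NaN/inf pixels (masked or divide-by-zero)
    if v.size == 0:
        raise ValueError("no finite pixel values")
    mn, mx = v.min(), v.max()
    mean = v.mean()
    # Sample standard deviation (ddof=1) is an assumption; ddof=0 is also common.
    std = v.std(ddof=1)
    return {
        "n": v.size,
        "min": mn,
        "max": mx,
        "range": mx - mn,
        "mean": mean,
        "cv": std / mean,
    }
```

With several hundred thousand pixels per plot, the choice between sample and population standard deviation is negligible at two decimal places.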

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gerardo, R.; de Lima, I.P. Applying RGB-Based Vegetation Indices Obtained from UAS Imagery for Monitoring the Rice Crop at the Field Scale: A Case Study in Portugal. Agriculture 2023, 13, 1916. https://doi.org/10.3390/agriculture13101916

Gerardo R, de Lima IP. Applying RGB-Based Vegetation Indices Obtained from UAS Imagery for Monitoring the Rice Crop at the Field Scale: A Case Study in Portugal. Agriculture. 2023; 13(10):1916. https://doi.org/10.3390/agriculture13101916

Chicago/Turabian Style: Gerardo, Romeu, and Isabel P. de Lima. 2023. "Applying RGB-Based Vegetation Indices Obtained from UAS Imagery for Monitoring the Rice Crop at the Field Scale: A Case Study in Portugal" Agriculture 13, no. 10: 1916. https://doi.org/10.3390/agriculture13101916

APA Style: Gerardo, R., & de Lima, I. P. (2023). Applying RGB-Based Vegetation Indices Obtained from UAS Imagery for Monitoring the Rice Crop at the Field Scale: A Case Study in Portugal. Agriculture, 13(10), 1916. https://doi.org/10.3390/agriculture13101916