Detection of Respiratory Rate of Dairy Cows Based on Infrared Thermography and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Test Data

2.1.1. Data Acquisition

2.1.2. Dataset Production

- Data preprocessing: approximately 15 min of video is cut into 10 s clips, giving 92 segments in total;

- Picture acquisition: every frame is extracted from the recorded IRT video files and saved in JPG format;

- Dividing the dataset: to train the model parameters and evaluate the model's performance, the dataset is randomly split into a training set and a test set at a ratio of 7:3. The training set is used to fit the neural network model; a sufficiently large training set helps improve the model's recognition ability in application. The test set is used to verify the accuracy of the model;

- Data labelling: the nose, left nostril, and right nostril of the cow are manually labelled in each frame image of the training set. Annotating an image generates a corresponding file that records the annotated area, object category, and other information;

- Dataset format: the annotation files are converted into a standard-format dataset for model training and testing.
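The 7:3 random split described in the list above can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function name `split_dataset`, the fixed seed, and the frame file names are hypothetical, and the text does not say whether the split is made per frame or per video segment.

```python
import random


def split_dataset(items, train_ratio=0.7, seed=42):
    """Randomly split items into (train, test) at roughly train_ratio.

    The seed is an assumption added here so the split is reproducible.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical frame file names, for illustration only.
frames = [f"frame_{i:05d}.jpg" for i in range(1000)]
train, test = split_dataset(frames)
print(len(train), len(test))  # 700 300
```

Shuffling a copy and slicing it keeps the two sets disjoint and leaves the input list untouched.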

2.2. Nostril Detection Based on Cascaded Deep Learning

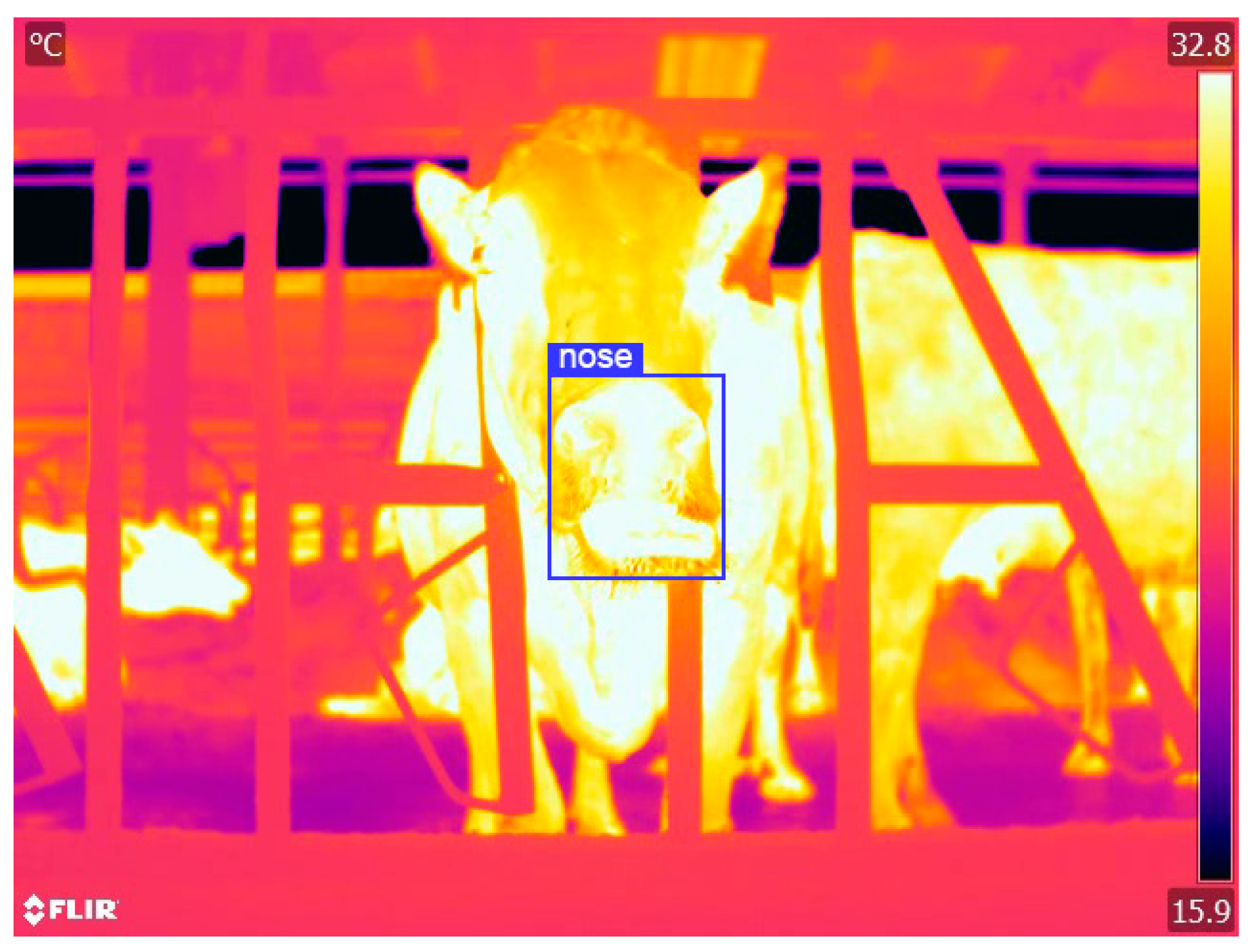

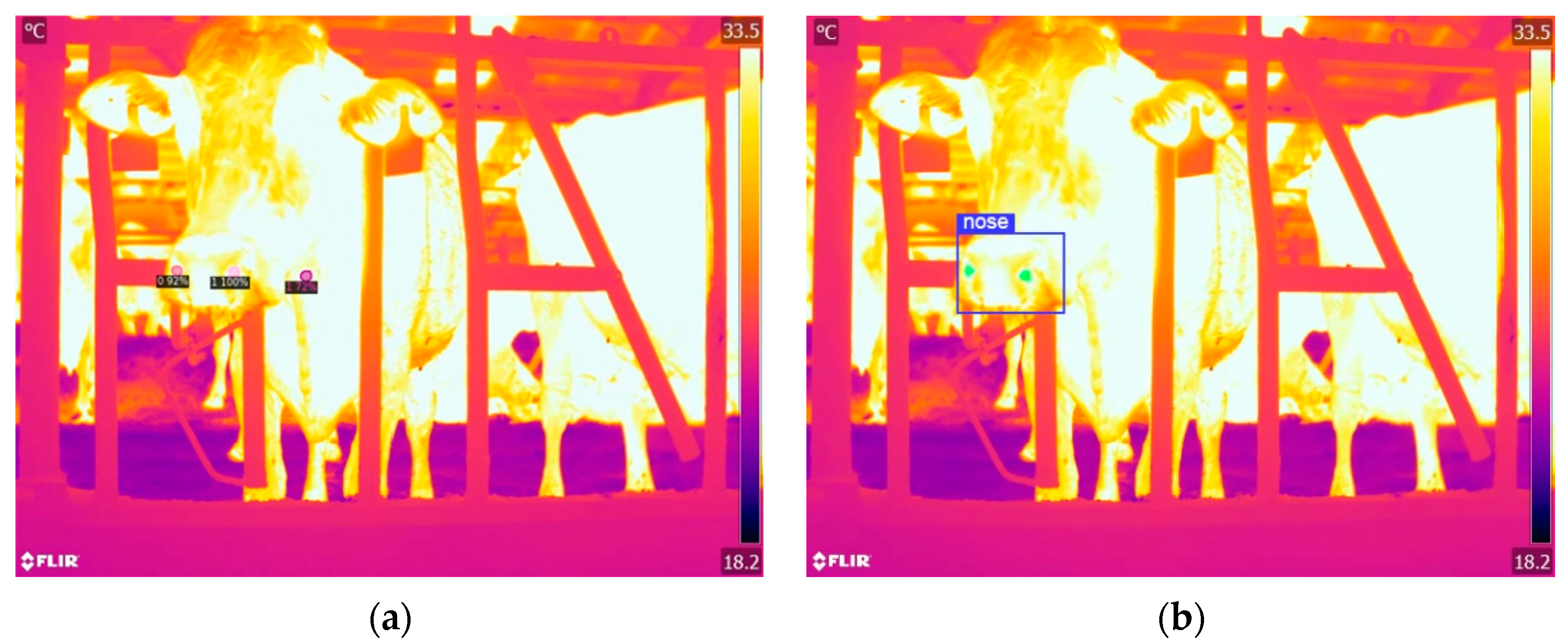

2.2.1. Nose Detection Based on YOLO V8

2.2.2. Nostril Segmentation Based on Deep Learning

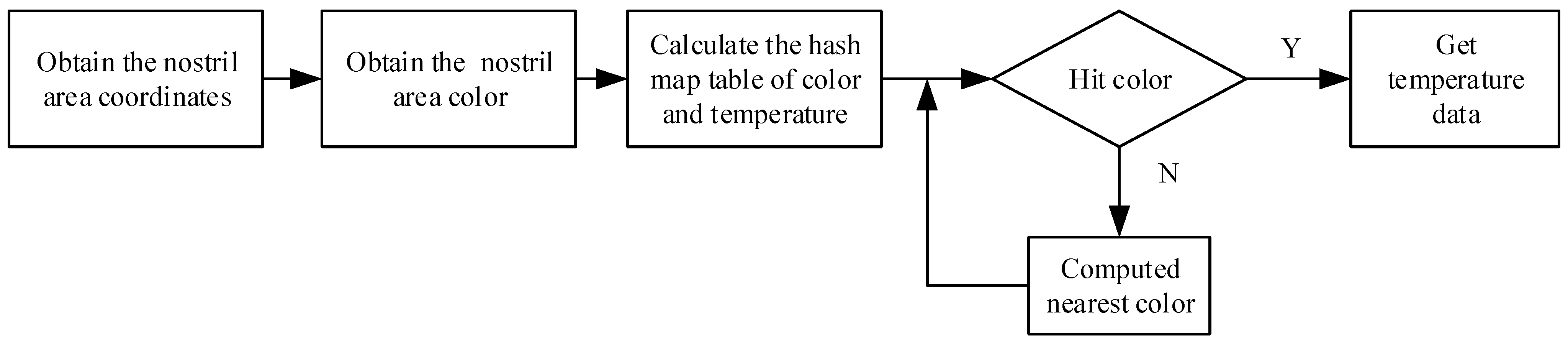

2.3. Temperature Extraction of the Nostril Area Based on the Hash Algorithm

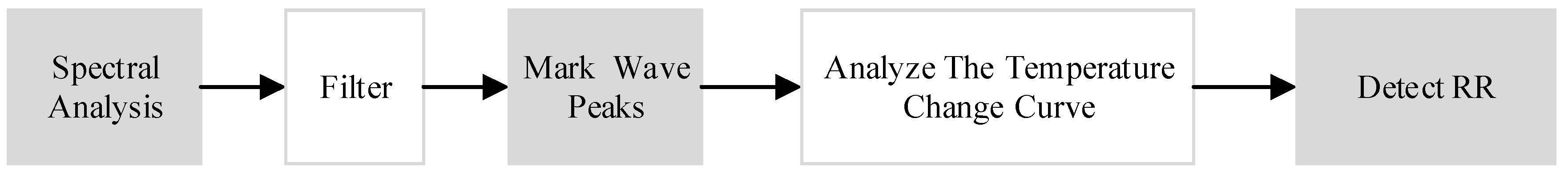

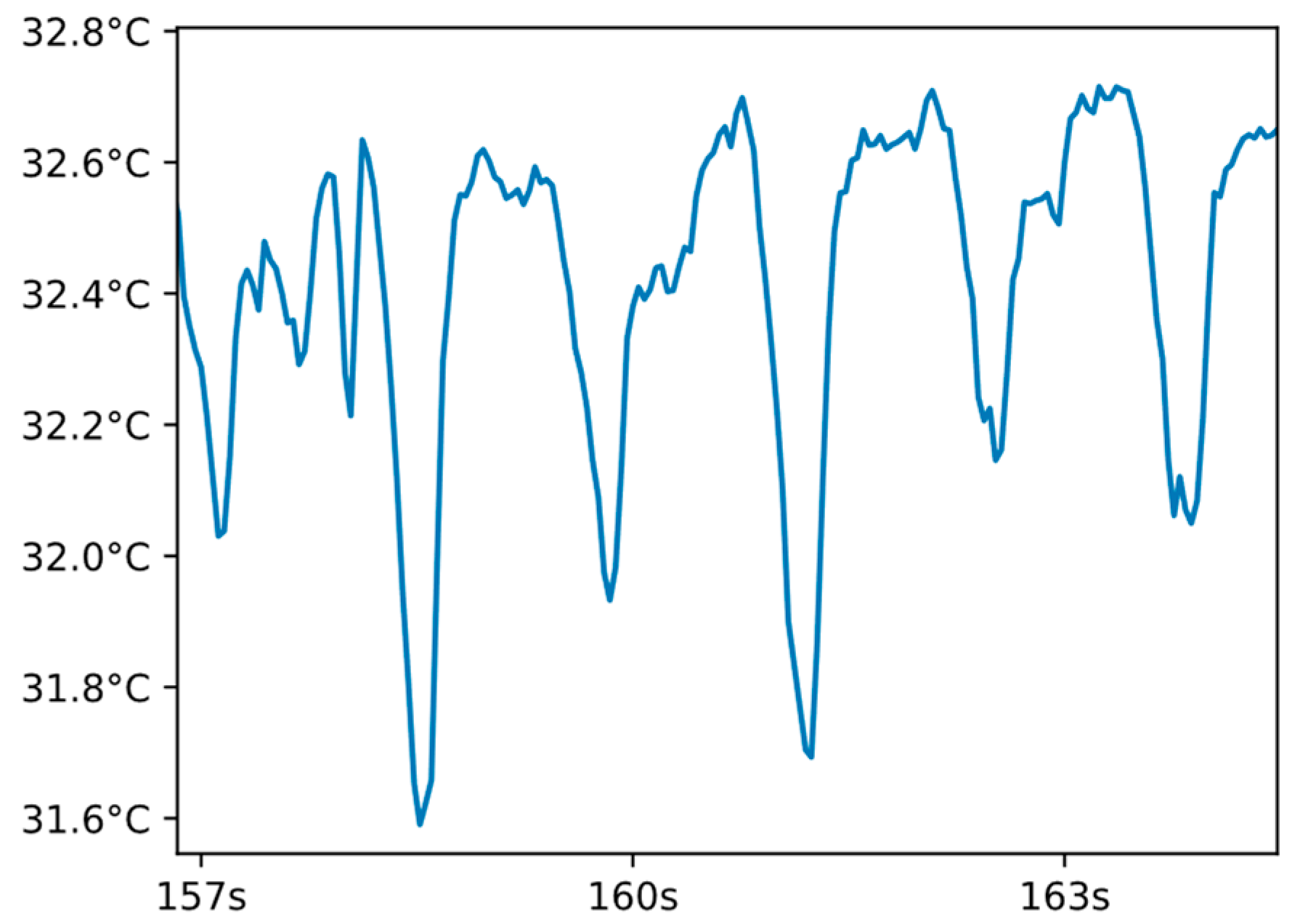

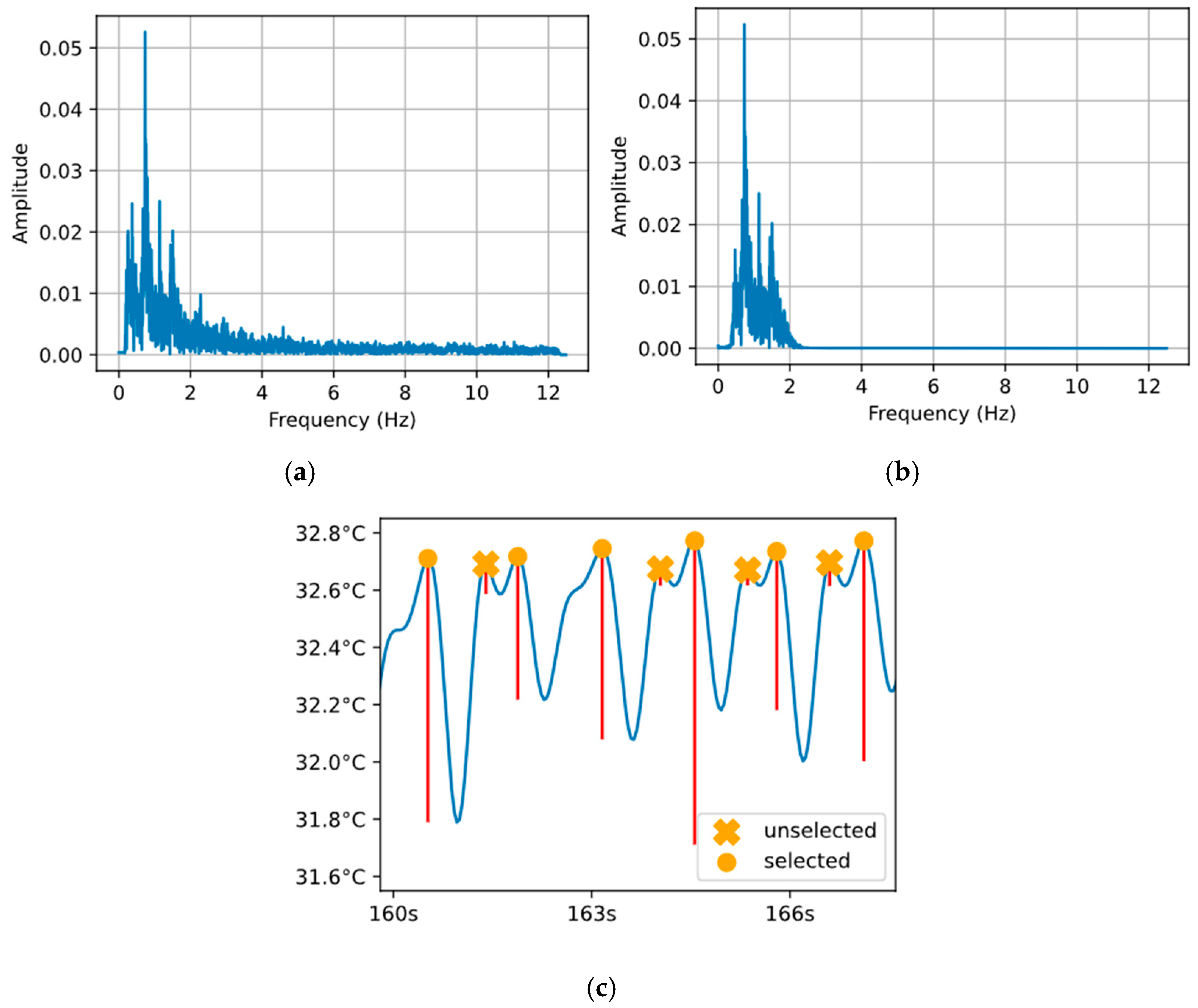

2.4. Detection of RR

2.5. Evaluation Index of the Model

3. Results and Discussion

3.1. Analysis of Test Results

3.1.1. Nose Object Detection Result

3.1.2. Comparison of Instance Segmentation Algorithms

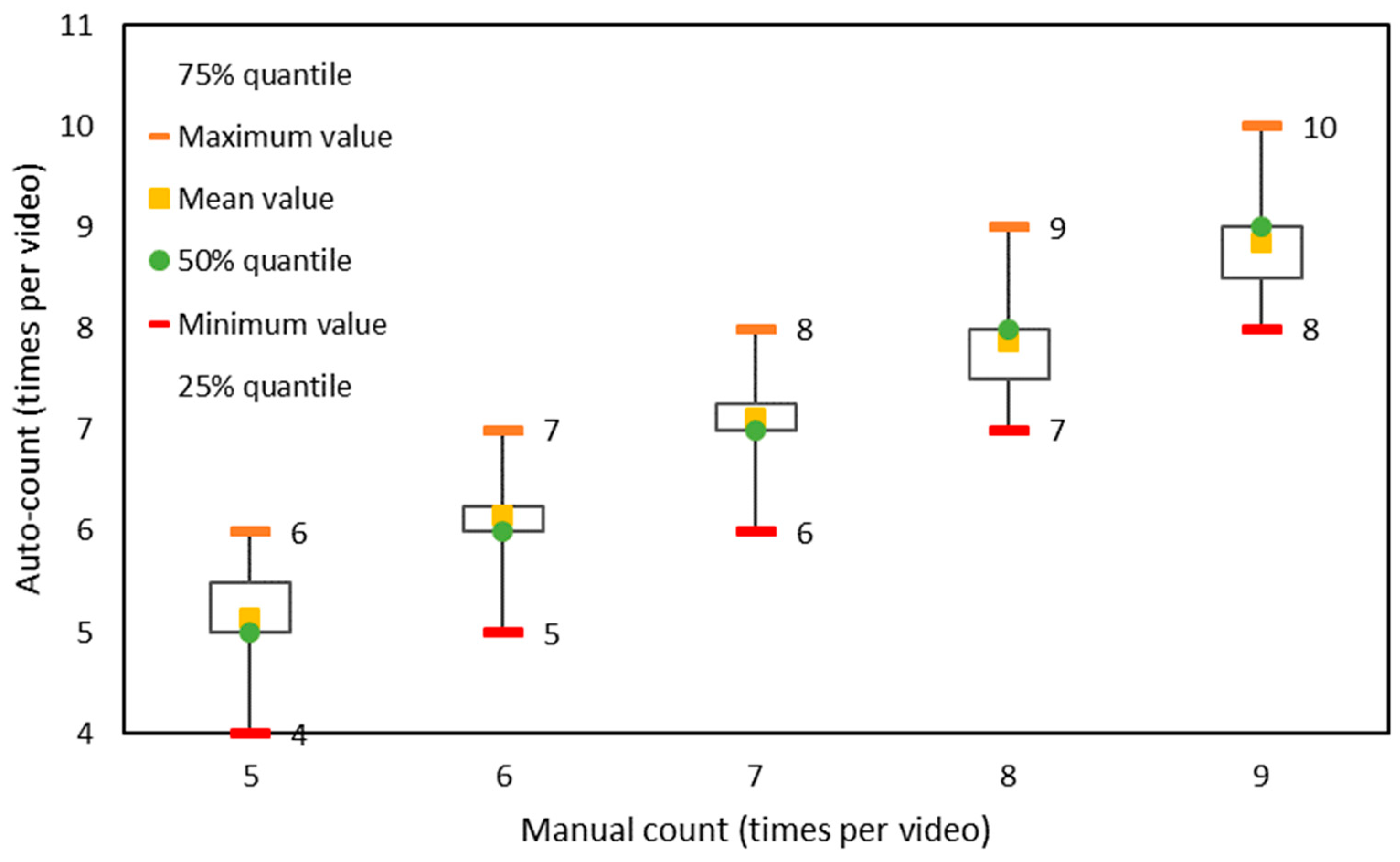

3.1.3. Results of RR Detecting

3.2. Discussion

3.2.1. Analysis of Nose–Nostril Detection

3.2.2. Analysis of the Influence of Head Swing on the Results of RR Detecting

3.2.3. Analysis of the Impact of Waveform Noise on the Results of RR Detecting

3.3. Comparison with Other Detection Methods

3.4. Outlook

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Algorithm | AP50 | AP75 | AP50–95 |
|---|---|---|---|
| YOLO v8 | 98.6 | 84.3 | 81.2 |

| Algorithm | AP50 |
|---|---|
| Mask2Former | 75.71 |
| Mask R-CNN | 67.32 |
| SOLOv2 | 68.27 |

| Cow Number | Total Detection (/Frame) | False Detection (/Frame) | Missed Detection (/Frame) | Proportion |
|---|---|---|---|---|
| 1386 | 4871 | 297 | 3 | 6.16% |
| 1563 | 3500 | 171 | 12 | 5.23% |
| 1568 | 4500 | 215 | 17 | 5.16% |
| 1809 | 3449 | 156 | 10 | 4.81% |
| 2855 | 3750 | 134 | 6 | 3.73% |
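The Proportion column in the table above is consistent with the share of falsely detected plus missed frames among all detected frames. That formula is inferred from the tabulated numbers, not stated in the text here, so the sketch below should be read as a check under that assumption; the function name `error_proportion` is hypothetical.

```python
def error_proportion(total, false_det, missed_det):
    """Return (false + missed) / total as a percentage.

    Assumed formula: it reproduces the tabulated Proportion values,
    but the source text does not define the column explicitly.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * (false_det + missed_det) / total


# Cow number -> (total, false, missed) frame counts, copied from the table.
rows = {
    1386: (4871, 297, 3),
    1563: (3500, 171, 12),
    1568: (4500, 215, 17),
    1809: (3449, 156, 10),
    2855: (3750, 134, 6),
}

for cow, counts in rows.items():
    print(cow, f"{error_proportion(*counts):.2f}%")
```

Run as written, this prints 6.16%, 5.23%, 5.16%, 4.81%, and 3.73%, matching the five rows of the table.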

| Cow Number | 1386 | 1809 | 2855 | 1563 | 1568 |
|---|---|---|---|---|---|
| Accuracy ω | 92.57% | 95.24% | 97.22% | 93.89% | 93.96% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, K.; Duan, Y.; Chen, J.; Li, Q.; Hong, X.; Zhang, R.; Wang, M. Detection of Respiratory Rate of Dairy Cows Based on Infrared Thermography and Deep Learning. Agriculture 2023, 13, 1939. https://doi.org/10.3390/agriculture13101939
