Real-Time Monitoring System of Seedling Amount in Seedling Box Based on Machine Vision

Abstract

1. Introduction

2. Design of Image Acquisition Platform

3. Camera Calibration

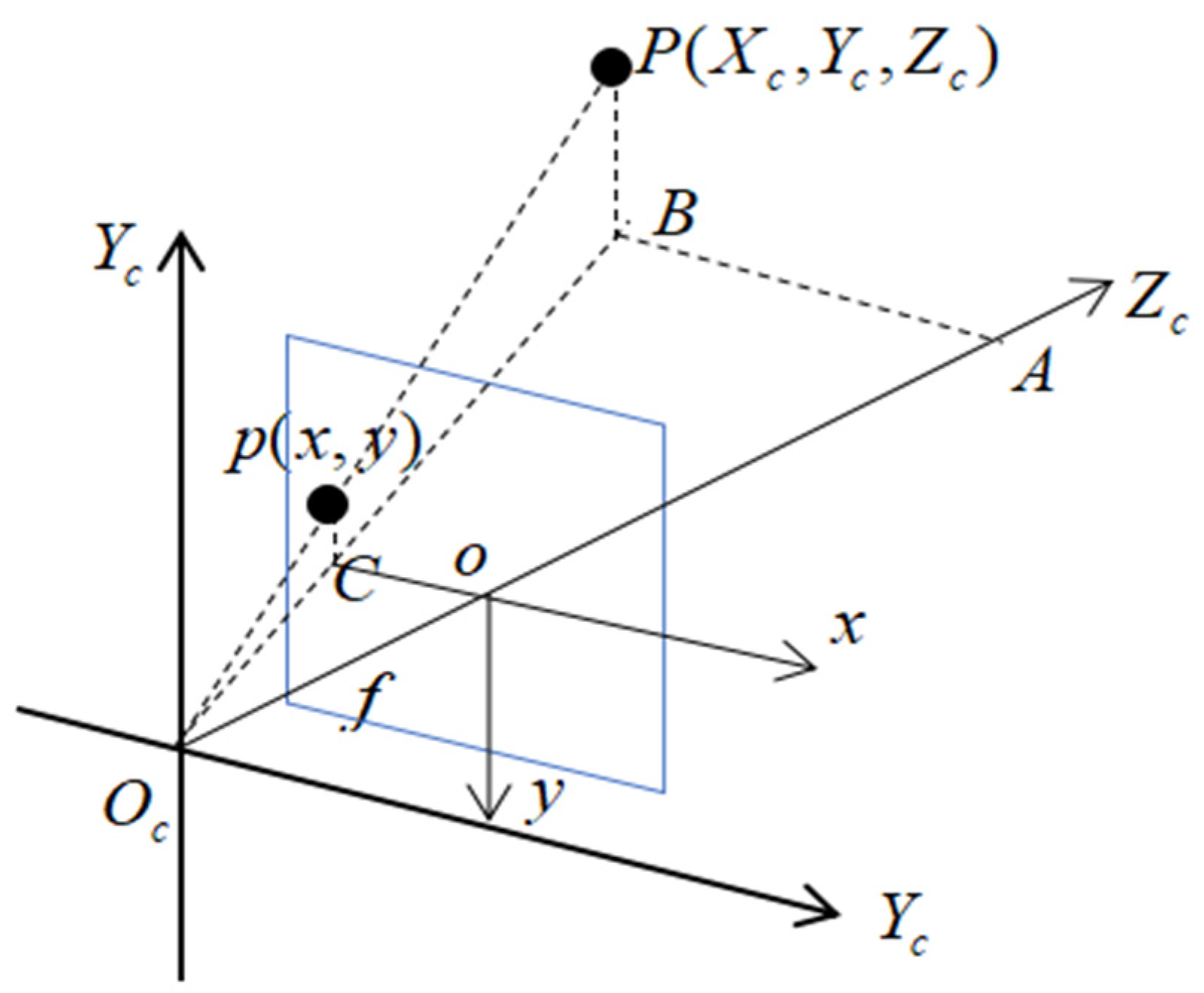

3.1. Development of Camera Model

3.1.1. Conversion from Pixel Coordinate System to Image Coordinate System

3.1.2. Conversion from Camera Coordinate System to Image Coordinate System

3.1.3. Conversion from World Coordinate System to Camera Coordinate System

3.1.4. Lens Distortion

3.1.5. Camera Calibration Method

4. Image Processing

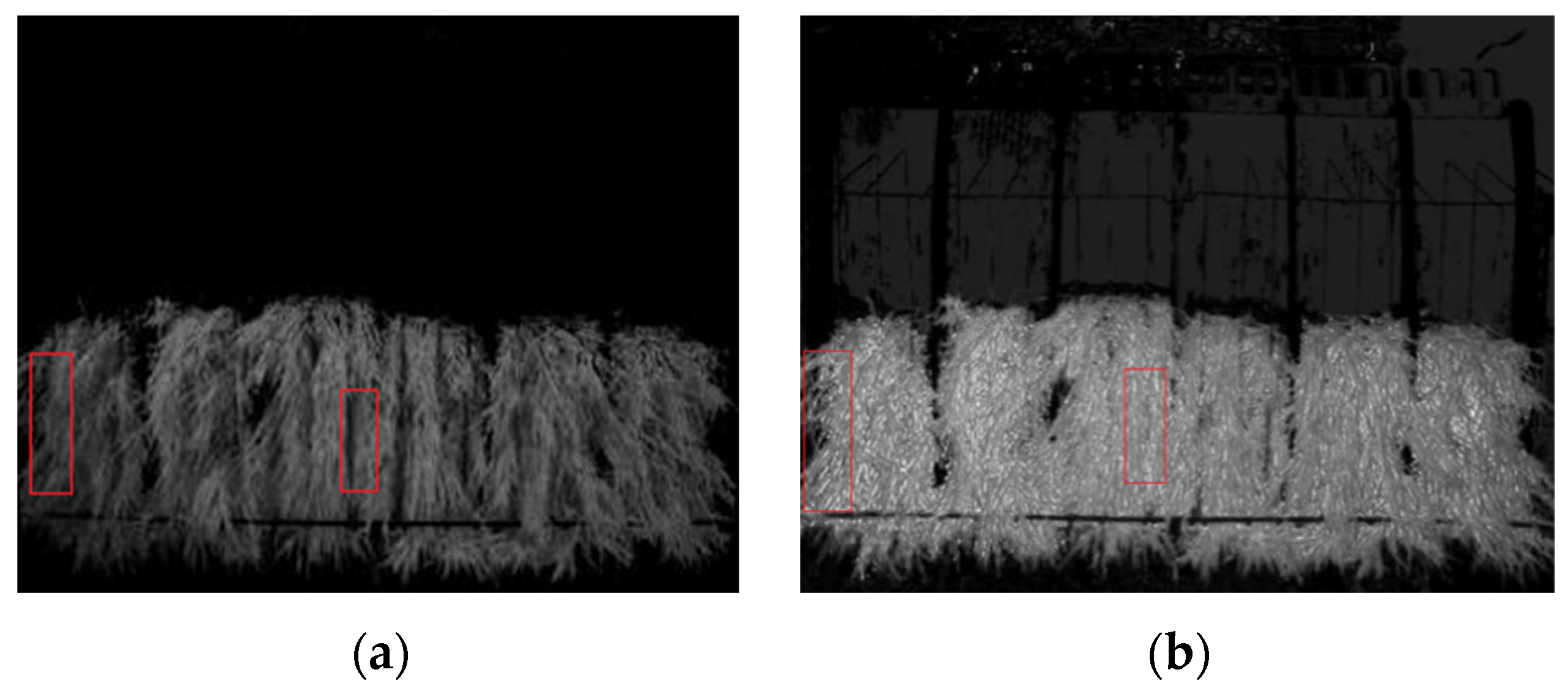

4.1. Image Calibration

4.2. Image Preprocessing

4.2.1. Greyscale Processing

4.2.2. Image Enhancement

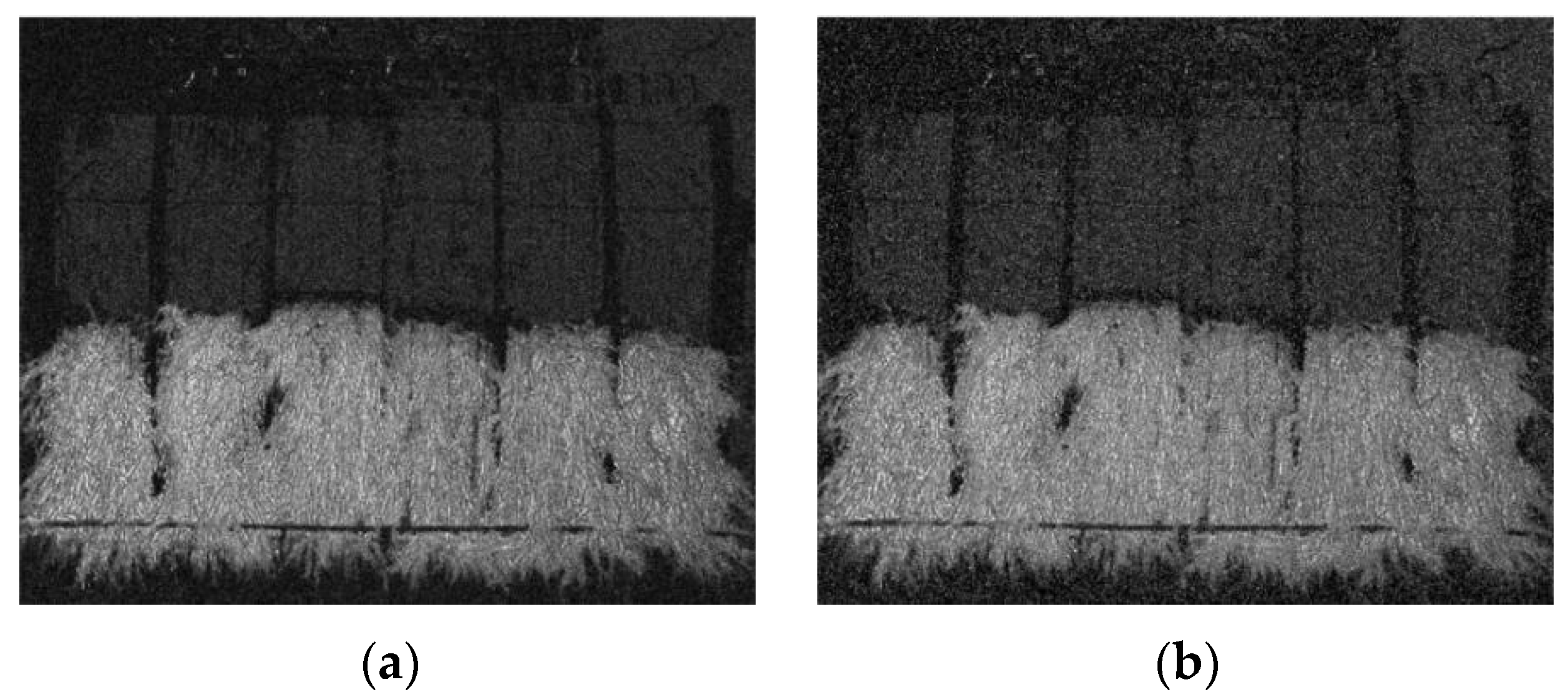

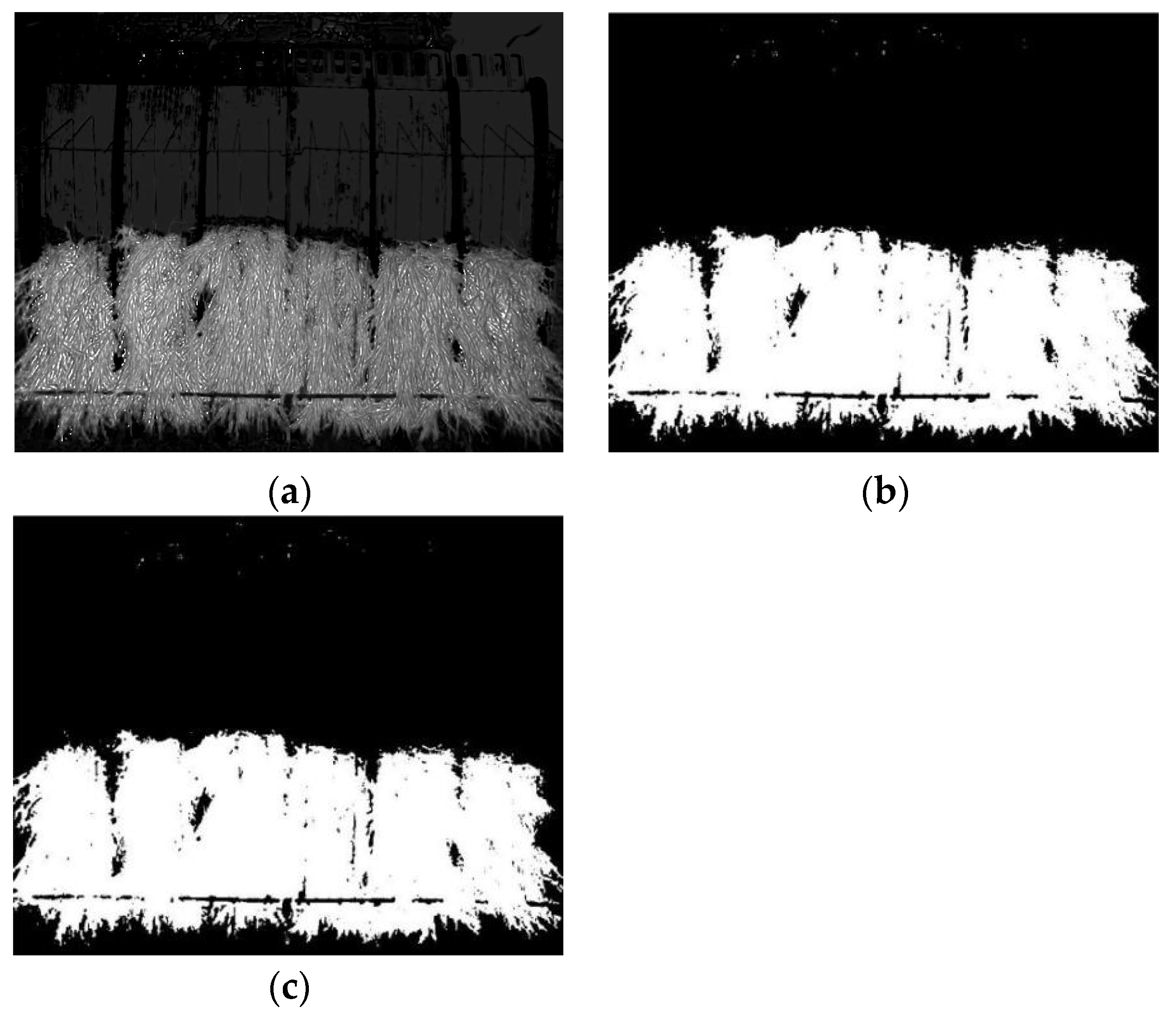

4.2.3. Background Segmentation

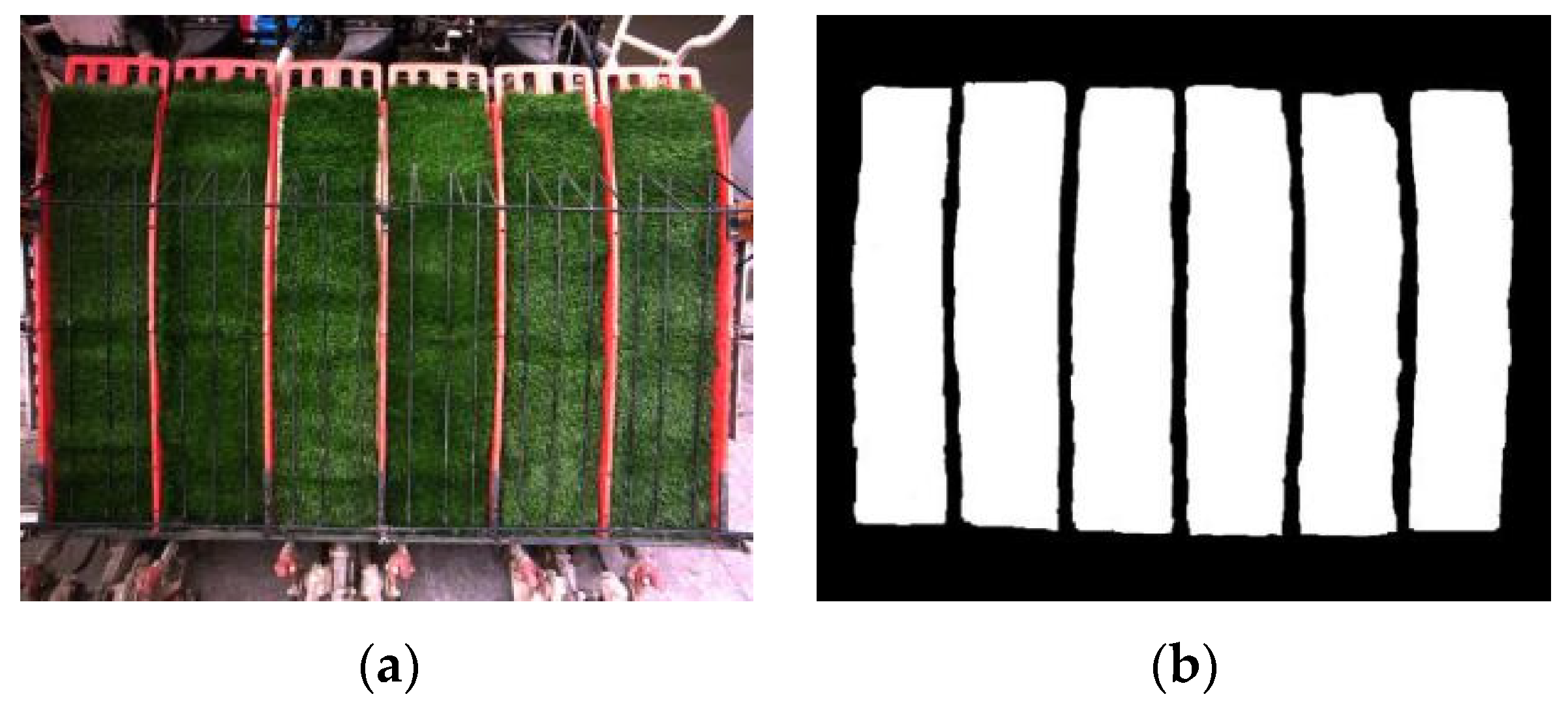
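
The Conclusions name Otsu's method as the background separation step. Below is a minimal pure-Python sketch of Otsu thresholding on 8-bit grey levels. It assumes the filtered image has already been flattened to a list of integers in 0–255. The function names `otsu_threshold` and `binarize` are illustrative and are not taken from the authors' software.

```python
def otsu_threshold(pixels):
    """Return the threshold t in 0..255 that maximizes the between-class
    variance, where class 0 holds pixels <= t and class 1 holds pixels > t."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * n for i, n in enumerate(hist))

    best_t, best_score = 0, -1.0
    w0 = 0    # running pixel count of class 0
    sum0 = 0  # running intensity sum of class 0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so the split is meaningless
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance without the constant 1 / total**2 factor,
        # which does not change which t maximizes it.
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:  # strict ">" keeps the first maximizing t
            best_t, best_score = t, score
    return best_t


def binarize(pixels, t):
    """Foreground (255) for pixels brighter than t, background (0) otherwise."""
    return [255 if p > t else 0 for p in pixels]


if __name__ == "__main__":
    # Synthetic grey levels with two clusters, at 10 and 200.
    pixels = [10] * 50 + [200] * 50
    t = otsu_threshold(pixels)
    print(t, binarize(pixels, t)[:3], binarize(pixels, t)[-3:])
```

With two equal clusters at 10 and 200, every threshold from 10 to 199 gives the same score. The strict comparison keeps the first one, so `t` is 10.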

4.3. Segmentation Algorithm for Rice Seedling Rows

4.3.1. Motion Region Segmentation Algorithm Based on Background Subtraction Method

4.3.2. Image Morphological Processing
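
Conclusion (3) combines background subtraction with morphological processing to isolate the seedling region of each row. The sketch below illustrates that combination under simple assumptions:

- a fixed reference background image (for example, a frame of the empty seedling box, which is a hypothetical choice);
- an absolute-difference threshold;
- a 3 × 3 binary opening.

The reference list also cites Gaussian-mixture background models. This sketch does not implement them.

```python
def subtract_background(frame, background, thresh):
    """Binary mask: 1 where |frame - background| exceeds thresh, else 0."""
    return [[1 if abs(f - b) > thresh else 0 for f, b in zip(f_row, b_row)]
            for f_row, b_row in zip(frame, background)]


def _window(mask, r, c):
    """Values of the 3x3 neighborhood of (r, c) that lie inside the image."""
    h, w = len(mask), len(mask[0])
    return [mask[i][j]
            for i in range(r - 1, r + 2)
            for j in range(c - 1, c + 2)
            if 0 <= i < h and 0 <= j < w]


def erode(mask):
    """3x3 binary erosion: keep a pixel only if its whole window is 1."""
    return [[1 if all(_window(mask, r, c)) else 0 for c in range(len(mask[0]))]
            for r in range(len(mask))]


def dilate(mask):
    """3x3 binary dilation: set a pixel if any pixel in its window is 1."""
    return [[1 if any(_window(mask, r, c)) else 0 for c in range(len(mask[0]))]
            for r in range(len(mask))]


def opening(mask):
    """Erosion then dilation: removes specks smaller than the 3x3 element."""
    return dilate(erode(mask))


if __name__ == "__main__":
    background = [[0] * 7 for _ in range(7)]
    frame = [[0] * 7 for _ in range(7)]
    for r in range(2, 5):
        for c in range(2, 5):
            frame[r][c] = 100   # a 3x3 foreground object
    frame[0][6] = 100           # an isolated noise pixel
    mask = subtract_background(frame, background, thresh=30)
    cleaned = opening(mask)
    print(sum(map(sum, mask)), sum(map(sum, cleaned)))
```

The opening removes the single noisy pixel and restores the 3 × 3 object. This is why opening is a common clean-up step after a thresholded difference image.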

4.4. Image Analysis

4.4.1. Diagnosis of Separation of Seedling Pieces

4.4.2. Calculation of the Residual Seedling Amount in the Seedling Box

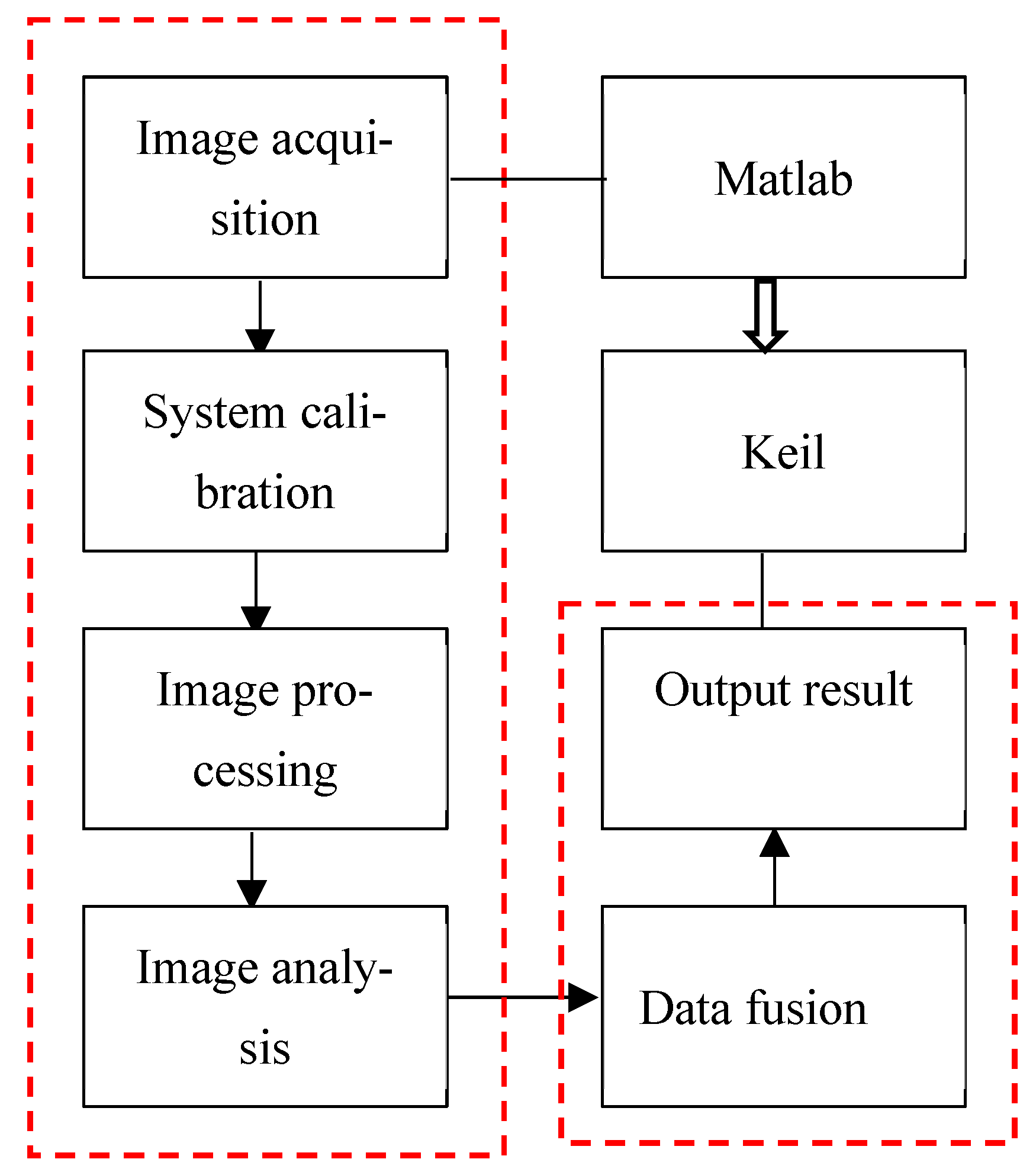

4.5. Design of Monitoring Software

4.5.1. Functional Analysis of the Software

4.5.2. Image Development Tools and Environment

4.5.3. Design of the Software Data Processing Flow

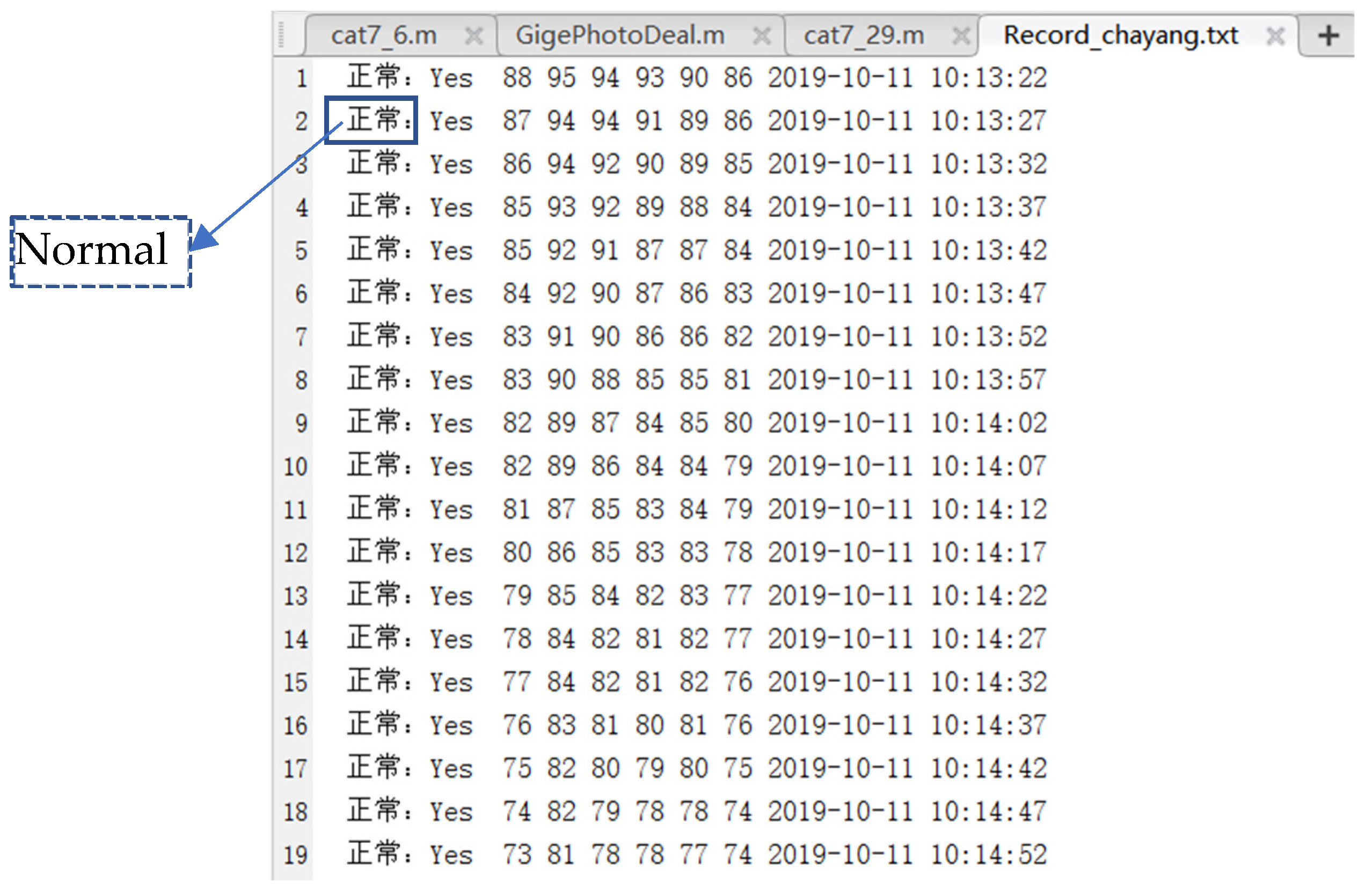

5. Field Test and Data Analysis

5.1. Performance Test for the Seedling Amount

5.2. Performance Test for Seedling Faults

5.3. Performance Test for the Plantable Distance of the Seedlings

5.4. Real-Time Test of the System

6. Conclusions

- (1) Based on the actual operating requirements, an image acquisition platform for the seedlings was developed that moves together with the seedling box. Because the seedling box and the platform are stationary relative to each other, the platform can readily acquire high-quality images and avoids the need for complex image processing methods.

- (2) By fusing navigation data with the remaining seedling amount in the seedling box, the distance that can still be planted with the remaining seedlings in each row can be obtained in real time. Based on this distance, the person supplying the seedlings can decide whether the seedling box needs to be reloaded when the rice transplanter passes the seedling site in the field.

- (3) The seedlings in the seedling box obscure the partitions of the box lattice. To address this problem, background subtraction was combined with image morphological processing to segment the seedling image and separate the region of interest from the background. The individual region of each row was thus obtained, which facilitates the calculation of the remaining seedling amount and the fault diagnosis of each row.

- (4) The combination of median filtering and the Otsu method was selected after comparison with other filtering and background separation methods. The resulting method offers good real-time performance and robustness.

- (5) The experimental results show that the image processing time is below 1.5 s and the relative error of the seedling amount is below 3%. This indicates that the monitoring system can accurately diagnose seedling-piece faults and monitor the remaining seedling amount in each row.
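
Conclusion (2) converts each row's remaining seedling amount into a distance that can still be planted and compares it with navigation data. The sketch below shows one simple way to do that arithmetic. The linear model and every parameter are hypothetical assumptions for illustration, not the paper's formulas or values. The assumed parameters are:

- the full mat length;
- the mat length consumed per planting pick;
- the hill spacing;
- the distance the transplanter will travel before it next passes the seedling site, as reported by navigation.

```python
from dataclasses import dataclass


@dataclass
class RowConfig:
    # Hypothetical placeholder parameters, not values from the paper.
    mat_length_mm: float      # length of a full seedling mat in one row
    feed_per_pick_mm: float   # mat length consumed by one planting pick
    hill_spacing_mm: float    # travel distance between successive hills


def plantable_distance_m(remaining_pct, cfg):
    """Distance (m) a row can still plant under an assumed linear model:
    remaining mat length / feed per pick = picks left, one hill spacing each."""
    remaining_len_mm = remaining_pct / 100.0 * cfg.mat_length_mm
    picks_left = remaining_len_mm / cfg.feed_per_pick_mm
    return picks_left * cfg.hill_spacing_mm / 1000.0


def rows_needing_reload(remaining_pcts, cfg, distance_to_next_supply_m):
    """Indices of rows that would run out before the transplanter next passes
    the seedling site (distance assumed to come from the navigation system)."""
    return [i for i, pct in enumerate(remaining_pcts)
            if plantable_distance_m(pct, cfg) < distance_to_next_supply_m]


if __name__ == "__main__":
    cfg = RowConfig(mat_length_mm=600, feed_per_pick_mm=12, hill_spacing_mm=150)
    remaining = [50.0, 12.0, 80.0]  # per-row remaining amount (%) from images
    for pct in remaining:
        print(pct, round(plantable_distance_m(pct, cfg), 2))
    print(rows_needing_reload(remaining, cfg, distance_to_next_supply_m=5.0))
```

With these placeholder numbers, 50% of a 600 mm mat yields 25 picks at 150 mm spacing, or 3.75 m. Rows 0 and 1 would therefore be flagged for a 5 m trip.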

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhou, J.; He, Y.Q. Research progress on navigation path planning of agricultural machinery. Trans. CSAM 2021, 52, 1–14. (In Chinese)

- Yoshisada, N.; Hidefumi, S.; Katsuhiko, T.; Masahiro, S.; Kyo, K.; Ken, T. An autonomous rice transplanter guided by global positioning system and inertial measurement unit. J. Field Robot. 2009, 26, 537–548.

- Yin, X.; Du, J.; Noguchi, N.; Yang, T.X.; Jin, C.Q. Development of autonomous navigation system for rice transplanter. Int. J. Agric. Biol. Eng. 2018, 11, 89–94.

- Lohan, S.K.; Narang, M.K.; Singh, M.; Singh, D.; Sidhu, H.S.; Singh, S.; Dixit, A.K.; Karkee, M. Design and development of remote-control system for two-wheel paddy transplanter. J. Field Robot. 2021, 39, 177–187.

- Li, J.Y.; Shang, Z.J.; Li, R.F.; Cui, B.B. Adaptive sliding mode path tracking control of unmanned rice transplanter. Agriculture 2022, 12, 1225.

- Nagasaka, Y.; Umeda, N.; Kanetai, Y.; Taniwaki, K.; Sasaki, Y. Autonomous guidance for rice transplanting using global positioning and gyroscopes. Comput. Electron. Agric. 2004, 43, 223–234.

- Nagasaka, Y.; Kitagawa, H.; Mizushima, A.; Noguchi, N.; Saito, H.; Kobayashi, K. Unmanned rice-transplanting operation using a GPS-Guided rice transplanter with long mat-type hydroponic seedlings. Agric. Eng. Int. CIGR Ejournal 2007, 9, 1–10.

- Kohei, T.; Akio, O.; Motomu, K.; Hiroyuki, N.; Manabu, N.; Tatsumi, K. Development and field test of rice transplanters for long mat type hydroponic rice seedlings. J. JSAM 1997, 59, 87–98.

- Li, Y.X.; He, Z.Z.; Li, X.C.; Ding, Y.F.; Li, G.H.; Liu, Z.H.; Tang, S.; Wang, S.H. Quality and field growth characteristics of hydroponically grown long-mat seedlings. Agron. J. 2016, 108, 1581–1591.

- John Deere. Available online: https://www.deere.com/en/technology-products/precision-ag-technology/data-management/operations-center/ (accessed on 19 March 2022).

- Trimble. Available online: https://agriculture.trimble.com/product/farmer-core/ (accessed on 12 February 2022).

- CLAAS. Available online: https://www.claas.cn/products/claas/easy-2018/connectedmachines (accessed on 18 June 2018).

- Zhang, F.; Zhang, W.; Luo, X.; Zhang, Z.; Lu, Y.; Wang, B. Developing an IoT-Enabled cloud management platform for agricultural machinery equipped with automatic navigation systems. Agriculture 2022, 12, 310.

- Cao, R.Y.; Li, S.C.; Wei, S.; Ji, Y.H.; Zhang, M.; Li, H. Remote monitoring platform for multi-machine cooperation based on Web-GIS. Trans. CSAM 2017, 48, 52–57.

- Lan, Y.B.; Zhao, D.N.; Zhang, Y.F.; Zhu, J.K. Exploration and development prospect of eco-unmanned farm modes. Trans. CSAE 2021, 37, 312–327.

- LianShi Navigation. Available online: https://allynav.cn/nongyejiance (accessed on 24 March 2022).

- Wang, A.C.; Zhang, W.; Wei, X.H. A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240.

- Pantazi, X.E.; Moshou, D.; Tamouridou, A.A. Automated leaf disease detection in different crop species through image features analysis and One Class Classifiers. Comput. Electron. Agric. 2019, 156, 96–104.

- Picon, A.; Alvarez-Gila, A.; Seitz, M.; Ortiz-Barredo, A.; Echazarra, J.; Johannes, A. Deep convolutional neural networks for mobile capture device-based crop disease classification in the wild. Comput. Electron. Agric. 2019, 161, 280–290.

- Qiu, Z.J.; Zhao, N.; Zhou, L.; Wang, M.C.; Yang, L.L.; Fang, H.; He, Y.; Liu, Y.F. Vision-based moving obstacle detection and tracking in paddy field using improved Yolov3 and deep SORT. Sensors 2020, 20, 4082.

- Montalvo, M.; Pajares, G.; Guerrero, J.M.; Romeo, J.; Guijarro, M.; Ribeiro, A.; Ruz, J.J.; Cruz, J.M. Automatic detection of crop rows in maize fields with high weeds pressure. Expert Syst. Appl. 2012, 39, 11889–11897.

- Rovira-Mas, F.; Zhang, Q.; Reid, J.F.; Will, J.D. Hough-transform-based vision algorithm for crop row detection of an automated agricultural vehicle. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2005, 219, 999–1010.

- Dionisio, A.; Angela, R.; César, F.Q.; José, D. Using depth cameras to extract structural parameters to assess the growth state and yield of cauliflower crops. Comput. Electron. Agric. 2016, 122, 67–73.

- Jean, F.I.N.; Umezuruike, L.O. Machine learning applications to non-destructive defect detection in horticultural products. Biosyst. Eng. 2020, 189, 60–83.

- Lim, J.H.; Choi, K.H.; Cho, J.; Lee, H.K. Integration of GPS and monocular vision for land vehicle navigation in urban area. Int. J. Automot. Technol. 2017, 18, 345–356.

- Zhang, Q.; Chen, M.E.S.J.; Li, B. A visual navigation algorithm for paddy field weeding robot based on image understanding. Comput. Electron. Agric. 2017, 143, 66–78.

- Chen, X.Y.; Wang, S.A.; Zhang, B.Q.; Luo, L. Multi-feature fusion tree trunk detection and orchard mobile robot localization using camera/ultrasonic sensors. Comput. Electron. Agric. 2018, 147, 91–108.

- Opiyo, S.; Okinda, C.; Zhou, J.; Mwangi, E.; Makange, N. Medial axis-based machine-vision system for orchard robot navigation. Comput. Electron. Agric. 2021, 185, 106153–106164.

- Liu, F.C.; Yang, Y.; Zeng, Y.M.; Liu, Z.Y. Bending diagnosis of rice seedling lines and guidance line extraction of automatic weeding equipment in paddy field. Mech. Syst. Signal Process. 2020, 142, 106791.

- Yutaka, K.; Kenji, I. Dual-spectral camera system for paddy rice seedling row detection. Comput. Electron. Agric. 2008, 63, 49–56.

- José, M.G.; José, J.R.; Gonzalo, P. Crop rows and weeds detection in maize fields applying a computer vision system based on geometry. Comput. Electron. Agric. 2017, 142, 461–472.

- Wang, S.S.; Zhang, W.Y.; Wang, X.S.; Yu, S.S. Recognition of rice seedling rows based on row vector grid classification. Comput. Electron. Agric. 2021, 190, 106454.

- Ma, Z.H.; Yin, C.; Du, X.Q.; Zhao, L.J.; Lin, L.P.; Zhang, G.F.; Wu, C.Y. Rice row tracking control of crawler tractor based on the satellite and visual integrated navigation. Comput. Electron. Agric. 2022, 197, 106935.

- Zhang, Q.; Wang, J.H.; Li, B. A method for extracting the centerline of seedling column based on YOLOv3 object detection. Trans. CSAM 2020, 51, 34–43.

- Zhang, Z.R. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.J.; Burgos-Artizzu, X.P.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

- Mei, N.N.; Wang, Z.J. Moving object detection algorithm based on Gaussian mixture model. CED 2012, 33, 3149–3153.

- Stauffer, C.; Grimson, W. Adaptive background mixture models for real-time tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Fort Collins, CO, USA, 23–25 June 1999.

| Methods | Source Image | Applications | Results or Accuracy | References |
|---|---|---|---|---|
| Row vector grid classification | Color images | Recognition of rice seedling rows | 89.22% | [32] |
| System geometry | RGB images | Crop rows and weeds detection in maize fields | Maximum deviation for path following: 7.7 cm for crops with height 14 cm | [31] |
| Standardization, thresholding, AND operation | NIR and red images | Rice seedling row detection | Successfully detects the seedling rows under cloudy conditions | [30] |
| Deep learning-based method | Video stream | Bending diagnosis of rice seedling lines and guidance line extraction | / | [29] |
| Gabor filter, PCA, K-means clustering | Raw color images | Orchard robot navigation | RMSE of the lateral deviation: 45.3 cm; maximum trajectory tracking error: 14.6 mm; SD: 6.8 mm | [28] |
| YOLOv3 and deep SORT | RGB images | Moving obstacle detection | / | [20] |
| Multi-feature fusion | RGB images | Tree trunk detection and orchard mobile robot localization | Average localization error: 62 mm; recall: 92.14%; accuracy: 95.49% | [27] |

| Parameter | Value |
|---|---|
| Camera dimensions (mm) | 29 × 29 × 40 |
| Sensor format | 1/1.8″ |
| Pixel size (μm) | 4.5 × 4.5 |
| Shutter mode | Global shutter |
| Effective pixels | 2 million (1600 × 1200) |
| Resolution @ frame rate | 1600 × 1200 @ 60 fps / 1280 × 1024 @ 70 fps / 800 × 600 @ 118 fps |
| Data interface | RJ45 |
| Trigger mode | Continuous/soft/hard trigger |
| Exposure range (ms) | 0.016~91 |
| Data formats | Mono8p; RGB8p; YUV422p; BayerRG8/10p; BayerGB8/10p |
| Power supply (V) | DC 12 |
| Compatible sensor format (lens) | 1/1.8″ |
| Lens interface | C-Mount |
| Focal length (mm) | 6 |
| Focusing range (mm) | 100~ |
| Lens dimensions (mm) | 38.2 × 27.9 |

| Parameter | Output Result |
|---|---|
| Internal parameter matrix | [763.1827 0 0; −0.3839 814.2960 0; 631.7540 445.5892 1] |
| Radial distortion | $k_1 = -0.0957$, $k_2 = 0.2147$, $k_3 = -0.1740$ |
| Tangential distortion | $p_1 = -0.0100$, $p_2 = 0.0025$ |
| Mean reprojection error (pixels) | 0.2430 |
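
The sketch below shows how the calibrated parameters chain the world, camera, image and pixel coordinate systems. It projects a point with the pinhole model plus the radial ($k_1, k_2, k_3$) and tangential ($p_1, p_2$) distortion model used by common calibration toolboxes such as MATLAB's and OpenCV's.

- **Assumption (intrinsics):** the internal parameter matrix above is printed in MATLAB's transposed layout. This gives $f_x = 763.1827$, skew $s = -0.3839$, $c_x = 631.7540$, $f_y = 814.2960$ and $c_y = 445.5892$.
- **Assumption (extrinsics):** the rotation `R` and translation `t` are passed in as inputs, because their calibrated values are not listed.

```python
# Intrinsics from the table, assuming MATLAB's transposed layout
# [fx 0 0; s fy 0; cx cy 1].
FX, FY = 763.1827, 814.2960
SKEW = -0.3839
CX, CY = 631.7540, 445.5892
K1, K2, K3 = -0.0957, 0.2147, -0.1740   # radial distortion coefficients
P1, P2 = -0.0100, 0.0025                # tangential distortion coefficients


def world_to_camera(pw, R, t):
    """Rigid transform Pc = R * Pw + t (R: 3x3 nested list, t: 3-list)."""
    return [sum(R[i][j] * pw[j] for j in range(3)) + t[i] for i in range(3)]


def camera_to_pixel(pc):
    """Project a camera-frame point with Z > 0 to distorted pixel (u, v)."""
    X, Y, Z = pc
    if Z <= 0:
        raise ValueError("point must lie in front of the camera")
    x, y = X / Z, Y / Z                          # normalized image coordinates
    r2 = x * x + y * y
    radial = 1 + K1 * r2 + K2 * r2 ** 2 + K3 * r2 ** 3
    xd = x * radial + 2 * P1 * x * y + P2 * (r2 + 2 * x * x)
    yd = y * radial + P1 * (r2 + 2 * y * y) + 2 * P2 * x * y
    u = FX * xd + SKEW * yd + CX                 # image plane -> pixel grid
    v = FY * yd + CY
    return u, v


if __name__ == "__main__":
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    pc = world_to_camera([0.05, -0.02, 0.40], identity, [0.0, 0.0, 0.0])
    print(camera_to_pixel(pc))
```

A point on the optical axis maps exactly to the principal point $(c_x, c_y)$, which is a quick sanity check on the parameter layout.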

| Filtering Method | Processing Time, Gaussian Noise (s) | Processing Time, Salt-and-Pepper Noise (s) | Enhancement Effect |
|---|---|---|---|
| Mean filtering | 0.022 | 0.062 | Poor |
| Median filtering | 0.148 | 0.101 | Good |
| Adaptive median filtering | 1.538 | 1.271 | Optimal |
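
The Conclusions report that median filtering was retained. In the table above it gives a good enhancement effect at roughly a tenth of the adaptive median filter's processing time. A minimal 3 × 3 median filter in pure Python is sketched below. The kernel size and the border rule (clamping coordinates, which replicates edge pixels) are assumptions, since the paper's settings are not given here.

```python
from statistics import median


def median_filter_3x3(img):
    """Apply a 3x3 median filter to a 2-D list of grey levels.

    Border pixels use clamped (replicated) coordinates, so the output has the
    same size as the input.
    """
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [img[min(max(i, 0), h - 1)][min(max(j, 0), w - 1)]
                      for i in range(r - 1, r + 2)
                      for j in range(c - 1, c + 2)]
            row.append(median(window))  # middle of the 9 sorted values
        out.append(row)
    return out


if __name__ == "__main__":
    # Uniform grey image (10) with one salt (255) and one pepper (0) pixel.
    img = [[10] * 5 for _ in range(5)]
    img[2][2] = 255
    img[0][0] = 0
    for row in median_filter_3x3(img):
        print(row)
```

Because an isolated outlier never becomes the middle of nine sorted values, both impulse pixels are replaced by the surrounding grey level. This robustness to salt-and-pepper noise is what distinguishes median from mean filtering.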

| Actual Value (%) | Measured Value (%) | Relative Error (%) |
|---|---|---|
| 13.72 | 12.89 | −2.54 |
| 24.59 | 25.13 | 2.15 |
| 35.86 | 36.61 | 2.05 |
| 46.78 | 47.08 | 0.64 |
| 53.63 | 52.98 | −1.22 |
| 62.69 | 61.77 | −1.49 |
| 71.21 | 73.28 | 2.82 |
| 80.96 | 79.65 | −1.64 |
| 88.76 | 89.01 | 0.28 |
| 96.56 | 95.89 | −0.70 |
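
The relative errors in the table can be recomputed from the actual and measured values. Dividing the difference by the measured value reproduces entries such as 2.15% (24.59% vs. 25.13%) and 0.64% (46.78% vs. 47.08%). Dividing by the actual value, the more common convention, would instead give about 2.20% for the first pair. The sketch below therefore assumes the measured value as the denominator.

```python
def relative_error_pct(measured, actual):
    """Signed relative error in percent, taken with respect to the measured
    value (the convention that matches the tabulated entries checked here)."""
    return (measured - actual) / measured * 100.0


if __name__ == "__main__":
    for actual, measured in [(24.59, 25.13), (46.78, 47.08), (96.56, 95.89)]:
        print(actual, measured, round(relative_error_pct(measured, actual), 2))
```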

| Test No. | Processing Time (s) | Test No. | Processing Time (s) |
|---|---|---|---|
| 1 | 1.455876 | 11 | 1.387314 |
| 2 | 1.321593 | 12 | 1.399905 |
| 3 | 1.314241 | 13 | 1.176554 |
| 4 | 1.297413 | 14 | 1.400023 |
| 5 | 1.421732 | 15 | 1.441549 |
| 6 | 1.384999 | 16 | 1.335629 |
| 7 | 1.405213 | 17 | 1.364401 |
| 8 | 1.438917 | 18 | 1.365961 |
| 9 | 1.401312 | 19 | 1.396413 |
| 10 | 1.404978 | 20 | 1.401021 |
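
The twenty timings can be checked against the Conclusions' claim that processing takes less than 1.5 s per image. The short script below summarizes them using only Python's standard library. The 1.5 s budget is the bound stated in the Conclusions, and `summarize` is an illustrative helper.

```python
from statistics import mean, stdev

# Per-image processing times (s) from the table above, tests 1-20 in order.
TIMES_S = [
    1.455876, 1.321593, 1.314241, 1.297413, 1.421732,
    1.384999, 1.405213, 1.438917, 1.401312, 1.404978,
    1.387314, 1.399905, 1.176554, 1.400023, 1.441549,
    1.335629, 1.364401, 1.365961, 1.396413, 1.401021,
]


def summarize(times, budget_s=1.5):
    """Basic statistics plus whether every run finished within the budget."""
    return {
        "mean": mean(times),
        "min": min(times),
        "max": max(times),
        "stdev": stdev(times),
        "within_budget": max(times) < budget_s,
    }


if __name__ == "__main__":
    for key, value in summarize(TIMES_S).items():
        print(key, value)
```

The results are:

- mean: about 1.376 s;
- minimum: 1.177 s (test 13);
- maximum: 1.456 s (test 1).

All twenty runs therefore stay under 1.5 s.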

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, J.; Zhang, M.; Zhang, G.; Ge, D.; Li, M. Real-Time Monitoring System of Seedling Amount in Seedling Box Based on Machine Vision. Agriculture 2023, 13, 371. https://doi.org/10.3390/agriculture13020371
