Extraction of Canal Distribution Information Based on UAV Remote Sensing System and Object-Oriented Method

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Drainage System Image Data Acquisition

2.3. Methods

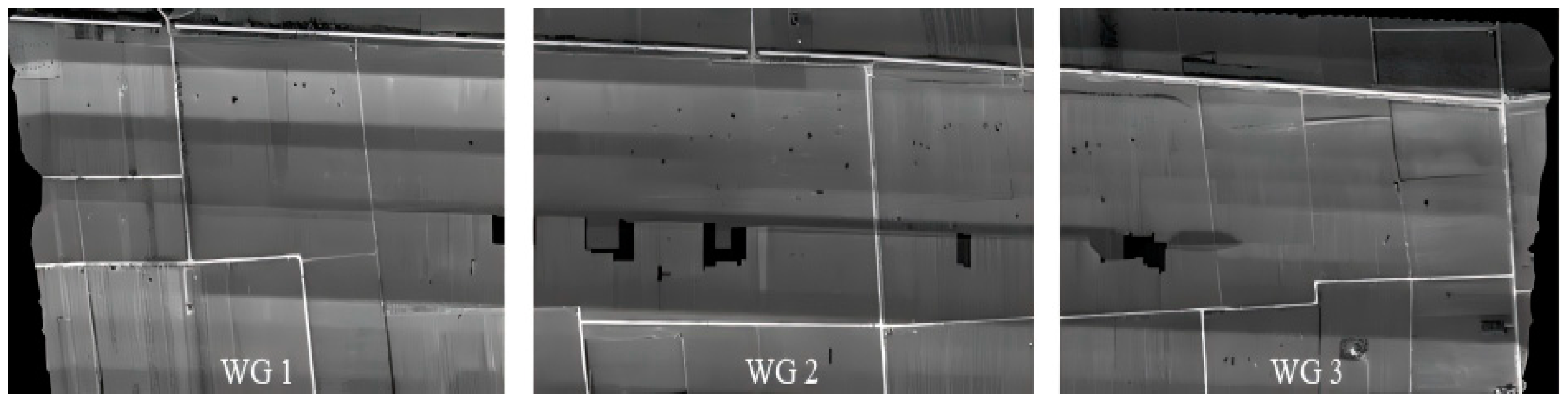

3. Image Preprocessing

3.1. Band Stacking

3.2. Image Cropping

3.3. Mask Processing

4. Research on the Extraction of Drainage System Distribution Information Based on Object-Oriented Approach

4.1. Research on the Extraction of Drainage System Distribution Information by Rule-Based Object-Oriented Method

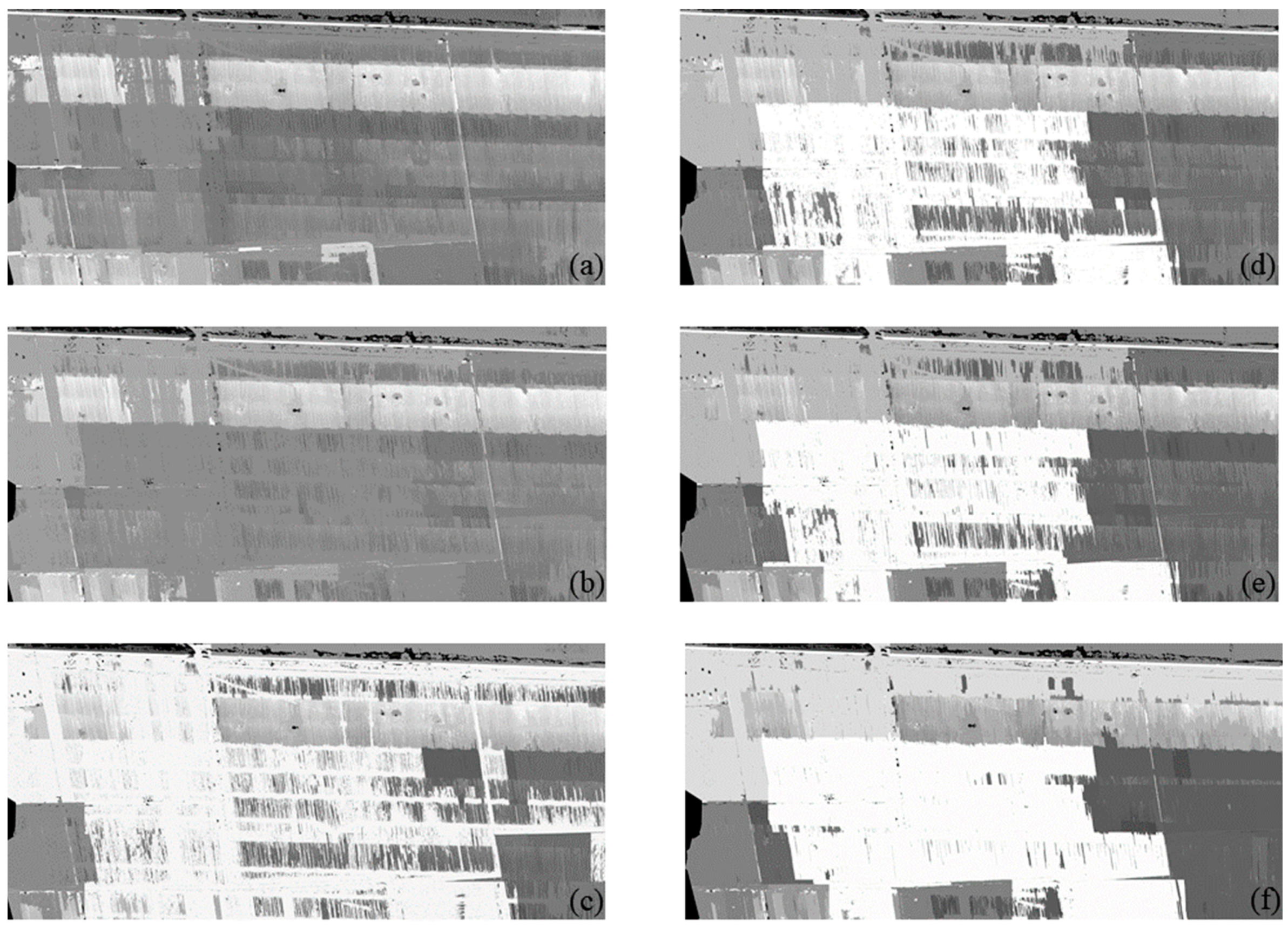

4.2. Remote Sensing Image Segmentation Methods and Determination of Segmentation Parameters

4.3. Feature Extraction and Rule Creation

4.3.1. Classification Based on Spectral Mean

4.3.2. Classification Based on Area and Rectangular Fit Rules

4.3.3. Extraction Based on Elongation and Compactness

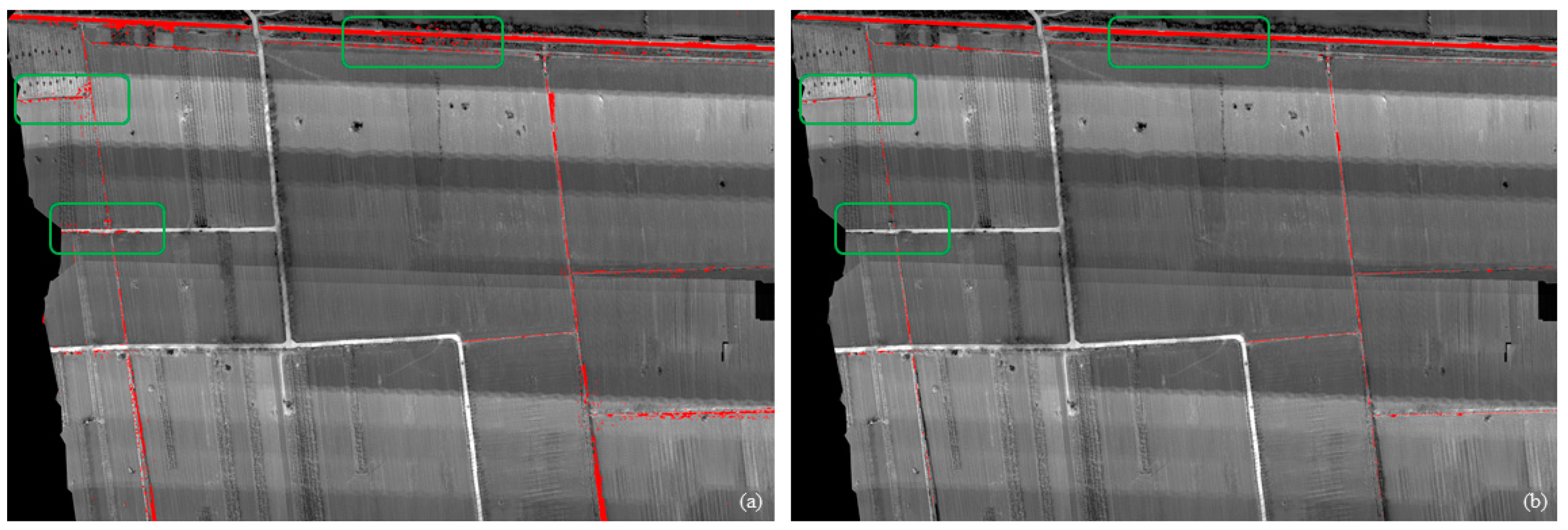

4.4. Image Post-Processing

5. Results and Analyses

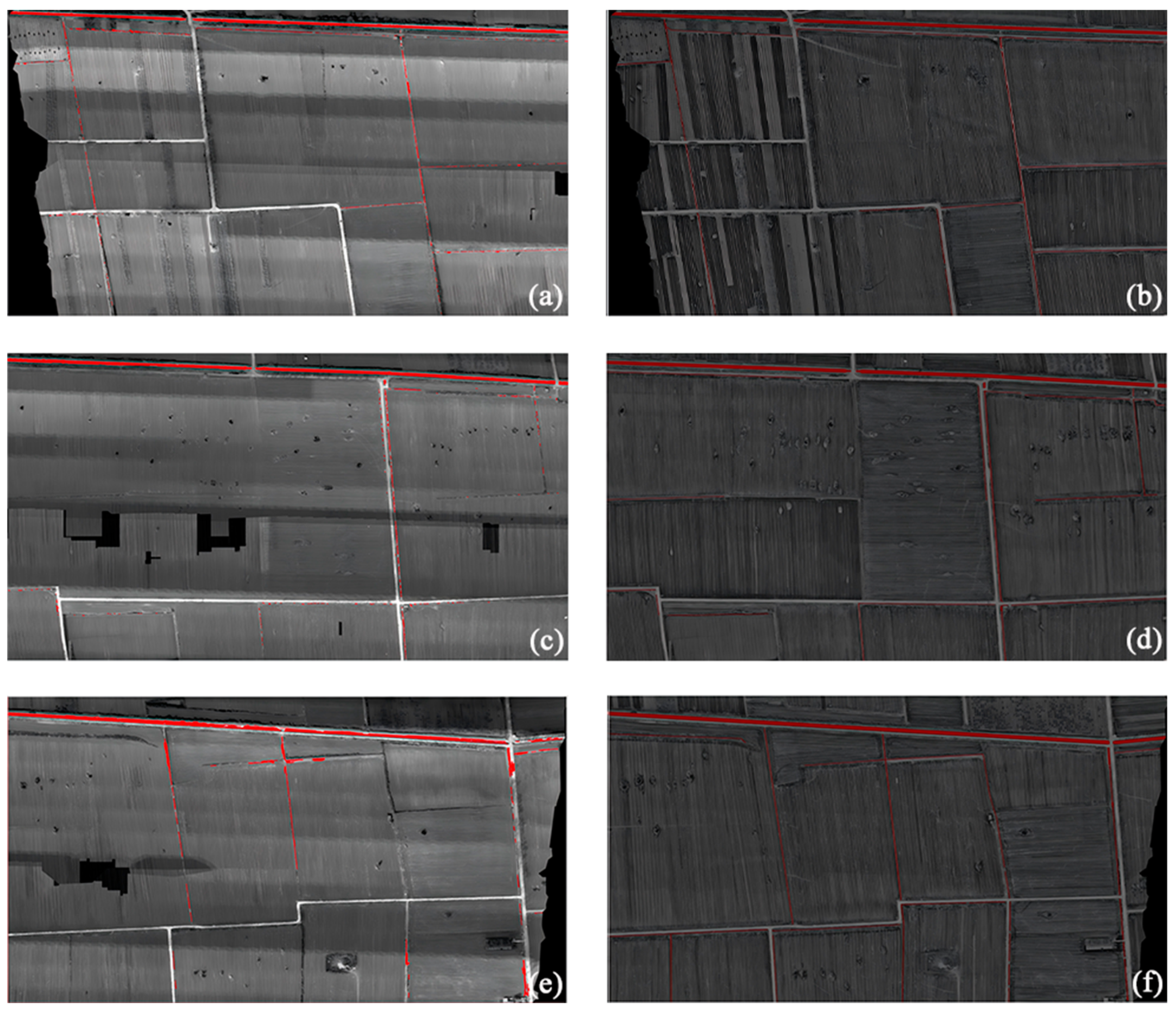

5.1. Drainage System Extraction Results

5.2. Precision Evaluation and Analysis

6. Discussion

6.1. Impact of Object-Oriented Approach Based on Extraction Accuracy

6.2. Effect of Canal Type on Extraction Accuracy

7. Conclusions and Recommendations

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Canal Type | Width of Canal Top (m) | Canal Depth (m) |
|---|---|---|
| Spur canal | 5.5 | 1.5 |
| Lateral canal | 2.77 | 0.9 |
| Lateral canal | 0.73 | 0.6 |
| Sublateral ditches | 0.51 | 0.45 |

| Parameter | Value (during Normal Operation) |
|---|---|
| Bare aircraft weight | 951 g |
| Maximum take-off weight | 1050 g |
| Hovering accuracy (windless or breezy conditions) | Vertical: ±0.1 m; ±0.5 m; ±0.1 m. Horizontal: ±0.3 m; ±0.5 m; ±0.1 m |
| Maximum flight time (windless environment) | 43 min |
| Maximum rotational angular velocity | 200°·s−1 |
| Maximum ascent speed | 6 m·s−1 |
| Maximum descent speed | 6 m·s−1 |
| Maximum horizontal flight speed | 15 m·s−1 |
| Image sensor | 1/2.8-inch CMOS; 5 million effective pixels |
| Equivalent focal length | 25 mm |
| Aperture | f/2.0 |
| Maximum photo size | 2592 × 1944 |
| Bands | Green (G): 560 nm ± 16 nm; Red (R): 650 nm ± 16 nm; Red Edge (RE): 730 nm ± 16 nm; Near Infrared (NIR): 860 nm ± 26 nm |

| Image | FP | FN | TP | Recall (%) | Precision (%) |
|---|---|---|---|---|---|
| WG 1 | 3,832,217 | 585,731 | 4,862,618 | 89.25 | 55.93 |
| WG 2 | 3,790,257 | 454,871 | 5,145,910 | 91.88 | 57.59 |
| WG 3 | 4,603,314 | 1,452,242 | 4,983,187 | 77.43 | 51.98 |
| Total | 12,225,788 | 2,492,844 | 14,991,715 | 85.74 | 55.08 |
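The recall and precision columns above can be reproduced from the pixel counts. The following is a minimal sketch, assuming the conventional definitions recall = TP / (TP + FN) and precision = TP / (TP + FP); the class name `PixelCounts` and the variable names are illustrative and do not come from the original study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PixelCounts:
    """Pixel-level confusion counts for one image (hypothetical helper)."""
    fp: int  # false positives: non-canal pixels labelled as canal
    fn: int  # false negatives: canal pixels that were missed
    tp: int  # true positives: canal pixels correctly extracted

    def recall(self) -> float:
        # Share of actual canal pixels that were extracted, in percent.
        return 100.0 * self.tp / (self.tp + self.fn)

    def precision(self) -> float:
        # Share of extracted pixels that are actually canal, in percent.
        return 100.0 * self.tp / (self.tp + self.fp)


# Counts copied from the per-image table above.
counts = {
    "WG 1": PixelCounts(fp=3_832_217, fn=585_731, tp=4_862_618),
    "WG 2": PixelCounts(fp=3_790_257, fn=454_871, tp=5_145_910),
    "WG 3": PixelCounts(fp=4_603_314, fn=1_452_242, tp=4_983_187),
}

# The "Total" row pools the pixel counts; it is not an average of the rows.
total = PixelCounts(
    fp=sum(c.fp for c in counts.values()),
    fn=sum(c.fn for c in counts.values()),
    tp=sum(c.tp for c in counts.values()),
)

for name, c in {**counts, "Total": total}.items():
    print(f"{name}: recall={c.recall():.2f}%  precision={c.precision():.2f}%")
```

Pooling the counts before dividing weights each image by its number of pixels, which is why the total recall and precision differ slightly from the plain mean of the three per-image values.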

| Canal Type | FP | FN | TP | Recall (%) | Precision (%) |
|---|---|---|---|---|---|
| Spur canal | 5,599,689 | 510,613 | 13,292,422 | 96.30 | 70.36 |
| Lateral canal | 2,450,368 | 886,100 | 971,910 | 52.31 | 28.40 |
| Sublateral ditches | 4,175,731 | 1,096,130 | 727,383 | 39.89 | 14.84 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huo, X.; Li, L.; Yu, X.; Qian, L.; Yin, Q.; Fan, K.; Pi, Y.; Wang, Y.; Wang, W.; Hu, X. Extraction of Canal Distribution Information Based on UAV Remote Sensing System and Object-Oriented Method. Agriculture 2024, 14, 1863. https://doi.org/10.3390/agriculture14111863
