Precision Weed Management for Straw-Mulched Maize Field: Advanced Weed Detection and Targeted Spraying Based on Enhanced YOLO v5s

Abstract

1. Introduction

2. Materials and Methods

2.1. Overview

2.2. Image Acquisition

2.2.1. Dataset Preparation

2.2.2. Image Annotation

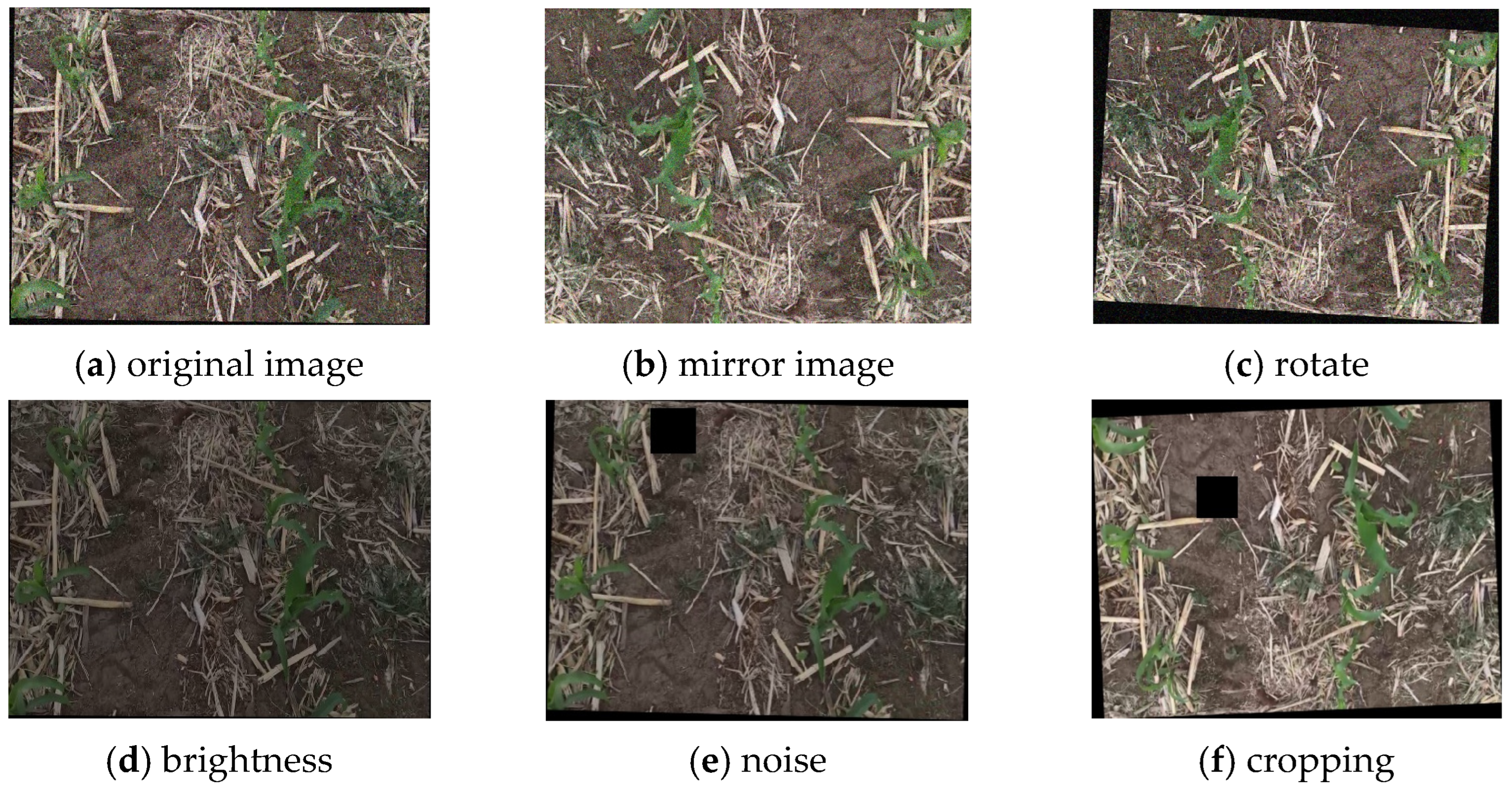

2.2.3. Image Preprocessing

2.3. Construction of Weed Detection Model

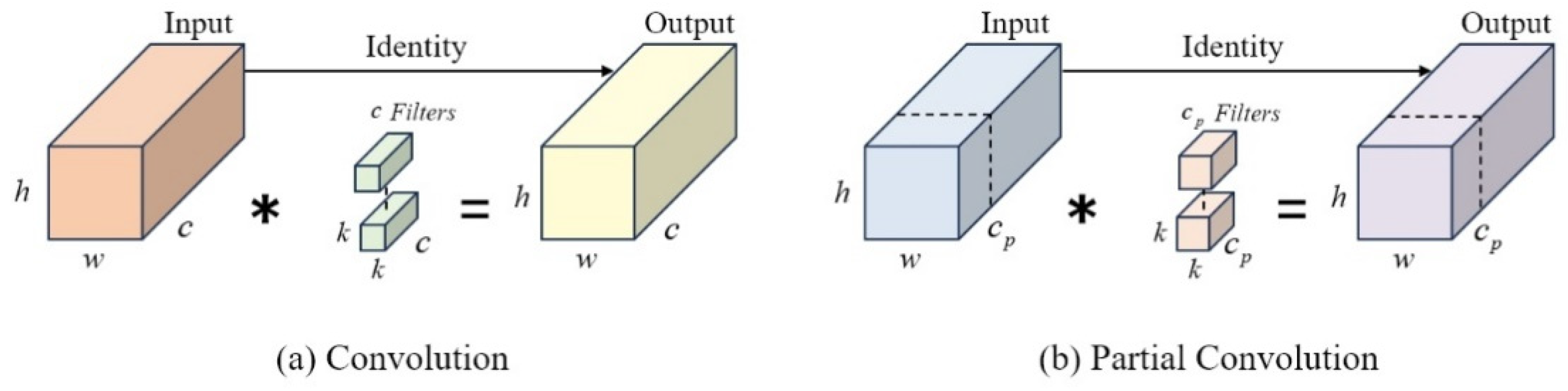

1. FasterNet feature extraction network

2. CBAM attention mechanism

3. Optimization of loss function
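According to the conclusions and the ablation table, the loss-function optimization uses WIoU, the Wise-IoU bounding-box loss of Tong et al. (2023), in place of YOLO v5s's default CIoU box loss. This text does not say which WIoU version or which "improvements" the authors used. The following is therefore only a minimal plain-Python sketch of the base form, WIoU v1, for a single box pair. It computes L = R_WIoU · (1 − IoU), with R_WIoU = exp(((x − x_gt)² + (y − y_gt)²) / (W_g² + H_g²)). Here (x, y) are box centres and W_g, H_g are the width and height of the smallest box enclosing both boxes. The function names are illustrative. The sketch evaluates the value only; during training, W_g and H_g are detached from the gradient.

```python
import math
from typing import Tuple

# A box is (x1, y1, x2, y2) with x2 > x1 and y2 > y1 (positive area assumed).
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def wiou_v1_loss(pred: Box, gt: Box) -> float:
    """WIoU v1 value for one predicted/ground-truth pair (value only, no autograd)."""
    l_iou = 1.0 - box_iou(pred, gt)

    # Squared distance between the two box centres.
    pcx, pcy = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    centre_dist_sq = (pcx - gcx) ** 2 + (pcy - gcy) ** 2

    # Size of the smallest box enclosing both boxes
    # (detached from the gradient graph in a real training loop).
    enc_w = max(pred[2], gt[2]) - min(pred[0], gt[0])
    enc_h = max(pred[3], gt[3]) - min(pred[1], gt[1])

    r_wiou = math.exp(centre_dist_sq / (enc_w ** 2 + enc_h ** 2))
    return r_wiou * l_iou


if __name__ == "__main__":
    print(wiou_v1_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes: 0.0
    print(wiou_v1_loss((0, 0, 2, 2), (1, 0, 3, 2)))  # (2/3) * exp(1/13), about 0.72
```

WIoU v2 and v3 multiply this v1 value by a focusing coefficient computed from IoU-loss statistics over training. A single-pair sketch like this one cannot show that part.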

2.4. Evaluation Index of Weed Identification Accuracy

2.5. Implementation Details

3. Results and Discussion of Weed Identification Accuracy

3.1. Performance Evaluation of Different Detection Models

3.2. Performance Evaluation of Different Network Architectures

3.3. Performance Evaluation of Improvement Modules

3.4. Effectiveness Evaluation of Weed Identification Model

3.4.1. Comparative Analysis of Training Results of Weed Identification

3.4.2. Comparative Analysis of Model Identification Application Effects

4. Application of Targeted Spraying Weeding

4.1. Targeted Spraying Device and Its Structure Composition

4.2. Localization and Position Extraction of Weed Targets

4.3. Evaluation of Model Application Effect

4.3.1. Evaluation of Weed Localization Error

4.3.2. Evaluation of Targeted Spraying Weeding Performance

4.4. Testing and Analysis of Targeted Spraying Application

4.4.1. Experimental Results and Analysis of Weed Localization Error

4.4.2. Experimental Results and Analysis of Targeted Spraying Weeding

5. Conclusions

- Weed Image Dataset Construction: We constructed a weed image dataset of straw-mulched corn fields at the corn seedling stage.

- Precise Weed Identification and Localization: We proposed an improved YOLO v5s model that incorporates the FasterNet feature extraction network, a Convolutional Block Attention Module (CBAM), and an improved Wise Intersection over Union (Wise-IoU, WIoU) loss function to optimize the network structure and training strategy. These changes emphasize weed feature information, reduce network redundancy and model memory, and improve the model's robustness and stability. Compared with the original network, our model's mean average precision (mAP) for weeds and corn seedlings decreases by 0.9 percentage points (from 92.3% to 91.4%). In exchange, its detection speed increases by 8.46% (from 70.92 to 76.92 f/s), and its model memory and computational load fall by 50.36% and 53.16%, respectively. These improvements strengthen the model's ability to recognize and extract small weed features. They also make the model better suited to deployment on edge devices for intelligent agriculture, where it supports accurate real-time weed detection.

- Targeted Spraying System: Based on the improved YOLO v5s model, we designed an intelligent targeted spraying system that applies herbicide only at the identified weed positions. Compared with conventional uniform spraying, this reduces herbicide usage, herbicide waste, and the associated environmental pollution, and it improves weeding efficiency.

- Application Effectiveness Verification: In targeted spraying experiments, the proposed method achieved an effective recognition rate (ERR) of 90% for weeds, an effective spraying rate (ESR) of 96.3%, a leakage (missed) spraying rate (LSR) of 13.3%, and a mistaken spraying rate (MSR) of 3.7%. These results indicate that the model is robust and that the spraying device is feasible for weed-targeted spraying.
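The ablation table attributes most of the memory and computation savings to the FasterNet backbone: YOLO v5s-FasterNet alone goes from 13.7 to 6.34 MB and from 15.8 to 7.1 GFLOPs. FasterNet (Chen et al., 2023, "Run, Don't Walk") is built around partial convolution (PConv). PConv applies a regular k × k convolution to only c_p of the c channels and passes the rest through unchanged. Its FLOPs are therefore h·w·k²·c_p² instead of h·w·k²·c². Its memory access is h·w·2c_p + k²·c_p² instead of h·w·2c + k²·c².

The sketch below evaluates those per-layer expressions for a hypothetical layer size, using the typical ratio c_p/c = 1/4. It is not expected to reproduce the whole-model reduction, which also depends on FasterNet's pointwise convolutions and the rest of the network.

```python
from typing import Tuple


def conv_cost(h: int, w: int, k: int, c: int) -> Tuple[int, int]:
    """(FLOPs, memory access) of a regular k x k conv with c input and c output channels."""
    flops = h * w * k * k * c * c
    memory = h * w * 2 * c + k * k * c * c  # input/output feature maps + weights
    return flops, memory


def pconv_cost(h: int, w: int, k: int, c: int, ratio: float = 0.25) -> Tuple[int, int]:
    """Same quantities for a partial convolution over only c_p = ratio * c channels.

    The remaining c - c_p channels are passed through untouched and add no cost here.
    """
    cp = int(c * ratio)
    flops = h * w * k * k * cp * cp
    memory = h * w * 2 * cp + k * k * cp * cp
    return flops, memory


if __name__ == "__main__":
    # Hypothetical layer: 40 x 40 feature map, 3 x 3 kernel, 64 channels.
    full = conv_cost(40, 40, 3, 64)
    partial = pconv_cost(40, 40, 3, 64)
    print(partial[0] / full[0])  # 0.0625, i.e. 1/16 of the FLOPs
    print(partial[1] / full[1])  # about 0.22, close to the 1/4 memory-access estimate
```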

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Equipment | Parameter | Value/Type |
|---|---|---|
| Industrial camera (The Imaging Source, Bremen, Germany) | dynamic range | 12 bit |
| | sensor type | CMOS (Pregius) |
| | data interface | USB 3.0, compatible with USB 2.0 |
| | resolution/(pixels × pixels) | 2048 × 1536 |
| | maximum frame rate/(f·s⁻¹) | 100 |
| | sensor size/(inch) | 1/1.8 |
| | pixel size/(μm × μm) | 3.45 × 3.45 |
| | exposure time/(s) | 5 × 10⁻⁶ to 4 |
| Lens (AZURE, Fuzhou, China) | resolution/(pixels) | 5 million |
| | image format/(inch) | 2/3 |
| | aperture | F1.6 |
| | focal length/(mm) | 8 |
| Canon camera (Canon, Tokyo, Japan) | sensor type | CMOS |
| | effective pixels | 20.2 million |
| | image size/(pixels × pixels) | 3648 × 2432 |
| | image format | JPEG |
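For weed localization, pixel positions need to be converted to ground distances. The industrial-camera and lens parameters above are enough to make that conversion under simple assumptions. The sketch below uses an ideal pinhole model. It assumes, hypothetically, a camera looking straight down at flat ground from a known height, with the principal point at the image centre and no lens distortion. This text does not give the mounting height or the authors' actual calibration procedure, so the height used here is illustrative only.

```python
from typing import Tuple

# Values from the equipment table (industrial camera with the 8 mm lens).
IMAGE_W, IMAGE_H = 2048, 1536  # pixels
PIXEL_SIZE_MM = 3.45e-3        # 3.45 um square pixels
FOCAL_MM = 8.0                 # focal length


def ground_mm_per_pixel(height_mm: float) -> float:
    """Ground distance covered by one pixel at a given camera height (pinhole model)."""
    return PIXEL_SIZE_MM * height_mm / FOCAL_MM


def pixel_to_ground(u: float, v: float, height_mm: float) -> Tuple[float, float]:
    """Offset (X, Y) in mm of pixel (u, v) from the point directly below the camera.

    Assumes a downward-facing, distortion-free camera over flat ground with the
    principal point at the image centre. X follows image columns and Y follows
    image rows; mapping these onto the sprayer's axes needs a real calibration.
    """
    scale = ground_mm_per_pixel(height_mm)
    return (u - IMAGE_W / 2) * scale, (v - IMAGE_H / 2) * scale


def footprint_mm(height_mm: float) -> Tuple[float, float]:
    """Width and height of the ground area covered by one full image."""
    scale = ground_mm_per_pixel(height_mm)
    return IMAGE_W * scale, IMAGE_H * scale


if __name__ == "__main__":
    h = 500.0  # hypothetical mounting height in mm, not taken from the paper
    print(footprint_mm(h))                # about 441.6 mm x 331.2 mm
    print(pixel_to_ground(1500, 400, h))  # ground offset of an example box centre
```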

| Models | P/% | R/% | mAP/% | FPS/(f·s⁻¹) | Parameters/MB |
|---|---|---|---|---|---|
| Fast R-CNN | 52.71 | 78.73 | 71.89 | 18.02 | 108 |
| SSD | 88.29 | 79.56 | 88.29 | 33.66 | 91.1 |
| YOLO v4 | 90.1 | 87.3 | 92.2 | 48.08 | 17.6 |
| YOLO v5s | 90.3 | 87.5 | 92.3 | 70.92 | 13.7 |
| YOLO v8 | 89.4 | 85.3 | 91 | 94.43 | 7.01 |
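The formulas of Section 2.4 are not reproduced in this text. The P, R, and mAP columns follow the standard object-detection definitions:

- precision P = TP/(TP + FP);
- recall R = TP/(TP + FN);
- AP is the area under one class's precision-recall curve;
- mAP averages AP over the classes, here weeds and corn seedlings.

A detection counts as a true positive when it matches a ground-truth box above an IoU threshold, commonly 0.5. The sketch below illustrates this in plain Python with all-point interpolation. YOLO v5's own evaluation code interpolates somewhat differently, so the sketch is not meant to reproduce the table exactly. The detections in the example are hypothetical.

```python
from typing import List, Sequence, Tuple


def pr_curve(detections: Sequence[Tuple[float, bool]],
             n_ground_truth: int) -> Tuple[List[float], List[float]]:
    """Cumulative precision and recall for one class.

    detections: (confidence, is_true_positive) pairs; a detection is a true positive
    if it matched a not-yet-matched ground-truth box at the chosen IoU threshold.
    """
    ordered = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precision, recall = [], []
    for _, is_tp in ordered:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))    # P = TP / (TP + FP)
        recall.append(tp / n_ground_truth)  # R = TP / (TP + FN) = TP / #ground truth
    return precision, recall


def average_precision(precision: List[float], recall: List[float]) -> float:
    """All-point interpolated AP: area under the monotone precision envelope."""
    mrec = [0.0] + recall + [1.0]
    mpre = [1.0] + precision + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """mAP: unweighted mean of per-class AP (e.g. weeds and corn seedlings)."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    # Hypothetical detections for one class with 2 ground-truth objects.
    p, r = pr_curve([(0.9, True), (0.8, False), (0.7, True)], n_ground_truth=2)
    print(average_precision(p, r))  # 0.8333... (= 5/6)
```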

| Models | P/% | R/% | mAP/% | FPS/(f·s⁻¹) | Parameters/MB |
|---|---|---|---|---|---|
| FasterNet | 90.3 | 87.5 | 92.3 | 74.6 | 6.34 |
| MobileViT | 85.0 | 78.2 | 84.9 | 65.7 | 2.24 |
| EfficientViT | 87.5 | 84.6 | 89.8 | 67.5 | 3.24 |

| Models | mAP/% | FPS/(f·s⁻¹) | Parameters/MB | GFLOPs |
|---|---|---|---|---|
| Original YOLO v5s | 92.3 | 70.92 | 13.7 | 15.8 |
| YOLO v5s-FasterNet | 91 | 74.63 | 6.34 | 7.1 |
| YOLO v5s-CBAM | 92.5 | 64.10 | 15.1 | 16.9 |
| YOLO v5s-WIoU | 92.4 | 83.33 | 13.7 | 15.8 |
| YOLO v5s-FasterNet-CBAM | 91.2 | 68.03 | 6.8 | 7.4 |
| YOLO v5s-FasterNet-WIoU | 91.3 | 75.76 | 6.46 | 7.1 |
| YOLO v5s-CBAM-WIoU | 92.4 | 72.46 | 30.2 | 16.9 |
| YOLO v5s-FasterNet-CBAM-WIoU | 91.4 | 76.92 | 6.8 | 7.4 |
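The headline figures in the conclusions can be checked against the first and last rows of this table. The sketch below reproduces that arithmetic. Speed, model memory, and GFLOPs are compared as relative changes. mAP is already a percentage, so its change is an absolute difference in percentage points.

```python
# Rows "Original YOLO v5s" and the final FasterNet + CBAM + WIoU model from the table above.
baseline = {"mAP": 92.3, "fps": 70.92, "size_mb": 13.7, "gflops": 15.8}
improved = {"mAP": 91.4, "fps": 76.92, "size_mb": 6.8, "gflops": 7.4}


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative = reduction)."""
    return (new - old) / old * 100.0


speed_gain = percent_change(baseline["fps"], improved["fps"])
size_cut = -percent_change(baseline["size_mb"], improved["size_mb"])
gflops_cut = -percent_change(baseline["gflops"], improved["gflops"])
# mAP is itself a percentage, so report its change in percentage points.
map_drop_pp = baseline["mAP"] - improved["mAP"]

print(f"speed +{speed_gain:.2f}%")       # speed +8.46%
print(f"model size -{size_cut:.2f}%")    # model size -50.36%
print(f"GFLOPs -{gflops_cut:.2f}%")      # GFLOPs -53.16%
print(f"mAP -{map_drop_pp:.1f} points")  # mAP -0.9 points
```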

| Index | Definition | Formula |
|---|---|---|
| Effective recognition rate (ERR) | The proportion of weed targets correctly identified as weeds (n) relative to the total number of weeds (N) within the experimental area. | $\mathrm{ERR} = \frac{n}{N} \times 100\%$ |
| Relative spraying rate (RSR) | The proportion of sprayed targets (n1 + n1′) relative to the total number of targets identified as weeds (n + n3′) within the experimental area. | $\mathrm{RSR} = \frac{n_1 + n_1'}{n + n_3'} \times 100\%$ |
| Effective spraying rate (ESR) | The proportion of weed targets that were correctly identified as weeds and sprayed (n1) relative to the total number of sprayed targets (n1 + n1′) within the experimental area. | $\mathrm{ESR} = \frac{n_1}{n_1 + n_1'} \times 100\%$ |
| Leakage (missed) spraying rate (LSR) | The proportion of unsprayed weed targets (n2 + n3 + n4) relative to the total number of weeds (N) within the experimental area. | $\mathrm{LSR} = \frac{n_2 + n_3 + n_4}{N} \times 100\%$ |
| Mistaken spraying rate (MSR) | The proportion of corn plants incorrectly identified as weeds and sprayed (n1′) relative to the total number of sprayed targets (n1 + n1′) within the experimental area. | $\mathrm{MSR} = \frac{n_1'}{n_1 + n_1'} \times 100\%$ |

| Coordinate of Weed Placement Position | Coordinate of Actual Spraying Position | Relative Error/% | RMSE |
|---|---|---|---|
| (−6.3, −9.9) | (−6.508, −9.915) | 3.4 | 0.12 |
| (7.3, −5.8) | (7.189, −5.822) | 1.9 | |
| (−26.9, 11.2) | (−27.388, 11.171) | 2.1 | |
| (18.6, 13.9) | (18.184, 13.724) | 3.5 | |

| Category | N(N′) | n(n′) | n1(n1′) | n2(n2′) | n3(n3′) | n4(n4′) | ERR/% | ESR/% | LSR/% | MSR/% |
|---|---|---|---|---|---|---|---|---|---|---|
| Weeds | 30 | 27 | 26 | 1 | 1 | 2 | 90 | 96.3 | 13.3 | 3.7 |
| Corn | 30 | 29 | 1 | 0 | 1 | 0 | 96.7 | | | |
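The spraying indices follow directly from the index definitions and the counts in the weeds and corn rows above. The sketch below computes them in plain Python. The parameter names n1_p and n3_p stand for n1′ and n3′ and are only illustrative. With the reported counts, it recovers the ERR, ESR, LSR, and MSR values stated in the conclusions. By construction, ESR + MSR = 100%, because both share the denominator n1 + n1′.

```python
from typing import Dict


def spraying_indices(N: int, n: int, n1: int, n2: int, n3: int, n4: int,
                     n1_p: int, n3_p: int) -> Dict[str, float]:
    """Spraying indices (in %) from the target counts in the index table.

    N: weeds in the test area; n: weeds correctly identified as weeds;
    n1: identified weeds that were sprayed; n2 + n3 + n4: weed targets left unsprayed;
    n1_p (n1'): corn plants misidentified as weeds and sprayed;
    n3_p (n3'): together with n, the number of targets identified as weeds.
    """
    sprayed = n1 + n1_p
    return {
        "ERR": n / N * 100,
        "RSR": sprayed / (n + n3_p) * 100,
        "ESR": n1 / sprayed * 100,
        "LSR": (n2 + n3 + n4) / N * 100,
        "MSR": n1_p / sprayed * 100,
    }


if __name__ == "__main__":
    # Counts from the weeds row (N, n, n1, n2, n3, n4) and corn row (n1', n3').
    idx = spraying_indices(N=30, n=27, n1=26, n2=1, n3=1, n4=2, n1_p=1, n3_p=1)
    for name in ("ERR", "ESR", "LSR", "MSR"):
        print(name, f"{idx[name]:.1f}")  # ERR 90.0, ESR 96.3, LSR 13.3, MSR 3.7
```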


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, X.; Wang, Q.; Qiao, Y.; Zhang, X.; Lu, C.; Wang, C. Precision Weed Management for Straw-Mulched Maize Field: Advanced Weed Detection and Targeted Spraying Based on Enhanced YOLO v5s. Agriculture 2024, 14, 2134. https://doi.org/10.3390/agriculture14122134