Research on the Corn Stover Image Segmentation Method via an Unmanned Aerial Vehicle (UAV) and Improved U-Net Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Materials and Image Acquisition

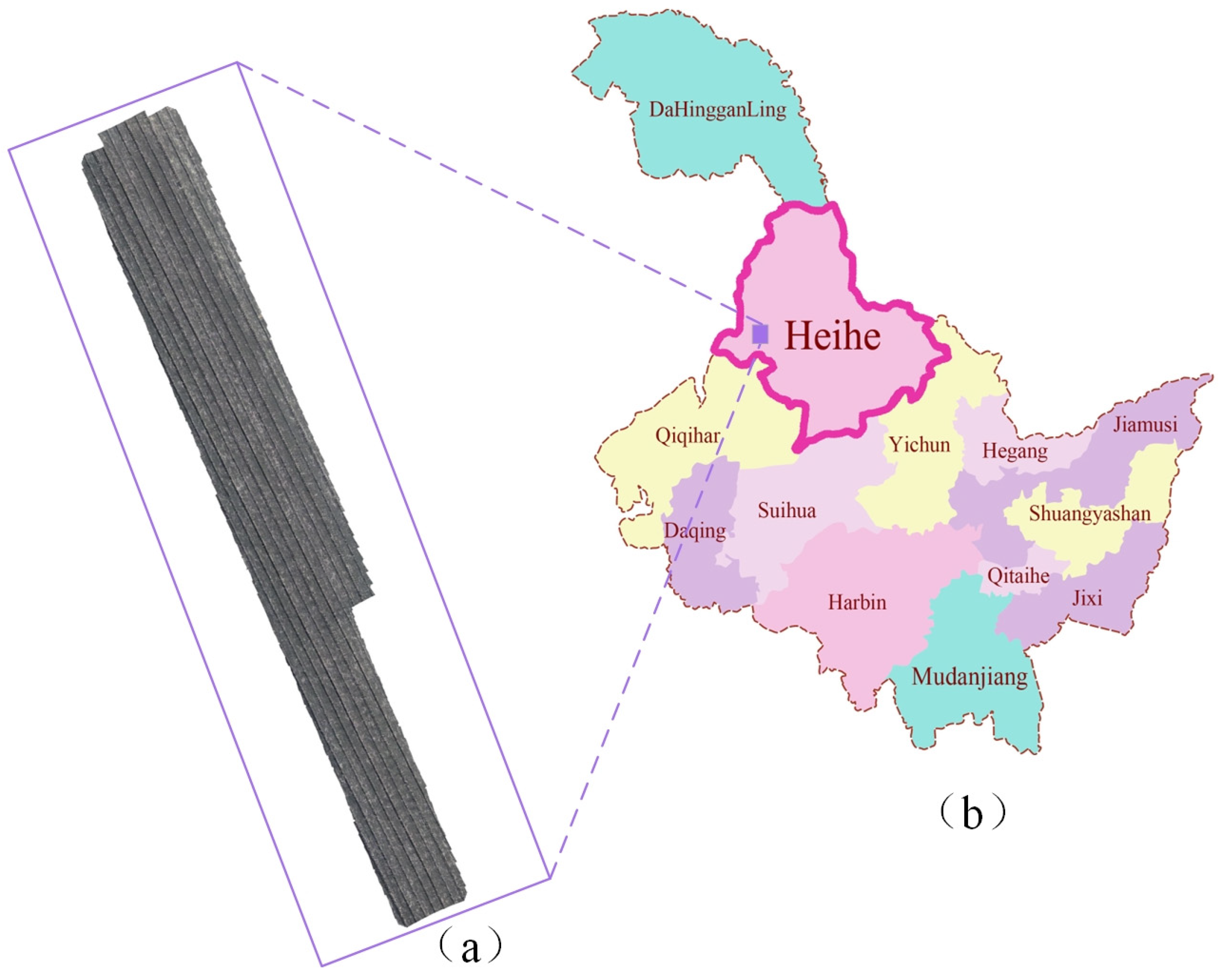

2.1.1. Experimental Materials

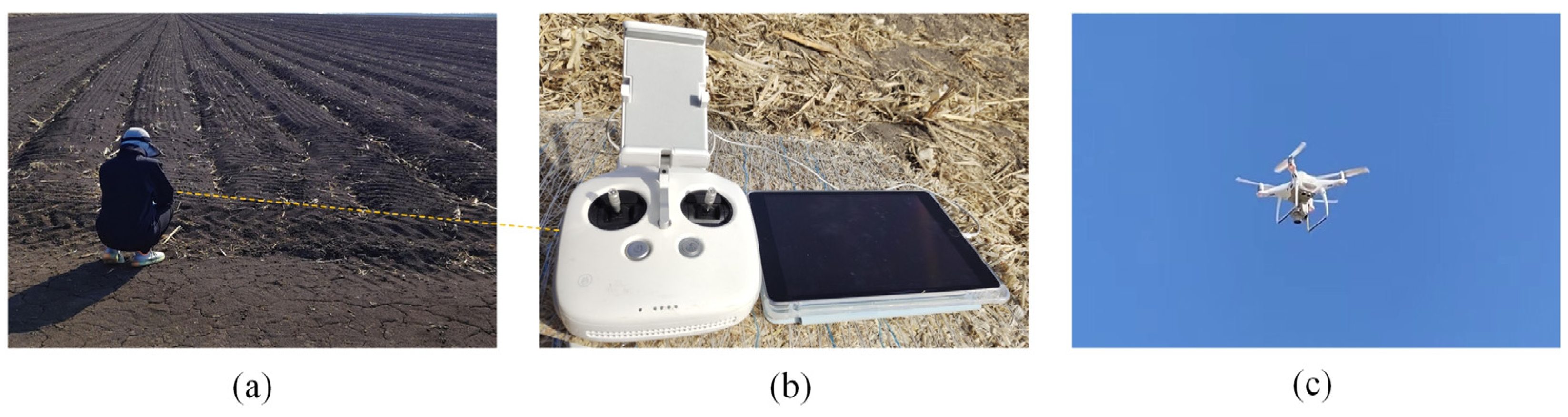

2.1.2. Image Acquisition

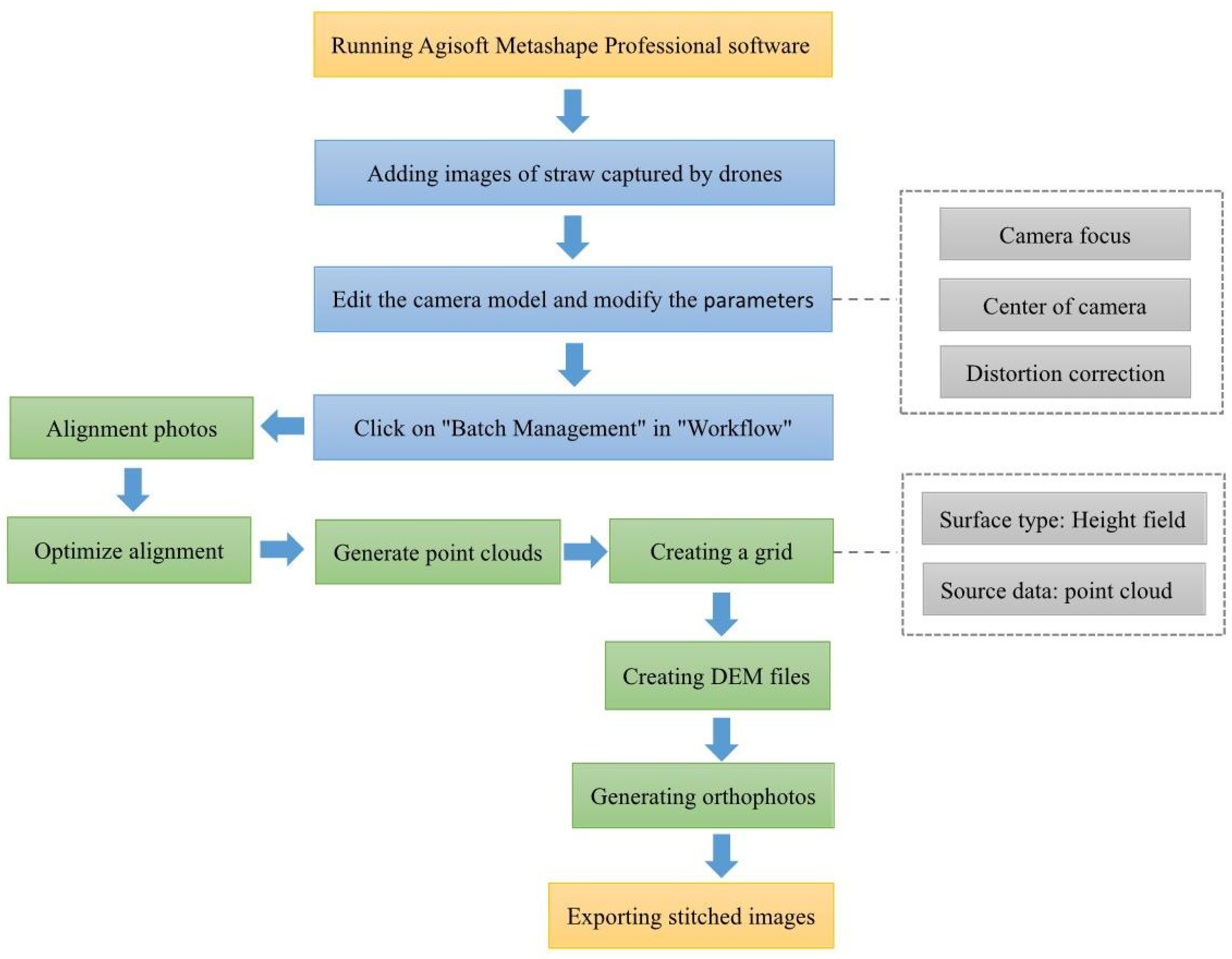

2.2. Image Preprocessing

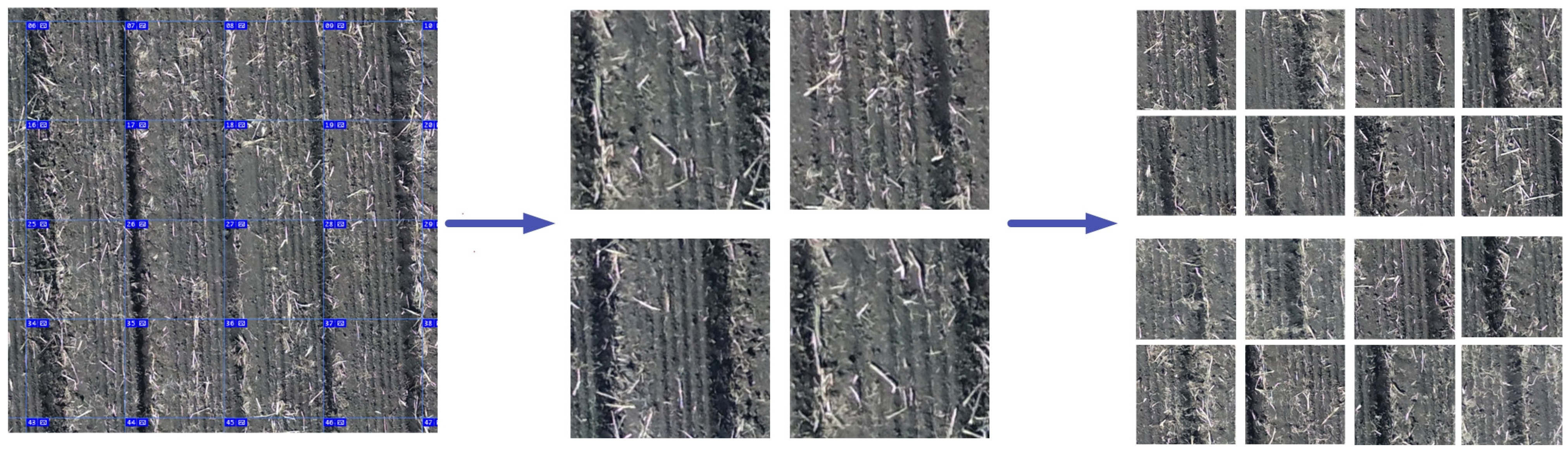

2.3. Corn Stover Dataset Production

3. Corn Stover Segmentation Model

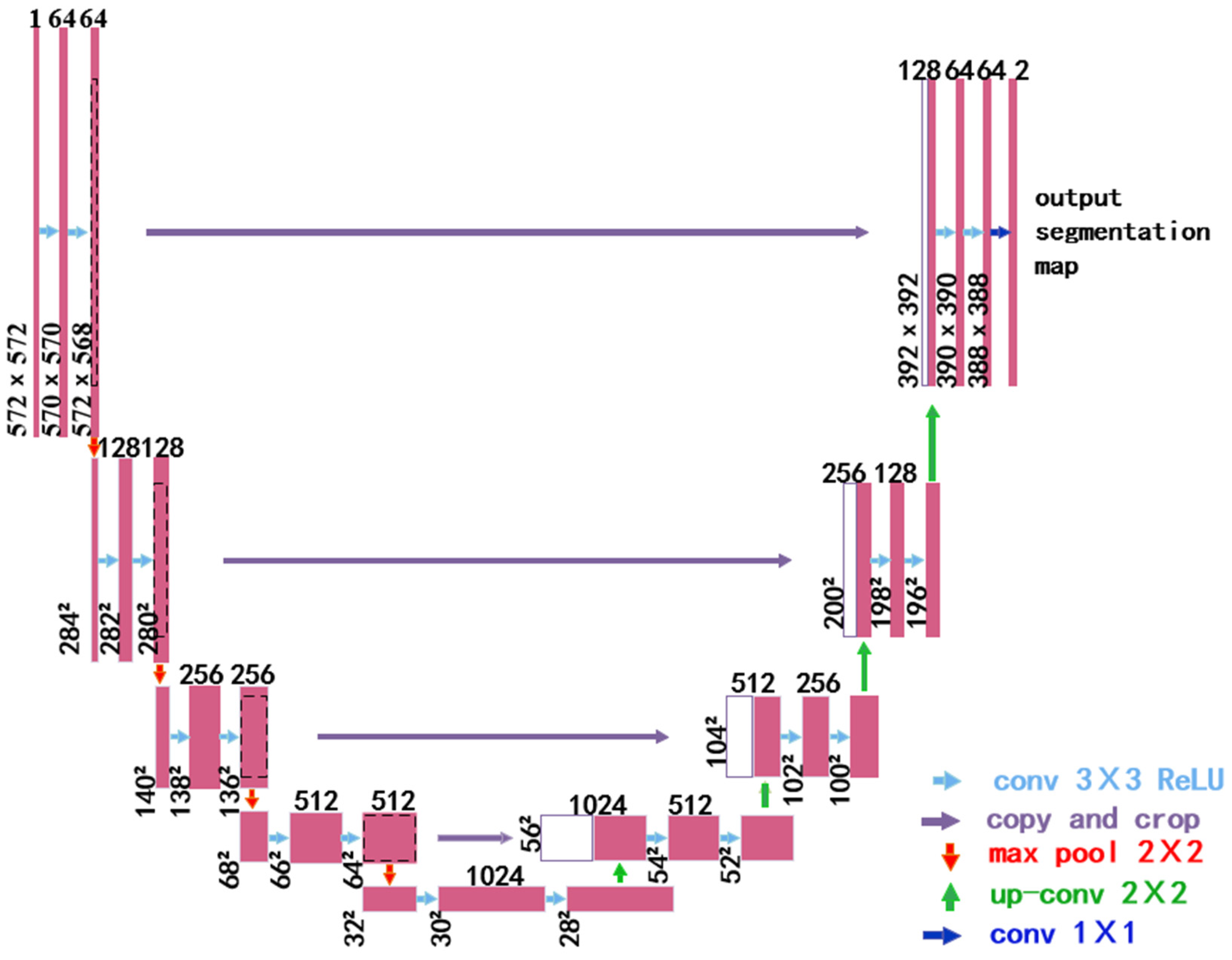

3.1. U-Net Model

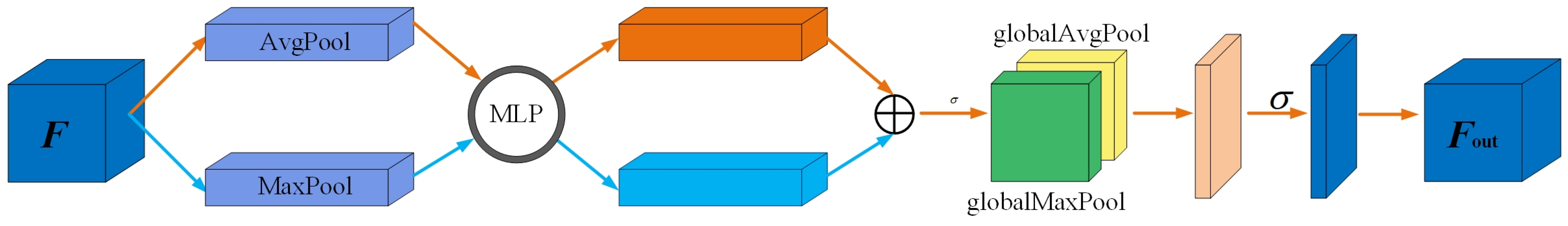

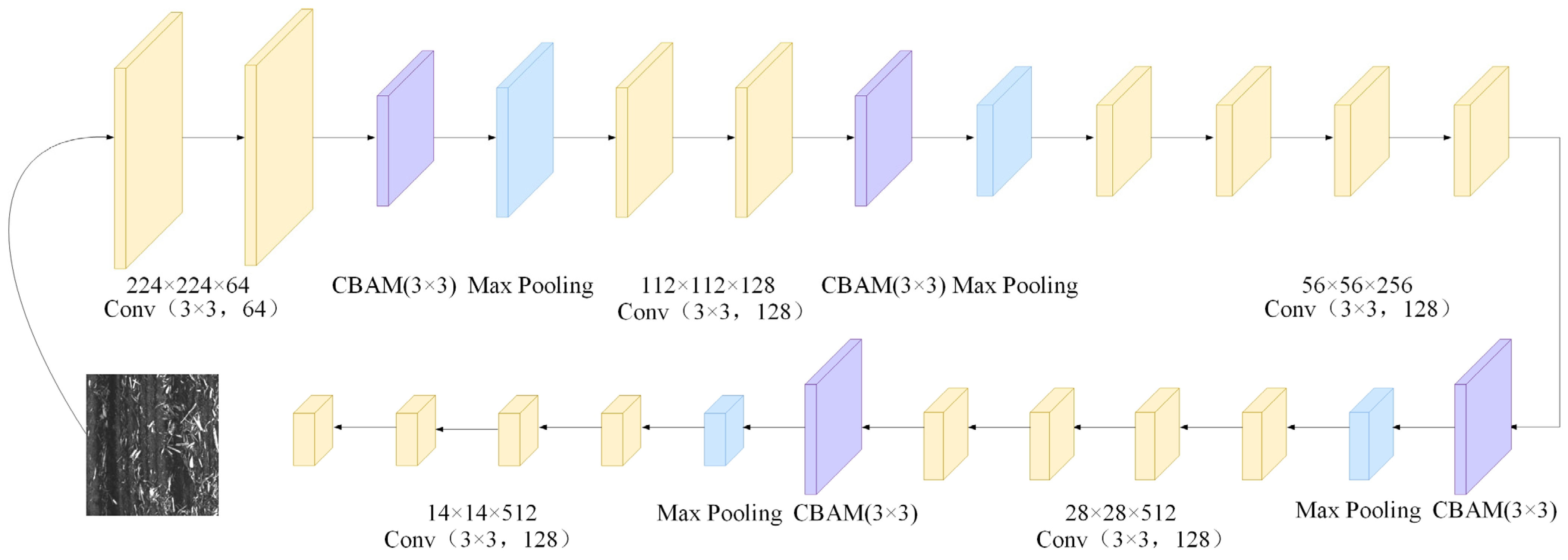

3.2. Improved U-Net Model

4. Experiments and Results

4.1. Model Training

4.1.1. Development Environment

4.1.2. Parameter Settings

4.1.3. Evaluation Indicators

4.2. Ablation Experiment

4.3. Comparison Model Setup

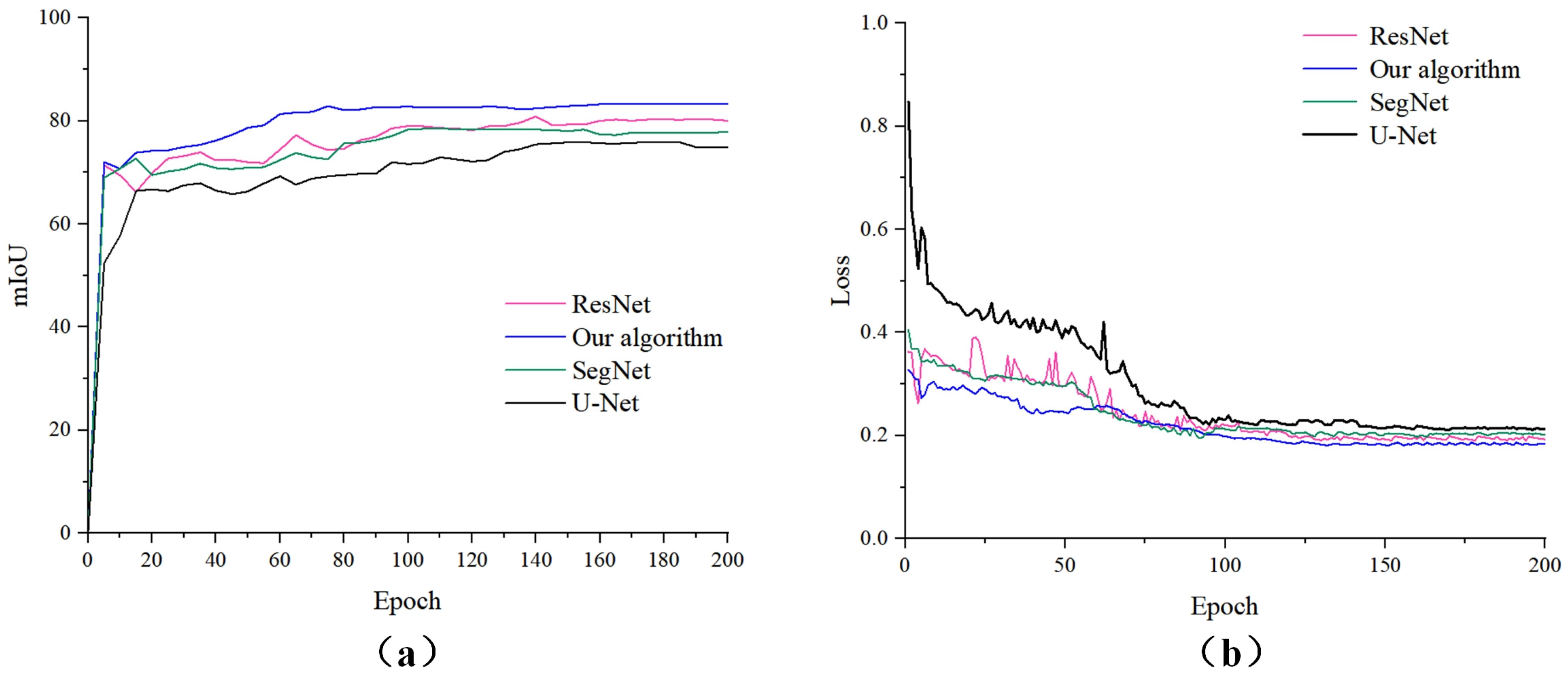

4.4. Comparison and Analysis of Results

5. Conclusions

- (1) To address the inaccurate segmentation of straw images caused by shadows, and the difficulty of identifying fragmented straw under varying shooting angles during data collection, we adopt a transfer learning approach that replaces the encoding phase of the original U-Net architecture with the first five layers of the VGG19 backbone network. We also integrate the CBAM convolutional attention mechanism and optimize the entire network with the Focal-Dice Loss function. These changes sharpen the segmentation of fine-grained straw edge details, reduce parameters and computational complexity, and improve the accuracy of corn straw segmentation in complex backgrounds. In the ablation experiment, mIoU rises from 73.54% to 83.23% and PA from 85.34% to 93.87%.

- (2) During training, the model performs best when the input size is 256 × 256, reaching a PA of 93.87% compared with 88.43% at 128 × 128. Compared with the other three algorithms (U-Net, SegNet, and ResNet), it achieves the highest mIoU, recall, precision, and PA in the corn stalk segmentation task. The algorithm should therefore also be suitable for segmenting the stalks of other crops and for other tasks in which the foreground and background differ only slightly.

- (3) The straw segmentation algorithm proposed in this study meets the requirements for processing aerial images of straw coverage. However, it is designed exclusively for low-altitude, small-scale, high-precision detection of straw coverage and is unsuitable for large-scale detection.

- (4) Our algorithm serves as a technical reference for detecting the straw coverage rate of corn and other crops in the field, and it offers ideas for further improving the U-Net model.
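The Focal-Dice Loss mentioned in conclusion (1) combines a focal term, which down-weights easy pixels, with a Dice term, which measures region overlap. The exact formulation and weighting used in this study are not given in this section, so the following is only a minimal sketch under stated assumptions: a binary straw/background mask, the standard focal and Dice definitions, and a hypothetical mixing weight `weight`. The values of `gamma`, `alpha`, and `smooth` are common defaults, not values taken from the paper. Plain Python lists are used to keep the example self-contained.

```python
import math

def focal_loss(probs, targets, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean binary focal loss over flattened pixels (standard definition)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)           # clamp to avoid log(0)
        p_t = p if t == 1 else 1.0 - p            # probability of the true class
        alpha_t = alpha if t == 1 else 1.0 - alpha
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)

def dice_loss(probs, targets, smooth=1.0):
    """Soft Dice loss: 1 - (2 * overlap + smooth) / (sum of both masks + smooth)."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)

def focal_dice_loss(probs, targets, weight=0.5):
    """Weighted sum of focal and Dice losses; `weight` is an assumed hyperparameter."""
    return weight * focal_loss(probs, targets) + (1.0 - weight) * dice_loss(probs, targets)

# Toy example: four pixels, 1 = straw, 0 = background.
truth = [1, 0, 1, 0]
confident_correct = [0.9, 0.1, 0.8, 0.2]
confident_wrong = [0.1, 0.9, 0.2, 0.8]
print(focal_dice_loss(confident_correct, truth))
print(focal_dice_loss(confident_wrong, truth))
```

The first printed loss is smaller than the second, since both terms penalize predictions that disagree with the mask.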

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset Category | Division Ratio | Sample Size |
|---|---|---|
| Training set | 60% | 960 |
| Validation set | 20% | 320 |
| Test set | 20% | 320 |
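The 60/20/20 division in the table above can be reproduced with a seeded shuffle. The sketch below is illustrative only: the file names, the seed, and the `split_dataset` helper are hypothetical, and the total of 1600 images is simply the sum of the three sample sizes in the table.

```python
import random

def split_dataset(items, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle items reproducibly and split them into train/validation/test."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # seeded so the split is repeatable
    n = len(shuffled)
    n_train = int(round(n * ratios[0]))
    n_val = int(round(n * ratios[1]))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]        # remainder goes to the test set
    return train, val, test

# Hypothetical file names; 1600 = 960 + 320 + 320 from the table.
image_names = [f"stover_{i:04d}.png" for i in range(1600)]
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))  # 960 320 320
```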

| Model | Input Size (px) | Training Speed (it/s) | PA (%) |
|---|---|---|---|
| U-Net | 128 × 128 | 18.57 | 82.07 |
| U-Net | 256 × 256 | 12.32 | 85.34 |
| U-Net | 512 × 512 | 3.42 | 83.99 |
| SegNet | 128 × 128 | 20.39 | 84.69 |
| SegNet | 256 × 256 | 12.09 | 88.92 |
| SegNet | 512 × 512 | 4.10 | 87.01 |
| Our algorithm | 128 × 128 | 24.95 | 88.43 |
| Our algorithm | 256 × 256 | 13.35 | 93.87 |
| Our algorithm | 512 × 512 | Out of memory | n/a |
| ResNet | 128 × 128 | 23.54 | 85.48 |
| ResNet | 256 × 256 | 6.33 | 90.62 |
| ResNet | 512 × 512 | 4.95 | 88.73 |

| Feature Extraction Network | mIoU/% | PA/% |
|---|---|---|
| U-Net | 73.54 | 85.34 |
| ResNet18 + U-Net | 79.07 | 89.88 |
| ResNet50 + U-Net | 79.41 | 90.03 |
| VGG19 + U-Net | 81.93 | 92.66 |

| Number | VGG19 | CBAM | Focal-Dice Loss | mIoU/% | PA/% |
|---|---|---|---|---|---|
| 1 | | | | 73.54 | 85.34 |
| 2 | ✓ | | | 81.93 | 92.66 |
| 3 | ✓ | ✓ | | 82.75 | 93.52 |
| 4 | ✓ | ✓ | ✓ | 83.23 | 93.87 |
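The contribution of each component can be read off the ablation table by differencing consecutive rows. The following sketch does only that arithmetic, using the values reported in the table; the row labels and the `incremental_gains` helper are illustrative names, not part of the study.

```python
# (configuration, mIoU %, PA %) copied from the ablation table.
ablation = [
    ("U-Net baseline", 73.54, 85.34),
    ("+ VGG19 encoder", 81.93, 92.66),
    ("+ CBAM", 82.75, 93.52),
    ("+ Focal-Dice Loss", 83.23, 93.87),
]

def incremental_gains(rows):
    """Return (name, delta mIoU, delta PA) for each row relative to the previous row."""
    gains = []
    for (_, prev_miou, prev_pa), (name, miou, pa) in zip(rows, rows[1:]):
        gains.append((name, round(miou - prev_miou, 2), round(pa - prev_pa, 2)))
    return gains

for name, d_miou, d_pa in incremental_gains(ablation):
    print(f"{name}: mIoU +{d_miou:.2f}, PA +{d_pa:.2f}")
```

Read this way, the VGG19 encoder accounts for most of the improvement (8.39 points of mIoU), while CBAM and the Focal-Dice Loss each add less than one point.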

| Model | Encoder | Decoder |
|---|---|---|
| U-Net | Ten convolutional blocks | Deconvolution linked to an encoder |
| SegNet | VGG16 | Inverse convolution for multi-layer feature fusion |
| Our algorithm | VGG19 | Same as U-Net |
| ResNet | ResNet50 | Same as U-Net |

| Model | mIoU/% | Recall/% | Precision/% | PA/% |
|---|---|---|---|---|
| U-Net | 73.54 | 82.96 | 83.11 | 85.34 |
| SegNet | 77.86 | 86.06 | 88.47 | 88.92 |
| Our algorithm | 83.23 | 90.66 | 91.98 | 93.87 |
| ResNet | 80.02 | 89.75 | 90.11 | 90.62 |
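The four indicators in the comparison table can be computed from a pixel-wise confusion matrix. The definitions used in the study are not reproduced in this section, so the sketch below assumes the standard ones for a two-class problem (straw = 1, background = 0), with mIoU taken as the mean of the straw and background IoU. The function name and the toy masks are hypothetical.

```python
def segmentation_metrics(pred, truth):
    """Compute PA, precision, recall and mIoU for flattened binary masks."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p == 1 and t == 1:
            tp += 1
        elif p == 1 and t == 0:
            fp += 1
        elif p == 0 and t == 1:
            fn += 1
        else:
            tn += 1

    def ratio(num, den):
        # Return 0.0 when a class is absent instead of dividing by zero.
        return num / den if den else 0.0

    iou_straw = ratio(tp, tp + fp + fn)
    iou_background = ratio(tn, tn + fp + fn)
    return {
        "PA": ratio(tp + tn, tp + tn + fp + fn),   # pixel accuracy
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "mIoU": (iou_straw + iou_background) / 2.0,
    }

# Toy masks: six pixels, 1 = straw, 0 = background.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 0, 1, 1]
metrics = segmentation_metrics(pred, truth)
print({name: round(value, 4) for name, value in metrics.items()})
```

For these toy masks there are two true positives, one false positive, one false negative, and two true negatives, so PA, precision, and recall are each 2/3 and mIoU is 0.5.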


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, X.; Gao, Y.; Fu, C.; Qiu, J.; Zhang, W. Research on the Corn Stover Image Segmentation Method via an Unmanned Aerial Vehicle (UAV) and Improved U-Net Network. Agriculture 2024, 14, 217. https://doi.org/10.3390/agriculture14020217
