YOLOv7-Based Intelligent Weed Detection and Laser Weeding System Research: Targeting Veronica didyma in Winter Rapeseed Fields

Abstract

1. Introduction

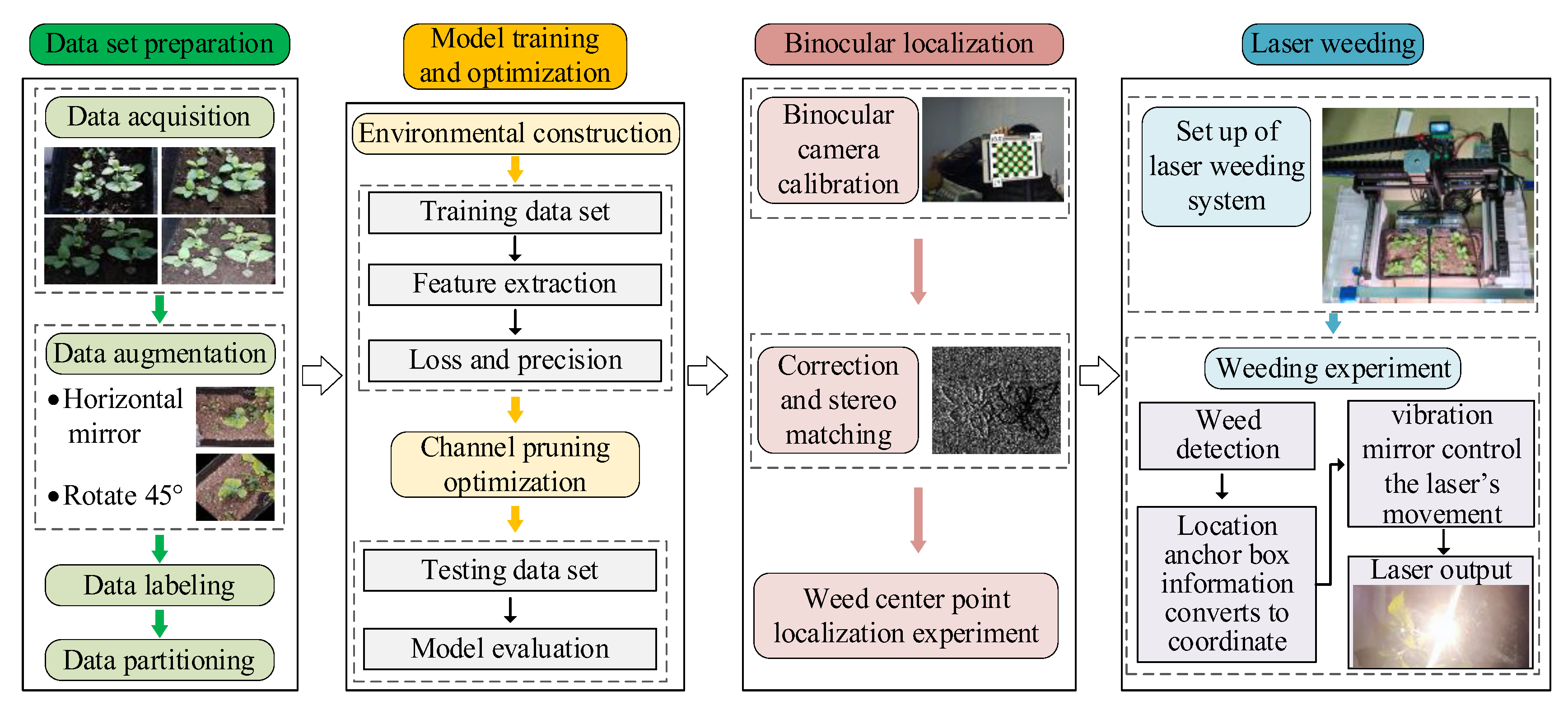

2. Overall Technical Route

3. Detection and Localization of Veronica didyma

3.1. Dataset Preparation

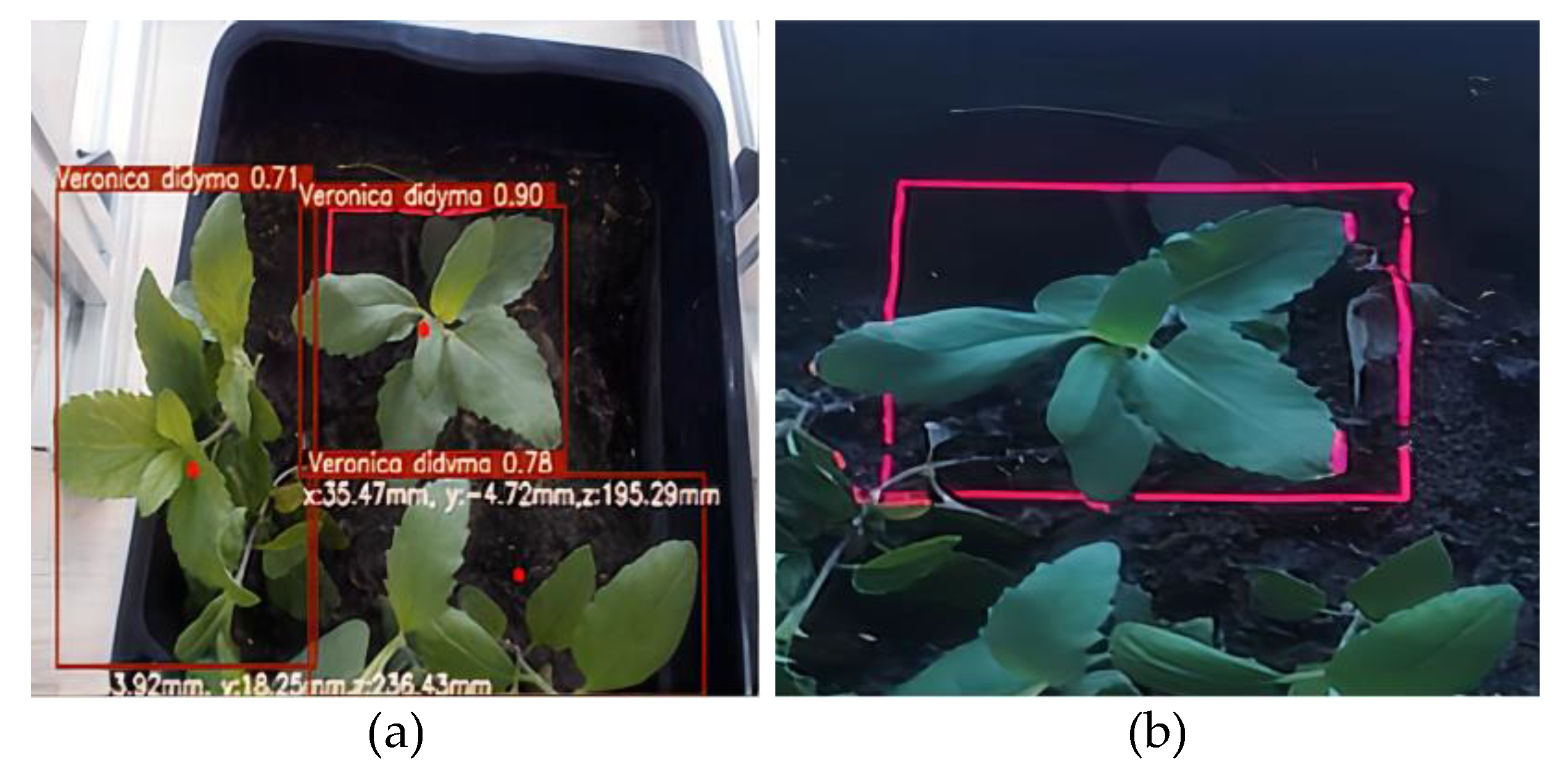

3.2. YOLOv7 Detection of Veronica didyma

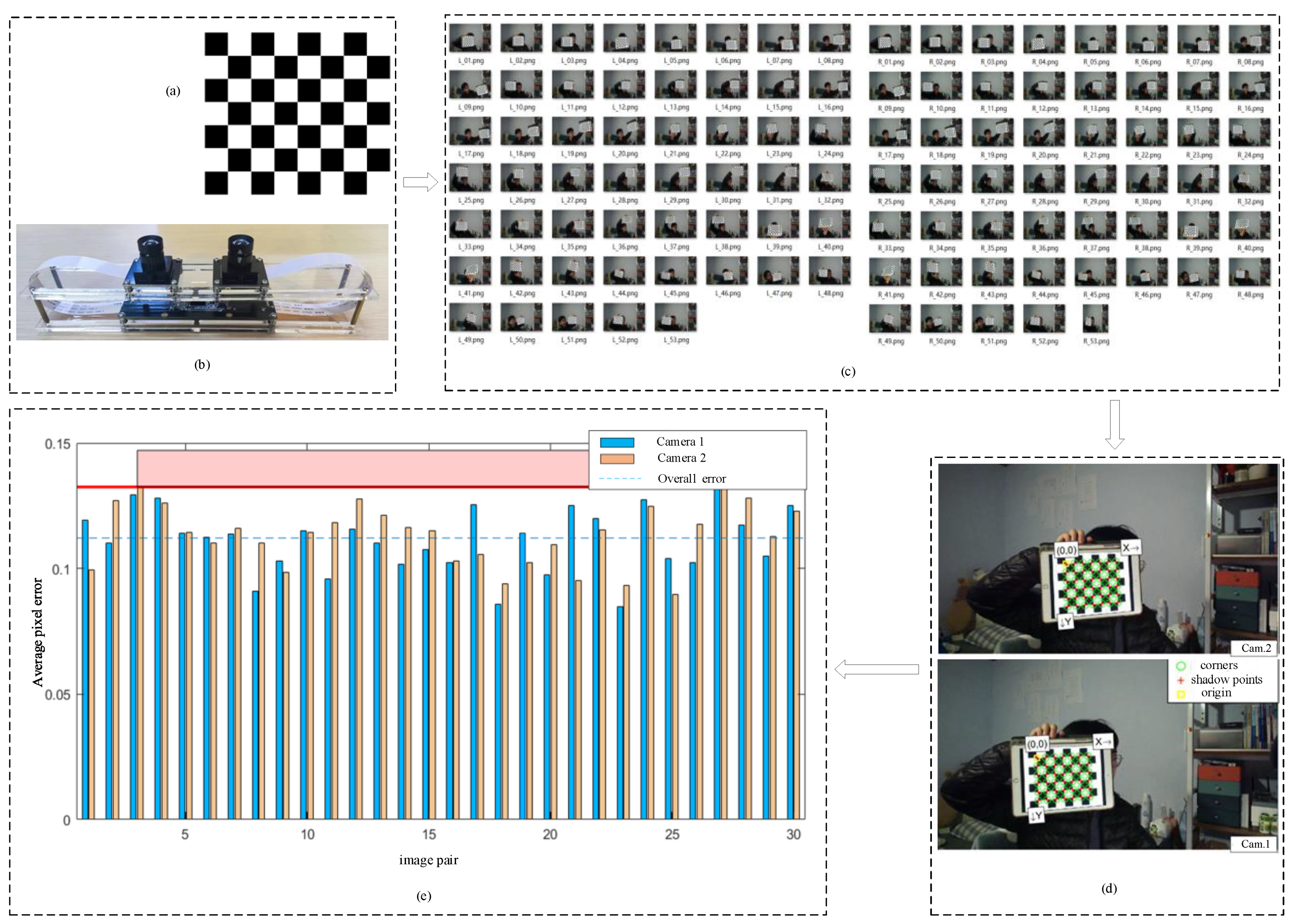

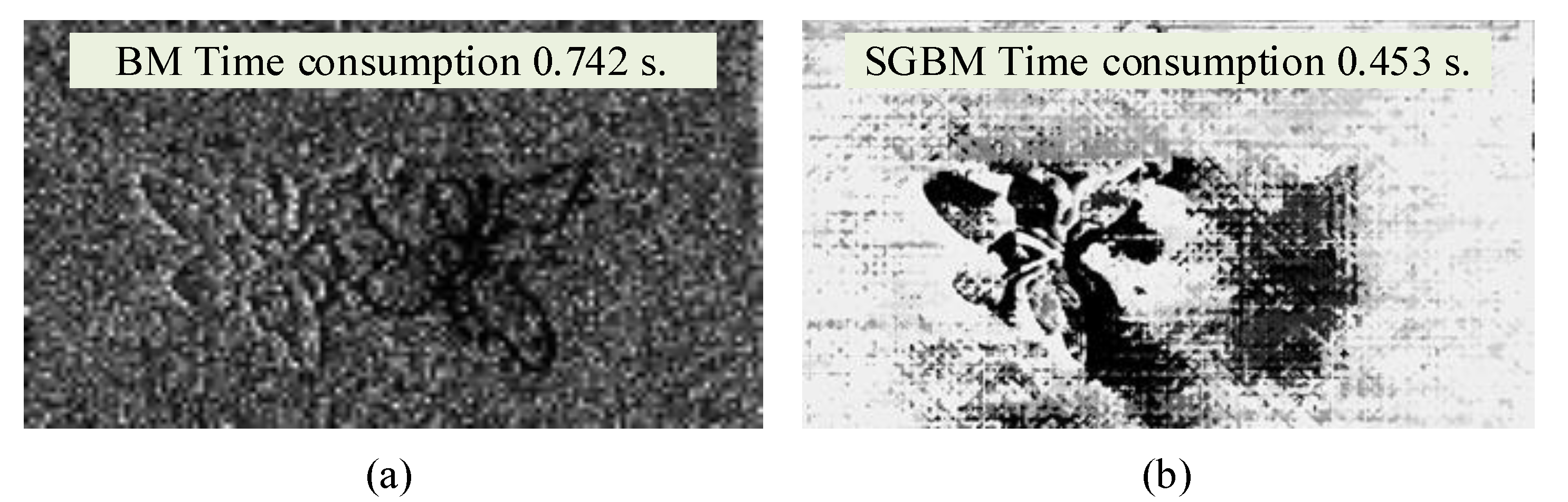

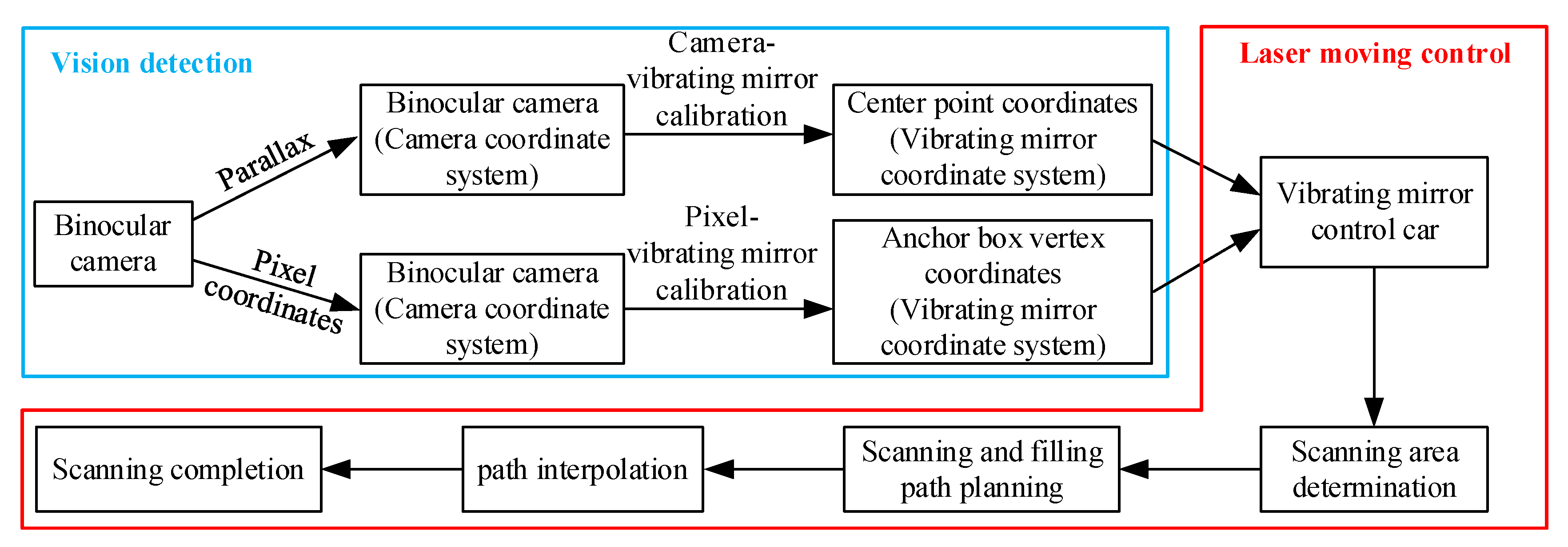

3.3. Localization of Veronica didyma

4. Weeding Experiment and Result Analysis

4.1. The Intelligent Weed Detection and Laser Weeding System Set-Up

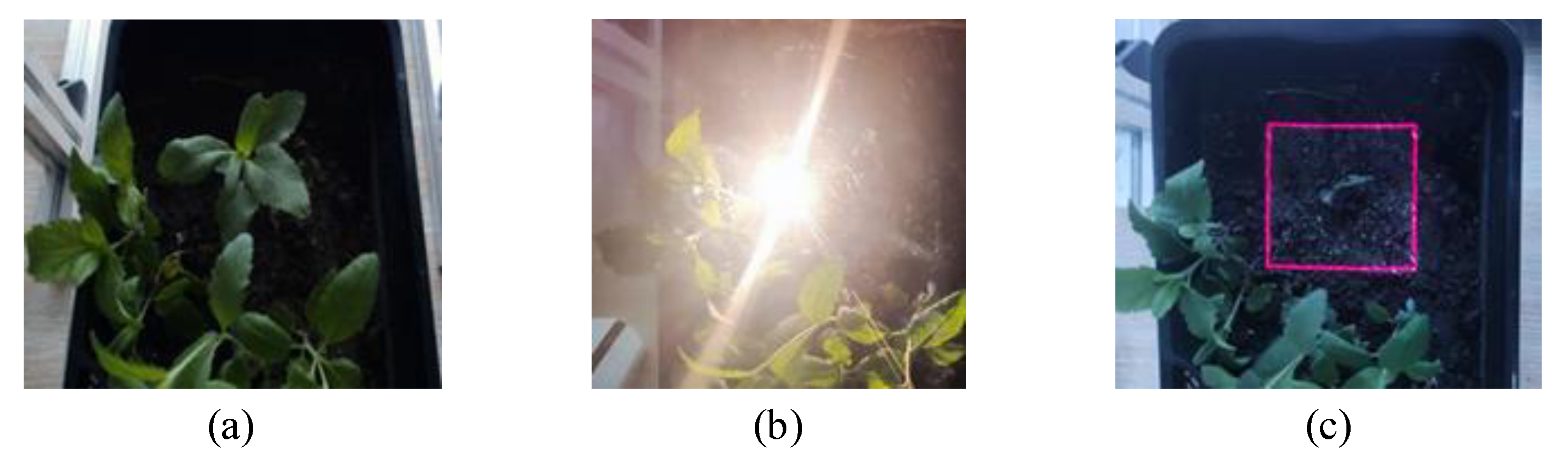

4.2. Laser Weeding of Veronica didyma

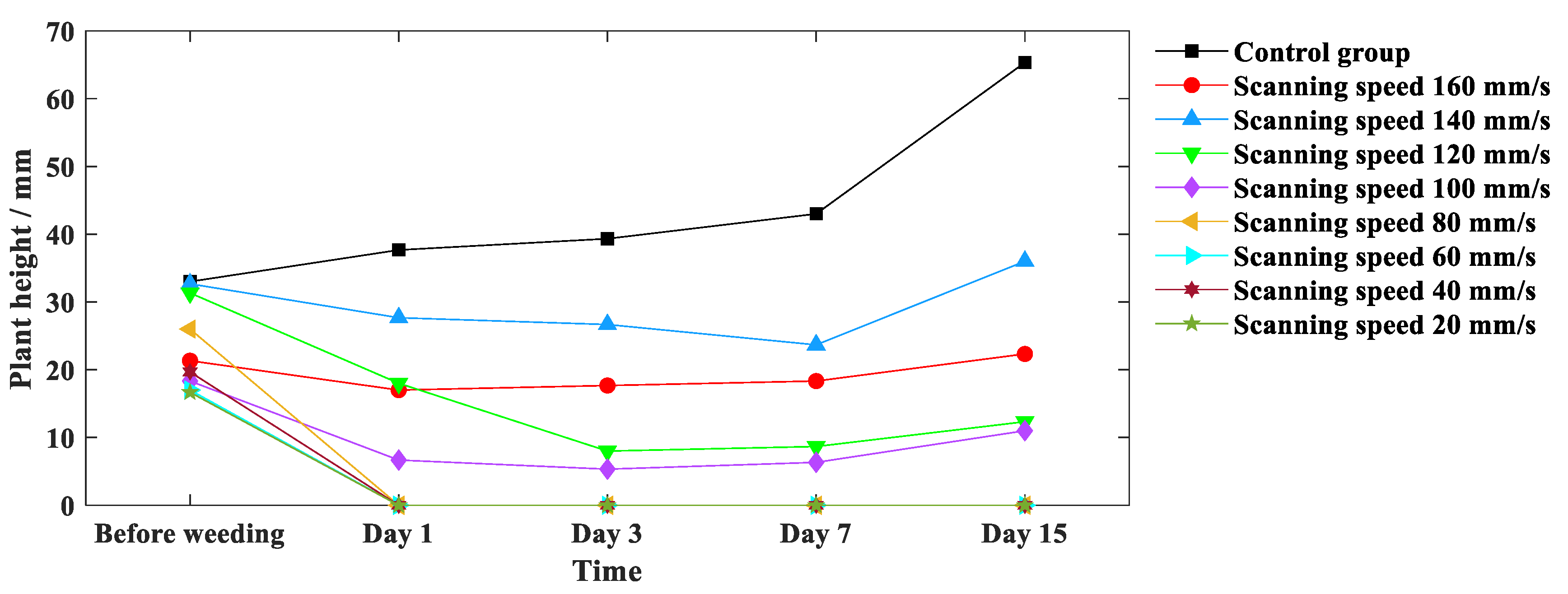

4.3. Determination of Optimal Scanning Parameters

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| System | Conda | GPU | CUDA | PyTorch | Torchvision |
|---|---|---|---|---|---|
| Win10 | 23.3.1 | NVIDIA GeForce RTX 4070 Ti (ASUS, Shenzhen, China) | 11.3 | 1.12.1 | 0.13.1 |
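
When reproducing the software environment listed above, a quick version check can catch mismatches before training. The sketch below is a minimal, hypothetical helper, not part of the authors' system. It assumes PyTorch and torchvision are installed in the active Conda environment and reads only `torch.__version__`, `torch.version.cuda` and `torchvision.__version__`. The function names are illustrative, and the GPU model and Conda version are not checked.

```python
import platform

# Versions listed in the environment table (GPU model and Conda are not checked here)
EXPECTED = {"torch": "1.12.1", "torchvision": "0.13.1", "cuda": "11.3"}


def strip_local(version):
    """Drop a PEP 440 local label, e.g. '1.12.1+cu113' -> '1.12.1'."""
    return version.split("+", 1)[0] if version else version


def installed_versions():
    """Probe the current interpreter; anything missing is reported as None."""
    found = {"torch": None, "torchvision": None, "cuda": None}
    try:
        import torch
        found["torch"] = strip_local(str(torch.__version__))
        found["cuda"] = torch.version.cuda  # None on CPU-only builds
    except (ImportError, OSError):
        pass
    try:
        import torchvision
        found["torchvision"] = strip_local(str(torchvision.__version__))
    except (ImportError, OSError):
        pass
    return found


def mismatches(found, expected=EXPECTED):
    """Map each differing component to (found, expected)."""
    return {k: (found.get(k), v) for k, v in expected.items() if found.get(k) != v}


if __name__ == "__main__":
    print(platform.system(), platform.release())
    for name, (have, want) in mismatches(installed_versions()).items():
        print(f"{name}: found {have}, table lists {want}")
```

An empty `mismatches(...)` result means the interpreter matches the three versions in the table.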

| Weed No. | Coordinate before Moving [mm] | Coordinate after Moving [mm] (Moved 80 mm) | Actual X-axis Movement [mm] (σ [%]) | Actual Y-axis Movement [mm] (σ [%]) |
|---|---|---|---|---|
| 1 | (10.31, 13.10) | (93.47, −70.32) | 83.16 (3.16%) | 83.42 (3.42%) |
| 2 | (81.42, 25.48) | (159.26, −58.06) | 77.84 (2.16%) | 83.54 (3.54%) |
| 3 | (−78.14, 67.69) | (3.97, −13.68) | 82.11 (2.11%) | 81.37 (1.37%) |
| 4 | (1.04, 63.71) | (85.46, −20.61) | 84.42 (4.42%) | 84.32 (4.32%) |
| 5 | (20.69, −74.38) | (−60.54, 5.56) | 81.23 (1.23%) | 79.94 (0.06%) |
| 6 | (137.03, −69.90) | (61.54, 13.64) | 75.49 (4.51%) | 83.54 (3.54%) |
| 7 | (65.96, −54.06) | (−17.06, 22.53) | 83.02 (3.02%) | 76.59 (3.41%) |
| 8 | (153.53, −18.64) | (69.65, 64.21) | 83.88 (3.88%) | 82.85 (2.85%) |
| Average error | | | 3.06% | 2.81% |
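
The movement columns follow directly from the coordinate pairs: the distance travelled along each axis is the absolute difference between the after and before coordinates, and the error is how far that distance deviates from the 80 mm move. The sketch below recomputes both from the table, assuming all coordinates are in millimetres; the function names are illustrative. Taking absolute values matters because some weeds shifted toward +X/−Y and others toward −X/+Y. The per-weed deviations it produces coincide numerically with the σ figures in the table (for weed 1, |83.16 − 80| = 3.16), and their means reproduce the 3.06 and 2.81 averages.

```python
from statistics import mean

NOMINAL_MM = 80.0  # commanded displacement along each axis, per the table header

# (before, after) coordinates in mm for weeds 1-8, copied from the table
WEEDS = [
    ((10.31, 13.10), (93.47, -70.32)),
    ((81.42, 25.48), (159.26, -58.06)),
    ((-78.14, 67.69), (3.97, -13.68)),
    ((1.04, 63.71), (85.46, -20.61)),
    ((20.69, -74.38), (-60.54, 5.56)),
    ((137.03, -69.90), (61.54, 13.64)),
    ((65.96, -54.06), (-17.06, 22.53)),
    ((153.53, -18.64), (69.65, 64.21)),
]


def axis_moves(before, after):
    """Distance travelled along X and Y, ignoring the direction of travel."""
    return abs(after[0] - before[0]), abs(after[1] - before[1])


def deviation_summary(weeds, nominal=NOMINAL_MM):
    """Per-weed |move - nominal| for each axis, plus the mean of each list."""
    dev_x, dev_y = [], []
    for before, after in weeds:
        dx, dy = axis_moves(before, after)
        dev_x.append(abs(dx - nominal))
        dev_y.append(abs(dy - nominal))
    return dev_x, dev_y, mean(dev_x), mean(dev_y)


if __name__ == "__main__":
    dev_x, dev_y, avg_x, avg_y = deviation_summary(WEEDS)
    for i, (ex, ey) in enumerate(zip(dev_x, dev_y), start=1):
        print(f"weed {i}: X deviation {ex:.2f}, Y deviation {ey:.2f}")
    print(f"mean X deviation {avg_x:.2f}, mean Y deviation {avg_y:.2f}")
```

To express a deviation as a percentage of the 80 mm command instead, divide it by 80 and multiply by 100.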

| Experiment No. | Confidence Threshold Level | Camera Height [cm] | Recognition Rate |
|---|---|---|---|
| 1 | 1 (70%) | 1 | 1.00 |
| 2 | 1 | 2 | 0.98 |
| 3 | 1 | 3 | 0.94 |
| 4 | 1 | 4 | 0.94 |
| 5 | 1 | 5 | 0.61 |
| 6 | 2 (75%) | 1 | 1.00 |
| 7 | 2 | 2 | 0.94 |
| 8 | 2 | 3 | 0.90 |
| 9 | 2 | 4 | 0.84 |
| 10 | 2 | 5 | 0.48 |
| 11 | 3 (80%) | 1 | 1.00 |
| 12 | 3 | 2 | 0.84 |
| 13 | 3 | 3 | 0.87 |
| 14 | 3 | 4 | 0.71 |
| 15 | 3 | 5 | 0.39 |
| 16 | 4 (85%) | 1 | 0.90 |
| 17 | 4 | 2 | 0.77 |
| 18 | 4 | 3 | 0.65 |
| 19 | 4 | 4 | 0.55 |
| 20 | 4 | 5 | 0.26 |
| 21 | 5 (90%) | 1 | 0.57 |
| 22 | 5 | 2 | 0.48 |
| 23 | 5 | 3 | 0.19 |
| 24 | 5 | 4 | 0.16 |
| 25 | 5 | 5 | 0.00 |
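
The 25 experiments form a full 5 × 5 grid over confidence-threshold level and camera-height level. This means the main effect of each factor can be read off as the mean recognition rate at each of its levels. The sketch below computes those means and lists the settings that reach a target rate. The 0.90 target and the function names are hypothetical choices for illustration. Camera height is kept as the 1 to 5 code used in the table.

```python
from statistics import mean

# Confidence-threshold level -> threshold value in %, as listed in the table
CONF_LEVELS = {1: 70, 2: 75, 3: 80, 4: 85, 5: 90}

# RATES[c][h - 1]: recognition rate at confidence level c and camera-height level h,
# copied row by row from the table (experiments 1-25)
RATES = {
    1: [1.00, 0.98, 0.94, 0.94, 0.61],
    2: [1.00, 0.94, 0.90, 0.84, 0.48],
    3: [1.00, 0.84, 0.87, 0.71, 0.39],
    4: [0.90, 0.77, 0.65, 0.55, 0.26],
    5: [0.57, 0.48, 0.19, 0.16, 0.00],
}


def main_effects(rates):
    """Mean recognition rate per confidence level and per camera-height level."""
    by_conf = {c: mean(row) for c, row in rates.items()}
    n_heights = len(next(iter(rates.values())))
    by_height = {h + 1: mean(row[h] for row in rates.values()) for h in range(n_heights)}
    return by_conf, by_height


def feasible_settings(rates, target):
    """All (confidence level, height level) pairs whose rate is >= target."""
    return [(c, h + 1) for c, row in rates.items() for h, r in enumerate(row) if r >= target]


if __name__ == "__main__":
    by_conf, by_height = main_effects(RATES)
    for c, m in by_conf.items():
        print(f"confidence {CONF_LEVELS[c]}%: mean rate {m:.3f}")
    for h, m in by_height.items():
        print(f"camera height level {h}: mean rate {m:.3f}")
    target = 0.90  # hypothetical acceptance threshold, not taken from the source
    print(f"settings with rate >= {target}:", feasible_settings(RATES, target))
```

On this data both main effects decrease monotonically: raising either the confidence threshold or the camera-height level lowers the average recognition rate.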

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qin, L.; Xu, Z.; Wang, W.; Wu, X. YOLOv7-Based Intelligent Weed Detection and Laser Weeding System Research: Targeting Veronica didyma in Winter Rapeseed Fields. Agriculture 2024, 14, 910. https://doi.org/10.3390/agriculture14060910

Qin L, Xu Z, Wang W, Wu X. YOLOv7-Based Intelligent Weed Detection and Laser Weeding System Research: Targeting Veronica didyma in Winter Rapeseed Fields. Agriculture. 2024; 14(6):910. https://doi.org/10.3390/agriculture14060910

Chicago/Turabian Style: Qin, Liming, Zheng Xu, Wenhao Wang, and Xuefeng Wu. 2024. "YOLOv7-Based Intelligent Weed Detection and Laser Weeding System Research: Targeting Veronica didyma in Winter Rapeseed Fields" Agriculture 14, no. 6: 910. https://doi.org/10.3390/agriculture14060910