Cotton-YOLO-Seg: An Enhanced YOLOV8 Model for Impurity Rate Detection in Machine-Picked Seed Cotton

Abstract

1. Introduction

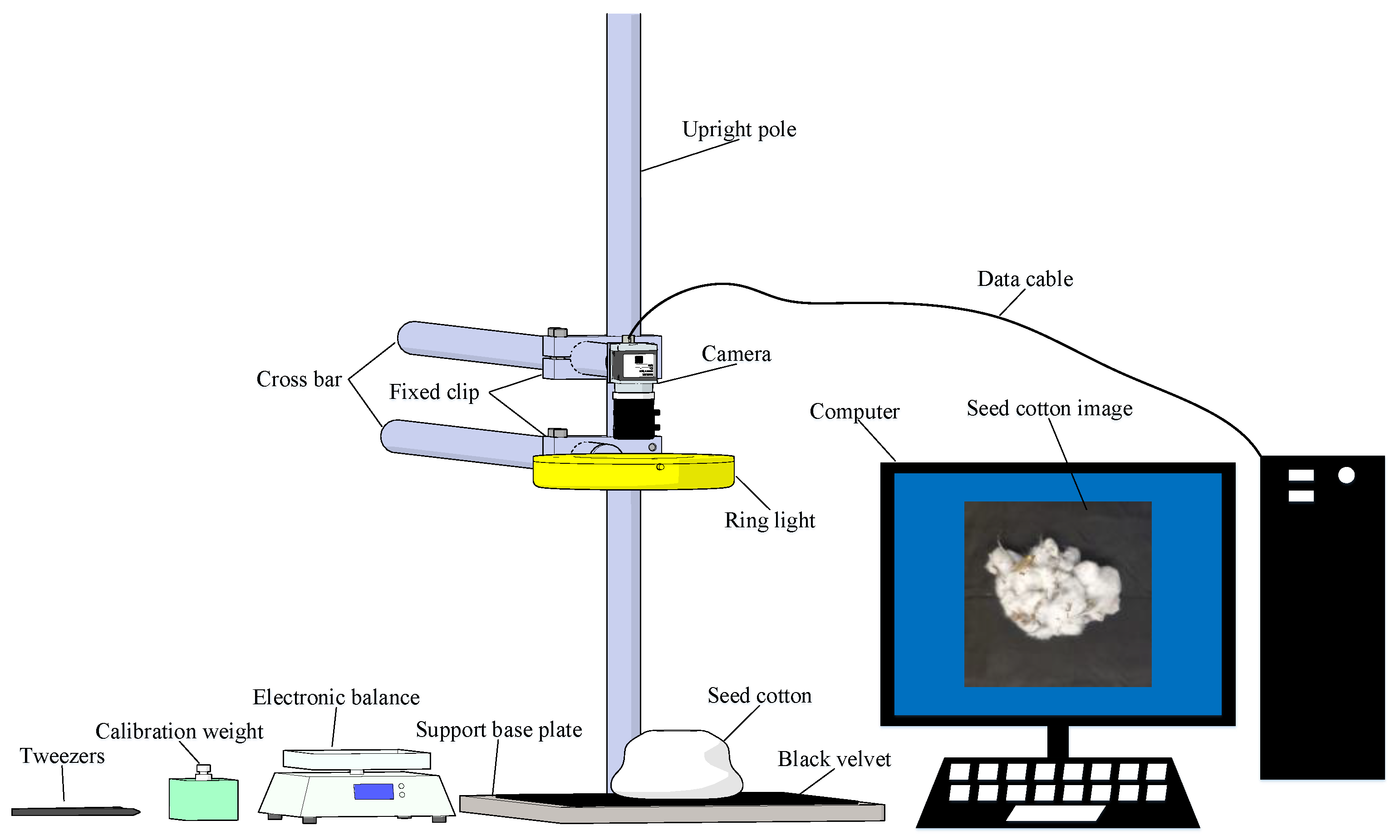

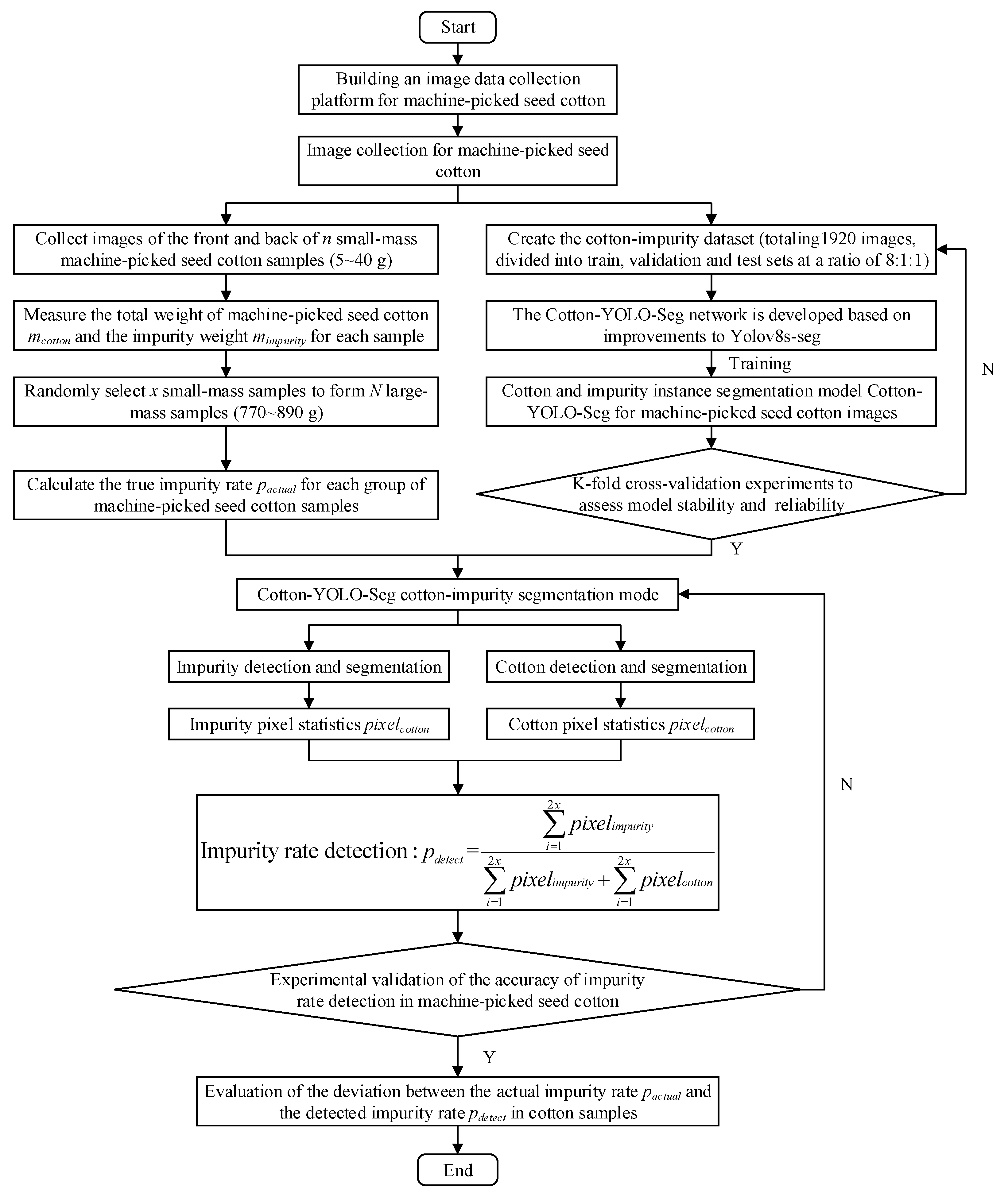

2. Materials and Methods

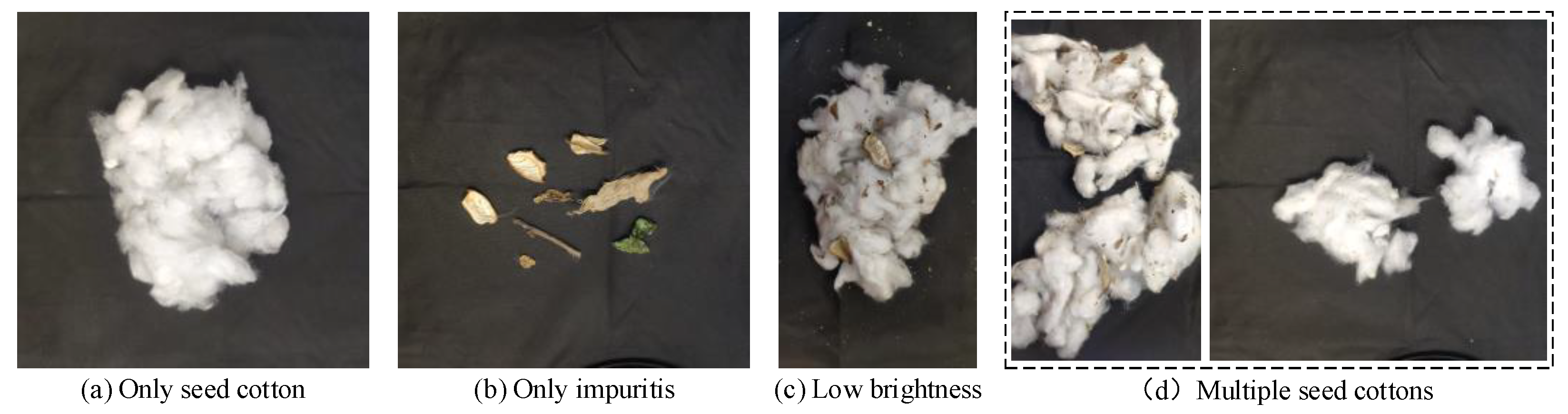

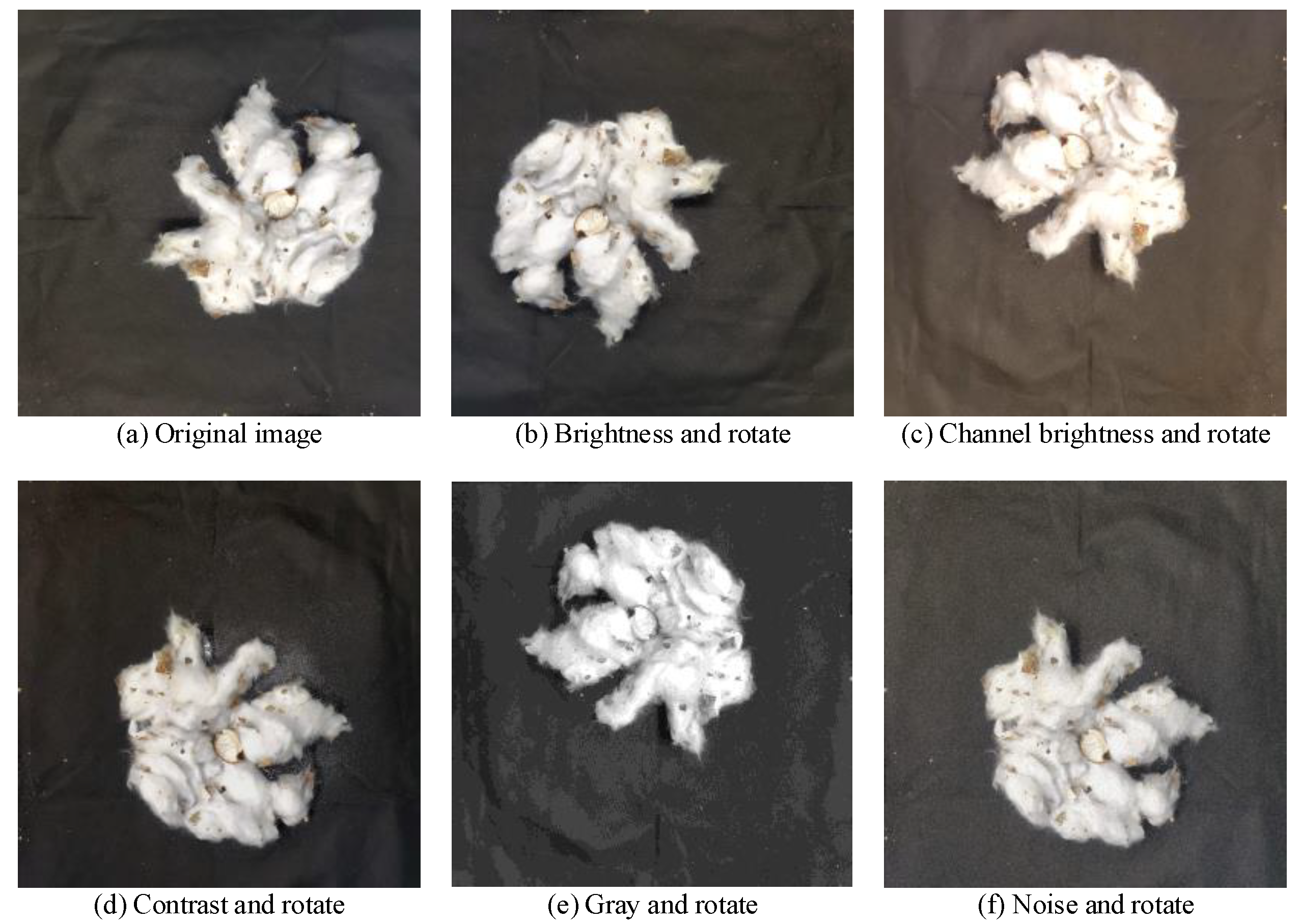

2.1. Datasets for Machine-Picked Seed Cotton

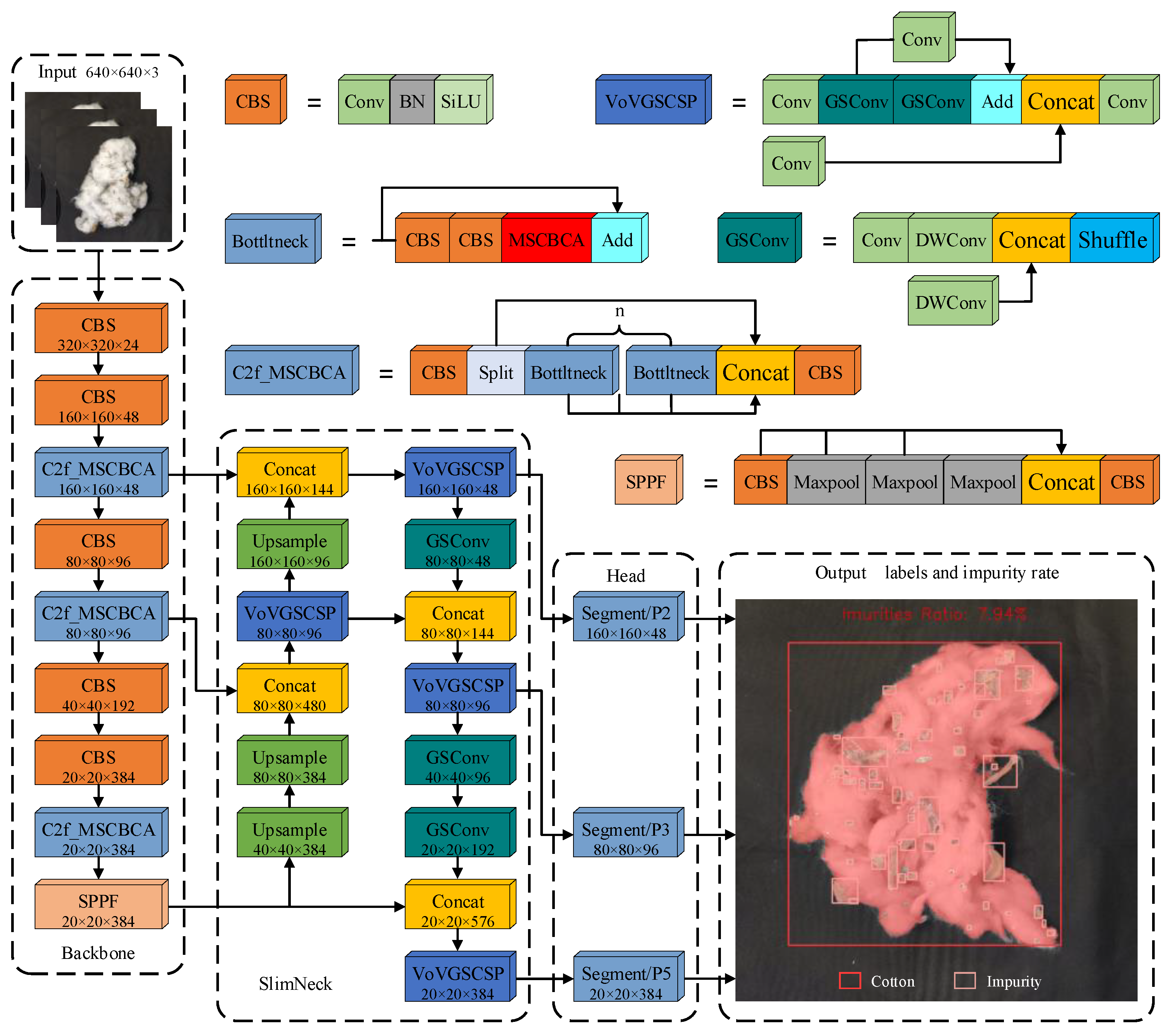

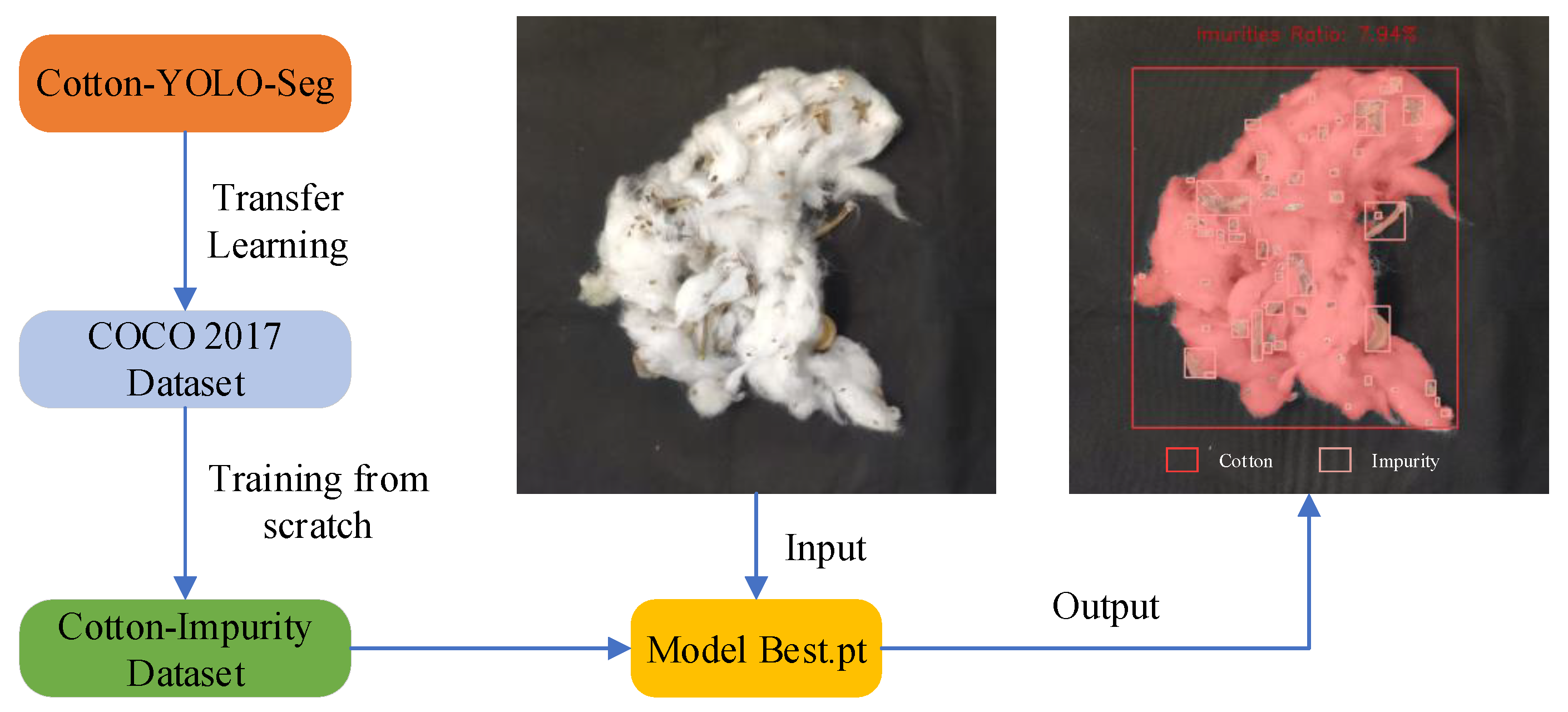

2.2. Cotton-YOLO-Seg Model

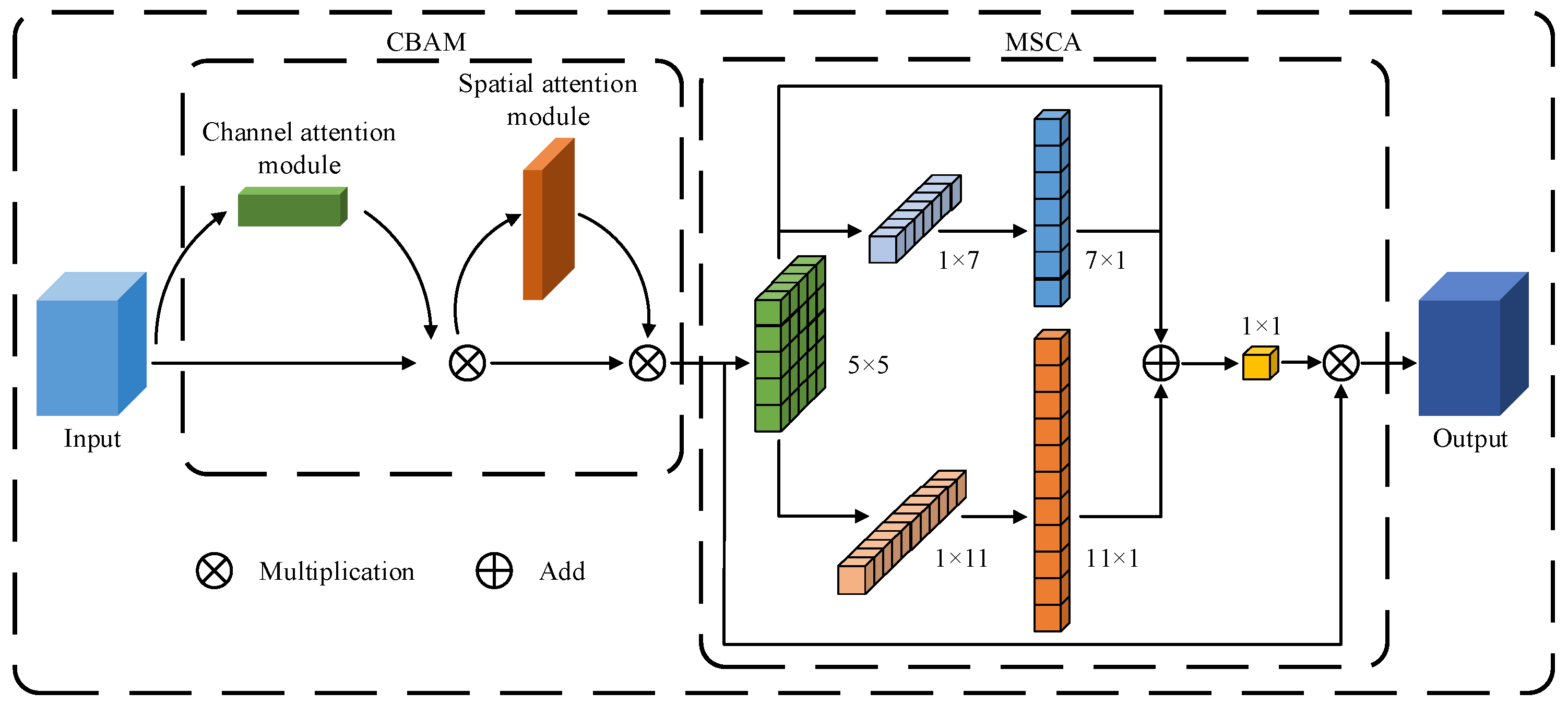

2.2.1. A Novel MSCBCA Attention Module

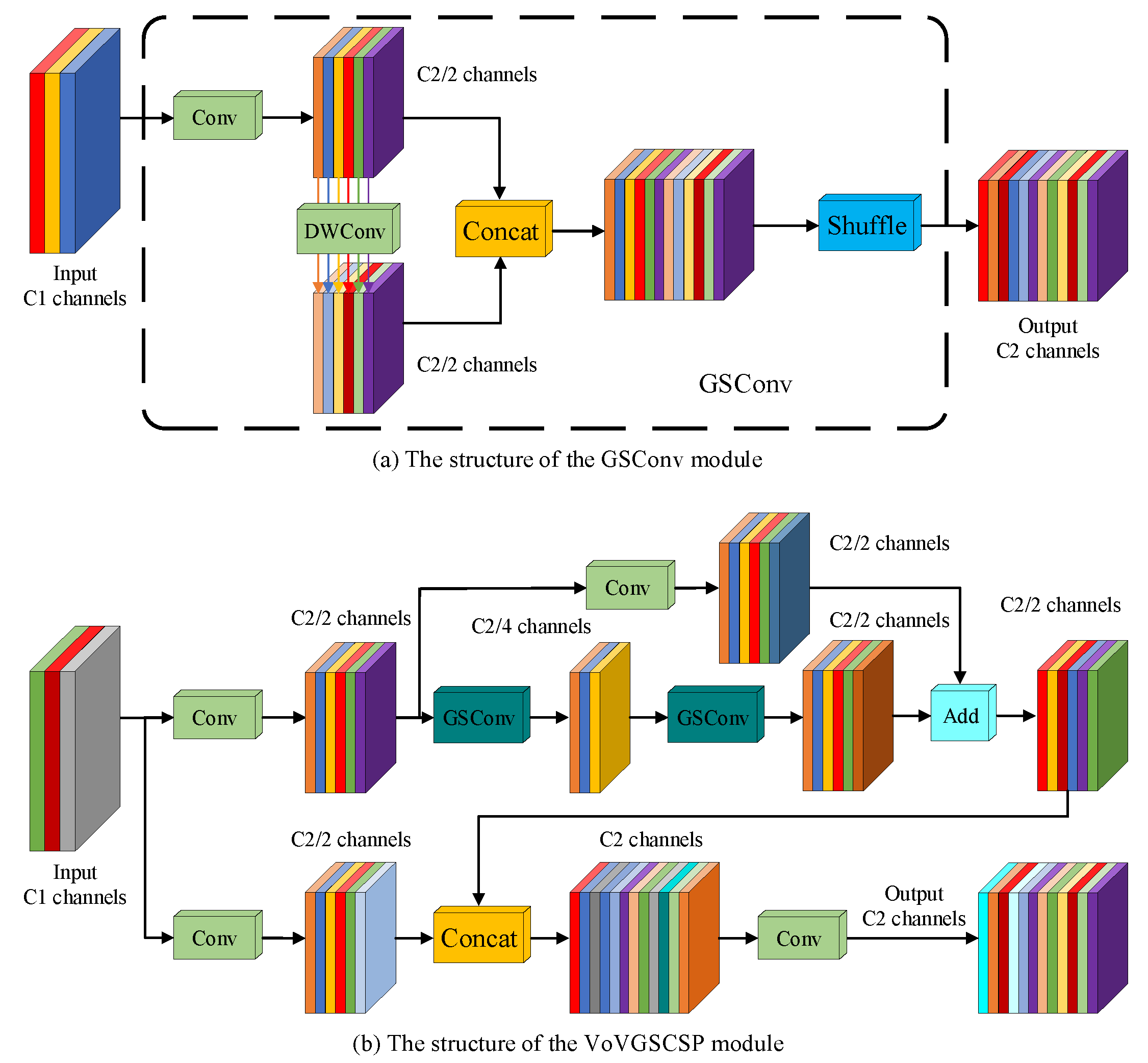

2.2.2. Neck Network Improvements

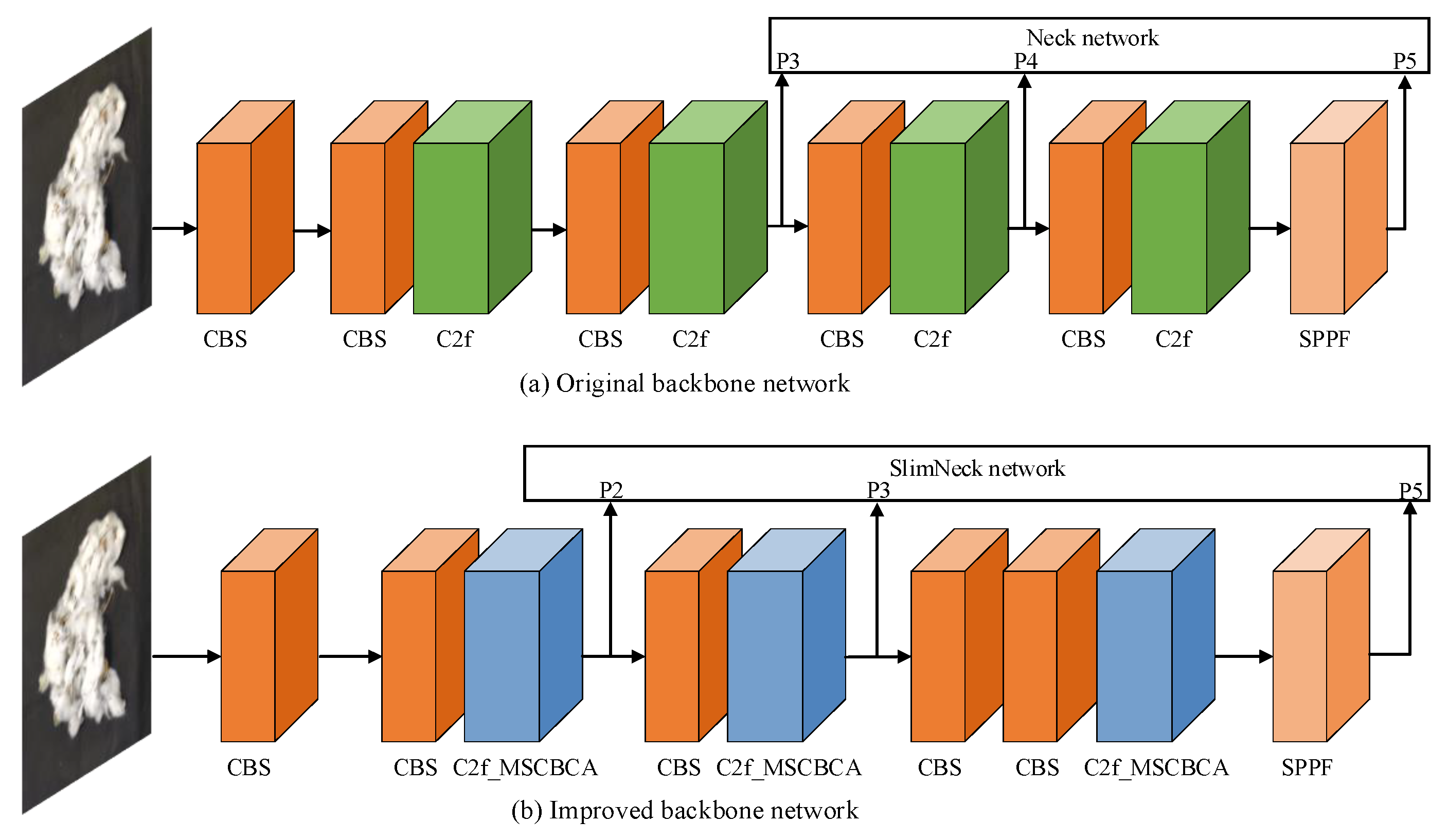
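
The ablation table lists a SlimNeck component. Assuming it follows the Slim-neck design of Li et al. (2022), the neck's GSConv layer replaces a dense convolution with two pieces: a dense convolution to half the output channels, and a 5 × 5 depthwise convolution on that half. The saving can then be estimated by counting weights. The sketch below is a back-of-the-envelope count under that assumption, not the authors' implementation, and the 256-channel layer is hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a dense k x k convolution (bias and BatchNorm ignored)."""
    return k * k * c_in * c_out


def dwconv_params(channels: int, k: int) -> int:
    """Weights of a depthwise k x k convolution: one k x k filter per channel."""
    return k * k * channels


def gsconv_params(c_in: int, c_out: int, k: int, dw_k: int = 5) -> int:
    """Approximate GSConv weights: a dense conv to c_out // 2 channels plus a
    depthwise dw_k x dw_k conv on those channels. Concatenating the two halves
    and shuffling channels adds no weights."""
    half = c_out // 2
    return conv_params(c_in, half, k) + dwconv_params(half, dw_k)


if __name__ == "__main__":
    c_in, c_out, k = 256, 256, 3  # hypothetical neck layer
    dense = conv_params(c_in, c_out, k)
    slim = gsconv_params(c_in, c_out, k)
    print(dense, slim, f"{slim / dense:.3f}")  # 589824 298112 0.505
```

Roughly halving the weights of each replaced layer explains why such necks shrink the model. The actual saving in a full network depends on which layers are swapped.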

2.2.3. Lightweight Backbone Network

2.2.4. Transfer Learning from the COCO Dataset
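
When a model is initialized from COCO-pretrained weights, the modified neck and the cotton-specific head change some layer shapes. As a result, only weights whose names and shapes both match can be copied. The following is a minimal sketch of that filtering step. It assumes a PyTorch-style state dict that maps layer names to tensors. The layer names and the class count are hypothetical, and `ShapeOnly` is a stand-in that lets the example run without PyTorch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShapeOnly:
    """Stand-in for a tensor: only the .shape attribute is inspected."""
    shape: tuple


def transferable_weights(pretrained: dict, target: dict, exclude: tuple = ()) -> dict:
    """Return the pretrained entries that can be copied into the target model.

    An entry is kept when its name exists in the target state dict, its shape
    matches the target's, and the name contains none of the excluded substrings.
    """
    return {
        name: value
        for name, value in pretrained.items()
        if name in target
        and not any(token in name for token in exclude)
        and tuple(value.shape) == tuple(target[name].shape)
    }


if __name__ == "__main__":
    # Hypothetical layer names; COCO has 80 classes, the cotton head fewer.
    coco = {
        "backbone.conv1.weight": ShapeOnly((32, 3, 3, 3)),
        "neck.conv.weight": ShapeOnly((128, 256, 3, 3)),
        "head.cls.weight": ShapeOnly((80, 128, 1, 1)),
    }
    cotton = {
        "backbone.conv1.weight": ShapeOnly((32, 3, 3, 3)),
        "neck.conv.weight": ShapeOnly((64, 256, 3, 3)),  # slimmer neck layer
        "head.cls.weight": ShapeOnly((2, 128, 1, 1)),    # hypothetical class count
    }
    print(sorted(transferable_weights(coco, cotton)))  # ['backbone.conv1.weight']
```

With real PyTorch modules, the filtered dictionary would typically be passed to `model.load_state_dict(filtered, strict=False)`. Any layer without a match keeps its random initialization.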

3. Results

3.1. Experimental Environment Configuration

3.2. Evaluation Metrics

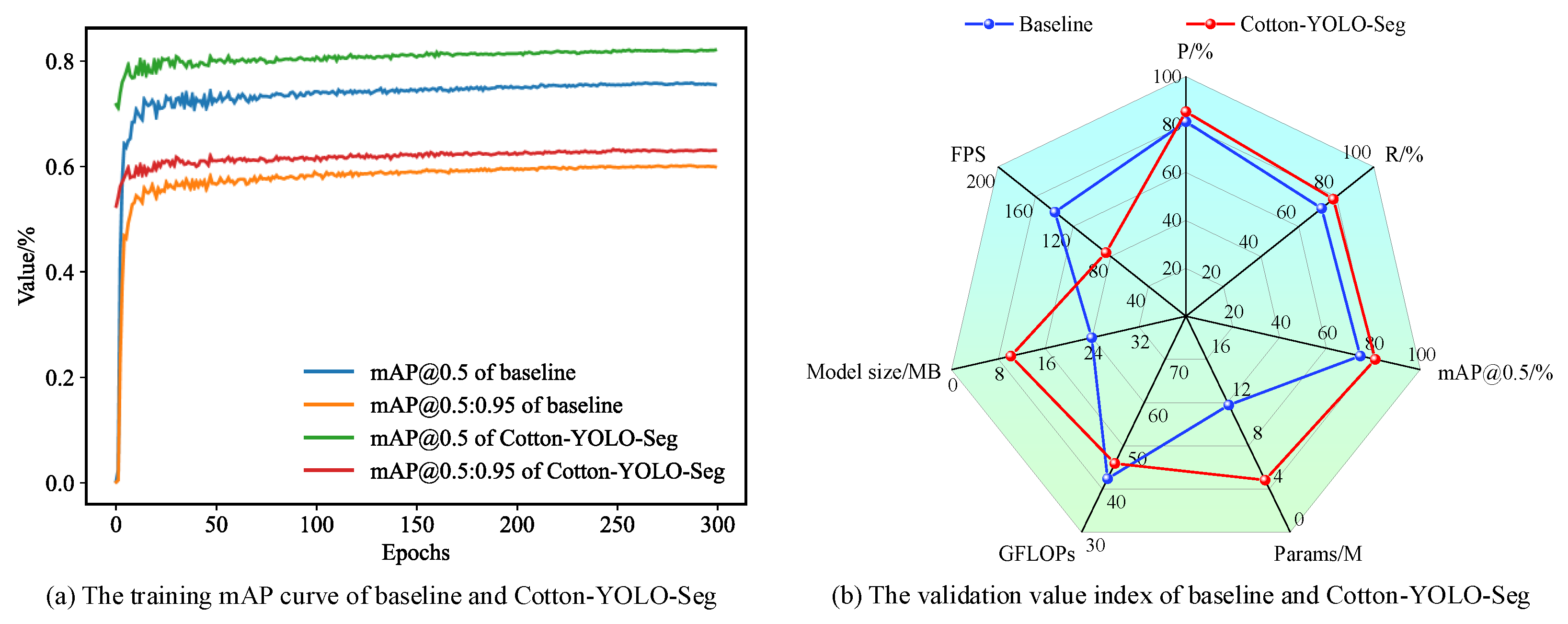

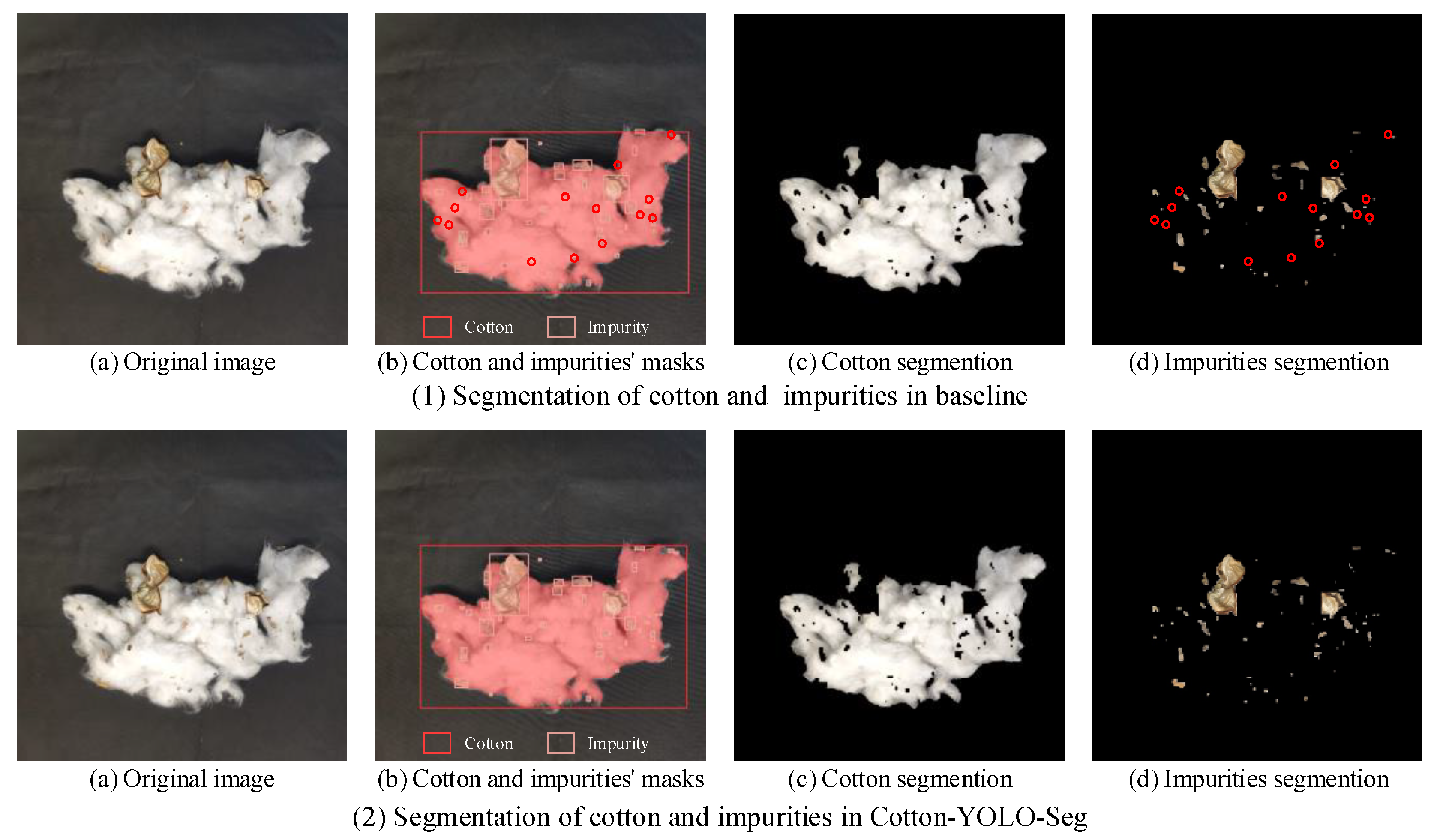
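
The tables report precision (P), recall (R) and mAP@0.5. The sketch below gives the standard definitions under the usual instance-segmentation convention. A predicted mask counts as a true positive when its mask IoU with a not-yet-matched ground-truth instance is at least 0.5. The sketch uses all-point interpolation. Evaluators such as the COCO toolkit instead sample precision at 101 recall points, so their values can differ slightly. The function names are illustrative and are not taken from the paper.

```python
def mask_iou(a: list[int], b: list[int]) -> float:
    """IoU of two binary masks given as flattened 0/1 pixel lists."""
    inter = sum(1 for x, y in zip(a, b) if x and y)
    union = sum(1 for x, y in zip(a, b) if x or y)
    return inter / union if union else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """P = TP / (TP + FP) and R = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores: list[float], is_tp: list[bool], n_gt: int) -> float:
    """All-point interpolated AP for one class.

    is_tp[i] is True when prediction i matched a not-yet-matched ground-truth
    instance with mask IoU >= 0.5 (the threshold behind mAP@0.5).
    """
    if n_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall, precision = [], []
    for i in order:  # walk predictions from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / n_gt)
        precision.append(tp / (tp + fp))
    r = [0.0] + recall + [1.0]
    p = [0.0] + precision + [0.0]
    for j in range(len(p) - 2, -1, -1):  # precision envelope, right to left
        p[j] = max(p[j], p[j + 1])
    return sum((r[j + 1] - r[j]) * p[j + 1] for j in range(len(r) - 1))


def mean_ap(ap_per_class: list[float]) -> float:
    """mAP: the unweighted mean of the per-class APs."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    print(mask_iou([1, 1, 0, 0], [1, 0, 1, 0]))
    print(precision_recall(tp=8, fp=2, fn=4))
    print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], n_gt=4))  # 0.625
```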

3.3. Comparison of Model Experiments

3.3.1. Comparative Experiments on the Improved MSCBCA Attention

3.3.2. Ablation Experiments

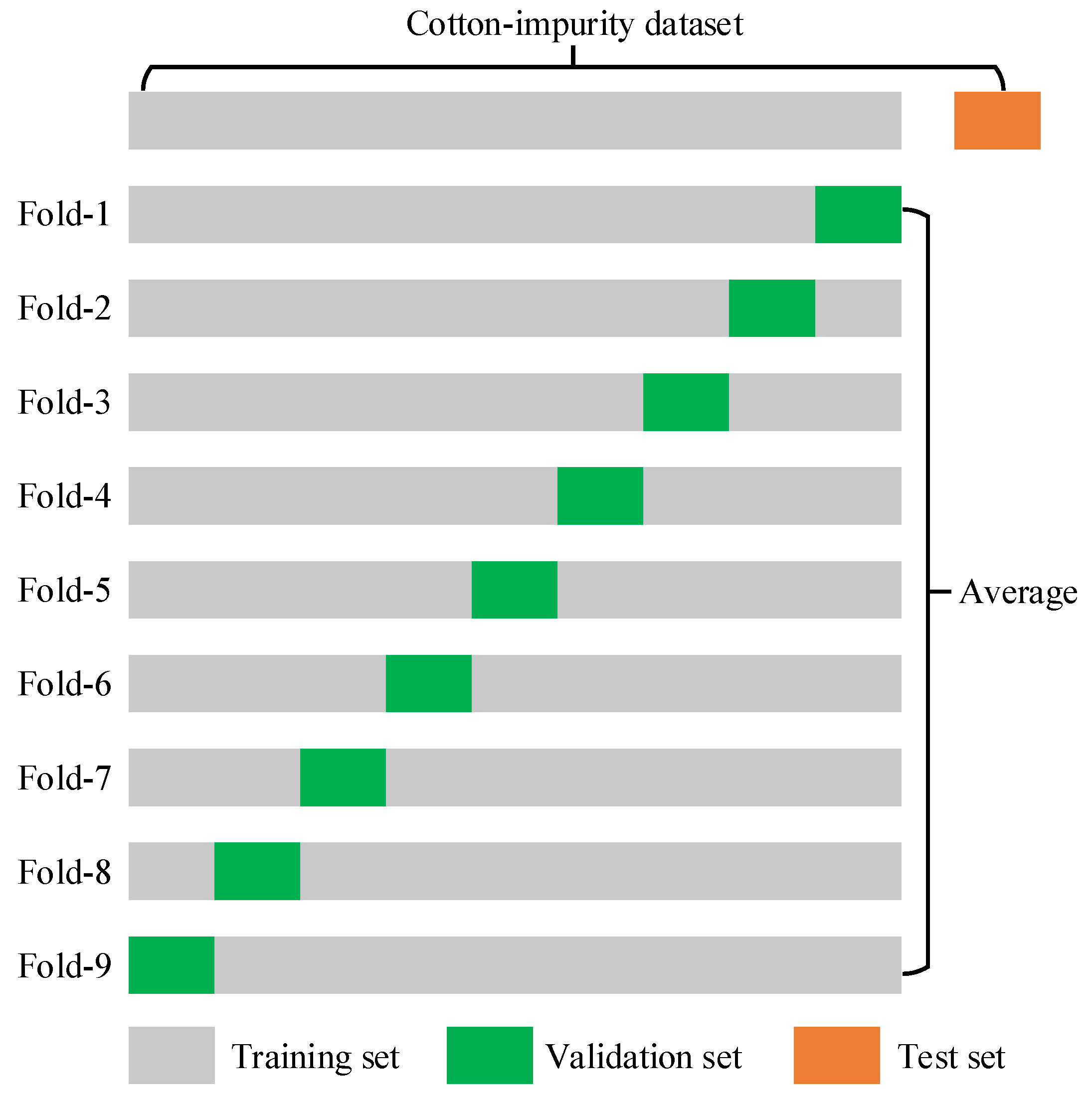

3.3.3. K-Fold Cross-Validation Experiments

3.3.4. Comparative Experiments with Different Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Hardware Platform or Software Environment | Model or Designation | Parameter or Version |
|---|---|---|
| CPU | Intel Xeon E5-2695 v4 | Frequency: 2.10 GHz |
| GPU | NVIDIA GeForce RTX 3060M | Memory: 12 GB |
| Operating system | Windows 10 Professional | RAM: 32 GB |
| Deep learning framework | PyTorch | 2.0.0 |
| Computing platform | CUDA | 11.7 |
| Integrated development environment | PyCharm | Community 2022.3.3 |
| Programming language | Python | 3.10.11 |

| Method | P/% | R/% | mAP@0.5/% | Params/M | Model Size/MB | GFLOPs |
|---|---|---|---|---|---|---|
| Baseline | 81.2 | 72.2 | 74.4 | 11.78 | 23.9 | 42.4 |
| Baseline + MSCA | 80.7 | 72.5 | 74.6 | 12.13 | 24.7 | 43.6 |
| Baseline + CBAM | 82.1 | 71.7 | 74.3 | 11.99 | 24.3 | 42.5 |
| Baseline + MSCBCA | 81.9 | 72.3 | 74.9 | 12.28 | 25.0 | 43.4 |

| MSCBCA | SlimNeck | Remove P4 | Transfer Learning | P/% | R/% | mAP@0.5/% |
|---|---|---|---|---|---|---|
| | | | | 81.2 | 72.2 | 74.4 |
| ✓ | | | | 81.9 (+0.7) | 72.3 (+0.1) | 74.9 (+0.5) |
| ✓ | ✓ | | | 85.9 (+4.7) | 77.4 (+5.2) | 80.4 (+6.0) |
| ✓ | ✓ | ✓ | | 85.2 (+4.0) | 78.1 (+5.9) | 80.8 (+6.4) |
| ✓ | ✓ | ✓ | ✓ | 85.4 (+4.2) | 78.4 (+6.2) | 80.8 (+6.4) |

| Fold | P/% | R/% | mAP@0.5/% |
|---|---|---|---|
| Fold-1 | 85.7 | 78.1 | 80.9 |
| Fold-2 | 85.1 | 78.4 | 80.8 |
| Fold-3 | 85.4 | 78.4 | 81.0 |
| Fold-4 | 85.2 | 78.5 | 80.8 |
| Fold-5 | 85.4 | 78.4 | 80.8 |
| Fold-6 | 85.0 | 78.5 | 81.0 |
| Fold-7 | 85.3 | 78.3 | 80.7 |
| Fold-8 | 85.0 | 78.4 | 80.7 |
| Fold-9 | 85.0 | 78.5 | 80.8 |
| Average | 85.2 | 78.4 | 80.8 |
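
The cross-validation table reports nine folds and their average. The following is a minimal sketch of a shuffled k-fold split and of the fold averaging. It assumes folds of near-equal size. The dataset size of 100 images is hypothetical, and the per-fold values are copied from the table above.

```python
import random


def kfold_indices(n_samples: int, k: int, seed: int = 0):
    """Yield (train, val) index lists for k near-equal folds.

    Indices are shuffled once with a fixed seed, so every sample lands in
    exactly one validation fold.
    """
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)  # first `extra` folds get one more
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size


def fold_average(rows: list[tuple[float, ...]]) -> list[float]:
    """Column-wise mean of per-fold metrics, rounded to one decimal."""
    return [round(sum(col) / len(col), 1) for col in zip(*rows)]


# (P, R, mAP@0.5) per fold, copied from the cross-validation table
FOLDS = [
    (85.7, 78.1, 80.9), (85.1, 78.4, 80.8), (85.4, 78.4, 81.0),
    (85.2, 78.5, 80.8), (85.4, 78.4, 80.8), (85.0, 78.5, 81.0),
    (85.3, 78.3, 80.7), (85.0, 78.4, 80.7), (85.0, 78.5, 80.8),
]

if __name__ == "__main__":
    splits = list(kfold_indices(n_samples=100, k=9))  # hypothetical dataset size
    print([len(val) for _, val in splits])
    print(fold_average(FOLDS))  # [85.2, 78.4, 80.8]
```

Recomputing the column means this way reproduces the Average row of the table.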

| Model | P/% | R/% | mAP@0.5/% | Params/M | Model Size/MB | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| Baseline | 81.2 | 72.2 | 74.4 | 11.78 | 23.9 | 42.4 | 139.4 |
| YOLOv5s-seg | 80.0 | 71.5 | 73.8 | 9.77 | 19.9 | 37.8 | 145.5 |
| YOLOv8n-seg | 78.8 | 71.5 | 72.9 | 3.26 | 6.8 | 12.0 | 302.2 |
| YOLOv8m-seg | 81.5 | 72.2 | 74.8 | 27.22 | 54.8 | 110.0 | 59.2 |
| YOLOv8l-seg | 82.0 | 72.6 | 75.4 | 45.91 | 92.3 | 220.1 | 37.2 |
| YOLOv8x-seg | 83.2 | 72.4 | 75.8 | 71.72 | 144.0 | 343.7 | 22.1 |
| YOLOv9-gelan-c-seg | 80.6 | 72.1 | 74.0 | 27.36 | 55.7 | 144.2 | 37.3 |
| YOLOv10s-seg | 80.9 | 72.4 | 74.1 | 9.17 | 18.8 | 40.5 | 135.7 |
| YOLACT | 87.1 | 60.3 | 56.9 | 34.73 | 133.0 | 81.5 | 8.3 |
| SOLO | 89.5 | 63.1 | 61.6 | 36.12 | 138.0 | 143.0 | 7.6 |
| SOLOv2 | 91.8 | 64.2 | 63.0 | 46.23 | 177.0 | 132.0 | 7.9 |
| Mask R-CNN | 88.9 | 67.7 | 68.2 | 43.98 | 169.0 | 135.0 | 6.4 |
| Cotton-YOLO-Seg | 85.4 | 78.4 | 80.8 | 4.82 | 10.1 | 45.9 | 85.1 |

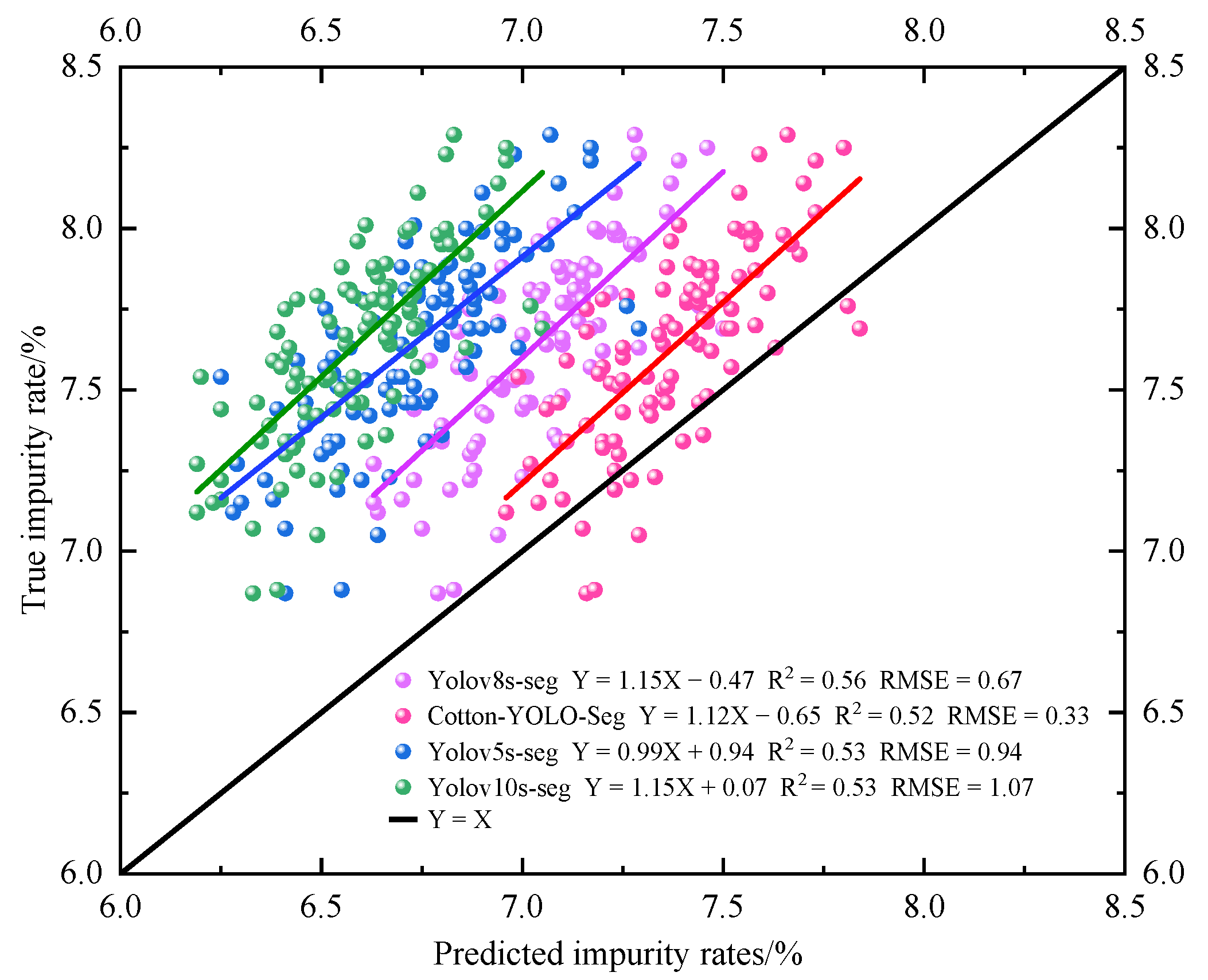

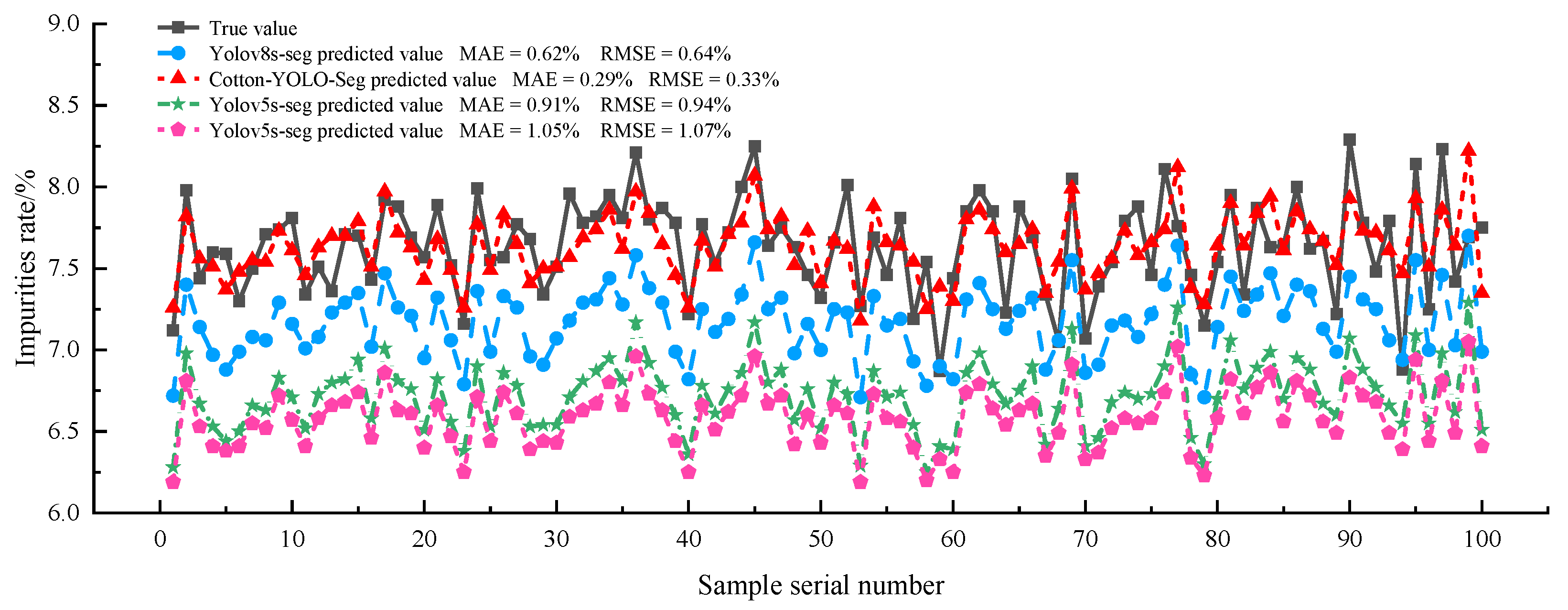

| Model | Average Actual Impurity Rate/% | Average Detected Impurity Rate/% | MAE/% | RMSE/% | MAPE/% |
|---|---|---|---|---|---|
| Baseline | 7.64 | 7.01 | 0.61 | 0.64 | 8.00 |
| Cotton-YOLO-Seg | 7.64 | 7.38 | 0.29 | 0.33 | 3.70 |
| YOLOv5s-seg | 7.64 | 6.73 | 0.91 | 0.94 | 11.90 |
| YOLOv10s-seg | 7.64 | 6.59 | 1.05 | 1.07 | 13.73 |
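
The MAE, RMSE and MAPE columns compare the detected impurity rate with the actual impurity rate sample by sample. The following sketch computes them under the standard definitions over n test samples. The three sample values are hypothetical and are not the paper's data. Because the impurity rate is itself a percentage, MAE and RMSE are expressed in percentage points, while MAPE is a relative error.

```python
import math


def error_metrics(actual: list[float], detected: list[float]) -> tuple[float, float, float]:
    """Return (MAE, RMSE, MAPE) between actual and detected impurity rates.

    MAE and RMSE are in the same unit as the inputs (percentage points when
    the rates are percentages); MAPE is a relative error expressed in percent.
    """
    if len(actual) != len(detected) or not actual:
        raise ValueError("need two equal-length, non-empty lists")
    errors = [d - a for a, d in zip(actual, detected)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mape = 100 * sum(abs(e) / a for e, a in zip(errors, actual)) / n
    return mae, rmse, mape


if __name__ == "__main__":
    actual = [8.0, 7.0, 8.0]    # hypothetical per-sample impurity rates (%)
    detected = [7.5, 7.0, 8.5]
    mae, rmse, mape = error_metrics(actual, detected)
    print(f"MAE={mae:.3f} RMSE={rmse:.3f} MAPE={mape:.3f}")  # MAE=0.333 RMSE=0.408 MAPE=4.167
```

RMSE is never smaller than MAE, and it grows faster when a few samples have large errors. This is why the two are usually reported together.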

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiang, L.; Chen, W.; Shi, H.; Zhang, H.; Wang, L. Cotton-YOLO-Seg: An Enhanced YOLOV8 Model for Impurity Rate Detection in Machine-Picked Seed Cotton. Agriculture 2024, 14, 1499. https://doi.org/10.3390/agriculture14091499

Jiang L, Chen W, Shi H, Zhang H, Wang L. Cotton-YOLO-Seg: An Enhanced YOLOV8 Model for Impurity Rate Detection in Machine-Picked Seed Cotton. Agriculture. 2024; 14(9):1499. https://doi.org/10.3390/agriculture14091499

Chicago/Turabian Style: Jiang, Long, Weitao Chen, Hongtai Shi, Hongwen Zhang, and Lei Wang. 2024. "Cotton-YOLO-Seg: An Enhanced YOLOV8 Model for Impurity Rate Detection in Machine-Picked Seed Cotton" Agriculture 14, no. 9: 1499. https://doi.org/10.3390/agriculture14091499

APA Style: Jiang, L., Chen, W., Shi, H., Zhang, H., & Wang, L. (2024). Cotton-YOLO-Seg: An Enhanced YOLOV8 Model for Impurity Rate Detection in Machine-Picked Seed Cotton. Agriculture, 14(9), 1499. https://doi.org/10.3390/agriculture14091499