Abstract

This study presents a rice pest detection system based on the YOLO-RMD model, developed to enable precise and timely pest monitoring in field environments. To address the challenges of real-time detection accuracy and environmental adaptability, the proposed model integrates three components: (1) a Receptive Field Attention Convolution (RFAConv) module that enhances feature extraction in complex backgrounds; (2) a Mixed Local Channel Attention (MLCA) module that balances local and global features to improve detection precision for small targets in dense foliage; and (3) an enhanced multi-scale detection architecture that combines Dynamic Head (DyHead) with an additional detection head, improving multi-scale pest detection capability and coverage. Experimental results show that YOLO-RMD achieves a mean Average Precision at an IoU threshold of 0.5 (mAP50) of 98.2% across seven common rice pests, 3 percentage points higher than YOLOv8n, while maintaining real-time processing capability. By meeting the dual requirements of precision and timeliness in field monitoring, this integrated solution advances agricultural vision systems and provides practical implementation pathways for precision pest management under real-world farming conditions.

1. Introduction

Rice (Oryza sativa L.), a cornerstone of global food security, serves as the primary staple for over half of the world’s population and contributes approximately 20% of human dietary energy requirements [1]. As highlighted in the 2024 State of Food and Agriculture report by the Food and Agriculture Organization (FAO), rice cultivation is a critical component of agricultural food systems, particularly in regions where food security is most vulnerable. However, rice production faces significant threats from pest infestations, with studies indicating annual yield losses of up to 30% due to delayed pest detection [2]. This economic impact is exacerbated by the rapid growth of pest populations, which typically increase two- to threefold before they are detected by conventional methods. Consequently, developing precise and timely in-field pest monitoring has become critical for enhancing rice productivity and ensuring food security. This aligns with the FAO’s emphasis on precision agricultural practices and on reducing the hidden costs associated with pest-related losses.

In the early stages of pest detection technology development, manual methods were primarily employed. These methods included the use of pest trap lights and yellow sticky boards to track pest populations. However, these methods relied heavily on manual labor, which was not only costly and inefficient for farmers but also highly subjective and prone to errors. The labor-intensive nature of manual monitoring meant that pest detection was often delayed, leading to significant yield losses.

To improve the efficiency of pest management, unmanned intelligent monitoring is crucial, with artificial intelligence (AI) technology serving as its foundation. As technology advances, AI has become a transformative force in agriculture. Among these innovations, deep learning techniques have gained significant attention for their ability to detect plant pests and diseases with minimal manual intervention. Key approaches such as Convolutional Neural Networks (CNNs), the Single Shot MultiBox Detector (SSD) [3], and You Only Look Once (YOLO) [4,5,6] have demonstrated notable potential in object detection tasks. For instance, Thenmozhi et al. introduced an efficient deep CNN model that uses transfer learning to fine-tune pre-trained models [7], achieving classification accuracies of 96.75%, 97.47%, and 95.97% on three publicly available insect datasets. However, such CNNs struggle with computational complexity and with multi-scale object detection in complex environments. Similarly, Fuentes et al. combined Faster R-CNN with deep feature extractors such as VGG-Net and ResNet to detect tomato pests and diseases [8], achieving an average precision of 85.98% on a dataset of 5000 images covering nine types of pests and diseases. However, the high computational demands of Faster R-CNN hinder real-time applications. The SSD method, while balancing speed and accuracy, has difficulty detecting small objects in cluttered agricultural settings. Recent YOLO models, known for their speed and efficiency, have been adapted for various agricultural applications. Yang et al. developed Maize-YOLO, a modified version of YOLOv7 that integrates CSPResNeXt-50 and VoVGSCSP modules [9]. This approach reduced computational load while improving detection accuracy, achieving a mean Average Precision (mAP) of 76.3% on a dataset of 4533 images covering 13 categories of maize pests.

Despite its advantages, YOLO’s performance declines with small objects or complex backgrounds. Recent advances, such as Wang et al.’s attention-based CNN (93.16% accuracy) [10] and Jiao et al.’s adaptive Pyramid Network (77% accuracy on AgriPest21) [11], highlight the potential of domain-specific optimizations. Nevertheless, the application of deep learning to plant pest detection still faces several challenges.

Rice cultivation presents particularly pronounced challenges for pest detection. First, the dense planting environment and complex field backgrounds significantly increase the difficulty of target feature extraction. Second, pest targets exhibit pronounced multi-scale characteristics: body sizes among different rice pest species (e.g., planthoppers and rice borers) can span up to an order of magnitude, and even within a single species the size difference between larvae and adults can be substantial, challenging the scale adaptability of detection models. Third, when a model must detect mixed pest species simultaneously, these multi-scale constraints are further amplified, so traditional single-pest detection solutions cannot be transferred directly. Despite extensive research, a universally effective algorithm to address these complexities has yet to emerge.

Successive generations of detection models, including YOLO-based ones, have been refined to address small-object detection, particularly in agricultural applications such as rice pest identification. Early detection models relied primarily on conventional CNN architectures with fixed-scale convolution operations, which often produced sparse feature extraction and missed detections in complex environments with occluded and overlapping targets. To mitigate these limitations, Hu et al. integrated a generative adversarial network (GAN) with a multi-scale dual-branch recognition model built on an enhanced residual network (ResNet) for rice disease and pest identification [12]. The optimized GAN-MSDB-ResNet model achieved an accuracy of 99.34%, outperforming classical networks such as AlexNet. However, its reliance on fixed receptive fields limited its adaptability to morphological variations. To enhance feature adaptability, Li et al. introduced SAFFPest, a self-attention feature fusion model based on VarifocalNet that incorporates a deformable convolution module to improve feature extraction from pests with variable shapes [13]. The model detected nine rice pest species, including the rice leaf folder and rice armyworm, improving average precision by 33.7%, 6.5%, 4.5%, 2.9%, and 2% over Faster R-CNN, RetinaNet, CP-FCOS, VFNet, and BiFA-YOLO, respectively. This work underscored the role of attention mechanisms in adapting to morphological variability.

Building on YOLO, Hu et al. proposed YOLO-GBS, an improved version of YOLOv5s designed to enhance small-object detection through multiple architectural refinements [14]. Global Context (GC) attention improved feature extraction, replacing PANet with a Bidirectional Feature Pyramid Network (BiFPN) optimized feature fusion, Swin Transformer blocks replaced part of the convolutional structure to better capture global information, and an additional detection head expanded the detection scale. Together, these modifications yielded an mAP of 79.8% on pest datasets, a 5.4% improvement over the original YOLOv5s. However, the dataset lacked real-world environmental factors such as weather variations, raising concerns about robustness and real-time performance degradation in field conditions. More generally, despite improvements in spatial feature correlation and dynamic receptive field adjustment, existing solutions still face efficiency bottlenecks in balancing accuracy against computational cost.

Current models also show significant limitations in meeting multi-class detection requirements. For instance, Yao et al. developed a handheld device that uses Haar features and Histogram of Oriented Gradients (HOG) to detect white-backed rice planthoppers, achieving an accuracy of 85.2% [15]. He et al. introduced a two-tier Faster R-CNN framework that uses a different backbone network in each tier to optimize computational resources and improve detection under varying population densities [16]. The model outperformed YOLOv3 in empirical evaluations, achieving a mean recall of 81.92% and an average precision of 94.64%, but it remained limited to a single pest type. Similarly, Zhang et al. proposed RPH-Counter, which incorporates a Self-Attention Feature Pyramid Network (SAFPN) and Spatial Self-Attention (SSA) to improve rice planthopper detection [17], and Liu et al. combined Mask R-CNN with GAN-based data augmentation to increase sensitivity to pest information, achieving higher precision, recall, and F1-score than Faster R-CNN, SSD, and YOLOv5 [18]. Nevertheless, these models detect only specific pest species, such as the gray planthopper, and do not generalize to broader pest detection scenarios. Moreover, although attention mechanisms enhance spatial correlation, existing models still struggle with multi-scale pest detection, because traditional single-scale attention cannot capture feature differences between millimeter- and centimeter-scale targets. These challenges highlight the need for further research on optimizing YOLO-based architectures for real-world agricultural applications, ensuring robust multi-species detection while maintaining computational efficiency.

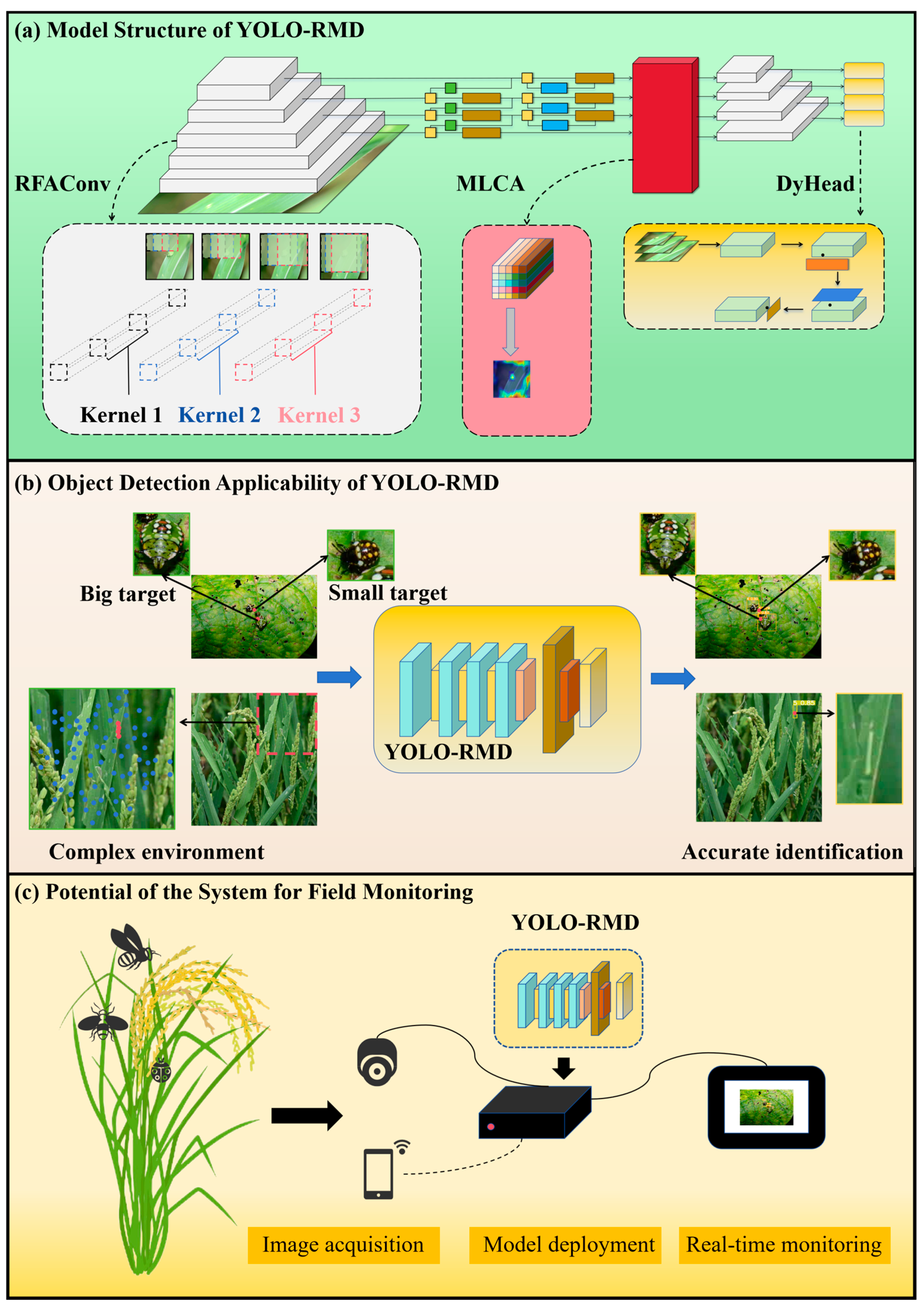

To address these limitations, this study proposes YOLO-RMD, a novel algorithm based on YOLOv8 that uses multiple attention mechanisms to improve feature extraction in complex environments. By replacing the detection head and adding a fourth detection head, YOLO-RMD significantly enhances multi-scale target detection; the technical route is shown in Figure 1a. This research makes three key contributions:

Figure 1.

The technical route of this study: (a) the model structure of YOLO-RMD; (b) the object detection applicability of YOLO-RMD model; (c) the potential of the pest detection system for field monitoring.

- (1) High-precision perception in complex field environments: The proposed YOLO-RMD algorithm improves the ability to accurately detect pests in challenging field conditions, where complex backgrounds and varying lighting can hinder detection performance. This ensures robust pest recognition even in cluttered or dynamic agricultural environments.

- (2) Multi-scale perception for various rice pest species: By enhancing the model’s detection capabilities across different scales, YOLO-RMD addresses the challenge of detecting rice pests that may appear at varying sizes. This ensures better detection of both large and small pests, improving overall detection accuracy for multiple species of pests in rice fields. The technical route of these two points is shown in Figure 1b.

- (3) Potential for accurate and real-time detection in practical applications: YOLO-RMD offers a practical solution for real-time pest detection in agricultural settings, providing accurate monitoring that can be applied directly to field operations. This enhances the timely management of pest outbreaks, bridging the gap between laboratory-based research and actual field usage. The technical route is shown in Figure 1c.

The present study does not pursue disruptive innovation at the theoretical level; rather, it addresses the specific demands of agricultural scenarios (e.g., multi-scale targets and complex field backgrounds) by systematically reorganizing existing modules to resolve practical application bottlenecks. Although RFAConv, DyHead, and MLCA are previously published techniques, to our knowledge this is the first time their combination and optimization have been tailored specifically to agricultural object detection. This problem-driven module reorganization is the central innovation of this research, and its value was validated empirically through field applications. This advancement is important for developing precise, real-time rice pest and disease monitoring systems that bridge the gap between laboratory research and field implementation. Collectively, these contributions support enhanced and scalable pest surveillance frameworks for rice cultivation, with significant potential for translational agricultural applications.

2. Materials and Methods

2.1. Image Dataset

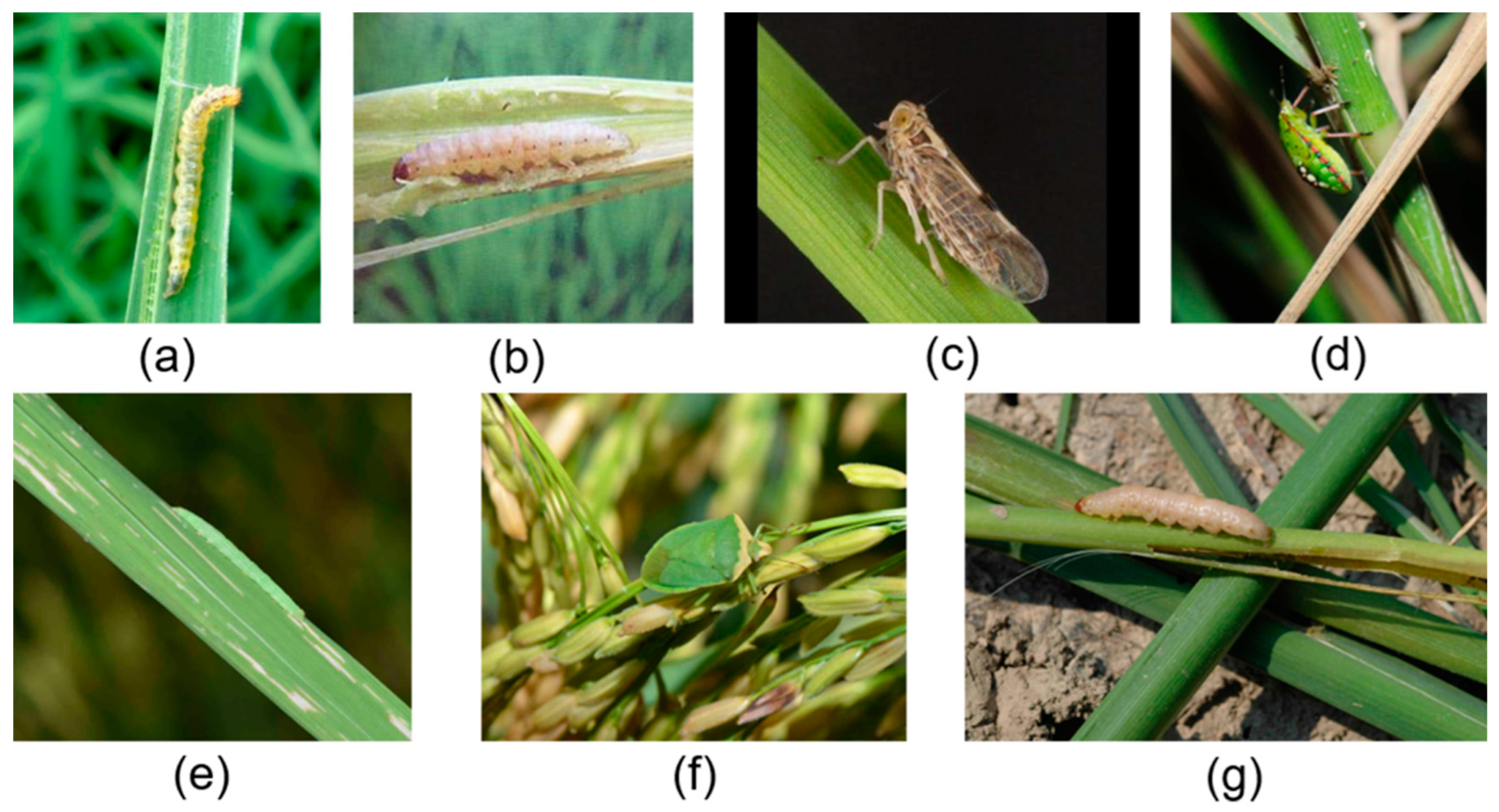

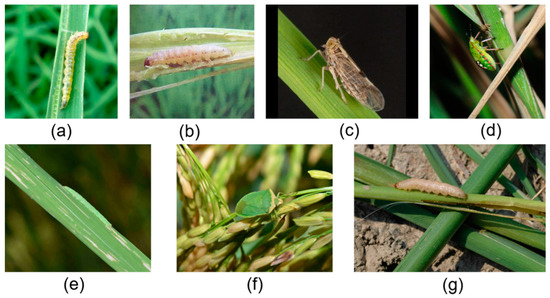

This study focused on detecting seven common rice pests, namely Nilaparvata lugens (Homoptera: Delphacidae), Cnaphalocrocis medinalis (Lepidoptera: Crambidae), Chilo suppressalis (Lepidoptera: Crambidae), the larvae of Nezara viridula (Hemiptera: Pentatomidae), Nezara viridula (Hemiptera: Pentatomidae), Naranga aenescens Moore (Lepidoptera: Noctuidae), and Sesamia inferens (Lepidoptera: Noctuidae). Representative images of these pests are shown in Figure 2. The self-built dataset was compiled from two primary sources. The first was the IP102 dataset, which provided images of Nilaparvata lugens, Cnaphalocrocis medinalis, and Chilo suppressalis. The second was online platforms, including Kaggle and search engines such as Baidu, which yielded images of the larvae of Nezara viridula, Nezara viridula, Naranga aenescens Moore, and Sesamia inferens. These were supplemented with additional images from the IP102 collection, giving a total of 1551 rice pest images, which were manually annotated using LabelImg v1.3.3.

Figure 2.

Samples of seven types of rice pests: (a) Cnaphalocrocis medinalis; (b) Chilo suppressalis; (c) Nilaparvata lugens; (d) the larvae of Nezara viridula; (e) Naranga aenescens Moore; (f) Nezara viridula; (g) Sesamia inferens.

Due to significant variations in the number of images across different pest types, image augmentation techniques were employed to mitigate overfitting and balance the dataset. The augmentation strategies included:

- (1) Random rotation (within a range of ±30°) to simulate pests’ multi-angle postures in natural environments;

- (2) Random cropping to mimic partial occlusion scenarios commonly encountered in field conditions;

- (3) Gaussian blur (σ = 1.0–2.0) to simulate defocus effects caused by varying camera distances or motion blur;

- (4) Random color adjustments (brightness ± 20%, contrast ± 15%) to replicate diverse lighting conditions, such as shadows or overexposure.

These techniques enhanced the model’s robustness by simulating real-field challenges, including pest pose variability, occlusion, dynamic lighting, and weather variations, thereby improving generalization capabilities for practical deployment.
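The four augmentation operations can be sketched in NumPy as follows. This is a minimal illustration, not the pipeline used in the study: the function names (`rotate`, `gaussian_blur`, `augment`), the choice to apply each operation independently with probability `p = 0.5`, and the 80–100% crop range are all assumptions. Only the ±30° rotation, σ = 1.0–2.0 blur, and ±20% brightness / ±15% contrast ranges come from the text. Bounding-box labels would also have to be rotated and cropped, which is omitted here.

```python
import numpy as np

def rotate(img: np.ndarray, angle_deg: float) -> np.ndarray:
    """Rotate an HxWxC image about its centre (nearest neighbour, zero fill)."""
    h, w = img.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: locate the source pixel of every output pixel.
    src_x = np.cos(t) * (xx - cx) + np.sin(t) * (yy - cy) + cx
    src_y = -np.sin(t) * (xx - cx) + np.cos(t) * (yy - cy) + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(img)
    out[valid] = img[sy[valid], sx[valid]]
    return out

def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of an HxWxC image with edge padding."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()                                   # normalised kernel keeps flat regions flat
    h, w = img.shape[:2]
    p = np.pad(img, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    rows = sum(wt * p[i:i + h] for i, wt in enumerate(k))        # blur vertically
    return sum(wt * rows[:, j:j + w] for j, wt in enumerate(k))  # then horizontally

def augment(img: np.ndarray, rng: np.random.Generator, p: float = 0.5) -> np.ndarray:
    """Apply each of the four operations independently with probability p (assumed)."""
    if rng.random() < p:                           # (1) rotation within +/-30 degrees
        img = rotate(img, rng.uniform(-30.0, 30.0))
    if rng.random() < p:                           # (2) random crop, 80-100% per side (assumed)
        h, w = img.shape[:2]
        ch = max(1, int(h * rng.uniform(0.8, 1.0)))
        cw = max(1, int(w * rng.uniform(0.8, 1.0)))
        y0 = rng.integers(0, h - ch + 1)
        x0 = rng.integers(0, w - cw + 1)
        img = img[y0:y0 + ch, x0:x0 + cw]
    if rng.random() < p:                           # (3) Gaussian blur, sigma in [1, 2]
        img = gaussian_blur(img, rng.uniform(1.0, 2.0))
    if rng.random() < p:                           # (4) contrast +/-15%, brightness +/-20%
        mean = img.mean()
        img = (img - mean) * rng.uniform(0.85, 1.15) + mean
        img = img * rng.uniform(0.8, 1.2)
    return np.clip(img, 0.0, 1.0)

rng = np.random.default_rng(42)
image = rng.random((64, 48, 3))                    # stand-in for an RGB image scaled to [0, 1]
augmented = [augment(image, rng) for _ in range(4)]
```

Applying each operation stochastically yields a different combination per copy, which is one common way to multiply a small dataset; the study may instead have applied each technique separately to produce distinct copies.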

After augmentation, the self-built dataset contains a total of 18,168 rice pest images; the number of images per species before and after augmentation is shown in Table 1. The augmented dataset was then divided into training, validation, and test sets at a ratio of 8:1:1 for model training.
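The 8:1:1 division can be checked with a short sketch. The shuffling seed, the rounding rule (floor for the training and validation sets, with the remainder going to the test set), and the helper name `split_8_1_1` are assumptions; the source does not state how fractional counts were handled or whether the split was stratified by species. The sketch also derives the number of optimizer steps implied by the batch size of 16 and the 200 training epochs reported in Section 2.3.

```python
import math
import random

def split_8_1_1(items, seed=0):
    """Shuffle and split into train/val/test at 8:1:1 (remainder goes to test)."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train, n_val = int(len(items) * 0.8), int(len(items) * 0.1)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])

train, val, test = split_8_1_1(range(18_168))
print(len(train), len(val), len(test))           # 14534 1816 1818

# Optimizer steps per epoch and in total at batch size 16 over 200 epochs.
iters_per_epoch = math.ceil(len(train) / 16)
print(iters_per_epoch, 200 * iters_per_epoch)    # 909 181800
```

Because augmentation was applied before splitting, one design option is to split by original image ID rather than by augmented copy, so that near-duplicates of the same source photograph cannot appear in both the training and test sets; the source does not say which approach was used.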

Table 1.

Number of images of the seven pest species before and after data augmentation.

2.2. The Proposed Method (YOLO-RMD)

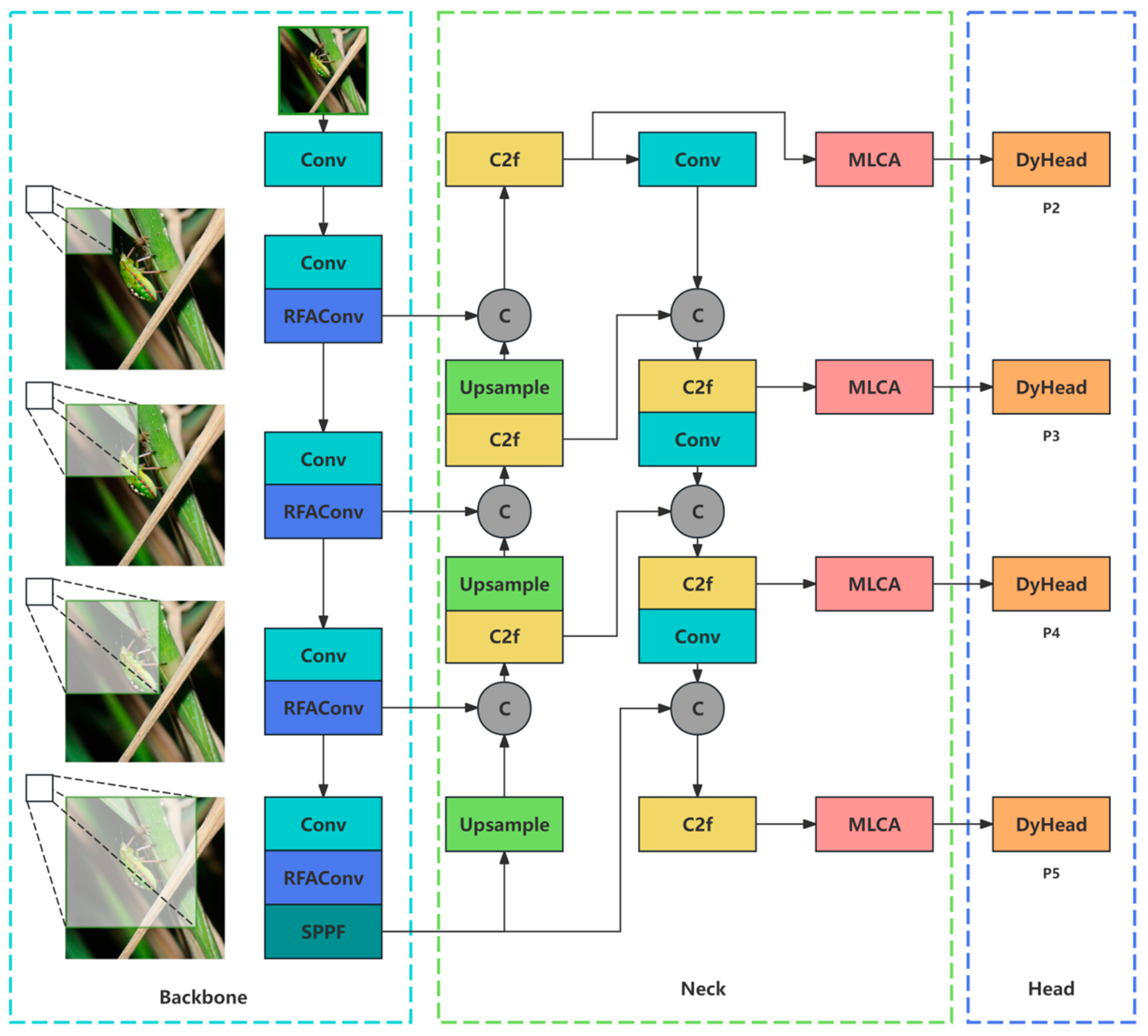

YOLO is a real-time object detection algorithm that predicts the locations and categories of multiple objects in a single forward pass. YOLOv8, developed by Ultralytics as an advanced iteration of YOLOv5, incorporates the ELAN design principles of YOLOv7 into its backbone and neck, and replaces the C3 structure of YOLOv5 with the C2f structure, which provides richer gradient flow. Compared with its predecessors, YOLOv8 delivers substantially better performance. YOLOv8 is offered in five variants of increasing parameter size: YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x. The results of training these five models on the self-built rice pest dataset are presented in Table 2. For practical applications, YOLOv8n was selected as the baseline because it has the fewest parameters and the fastest inference speed while maintaining high accuracy. However, given the diversity of pest types and sizes and the complexity of field backgrounds, existing algorithms show limited performance. To address this, this study proposes YOLO-RMD, an enhanced model designed specifically for rice pest detection in complex environments; its architecture is illustrated in Figure 3. First, Receptive Field Attention Convolution (RFAConv) replaces the convolutions in the original C2f modules of the YOLOv8n backbone, significantly improving feature extraction accuracy in complex backgrounds. Second, the original detection head is replaced with DyHead and supplemented by an additional detection head to improve multi-class and multi-scale pest feature extraction. Third, Mixed Local Channel Attention (MLCA) is integrated to balance attention between local and global features, improving overall multi-scale detection performance with negligible additional parameters.

Table 2.

Comparison results of the five YOLOv8 variants.

Figure 3.

Structure diagram of YOLO-RMD. The model has three parts: backbone, neck and head, where the RFAConv module is located in backbone, the MLCA module is located in neck, and the head has a total of four DyHead detection outputs.

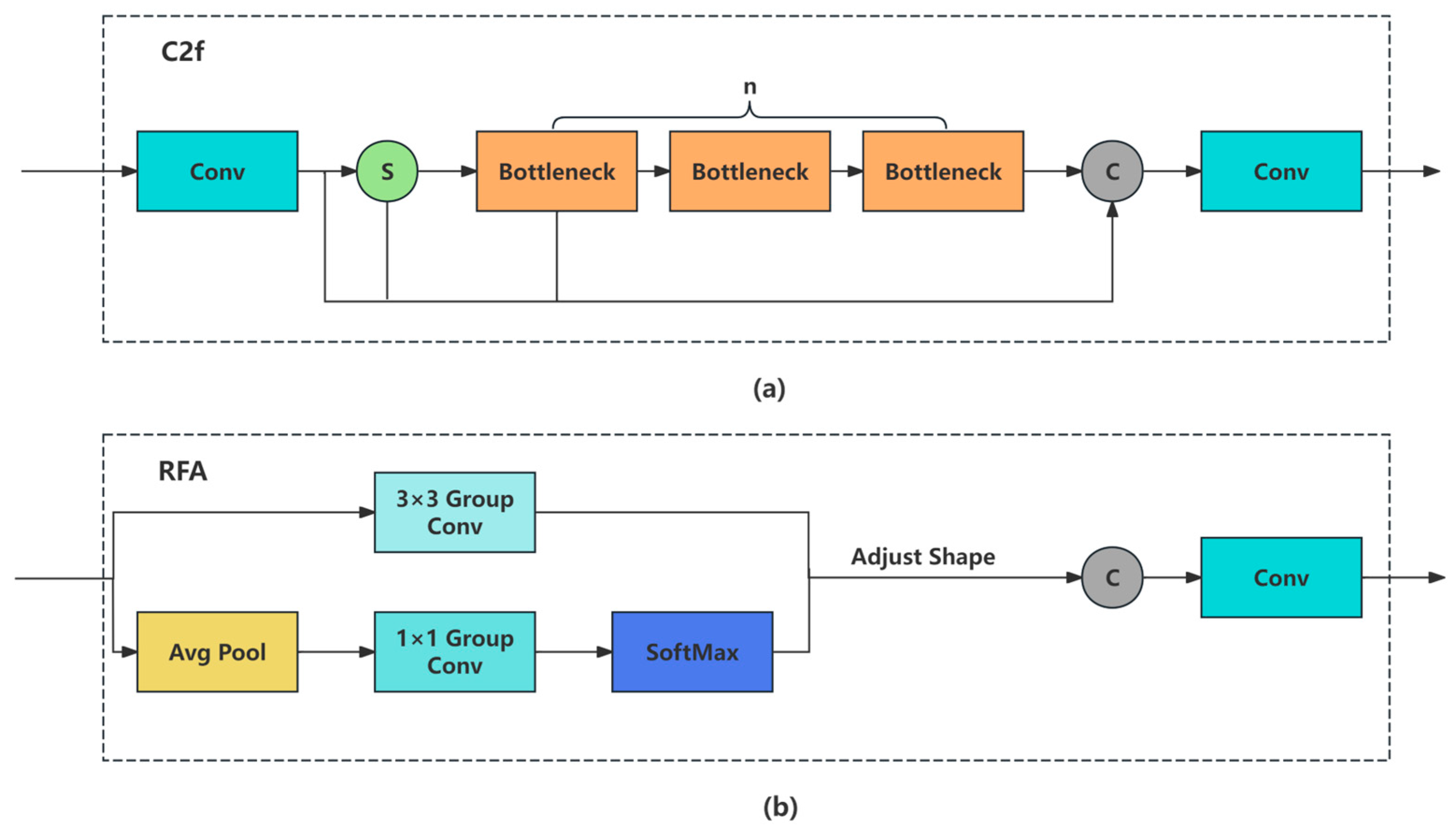

2.2.1. Receptive Field Attention

While conventional spatial attention mechanisms such as CBAM [19] and Coordinate Attention [20] enhance CNN performance, they do not fully address the parameter-sharing limitation of standard convolutions. This limitation is critical in rice pest detection, where targets vary unpredictably in position and size against complex backgrounds, and fixed convolutional operations struggle to adapt to such variations, compromising feature extraction [21,22,23].

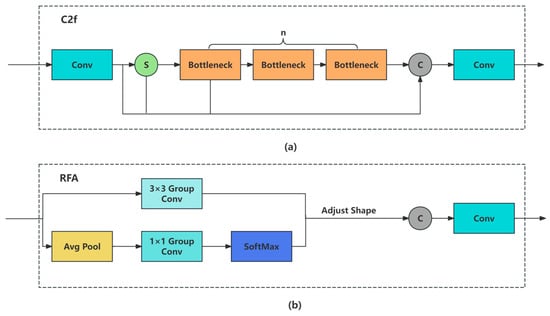

Receptive Field Attention (RFA) [24] overcomes these limitations through dynamic parameter adaptation. Unlike traditional approaches, RFA generates position-specific weights for each receptive field, enabling kernels to prioritize contextually relevant features. Integrated into the YOLOv8 backbone as RFAConv (Figures 4 and 5), it replaces the fixed Bottleneck operations of the C2f module. Although C2f concatenates multi-scale features through sequential convolutions, its uniform processing limits detection robustness when pests are scattered across the image.

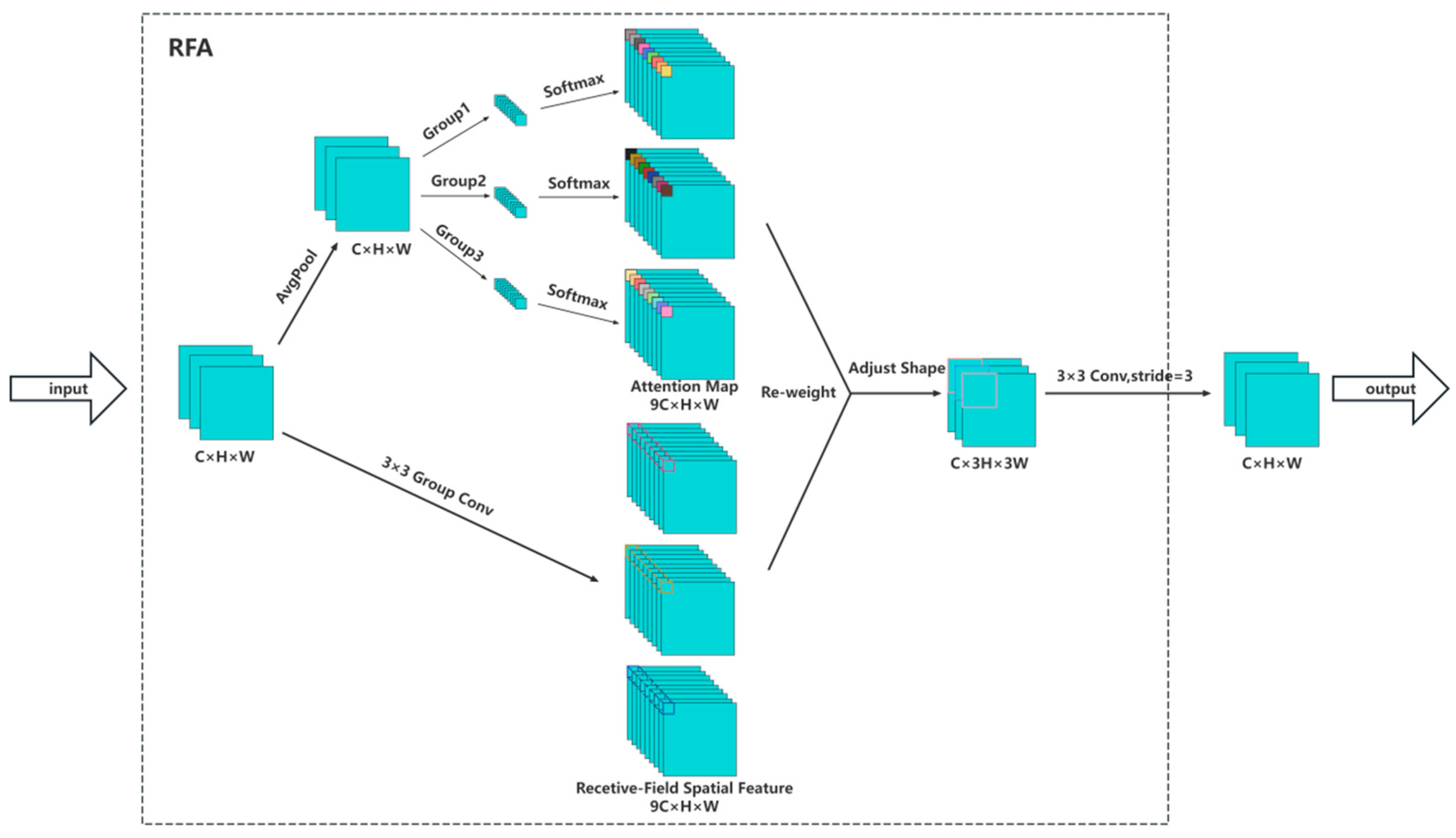

Figure 4.

Comparison of structural differences between C2f and RFAConv modules: (a) the structure of the C2f module in the original YOLOv8 backbone; (b) the structure of the RFAConv module used to replace C2f in this study.

Figure 5.

Workflow of RFAConv. The proposed RFAConv module sequentially performs: (1) rapid Group Convolutions for local feature extraction; (2) AvgPool-based global context aggregation; (3) 1 × 1 Group Convolutions for cross-receptive-field interaction; and (4) softmax-based feature importance weighting.

RFAConv enhances adaptability in the following ways: (1) group convolutions capturing localized details; (2) AvgPool-aggregated global context; (3) cross-receptive-field interactions via 1 × 1 group convolutions; and (4) Softmax-based feature prioritization. This architecture maintains computational efficiency while addressing positional insensitivity from parameter sharing, significantly improving pest detection in cluttered environments.
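The four RFAConv steps can be illustrated with a NumPy forward pass. This is a simplified sketch, not the reference implementation: it assumes stride 1, omits batch normalization and biases, and uses random untrained weights; the function names `unfold` and `rfa_conv` are hypothetical. It shows the key idea that each k × k receptive field receives its own softmax weights, after which the weighted receptive fields are laid out as non-overlapping k × k blocks and consumed by a stride-k convolution, so kernel parameters are no longer shared blindly across positions.

```python
import numpy as np

def unfold(x: np.ndarray, k: int) -> np.ndarray:
    """Zero-padded k x k neighbourhoods of a (C, H, W) map: shape (C, k, k, H, W)."""
    c, h, w = x.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    rows = [np.stack([xp[:, dy:dy + h, dx:dx + w] for dx in range(k)], axis=1)
            for dy in range(k)]
    return np.stack(rows, axis=1)

def rfa_conv(x, w_feat, w_att, w_out, k=3):
    """Receptive-field attention convolution (stride 1, no BN or bias).

    x:      (C, H, W) input feature map
    w_feat: (C, k*k, k, k) group-conv kernels, one feature map per RF position
    w_att:  (C, k*k) 1x1 group-conv weights applied to the pooled map
    w_out:  (C_out, C, k, k) final k x k convolution applied with stride k
    """
    c, h, w = x.shape
    patches = unfold(x, k)                               # (C, k, k, H, W)
    # (1) group convolution: k*k receptive-field features per channel
    feat = np.maximum(np.einsum("cjyx,cyxhw->cjhw", w_feat, patches), 0.0)
    # (2) average pooling over each k x k receptive field (zero padding counted)
    pooled = patches.mean(axis=(1, 2))                   # (C, H, W)
    # (3) 1x1 group convolution: one logit per receptive-field position
    logits = w_att[:, :, None, None] * pooled[:, None]   # (C, k*k, H, W)
    # (4) softmax over the k*k positions of each receptive field
    logits = logits - logits.max(axis=1, keepdims=True)
    att = np.exp(logits)
    att /= att.sum(axis=1, keepdims=True)
    weighted = feat * att
    # Lay each receptive field out as a non-overlapping k x k block ...
    rf = (weighted.reshape(c, k, k, h, w)
          .transpose(0, 3, 1, 4, 2)
          .reshape(c, h * k, w * k))
    # ... so a stride-k, k x k convolution visits every block exactly once.
    blocks = rf.reshape(c, h, k, w, k)                   # (C, H, a, W, b)
    out = np.einsum("ocab,chawb->ohw", w_out, blocks)
    return out, att

rng = np.random.default_rng(0)
C, H, W, K, C_OUT = 4, 8, 8, 3, 6
x = rng.standard_normal((C, H, W))
out, att = rfa_conv(x,
                    rng.standard_normal((C, K * K, K, K)),
                    rng.standard_normal((C, K * K)),
                    rng.standard_normal((C_OUT, C, K, K)), k=K)
print(out.shape)   # (6, 8, 8)
```

The spatial size is preserved because the stride-k convolution exactly undoes the k-fold enlargement of the rearranged map.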

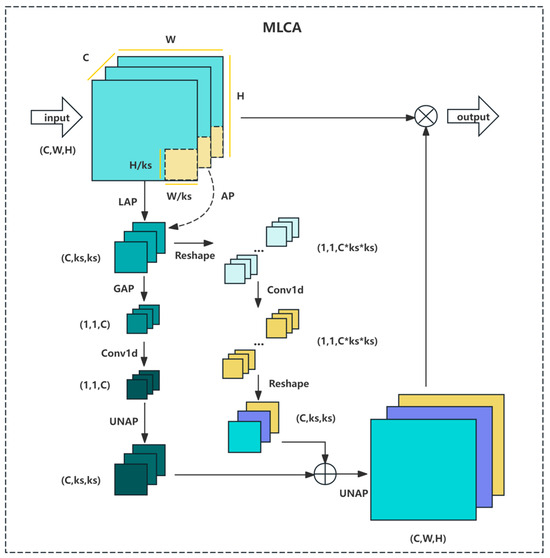

2.2.2. Mixed Local Channel Attention

To address the low accuracy of rice pest detection in complex scenarios, it is crucial to enhance the network’s ability to extract relevant features, typically by incorporating channel attention. Channel attention was first introduced by Hu et al. in the Squeeze-and-Excitation Network (SENet), which significantly improved image classification performance by learning a weight for each channel to adjust its importance in the feature map [25]. Following SENet, lightweight variants such as ECA-Net [26] and GE [27] further advanced the application of channel attention.
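The channel-reweighting idea behind SENet can be sketched in a few lines of NumPy. The sketch assumes a single (C, H, W) feature map, the reduction ratio r = 16 used as the default in the SENet paper, and small random weights in place of learned ones; `se_block` is a hypothetical helper name.

```python
import numpy as np

def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation on a (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r) are the two fully connected layers.
    """
    s = x.mean(axis=(1, 2))                     # squeeze: global average pooling -> (C,)
    z = np.maximum(w1 @ s + b1, 0.0)            # excitation FC 1 + ReLU -> (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ z + b2)))    # FC 2 + sigmoid -> channel weights in (0, 1)
    return x * a[:, None, None], a              # rescale each channel by its weight

rng = np.random.default_rng(0)
C, R = 32, 16
x = rng.standard_normal((C, 8, 8))
y, a = se_block(x,
                rng.standard_normal((C // R, C)) * 0.1, np.zeros(C // R),
                rng.standard_normal((C, C // R)) * 0.1, np.zeros(C))
```

The weights depend only on globally pooled channel statistics, which is exactly why such channel attention ignores where in the image a feature occurs.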

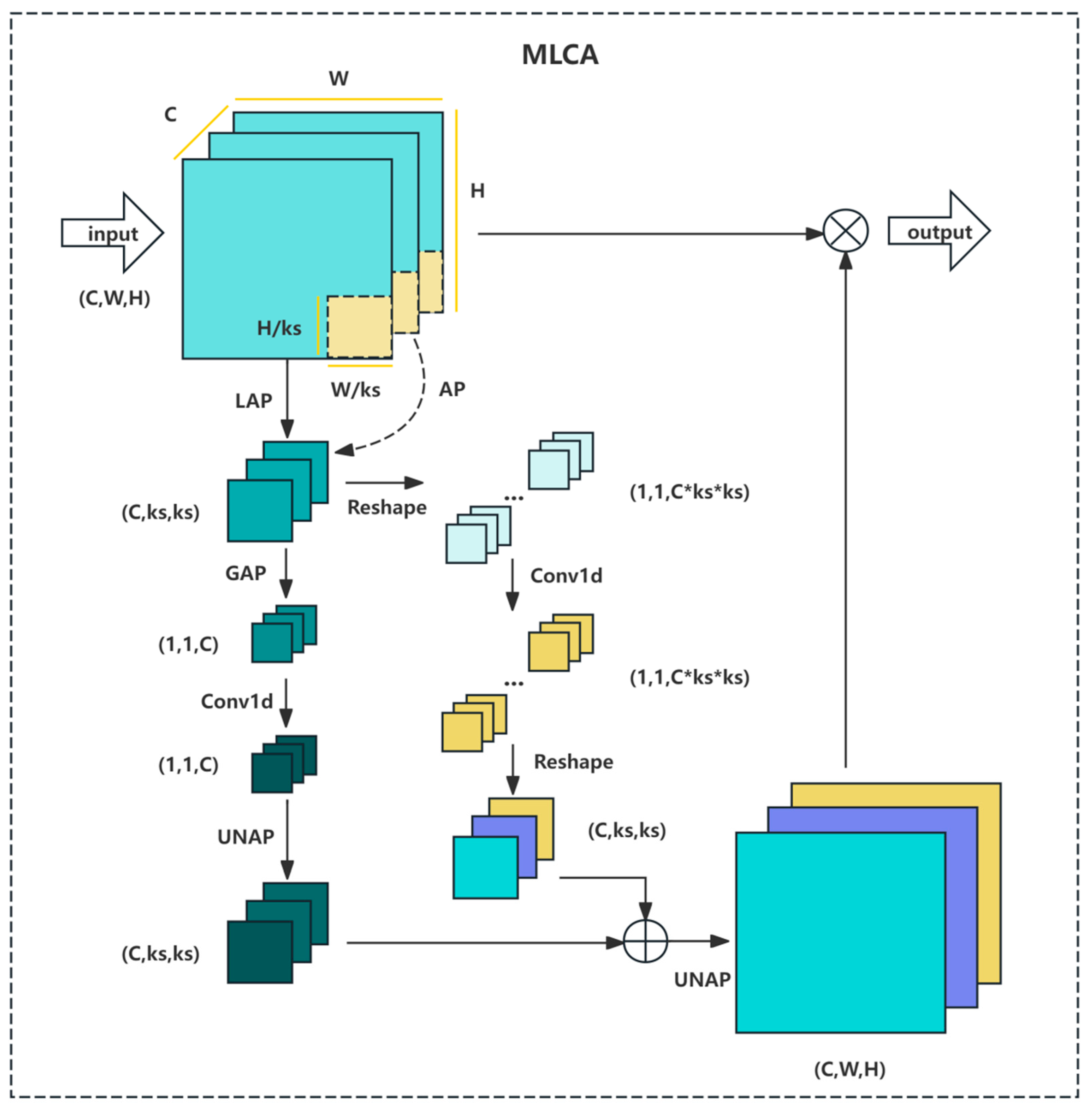

However, most channel attention mechanisms focus solely on channel information and neglect spatial information, resulting in suboptimal detection performance, particularly in complex scenarios. To address this limitation, Woo et al. proposed the Convolutional Block Attention Module (CBAM) [19], which combines channel and spatial attention and thus captures feature map information more comprehensively, improving performance in complex scenarios. Nevertheless, the additional steps introduced by CBAM, such as MLP processing (two fully connected layers with a ReLU activation to learn channel dependencies), increase the computational complexity of the model. In contrast, MLCA retains the combination of channel and spatial attention while reducing computational complexity [28]. It does so by sharing the average-pooling step between its local and global branches and eliminating MLP processing, improving detection speed without compromising detection performance. The overall processing steps of MLCA are illustrated in Figure 6.

Figure 6.

The structure of MLCA, whose efficient attention combines shared pooling (reduced computation) with an MLP-free design (faster processing).
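A simplified NumPy sketch of the MLCA computation follows. It assumes the structure described above: local average pooling to an s × s grid, global pooling computed from that local map (the shared pooling step), ECA-style 1-D convolutions in place of an MLP, sigmoid gating, a weighted fusion of local and global attention, and upsampling back to H × W. The grid size `local_size = 5`, the fusion weight `local_weight = 0.5`, the cell-major ordering of the local sequence, and all function names are assumptions; the 1-D kernels are random rather than learned.

```python
import math
import numpy as np

def adaptive_avg_pool(x, out_h, out_w):
    """Adaptive average pooling of a (C, H, W) array (also upsamples if out > in)."""
    c, h, w = x.shape
    out = np.empty((c, out_h, out_w))
    for i in range(out_h):
        y0, y1 = (i * h) // out_h, -((-(i + 1) * h) // out_h)      # floor / ceil bin edges
        for j in range(out_w):
            x0, x1 = (j * w) // out_w, -((-(j + 1) * w) // out_w)
            out[:, i, j] = x[:, y0:y1, x0:x1].mean(axis=(1, 2))
    return out

def eca_kernel_size(c, gamma=2, b=1):
    """ECA-Net rule for the odd 1-D kernel size given C channels."""
    t = int(abs(math.log2(c) + b) / gamma)
    return t if t % 2 else t + 1

def conv1d_same(seq, kernel):
    """1-D cross-correlation with zero padding; output length equals input length."""
    return np.correlate(np.pad(seq, len(kernel) // 2), kernel, mode="valid")

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def mlca(x, k_local, k_global, local_size=5, local_weight=0.5):
    c, h, w = x.shape
    s = local_size
    local = adaptive_avg_pool(x, s, s)                  # (C, s, s) local pooling
    glob = local.mean(axis=(1, 2))                      # global pooling reuses the local map
    seq = local.reshape(c, s * s).T.reshape(-1)         # cell-major sequence, length s*s*C
    y_local = conv1d_same(seq, k_local).reshape(s * s, c).T.reshape(c, s, s)
    y_global = conv1d_same(glob, k_global)              # ECA-style conv across channels
    att = (local_weight * sigmoid(y_local)
           + (1.0 - local_weight) * sigmoid(y_global)[:, None, None])
    return x * adaptive_avg_pool(att, h, w)             # upsample attention and reweight

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 20, 20))
k = eca_kernel_size(8)                                  # 3
out = mlca(x, rng.standard_normal(k), rng.standard_normal(k))
```

Both branches use only a handful of 1-D kernel weights, which illustrates why MLCA adds very few parameters compared with the two fully connected layers of an MLP.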

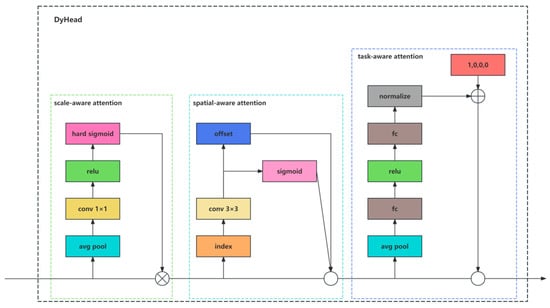

2.2.3. Dynamic Head

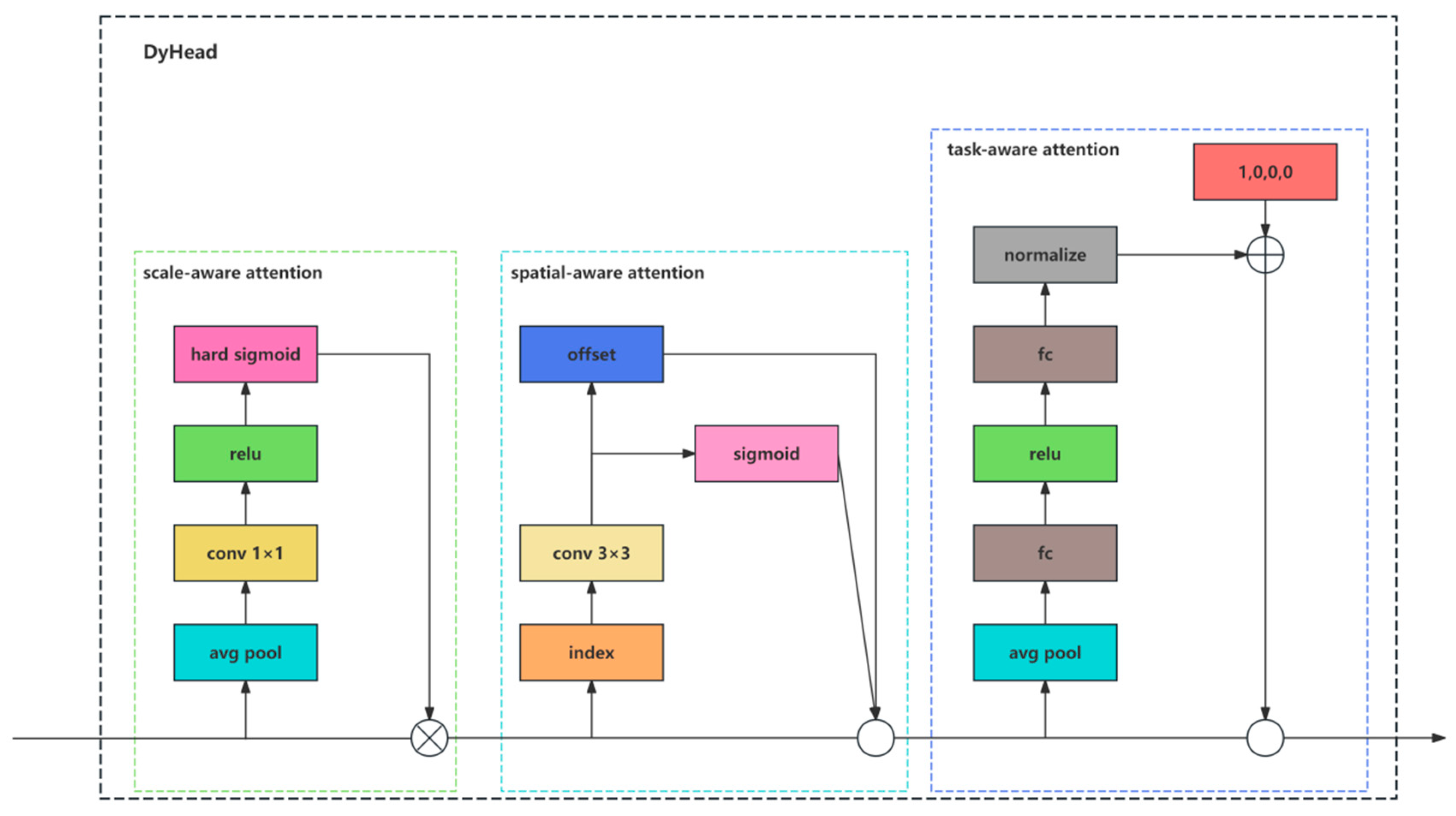

The Dynamic Head (DyHead) is a detection head that integrates attention mechanisms across feature levels, spatial positions, and output channels, providing scale, spatial, and task awareness [29].

A significant challenge in rice pest detection is that the targets span multiple scales. DyHead was therefore used to replace the original detection head of YOLOv8. DyHead improves multi-scale detection by combining a scale-aware attention module and a spatial-aware attention module. The scale-aware attention module fuses features across scales according to their semantic relevance and learns the relative importance of different feature levels, so the model can adaptively strengthen features at the appropriate scale. The spatial-aware attention module uses deformable convolution to learn sparse attention and aggregates features across levels at identical spatial locations, producing more discriminative representations and improving the model’s perception of objects in varied spatial contexts. This enables the model to handle variations in object shape, rotation, and position across viewpoints, improving detection accuracy. The task-aware attention module dynamically switches feature channels on and off to suit different tasks, including classification, box regression, and center/keypoint learning. The structure of DyHead and its three attention modules are illustrated in Figure 7.
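Two of the three DyHead attentions can be written compactly. Following the DyHead paper, scale-aware attention gates each pyramid level with σ(f(mean over S and C of F)), where σ(x) = max(0, min(1, (x + 1)/2)) is a hard sigmoid, and task-aware attention computes max(α¹·F_c + β¹, α²·F_c + β²) per channel. The NumPy sketch below assumes the features of all levels have already been resized to a common resolution and flattened to shape (L, S, C); the linear function f is reduced to a scalar affine map, the α/β coefficients are passed in directly instead of being predicted from pooled features, and spatial-aware attention (deformable convolution) is omitted. All names and values are illustrative.

```python
import numpy as np

def hard_sigmoid(z):
    """sigma(z) = max(0, min(1, (z + 1) / 2))."""
    return np.clip((z + 1.0) / 2.0, 0.0, 1.0)

def scale_aware(feats, weight, bias):
    """pi_L(F) * F for F of shape (L, S, C): one gate per pyramid level.

    `weight` and `bias` stand in for the linear function f (a scalar affine map here).
    """
    pooled = feats.mean(axis=(1, 2))              # (1 / SC) * sum over S and C -> (L,)
    gate = hard_sigmoid(weight * pooled + bias)   # (L,)
    return feats * gate[:, None, None], gate

def task_aware(feats, a1, b1, a2, b2):
    """pi_C(F) * F = max(a1 * F_c + b1, a2 * F_c + b2), applied per channel c.

    In DyHead the coefficients are predicted from pooled features; here they are
    given directly as (C,) arrays with hypothetical values.
    """
    return np.maximum(a1 * feats + b1, a2 * feats + b2)

rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 16 * 16, 8))      # L = 4 levels, S = 256 positions, C = 8
scaled, gate = scale_aware(feats, weight=1.0, bias=0.0)
out = task_aware(scaled, np.ones(8), np.zeros(8), np.full(8, 0.1), np.zeros(8))
```

With α² = 0.1 and β = 0 the task-aware step behaves like a leaky ReLU; letting the coefficients vary per channel and per input is what allows DyHead to emphasize different channels for classification and regression.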

Figure 7.

The detailed structure of DyHead, which integrates attention mechanisms across feature levels, spatial positions, and output channels.

2.2.4. Additional Detection Head

In the YOLOv8 backbone, operations such as convolution, pooling, and strided convolution down-sample the feature maps, and the receptive field changes accordingly. The receptive field is calculated as shown in Formula (1):

$$ r_i = r_{i-1} + (k_i - 1)\prod_{j=1}^{i-1} s_j \qquad (1) $$

where $r_i$ is the receptive field size of the $i$th layer, $k_i$ is the convolution kernel size of that layer, and $s_j$ is the stride of the $j$th layer, so that the product accumulates the strides of all preceding layers. As the network progresses from the P2 layer to the P6 layer, the resolution of the feature maps decreases while the receptive field of each convolutional kernel expands. This expansion allows the network to capture broader contextual information at higher layers (e.g., P5 and P6), which is essential for detecting larger targets. Conversely, the P2 layer, with its higher resolution and smaller receptive field, excels at capturing fine details, making it particularly effective for detecting smaller targets.
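The receptive-field recurrence r_i = r_{i-1} + (k_i − 1)·∏_{j<i} s_j, with r_0 = 1, can be evaluated directly. The layer stack below is a hypothetical simplification: six 3 × 3 stride-2 convolutions reaching P1 through P6, ignoring the intermediate C2f and SPPF layers of the real YOLOv8 backbone, so actual receptive fields are larger. It illustrates the trend described above, in which each level doubles the stride and roughly doubles the receptive field.

```python
def receptive_fields(layers):
    """Apply r_i = r_{i-1} + (k_i - 1) * prod_{j<i} s_j to (kernel, stride) pairs.

    Returns (receptive field, cumulative stride) after each layer, starting from r_0 = 1.
    """
    r, jump, out = 1, 1, []
    for k, s in layers:
        r += (k - 1) * jump   # jump = product of the strides of all preceding layers
        jump *= s
        out.append((r, jump))
    return out

# Hypothetical stack: six 3x3 stride-2 convolutions reaching P1 ... P6.
for level, (rf, stride) in enumerate(receptive_fields([(3, 2)] * 6), start=1):
    print(f"P{level}: stride {stride}, receptive field {rf}")
```

For this stack the receptive fields are 3, 7, 15, 31, 63, and 127 pixels at strides 2 through 64.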

Because rice pests vary greatly in size, the detection process inherently involves multi-scale targets. To address this challenge, this study introduced an additional detection head to expand the model’s scale detection range. Specifically, to handle small pests such as Nilaparvata lugens, a P2 layer was incorporated into the detection framework. The higher resolution and smaller receptive field of the P2 layer enable the model to capture fine-grained details of small pests, significantly improving detection accuracy for these targets. This multi-scale approach allows the model to handle the wide size range of rice pests, from millimeter-scale larvae to centimeter-scale adults.
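The cost and benefit of the extra P2 head can be quantified by counting prediction cells. YOLOv8 is anchor-free and makes one prediction per grid cell at each detection level, so a 640 × 640 input yields 80² + 40² + 20² = 8400 predictions from the default P3–P5 heads. The sketch below (with the hypothetical helper `grid_cells`) shows that adding a stride-4 P2 head contributes a 160 × 160 grid whose cells each cover only 4 × 4 input pixels.

```python
import math

def grid_cells(img_size=640, strides=(4, 8, 16, 32)):
    """Number of prediction cells per detection level for a square input."""
    return {f"P{int(math.log2(s))}": (img_size // s) ** 2 for s in strides}

with_p2 = grid_cells()                           # four heads: P2-P5
default = grid_cells(strides=(8, 16, 32))        # YOLOv8 default: P3-P5
print(with_p2)                                   # {'P2': 25600, 'P3': 6400, 'P4': 1600, 'P5': 400}
print(sum(default.values()), sum(with_p2.values()))   # 8400 34000
```

The fourfold increase in predictions per image is consistent with the lower detection speed reported for YOLO-RMD, while the finer grid is what makes millimeter-scale pests resolvable.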

2.3. Experiment Environment and Model Evaluation

The experimental hardware comprised a 12-vCPU Intel(R) Xeon(R) Platinum 8352V CPU @ 2.10 GHz, 90 GB of RAM, and an RTX 4090 GPU with 24 GB of VRAM. The software environment consisted of 64-bit Ubuntu 18.04 with Python 3.10 and CUDA 12, which were used for model development and training on the rice pest dataset.

Training used an input image size of 640 × 640 pixels and the SGD optimizer with an initial learning rate of 0.01 and a weight decay of 0.0005, for 200 epochs with a batch size of 16. Evaluation metrics included Precision (P), Recall (R), Average Precision (AP), mean Average Precision (mAP), model size, and detection speed (FPS). Precision measures the proportion of true positives among all predicted positives, and Recall measures the proportion of actual positives that are correctly identified. AP is the area under the Precision–Recall (PR) curve, and mAP averages AP over the N pest classes; mAP50 denotes mAP computed at an Intersection over Union (IoU) threshold of 0.5. The formulas are:

$$ P = \frac{TP}{TP + FP} \qquad (2) $$

$$ R = \frac{TP}{TP + FN} \qquad (3) $$

$$ AP = \int_{0}^{1} P(R)\,dR \qquad (4) $$

$$ mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \qquad (5) $$

In this context, TP (True Positive) represents correctly predicted positive cases, FP (False Positive) refers to negative cases incorrectly predicted as positive, and FN (False Negative) denotes positive cases incorrectly predicted as negative.
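The metric definitions P = TP / (TP + FP), R = TP / (TP + FN), and AP as the area under the PR curve can be computed for a single class as follows. The sketch assumes detections have already been matched to ground truth (a detection counts as a TP when its IoU with an unmatched ground-truth box is at least 0.5 for mAP50) and uses all-point interpolation of the precision envelope. Evaluation tools differ in their interpolation scheme (e.g., 11-point, 101-point, or all-point), and the source does not state which was used, so values may differ slightly from those in the tables. The helper names are hypothetical.

```python
import numpy as np

def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def precision_recall_ap(is_tp, scores, n_gt):
    """Precision/recall curves and all-point interpolated AP for one class."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]       # rank detections by confidence
    ctp, cfp = np.cumsum(tp), np.cumsum(1.0 - tp)
    precision = ctp / (ctp + cfp)                    # TP / (TP + FP)
    recall = ctp / n_gt                              # TP / (TP + FN)
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]   # monotone precision envelope
    idx = np.where(mrec[1:] != mrec[:-1])[0]         # points where recall changes
    ap = float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
    return precision, recall, ap

p, r, ap = precision_recall_ap(is_tp=[1, 1, 0, 1, 0],
                               scores=[0.9, 0.8, 0.7, 0.6, 0.5], n_gt=4)
print(float(p[-1]), float(r[-1]), ap)   # 0.6 0.75 0.6875
```

Averaging `ap` over the seven pest classes gives mAP as in Formula (5); repeating the matching at IoU thresholds from 0.5 to 0.95 and averaging gives the stricter mAP50-95.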

3. Results

3.1. Ablation Studies

To assess whether the selected modules improve the model’s performance, ablation experiments were conducted on the rice pest dataset, together with a comparison against an additional detection head at the P6 level. The results of the ablation and comparative experiments are presented in Table 3.

Table 3.

Results of ablation experiment with baseline of YOLOv8n.

As Table 3 shows, replacing the original detection head with DyHead improved both precision and recall, increasing mAP50 by 1 percentage point and mAP50-95 (mAP averaged over IoU thresholds from 0.5 to 0.95) by 4 percentage points compared with YOLOv8n. To validate the effectiveness of the P2 layer, a P6 detection head was introduced for comparison. The P6 layer, with its lower resolution and larger receptive field, is better suited to large objects. Because rice pests are typically small, adding the P2 layer (YOLOv8n + P2 + DyHead) improved all metrics relative to the previous model (YOLOv8n + DyHead), whereas adding the P6 layer (YOLOv8n + P6 + DyHead) performed poorly and even reduced mAP by 1 percentage point. This comparison further confirms the effectiveness of the P2 layer. Adding MLCA and RFAConv to the model with the P2 head increased mAP50 by 0.1 percentage points and mAP50-95 by 1 percentage point, with corresponding gains in precision and recall. The final model, YOLO-RMD (YOLOv8n + P2 + DyHead + MLCA + C2f_RFAConv), improved mAP50 by 3 percentage points and mAP50-95 by 7.2 percentage points over YOLOv8n, with substantial improvements in both precision and recall. However, the gains in accuracy came with a larger parameter count and a corresponding decrease in detection speed. Nevertheless, a detection speed of 29.5 FPS is sufficient for daily detection requirements, and reducing this overhead is a direction for future optimization.
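Throughput figures such as 29.5 FPS depend on how timing is done. The sketch below shows one common approach, assuming a generic callable `infer` that processes one image and returns only when its result is ready (for GPU inference this means synchronizing the device before reading the timer). The warm-up count and the helper names are assumptions; the source does not describe its timing protocol, batch size, or whether pre- and post-processing were included.

```python
import time

def measure_fps(infer, inputs, warmup=5):
    """Average frames per second of `infer` over `inputs` after a few warm-up calls."""
    for x in inputs[:warmup]:
        infer(x)                                   # warm-up: caches, lazy initialisation
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = max(time.perf_counter() - start, 1e-9)   # guard against a zero timer reading
    return len(inputs) / elapsed

def latency_ms(fps):
    """Per-image latency implied by a throughput in frames per second."""
    return 1000.0 / fps

print(f"{latency_ms(29.5):.1f} ms per image")    # 33.9 ms per image
```

At 29.5 FPS each image takes roughly 34 ms, which leaves ample margin for periodic field-camera capture.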

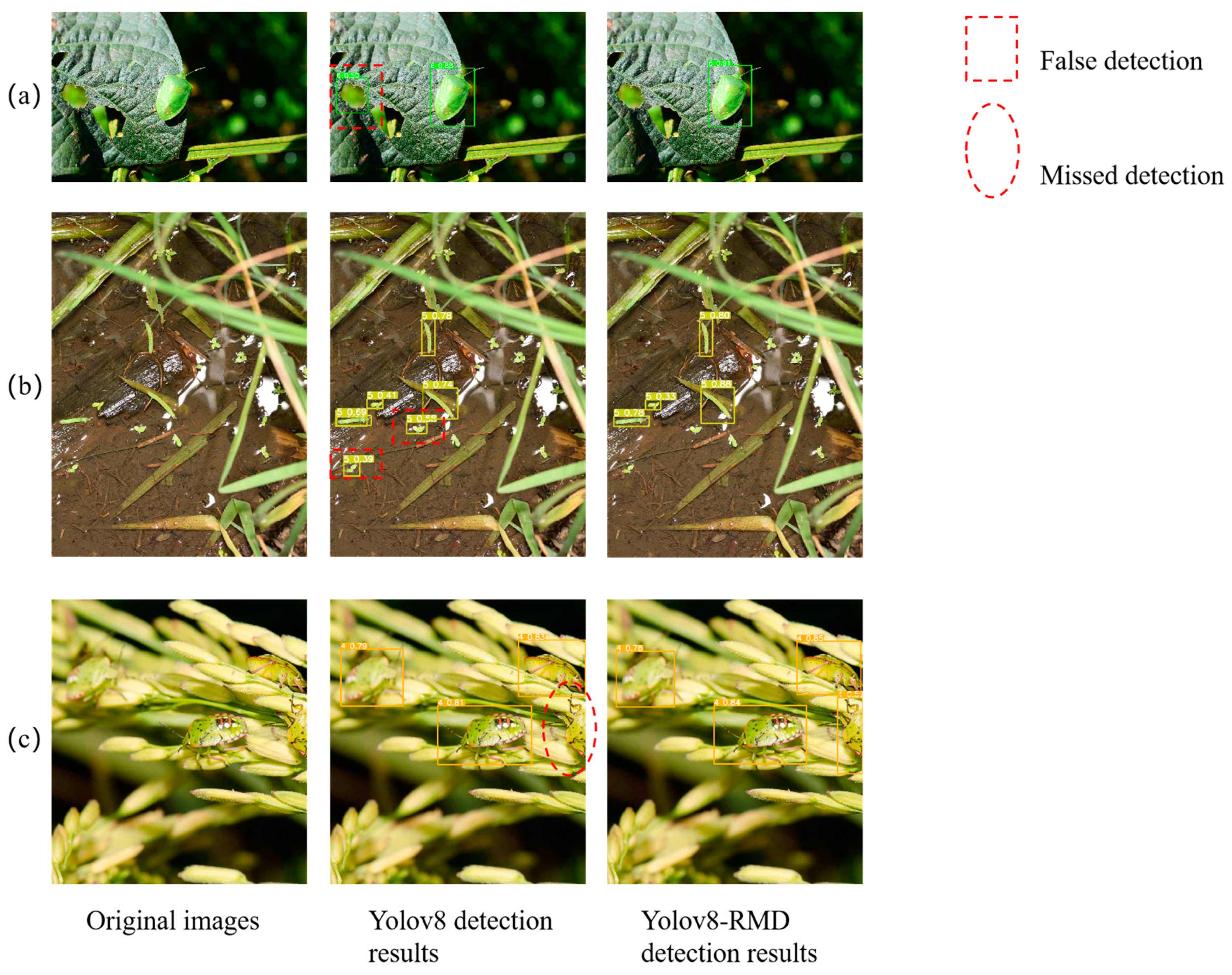

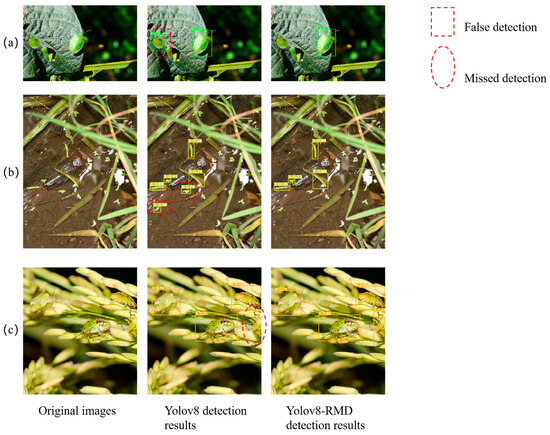

Figure 8 compares YOLO-RMD with the original model across different scenarios. In detecting Nezara viridula (Figure 8a), YOLOv8n mistook holes in the leaves caused by insects for the pests themselves, whereas YOLO-RMD, with its enhanced feature extraction, detected the actual pests without misidentifying the holes. In complex backgrounds (Figure 8b), YOLOv8n incorrectly detected leaves on the water surface as larvae of Sesamia inferens, whereas YOLO-RMD, using multiple attention mechanisms, markedly reduced false detections by better distinguishing features in cluttered backgrounds. For occluded targets, cropping was used during preprocessing to simulate occlusion; as shown on the right of Figure 8c, a partially occluded larva of Nezara viridula was detected by YOLO-RMD but missed by YOLOv8n. These cases highlight YOLO-RMD’s ability to extract features from different regions and detect occluded targets, reflecting its high-precision perception in complex field environments. In addition, its accurate detection of both large and small pests demonstrates its multi-scale perception across rice pest types.

Figure 8.

Comparison results: (a) detection of targets and similar targets; (b) detection of targets in complex backgrounds; (c) detection of occluded targets.

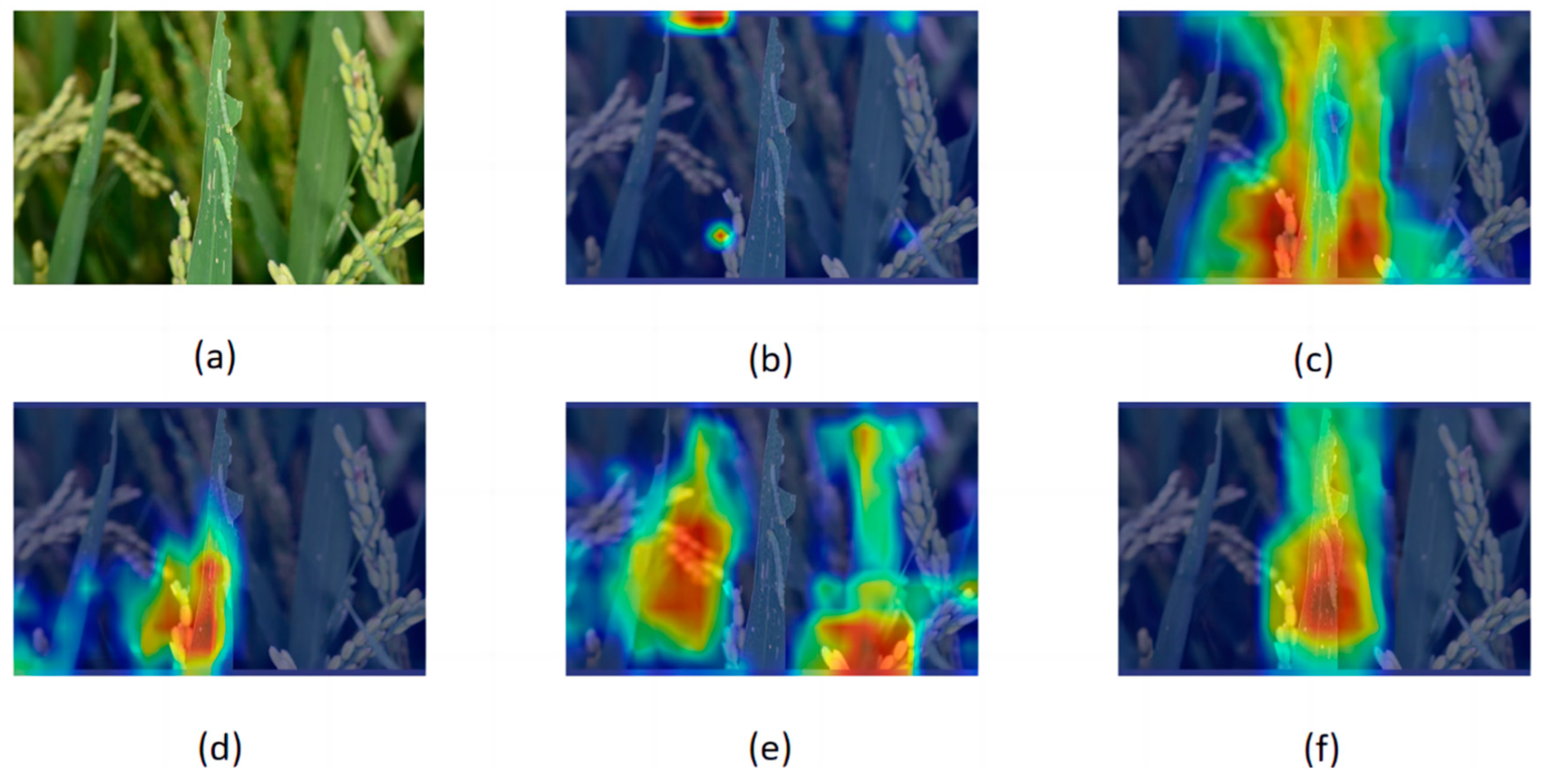

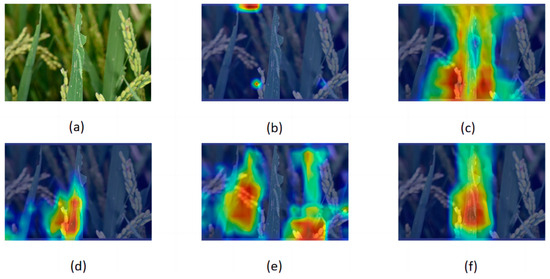

3.2. Grad-CAM Visualisation

Gradient-weighted Class Activation Mapping (Grad-CAM) is a technique for visualizing and understanding the decision-making processes of CNNs. In this study, Grad-CAM was used to analyze how the models make decisions after each enhancement, thereby verifying the effectiveness of the improvements. Five models representing successive stages were compared: YOLOv8n, YOLOv8n + DyHead, YOLOv8n + P2 + DyHead, YOLOv8n + MLCA + P2 + DyHead, and YOLO-RMD, using the Grad-CAM heatmaps of the lowest layer in the backbone network of each model. The heatmaps of the individual models are shown in Figure 9.
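The Grad-CAM computation itself is short once a framework's automatic differentiation has supplied the gradients: each channel weight is α_k = (1/Z) Σ_{i,j} ∂y/∂A^k_{ij}, and the map is ReLU(Σ_k α_k A^k). The NumPy sketch below assumes the activations A of the chosen layer and the gradients of one detection score y with respect to A are already available as (K, H, W) arrays; obtaining them and upsampling the map to image resolution are omitted, and `grad_cam` is a hypothetical helper name.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM map from one layer's activations A and the gradients dy/dA.

    activations, gradients: arrays of shape (K, H, W) for a single target score y.
    """
    alpha = gradients.mean(axis=(1, 2))                           # alpha_k: pooled gradients
    cam = np.maximum(np.tensordot(alpha, activations, axes=1), 0.0)  # ReLU(sum_k alpha_k A^k)
    peak = cam.max()
    return cam / peak if peak > 0 else cam                        # scale to [0, 1]

# Toy example: channel 0 supports the target score, channel 1 opposes it.
A = np.zeros((2, 4, 4))
A[0, 1, 1] = 1.0
A[1, 3, 3] = 1.0
G = np.stack([np.ones((4, 4)), -np.ones((4, 4))])
cam = grad_cam(A, G)
```

Only the location driven by the positively weighted channel lights up, which is the behavior the heatmap comparisons rely on when judging whether a model attends to the pest rather than the background.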

Figure 9.

Heat maps of different improved models: (a) original images; (b) heat maps of YOLOv8n; (c) heat maps of YOLOv8n + DyHead; (d) heat maps of YOLOv8n + P2 + DyHead; (e) heat maps of YOLOv8n + MLCA + P2 + DyHead; (f) heat maps of YOLO-RMD.

In the YOLOv8n model, the high-activation region of the heatmap deviates markedly from the actual target (Figure 9b). Replacing the original detection head with DyHead draws the activated region toward the target area (Figure 9c). Adding the small-target detection head (P2) further refines feature extraction for small targets and narrows the heatmap toward the target, but another target area is overlooked (Figure 9d). Incorporating MLCA enhances global feature extraction, so the heatmap spreads from the target region into the surrounding areas (Figure 9e). Finally, RFAConv is introduced. Because RFAConv improves the accuracy of feature extraction in complex backgrounds, the heatmap re-concentrates on the target areas and covers both targets. Its hotspots align closely with the detected targets (Figure 9f).

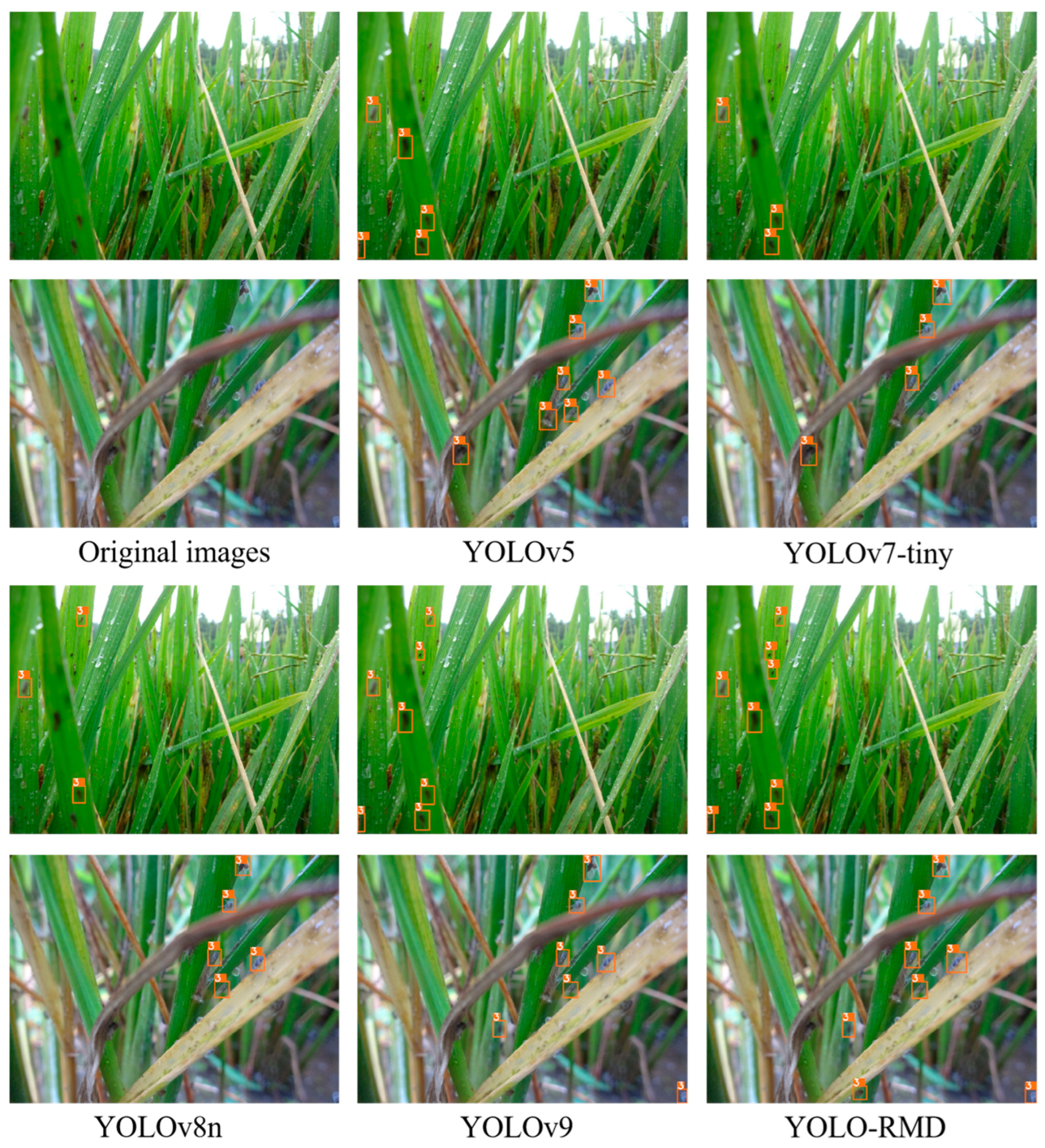

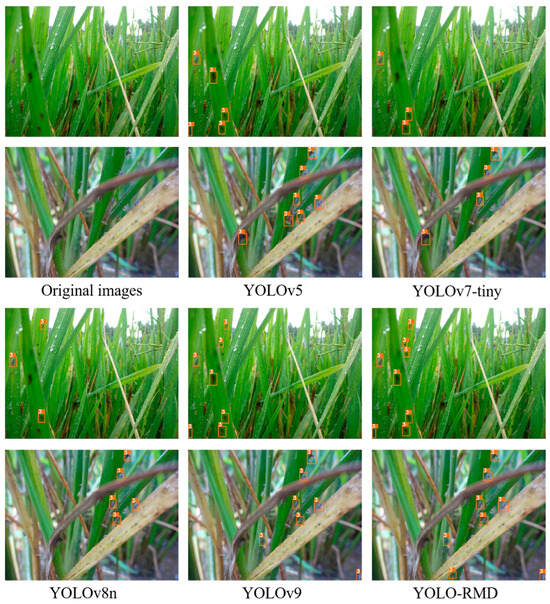

3.3. Comparison of Various YOLO Networks

To further demonstrate the performance of the improved model in rice pest detection, it was compared with several mainstream object detection networks: YOLOv5, YOLOv7-tiny, YOLOv8n, and YOLOv9. The results are listed in Table 4. YOLO-RMD extends YOLOv8n with several attention mechanisms, so its model is larger than the original YOLOv8n and the more recent YOLOv9. It remains smaller than YOLOv5 and the lightweight YOLOv7-tiny. YOLO-RMD outperforms all the other models in precision, recall, and mAP. Its mAP is 4.5% higher than that of YOLOv5, 5.3% higher than that of YOLOv9, and 6.2% higher than that of YOLOv7-tiny. Although YOLO-RMD runs more slowly than YOLOv5, YOLOv7-tiny, and YOLOv9, its overall detection performance is better. Figure 10 compares YOLO-RMD with the other four YOLO models on multi-size targets in complex backgrounds. YOLO-RMD is clearly better at detecting small pests in complex environments. It detected every visible Nilaparvata lugens individual, whereas the other models missed many, and some extremely small targets were detected only by YOLO-RMD. This reflects the high-precision perception of YOLO-RMD in complex field environments. Overall, YOLO-RMD performs better than these mainstream object detectors on complex backgrounds and multi-scale targets.
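The mAP values above average a per-class average precision (AP) over the seven pest classes. The sketch below shows one common way to compute AP at an IoU threshold of 0.5. It ranks detections by confidence, accumulates precision and recall, and integrates the monotone precision envelope over recall (all-point interpolation). It assumes each detection has already been labelled a true or false positive at IoU ≥ 0.5. The function name is illustrative. The evaluation code used in this study may interpolate differently (some toolkits sample a fixed grid of recall points), which can shift values slightly.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt: int) -> float:
    """All-point interpolated AP for a single class.

    scores: confidence of each detection.
    is_tp:  1 if the detection matched an unmatched ground-truth box at IoU >= 0.5, else 0.
    num_gt: number of ground-truth boxes of this class (must be positive).
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    # Rank detections from most to least confident.
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Add sentinels, then make precision non-increasing from right to left.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([1.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas at every point where recall increases.
    idx = np.nonzero(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))
```

mAP@0.5 is then the mean of this value over the pest classes.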

Table 4.

Comparison of the model performance.

Figure 10.

The comparison results of YOLO-RMD and the other four YOLO models in multi-scale pest detection.

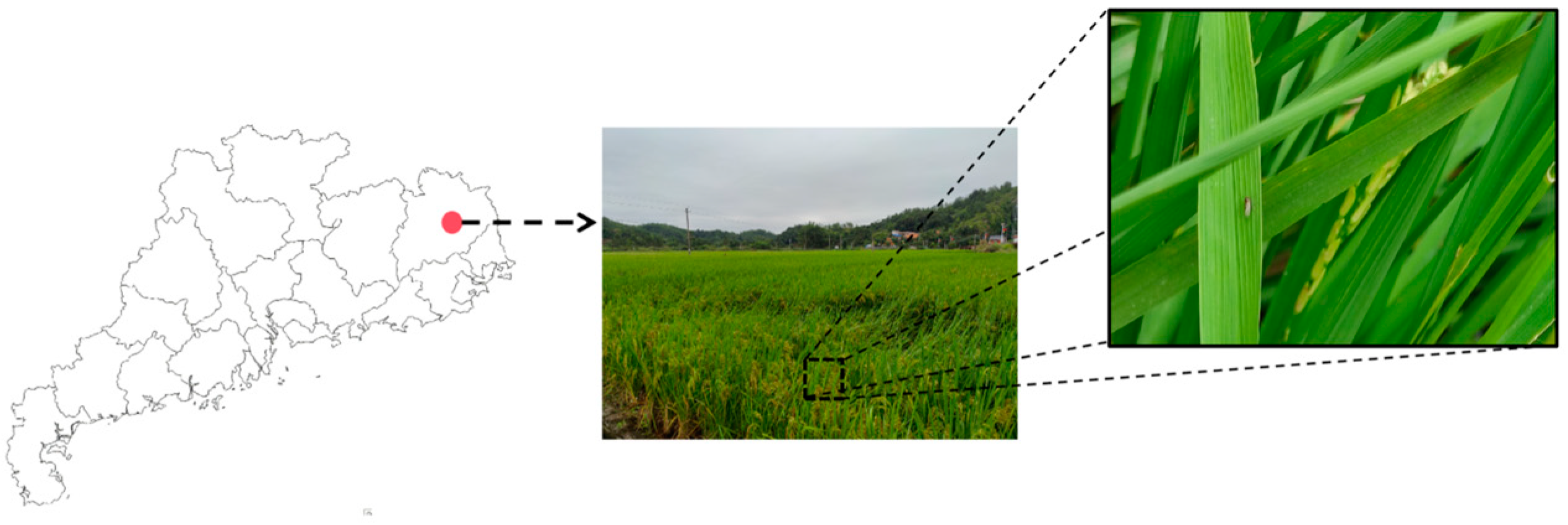

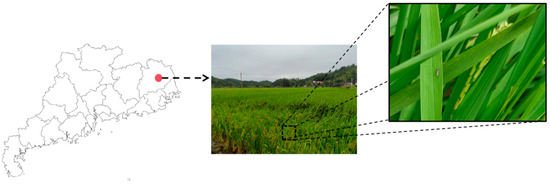

3.4. Comparison in Real Environment

To validate the model in real-world scenarios, experiments were conducted in October in a conventionally managed paddy field in Meizhou City, Guangdong Province, China. The field was severely infested with Nilaparvata lugens during this period, so thirty photographs of Nilaparvata lugens were collected for assessment. The collection site and test images are shown in Figure 11. Traditional threshold-based methods were also applied as a baseline. They produced 4319 misidentified targets because they could not distinguish pests from background elements such as leaf holes and soil debris. This highlights the limitations of pixel-intensity-based methods in agricultural scenes.
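The paper does not specify which threshold method served as the baseline. As a stand-in, the sketch below applies Otsu's global threshold to a grayscale image and then counts 4-connected blobs. Every dark region above a minimum area becomes a "target". This shows why a pixel-intensity rule cannot tell a planthopper from a leaf hole or soil particle of similar brightness. Otsu's method, the `dark_objects` polarity, the `min_area` filter, and all function names are assumptions for illustration.

```python
from collections import deque

import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the 8-bit threshold that maximises between-class variance (Otsu)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                # probability of class "<= t"
    mu = np.cumsum(prob * np.arange(256))  # cumulative mean of class "<= t"
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / denom
    sigma_b[denom == 0] = 0.0  # thresholds that leave one class empty
    return int(np.argmax(sigma_b))


def count_blobs(mask: np.ndarray, min_area: int = 1) -> int:
    """Count 4-connected foreground regions with at least min_area pixels."""
    h, w = mask.shape
    seen = np.zeros(mask.shape, dtype=bool)
    count = 0
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or seen[y, x]:
                continue
            # Breadth-first flood fill of one region, measuring its area.
            area, queue = 0, deque([(y, x)])
            seen[y, x] = True
            while queue:
                cy, cx = queue.popleft()
                area += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if area >= min_area:
                count += 1
    return count


def threshold_count(gray: np.ndarray, dark_objects: bool = True, min_area: int = 4) -> int:
    """Hypothetical baseline: every blob on the object side of Otsu's threshold is a 'pest'."""
    t = otsu_threshold(gray)
    mask = gray <= t if dark_objects else gray > t
    return count_blobs(mask, min_area)
```

The input is assumed to be a `uint8` grayscale image. Any dark leaf hole, shadow, or clod that passes the area filter is counted exactly like a pest, which is the failure mode described above.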

Figure 11.

The location of the paddy field and photos of Nilaparvata lugens.

Nilaparvata lugens individuals are small, and the rice was at the heading stage with a dense canopy. The collected photographs therefore meet the requirements of the experiment: small targets, multiple scales, and complex backgrounds. Four of the models compared in Section 3.3 (YOLOv5, YOLOv7-tiny, YOLOv9, and YOLO-RMD) were applied to these thirty photographs. Their real-world performance was evaluated by comparing the final numbers of correctly and incorrectly identified targets. The results are presented in Table 5.
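The paper does not describe how detections were judged correct or incorrect; this may have been done by visual inspection. The sketch below shows one common automated equivalent, offered as an assumption. Each detection, in descending confidence order, is matched to the unmatched ground-truth box with the highest IoU at or above 0.5. Matched detections count as correct. Unmatched detections count as incorrect, including duplicates on an already-matched pest. Boxes are assumed to be `(x1, y1, x2, y2)` pixel coordinates, and the function names are illustrative.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_correct_incorrect(
    dets: List[Tuple[Box, float]], gts: List[Box], iou_thr: float = 0.5
) -> Tuple[int, int]:
    """Greedily match (box, score) detections to ground truths for one image."""
    matched = [False] * len(gts)
    correct = incorrect = 0
    for box, _score in sorted(dets, key=lambda d: d[1], reverse=True):
        # Pick the unmatched ground truth with the highest IoU >= iou_thr.
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            if not matched[j]:
                v = iou(box, gt)
                if v >= best_iou:
                    best_j, best_iou = j, v
        if best_j >= 0:
            matched[best_j] = True
            correct += 1
        else:
            incorrect += 1  # background hit or duplicate detection
    return correct, incorrect
```

Summing the per-image counts over all photographs gives totals of the kind reported in Table 5.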

Table 5.

Comparison of the model identification results.

According to Table 5, all four models detect most of the targets in the photographs. However, the complex background and small target size make identification harder. YOLOv5, YOLOv7-tiny, and YOLOv9 produce many incorrect identifications: with only 32 targets in total, they make 17, 22, and 11 incorrect identifications, respectively. Most of these errors are false positives on non-pest objects such as water droplets and leaf textures, the same challenge that the conventional threshold methods failed to address. Error rates this high fall short of the accuracy required for practical pest identification.

In contrast, the improved YOLO-RMD model performs well in actual detection. Across the 30 photographs of Nilaparvata lugens, it detected 31 correct targets and produced only two incorrect ones. This gives a precision of 93.9%, far above both the traditional threshold methods (0.7%) and the baseline YOLO variants (52.5–73.8%). This result meets the accuracy requirement for pest detection and supports the feasibility of the improved rice pest detection model in practical applications.
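The precision figure can be checked directly from the counts: precision is correct detections divided by all detections, 31 / (31 + 2). The sketch below also derives recall and F1, taking the 32 targets mentioned above as the ground-truth total. That reading of the 32-target figure is an assumption. Recall and F1 are not reported in the paper and are shown only as derived arithmetic. The function name is illustrative.

```python
def precision_recall_f1(correct: int, incorrect: int, num_targets: int):
    """Precision = TP / (TP + FP); recall = TP / ground-truth count; F1 = harmonic mean."""
    precision = correct / (correct + incorrect)
    recall = correct / num_targets
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


p, r, f1 = precision_recall_f1(correct=31, incorrect=2, num_targets=32)
print(f"precision={p:.1%} recall={r:.1%} F1={f1:.1%}")
# prints: precision=93.9% recall=96.9% F1=95.4%
```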

3.5. Real-Time Performance Evaluation of YOLO-RMD System

To validate the real-world performance of the improved model, both YOLO-RMD and YOLOv8n were deployed on two edge computing devices, the Jetson Nano and the Jetson Orin NX. The improved model was also accelerated with the TensorRT inference library to raise inference speed and meet the real-time requirements of rice pest identification. The detection frame rates on the devices are shown in Table 6.
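The paper does not describe how the frame rates were measured. The sketch below is a minimal timing harness. `infer` is a hypothetical placeholder for the deployed pipeline, for example preprocessing, TensorRT engine execution, and post-processing. Warm-up calls are excluded because the first inferences after loading an engine are usually slower. The result is frames processed divided by wall-clock time.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object], frames: Sequence[object], warmup: int = 10) -> float:
    """Average end-to-end frames per second of `infer` over `frames`.

    The first `warmup` frames are processed but not timed.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames[warmup:]:
        infer(frame)
    elapsed = time.perf_counter() - start
    return (len(frames) - warmup) / elapsed
```

If the pipeline runs asynchronously on the GPU, `infer` must block until its outputs are available. Otherwise the measured frame rate will be optimistic.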

Table 6.

Comparison of device deployment detection frame rates.

As shown in Table 6, YOLO-RMD runs more slowly than YOLOv8n on the edge computing devices. On the Jetson Nano, YOLO-RMD reaches only 17 FPS, compared with 67 FPS for YOLOv8n. On the Jetson Orin NX, YOLO-RMD reaches 31 FPS, compared with 132 FPS for YOLOv8n. A frame rate of 30 FPS is widely regarded as the benchmark for smooth real-time operation and is the commonly adopted standard in mobile and embedded systems. The 31 FPS achieved on the Jetson Orin NX therefore meets practical real-time detection requirements, albeit with little margin, which supports the feasibility of real-time detection with YOLO-RMD in real-world environments. This result depends on substantial computing power: meeting the real-time demands of YOLO-RMD requires at least 100 TOPS. Reducing this requirement is a direction for future research.
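Converting the frame rates in Table 6 into per-frame processing time shows how narrow the real-time margin is. At 30 FPS the budget is about 33.3 ms per frame. The Jetson Orin NX result leaves roughly 1 ms of headroom, whereas the Jetson Nano exceeds the budget by about 25 ms:

$$
t_{\mathrm{frame}} = \frac{1000\,\mathrm{ms}}{\mathrm{FPS}}, \qquad
\frac{1000}{30} \approx 33.3\,\mathrm{ms}, \qquad
\frac{1000}{31} \approx 32.3\,\mathrm{ms}\ (\text{Orin NX}), \qquad
\frac{1000}{17} \approx 58.8\,\mathrm{ms}\ (\text{Nano})
$$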

4. Discussion

Although YOLO-RMD achieved promising results, there is still room for improvement. The current dataset lacks images of certain pests, such as the adult moths of several species, so the model cannot detect these pests across their entire life cycle. Rice planthoppers can also be further divided by surface texture into types such as gray planthoppers and white-backed planthoppers, as explored in other studies. This research focuses on detecting multi-scale targets in complex backgrounds and does not address such fine-grained classification, which limits the range of rice pests it can distinguish. Continually expanding the pest types in the dataset is therefore a worthwhile direction for future research.

Another aspect is model deployment. Numerous studies have deployed neural networks on mobile devices for object detection. The multiple attention mechanisms introduced in this research increase the model's computational complexity. This does not prevent field detection of rice pests, but there is room for improvement in tasks that demand higher detection speed. Further research is therefore needed to make the model lightweight enough to run on a range of devices.

YOLO-RMD performs well at detecting multi-scale targets in complex backgrounds, which is highly beneficial for practical rice pest detection. Previous rice pest studies focused mainly on pest recognition under simple conditions. They achieved high detection accuracy but did not carry over to in-field detection, so they offered limited practical help to farmers. The proposed model can perform in-field detection. Combined with methods for assessing rice pest density, the deployed model could replace manual counting of pest populations. Such automation can substantially reduce manual labor and improve efficiency in rice production, paving the way for intelligent rice farming operations.

5. Conclusions

This research introduces YOLO-RMD, a comprehensive rice pest detection system designed for precise and timely pest monitoring in challenging field environments. YOLO-RMD integrates the Receptive Field Attention Convolution module, Mixed Local Channel Attention, and an enhanced multi-scale detection architecture based on Dynamic Head. Together, these components significantly improve detection accuracy and adaptability. Specifically, YOLO-RMD achieves a 3% higher mAP than the original YOLOv8n model on the rice pest dataset. In complex field environments, YOLO-RMD shows high-precision perception, effectively reducing false positives and detecting occluded pests. Compared with other leading models (YOLOv5, YOLOv7-tiny, and YOLOv9), YOLO-RMD achieves 4.5%, 6.2%, and 5.3% higher mAP, respectively. It also remains smaller than YOLOv5 and YOLOv7-tiny and delivers better overall performance. These results highlight its strong multi-scale perception, which is crucial for accurately detecting pests of varying sizes. In practical paddy field tests, YOLO-RMD detected pests more accurately than YOLOv5, YOLOv7-tiny, and YOLOv9, with substantially fewer misidentifications. These findings confirm YOLO-RMD's potential for accurate, real-time pest detection, bridging the gap between laboratory research and real-world agricultural applications. This study thus provides an effective solution for precise and timely rice pest monitoring in complex field conditions. Future work will focus on (1) expanding pest categories through collaborations with agricultural institutes to cover more species; (2) deploying YOLO-RMD on edge devices for continuous pest population tracking across all rice growth stages; and (3) optimizing edge deployment for low-power devices (e.g., Jetson Nano) to improve accessibility.

Author Contributions

J.Y.: Conceptualization, Investigation, Data curation, Writing—original draft. J.Z.: Validation, Visualization. G.C.: Software, Data curation. L.J.: Data curation. H.Z.: Data curation. H.D.: Formal analysis. Y.L. (Yongbing Long): Supervision, Funding acquisition. Y.L. (Yubin Lan): Supervision, Funding acquisition. B.W.: Methodology, Writing—review and editing. H.X.: Conceptualization, Funding acquisition, Supervision, Validation, Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Specific University Discipline Construction Project of Guangdong Province, grant number 2023B10564002. The APC was funded by the same grant (2023B10564002).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Publicly available datasets were used in this study. These data can be found at https://github.com/xpwu95/IP102 (accessed on 20 March 2025) and https://www.kaggle.com/datasets/hesi0ne/insectsound1000 (accessed on 20 March 2025). The trained model file is available at https://github.com/yjd669/YOLO-RMD.git (accessed on 20 March 2025). Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors are grateful to the Specific University Discipline Construction Project of Guangdong Province (2023B10564002), the Natural Science Foundation of Guangdong Province (2021A1515012112, 2022A1515010411), the Youth Innovative Talent Project of General Universities in Guangdong Province (2023KQNCX078), the Key-Area Research and Development Program of Guangdong Province (2019B020219002, 2019B020219005), the National Natural Science Foundation of China (12174120, 11774099), the 111 Project (D18019), the Leading Talents of Guangdong Province Program (2016LJ06G689), and the Laboratory of Lingnan Modern Agriculture Project (NT2021009) for supporting this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- FAO. Food and Agriculture Organization of the United Nations. 2018, 403. Available online: http://faostat.fao.org (accessed on 14 August 2024).

- Chithambarathanu, M.; Jeyakumar, M. Survey on crop pest detection using deep learning and machine learning approaches. Multimed. Tools Appl. 2023, 82, 42277–42310.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part I; pp. 21–37.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

- Kasinathan, T.; Uyyala, S.R. Machine learning ensemble with image processing for pest identification and classification in field crops. Neural Comput. Appl. 2021, 33, 7491–7504.

- Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022.

- Yang, S.; Xing, Z.; Wang, H.; Dong, X.; Gao, X.; Liu, Z.; Zhang, X.; Li, S.; Zhao, Y. Maize-YOLO: A New High-Precision and Real-Time Method for Maize Pest Detection. Insects 2023, 14, 278.

- Wang, X.; Zhang, S.; Wang, X.; Xu, C. Crop pest detection by three-scale convolutional neural network with attention. PLoS ONE 2023, 18, e0276456.

- Jiao, L.; Xie, C.; Chen, P.; Du, J.; Li, R.; Zhang, J. Adaptive feature fusion pyramid network for multi-classes agricultural pest detection. Comput. Electron. Agric. 2022, 195, 106827.

- Hu, K.; Liu, Y.; Nie, J.; Zheng, X.; Zhang, W.; Liu, Y.; Xie, T. Rice pest identification based on multi-scale double-branch GAN-ResNet. Front. Plant Sci. 2023, 14, 1167121.

- Li, S.; Wang, H.; Zhang, C.; Liu, J. A Self-Attention Feature Fusion Model for Rice Pest Detection. IEEE Access 2022, 10, 84063–84077.

- Hu, Y.; Deng, X.; Lan, Y.; Chen, X.; Long, Y.; Liu, C. Detection of Rice Pests Based on Self-Attention Mechanism and Multi-Scale Feature Fusion. Insects 2023, 14, 280.

- Yao, Q.; Xian, D.X.; Liu, Q.J.; Yang, B.J.; Diao, G.Q.; Tang, J. Automated Counting of Rice Planthoppers in Paddy Fields Based on Image Processing. J. Integr. Agric. 2014, 13, 1736–1745.

- He, Y.; Zhou, Z.; Tian, L.; Liu, Y.; Luo, X. Brown rice planthopper (Nilaparvata lugens Stal) detection based on deep learning. Precis. Agric. 2020, 21, 1385–1402.

- Zhang, Z.; Zhan, W.; Sun, K.; Zhang, Y.; Guo, Y.; He, Z.; Hua, D.; Sun, Y.; Zhang, X.; Tong, S.; et al. RPH-Counter: Field detection and counting of rice planthoppers using a fully convolutional network with object-level supervision. Comput. Electron. Agric. 2024, 225, 109242.

- Liu, S.; Fu, S.; Hu, A.; Ma, P.; Hu, X.; Tian, X.; Zhang, H.; Liu, S. Research on Insect Pest Identification in Rice Canopy Based on GA-Mask R-CNN. Agronomy 2023, 13, 2155.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

- Li, D.; Hu, J.; Wang, C.; Li, X.; She, Q.; Zhu, L.; Zhang, T.; Chen, Q. Involution: Inverting the inherence of convolution for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12321–12330.

- Ramachandran, P.; Parmar, N.; Vaswani, A.; Bello, I.; Levskaya, A.; Shlens, J. Stand-alone self-attention in vision models. Adv. Neural Inf. Process. Syst. 2019, 32, 68–80.

- Elsayed, G.; Ramachandran, P.; Shlens, J.; Kornblith, S. Revisiting spatial invariance with low-rank local connectivity. In Proceedings of the International Conference on Machine Learning, Virtual Event, 13–18 July 2020; pp. 2868–2879.

- Zhang, X.; Liu, C.; Yang, D.; Song, T.; Ye, Y.; Li, K.; Song, Y. RFAConv: Innovating spatial attention and standard convolutional operation. arXiv 2023, arXiv:2304.03198.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-excite: Exploiting feature context in convolutional neural networks. In NIPS’18, Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; Curran Associates Inc.: Red Hook, NY, USA, 2018; Volume 31.

- Wan, D.; Lu, R.; Shen, S.; Xu, T.; Lang, X.; Ren, Z. Mixed local channel attention for object detection. Eng. Appl. Artif. Intell. 2023, 123, 106442.

- Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic head: Unifying object detection heads with attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7373–7382.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).