An AUV Target-Tracking Method Combining Imitation Learning and Deep Reinforcement Learning

Abstract

1. Introduction

2. Problem Formulation

2.1. Coordinate Systems of AUVs

2.2. Problem Description

2.3. State Space and Action Space of AUV

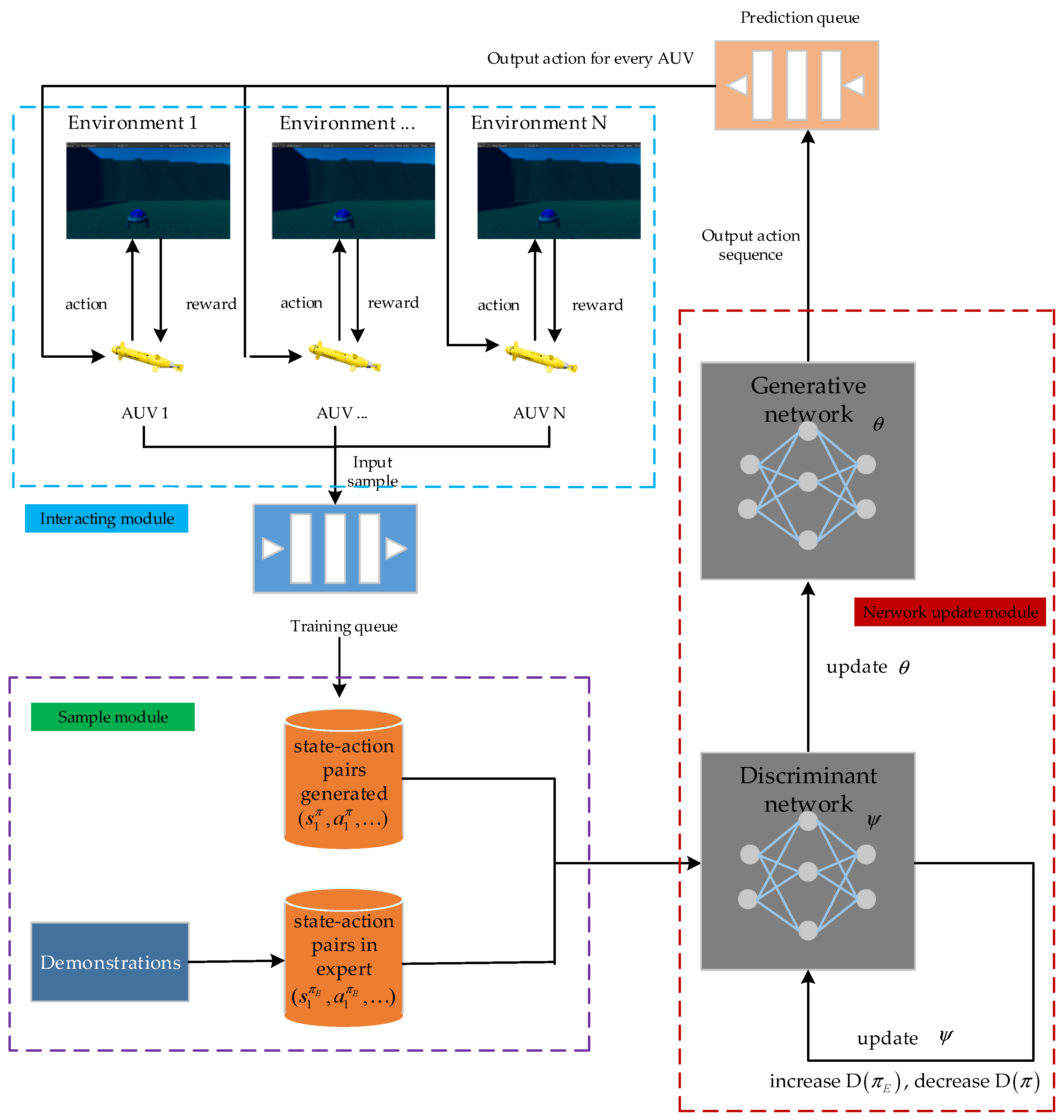

3. MAG Path-Planning Algorithm

3.1. Markov Decision Process

3.2. Discriminant Network
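As a hedged illustration of what a discriminant network does in a GAIL-style method, the sketch below assumes the standard formulation of Ho and Ermon [34] rather than the paper's exact Equation (8), which is not reproduced here. The discriminator is a hypothetical logistic-regression model over concatenated state-action features. It is trained to output values near 1 for expert pairs and near 0 for generated pairs, and it yields a surrogate reward that is high for expert-like behaviour. The feature dimension, learning rate, and reward form are all assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def discriminator_objective(w, b, expert_sa, gen_sa):
    """Mean log D(expert) + mean log(1 - D(generated)); the discriminator maximizes this."""
    eps = 1e-8
    d_exp = sigmoid(expert_sa @ w + b)
    d_gen = sigmoid(gen_sa @ w + b)
    return np.mean(np.log(d_exp + eps)) + np.mean(np.log(1.0 - d_gen + eps))

def discriminator_step(w, b, expert_sa, gen_sa, lr=0.1):
    """One gradient-ascent step on the objective above (hypothetical linear discriminator)."""
    d_exp = sigmoid(expert_sa @ w + b)
    d_gen = sigmoid(gen_sa @ w + b)
    # d/dz log(sigmoid(z)) = 1 - sigmoid(z);  d/dz log(1 - sigmoid(z)) = -sigmoid(z)
    grad_w = expert_sa.T @ (1.0 - d_exp) / len(expert_sa) - gen_sa.T @ d_gen / len(gen_sa)
    grad_b = np.mean(1.0 - d_exp) - np.mean(d_gen)
    return w + lr * grad_w, b + lr * grad_b

def surrogate_reward(w, b, sa):
    """Reward passed to the generator: large where D judges (s, a) to be expert-like."""
    return -np.log(1.0 - sigmoid(sa @ w + b) + 1e-8)

# Toy usage: synthetic 4-D state-action features (assumption, not AUV data).
rng = np.random.default_rng(0)
expert = rng.normal(1.0, 0.3, size=(256, 4))
generated = rng.normal(-1.0, 0.3, size=(256, 4))
w, b = np.zeros(4), 0.0
for _ in range(200):
    w, b = discriminator_step(w, b, expert, generated)
```

After training, expert pairs receive a larger surrogate reward than generated pairs, which is the signal a generator update would then try to exploit.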

3.3. Generative Network

3.4. Multi-Agent Training
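Algorithm 1 refers to a training queue and a prediction queue shared by AUVs running in parallel, identical environments. One common arrangement, used in the GA3C design of Babaeizadeh et al. [37], has agents post states to a prediction queue. A single predictor then answers all pending requests with one batched network call, and the resulting experience is posted to a training queue. The sketch below is a single-process simplification under that assumption. The function `predictor_step`, the linear-tanh policy, the state and action sizes, and the toy dynamics are all hypothetical.

```python
from collections import deque
import numpy as np

def predictor_step(prediction_queue, policy_fn, max_batch=16):
    """Answer up to max_batch pending (agent_id, state) requests with ONE batched policy call."""
    n = min(max_batch, len(prediction_queue))
    requests = [prediction_queue.popleft() for _ in range(n)]
    if not requests:
        return {}
    agent_ids, states = zip(*requests)
    actions = policy_fn(np.stack(states))          # shape (n, action_dim)
    return dict(zip(agent_ids, actions))

# Hypothetical setup: 4 AUVs with 3-D states and 2-D actions.
rng = np.random.default_rng(0)
W = rng.normal(size=(3, 2))

def policy_fn(batch):
    return np.tanh(batch @ W)                      # stand-in for the generative network

n_agents, n_steps = 4, 5
states = {i: rng.normal(size=3) for i in range(n_agents)}
prediction_queue, training_queue = deque(), deque()

for _ in range(n_steps):
    for i, s in states.items():                    # every AUV posts its current state
        prediction_queue.append((i, s))
    actions = predictor_step(prediction_queue, policy_fn)
    for i, a in actions.items():                   # every AUV acts and posts its experience
        training_queue.append((states[i], a))
        states[i] = states[i] + 0.1 * np.append(a, 0.0)  # toy dynamics, not an AUV model
```

Batching the requests lets one forward pass serve every AUV at a given step. This is the usual motivation for a prediction queue when many simulated agents share one network.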

3.5. Algorithm Process

Algorithm 1. The path-planning training process.

- Input: expert demonstrations.
- In the Unity software, create multiple identical underwater environments, each containing an AUV equipped with a light sensor and a target.
- Initialize the training queue and the prediction queue.
- Randomly initialize the discriminant network and the generative network; all AUVs share the KL divergence and the parameters of both networks.
- for episode = 1 to M:
  - for step = 1 to N:
    - The AUVs put their states into the training queue and wait for the output actions.
    - The generative network outputs action sequences to the prediction queue, yielding the generated trajectories.
    - Batch the generated trajectories.
    - step = step + 1
    - if (the AUV hits the target or an obstacle): calculate the cumulative reward; break
  - end for
  - The discriminant network scores the generated trajectories.
  - Update the generative network parameters by maximizing Equation (10).
  - Update the discriminant network parameters by maximizing Equation (8).
  - episode = episode + 1
- end for
- Output: the trained generative network, deployed on the AUV as its controller.
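The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal skeleton, not the authors' implementation. `LineWorld` is a hypothetical 1-D stand-in for a Unity scene, with the target at +3 and an obstacle at −3. It uses the ±0.1 collision rewards from Section 4.1. The callbacks `score`, `update_generator`, and `update_discriminator` are placeholders for the discriminator scoring and for maximizing Equations (10) and (8), whose exact forms are not reproduced here. All AUVs step in lockstep so that the policy is queried once per step for every still-active AUV.

```python
class LineWorld:
    """Hypothetical 1-D stand-in for one Unity scene: target at +3, obstacle at -3."""

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        self.pos += action                       # action is -1 or +1
        if self.pos >= 3:
            return self.pos, 0.1, True           # hit the target
        if self.pos <= -3:
            return self.pos, -0.1, True          # hit an obstacle
        return self.pos, 0.0, False

def run_episode(envs, policy, max_steps):
    """Step all AUVs in lockstep for at most max_steps; each stops on a terminal event."""
    states = [env.reset() for env in envs]
    trajs = [[] for _ in envs]
    returns = [0.0] * len(envs)
    active = [True] * len(envs)
    for _ in range(max_steps):
        idx = [i for i, alive in enumerate(active) if alive]
        if not idx:
            break
        actions = policy([states[i] for i in idx])   # one batched query for active AUVs
        for i, a in zip(idx, actions):
            nxt, r, done = envs[i].step(a)
            trajs[i].append((states[i], a))
            returns[i] += r                          # cumulative reward
            states[i] = nxt
            if done:
                active[i] = False
    return trajs, returns

def train(envs, policy, score, update_generator, update_discriminator, M, N):
    log = []
    for _ in range(M):
        trajs, returns = run_episode(envs, policy, N)
        scores = [score(t) for t in trajs]   # discriminator scores generated trajectories
        update_generator(trajs, scores)      # placeholder for maximizing Eq. (10)
        update_discriminator(trajs)          # placeholder for maximizing Eq. (8)
        log.append(returns)
    return log

# Toy usage with stub callbacks.
calls = {"generator": 0, "discriminator": 0}

def always_forward(states):
    return [1 for _ in states]

def score(traj):
    return float(len(traj))                  # stub score, not a trained discriminator

def update_generator(trajs, scores):
    calls["generator"] += 1

def update_discriminator(trajs):
    calls["discriminator"] += 1

log = train([LineWorld() for _ in range(3)], always_forward, score,
            update_generator, update_discriminator, M=4, N=1000)
```

With the always-forward policy, every AUV reaches the target after three steps, so each episode logs a return of 0.1 per AUV.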

4. Experiments

4.1. Environmental Model and Training Parameters

- The AUV collides with the target and gets a 0.1 reward.

- The AUV collides with an obstacle and gets a −0.1 reward.

- The AUV has completed 1000 steps. It can be expressed as follows:
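The three stopping rules above can be encoded as a per-step reward and termination function. The sketch below encodes only those rules and does not reproduce the paper's reward expression. It assumes, as a labeled assumption, that every other step, including the step that reaches the 1000-step limit, earns zero reward. `Event`, `step_outcome`, and `episode_return` are hypothetical names.

```python
from enum import Enum

class Event(Enum):
    NONE = 0
    HIT_TARGET = 1
    HIT_OBSTACLE = 2

TARGET_REWARD = 0.1      # from the first rule above
OBSTACLE_REWARD = -0.1   # from the second rule above
MAX_STEPS = 1000         # from the third rule above

def step_outcome(event, step_count):
    """Return (reward, done) for one step; zero reward otherwise is an ASSUMPTION."""
    if event is Event.HIT_TARGET:
        return TARGET_REWARD, True
    if event is Event.HIT_OBSTACLE:
        return OBSTACLE_REWARD, True
    return 0.0, step_count >= MAX_STEPS

def episode_return(events):
    """Sum step rewards until a stopping rule fires (the cumulative reward of an episode)."""
    total = 0.0
    for t, event in enumerate(events, start=1):
        reward, done = step_outcome(event, t)
        total += reward
        if done:
            break
    return total
```

For example, an episode of ten uneventful steps followed by an obstacle collision returns −0.1.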

4.2. Evaluation Standard

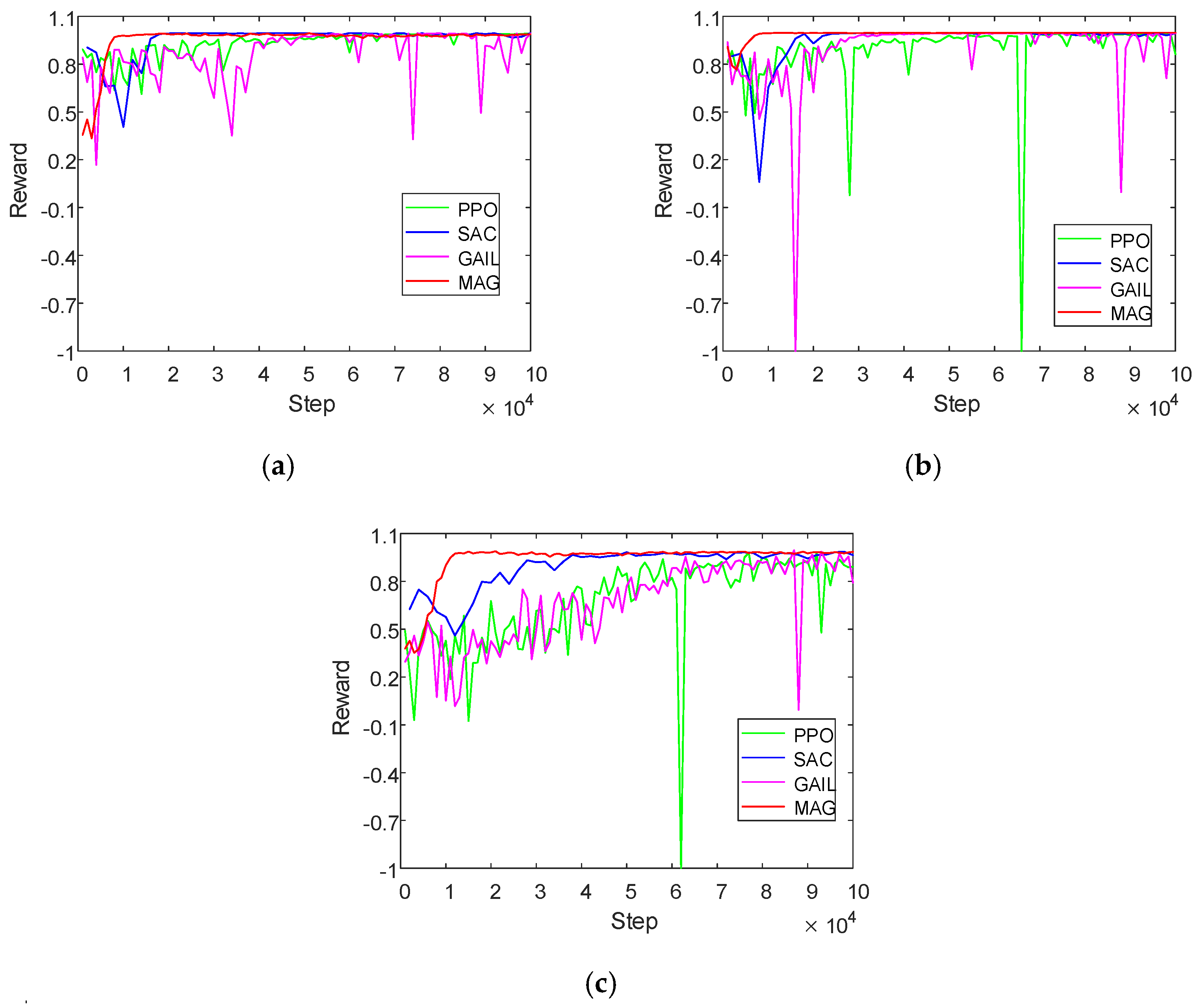

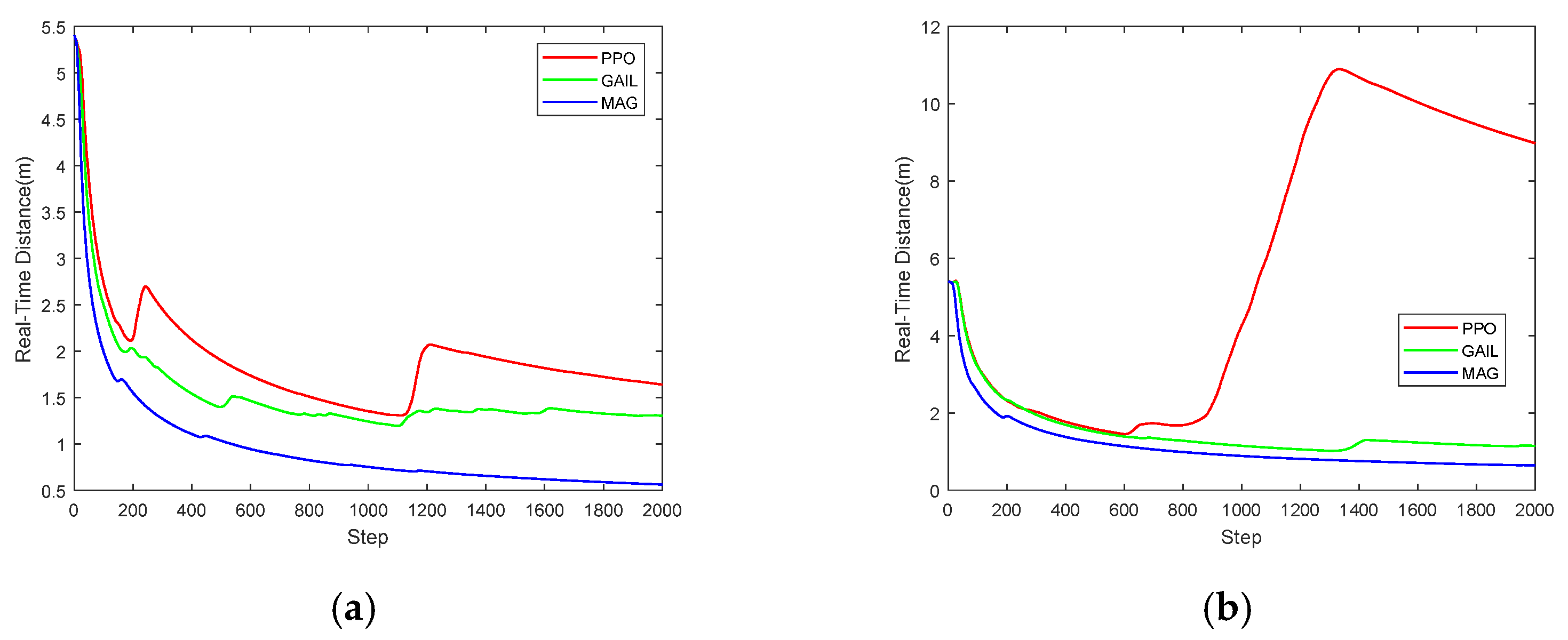

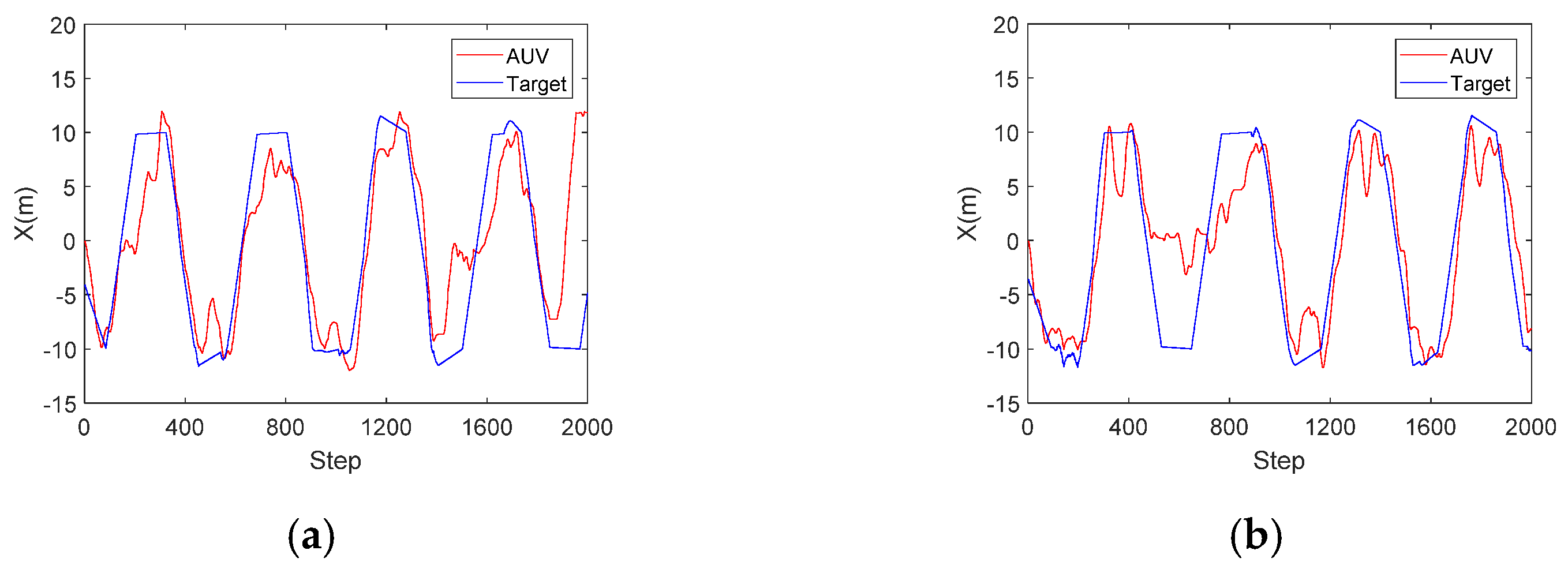

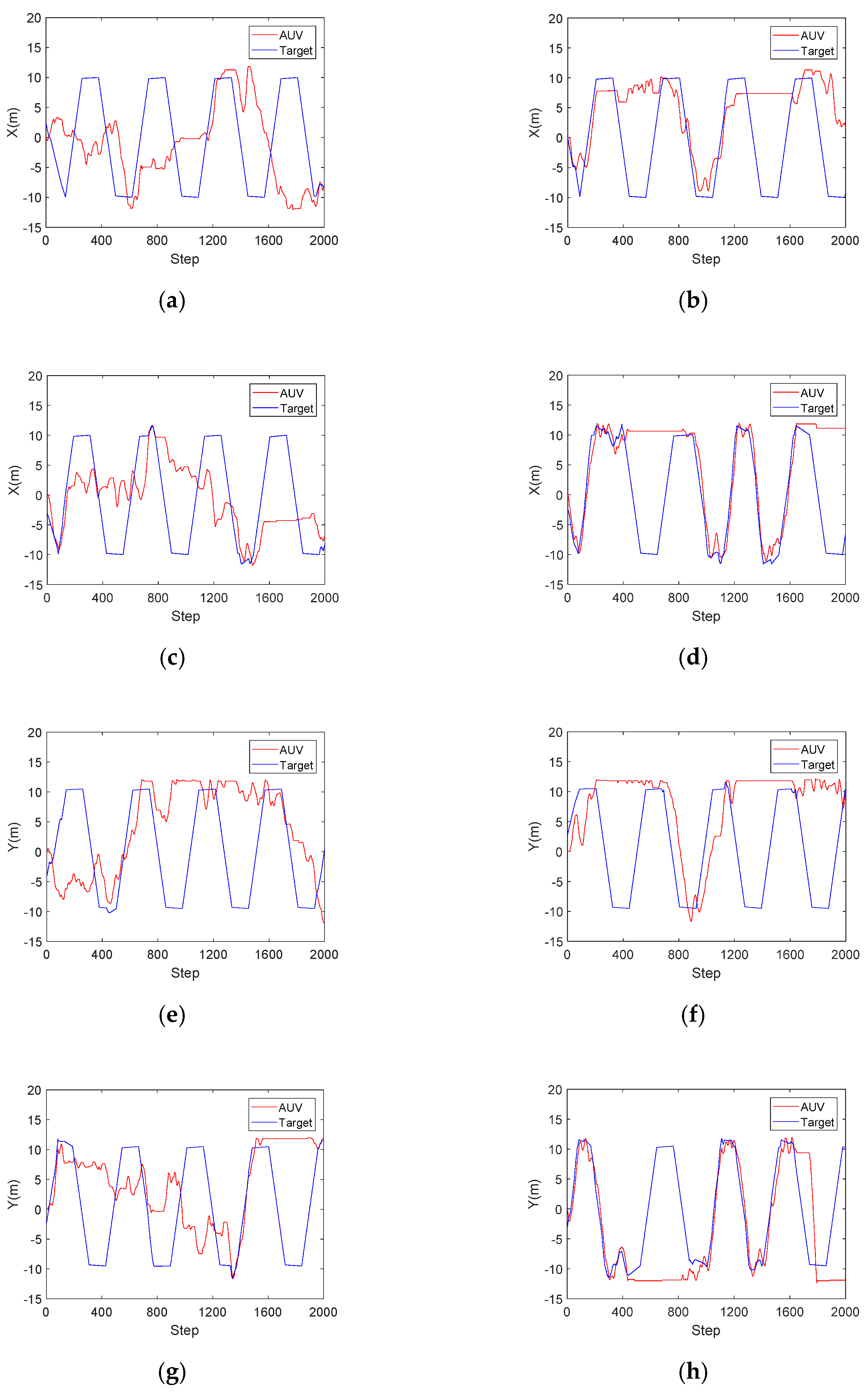

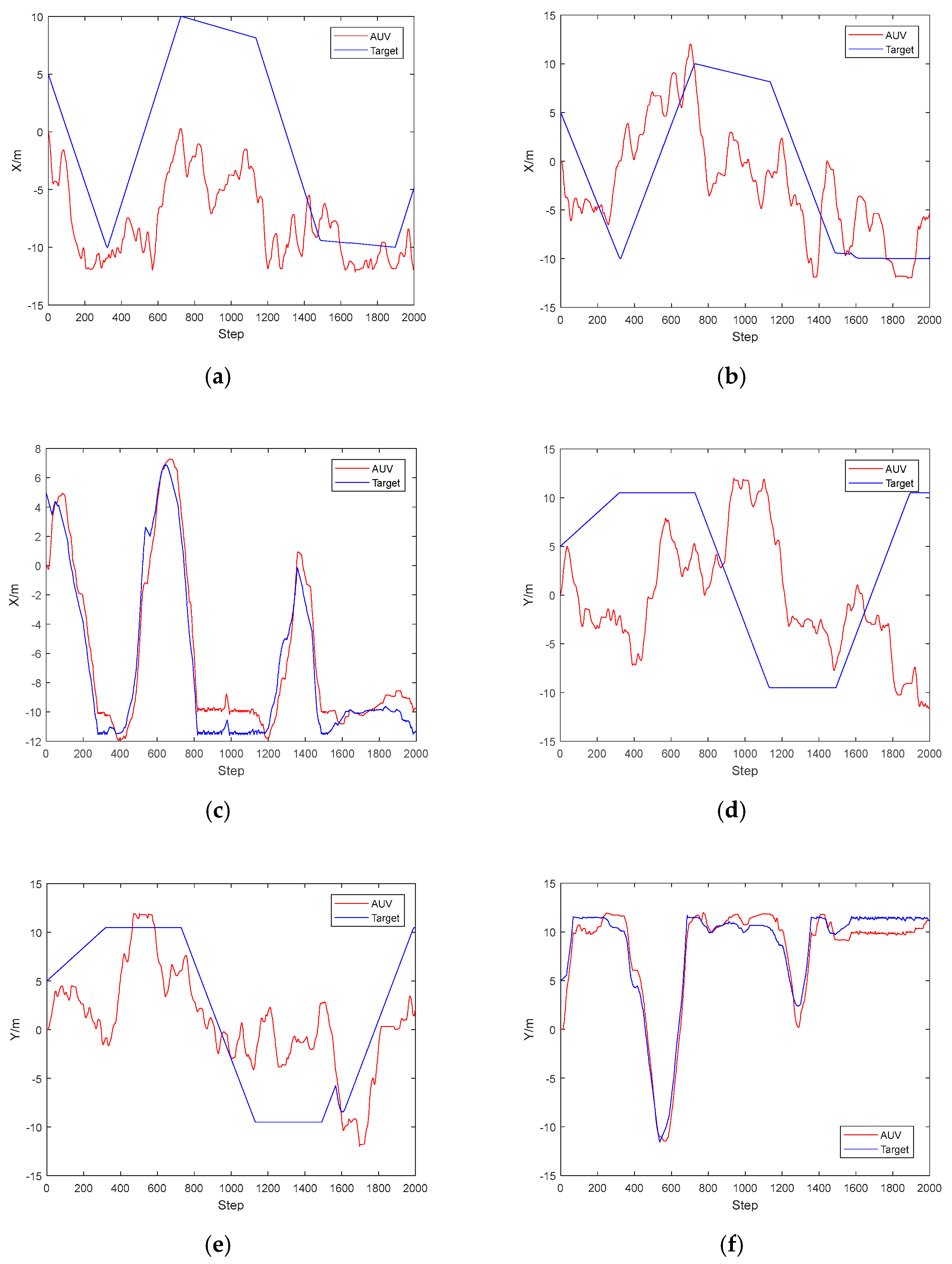

4.3. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chen, Q. Unmanned Underwater Vehicle, 1st ed.; National Defense Industry Press: Beijing, China, 2014; pp. 1–27.

- Kobayashi, R.; Okada, S. Development of hovering control system for an underwater vehicle to perform core internal inspections. J. Nucl. Sci. Technol. 2016, 53, 566–573.

- Li, Y.; Ma, T.; Wang, R.; Chen, P.; Zhang, Q. Terrain correlation correction method for AUV seabed terrain mapping. J. Navig. 2017, 70, 1062–1078.

- Zhao, Y.; Gao, F.; Yu, J.; Yu, X.; Yang, Z. Underwater image mosaic algorithm based on improved image registration. Appl. Sci. 2021, 11, 5986.

- Han, Y.; Liu, Y.; Hong, Z.; Zhang, Y.; Yang, S.; Wang, J. Sea ice image classification based on heterogeneous data fusion and deep learning. Remote Sens. 2021, 13, 592.

- Gao, F.; Wang, K.; Yang, Z.; Wang, Y.; Zhang, Q. Underwater image enhancement based on local contrast correction and multi-scale fusion. J. Mar. Sci. Eng. 2021, 9, 225.

- Conti, R.; Meli, E.; Ridolfi, A.; Allotta, B. An innovative decentralized strategy for I-AUVs cooperative manipulation tasks. Robot. Auton. Syst. 2015, 72, 261–276.

- Ribas, D.; Ridao, P.; Turetta, A.; Melchiorri, C.; Palli, G.; Fernández, J.J.; Sanz, P.J. I-AUV Mechatronics integration for the TRIDENT FP7 project. IEEE/ASME Trans. Mechatron. 2015, 20, 2583–2592.

- Mazumdar, A.; Triantafyllou, M.S.; Asada, H.H. Dynamic analysis and design of spheroidal underwater robots for precision multidirectional maneuvering. IEEE/ASME Trans. Mechatron. 2015, 20, 2890–2902.

- Ang, K.H.; Chong, G.; Li, Y. PID control system analysis, design, and technology. IEEE Trans. Control Syst. Technol. 2005, 13, 559–576.

- Balogun, O.; Hubbard, M.; DeVries, J. Automatic control of canal flow using linear quadratic regulator theory. J. Hydraul. Eng. 1988, 114, 75–102.

- Li, S.; Liu, J.; Xu, H.; Zhao, H.; Wang, Y. Research status of my country’s deep-sea autonomous underwater vehicles. SCIENTIA SINICA Inf. 2018, 48, 1152–1164.

- Malinowski, M.; Kazmierkowski, M.P.; Trzynadlowski, A.M. A comparative study of control techniques for PWM rectifiers in AC adjustable speed drives. IEEE Trans. Power Electron. 2003, 18, 1390–1396.

- Christudas, F.; Dhanraj, A.V. System identification using long short term memory recurrent neural networks for real time conical tank system. Rom. J. Inf. Sci. Technol. 2020, 23, 57–77.

- Zamfirache, I.A.; Precup, R.-E.; Roman, R.-C.; Petriu, E.M. Reinforcement Learning-based control using Q-learning and gravitational search algorithm with experimental validation on a nonlinear servo system. Inf. Sci. 2022, 583, 99–120.

- Precup, R.-E.; Roman, R.-C.; Teban, T.-A.; Albu, A.; Petriu, E.M.; Pozna, C. Model-free control of finger dynamics in prosthetic hand myoelectric-based control systems. Stud. Inform. Control 2020, 29, 399–410.

- Precup, R.-E.; Roman, R.-C.; Safaei, A. Data-Driven Model-Free Controllers, 1st ed.; CRC Press: Boca Raton, FL, USA, 2021; pp. 167–210.

- Nian, R.; Liu, J.; Huang, B. A review on reinforcement learning: Introduction and applications in industrial process control. Comput. Chem. Eng. 2020, 139, 106886.

- Webb, G.I.; Pazzani, M.J.; Billsus, D. Machine learning for user modeling. User Model. User-Adapt. Interact. 2001, 11, 19–29.

- Whitehead, S. Reinforcement Learning for the Adaptive Control of Perception and Action. PhD Thesis, University of Rochester, Rochester, NY, USA, 1992.

- Tariq, M.I.; Tayyaba, S.; Ashraf, M.W.; Balas, V.E. Deep learning techniques for optimizing medical big data. In Deep Learning Techniques for Biomedical and Health Informatics, 1st ed.; Agarwal, B., Balas, V., Jain, L., Poonia, R., Sharma, M., Eds.; Academic Press: New York, NY, USA, 2020; pp. 187–211.

- Ghasrodashti, E.K.; Sharma, N. Hyperspectral image classification using an extended Auto-Encoder method. Signal Process. Image Commun. 2021, 92, 116111.

- Wang, D.; Cao, W.; Zhang, F.; Li, Z.; Xu, S.; Wu, X. A review of deep learning in multiscale agricultural sensing. Remote Sens. 2022, 14, 559.

- Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Ishii, K.; Fujii, T.; Ura, T. An on-line adaptation method in a neural network based control system for AUVs. IEEE J. Ocean. Eng. 1995, 20, 221–228.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1861–1870.

- Yang, W.; Bai, C.; Cai, C. Survey on sparse reward in deep reinforcement learning. Comput. Sci. 2020, 47, 182–191.

- Wan, L.; Lan, X.; Zhang, H.; Zheng, N. Survey on deep reinforcement learning theory and its application. Pattern Recognit. Artif. Intell. 2019, 32, 67–81.

- Osa, T.; Sugita, N.; Mitsuishi, M. Online trajectory planning and force control for automation of surgical tasks. IEEE Trans. Autom. Sci. Eng. 2017, 15, 675–691.

- Sermanet, P.; Xu, K.; Levine, S. Unsupervised perceptual rewards for imitation learning. arXiv 2016, arXiv:1612.06699.

- Torabi, F.; Warnell, G.; Stone, P. Behavioral cloning from observation. arXiv 2018, arXiv:1805.01954.

- Ng, A.Y.; Russell, S.J. Algorithms for inverse reinforcement learning. In Proceedings of the 17th International Conference on Machine Learning, Vienna, Austria, 12–18 July 2000; pp. 663–670.

- Ho, J.; Ermon, S. Generative adversarial imitation learning. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4565–4573.

- Riedmiller, M. Neural fitted Q iteration–first experiences with a data efficient neural reinforcement learning method. In Proceedings of the 16th European Conference on Machine Learning, Porto, Portugal, 3–7 October 2005; pp. 317–328.

- Gupta, J.K.; Egorov, M.; Kochenderfer, M. Cooperative multi-agent control using deep reinforcement learning. In Proceedings of the International Conference on Autonomous Agents and Multiagent Systems, Sao Paulo, Brazil, 8–12 May 2017; pp. 66–83.

- Babaeizadeh, M.; Frosio, I.; Tyree, S.; Clemons, J.; Kautz, J. Reinforcement learning through asynchronous advantage actor-critic on a GPU. arXiv 2016, arXiv:1611.06256.

- Fossen, T.I. Handbook of Marine Craft Hydrodynamics and Motion Control, 2nd ed.; John Wiley & Sons: West Sussex, UK, 2021; pp. 3–14.

- Wang, Z.; Merel, J.S.; Reed, S.E.; de Freitas, N.; Wayne, G.; Heess, N. Robust imitation of diverse behaviors. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5320–5329.

- Vanvuchelen, N.; Gijsbrechts, J.; Boute, R. Use of proximal policy optimization for the joint replenishment problem. Comput. Ind. 2020, 119, 103239.

- Yu, X.; Sun, Y.; Wang, X.; Zhang, G. End-to-end AUV motion planning method based on soft actor-critic. Sensors 2021, 21, 5893.

- Choi, S.; Kim, J.; Yeo, H. TrajGAIL: Generating urban vehicle trajectories using generative adversarial imitation learning. Transp. Res. Part C Emerg. Technol. 2021, 128, 103091.

- Herlambang, T.; Djatmiko, E.B.; Nurhadi, H. Ensemble Kalman filter with a square root scheme (EnKF-SR) for trajectory estimation of AUV SEGOROGENI ITS. Int. Rev. Mech. Eng. 2015, 9, 553–560.

- Yuan, J.; Wang, H.; Zhang, H.; Lin, C.; Yu, D.; Li, C. AUV obstacle avoidance planning based on deep reinforcement learning. J. Mar. Sci. Eng. 2021, 9, 1166.

- Ganesan, V.; Chitre, M.; Brekke, E. Robust underwater obstacle detection and collision avoidance. Auton. Robot. 2016, 40, 1165–1185.

- You, X.; Lv, Z.; Ding, Y.; Su, W.; Xiao, L. Reinforcement learning based energy efficient underwater localization. In Proceedings of the 2020 International Conference on Wireless Communications and Signal Processing (WCSP), Wuhan, China, 21–23 October 2020; pp. 927–932.

- MahmoudZadeh, S.; Powers, D.; Yazdani, A.M.; Sammut, K.; Atyabi, A. Efficient AUV path planning in time-variant underwater environment using differential evolution algorithm. J. Mar. Sci. Appl. 2018, 17, 585–591.

- Bøhn, E.; Coates, E.M.; Moe, S.; Johansen, T.A. Deep reinforcement learning attitude control of fixed-wing UAVs using proximal policy optimization. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; pp. 523–533.

- Barros, G.M.; Colombini, E.L. Using soft actor-critic for low-level UAV control. arXiv 2020, arXiv:2010.02293.

- Grando, R.B.; de Jesus, J.C.; Kich, V.A.; Kolling, A.H.; Bortoluzzi, N.P.; Pinheiro, P.M.; Neto, A.A.; Drews, P.L. Deep reinforcement learning for mapless navigation of a hybrid aerial underwater vehicle with medium transition. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1088–1094.

- Pham, D.-T.; Tran, T.-N.; Alam, S.; Duong, V.N. A generative adversarial imitation learning approach for realistic aircraft taxi-speed modeling. IEEE Trans. Intell. Transp. Syst. 2021, in press.

- Tai, L.; Zhang, J.; Liu, M.; Burgard, W. Socially compliant navigation through raw depth inputs with generative adversarial imitation learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–26 May 2018; pp. 1111–1117.

| Algorithm | Scene 1 | | | | Scene 2 | | | |
|---|---|---|---|---|---|---|---|---|
| PPO [48] | 1.0264 | 0.6773 | 0.9257 | 0.0035 | 1.0100 | −0.4310 | 0.8753 | 0.0285 |
| SAC [50] | 0.9955 | 0.4053 | 0.9518 | 0.0121 | 0.9950 | 0.0591 | 0.9454 | 0.0220 |
| GAIL [51] | 0.9968 | 0.5482 | 0.9651 | 0.0057 | 0.9990 | 0.2442 | 0.9580 | 0.0147 |
| MAG (Ours) | 1.0031 | 0.8119 | 0.9861 | 0.0012 | 1.0032 | 0.1952 | 0.9548 | 0.0165 |

| Index | Scene | PPO [48] | SAC [50] | GAIL [51] | MAG (Ours) |
|---|---|---|---|---|---|
| Time (s) | Scene 1 | 1359.3 | 2857.7 | 1600.4 | 2558.1 |
| | Scene 2 | 1716.1 | 3234.2 | 1583.1 | 2667.3 |
| | Scene 3 | 1554.4 | 2843.8 | 1623.7 | 2644.2 |
| CV | Scene 3 | 4 | 4 | 2 | 1 |

| Scene 1: PPO [48] | Scene 1: GAIL [51] | Scene 1: MAG (Ours) | Scene 2: PPO [48] | Scene 2: GAIL [51] | Scene 2: MAG (Ours) |
|---|---|---|---|---|---|
| 1.9280 | 1.5436 | 0.9630 | 5.7500 | 1.5337 | 1.1443 |
| 0.3661 | 0.3447 | 0.4073 | 13.7991 | 0.6411 | 0.5592 |
| −7.1558 | 2.6253 | 8.5715 | −2.0401 | 0.3701 | −9.0751 |
| 5.9853 | 3.6069 | 8.5584 | −0.1301 | 10.1977 | −1.3942 |
| −8.3558 | 2.9713 | 9.9069 | 1.1671 | 0.5142 | −9.1093 |
| 6.0639 | 3.5525 | 9.4420 | 4.1045 | 9.8918 | −2.4282 |

| Scene 1: PPO [48] | Scene 1: GAIL [51] | Scene 1: MAG (Ours) | Scene 2: PPO [48] | Scene 2: GAIL [51] | Scene 2: MAG (Ours) |
|---|---|---|---|---|---|
| 12.8324 | 8.1974 | 1.4853 | 11.1855 | 7.1754 | 1.1321 |
| 6.2000 | 1.0495 | 0.7206 | 3.7951 | 0.7804 | 0.5229 |
| 4.0641 | 1.3391 | 5.6856 | −7.7581 | −2.3172 | −6.1136 |
| 2.9278 | −0.3948 | 7.8973 | −0.2228 | 0.7792 | 7.9131 |
| −1.0678 | −0.8711 | 6.2315 | −1.0512 | −1.2140 | −6.6929 |
| 2.4404 | 2.3525 | 7.8203 | 2.4347 | 1.5609 | 8.2772 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mao, Y.; Gao, F.; Zhang, Q.; Yang, Z. An AUV Target-Tracking Method Combining Imitation Learning and Deep Reinforcement Learning. J. Mar. Sci. Eng. 2022, 10, 383. https://doi.org/10.3390/jmse10030383
