Abstract

At present, 3D reconstruction technology is being gradually applied to underwater scenes and has become a hot research direction that is vital to human ocean exploration and development. Owing to the rapid development of computer vision in recent years, optical image 3D reconstruction has become the mainstream method. Therefore, this paper focuses on optical image 3D reconstruction methods in the underwater environment. However, because sonar is widely applied in underwater 3D reconstruction, this paper also introduces and summarizes underwater 3D reconstruction methods based on acoustic images and on optical–acoustic image fusion. First, this paper uses the Citespace software to visually analyze the existing literature in this field and to intuitively identify its hotspots and key research directions. Second, the particularities of underwater environments compared with conventional systems are introduced, and two scientific problems are distilled from the engineering problems encountered in optical image reconstruction: underwater image degradation and the calibration of underwater cameras. Then, in the main part of this paper, we focus on underwater 3D reconstruction methods based on optical images, acoustic images and optical–acoustic image fusion, reviewing the literature and classifying the existing solutions. Finally, potential future advancements in this field are considered.

1. Introduction

At present, 3D data measurement and object reconstruction technologies are being gradually applied to underwater scenes and have become a hot research direction. They can be used for biological investigation, archaeology and other research [1,2] and can also facilitate the exploration and mapping of the seabed. Such maps are usually built from three-dimensional data collected by one or more sensors. The collected data are then processed to obtain the 3D information of the actual scene and to restore the target's actual 3D structure. This workflow is called 3D reconstruction [3].

The development of 3D reconstruction has been a long process. Early 3D reconstruction was mainly completed by manual drawing, which was time-consuming and labor-intensive [4]. Nowadays, the main 3D reconstruction techniques can be divided into image-based 3D reconstruction and laser-scanner-based 3D reconstruction, which use different types of equipment (cameras and laser scanners, respectively) [5]. Ying Lo et al. [6] compared the cost-effectiveness of the two methods in terms of accuracy, cost, time efficiency and flexibility. According to their findings, the accuracy of the laser scanning method is nearly on par with that of the image-based method. However, laser-scanning methods require expensive instruments and skilled operators to obtain accurate models, whereas image-based methods, which process data automatically, are relatively inexpensive.

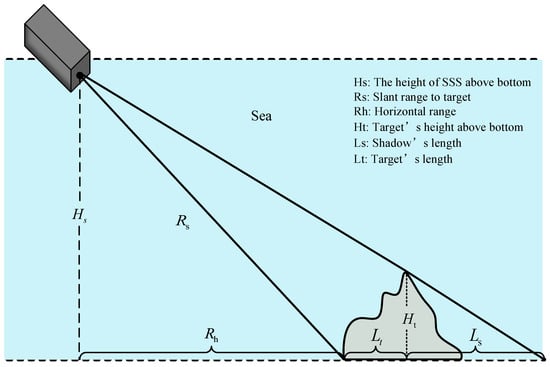

Therefore, image-based underwater 3D reconstruction is the focus of current research. According to the sensing means, it can be divided into optical and acoustic 3D reconstruction of underwater images. The optical method mainly uses optical sensors to obtain three-dimensional information about underwater objects or scenes and reconstruct them. Recently, progress has been made in 3D reconstruction technology based on underwater optical images. However, it is frequently challenging to meet the demands of practical applications because of the diversity and complexity of the undersea environment and the rapid attenuation of light-wave energy in water. Therefore, researchers have also proposed acoustic methods, which mainly use sonar sensors to obtain underwater information. Owing to the characteristics of sound propagation in water, such as low loss, strong penetration, long propagation distance and little sensitivity to water quality, sonar has become a good choice for studying the underwater environment.

Regarding carriers and imaging equipment, continuous progress in science and technology has steadily improved underwater camera systems and the customized systems carried by deep-sea robots. Crewed and uncrewed vehicles can gradually reach large ocean areas and continuously capture higher-quality underwater images and videos, providing updated and more accurate data for underwater 3D reconstruction. Using sensors to record the underwater scene, scientists can now obtain accurate two-dimensional or three-dimensional data and use standard software to interact with them, which helps them understand the underwater environment in real time. Data acquisition can be conducted using sensors deployed underwater (e.g., underwater tripods or stationary devices), sensors operated by divers, remotely operated vehicles (ROVs) or autonomous underwater vehicles (AUVs).

At present, there are few review papers in the field of underwater 3D reconstruction. In 2015, Shortis M [7] reviewed different methods of underwater camera system calibration from both theoretical and practical perspectives and discussed the calibration of underwater camera systems with respect to accuracy, dependability, efficacy and stability. Massot-Campos, M. and Oliver-Codina, G [3] reviewed the optical sensors and 3D reconstruction methods commonly used in underwater environments. In 2017, Qiao Xi et al. [8] reviewed the development of underwater machine vision and its potential applications and compared existing research with commercial underwater 3D scanners. In 2019, Miguel Castillón et al. [9] reviewed research on optical 3D underwater scanners and the progress of light-projection and light-sensing technology. Finally, in 2019, Avilash Sahoo et al. [10] reviewed the field of underwater robots, looked at future research directions and discussed in detail the current positioning and navigation technology in autonomous underwater vehicles as well as different optimal path planning and control methods.

The above review papers have made some contributions to research on underwater 3D reconstruction. However, first, most of them focus only on a single key direction of underwater reconstruction or review a single reconstruction method, such as underwater camera calibration or underwater 3D instruments. There is no comprehensive summary of the difficulties encountered in 3D reconstruction in underwater environments and of the reconstruction methods currently in common use for underwater images. Second, no review since 2019 has summarized the research results in this direction. Third, none of them discusses multi-sensor fusion, which is currently under development.

Therefore, a comprehensive survey of common underwater 3D reconstruction methods and of the difficulties encountered in the underwater environment is needed to help researchers obtain an overview of this direction and build on the existing state of the art. The contributions of this paper are as follows:

- (1) We use the Citespace software to visually analyze the relevant papers on underwater 3D reconstruction from the past two decades, which conveniently and intuitively displays the research content and research hotspots in this field.

- (2) We address the challenges faced by image reconstruction in the underwater environment and the solutions proposed by current researchers.

- (3) We systematically introduce the main optical methods currently widely used for the 3D reconstruction of underwater images, including structure from motion, structured light, photometric stereo, stereo vision and underwater photogrammetry, and review the classic methods researchers have built on them. Moreover, because sonar is widely used in underwater 3D reconstruction, this paper also introduces and summarizes underwater 3D reconstruction methods based on acoustic images and optical–acoustic image fusion.

This paper is organized as follows: Section 1 introduces the significance of underwater 3D reconstruction and the key research directions of this paper. Section 2 uses the Citespace software to perform a visual analysis of the literature on underwater 3D reconstruction and analyzes the development status of this field. Section 3 introduces the particularities of the underwater environment compared with conventional systems and the difficulties and challenges faced in underwater optical image 3D reconstruction. Section 4 introduces optics-based underwater reconstruction technology and summarizes the development of existing techniques and the algorithmic improvements made by researchers. Section 5 introduces underwater 3D reconstruction methods based on sonar images, reviews the existing results and further summarizes 3D reconstruction with opto-acoustic fusion. Finally, Section 6 summarizes the current development of image-based underwater 3D reconstruction and discusses its prospects.

2. Development Status of Underwater 3D Reconstruction

Analysis of the Development of Underwater 3D Reconstruction Based on the Literature

The main research tool used for the literature analysis in this paper was the Citespace software developed by Dr. Chen Chaomei [11]. Citespace can be used to measure a collection of documents in a specific field, discover the key paths in the evolution of the field and produce a series of visual maps that give an overview of the field's evolution and academic development [12,13,14]. A literature analysis based on Citespace can conveniently and intuitively display the research content and research hotspots in a given field.

We conducted an advanced search on the Web of Science, setting the keywords to "underwater 3D reconstruction" and "underwater camera calibration", the time span to 2002–2022 and the search scope to exclude references; a total of more than 1000 documents was obtained. Because underwater camera calibration is the basis of the optical image 3D reconstruction summarized in this paper, we included it as a keyword. The Citespace software was used for the visual analysis of the literature related to underwater 3D reconstruction, and research on underwater reconstruction over the most recent 20 years was analyzed in terms of a keyword map and author contributions.

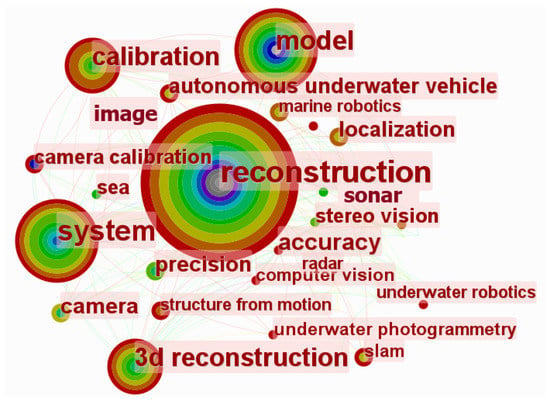

A keyword heat map was created from the retrieved documents, as shown in Figure 1. The larger the circle, the more often the keyword appears. The rings of each circle represent different time periods, from the inside to the outside. The connecting lines denote co-occurrence relationships between keywords. 'Reconstruction', with the largest circle, is the theme of this paper. The terms 'camera calibration', 'structure from motion', 'stereo vision', 'underwater photogrammetry' and 'sonar', shown in larger circles, are also the focus of this article and of current underwater 3D reconstruction research. The map thus clearly shows the current hotspots in this field and the key areas that need to be studied.

Figure 1.

Hot words in the field of underwater 3D reconstruction.
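The circle sizes and connecting lines in a keyword map such as Figure 1 rest on two counts: how many records contain each keyword, and how many records contain each pair of keywords. The following minimal Python sketch shows only that counting step on a few hypothetical keyword lists; the helper `keyword_network` and the sample records are illustrative assumptions, and Citespace's own processing (time slicing, node selection thresholds, pruning and layout) is not reproduced.

```python
from collections import Counter
from itertools import combinations

# Hypothetical keyword lists, one per retrieved record (illustrative only).
records = [
    ["reconstruction", "camera calibration", "refraction"],
    ["reconstruction", "structure from motion", "underwater photogrammetry"],
    ["reconstruction", "sonar"],
    ["camera calibration", "refraction", "stereo vision"],
]

def keyword_network(records):
    """Return (node_weights, edge_weights) for a keyword co-occurrence map.

    node_weights[k]      -> number of records containing keyword k (circle size)
    edge_weights[(a, b)] -> number of records containing both a and b (link weight)
    """
    node_weights = Counter()
    edge_weights = Counter()
    for keywords in records:
        # Count each keyword at most once per record; sorting makes pairs canonical.
        unique = sorted(set(k.lower() for k in keywords))
        node_weights.update(unique)
        edge_weights.update(combinations(unique, 2))
    return node_weights, edge_weights

nodes, edges = keyword_network(records)
print(nodes.most_common(3))
print(edges.most_common(3))
```

In a real analysis the records would come from the exported search results, and the raw counts would typically be normalized or thresholded before drawing the map.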

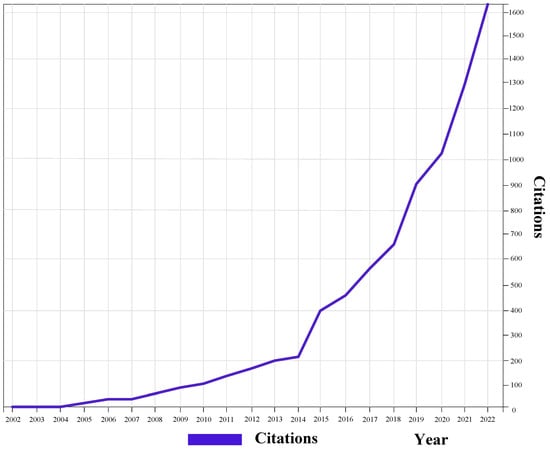

In addition, we used the search-result analysis function of Web of Science to obtain statistics on the research fields of papers published on underwater 3D reconstruction and on the citations of related articles. Figure 2 shows a line graph of the citation frequency of related papers on underwater 3D reconstruction. The abscissa indicates the year and the ordinate indicates the number of citations of related papers. The graph shows that the number of citations of papers related to underwater 3D reconstruction has risen rapidly over the years. Clearly, underwater 3D reconstruction has received increasing attention, which makes a review aligned with the current hotspots timely.

Figure 2.

Citations for Web of Science articles in recent years.

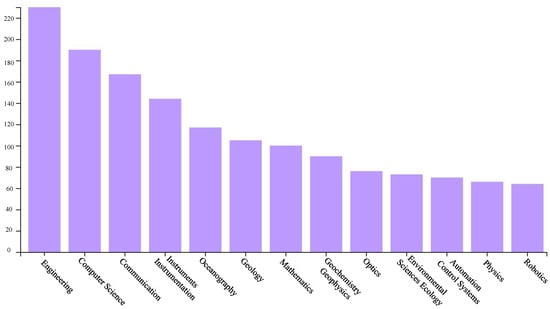

Figure 3 shows a histogram of the research fields of papers published on underwater 3D reconstruction. The abscissa is the field of the retrieved papers and the ordinate is the number of papers in that field. Judging from the fields of the retrieved papers, underwater 3D reconstruction is a hot topic in engineering and computer science. Therefore, when exploring underwater 3D reconstruction, we should pay special attention to engineering and computer-related issues. From the above analysis, it is evident that underwater 3D reconstruction is currently a hot research topic that has attracted increasing attention over time, developing mainly in computer science and engineering. With the rapid rise of deep learning methods in various fields [15,16,17,18,19,20,21,22,23], underwater 3D reconstruction has also entered a period of rapid growth, which has greatly improved reconstruction results.

Figure 3.

Research fields of papers found using Web of Science.

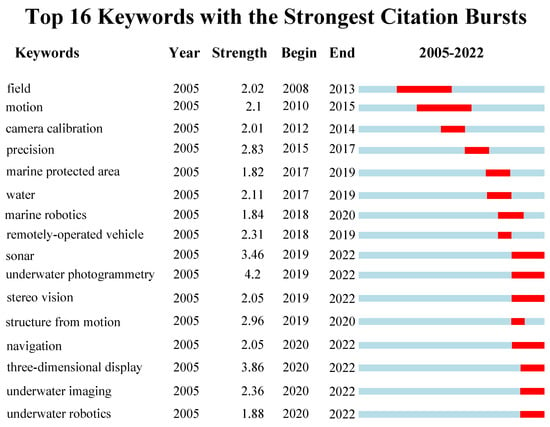

Figure 4 shows the top 16 high-frequency keywords from 2005 to 2022, generated with the Citespace software. 'Strength' denotes the burst strength of a keyword; the greater the value, the more sharply the keyword's occurrence increased. The line on the right is the timeline from 2005 to 2022. The 'Begin' column indicates the year in which the keyword's period of high activity starts, and the span from 'Begin' to 'End' is the period during which the keyword is highly active; the red segments mark these years. The figure shows that terms such as 'sonar', 'underwater photogrammetry', 'underwater imaging' and 'underwater robotics' are currently hot research topics within underwater 3D reconstruction. Keywords with high strength, such as 'structure from motion' and 'camera calibration', clearly indicate the hot research topics in this field and are also the focus of this article.

Figure 4.

Timing diagram of the appearance of high-frequency keywords.

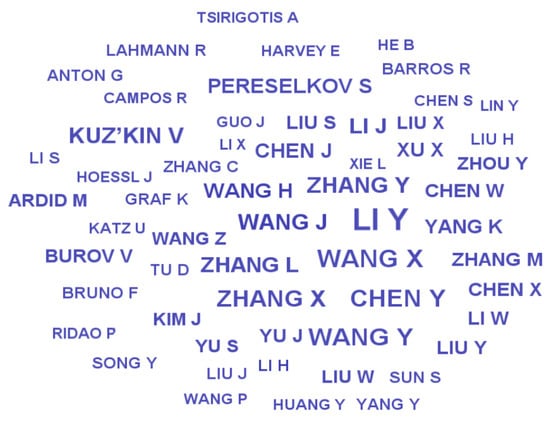

As science and technology advance, the desire to explore the sea has grown stronger, and some scholars and teams have made significant contributions to underwater reconstruction. Their work has improved the reconstruction process in the special underwater environment and laid the foundation for solving a series of subsequent reconstruction problems. We retrieved more than 1000 articles on underwater 3D reconstruction from Web of Science and obtained the author contribution map shown in Figure 5. The larger the font, the more attention the author has received.

Figure 5.

Outstanding scholars in the area of underwater 3D reconstruction.

There are some representative research teams. Chris Beall et al. proposed a large-scale sparse reconstruction technique for underwater structures [24]. Bruno F et al. proposed projecting structured lighting patterns with a stereo vision system [25]. Bianco et al. compared two underwater 3D imaging technologies based on active and passive methods, as well as full-field acquisition [26]. Jordt A et al. used a geometric model of image formation that accounts for refraction and, starting from camera calibration, proposed a complete and automatic 3D reconstruction system that acquires image sequences and generates 3D models [27]. Kang L et al. studied a common underwater imaging setup with two cameras and used a simplified refractive camera model to handle refraction [28]. Chadebecq F et al. proposed a novel refractive structure-from-motion (RSfM) framework [29] for a camera looking through a thin refractive interface, which refines an initial estimate of the relative camera pose. Song H et al. presented a comprehensive enhancement–registration–homogenization (ERH) paradigm for underwater visual reconstruction [30]. Su Z et al. proposed a flexible and accurate stereo digital image correlation (stereo-DIC) method [31] based on flat refractive geometry to measure the 3D shape and deformation of fluid-immersed objects. Table 1 lists their main contributions.

Table 1.

Some outstanding teams and their contributions.

This section used the Citespace software and the Web of Science search and analysis functions to analyze the current development status and hotspot directions of underwater 3D reconstruction so that researchers can quickly understand the hotspots and key points in this field. In the next section, we analyze how the underwater environment differs from the conventional environment, that is, the challenges that must be addressed when performing optical image 3D reconstruction underwater.

3. Challenges Posed by the Underwater Environment

3D reconstruction based on optical images is relatively mature and, compared with other methods, has the benefits of being affordable and effective. However, the underwater environment differs from conventional settings, mainly in the following aspects:

- (1) The underwater environment is complex and the reachable underwater scenes are limited, so it is difficult to deploy the system and operate the equipment [32].

- (2) Data collection is difficult, requiring divers or specific equipment, and places high demands on the collection personnel [33].

- (3) The optical properties of the water body and insufficient light lead to dark and blurred images [34]. Light absorption can cause the borders of an image to blur, similar to a vignette effect.

- (4) When a camera inside an air-filled housing captures underwater images, light is refracted at the air–glass and glass–water interfaces between the sensor and the underwater object because of the differences in optical density (refractive index) between the media. This alters the camera's effective intrinsic parameters and degrades the performance of image-processing algorithms [35]. Therefore, a specific calibration is required [36].

- (5) When photons propagate in water, they are affected by particles in the water, which can scatter or completely absorb them, attenuating the signal that finally reaches the image sensor [37]. Red, green and blue light are attenuated at different rates, and the effect is immediately apparent in raw underwater images: the red channel attenuates the most and the blue channel the least, producing a blue-green cast [38].

- (6) Images taken in shallow water (less than 10 m deep) may be severely affected by sunlight flicker (scintillation), i.e., intense light variations caused by sunlight refracting at the moving air–water interface. This flickering can quickly change the appearance of the scene, which makes the feature extraction and matching that underlie basic image processing more difficult [39].

These engineering problems affect the performance of underwater reconstruction systems, and the algorithms researchers use for conventional systems often cannot readily meet the needs of practical underwater applications. Therefore, 3D image reconstruction algorithms need to be improved for underwater environments.

Optics-based 3D reconstruction of underwater images is strongly affected by the engineering problems listed above. Research has shown that they can be reduced mainly to two scientific problems: the degradation of underwater images and the calibration of underwater cameras. By contrast, underwater 3D reconstruction based on acoustic images is less affected by these environmental problems. Therefore, this section mainly introduces the handling of underwater image degradation and the improvement of underwater camera calibration for optical methods. These two problems are what distinguish the underwater environment from conventional systems, and they are the key focus of underwater 3D reconstruction.

3.1. Underwater Image Degradation

The quality of the collected images is poor because of the unique underwater environment, which degrades the 3D reconstruction effect. In this section, we first discuss the caustic effect caused by light reflection or refraction in shallow water (water depth less than 10 m) and the solutions proposed by researchers. Second, we discuss image degradation caused by light absorption or scattering underwater and two common underwater image-processing approaches, namely underwater image restoration and visual image enhancement.

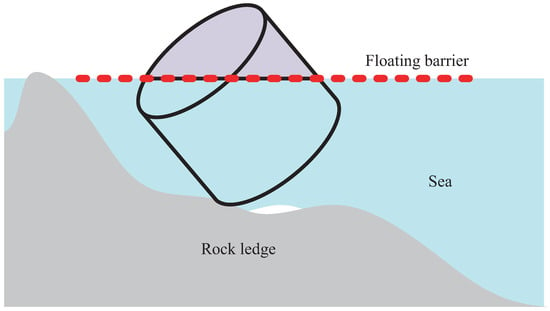

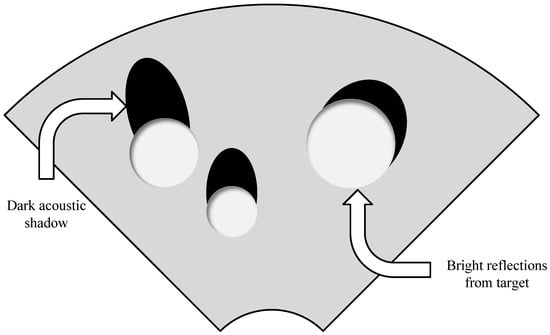

3.1.1. Reflection or Refraction Effects

The underwater environment affects RGB images at every depth, but caustics in shallow water (water depth less than 10 m) are especially problematic. Caustics, the complex physical phenomenon of light reflected or refracted by a curved surface, appear to be the primary factor lowering the image quality of all passive optical sensors [39]. In deep-sea photogrammetry, noon is usually the optimum time for data collection because of the bright illumination; in shallow waters, by contrast, the subject needs strong artificial lighting, or the images must be captured in shady conditions or with the sun near the horizon to avoid reflections on the seabed [39]. If caustics cannot be avoided at the acquisition stage, image-matching algorithms will be affected by caustics and lighting effects, and the generated texture will differ from the orthophoto. Furthermore, caustic effects break most image-matching algorithms, resulting in inaccurate matching [39]. Figure 6 shows different forms of caustic effects in underwater images.

Figure 6.

Caustic effects of different shapes in underwater images.

Only a few studies currently describe methods for improving images by removing caustics from images and videos. For constantly changing underwater scenes, Trabes and Jordan proposed a method that requires tuning a filter for sunlight deflection [40]. Gracias et al. [41] presented a new strategy in which the mathematical solution involves computing the temporal median of the images within a sequence. Later, these authors extended their work in [42] and proposed an online method for removing sun glint that interprets caustics as a dynamic texture. However, as they note, this technique is only effective if the seabed or seafloor surface is flat.
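The temporal-median idea can be illustrated with a minimal Python/NumPy sketch. It assumes the frames are already co-registered (for example, a static camera over a flat seabed) and that a caustic pattern covers any given pixel in fewer than half of the frames; under those assumptions the per-pixel median over time returns the stable seabed appearance. This illustrates the median principle only, not the authors' full method, and the synthetic seabed and moving bright band are made-up data.

```python
import numpy as np

def temporal_median(frames):
    """Per-pixel median over a stack of co-registered frames.

    frames: sequence of arrays with shape (H, W) or (H, W, C).
    Transient bright patterns that occupy a pixel in fewer than half
    of the frames do not affect the median.
    """
    stack = np.asarray(frames, dtype=np.float64)
    return np.median(stack, axis=0)

# Synthetic example: static seabed texture plus a moving bright "caustic" band.
rng = np.random.default_rng(0)
seabed = rng.uniform(0.2, 0.6, size=(32, 32))
frames = []
for t in range(9):
    frame = seabed.copy()
    start = (3 * t) % 32
    frame[start:start + 3, :] += 0.4   # band moves 3 rows per frame
    frames.append(frame)

clean = temporal_median(frames)
averaged = np.mean(np.asarray(frames), axis=0)   # a plain mean keeps a faint trace of the band
print(np.abs(clean - seabed).max(), np.abs(averaged - seabed).max())
```

The comparison with the plain mean shows why a robust statistic is used: the mean spreads each transient highlight over the result, while the median discards it.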

In [43], Schechner and Karpel proposed a method for analyzing several consecutive frames based on a nonlinear algorithm to keep the composition of the image the same while removing fluctuations. However, this method does not consider camera motion, which will lead to inaccurate registration.

To avoid inaccurate registration, Swirski and Schechner [44] proposed a method that removes caustics using stereo equipment. The stereo cameras provide depth maps, which can then be registered together using the iterative closest point (ICP) algorithm. This again makes a strong assumption about the rigidity of the scene, which rarely holds underwater.

Despite the innovative and complex techniques described above, removing caustic effects with a procedural approach requires strong assumptions about the various parameters involved, such as scene rigidity and camera motion.

Therefore, Forbes et al. [45] proposed a solution that makes no such assumptions, based on two convolutional neural networks (CNNs) [46,47,48]: SalienceNet and DeepCaustics. Once trained, the first network produces a caustic classification in the form of a saliency map, whose values represent the likelihood that a pixel is caustic. The second network, once trained, produces caustic-free images. Because ground truth for caustics is extremely difficult to obtain, they trained on synthetic data and then transferred the learning to real data. Among the few solutions that have been suggested, this was the first to reformulate the challenging caustic-removal problem and approach it as a classification and learning problem. Agrafiotis et al. [39] proposed and tested a novel solution based on two small, easily trainable CNNs [49]. They showed how to train a network using a small set of synthetic data and then transfer the learning to real data with robustness to within-class variation. The solution produces caustic-free images that can be used for further tasks.
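The ICP registration step can be sketched as point-to-point ICP in Python with NumPy: alternate between matching each point to its nearest neighbor in the other cloud and solving for the best rigid transform with the SVD-based (Kabsch) solution. This is a generic textbook variant, not the specific pipeline of Swirski and Schechner; the function names are hypothetical, and the single rigid transform it estimates embodies exactly the scene-rigidity assumption noted in the text. Nearest neighbors are found by brute force, which is only practical for small clouds.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with R @ src_i + t ~= dst_i
    (Kabsch / SVD solution for known point correspondences)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))          # avoid returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c

def icp(src, dst, iterations=30, tol=1e-10):
    """Point-to-point ICP: alternate nearest-neighbor matching and rigid fitting.
    Returns the accumulated R, t that map src onto dst."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force nearest neighbors, O(N*M); a k-d tree would be used at scale.
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(current)), nn].mean())
        if abs(prev_err - err) < tol:
            break                                   # matching error no longer changes
        prev_err = err
        R, t = best_rigid_transform(current, dst[nn])
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total

# Synthetic check: a grid-shaped point cloud and a slightly moved copy of it.
xs, ys, zs = np.meshgrid(np.arange(5.0), np.arange(4.0), np.arange(3.0), indexing="ij")
src = np.stack([xs.ravel(), ys.ravel(), zs.ravel()], axis=1)
angle = np.deg2rad(3.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.1, -0.05, 0.02])
dst = src @ R_true.T + t_true
R_est, t_est = icp(src, dst)
```

Because every grid point moves by less than half the grid spacing in this example, the first round of nearest-neighbor matches is already correct and the loop converges immediately; with larger motions ICP can settle in a local minimum, which is why it needs a reasonable initial alignment.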

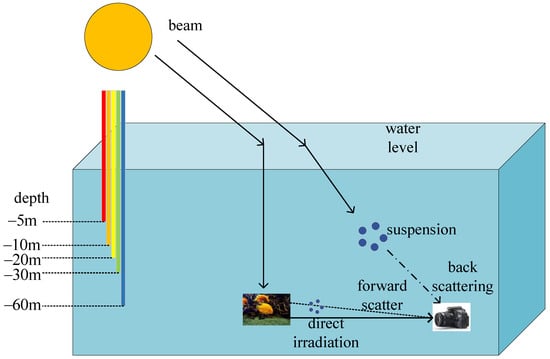

3.1.2. Absorption or Scattering Effects

Water absorbs and scatters light as it travels through it, and different types of water absorb different wavelengths differently. The underwater imaging process is shown in Figure 7. Red light diminishes rapidly and vanishes at a depth of around 5 m. Green and blue light fade more gradually, with blue light disappearing at a depth of roughly 60 m. Because light is scattered by suspended matter and other media, it changes direction during transmission and disperses unevenly. The characteristics of the medium, the light and its polarization all affect the scattering process [38]. Therefore, underwater images and videos typically have a blue-green color cast with obvious haze. Figure 8 shows some low-quality underwater images. The image on the left has an obvious color cast and appears green overall. The image on the right shows the haze that is common in underwater images.

Figure 7.

Underwater imaging model.

Figure 8.

Typical underwater images.
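The wavelength-dependent loss and the haze described above are often summarized with a simplified image-formation model, I = J·t + B·(1 − t) with t = exp(−β·d) per color channel, where J is the true scene color, B the veiling (background) light, β the attenuation coefficient and d the distance traveled through water. The Python sketch below applies that model. It is a simplification (it ignores forward scattering and artificial lighting, for instance), and the values in `BETA` and `BACKLIGHT` are hypothetical numbers chosen only to reproduce the qualitative behavior, not measured values for any water type.

```python
import numpy as np

# Hypothetical, illustrative attenuation coefficients in 1/m for (R, G, B).
# Real coefficients depend strongly on water type and must be measured or estimated.
BETA = np.array([0.60, 0.10, 0.07])
# Hypothetical veiling light (background color) with a blue-green tint.
BACKLIGHT = np.array([0.05, 0.35, 0.45])

def transmission(distance_m, beta=BETA):
    """Beer-Lambert transmission t = exp(-beta * d), one value per color channel.
    distance_m may be a scalar or an (H, W) range map; the result gains a trailing axis of 3."""
    d = np.asarray(distance_m, dtype=float)[..., None]
    return np.exp(-beta * d)

def degrade(scene_rgb, distance_m, beta=BETA, backlight=BACKLIGHT):
    """Simplified underwater image formation I = J * t + B * (1 - t):
    the attenuated direct signal plus the scattered veiling light."""
    t = transmission(distance_m, beta)
    return np.asarray(scene_rgb, dtype=float) * t + backlight * (1.0 - t)

# A white patch seen through 1 m, 5 m and 10 m of water: red is lost first.
white = np.ones(3)
for d in (1.0, 5.0, 10.0):
    print(f"{d:4.1f} m -> RGB {np.round(degrade(white, d), 3)}")
```

As the path length grows, the direct term fades fastest in the red channel and the result is pulled toward the blue-green veiling light, which is the cast visible in the left image of Figure 8.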

Low-quality images impair the subsequent vision-processing tasks of 3D reconstruction. In practice, applications such as underwater archaeology, biological research and sample collection are greatly hampered by the poor quality of underwater images [50]. The underwater environment violates the brightness-constancy constraint assumed by terrestrial techniques, so transferring land-based reconstruction methods to the underwater domain remains challenging. The most advanced underwater 3D reconstruction approaches use a physical model of underwater light propagation to account for the distance-dependent effects of scattering and attenuation. However, these methods require careful calibration of the attenuation coefficients of the physical model or rely on rough estimates of these coefficients from earlier laboratory experiments.

The current mainstream approach to underwater 3D reconstruction is to enhance the original underwater images before reconstruction, restoring them and potentially improving the quality of the resulting 3D point cloud [51]. Obtaining underwater color images that are as accurate and realistic as possible has therefore become a very challenging problem and, at the same time, a promising research field, because color distortion in underwater images affects image-based 3D reconstruction and scene-mapping techniques [52].

To solve these problems, the literature on underwater image processing describes two different approaches. The first is underwater image restoration, whose purpose is to reconstruct or restore images degraded by unfavorable factors in complex underwater environments, such as relative motion between camera and object, underwater scattering, turbulence, distortion, spectral absorption and attenuation [53]. This rigorous approach tries to recover the true colors and corrects the image using an appropriate model. The second approach uses underwater image-enhancement techniques based on qualitative criteria [54,55]. It processes degraded underwater photographs computationally, turning the initial low-quality images into high-quality ones [56]. Enhancement effectively addresses problems of raw underwater images and videos such as color bias, low contrast and haze [57]. Enhanced images improve visual perception, which in turn facilitates subsequent visual tasks. Image-enhancement techniques do not take the image-formation process into account and do not require a priori knowledge of environmental factors [52]. Recent developments in machine learning and deep learning have enabled new and better methods in both approaches [22,58,59,60,61,62,63]. As underwater color restoration and enhancement technologies develop, researchers in underwater 3D reconstruction face the challenge of applying them to the 3D reconstruction of underwater images.
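Restoration methods that rely on such a physical model essentially invert it, which is why they need the attenuation coefficients. Below is a minimal Python sketch, assuming the simplified model I = J·t + B·(1 − t) with t = exp(−β·d), a known camera-to-object range d and known β and B (all values here are hypothetical). With the correct coefficients the scene color is recovered exactly; with a mis-estimated red coefficient the result is visibly wrong.

```python
import numpy as np

def restore(observed_rgb, distance_m, beta, backlight, t_min=0.05):
    """Invert the simplified model I = J * t + B * (1 - t) for the scene color J.

    beta       : per-channel attenuation coefficients (1/m), assumed known
    backlight  : per-channel veiling light B, assumed known
    distance_m : scalar or (H, W) camera-to-object range in meters
    t is clipped from below (t_min) so that very distant pixels do not amplify noise without bound.
    """
    beta = np.asarray(beta, dtype=float)
    backlight = np.asarray(backlight, dtype=float)
    t = np.exp(-beta * np.asarray(distance_m, dtype=float)[..., None])
    t = np.maximum(t, t_min)
    restored = (np.asarray(observed_rgb, dtype=float) - backlight * (1.0 - t)) / t
    return np.clip(restored, 0.0, 1.0)

# Round trip with hypothetical coefficients: synthesize an observation, then invert it.
beta = np.array([0.60, 0.10, 0.07])
backlight = np.array([0.05, 0.35, 0.45])
true_color = np.array([0.8, 0.5, 0.3])
t = np.exp(-beta * 3.0)
observed = true_color * t + backlight * (1.0 - t)

recovered = restore(observed, 3.0, beta, backlight)
# A mis-estimated red coefficient shows why careful calibration matters.
recovered_bad = restore(observed, 3.0, [0.45, 0.10, 0.07], backlight)
print(np.round(recovered, 3), np.round(recovered_bad, 3))
```

In practice d comes from the reconstruction itself (or from stereo), and β and B must be calibrated or estimated from the images, which is exactly the dependency the text points out.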
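By contrast, enhancement methods operate on the pixels directly. As one classic, model-free example (chosen here for illustration; it is not a method from the cited works), the Python sketch below applies gray-world white balance to remove the color cast and a per-channel percentile stretch to raise contrast. The synthetic test image and all parameter values are assumptions.

```python
import numpy as np

def gray_world(image):
    """Gray-world white balance: scale each channel so that its mean equals
    the mean over all channels. No model of light propagation is used."""
    img = np.asarray(image, dtype=float)
    channel_means = img.reshape(-1, img.shape[-1]).mean(axis=0)
    gains = channel_means.mean() / np.maximum(channel_means, 1e-6)
    return np.clip(img * gains, 0.0, 1.0)

def stretch_contrast(image, low_pct=1.0, high_pct=99.0):
    """Per-channel percentile stretch of intensities to [0, 1] (counters low contrast and haze)."""
    img = np.asarray(image, dtype=float)
    out = np.empty_like(img)
    for c in range(img.shape[-1]):
        lo, hi = np.percentile(img[..., c], [low_pct, high_pct])
        out[..., c] = np.clip((img[..., c] - lo) / max(hi - lo, 1e-6), 0.0, 1.0)
    return out

def enhance(image):
    """Color correction followed by contrast stretching."""
    return stretch_contrast(gray_world(image))

# Synthetic blue-green image: a neutral texture multiplied by a color cast.
rng = np.random.default_rng(0)
texture = rng.uniform(0.2, 0.6, size=(64, 64, 1))
cast = np.array([0.4, 0.9, 1.0])
underwater = texture * cast
balanced = gray_world(underwater)
enhanced = enhance(underwater)
```

Because the gray-world step assumes that the scene averages to neutral gray, it can over-correct scenes dominated by a single color, such as large patches of green vegetation.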

3.2. Underwater Camera Calibration

In underwater photogrammetry, the first aspect to consider is camera calibration; while this is a routine task in air, it is not easy to implement underwater. Underwater camera calibration involves more uncertainty than in-air calibration because of light attenuation through the housing port and the water, as well as small potential deviations of the refracted light path caused by modeling assumptions or nonuniformity of the medium. Therefore, compared with equivalent calibrations in air, underwater calibrations typically have lower accuracy and precision. Experience has shown that, because of these influences, underwater calibration is more likely to result in scale inaccuracies in the measurements [64].

Malte Pedersen et al. [65] compared three methods for the 3D reconstruction of underwater objects: a method relying only on in-air camera calibration, an underwater camera calibration method and a method based on Snell's law with ray tracing. The in-air calibration was the least accurate because it does not account for refraction. Therefore, the camera needs to be calibrated underwater.

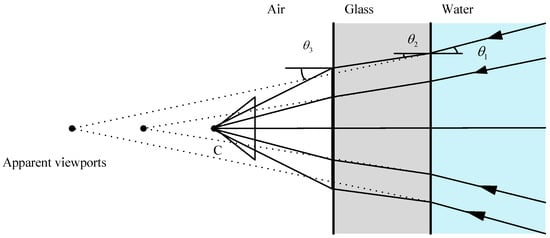

As noted in the discussion of the particularities of the underwater environment, refraction at the air–glass–water interface causes large image distortion, which must be considered when calibrating the camera [66]. This refraction is caused by the difference in refractive index between the media. As shown in Figure 9, the incoming light beam is bent as it passes through the interfaces, altering the optical path.

Figure 9.

Refraction caused by the air–glass (acrylic)–water interface.

Depending on their angle of incidence, the refracted rays (dashed lines), when extended back into the air, intersect at several points, each representing a different viewpoint. Because of refraction, the object point in the water, the projection center of the camera and the image point are no longer collinear [67], making the imaged scene appear wider than the actual scene. The distortion caused by a flat interface depends on a pixel's distance from the image center and increases with that distance. Variations in pressure, temperature and salinity can change the refractive index of water and can even affect the camera system, thereby altering the calibration parameters [68]. As a result, the object-plane coordinates and the image-plane coordinates no longer match.

The refraction problem is mainly addressed in two ways:
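The loss of a single projection center can be checked numerically. The following Python sketch traces rays through a flat port in a 2-D cross-section, assuming a camera projection center at z = 0, a port perpendicular to the optical axis at a hypothetical distance of 5 cm, approximate refractive indices, and thin glass (a parallel glass plate shifts a ray sideways but leaves its final direction in water unchanged, so it is ignored). Extending each water-side ray back to the optical axis gives a different virtual center for each pixel angle, and the bending grows with the angle, consistent with distortion increasing away from the image center.

```python
import numpy as np

N_AIR, N_WATER = 1.000, 1.333   # approximate refractive indices

def refract_angle(theta_in, n1, n2):
    """Snell's law n1 * sin(theta_in) = n2 * sin(theta_out); angles in radians."""
    return np.arcsin(n1 * np.sin(theta_in) / n2)

def virtual_center(theta_air, port_distance):
    """z-coordinate where the back-extended water ray crosses the optical axis,
    for a camera at z = 0 behind a thin flat port at z = port_distance."""
    theta_water = refract_angle(theta_air, N_AIR, N_WATER)
    x_hit = port_distance * np.tan(theta_air)      # where the ray meets the port
    return port_distance - x_hit / np.tan(theta_water)

d = 0.05  # hypothetical 5 cm from projection center to port
angles = np.deg2rad([5.0, 15.0, 25.0, 35.0])
centers = virtual_center(angles, d)
bending = np.rad2deg(angles - refract_angle(angles, N_AIR, N_WATER))
for a, z, b in zip(np.rad2deg(angles), centers, bending):
    print(f"pixel ray {a:4.1f} deg: virtual center z = {z * 1000:7.2f} mm, bending {b:4.2f} deg")
```

If all rays shared one virtual center, a pinhole model with adjusted intrinsics would suffice; the spread of centers is why flat-port housings call for refraction-aware models.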

- (1) Developing new calibration methods with refraction-correction capability. Gu et al. [69] proposed an effective medium-driven approach to underwater camera calibration that can precisely calibrate the underwater camera parameters, such as the orientation and position of the transparent glass. To construct the geometric constraints and compute initial values of the underwater camera parameters, the calibration data are obtained from the optical-path variations created by refraction between the different media. In addition, they proposed a quaternion-based parameter-optimization method to improve the calibration accuracy of underwater camera systems.

- (2) Improving existing algorithms to reduce the refraction error. For example, Du et al. [70] built a dataset of real underwater camera calibration images to improve the accuracy of underwater camera calibration. The results of conventional calibration methods are optimized using a slime mold optimization algorithm combined with optimal neighborhood perturbation and reverse learning. The precision and effectiveness of the proposed algorithm were verified by comparison with the seagull optimization algorithm (SOA) and particle swarm optimization (PSO).

Other researchers have proposed different methods, such as modifying the collinearity equation. Others have proposed eliminating refraction effects with corrective lenses or dome ports (dome-ported pressure housings), which provide a near-perfect central projection underwater [71]. For the corrective-lens method to work, the entrance pupil of the camera lens must be aligned with the center of curvature of the corrective lens, which presupposes that the camera is a perfect central projection. In general, comprehensive calibration is essential to ensure the accuracy of the final results. For cameras with misaligned domes or flat ports, traditional distortion-model adjustments are not sufficient, and complete physical models must be used [72], taking the glass thickness into account as in [67,73].

Other authors have accounted for refraction explicitly by using a refractive camera model; for example, a simplified refractive camera model was adopted in [28].

This section has introduced the two main scientific problems arising from the special engineering conditions of the underwater environment, namely, underwater image degradation and underwater camera calibration, together with existing solutions to both. The next section introduces optical methods for underwater 3D reconstruction, which use optical sensors to acquire images of underwater objects or scenes for reconstruction.

4. Optical Methods

Optical sensing devices can be divided into active and passive sensors according to how they interact with the medium. Active sensors project light or another signal into the environment in order to enhance or measure the collected data. Structured light is an example of an active system, in which a pattern is projected onto an object for 3D reconstruction [74]. Passive approaches perceive the environment without changing or altering the scene. Structure from motion, photometric stereo, stereo vision and underwater photogrammetry acquire information by sensing the environment as it is, and are therefore passive methods.

This section describes in detail the optical sensing techniques used for underwater 3D reconstruction and their applications, covering structure from motion, structured light, photometric stereo, stereo vision and underwater photogrammetry.

4.1. Structure from Motion

Structure from motion (SfM) is an efficient approach to 3D reconstruction from multiple images. It originated with the pioneering paper of Longuet-Higgins [75]. SfM is a triangulation method in which a monocular camera captures photographs of a subject or scene. Image features are extracted from these images and matched [76] between successive frames to determine the relative camera motion and, thus, the camera’s 3D trajectory. To ensure the accuracy of the final results, a calibrated camera is first assumed, in which the principal point, calibration parameters, lens distortion and refraction parameters are known.

Given $a$ images of $b$ fixed 3D points, the $a$ projection matrices $P_i$ and the $b$ 3D points $X_j$ can be estimated from the $a \cdot b$ correspondences $x_{ij}$:

$$x_{ij} = P_i X_j, \qquad i = 1, \dots, a, \quad j = 1, \dots, b \tag{1}$$

However, if the entire scene is scaled by a factor of $m$ and, at the same time, the projection matrices are scaled by a factor of $1/m$, the projections of the scene points remain the same:

$$x = PX = \left(\frac{1}{m}P\right)(mX) \tag{2}$$

Therefore, the absolute scale cannot be recovered with SfM alone.

The family of solutions parametrized by $\lambda$ is:

$$X(\lambda) = P^{+}x + \lambda n \tag{3}$$

where $P^{+}$ is the pseudo-inverse of $P$ (i.e., $PP^{+} = I$) and $n$ is its null vector, namely, the camera center, defined by $Pn = 0$.
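Both ambiguities can be verified numerically. The NumPy sketch below uses an arbitrary, assumed intrinsic matrix `K` and pose; it shows (a) that scaling the scene and the camera translation by the same factor $m$ leaves every pixel unchanged, and (b) that every point $X(\lambda) = P^{+}x + \lambda n$ projects to the same image point $x$, with the null vector $n$ giving the camera center.

```python
import numpy as np


def project(K, R, t, X):
    """Project Nx3 world points X with a pinhole camera K[R|t]; returns Nx2 pixels."""
    Xc = X @ R.T + t                # world -> camera coordinates
    x = Xc @ K.T                    # camera -> homogeneous pixel coordinates
    return x[:, :2] / x[:, 2:3]     # perspective division


def backprojection_family(P, x, lambdas):
    """Points X(lambda) = P^+ x + lambda * n, all of which project to x."""
    P_pinv = np.linalg.pinv(P)      # 4x3 pseudo-inverse, P @ P_pinv = I
    n = np.linalg.svd(P)[2][-1]     # right null vector: P @ n = 0
    return [P_pinv @ x + lam * n for lam in lambdas], n


rng = np.random.default_rng(0)
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.1, -0.2, 0.5])
X = rng.uniform(-1.0, 1.0, size=(20, 3)) + np.array([0.0, 0.0, 5.0])

# (a) Scale ambiguity: scale the scene and the camera translation by m.
m = 3.7
x_orig = project(K, R, t, X)
x_scaled = project(K, R, m * t, m * X)
print("max pixel difference after scaling:", np.abs(x_orig - x_scaled).max())

# (b) A whole ray of points maps to one pixel; the null vector is the camera centre.
P = K @ np.hstack([R, t[:, None]])
x_h = P @ np.append(X[0], 1.0)
family, n = backprojection_family(P, x_h, [-2.0, 0.5, 10.0])
print("camera centre from null vector:", n[:3] / n[3])
```

In practice, the missing scale is recovered from external information such as a scale bar, a stereo baseline or navigation data.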

SfM is the most economical method and is easy to deploy on a robot: it needs only a camera or video recorder that can capture still images or video, with enough storage to hold all the images. The core automated tasks in SfM are feature-point detection, description and matching; once these are done, the required 3D model can be obtained. Frequently used feature detectors include speeded-up robust features (SURF) [77], the scale-invariant feature transform (SIFT) [78] and the Harris corner detector. These feature detectors are spatially invariant. Nevertheless, they do not give high-quality results when the images are significantly degraded, as underwater images are: suspended particles in the water, light absorption and light refraction blur the images and add noise. To compare Harris and SIFT features, Meline et al. [79] used a 1280 × 720 px camera in shallow water to obtain matching points robust enough to reconstruct 3D underwater archaeological objects. They reconstructed a bust and concluded that the Harris method obtained more robust points from the images than SIFT, although the SIFT points could not be ignored either. Compared with Harris, SIFT is weak against speckle noise, and Harris yields better inlier counts in diverse scenes.
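For reference, a minimal NumPy sketch of the Harris corner response is shown below. It computes $R = \det(M) - k\,\mathrm{trace}(M)^2$ from the structure tensor $M$ of the image gradients; the box window (instead of the usual Gaussian weighting), $k = 0.04$ and the synthetic test image are illustrative assumptions, not the settings used in [79].

```python
import numpy as np


def window_sum(a: np.ndarray, r: int) -> np.ndarray:
    """Sum of `a` over a (2r+1) x (2r+1) box around each pixel (zero padding)."""
    h, w = a.shape
    p = np.pad(a, r)
    out = np.zeros_like(a)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out


def harris_response(img: np.ndarray, k: float = 0.04, r: int = 1) -> np.ndarray:
    """Harris corner response R = det(M) - k * trace(M)^2 for every pixel."""
    Iy, Ix = np.gradient(img.astype(float))   # derivatives along rows (y) and columns (x)
    Sxx = window_sum(Ix * Ix, r)              # entries of the structure tensor M
    Syy = window_sum(Iy * Iy, r)
    Sxy = window_sum(Ix * Iy, r)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2


if __name__ == "__main__":
    img = np.zeros((32, 32))
    img[8:24, 8:24] = 1.0                     # bright square: 4 corners, 4 edges
    R = harris_response(img)
    print("strongest corner at", np.unravel_index(np.argmax(R), R.shape))
```

Corners give a large positive response, edges a negative one and flat regions roughly zero; blur and speckle noise in underwater images weaken exactly these gradient statistics.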

SfM systems compute the camera poses and scene structure from a set of images [80] and fall into two main types: incremental SfM and global SfM. Incremental SfM [81,82] uses SIFT to match the first two input images. These correspondences are then used to estimate the pose of the second camera relative to the first. Once the poses of the two cameras are known, a sparse set of 3D points is triangulated. Although RANSAC is often used to estimate the relative pose robustly, outliers still need to be found and eliminated once the points have been triangulated. The two-view reconstruction is then optimized by bundle adjustment [83]. After the reconstruction is initialized, further views are added one at a time by matching features between the last view in the reconstruction and the new view.
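Once two camera poses are known, the triangulation step can be carried out in several ways. A common textbook choice, sketched below under the assumption of known 3×4 projection matrices and noise-free pixels, is linear (DLT) triangulation: each view contributes two linear equations in the homogeneous point, and the solution is the right singular vector associated with the smallest singular value. The pipelines cited in [81,82] may use different triangulation methods.

```python
import numpy as np


def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two views.

    P1, P2: 3x4 projection matrices; x1, x2: pixel coordinates (u, v).
    Each view contributes two rows of A with A @ X = 0 for homogeneous X.
    """
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]                 # right singular vector of the smallest singular value
    return X[:3] / X[3]        # back to Euclidean coordinates


def project(P, X):
    """Project a single 3D point with a 3x4 matrix P; returns (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


if __name__ == "__main__":
    K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
    P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])                  # first camera at origin
    P2 = K @ np.hstack([np.eye(3), np.array([[-0.5], [0.0], [0.0]])])  # assumed 0.5 baseline along x
    X_true = np.array([0.3, -0.2, 4.0])
    print(triangulate_dlt(P1, P2, project(P1, X_true), project(P2, X_true)))
```

With noisy matches the linear estimate is usually refined afterwards, which is one role of the bundle adjustment step described above.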

Because the last reconstructed view already has associated 3D points, these matches immediately yield 2D–3D correspondences for the new view, whose camera pose can therefore be determined by solving the absolute pose problem. Sequential reconstruction of this kind can be robust and accurate. However, with repeated registration and triangulation, the accumulated error keeps growing, which may lead to scene drift [84]. Additionally, repeatedly solving nonlinear bundle adjustment problems makes the method slow. Global SfM emerged to address these problems. In global SfM, the correspondences between all input image pairs are computed, so the input images do not need to be ordered [85]. Global pipelines typically proceed in three steps. The first step computes the relative rotations of all image pairs from the epipolar geometry and builds a view graph whose vertices represent the cameras and whose edges represent the epipolar geometric constraints. The second step performs rotation averaging [86] and translation averaging [87], which solve for the camera orientations and positions, respectively. The final step is bundle adjustment, which minimizes the reprojection errors to refine the scene structure and camera poses. Compared with incremental SfM, the global method avoids cumulative errors and is more efficient; its disadvantage is that it is less robust to outliers.
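Multiple rotation averaging over a whole view graph [86] is beyond a short example, but its basic building block, finding the consensus of several estimates of one rotation, can be sketched. The NumPy code below computes the chordal L2 mean, i.e., the arithmetic mean of the rotation matrices projected back onto SO(3) with an SVD. This is one standard choice among several; robust alternatives such as L1 averaging cope better with outliers.

```python
import numpy as np


def rot_z(angle):
    """Rotation by `angle` radians about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def project_to_so3(M):
    """Closest rotation to M in the Frobenius norm (SVD projection)."""
    U, _, Vt = np.linalg.svd(M)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(U @ Vt))])  # enforce det = +1
    return U @ D @ Vt


def chordal_l2_mean(rotations):
    """Chordal L2 mean: average the matrices, then project back onto SO(3)."""
    return project_to_so3(np.mean(np.stack(rotations), axis=0))


if __name__ == "__main__":
    estimates = [rot_z(0.1), rot_z(0.5), rot_z(0.9)]   # three estimates of one rotation
    print(np.round(chordal_l2_mean(estimates), 4))
```

The plain average of rotation matrices is generally not a rotation, which is why the projection step is needed.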

SfM has been shown to be an effective method for 3D reconstruction under the good imaging conditions found on land [88]. In underwater environments, SfM-based 3D reconstruction is fast, easy to use and versatile, but it also has many limitations. Underwater, both feature detection and matching suffer from problems such as light diffusion, uneven lighting and sun glints, which make it harder to detect the same feature from different viewpoints. Depending on the distance between the camera and a 3D point, the absorption and scattering components change, altering the color and clarity of particular features in the image. If the underwater scene is photographed from the air, further difficulties arise, such as refraction at the water surface [89].

Therefore, underwater SfM must take the special underwater imaging conditions into account. Sedlazeck et al. [90] proposed computationally segmenting underwater images so that erroneous 2D correspondences can be identified and eliminated. To remove the green or blue tint, they performed color correction using a physical model of underwater light propagation. Features were then selected with an image-gradient-based Harris corner detector, and the outliers remaining after feature matching were filtered with RANSAC [91]. The algorithm is essentially a classical incremental SfM method adapted to special imaging conditions. However, incremental SfM may suffer from scene drift. Therefore, Pizarro et al. [92] used a local-to-global SfM approach, aided by onboard navigation sensors, to generate 3D submaps. They used a modified Harris corner detector with generalized color moments as descriptors, and combined RANSAC with the six-point algorithm to estimate the fundamental matrix robustly before decomposing it into motion parameters. Finally, the poses were optimized by minimizing the reprojection errors of all matches classified as inliers.
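RANSAC outlier filtering follows a hypothesize-and-verify loop. To keep the example short, the sketch below applies that loop to fitting a 2D line rather than a fundamental matrix, which is a deliberate simplification: it repeatedly fits a model to a random minimal sample, counts the points within a distance threshold, keeps the largest consensus set and finally refits on those inliers. The threshold and iteration count are illustrative assumptions.

```python
import numpy as np


def ransac_line(points, n_iters=200, threshold=0.05, seed=None):
    """Robustly fit a 2D line with RANSAC, then refine it on the inliers.

    Returns (point_on_line, unit_direction, inlier_mask).
    """
    rng = np.random.default_rng(seed)
    best = np.zeros(len(points), dtype=bool)
    for _ in range(n_iters):
        i, j = rng.choice(len(points), size=2, replace=False)  # minimal sample
        d = points[j] - points[i]
        length = np.hypot(d[0], d[1])
        if length == 0.0:
            continue
        normal = np.array([-d[1], d[0]]) / length
        dist = np.abs((points - points[i]) @ normal)   # point-to-line distances
        inliers = dist < threshold
        if inliers.sum() > best.sum():                 # keep the largest consensus set
            best = inliers
    # Total-least-squares refit on the consensus set.
    inlier_pts = points[best]
    centroid = inlier_pts.mean(axis=0)
    _, _, Vt = np.linalg.svd(inlier_pts - centroid)
    return centroid, Vt[0], best


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.uniform(-2.0, 2.0, 50)
    good = np.column_stack([x, 2.0 * x + 1.0 + rng.normal(0.0, 0.01, 50)])
    bad = rng.uniform(-5.0, 5.0, size=(20, 2))          # gross outliers
    centroid, direction, mask = ransac_line(np.vstack([good, bad]), seed=1)
    print(direction, mask.sum())
```

For two-view geometry, the minimal sample would instead be the point correspondences needed by the chosen solver, and the distance would be an epipolar or reprojection error.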

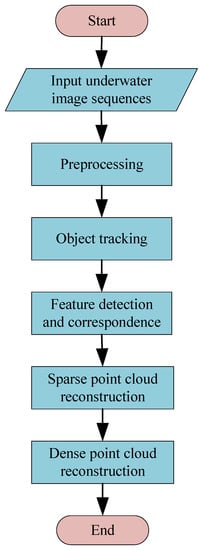

With the development of underwater robots, some authors have used ROVs and AUVs to image underwater objects from multiple angles and have reconstructed the objects from continuous video streams. Xu et al. [93] combined SfM with an object-tracking strategy to explore a new approach to underwater 3D object reconstruction from continuous video streams. A brief flowchart of their SfM-based reconstruction is shown in Figure 10. First, a particle filter was applied to enhance the images so that clearer images could be obtained for target tracking. SIFT and RANSAC were used to recognize and track object features. On this basis, a 3D point-cloud reconstruction method combining SfM with patch-based multi-view stereo (PMVS) was proposed, which consistently improved the performance of multi-view 3D object reconstruction from underwater video streams. Chen et al. [94] proposed a clustering-based adaptive-threshold keyframe-extraction algorithm, which extracts keyframes from video streams to serve as the image sequence for SfM. They used global SfM to reconstruct the scene and proposed a faster rotation-averaging method, least trimmed squares rotation averaging (LTS-RA), based on least trimmed squares (LTS) and L1 rotation averaging (L1RA). This method reduced the computation time by 19.97%, and transmitting the dense point cloud instead of the video stream reduced the transmission cost by around 70%.

Figure 10.

Flow chart of underwater 3D object reconstruction based on SfM.

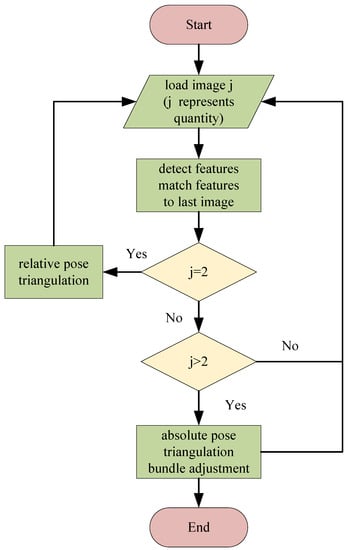

In addition, because water, glass and air have different refractive indices, light entering the camera housing is refracted twice, once at the water–glass interface and once at the glass–air interface. In 3D reconstruction, this refraction causes geometric deformation, so it must be taken into account underwater. Sedlazeck and Koch [95] studied the calibration of housing parameters for underwater stereo camera setups. They developed a refractive structure-from-motion algorithm, a system for computing camera paths and 3D points with a new pose-estimation method. They also introduced the Gauss–Helmert model [96] for nonlinear optimization, especially bundle adjustment. Both iterative and nonlinear optimization are used within the RANSAC framework. Their refractive SfM (RSfM) improved on the results of general SfM with a perspective camera model. A typical RSfM reconstruction system is shown in Figure 11, where j denotes the number of images. First, features in two images are detected and matched, and the pose of the second camera relative to the first is computed. Next, triangulation is performed using the 2D–2D correspondences and camera poses. This yields the 2D–3D correspondences of the next image, so its absolute pose relative to the 3D points can be calculated. After new images are added and new points are triangulated, nonlinear optimization is applied to the whole scene.

Figure 11.

Typical RSfM reconstruction system.

Building on Sedlazeck [90], Kang et al. [97] proposed two new concepts for the refractive camera model, namely, the ellipse of refraction (EoR) and the refractive depth (RD) of scene points. They also proposed a new mixed majorization framework for two-view underwater SfM. Compared with Sedlazeck [90], their algorithm allows more commonly used camera configurations and can efficiently minimize reprojection errors in image space. On this basis, in [28] they derived two new formulations of the underwater known-rotation structure-and-motion problem: one provides a globally optimal solution and the other is robust to outliers. The known-rotation constraint is further extended by incorporating a robust known-rotation SfM into a new mixed majorization framework. As a result, the method can perform underwater camera calibration and 3D reconstruction simultaneously and automatically, without any calibration objects or additional calibration devices, which significantly improves the precision of the reconstructed 3D structures and of the estimated system parameters.

Jordt et al. [27] combined refractive SfM and a refractive plane-sweep algorithm into a complete system for the refractive reconstruction of larger scenes by improving the nonlinear optimization. This was the first work to propose, implement and evaluate a complete, scalable 3D reconstruction system for deep-sea flat-port cameras. Parvathi et al. [98] considered only refraction across medium boundaries, which can cause geometric changes that result in incorrect correspondence matches between images. Their method applies only to images acquired by a camera above the water surface, not to images from underwater cameras, since it ignores possible refraction at the glass–water interface. They proposed a refractive reconstruction model to compensate for refraction errors, assuming that the deflection of light rays takes place at the camera center. First, the correction parameters were modelled; then the fundamental matrix was estimated from the corrected coordinates to build a multi-view geometric reconstruction.

Chadebecq et al. [99] derived a new four-view constraint formulation from refractive geometry and proposed a new RSfM pipeline. The method relies on a refractive fundamental matrix derived from a generalized epipolar constraint, used together with a refractive reprojection constraint, to refine the initial estimate of the relative camera poses obtained with an adapted pinhole model with lens distortion. On this basis, they extended the previous work in [29]: using the refractive camera model, they gave a concise derivation and expression of the refractive fundamental matrix and, from it, further developed the earlier theoretical derivation of two-view geometry with fixed refractive planes.

Qiao et al. [100] proposed a ray-tracing-based modelling approach for camera systems that accounts for refraction. The approach includes camera system modelling, camera housing calibration, camera system pose estimation and geometric reconstruction. To achieve accurate modelling, they also proposed a camera housing calibration method based on the back-projection error. Building on the modelled camera system, they proposed a pose-estimation method for geometric reconstruction, and the final 3D reconstruction was obtained by triangulation. Traditional SfM methods can deform the reconstructed building, whereas their RSfM method effectively reduces refractive distortion and improves the final reconstruction accuracy.
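The ray-tracing idea behind refractive camera models can be illustrated with a short back-projection sketch. Assuming a flat port parallel to the image plane at a distance `port_dist` from the camera center, with an assumed glass `thickness` and assumed refractive indices, the code below traces the ray of one pixel through air, glass and water with the vector form of Snell’s law and returns the ray’s origin and direction in water. It is not the housing model of [100], which also estimates the housing parameters.

```python
import numpy as np


def refract_dir(d, normal, n1, n2):
    """Vector form of Snell's law.

    d: unit direction of the incident ray; normal: unit surface normal pointing
    back toward the incident side (normal @ d < 0); n1 -> n2 refractive indices.
    """
    eta = n1 / n2
    cos_i = -normal @ d
    sin2_t = eta ** 2 * (1.0 - cos_i ** 2)
    if sin2_t > 1.0:
        raise ValueError("total internal reflection")
    return eta * d + (eta * cos_i - np.sqrt(1.0 - sin2_t)) * normal


def backproject_flat_port(pixel, K, port_dist, thickness,
                          n_air=1.0, n_glass=1.49, n_water=1.333):
    """Trace the ray of `pixel` through a flat port parallel to the image plane.

    Returns (origin, direction) of the ray in water, in camera coordinates.
    """
    u, v = pixel
    d_air = np.linalg.solve(K, np.array([u, v, 1.0]))
    d_air /= np.linalg.norm(d_air)
    normal = np.array([0.0, 0.0, -1.0])                      # port normal, facing the camera
    p_inner = d_air * (port_dist / d_air[2])                 # hit inner surface z = port_dist
    d_glass = refract_dir(d_air, normal, n_air, n_glass)
    p_outer = p_inner + d_glass * (thickness / d_glass[2])   # exit at z = port_dist + thickness
    d_water = refract_dir(d_glass, normal, n_glass, n_water)
    return p_outer, d_water


if __name__ == "__main__":
    K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
    origin, direction = backproject_flat_port((700.0, 500.0), K, port_dist=0.05, thickness=0.01)
    print(origin, direction)
```

Extending an off-axis water ray back to the plane z = 0 generally misses the camera center, which is the loss of a single viewpoint illustrated in Figure 9; refractive SfM triangulates with these traced rays instead of pinhole rays.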

Ichimaru et al. [101] proposed a technique that estimates all unknown parameters of unified underwater SfM, such as the transformations of the camera and the refractive interface and the shape of the underwater scene, using an extended bundle-adjustment technique. Depending on the capture settings, several types of constraints are used in their optimization-based reconstruction, together with an initialization procedure. Furthermore, since most techniques assume that the refractive interface is planar, they relaxed this assumption with soft constraints so that the technique can be applied to natural water surfaces. Jeon and Lee [102] proposed using visual simultaneous localization and mapping (SLAM) to localize the vehicle and map the surrounding environment. The orientations estimated by SLAM improve the quality of 3D reconstruction and the computational efficiency of SfM, increasing the number of points in the point cloud while reducing the processing time.

In underwater environments, SfM is widely used for 3D reconstruction because of its speed, ease of use and versatility. Table 2 lists different SfM solutions, comparing mainly their feature points, matching methods and main contributions.

Table 2.

Summary of SfM 3D reconstruction solutions.

4.2. Photometric Stereo

Photometric stereo [103] is a commonly used optical 3D reconstruction approach whose advantage is high-resolution, finely detailed reconstruction even in weakly textured regions. It requires several images taken under different lighting conditions: the position of the light source is changed while the camera and the objects are kept fixed, and 3D information is recovered from the resulting images. Photometric stereo has been well studied in air and can generate high-quality geometric data with fine detail, but its performance degrades significantly underwater because of phenomena such as light scattering, refraction and energy attenuation [104].
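For a Lambertian surface lit by distant point sources, each pixel intensity is $I_k = \rho\,(\mathbf{l}_k \cdot \mathbf{n})$, so with three or more known light directions the scaled normal $\rho\mathbf{n}$ follows from a linear least-squares solve. The NumPy sketch below implements this classical in-air formulation; it assumes known unit light directions and no shadows, and it ignores the scattering, refraction and attenuation effects that the underwater methods discussed next are designed to handle.

```python
import numpy as np


def photometric_stereo(I, L):
    """Lambertian photometric stereo.

    I: (k, h, w) images under k distant lights; L: (k, 3) unit light directions.
    Returns albedo (h, w) and unit normals (h, w, 3).
    """
    k, h, w = I.shape
    G, *_ = np.linalg.lstsq(L, I.reshape(k, -1), rcond=None)  # (3, h*w): albedo * normal
    albedo = np.linalg.norm(G, axis=0)
    normals = (G / np.maximum(albedo, 1e-12)).T.reshape(h, w, 3)
    return albedo.reshape(h, w), normals


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h, w = 8, 8
    N = np.concatenate([rng.uniform(-0.3, 0.3, size=(h, w, 2)), np.ones((h, w, 1))], axis=2)
    N /= np.linalg.norm(N, axis=2, keepdims=True)             # synthetic surface normals
    L = np.array([[0.3, 0.0, 1.0], [-0.3, 0.2, 1.0], [0.0, -0.3, 1.0], [0.1, 0.3, 1.0]])
    L /= np.linalg.norm(L, axis=1, keepdims=True)             # four assumed light directions
    I = 0.8 * np.einsum("kc,hwc->khw", L, N)                  # Lambertian rendering, no shadows
    albedo, normals = photometric_stereo(I, L)
    print(albedo.mean(), np.abs(normals - N).max())
```

The recovered normal field is then integrated into a height map; errors in that integration are what the height-correction methods below address.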

Improving underwater photometric stereo under scattering has been widely discussed. Underwater, light is significantly attenuated by scattering, resulting in uneven illumination in background areas. This produces gradient errors, and integrating these gradients in photometric stereo accumulates height errors, which deform the reconstructed surface. Narasimhan and Nayar [105] therefore proposed a method for recovering albedo, normal and depth maps in scattering media, deriving a physical model of surfaces immersed in a scattering medium. Based on these models, they derived the conditions under which objects can be detected with light-stripe scanning and the number of light sources required for photometric stereo. It turns out that the method requires at least five images, although under special conditions four different lighting conditions are sufficient.

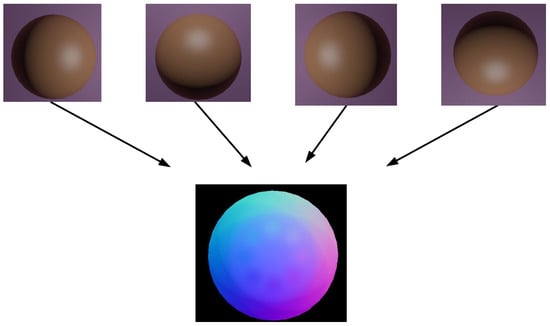

Wu L et al. [106] addressed the 3D reconstruction problem more effectively through low-rank matrix completion and recovery. They used dark regions, i.e., shadows and unlit areas in the water, to fit the distribution of the scattering effect and then removed the scattering from the images. After backscatter compensation, the images were restored with robust principal component analysis (RPCA), which removes minor noise, shadows, contaminants and a few corrupted points. Finally, they used the RPCA results and least-squares estimation to obtain the surface normals and complete the 3D reconstruction. In the setup of Figure 12, four lamps illuminate the underwater scene, and images of the same scene under different light sources are used to recover 3D information. The technique could be used to enhance almost all photometric stereo methods, including uncalibrated photometric stereo.

Figure 12.

Photometric stereo setup: four lights are used to illuminate the underwater scene. Images of the same scene under different light sources are used to recover 3D information.

In [107], Tsiotsios et al. showed that only three lights are sufficient to compute 3D data with a linear formulation of photometric stereo, provided the backscattered component is effectively compensated. They compensated for backscatter by fitting a backscattering model to each pixel. Without any prior knowledge of the characteristics of the medium or the scene, the nonuniform backscatter can be estimated directly from a single image using their backscatter restoration method for point sources. Extensive experimental results showed that, even with very strong scattering, the final quality is almost no worse than in clear water. However, as with time-multiplexed structured light, photometric stereo suffers from long acquisition times. These methods are unsuitable for moving objects and are effective only for close-range static objects in clear water. Inspired by the method of Tsiotsios et al., Wu Z et al. [108] presented a height-correction technique for underwater photometric stereo reconstruction based on the height distribution of the background area. A two-dimensional quadratic function was fitted to the height error, which was then subtracted from the reconstructed height to obtain a more accurate surface. Experimental results show that the method is effective in water of different turbidities.
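The background-based height correction described above can be sketched as a least-squares fit. Assuming a reconstructed height map and a mask marking background pixels (both hypothetical inputs here), the code below fits $z = c_0 + c_1x + c_2y + c_3x^2 + c_4xy + c_5y^2$ to the background heights and subtracts the fitted surface from the whole map. It illustrates the general idea rather than the exact procedure of [108].

```python
import numpy as np


def quadratic_design(x, y):
    """Columns of a full 2D quadratic: 1, x, y, x^2, xy, y^2."""
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])


def remove_quadratic_bias(height, background_mask):
    """Fit a 2D quadratic to background heights and subtract it everywhere."""
    h, w = height.shape
    yy, xx = np.mgrid[0:h, 0:w].astype(float)
    A = quadratic_design(xx[background_mask], yy[background_mask])
    coeffs, *_ = np.linalg.lstsq(A, height[background_mask], rcond=None)
    bias = (quadratic_design(xx.ravel(), yy.ravel()) @ coeffs).reshape(h, w)
    return height - bias, coeffs


if __name__ == "__main__":
    h, w = 40, 40
    yy, xx = np.mgrid[0:h, 0:w].astype(float)
    r2 = (xx - 20) ** 2 + (yy - 20) ** 2
    true_height = np.where(r2 < 25, 1.0, 0.0)                      # small bump on flat ground
    bias = 3.0 + 0.2 * yy + 0.01 * xx ** 2 - 0.005 * xx * yy       # synthetic low-frequency error
    corrected, coeffs = remove_quadratic_bias(true_height + bias, r2 > 64)
    print(np.abs(corrected - true_height).max())
```

The approach relies on the background being flat (or of known shape); if the target covers most of the image, too few background pixels remain to constrain the fit.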

Murez et al. [109] made three contributions addressing the key modes of light propagation under the common single-scattering assumption for dilute media. First, extensive simulations showed that single-scattered light from a source can be approximated by a point light source with a single direction. Second, the blur caused by light scattered from objects was modeled. Finally, they demonstrated that imaging fluorescence emission, where available, removes the backscatter component and improves the signal-to-noise ratio. In experiments in water tanks with different concentrations of scattering media, the 3D reconstructions obtained with deconvolution were of higher quality than those of previous techniques, and, when combined with fluorescence, results similar to those in clear water were obtained even in highly turbid media.

Jiao et al. [110] proposed a high-resolution 3D surface reconstruction method for underwater targets that fuses the depth from a single RGB-D image with multispectral photometric stereo. First, a depth sensor was used to acquire an RGB image of the object together with its depth. Then, backscatter was removed by fitting a bivariate quadratic function, and simple linear iterative clustering (SLIC) superpixel segmentation was applied to the RGB image. Based on these superpixels, multispectral photometric stereo was used to compute the surface normals of the object.

The above research focused on the scattering effect in underwater photometric stereo, whereas the effects of attenuation and refraction were rarely considered [111]. Underwater cameras are usually enclosed in flat watertight housings. Light reflected from underwater objects is refracted as it passes through the flat housing glass in front of the camera, which can lead to inaccurate reconstructions. Refraction does not affect the surface-normal estimates, but it can distort the captured image and cause height errors when the normal field is integrated to estimate the actual 3D position of the target. At the same time, light attenuation limits the working range of photometric stereo systems and reduces their accuracy. Many methods have been proposed to solve this problem in air, for example, near-field (close-range) photometric stereo, which models the light direction and attenuation per pixel [112,113]. However, these methods are not suitable for underwater environments.

Fan et al. [114] showed that, when the light sources of the imaging device are placed uniformly on a circle with the same tilt angle, the dominant low-frequency, large-magnitude deformation in near-field photometric stereo can be approximated by a quadratic function. They also proposed a practical method to fit and remove this height deviation, obtaining better surface recovery than existing methods. This is also a valuable solution for underwater close-range photometric stereo. However, scale bias may still occur because of the unstable light sensitivity of the camera sensor, underwater light attenuation and low-frequency noise cancellation [115].

To solve problems such as low-frequency distortion, scale deviation and refraction effects, Fan et al. [116] combined underwater photometric stereo with underwater laser triangulation to improve the performance of underwater photometric stereo measurement. Based on the underwater imaging model, they established an underwater photometric stereo model that uses the underwater camera refraction model to remove nonlinear refraction distortion. They also proposed a photometric stereo compensation method for close-range ring light sources.

However, the lack of constraints between multiple disconnected patches, frequent low-frequency distortion and various practical factors often bias photometric stereo reconstructions obtained by direct integration. Li et al. [117] therefore proposed a fusion method that corrects photometric stereo bias using the depth information generated by a coded structured-light system. The method preserves the high-precision normal information, recovering high-frequency details while avoiding, or at least reducing, low-frequency deviations. Table 3 summarizes underwater 3D reconstruction methods based on photometric stereo, comparing mainly their key considerations and contributions.

Table 3.

Summary of photometric stereo 3D reconstruction solutions.

4.3. Structured Light

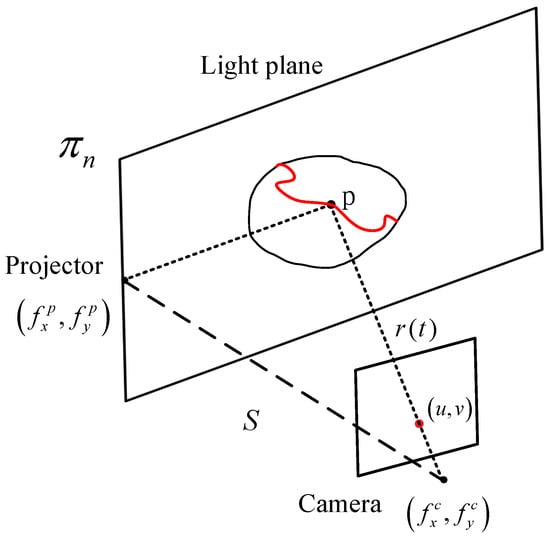

A structured light system consists of a color (or white-light) projector and a camera, and the triangulation principle is applied between these two components and the illuminated object. As shown in Figure 13, the projector projects a known pattern, often a set of light planes, onto the scene. If both the light plane and the camera ray are known, their intersection can be computed with the following equations.

Figure 13.

Triangulation geometry principle of the structured light system.

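Mathematically, the camera ray through a detected pixel can be expressed in parametric form as:

$$\mathbf{r}(t) = t \left( \frac{x - c_x}{f_x}, \; \frac{y - c_y}{f_y}, \; 1 \right)^{\top}, \qquad t > 0 \tag{4}$$

where $(f_x, f_y)$ is the focal length of the camera along the x and y axes, $(c_x, c_y)$ is the center pixel of the image and $(x, y)$ is one of the pixels detected in the image. Assuming a calibrated camera with the origin at the camera frame, the light plane can be expressed as shown in Equation (5):

$$\pi: \; aX + bY + cZ + d = 0 \tag{5}$$

Substituting Equation (4) into Equation (5) gives $t = -d \,/\, \left( a\frac{x - c_x}{f_x} + b\frac{y - c_y}{f_y} + c \right)$, and hence the 3D point $\mathbf{r}(t)$.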
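Intersecting a pixel ray with a light plane takes only a few lines. In the sketch below, the ray $t\,((x - c_x)/f_x, (y - c_y)/f_y, 1)$ is substituted into the plane $aX + bY + cZ + d = 0$, giving $t = -d / (a(x - c_x)/f_x + b(y - c_y)/f_y + c)$. The intrinsics and plane coefficients are assumed to be known from camera and projector calibration; the numbers in the example are made up.

```python
import numpy as np


def intersect_pixel_with_plane(pixel, fx, fy, cx, cy, plane):
    """Intersect the camera ray through pixel (x, y) with the light plane
    a*X + b*Y + c*Z + d = 0 (camera frame at the origin); returns the 3D point."""
    x, y = pixel
    r = np.array([(x - cx) / fx, (y - cy) / fy, 1.0])   # ray direction, point = t * r
    a, b, c, d = plane
    denom = a * r[0] + b * r[1] + c * r[2]
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the light plane")
    t = -d / denom
    return t * r


if __name__ == "__main__":
    # Hypothetical light plane X - 0.3 Z + 0.4 = 0 and pixel (400, 200).
    print(intersect_pixel_with_plane((400.0, 200.0), 800.0, 800.0, 320.0, 240.0,
                                     (1.0, 0.0, -0.3, 0.4)))
```

Underwater, the straight camera ray would be replaced by the refracted ray through the housing port, and the light plane itself is bent at the port, which is why refraction must be modelled for accurate structured-light triangulation.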

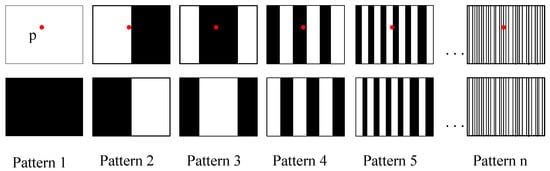

Binary patterns are the most commonly used because they are the simplest to implement with projectors. Only two illumination states of the light stripes, typically white light on and off, are used. The first pattern contains a single division (black to white), and subsequent patterns subdivide the stripes of the previous one until the software can no longer separate two consecutive stripes, as shown in Figure 14. This time-multiplexing technique yields a fixed number of light planes, typically related to the projector’s resolution, which addresses the related issue of identifying continuous light planes. The codewords are generated by projecting successive patterns onto the object’s surface, so the codeword associated with a given image point is complete only after all patterns have been projected. Following a coarse-to-fine scheme, the first pattern typically corresponds to the most significant bit. The number of projections directly determines the accuracy, because each additional pattern refines the resolution. Moreover, the codeword alphabet is small (only two symbols), which gives high noise immunity [118].
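The coarse-to-fine stripe coding can be sketched as follows. The code uses the reflected binary (Gray) code, a common variant of binary patterns in which adjacent stripes differ in exactly one bit, so a decoding error at a stripe boundary shifts the result by at most one stripe. The pattern width, the number of bits and the assumption of perfectly thresholded camera observations are illustrative.

```python
import numpy as np


def gray_code_patterns(n_bits, width):
    """Return an (n_bits, width) array of 0/1 stripe patterns, coarsest first."""
    cols = np.arange(width)
    stripe = cols * (1 << n_bits) // width   # stripe index for each projector column
    gray = stripe ^ (stripe >> 1)            # binary -> Gray code
    bits = [(gray >> (n_bits - 1 - k)) & 1 for k in range(n_bits)]
    return np.array(bits, dtype=np.uint8)


def decode_gray(bit_stack):
    """bit_stack: (n_bits, ...) observed 0/1 values, most significant first.
    Returns the stripe index (light-plane number) for every observation."""
    n_bits = bit_stack.shape[0]
    gray = np.zeros(bit_stack.shape[1:], dtype=np.int64)
    for k in range(n_bits):
        gray = (gray << 1) | bit_stack[k].astype(np.int64)
    binary = gray.copy()                     # Gray -> binary: XOR of all right shifts
    shift = gray >> 1
    while np.any(shift):
        binary ^= shift
        shift >>= 1
    return binary


if __name__ == "__main__":
    patterns = gray_code_patterns(4, 64)     # 4 projected patterns -> 16 light planes
    print(decode_gray(patterns))
```

In a real system, each camera pixel observes one bit per projected pattern, and the decoded stripe index identifies the light plane used in the ray-plane intersection.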

Figure 14.

Binary structured light pattern. The codeword for point p is created with successive projections of the patterns.

Phase-shifting patterns, on the other hand, use sinusoidal projections to cover a wider range of gray values with the same working principle. By unwrapping the phase values, distinct light planes can be obtained, as with the equivalent binary patterns. Phase-shifting patterns are also time-multiplexed. Frequency-multiplexing methods provide dense reconstructions of moving scenes but are highly sensitive to camera nonlinearities, which reduces their accuracy and their sensitivity to surface details of the target. These methods use multiple projection patterns to determine a distance. De Bruijn sequences are pseudorandom circular strings of symbols in which every subsequence of a given length occurs exactly once, so they can be decoded from a single projection. When this idea is applied to matrices rather than vectors (i.e., strings), the resulting patterns are known as m-arrays, which can be constructed from pseudorandom sequences [119]. These patterns often use color to better distinguish the symbols of the alphabet. However, not all surface finishes and colors accurately reflect the incident color spectrum back to the camera [120].
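A common instance of phase shifting is the four-step method with $I_k = A + B\cos(\varphi + k\pi/2)$, $k = 0, \dots, 3$, for which the wrapped phase is $\varphi = \operatorname{atan2}(I_3 - I_1, I_0 - I_2)$; the offset $A$ and amplitude $B$ cancel out. The sketch below recovers the wrapped phase from synthetic fringes and unwraps it along one row with `np.unwrap`, which assumes that the true phase changes by less than $\pi$ between neighboring pixels; practical systems often use temporal unwrapping with additional patterns instead.

```python
import numpy as np


def four_step_phase(I0, I1, I2, I3):
    """Wrapped phase from four fringe images shifted by 0, pi/2, pi and 3*pi/2.

    Assumes I_k = A + B * cos(phi + k * pi / 2), so that
    I3 - I1 = 2B sin(phi) and I0 - I2 = 2B cos(phi).
    """
    return np.arctan2(I3 - I1, I0 - I2)       # wrapped to (-pi, pi]


def render_fringes(phi, A=0.5, B=0.4):
    """Synthetic four-step fringe images for a known phase map."""
    return [A + B * np.cos(phi + k * np.pi / 2) for k in range(4)]


if __name__ == "__main__":
    phi_true = np.linspace(0.0, 6 * np.pi, 500)    # phase along one image row
    wrapped = four_step_phase(*render_fringes(phi_true))
    unwrapped = np.unwrap(wrapped)                 # simple 1D spatial unwrapping
    print(np.abs(unwrapped - phi_true).max())
```

Because the background term cancels, this estimate is fairly insensitive to uniform veiling light, although underwater backscatter that varies across the pattern still biases it.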

In air, shape-, spatial-distribution- and color-coded patterns have been widely used, but these coding strategies have rarely been reported underwater. Zhang et al. [121] proposed a grayscale four-step sinusoidal fringe pattern, which uses four separate patterns in a time-multiplexing scheme. They compared structured light (SL) with stereo vision (SV), and SL gave better results on textureless objects. Törnblom [122] projected 20 different Gray-code patterns onto a pool and obtained similar results, with an accuracy of 2% in the z-direction. Massot-Campos et al. [123] also compared SL and SV in a common underwater environment with objects of known size. The results showed that SV is best suited to long-range, high-altitude measurements, provided there is enough texture, whereas SL is better suited to short-range, low-altitude measurements where accurate object or structure dimensions are required.

Some authors have combined SL and SV to perform underwater 3D reconstruction. Bruno et al. [25] projected Gray-code patterns with a final code shift of four-pixel-wide bands. The projector was used only to illuminate the scene, while depth was obtained from the stereo rig. As a result, the projector does not need to be calibrated, and any commercially available projector can be used without sacrificing measurement reliability. They demonstrated that the final 3D reconstruction works well even at high turbidity, despite substantial scattering and absorption. Similarly, using this fusion of SL and SV, Tang et al. [124] reconstructed a cubic artificial reef (CTAR) underwater, demonstrating that the reconstruction quality is sufficient to estimate the dimensions of the CTAR.
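Gray-code patterns such as those used in [122] and [25] assign each projector column a binary code in which neighbouring columns differ in exactly one bit, so a thresholding error at a stripe boundary shifts the decoded column by at most one. The following Python sketch shows pattern generation and per-pixel decoding; the pattern width, the number of bits and the function names are assumptions, and the thresholding of the captured images, which is the difficult step in turbid water, is omitted.

```python
from typing import List


def gray_encode(i: int) -> int:
    """Reflected binary Gray code of i."""
    return i ^ (i >> 1)


def gray_decode(g: int) -> int:
    """Inverse of gray_encode: XOR of all right shifts of g."""
    b = 0
    while g:
        b ^= g
        g >>= 1
    return b


def make_patterns(width: int, n_bits: int) -> List[List[int]]:
    """One row of a binary stripe image per bit plane, most significant bit first."""
    return [[(gray_encode(x) >> (n_bits - 1 - k)) & 1 for x in range(width)]
            for k in range(n_bits)]


def decode_column(bits: List[int]) -> int:
    """Recover the projector column from the thresholded bits seen at one camera pixel."""
    g = 0
    for bit in bits:
        g = (g << 1) | bit
    return gray_decode(g)
```

Ten bit planes, for example, label $2^{10} = 1024$ projector columns; in an SL–SV fusion such as [25], the decoded codes act as extra texture that helps match left and right pixels rather than being triangulated against the projector.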

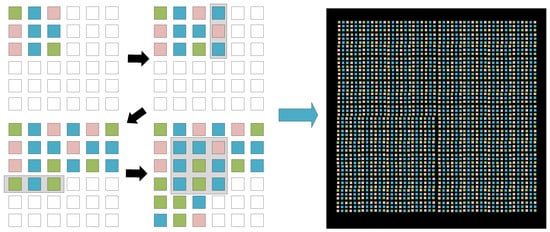

In addition, Sarafraz et al. extended the structured-light technique to the particular case of a two-medium (air–water) environment in which the camera is submerged and the projector is above the water [125]. The authors used dynamic pseudorandom patterns generated by an algorithm that builds an array while preserving the uniqueness of its subwindows. The pattern was constructed from three colors (red, green and blue), as shown in Figure 15. A projector placed above the water projected this distinctive color pattern, and an underwater camera captured the image. Because a single shot of this color pattern suffices to reconstruct both the seabed and the water surface, the method can be used in dynamic as well as static scenes.

Figure 15.

Generating patterns for 3 × 3 subwindows using three colors (R, G, B). (left) Stepwise pattern generation for a 6 × 6 array; (right) example of a generated 50 × 50 pattern.
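The key property of the pattern in Figure 15 is that every 3 × 3 subwindow is unique, so a single image suffices to locate any observed subwindow within the projected array. The Python sketch below produces such an array with a simple greedy randomized fill and full restarts; it is not the algorithm of [125], and the function names, seed and restart limit are illustrative assumptions.

```python
import random
from typing import List, Optional


def all_windows(grid: List[List[str]], win: int = 3) -> List[tuple]:
    """Every win x win subwindow of grid, flattened row by row."""
    rows, cols = len(grid), len(grid[0])
    return [tuple(grid[i][j] for i in range(r, r + win) for j in range(c, c + win))
            for r in range(rows - win + 1) for c in range(cols - win + 1)]


def pseudorandom_array(rows: int, cols: int, colors: str = "RGB", win: int = 3,
                       seed: int = 0, max_restarts: int = 100) -> List[List[str]]:
    """Fill a rows x cols color array in which every win x win subwindow is unique.

    Raster-order greedy fill: each cell tries the colors in random order and keeps
    the first one whose completed subwindow is new; if none works, start over.
    """
    rng = random.Random(seed)
    for _ in range(max_restarts):
        grid: List[List[Optional[str]]] = [[None] * cols for _ in range(rows)]
        used = set()
        failed = False
        for r in range(rows):
            for c in range(cols):
                options = list(colors)
                rng.shuffle(options)
                for color in options:
                    grid[r][c] = color
                    if r < win - 1 or c < win - 1:
                        break  # this cell does not complete any subwindow
                    # Cell (r, c) is the bottom-right corner of exactly one subwindow.
                    key = tuple(grid[i][j] for i in range(r - win + 1, r + 1)
                                for j in range(c - win + 1, c + 1))
                    if key not in used:
                        used.add(key)
                        break
                else:
                    failed = True  # every color would repeat an existing subwindow
                    break
            if failed:
                break
        if not failed:
            return grid  # type: ignore[return-value]
    raise RuntimeError("no valid array found; try another seed or more restarts")
```

With three colors there are $3^9 = 19683$ possible 3 × 3 subwindows, so arrays far larger than 6 × 6 are feasible; a 50 × 50 array needs $48^2 = 2304$ distinct subwindows.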

At present, underwater structured-light technology is receiving increasing attention, primarily to enable the 3D reconstruction of objects and structures with poor texture and to overcome the difficulty of using conventional optical imaging systems in turbid waters. Most structured-light techniques assume that light is neither scattered nor absorbed, i.e., that both the scene and the light source are immersed in clear air. However, as structured lighting is increasingly used in underwater imaging, scattering effects can no longer be ignored.

Fox [126] first proposed structured light with a single scanned light stripe to reduce backscatter and enable the 3D reconstruction of underwater objects. In this approach, the principles of stereo-system calibration were applied by treating the projector as an inverse camera. Narasimhan and Nayar [105] developed a physical model of the appearance of a surface immersed in a scattering medium. The model describes how structured light interacts with the scene and the medium, which allows the properties of the medium to be estimated. The result can then be used to remove scattering effects and recover the appearance of the scene. Using an image-formation model for light stripes, they developed a simple algorithm to locate objects accurately. Because the illuminated volume is reduced to the light plane, the shape of distant objects can be recovered by triangulation.
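Triangulation with a light stripe reduces to intersecting each camera ray with the calibrated light plane. In the Python sketch below, a pixel on the detected stripe is back-projected through an ideal pinhole camera at the origin, and the ray $\mathbf{X} = t\,\mathbf{d}$ is intersected with the plane $\mathbf{n}\cdot\mathbf{X} = h$, giving $t = h / (\mathbf{n}\cdot\mathbf{d})$. It assumes that the intrinsics and the plane parameters are known in camera coordinates and ignores refraction at the housing, which is a significant simplification underwater; the parameter names are illustrative.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject(u: float, v: float, fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Direction of the ray through pixel (u, v) for an ideal pinhole camera at the origin."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)


def intersect_light_plane(ray: Vec3, normal: Sequence[float], offset: float) -> Vec3:
    """Intersect X = t * ray (t > 0) with the light plane normal . X = offset."""
    denom = sum(n * d for n, d in zip(normal, ray))
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the light plane")
    t = offset / denom
    if t <= 0:
        raise ValueError("intersection lies behind the camera")
    return (t * ray[0], t * ray[1], t * ray[2])
```

For example, with $f_x = f_y = 800$ px, principal point $(320, 240)$ and the vertical plane $x = 0.1$ m, pixel $(360, 240)$ maps to the point $(0.1, 0, 2.0)$ m.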

Another key factor in the performance of structured-light 3D reconstruction is the design of the projected patterns. An experimental study that assessed several projected patterns and image-enhancement methods for detection under varying turbidity found that, as turbidity increases, the contrast loss is greater for stripes than for dots [127]. Accordingly, Wang et al. [128] proposed a non-single-viewpoint (non-SVP) ray-tracing model for calibrating projector–camera systems for structured-light 3D reconstruction based on dot patterns. A coarse depth map was first reconstructed from a sparse dot-pattern projection, and the recovered surface points were then used to guide point detection in a denser pattern, yielding a finer surface reconstruction. Based on the optical properties of the medium and the projector–camera geometry, they estimated the magnitude of backscatter and compensated for signal attenuation to recover the image for a specific projector pattern.
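Backscatter estimation and attenuation compensation can be illustrated with a common simplified image-formation model, $I = J\,e^{-cL} + B$, where $J$ is the unattenuated signal, $c$ the attenuation coefficient, $L$ the projector-to-surface-to-camera path length and $B$ the additive backscatter. This model is an assumption made here for illustration and is not necessarily the one used in [128]; the Python sketch simply inverts it at one pixel, assuming $B$, $c$ and $L$ have already been estimated (for instance, $L$ from a coarse depth map).

```python
import math


def compensate_pixel(observed: float, backscatter: float,
                     attenuation: float, path_length: float) -> float:
    """Invert I = J * exp(-c * L) + B for the direct (unattenuated) signal J.

    observed    I: measured intensity at the pixel
    backscatter B: additive veiling light, assumed already estimated for this pixel
    attenuation c: attenuation coefficient of the water (1/m), assumed known
    path_length L: projector-to-surface-to-camera path length (m)
    """
    direct = max(observed - backscatter, 0.0)            # remove backscatter, clamp noise
    return direct * math.exp(attenuation * path_length)  # undo exponential attenuation
```

The exponential gain also amplifies sensor noise at long path lengths, which is one reason why compensation alone cannot restore distant regions in turbid water.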

Massone et al. [129] proposed an approach based on projecting light patterns, using a simple cone-shaped diving lamp as the projector. Closed 2D curves were extracted from the images with a light-profile detection method they developed. They also devised a new calibration method to determine the geometry of the light cone relative to the camera. Because the projector–camera pair is fixed, correspondences between the projected and observed patterns can then be established. Finally, the 3D data were recovered by combining the extracted closed 2D curves with the calibrated relation between the camera and the light cone.
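Once the light cone has been calibrated relative to the camera, each pixel on an extracted light profile can be back-projected and intersected with the cone surface. The Python sketch below assumes a right circular cone with known apex, unit axis and half-angle $\theta$, a camera centre at the origin, and no refraction. Substituting the ray $\mathbf{X} = t\,\mathbf{d}$ into $((\mathbf{X} - \mathbf{A})\cdot\mathbf{a})^2 = \cos^2\theta\,\lVert\mathbf{X} - \mathbf{A}\rVert^2$ yields a quadratic in $t$. The function and parameter names are hypothetical, and the procedure in [129] may differ.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def intersect_ray_cone(d: Vec3, apex: Vec3, axis: Vec3, half_angle: float) -> Optional[Vec3]:
    """First point X = t * d (t > 0) on the forward nappe of a light cone, or None.

    axis must be a unit vector pointing from the apex into the lit region.
    """
    w = (-apex[0], -apex[1], -apex[2])      # camera centre (origin) minus apex
    c2 = math.cos(half_angle) ** 2
    da, wa = _dot(d, axis), _dot(w, axis)
    qa = da * da - c2 * _dot(d, d)
    qb = 2.0 * (da * wa - c2 * _dot(d, w))
    qc = wa * wa - c2 * _dot(w, w)
    if abs(qa) < 1e-12:                     # ray parallel to a cone generator
        roots = [-qc / qb] if abs(qb) > 1e-12 else []
    else:
        disc = qb * qb - 4.0 * qa * qc
        if disc < 0:
            return None
        s = math.sqrt(disc)
        roots = sorted([(-qb - s) / (2 * qa), (-qb + s) / (2 * qa)])
    for t in roots:
        if t > 0:
            x = (t * d[0], t * d[1], t * d[2])
            # Keep only the nappe on the lit side of the apex.
            if _dot((x[0] - apex[0], x[1] - apex[1], x[2] - apex[2]), axis) > 0:
                return x
    return None
```

The quadratic describes both nappes of the double cone, so the final check discards intersections behind the lamp.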

Table 4 lists the underwater SL 3D reconstruction methods, mainly comparing colors, projector patterns and their main contributions.

Table 4.

Summary of SL 3D reconstruction solutions.

4.4. Stereo Vision

Stereo imaging works in a similar manner to SfM, using feature matching between the left and right frames of a stereo camera to compute 3D correspondences. Once the stereo system has been calibrated, the pose of one camera relative to the other is known, which resolves the scale ambiguity. Stereo-matching technology originated in photogrammetry and has since been extensively investigated in computer vision [130], where it remains one of the most active research fields.

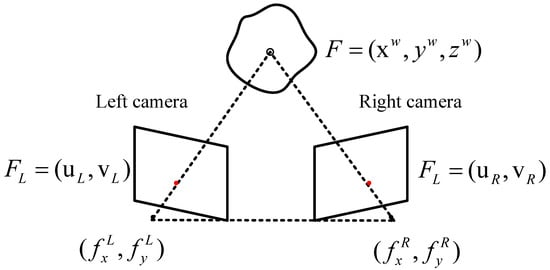

Suppose that there are two cameras, $C_1$ and $C_2$, and that each camera image contains a matching feature, $f_1$ and $f_2$, as shown in Figure 16. To calculate the 3D coordinates of the feature $F$, whose projection is $f_1$ in $C_1$ and $f_2$ in $C_2$, the line $L_1$ through the focal point $O_1$ and $f_1$ and the line $L_2$ through the focal point $O_2$ and $f_2$ are traced. If the calibration of both cameras were perfect, then $F = L_1 \cap L_2$. However, camera calibration is typically solved by least squares, so the result is not exact and the two lines generally do not intersect. Therefore, the point closest to both $L_1$ and $L_2$ is taken as an approximate solution [131].

Figure 16.

Triangulation geometry principle of the stereo system.
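The closest-point construction can be written in closed form. For rays $\mathbf{o}_1 + s\,\mathbf{d}_1$ and $\mathbf{o}_2 + t\,\mathbf{d}_2$ (camera centre plus direction through the image feature), setting the derivatives of the squared distance between the two points to zero gives two linear equations in $s$ and $t$, and the 3D feature is estimated as the midpoint of the resulting shortest segment. The Python sketch below assumes both rays are already expressed in a common world frame from the stereo calibration and are not parallel; the function name is illustrative.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def midpoint_triangulation(o1: Sequence[float], d1: Sequence[float],
                           o2: Sequence[float], d2: Sequence[float]) -> Tuple[Vec3, float]:
    """Midpoint of the shortest segment between rays o1 + s*d1 and o2 + t*d2, and its length."""
    w0 = [a - b for a, b in zip(o1, o2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    s = (b * e - c * d) / denom            # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom            # parameter of the closest point on ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + t * k for o, k in zip(o2, d2)]
    mid = ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2, (p1[2] + p2[2]) / 2)
    gap = sum((x - y) ** 2 for x, y in zip(p1, p2)) ** 0.5
    return mid, gap
```

The returned gap is a useful sanity check: with good calibration and a correct match, it should be small relative to the distance to the scene.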

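After the relative pose of the cameras and the positions of the same feature in the two images have been determined, the 3D world coordinates of the feature can be calculated by triangulation. In Figure 16, let $\mathbf{x}_1$ and $\mathbf{x}_2$ be the homogeneous image coordinates of the feature in the two views and $\mathbf{X}$ the corresponding 3D point, so that $\mathbf{x}_i \simeq P_i \mathbf{X}$, where $P_i$ is the projection matrix of camera $i$. The two image points are related by the epipolar constraint $\mathbf{x}_2^\top F \mathbf{x}_1 = 0$, where $F$ is the fundamental matrix [131].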
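For a calibrated stereo pair, the fundamental matrix follows directly from the intrinsic matrices $K_1$, $K_2$ and the relative pose $(R, \mathbf{t})$, defined so that a point expressed in camera-1 coordinates maps to camera 2 as $\mathbf{X}_2 = R\mathbf{X}_1 + \mathbf{t}$. In that case $F = K_2^{-\top}[\mathbf{t}]_\times R\,K_1^{-1}$, where $[\mathbf{t}]_\times R$ is the essential matrix. The NumPy sketch below builds $F$ this way and can be used to check that a candidate match $(\mathbf{x}_1, \mathbf{x}_2)$ satisfies $\mathbf{x}_2^\top F \mathbf{x}_1 \approx 0$; the function names are illustrative.

```python
import numpy as np


def skew(t: np.ndarray) -> np.ndarray:
    """Cross-product matrix [t]_x such that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def fundamental_from_calibration(K1: np.ndarray, K2: np.ndarray,
                                 R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """F with x2^T F x1 = 0 for homogeneous pixels x1, x2, given X_cam2 = R @ X_cam1 + t."""
    E = skew(t) @ R                          # essential matrix
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)
```

In practice the residual $\mathbf{x}_2^\top F \mathbf{x}_1$ is not exactly zero because of noise and imperfect calibration, so matches are usually accepted if their distance to the epipolar line falls below a pixel threshold.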

Once the cameras are calibrated (i.e., the baseline, the relative camera pose and the undistorted images are known), a 3D image can be produced by computing the disparity of each pixel. The 3D data from successive frames can then be registered with 3D registration techniques such as the iterative closest point (ICP) algorithm [132]. SIFT, SURF and the sum of absolute differences (SAD) [133] are the most commonly employed matching methods, and SIFT or ICP can also be used for direct 3D matching.
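For a rectified pair, SAD block matching searches along the same image row for the right-image block that minimizes the sum of absolute differences to a left-image block, and depth then follows from $Z = fB/d$, where $f$ is the focal length in pixels, $B$ the baseline and $d$ the disparity. The NumPy sketch below is a deliberately naive winner-take-all implementation that illustrates the idea. It assumes already rectified grayscale images and omits the cost aggregation, consistency checks and sub-pixel refinement used in practical matchers.

```python
import numpy as np


def sad_disparity(left: np.ndarray, right: np.ndarray, max_disp: int,
                  half_win: int = 2) -> np.ndarray:
    """Winner-take-all SAD block matching on a rectified grayscale pair.

    A left pixel (y, x) is compared with right pixels (y, x - d), d = 0..max_disp.
    Border pixels closer than half_win to the edge are left at disparity 0.
    """
    left = left.astype(np.float64)
    right = right.astype(np.float64)
    h, w = left.shape
    disp = np.zeros((h, w), dtype=np.int64)
    for y in range(half_win, h - half_win):
        for x in range(half_win, w - half_win):
            patch = left[y - half_win:y + half_win + 1, x - half_win:x + half_win + 1]
            best_cost, best_d = np.inf, 0
            for d in range(0, min(max_disp, x - half_win) + 1):
                cand = right[y - half_win:y + half_win + 1,
                             x - d - half_win:x - d + half_win + 1]
                cost = np.abs(patch - cand).sum()   # sum of absolute differences
                if cost < best_cost:
                    best_cost, best_d = cost, d
            disp[y, x] = best_d
    return disp


def depth_from_disparity(d: float, focal_px: float, baseline_m: float) -> float:
    """Z = f * B / d in metres; zero disparity corresponds to a point at infinity."""
    return focal_px * baseline_m / d if d > 0 else float("inf")
```

For example, with $f = 800$ px and $B = 0.12$ m, a disparity of 4 px corresponds to a depth of 24 m. The inverse relation also explains why depth resolution degrades quickly with distance.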

Computer vision provides promising techniques for constructing 3D models of environments from 2D images, but underwater images suffer from increased radial distortion because light rays are refracted as they pass through multiple media. Camera calibration is therefore a key issue in underwater stereo vision systems. Rahman et al. [134] studied the differences between terrestrial and underwater camera calibration and quantitatively demonstrated the need for in situ calibration underwater. They calibrated an underwater SV system with two algorithms, the Rahman–Krouglicof [135] and Heikkila [136] algorithms, and evaluated their stereo performance in terms of reconstruction error; the experimental data showed that the Rahman–Krouglicof algorithm copes well with the characteristics of underwater 3D reconstruction. Oleari et al. [137] proposed a camera-calibration approach for SV systems that avoids complex underwater procedures. It is a two-stage method: first, a standard calibration is performed in air; second, the camera parameters are refined using prior knowledge of the dimensions of a submerged cylindrical pipe. Deng et al. [138] proposed an in-air calibration method for binocular cameras used in underwater stereo matching. They analyzed the camera's imaging mechanism, derived the relationship between the camera parameters in air and underwater, and carried out underwater stereo-matching experiments using parameters calibrated in air; the results demonstrated the effectiveness of the method.
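The need for underwater-specific calibration can be seen by tracing a pixel ray through a flat port with Snell's law. In the Python sketch below, the ratio between the in-air ray slope $\tan\theta_a$ and the in-water slope $\tan\theta_w$ equals $n_w\cos\theta_w/\cos\theta_a$. This ratio is approximately $n_w$ near the optical axis but grows with the field angle, so it cannot be absorbed by rescaling the focal length and instead behaves like additional radial distortion. When the port is at a nonzero distance from the camera centre, the refracted rays also no longer meet at a single centre. The sketch assumes a thin flat port perpendicular to the optical axis, ignores glass thickness and takes $n_w = 1.333$; it is not the model used in [134], [137] or [138].

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
N_AIR, N_WATER = 1.0, 1.333  # assumed refractive indices


def normalize(v: Sequence[float]) -> Vec3:
    n = math.sqrt(sum(x * x for x in v))
    return (v[0] / n, v[1] / n, v[2] / n)


def refract(d: Vec3, n: Vec3, eta: float) -> Optional[Vec3]:
    """Vector form of Snell's law.

    d: unit incident direction; n: unit normal pointing back toward the incident
    side (n . d < 0); eta = n_incident / n_transmitted. None on total internal reflection.
    """
    cos_i = -sum(a * b for a, b in zip(n, d))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None
    cos_t = math.sqrt(1.0 - sin2_t)
    k = eta * cos_i - cos_t
    return (eta * d[0] + k * n[0], eta * d[1] + k * n[1], eta * d[2] + k * n[2])


def trace_flat_port(x_n: float, y_n: float, port_dist: float, depth: float) -> Vec3:
    """Follow the ray through normalized image coordinates (x_n, y_n) out of a thin
    flat port at z = port_dist and return where it reaches the plane z = depth."""
    d = normalize((x_n, y_n, 1.0))
    t0 = port_dist / d[2]
    hit = (t0 * d[0], t0 * d[1], port_dist)  # point on the air-water interface
    dw = refract(d, (0.0, 0.0, -1.0), N_AIR / N_WATER)
    assert dw is not None                    # cannot happen when entering water from air
    s = (depth - port_dist) / dw[2]
    return (hit[0] + s * dw[0], hit[1] + s * dw[1], depth)
```

Near the optical axis, the in-water field of view shrinks by roughly the factor $n_w \approx 1.33$, which is why the focal length calibrated in air is often scaled by $n_w$ as a first approximation; the growing deviation at larger field angles is the residual error that dedicated underwater calibration has to model.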

SLAM is considered one of the most accurate positioning methods; it uses the data provided by the navigation sensors installed on the underwater vehicle [139]. To improve reconstructions, recent advances in stereo SLAM have also been applied underwater. These methods use stereo cameras to produce depth maps from which environments can be recreated in great detail. Bonin-Font et al. [140] compared two stereo-vision-based SLAM methods, graph-SLAM and EKF-SLAM, for the real-time localization of moving AUVs in underwater ecosystems. Both methods use only 3D data derived from stereo vision. They conducted experiments in a controlled water environment and at sea, and the results showed that, under the same operating and environmental conditions, graph-SLAM outperforms its EKF counterpart. The SLAM poses estimated within this global framework, whose matching yields small cumulative errors, were then used to reconstruct a virtual 3D map of the surrounding area by placing contiguous stereo point clouds [141] at the corresponding SLAM poses.

One of the main problems of underwater volumetric SLAM is the refractive interface between the air inside the camera housing and the water outside. If refraction is not taken into account, it can severely distort both the individual camera images and the depth computed from stereo correspondences. These errors can accumulate and lead to larger errors in the final model. Servos et al. [142] generated dense, geometrically precise reconstructions of underwater environments by correcting refraction-induced image distortions. They used calibration images to compute the camera and housing refraction models offline and to generate nonlinear epipolar curves for stereo matching. Using the SAD block-matching algorithm, they created a stereo disparity map by performing this 1D search along the epipolar curve for each pixel in the reference image. The depth of each corresponding pixel pair was then determined by tracing the left and right pixel rays through the refractive interface and locating their intersection. They used ICP to register the generated point clouds directly. Finally, the depth maps were used to perform dense SLAM and produce a 3D model of the surroundings; combining ray tracing with refraction correction improves the map accuracy.
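ICP alternates between matching each point to its nearest neighbour in the reference cloud and solving for the rigid motion that best aligns the matched pairs. The NumPy sketch below is a minimal point-to-point variant that uses brute-force nearest neighbours and the SVD-based (Kabsch) least-squares alignment. It omits the outlier rejection and the spatial indexing that real underwater point clouds require, and it is not necessarily the variant used by Servos et al.

```python
import numpy as np


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t with dst ~= src @ R.T + t (Kabsch/SVD)."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)              # 3x3 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(H)
    sign = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, sign]) @ U.T
    return R, cd - R @ cs


def icp(src: np.ndarray, dst: np.ndarray, iters: int = 50, tol: float = 1e-12):
    """Point-to-point ICP aligning src (N x 3) to dst (M x 3); returns R, t, aligned src."""
    R_total, t_total = np.eye(3), np.zeros(3)
    cur = src.astype(float).copy()
    prev_err = np.inf
    for _ in range(iters):
        # Brute-force nearest neighbours: fine for small clouds; use a k-d tree otherwise.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(cur)), nn].mean())  # RMS matching distance
        if prev_err - err < tol:
            break
        prev_err = err
        R, t = best_rigid_transform(cur, dst[nn])
        cur = cur @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t   # accumulate the motion
    return R_total, t_total, cur
```

Because ICP only converges to the nearest local minimum, it needs a reasonable initial alignment, which in stereo SLAM typically comes from visual odometry or the previous pose estimate.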

The underwater environment is more challenging than the terrestrial one, and directly applying standard 3D reconstruction methods underwater often yields unsatisfactory results. Underwater 3D reconstruction therefore requires accurate and complete camera trajectories as a foundation for detailed reconstruction, and the accuracy of the sparse 3D reconstruction determines the quality of the subsequent dense reconstruction. Beall et al. [24] used stereo image pairs to detect salient features, compute their 3D locations and estimate the camera trajectory. SURF features were extracted from the left and right images of synchronized high-definition video acquired with a wide-baseline stereo setup. The trajectory and the 3D feature points served as an initial estimate that was then refined by smoothing and mapping. The 3D points were subsequently meshed using Delaunay triangulation, and the mesh was texture-mapped with the images. The system was used to reconstruct coral reefs in the Bahamas.
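A common 2.5D shortcut for meshing a seabed point cloud is to triangulate only the horizontal $(x, y)$ coordinates and reuse the resulting triangles for the 3D points. This is an assumption that works when the surface is roughly a height field viewed from above, and it is not necessarily how [24] or [143] built their meshes. The dependency-free Python sketch below implements the Bowyer–Watson incremental Delaunay algorithm. Each new point removes every triangle whose circumcircle contains it, and the resulting cavity is re-triangulated from the new point.

```python
from typing import Dict, List, Sequence, Tuple

Tri = Tuple[int, int, int]


def in_circumcircle(tri: Tri, p: Sequence[float], pts: Sequence[Sequence[float]]) -> bool:
    """True if p lies strictly inside the circumcircle of triangle tri."""
    (ax, ay), (bx, by), (cx, cy) = (pts[i] for i in tri)
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    r2 = (ax - ux) ** 2 + (ay - uy) ** 2
    return (p[0] - ux) ** 2 + (p[1] - uy) ** 2 < r2


def delaunay_2d(points: Sequence[Sequence[float]]) -> List[Tri]:
    """Bowyer-Watson Delaunay triangulation; returns index triples into points."""
    pts = [(float(x), float(y)) for x, y in points]
    n = len(pts)
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    mx, my = (min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2
    span = 20.0 * max(max(xs) - min(xs), max(ys) - min(ys), 1.0)
    # Super-triangle enclosing all points; its vertices get indices n, n+1, n+2.
    pts += [(mx - span, my - span), (mx + span, my - span), (mx, my + span)]
    tris: List[Tri] = [(n, n + 1, n + 2)]
    for i in range(n):
        bad = [t for t in tris if in_circumcircle(t, pts[i], pts)]
        # The cavity boundary consists of edges that belong to exactly one bad triangle.
        edges: Dict[Tuple[int, int], int] = {}
        for t in bad:
            for e in ((t[0], t[1]), (t[1], t[2]), (t[2], t[0])):
                key = (min(e), max(e))
                edges[key] = edges.get(key, 0) + 1
        bad_set = set(bad)
        tris = [t for t in tris if t not in bad_set]
        tris += [(a, b, i) for (a, b), count in edges.items() if count == 1]
    # Drop every triangle that still touches a super-triangle vertex.
    return [t for t in tris if max(t) < n]


def mesh_from_points(points3d: Sequence[Sequence[float]]) -> List[Tri]:
    """2.5D meshing: triangulate (x, y) and reuse the indices for the 3D points."""
    return delaunay_2d([(p[0], p[1]) for p in points3d])
```

The incremental algorithm is $O(n^2)$ in this naive form and sensitive to floating-point degeneracies, so library implementations with robust geometric predicates are preferable for real survey data.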

Nurtantio et al. [143] used a multi-view camera system to collect subsea footage along linear transects. After image pairs were manually extracted from the video clips, the SIFT method automatically extracted corresponding points from the stereo pairs. A Delaunay triangulation algorithm was then applied to the resulting set of 3D points to generate a surface reconstruction. The approach is robust, and the matching accuracy on underwater images exceeded 87%. However, the image pairs had to be extracted manually from the video clips and then preprocessed.
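SIFT correspondences are commonly filtered with Lowe's ratio test, which accepts a match only if the nearest descriptor is clearly closer than the second nearest. Whether [143] used this exact criterion is not stated, so the Python sketch below is only an illustration. The 0.8 threshold is a typical value, the descriptors are plain tuples, and the search is brute force.

```python
import math
from typing import List, Sequence, Tuple


def ratio_test_matches(desc_left: Sequence[Sequence[float]],
                       desc_right: Sequence[Sequence[float]],
                       ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Match descriptors using the nearest / second-nearest distance ratio test."""
    matches = []
    for i, d in enumerate(desc_left):
        # Sort candidate matches by Euclidean distance in descriptor space.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_right))
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            matches.append((i, dists[0][1]))
    return matches
```

Ambiguous descriptors, which are frequent on repetitive or low-contrast seabed textures, are rejected rather than matched to the wrong point. This trades the number of matches for reliability.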

Wu et al. [144] improved dense disparity-map estimation with a stereo-matching algorithm comprising a disparity-value search, per-pixel cost calculation, cumulative (integral) computation of the differences, window statistics and sub-pixel interpolation. In this fast stereo-matching algorithm, left–right (binocular) consistency checks and uniqueness verification were used to detect occlusions and unreliable matches and to eliminate false matches in the underwater vision system. The resulting disparity map, i.e., the relative depth data of the underwater SV system, was used to build the 3D surface model, which was further refined by enhancing image quality with homomorphic filtering combined with wavelet decomposition.
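Two of the steps named above can be sketched independently of the exact formulation in [144], which is not reproduced here. The first is a left–right consistency check: a left pixel $x$ with disparity $d$ should land on a right pixel $x - d$ whose own disparity is also about $d$. The second is parabolic sub-pixel interpolation, which fits a parabola through the matching costs at $d-1$, $d$, $d+1$ and returns the offset of its vertex, $\tfrac{1}{2}(c_{-1} - c_{+1})/(c_{-1} - 2c_0 + c_{+1})$. The Python sketch below assumes integer disparity maps stored as nested lists; the function names and the one-pixel tolerance are illustrative.

```python
from typing import List, Optional


def left_right_check(disp_left: List[List[int]], disp_right: List[List[int]],
                     max_diff: int = 1) -> List[List[Optional[int]]]:
    """Invalidate (None) left disparities that disagree with the right-view map.

    Mismatches usually indicate occlusions or false matches.
    """
    out = []
    for row_l, row_r in zip(disp_left, disp_right):
        new_row: List[Optional[int]] = []
        for x, d in enumerate(row_l):
            xr = x - d  # corresponding pixel in the right image
            ok = 0 <= xr < len(row_r) and abs(row_r[xr] - d) <= max_diff
            new_row.append(d if ok else None)
        out.append(new_row)
    return out


def subpixel_offset(c_prev: float, c_best: float, c_next: float) -> float:
    """Offset of the parabola vertex through costs at d-1, d, d+1 (range -0.5..0.5)."""
    denom = c_prev - 2.0 * c_best + c_next
    return 0.0 if denom <= 0 else 0.5 * (c_prev - c_next) / denom
```

For example, costs sampled from $(x - 0.3)^2$ at $-1, 0, 1$ give $1.69, 0.09, 0.49$, and the interpolated offset is exactly $0.3$.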

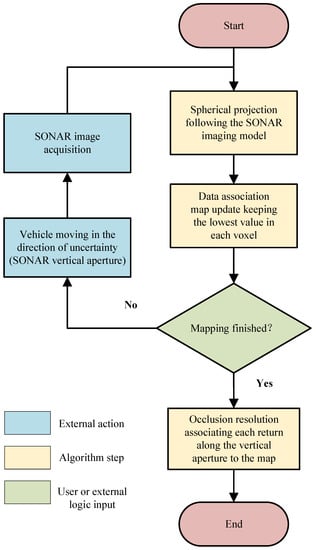

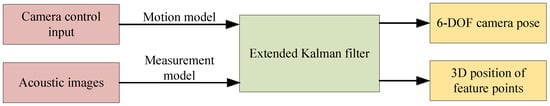

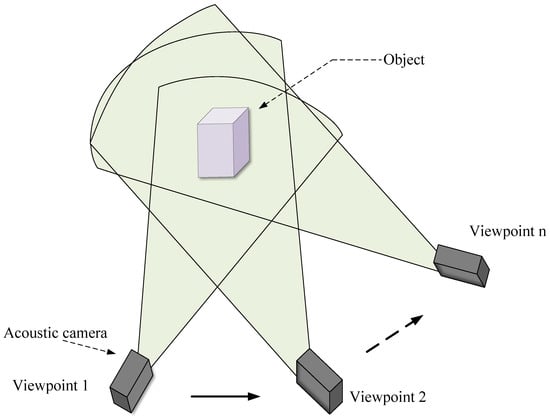

Zheng et al. [145] proposed an underwater binocular SV system for non-uniform illumination based on Zhang's camera-calibration method [146]. For stereo matching, building on research into SIFT image matching, they adopted a new method that combines feature-based and region-based matching and uses both edge and corner features. This method reduces matching time and improves matching accuracy. Finally, the 3D projection transformation matrix, solved with the least-squares method, was used to compute the 3D coordinates of each point in the underwater scene accurately.
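Least-squares recovery of 3D coordinates from two calibrated views is often done with the linear (DLT) triangulation method. Each view with a $3 \times 4$ projection matrix $P$ and an observed pixel $(u, v)$ contributes the equations $u\,\mathbf{p}_3^\top\mathbf{X} - \mathbf{p}_1^\top\mathbf{X} = 0$ and $v\,\mathbf{p}_3^\top\mathbf{X} - \mathbf{p}_2^\top\mathbf{X} = 0$, where $\mathbf{p}_k^\top$ is the $k$-th row of $P$. The homogeneous point $\mathbf{X}$ is then the right singular vector of the stacked system with the smallest singular value. The NumPy sketch below shows this common formulation, which may differ from the one used in [145]; the projection matrices in the usage example are hypothetical.

```python
import numpy as np


def triangulate_dlt(P1: np.ndarray, P2: np.ndarray, x1, x2) -> np.ndarray:
    """Linear least-squares triangulation of one point from two 3x4 projection matrices.

    x1, x2 are the (u, v) pixel coordinates of the same feature in views 1 and 2.
    """
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]                    # least-squares solution with ||X|| = 1
    return X[:3] / X[3]           # dehomogenize to Euclidean coordinates


# Hypothetical rectified pair: identical intrinsics, 0.12 m baseline along x.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.12], [0.0], [0.0]])])
```

The DLT minimizes an algebraic rather than a geometric error, so it is commonly used to initialize a nonlinear refinement that minimizes reprojection error. Underwater, the pinhole projection matrices themselves are only an approximation unless refraction has been modelled.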