DSE-NN: Discretized Spatial Encoding Neural Network for Ocean Temperature and Salinity Interpolation in the North Atlantic

Abstract

1. Introduction

2. Materials and Methods

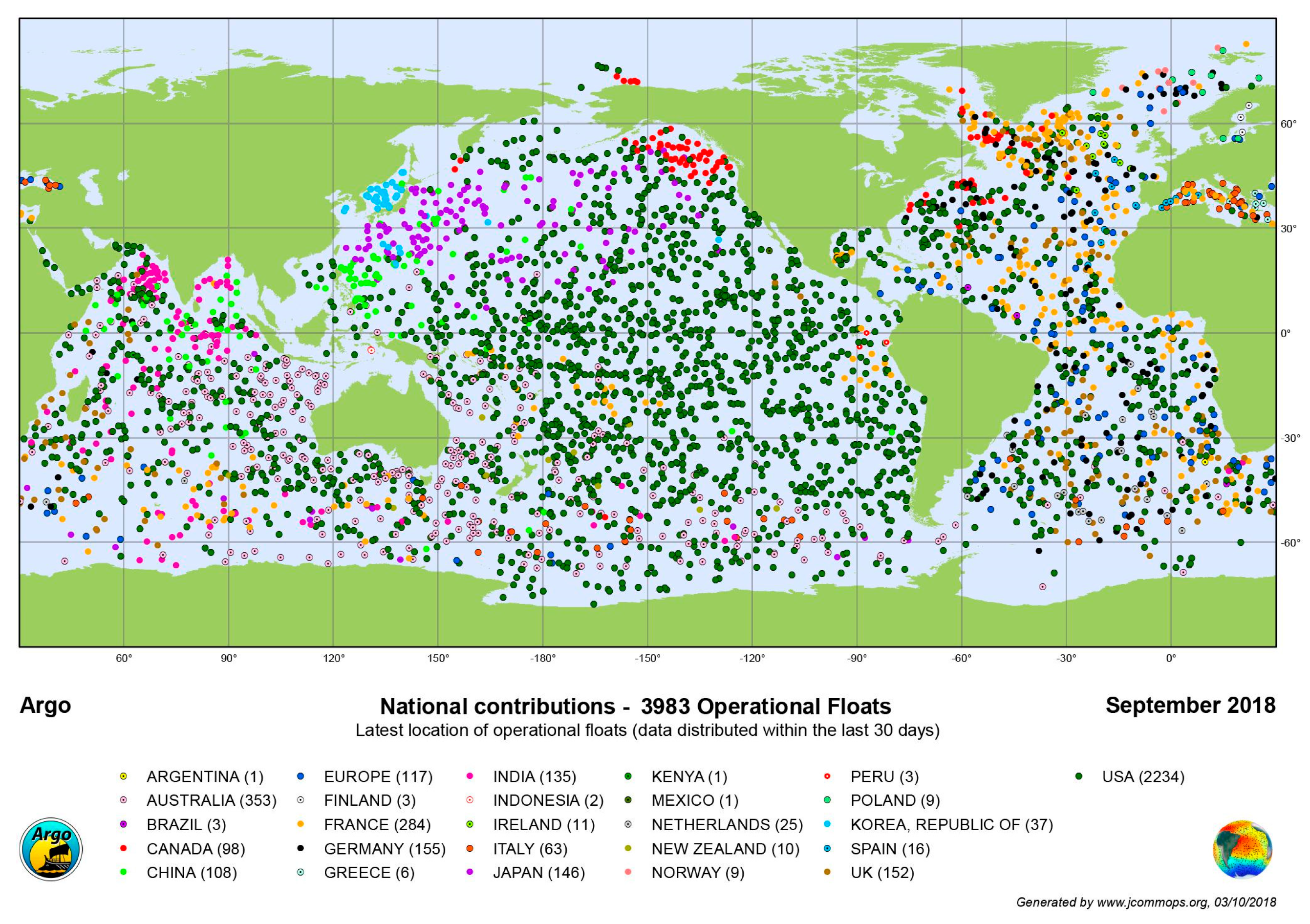

2.1. Data: In Situ Measurements (EN4.2.2)

2.2. Methods: DSE-NN Data Discretization Method

2.2.1. Spatial Discretization Method

2.2.2. Time Discretization Method

2.3. Method: Construction of a Deep Neural Network

2.3.1. Network Architecture

2.3.2. Weight Decay

2.3.3. Design of Loss Function and Data Testing Criteria

3. Results

3.1. RMSE Curves

3.2. North Atlantic Data Interpolation Demonstration

3.3. Comparison of DSE-NN and DNN Results

4. Results with Weight Decay

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, S.; Jia, W.; Zhang, W. DSE-NN: Discretized Spatial Encoding Neural Network for Ocean Temperature and Salinity Interpolation in the North Atlantic. J. Mar. Sci. Eng. 2024, 12, 1013. https://doi.org/10.3390/jmse12061013
