Photogrammetry of the Deep Seafloor from Archived Unmanned Submersible Exploration Dives

Abstract

1. Introduction

2. Materials and Methods

- Collect video imagery in the study area and then convert it to individual images.

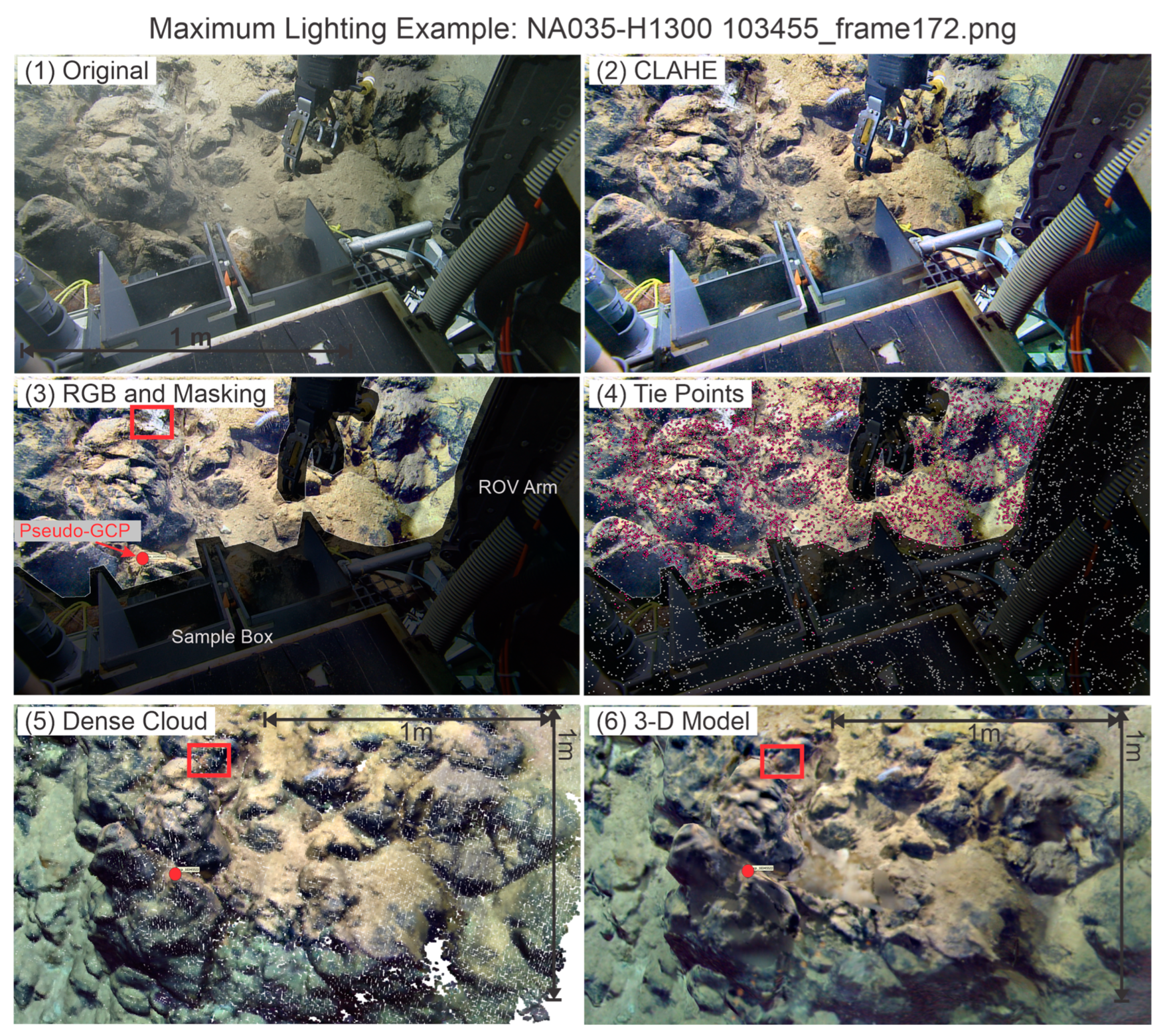

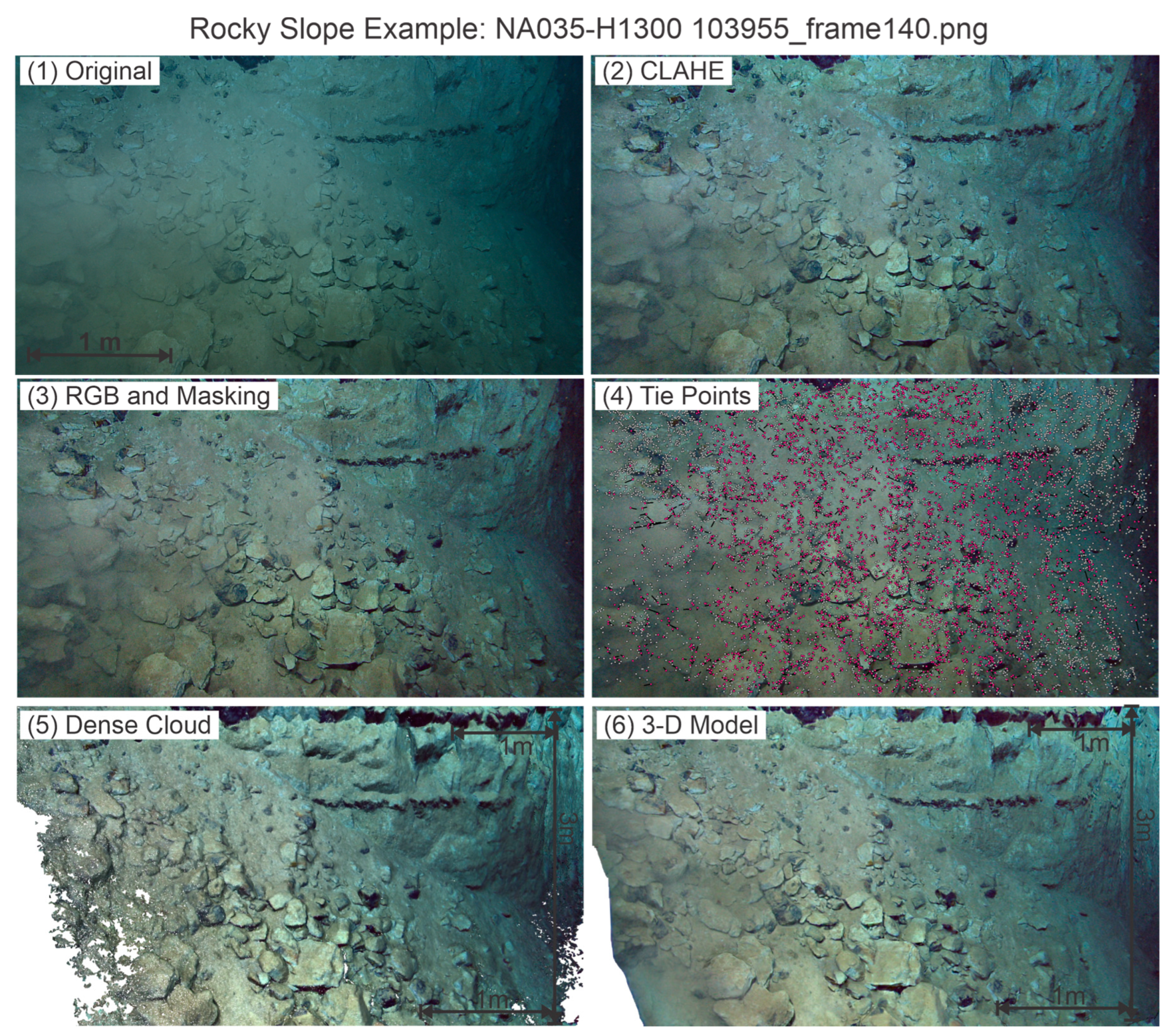

- Use masks to cover unwanted sections of images (example: edge of ROV).

- Align the images using photogrammetric software algorithms to obtain a tie point cloud.

- Convert the tie points and camera paths into a dense cloud which is the 3-D representation of the 3-D surface.

- From the dense cloud, export end products such as photomosaics or 3-D meshes with texture.

- Carry out scientific interpretation from these end products.
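The first workflow step, converting the video stream to images at one-second increments with each file named after its source video (as summarized in the processing table), can be sketched as below. This is an illustrative sketch rather than the authors' procedure (the table lists Metashape for this step); it assumes the frame rate and total frame count are already known, and the `<video stem>_<sample number>.jpg` naming scheme is hypothetical.

```python
from __future__ import annotations

from pathlib import Path


def frames_at_interval(video_name: str, fps: float, n_frames: int,
                       step_s: float = 1.0) -> list[tuple[int, str]]:
    """Return (frame_index, image_name) pairs, one every `step_s` seconds.

    The frame index for sample k is round(k * step_s * fps), so non-integer
    frame rates (e.g., 29.97 fps) do not accumulate drift over a long dive,
    as stepping by int(fps) frames would.
    """
    stem = Path(video_name).stem  # image names inherit the video file name
    pairs = []
    k = 0
    while True:
        idx = round(k * step_s * fps)
        if idx >= n_frames:
            break
        # Hypothetical naming scheme: <video stem>_<zero-padded sample number>.jpg
        pairs.append((idx, f"{stem}_{k:06d}.jpg"))
        k += 1
    return pairs


if __name__ == "__main__":
    for idx, name in frames_at_interval("DIVE_EXAMPLE.mov", 29.97, 150):
        print(idx, name)
```

Rounding each ideal frame position, instead of stepping by a fixed whole number of frames, keeps the extracted images aligned with elapsed time, which matters later when each image is matched to a navigation point.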

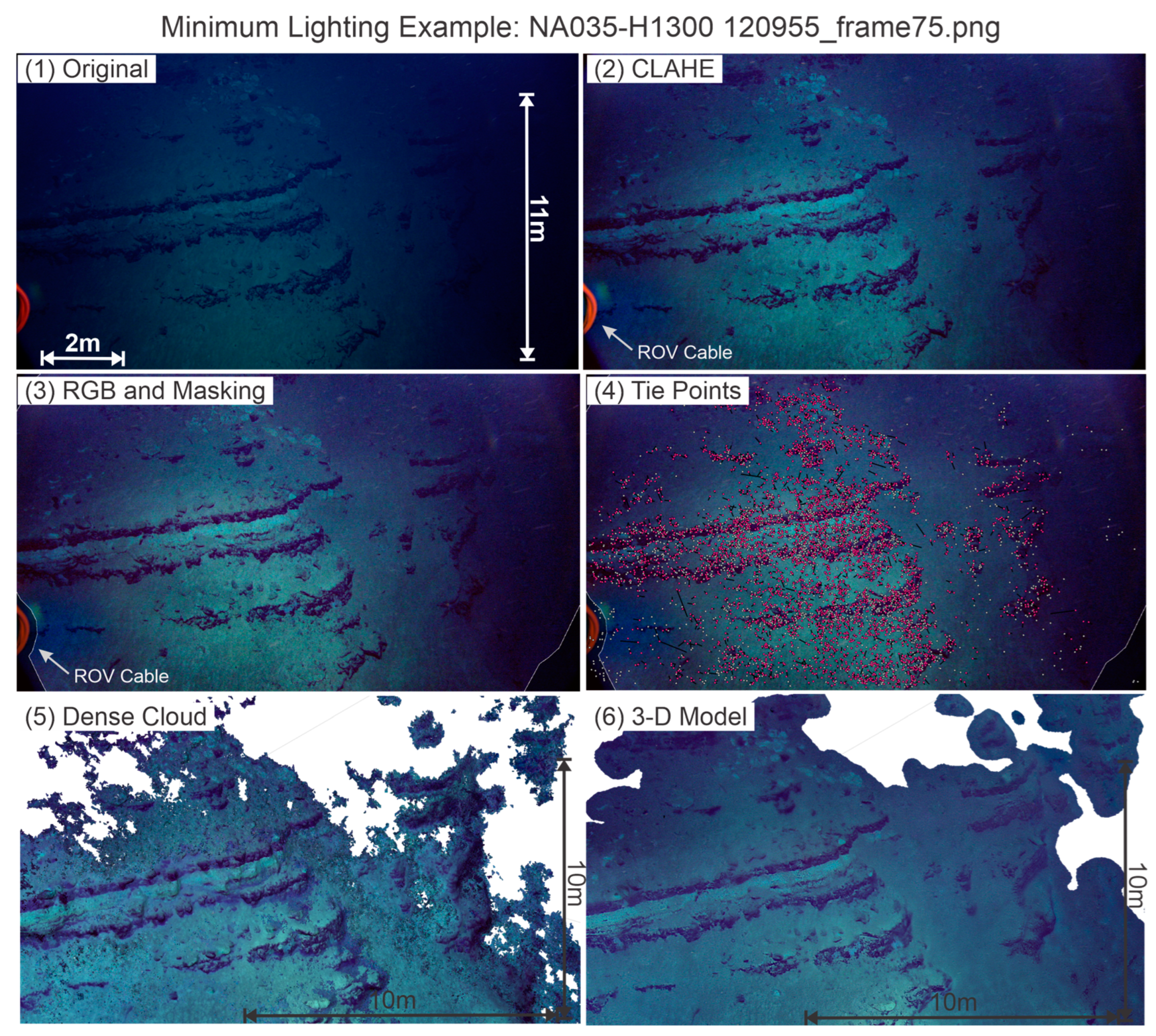

- Lighting: The distance that light can travel underwater is limited. In addition, seawater does not absorb light uniformly across the visible spectrum, so the apparent color of an object becomes increasingly distorted with its distance from the camera lens. When objects are hard to make out because of insufficient lighting or turbid water, the photogrammetric process fails (Section 3.2).

- The ROV system used in the dives that we processed comprises two vehicles, one hovering above the other. While this configuration may help illuminate the seafloor, it may also cast dark shadows that need to be masked in the images (Section 3.3).

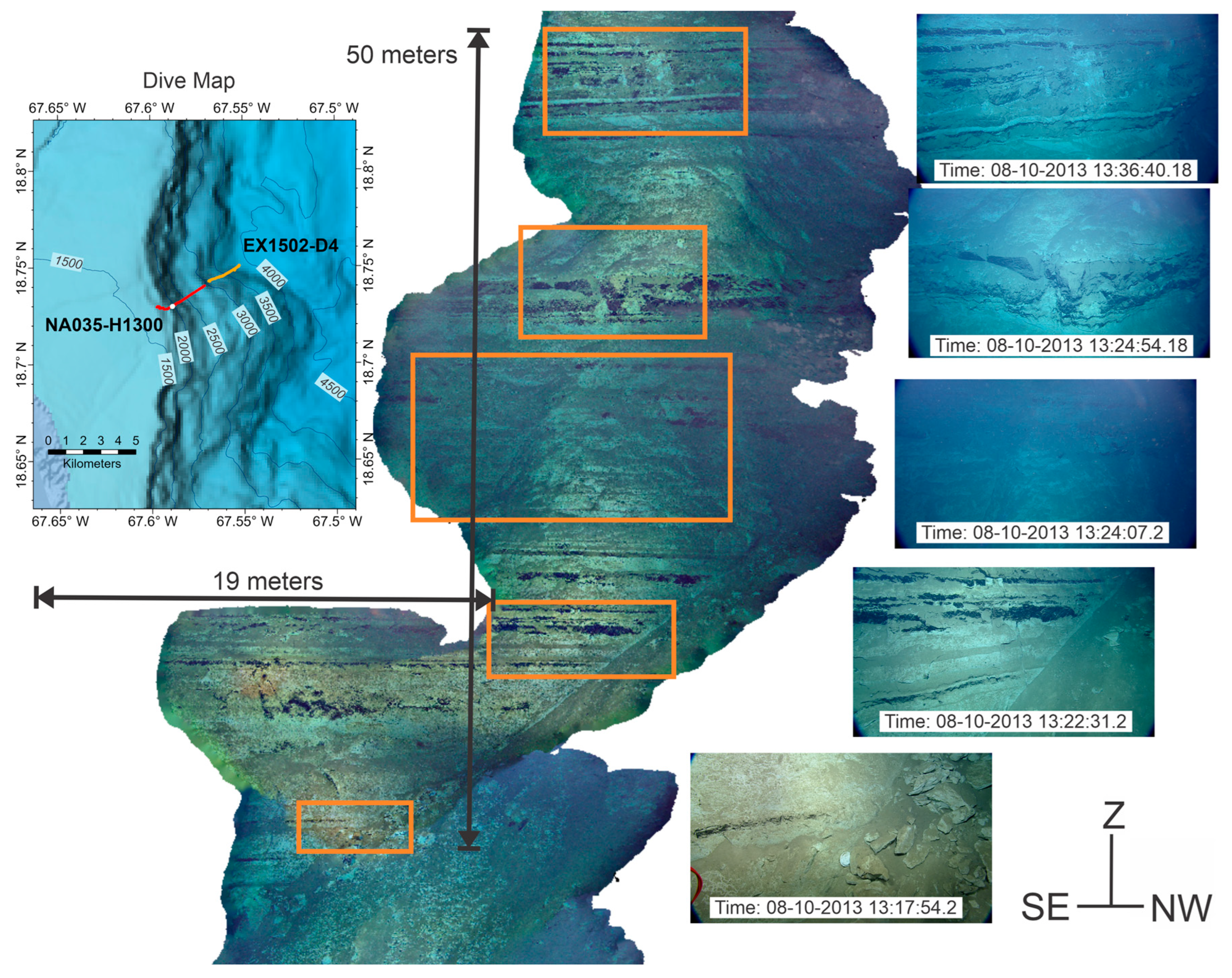

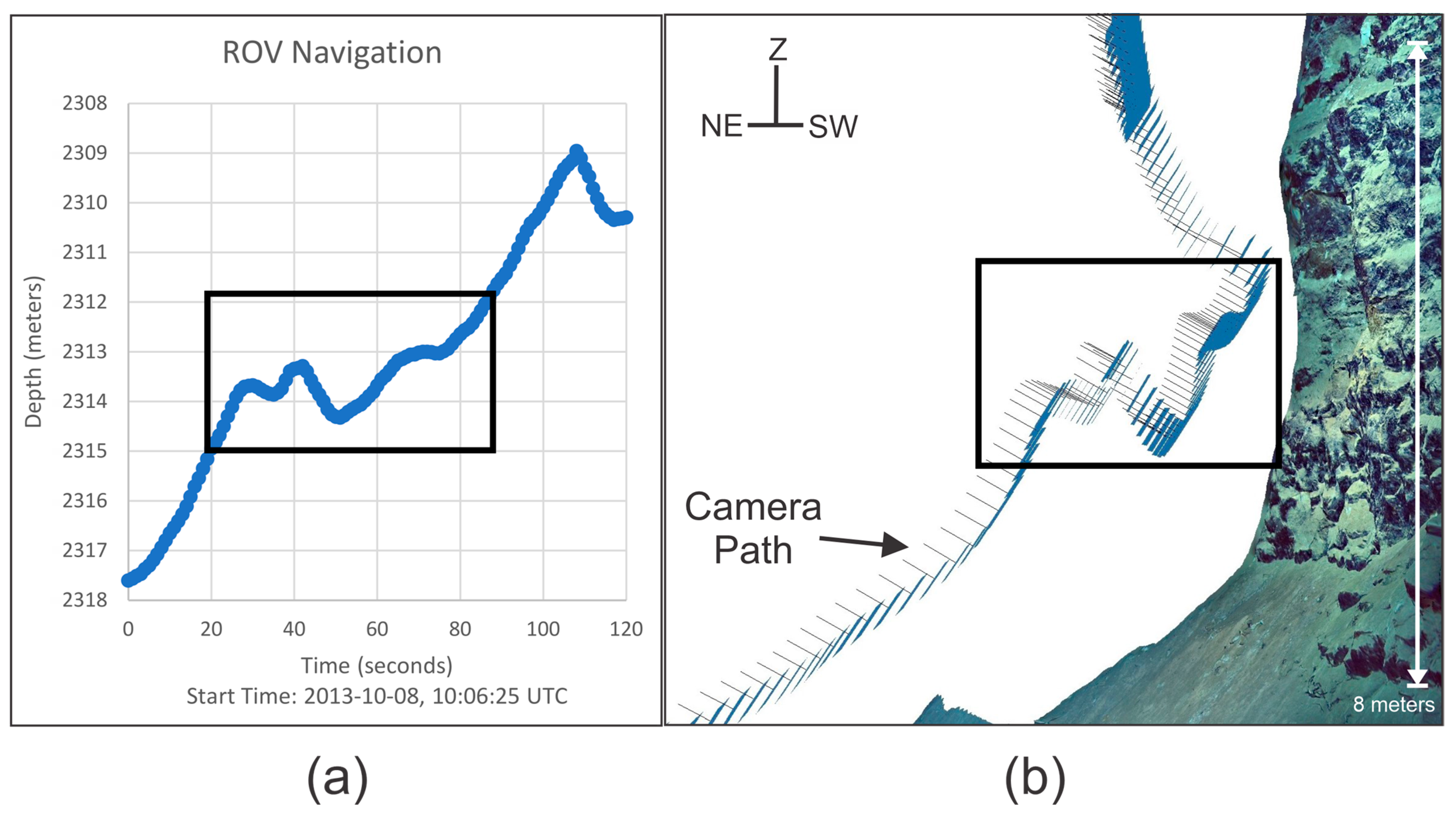

- Travel paths: ROV paths do not follow a pre-planned grid pattern; they meander along a long, circuitous route from one point of interest to the next. As the ROV travels, it does not always maintain a constant speed or height above the seafloor, and this height controls both the image resolution and the area of seafloor captured in each image. Video recorded while the ROV is stationary cannot be used in photogrammetry, because consecutive frames then show the same view (Section 3.4 and Section 3.5).

- Navigation: ROV navigation relies on acoustic ranging between the ROV and the ship. Acoustic navigation has limited depth resolution relative to electromagnetic ranging (e.g., GPS) and requires knowledge of the sound speed in the seawater column [22]. The ROV position was calculated via continuous acoustic ultra-short baseline (USBL) tracking [23]. At the time of data collection, the USBL position accuracy for the ROV Hercules was 1°, or better than 2% of the slant range [24], which translates to an accuracy of a few tens of meters in deep-water dives. Unlike in the terrestrial domain, the use of preexisting bathymetry for navigation in the deep sea is limited by its resolution (typically 10–25 m or more, depending on water depth), because the bathymetry is collected by acoustic sounding from surface ships (Section 3.7).

- Ground control points (GCPs): Ground control points are not placed on the seafloor during an ROV traverse, limiting our ability to accurately georeference the photogrammetry products (Section 3.7).

- Camera orientation: Unlike a camera mounted on a drone, whose angle is fixed with respect to the drone, the camera on the ROV tilts up or down and pans from side to side independently of the ROV roll, pitch, and yaw, and this camera motion is not recorded. The camera may be tilted at 45° at the start of the dive while the seafloor is flat, but its angle changes when the ROV moves up a steep incline or when it collects and views objects of interest. Camera orientation is not a problem by itself, but it becomes one when combined with a change in the focal length of the camera lens, because together they reduce the overlap between images (Section 3.7).

- Camera lens focal length: Zooming the HD camera is not equivalent to moving the ROV very close to an object, because zooming changes the angle of view at the edges of the image. For the photogrammetry software to work, the apparent focal length of the camera lens must be kept as constant as possible, especially when the camera angle is changing (Section 3.4).

- Synchronizing the video data with the navigation: The video we used does not have EXIF (Exchangeable Image File Format) metadata embedded in the files. EXIF metadata can record camera settings, date and time, and location. The only information available is the video frame rate (frames per second) and the start time of the video, which is included in the file name (Section 3.7).
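The USBL accuracy quoted under Navigation can be checked with a short calculation: with the small-angle approximation, an angular error of 1° at slant range R displaces the position by about R × 0.01745, slightly less than the 2% bound. The function below is an illustrative sketch, not part of the authors' processing, and the slant ranges in the example are arbitrary values.

```python
from __future__ import annotations

import math


def usbl_horizontal_error_m(slant_range_m: float,
                            angular_accuracy_deg: float = 1.0,
                            range_fraction: float = 0.02) -> tuple[float, float]:
    """Return (angle-based error, fraction-of-range bound), both in meters."""
    # Small-angle approximation: lateral offset ~ slant range x angle in radians.
    angle_based = slant_range_m * math.radians(angular_accuracy_deg)
    range_based = slant_range_m * range_fraction
    return angle_based, range_based


if __name__ == "__main__":
    # Example slant ranges only; actual ranges depend on water depth and ship offset.
    for r in (1000.0, 2000.0, 4000.0):
        a, b = usbl_horizontal_error_m(r)
        print(f"slant range {r:.0f} m: ~{a:.0f} m (1 deg), at most {b:.0f} m (2%)")
```

At a 2000 m slant range, for example, the two estimates are about 35 m and 40 m, consistent with an accuracy of a few tens of meters.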
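The travel-path, camera-orientation, and focal-length items above all affect how much seafloor each frame covers and how much consecutive frames overlap. The sketch below uses a simple pinhole-camera model and assumes a camera pointing straight down, which the oblique ROV camera generally is not; the sensor dimension, pixel count, focal lengths, height, and speed in the example are hypothetical values chosen for illustration.

```python
from __future__ import annotations

import math


def angle_of_view_deg(sensor_dim_mm: float, focal_length_mm: float) -> float:
    """Pinhole-camera angle of view along one sensor dimension."""
    # Zooming in increases the focal length and narrows the angle of view.
    return math.degrees(2.0 * math.atan(sensor_dim_mm / (2.0 * focal_length_mm)))


def nadir_footprint_m(height_m: float, angle_of_view: float) -> float:
    """Seafloor extent seen by a camera pointing straight down from height_m.

    Simplification: the ROV camera is often oblique (e.g., tilted 45 deg),
    which stretches and distorts the real footprint.
    """
    return 2.0 * height_m * math.tan(math.radians(angle_of_view) / 2.0)


def ground_sample_distance_m(footprint_m: float, pixels: int) -> float:
    """Seafloor distance covered by one pixel (smaller means finer resolution)."""
    return footprint_m / pixels


def forward_overlap(footprint_m: float, speed_m_s: float, interval_s: float = 1.0) -> float:
    """Fraction of the along-track footprint shared by two consecutive frames."""
    return max(0.0, 1.0 - speed_m_s * interval_s / footprint_m)


if __name__ == "__main__":
    # Hypothetical camera: 6 mm sensor dimension along track, 1080 pixels.
    for f_mm in (4.0, 12.0):  # wide angle vs. zoomed in
        aov = angle_of_view_deg(6.0, f_mm)
        fp = nadir_footprint_m(3.0, aov)  # 3 m above the seafloor
        print(f"f={f_mm} mm: footprint {fp:.2f} m, "
              f"GSD {ground_sample_distance_m(fp, 1080) * 1000:.1f} mm, "
              f"overlap at 0.5 m/s: {forward_overlap(fp, 0.5):.0%}")
```

In this example, tripling the focal length shrinks the footprint from 4.5 m to 1.5 m and lowers the overlap between one-second frames from about 89% to 67%, which illustrates why zooming combined with a moving camera can break the alignment.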
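Because the video files carry no EXIF metadata, the time of each frame has to be derived from the start time in the file name and the frame rate. A minimal sketch follows; it assumes a hypothetical file-name pattern (`YYYYMMDDTHHMMSS`) and that the embedded start time is in UTC, and both assumptions would need to be checked against the actual archive before the timestamps are matched to the navigation.

```python
from __future__ import annotations

import re
from datetime import datetime, timedelta, timezone

# Hypothetical file-name convention: the video start time appears as
# YYYYMMDDTHHMMSS (e.g., "DIVE01_20150420T153000.mov"). Adapt to the real names.
START_TIME_RE = re.compile(r"(\d{8}T\d{6})")


def video_start_time(file_name: str) -> datetime:
    """Parse the video start time from the file name, assumed to be UTC."""
    match = START_TIME_RE.search(file_name)
    if match is None:
        raise ValueError(f"no start time found in {file_name!r}")
    naive = datetime.strptime(match.group(1), "%Y%m%dT%H%M%S")
    return naive.replace(tzinfo=timezone.utc)


def frame_time(file_name: str, frame_index: int, fps: float) -> datetime:
    """Timestamp of a frame: start time plus elapsed time at the given frame rate."""
    return video_start_time(file_name) + timedelta(seconds=frame_index / fps)


if __name__ == "__main__":
    name = "DIVE01_20150420T153000.mov"
    for idx in (0, 30, 60):
        print(idx, frame_time(name, idx, 30.0).isoformat())
```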

3. Results

3.1. Video Stream to Imagery

3.2. Digital Image Processing to Improve Image Quality

3.3. Masking Unwanted Areas of the Images

3.4. Building Image Groups and Editing out Unnecessary Images

3.5. Running the Alignment

3.6. Continuing the Alignment Where It Failed

- (A) Alignment often fails in sandy, featureless sections, as shown in Figure 3. To resolve this issue, rebuild the camera path a few frames at a time until the image group is complete. There are usually enough points in the bottom two-thirds of the images to complete the alignment of the entire image group manually.

- (B) Sometimes there is a continuity jump within the image group that is too large for the software algorithm to match. In this situation, identify matching points or set up a minimum of four, but preferably six to eight, markers or points to guide the software in matching sections and continuing the camera path.

- (C) Turbid water blocks the view of the seafloor. These are the most difficult sections to align because the ROV is usually moving into or out of the sediment cloud, and points of reference are hard to find before and after the sediment cloud clears. A section with a sediment cloud can be treated as a continuity jump and processed as in item (B) above.

- (D) When the ROV camera zooms in or out, its focal length changes, but the software applies a single set of camera parameters where two different sets are needed. To resolve this issue, identify points of reference as in item (B) above and treat the section as a continuity jump.

- (E) Low lighting, even after contrast enhancement, may impede the software’s ability to pick features to match (see Figure 6). It may be necessary to identify dark or light features and use them as markers to build a camera path, following the continuity-jump procedure in item (B) above. More than four points may be needed, depending on how fast the ROV is moving. Once the images are sufficiently lit, the software can take over.

- (F) Poor alignment solutions can appear as unrealistic camera paths or tight spirals and can result from poor image overlap. The solution is to reset the camera alignment in those sections and rebuild the camera path a few frames at a time.
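The last item above notes that bad alignment solutions can appear as tight spirals in the camera path. One way to screen for them automatically, which is our illustrative assumption rather than a step described in this workflow, is to sum the heading changes along the estimated camera positions and flag short windows in which the path turns through a full circle. The window length is a hypothetical parameter, and duplicate positions from a stationary ROV should be removed first, because a zero-length step has no defined heading.

```python
from __future__ import annotations

import math


def cumulative_turn(path: list[tuple[float, float]]) -> float:
    """Sum of signed heading changes (radians) along a 2-D camera path."""
    total = 0.0
    for (x0, y0), (x1, y1), (x2, y2) in zip(path, path[1:], path[2:]):
        h1 = math.atan2(y1 - y0, x1 - x0)
        h2 = math.atan2(y2 - y1, x2 - x1)
        # Wrap the heading change into [-pi, pi) so crossing +/-pi is handled.
        total += (h2 - h1 + math.pi) % (2.0 * math.pi) - math.pi
    return total


def flag_spirals(path: list[tuple[float, float]], window: int = 10,
                 max_turn: float = 2.0 * math.pi) -> list[int]:
    """Start indices of windows in which the path turns through >= max_turn."""
    return [i for i in range(len(path) - window + 1)
            if abs(cumulative_turn(path[i:i + window])) >= max_turn]


if __name__ == "__main__":
    straight = [(float(i), 0.0) for i in range(20)]
    loop = [(math.cos(k * math.pi / 4), math.sin(k * math.pi / 4)) for k in range(20)]
    print(flag_spirals(straight), flag_spirals(loop, window=11))
```

Flagged windows would then be candidates for resetting the alignment and rebuilding the camera path a few frames at a time, as described above.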

3.7. Adding Navigation and Pseudo-GCPs and Calibrating the Camera Paths

3.8. Merging Image Groups and Optimization

3.9. Building the Dense Cloud from the Tie Points

3.10. Converting the Dense Cloud into Other Viewable Products for Collaboration

4. Discussion

4.1. Reliance on Image Quality Instead of Navigation to Build the Model

4.2. True Color of Objects Underwater

4.3. Suggested Improvements to ROV Operations in Deep Water

- (a) More reference points are needed to improve the navigation. Because it is impractical to place markers on the seafloor, we generated pseudo-GCP markers by having the ROV rest on the seafloor or hover in place for at least 15 s, but preferably for 60 s, and averaging the X, Y, and Z coordinate readings. Pseudo-GCPs should preferably be collected every 15 min along the transect.

- (b) Flying through sediment clouds should be avoided, because the navigation is not accurate enough to piece together the sections on either side of the cloud. It is preferable to wait until the sediment settles and visibility improves before moving again.

- (c) When chasing fish and other deep-sea fauna, the ROV should afterward return to a reference feature so that the video remains “continuous”.
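Suggestion (a) above describes pseudo-GCPs made by averaging the navigation readings while the ROV rests or hovers. A minimal sketch of that averaging follows; the input format (time in seconds plus X, Y, Z, sorted by time) is an assumption, the 15 s, 60 s, and 15 min thresholds are taken from the text, and the standard deviations are an added check that is not part of the described procedure.

```python
from __future__ import annotations

from statistics import fmean, pstdev

MIN_HOVER_S = 15.0        # minimum rest/hover duration stated in the text
PREFERRED_HOVER_S = 60.0  # preferred duration
MAX_GCP_GAP_S = 15 * 60   # preferred spacing of pseudo-GCPs along the transect


def pseudo_gcp(fixes: list[tuple[float, float, float, float]]) -> dict:
    """Average (t_s, x, y, z) navigation fixes, sorted by time, logged during a hover."""
    if len(fixes) < 2:
        raise ValueError("need at least two navigation fixes")
    t, x, y, z = zip(*fixes)
    duration = t[-1] - t[0]
    if duration < MIN_HOVER_S:
        raise ValueError(f"hover lasted {duration:.0f} s; need >= {MIN_HOVER_S:.0f} s")
    return {
        "x": fmean(x), "y": fmean(y), "z": fmean(z),
        # Spread of the readings: a rough check on the averaged position.
        "sx": pstdev(x), "sy": pstdev(y), "sz": pstdev(z),
        "duration_s": duration,
        "preferred": duration >= PREFERRED_HOVER_S,
    }


def gcp_gaps(times_s: list[float], max_gap_s: float = MAX_GCP_GAP_S) -> list[tuple[float, float]]:
    """Consecutive pseudo-GCP times that are farther apart than max_gap_s."""
    return [(a, b) for a, b in zip(times_s, times_s[1:]) if b - a > max_gap_s]
```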

4.4. Building a Project for a Visiting Summer Student

4.5. Measurable Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

References

1. Abdelaziz, M.; Elsayed, M. Underwater Photogrammetry Digital Surface Model (DSM) of the Submerged Site of the Ancient Lighthouse near Qaitbay Fort in Alexandria, Egypt. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W10, 1–8.

2. Boittiaux, C.; Dune, C.; Ferrera, M.; Arnaubec, A.; Marxer, R.; Matabos, M.; Van Audenhaege, L.; Hugel, V. Eiffel Tower: A Deep-Sea Underwater Dataset for Long-Term Visual Localization. Int. J. Robot. Res. 2023, 42, 689–699.

3. Barreyre, T.; Escartín, J.; Garcia, R.; Cannat, M.; Mittelstaedt, E.; Prados, R. Structure, Temporal Evolution, and Heat Flux Estimates from the Lucky Strike Deep-sea Hydrothermal Field Derived from Seafloor Image Mosaics. Geochem. Geophys. Geosystems 2012, 13, 2011GC003990.

4. Price, D.M.; Robert, K.; Callaway, A.; Lo Iacono, C.; Hall, R.A.; Huvenne, V.A.I. Using 3D Photogrammetry from ROV Video to Quantify Cold-Water Coral Reef Structural Complexity and Investigate Its Influence on Biodiversity and Community Assemblage. Coral Reefs 2019, 38, 1007–1021.

5. Escartín, J.; Leclerc, F.; Olive, J.-A.; Mevel, C.; Cannat, M.; Petersen, S.; Augustin, N.; Feuillet, N.; Deplus, C.; Bezos, A.; et al. First Direct Observation of Coseismic Slip and Seafloor Rupture along a Submarine Normal Fault and Implications for Fault Slip History. Earth Planet. Sci. Lett. 2016, 450, 96–107.

6. Reid, H.F.; Taber, S. The Porto Rico Earthquakes of October-November, 1918. Bull. Seismol. Soc. Am. 1919, 9, 95–127.

7. Mercado, A.; McCann, W. Numerical Simulation of the 1918 Puerto Rico Tsunami. Nat. Hazards 1998, 18, 57–76.

8. Doser, D.I.; Rodriguez, C.M.; Flores, C. Historical Earthquakes of the Puerto Rico–Virgin Islands Region (1915–1963). In Active Tectonics and Seismic Hazards of Puerto Rico, the Virgin Islands, and Offshore Areas; Mann, P., Ed.; Geological Society of America: Boulder, CO, USA, 2005; Volume 385, ISBN 978-0-8137-2385-3.

9. Hornbach, M.J.; Mondziel, S.A.; Grindlay, N.; Frohlich, C.; Mann, P. Did a Submarine Slide Trigger the 1918 Puerto Rico Tsunami? Sci. Tsunami Hazards 2008, 27, 22–31.

10. López-Venegas, A.M.; Ten Brink, U.S.; Geist, E.L. Submarine Landslide as the Source for the October 11, 1918 Mona Passage Tsunami: Observations and Modeling. Mar. Geol. 2008, 254, 35–46.

11. López-Venegas, A.M.; Horrillo, J.; Pampell-Manis, A.; Huérfano, V.; Mercado, A. Advanced Tsunami Numerical Simulations and Energy Considerations by Use of 3D–2D Coupled Models: The October 11, 1918, Mona Passage Tsunami. Pure Appl. Geophys. 2015, 172, 1679–1698.

12. Andrews, B.D.; ten Brink, U.S.; Danforth, W.W.; Chaytor, J.D.; Granja-Bruna, J.; Carbo-Gorosabel, A. Bathymetric Terrain Model of the Puerto Rico Trench and the Northeastern Caribbean Region for Marine Geological Investigations; U.S. Geological Survey: Reston, VA, USA, 2014; p. 1.

13. ten Brink, U.S.; Coleman, D.F.; Chaytor, J.; Demopoulos, A.W.J.; Armstrong, R.; Garcia-Moliner, G.; Raineault, N.; Andrews, B.; Chastain, R.; Rodrigue, K.; et al. Earthquake, Landslide, and Tsunami Hazards and Benthic Biology in the Greater Antilles. Oceanography 2014, 27, 34–35.

14. Kennedy, B.R.C.; Cantwell, K.; Sowers, D.; Cheadle, M.J.; McKenna, L.A. EX1502 Leg 3, Océano Profundo 2015: Exploring Puerto Rico’s Seamounts, Trenches, and Troughs: Expedition Report; U.S. National Oceanic and Atmospheric Administration, Office of Ocean Exploration and Research: Silver Spring, MD, USA, 2015.

15. Mondziel, S.; Grindlay, N.; Mann, P.; Escalona, A.; Abrams, L. Morphology, Structure, and Tectonic Evolution of the Mona Canyon (Northern Mona Passage) from Multibeam Bathymetry, Side-Scan Sonar, and Seismic Reflection Profiles: Mona canyon tectonics. Tectonics 2010, 29, TC2003.

16. ten Brink, U.; Danforth, W.; Polloni, C.; Andrews, B.; Llanes, P.; Smith, S.; Parker, E.; Uozumi, T. New Seafloor Map of the Puerto Rico Trench Helps Assess Earthquake and Tsunami Hazards. Eos Trans. Am. Geophys. Union 2004, 85, 349–354.

17. ten Brink, U.; Chaytor, J.; Flores, C.; Wei, Y.; Detmer, S.; Lucas, L.; Andrews, B.; Georgiopoulou, A. Seafloor Observations Eliminate a Landslide as the Source of the 1918 Puerto Rico Tsunami. Bull. Seismol. Soc. Am. 2023, 113, 268–280.

18. ten Brink, U.S.; Bialik, O.M.; Chaytor, J.D.; Flores, C.H.; Philips, M.P. Field Geology under the Sea with a Remotely Operated Vehicle: Mona Rift, Puerto Rico. Geosphere 2024, submitted. Available online: https://eartharxiv.org/repository/view/6838/ (accessed on 19 July 2024).

19. Arnaubec, A.; Ferrera, M.; Escartín, J.; Matabos, M.; Gracias, N.; Opderbecke, J. Underwater 3D Reconstruction from Video or Still Imagery: Matisse and 3DMetrics Processing and Exploitation Software. J. Mar. Sci. Eng. 2023, 11, 985.

20. Over, J.-S.R.; Ritchie, A.C.; Kranenburg, C.J.; Brown, J.A.; Buscombe, D.D.; Noble, T.; Sherwood, C.R.; Warrick, J.A.; Wernette, P.A. Processing Coastal Imagery with Agisoft Metashape Professional Edition, Version 1.6—Structure from Motion Workflow Documentation; U.S. Geological Survey: Reston, VA, USA, 2021.

21. Hansman, R.J.; Ring, U. Workflow: From Photo-Based 3-D Reconstruction of Remotely Piloted Aircraft Images to a 3-D Geological Model. Geosphere 2019, 15, 1393–1408.

22. Blain, M.; Lemieux, S.; Houde, R. Implementation of a ROV Navigation System Using Acoustic/Doppler Sensors and Kalman Filtering. In Proceedings of the Oceans 2003. Celebrating the Past... Teaming Toward the Future (IEEE Cat. No.03CH37492), San Diego, CA, USA, 22–26 September 2003; Volume 3, pp. 1255–1260.

23. Zieliński, A.; Zhou, L. Precision Acoustic Navigation for Remotely Operated Vehicles (ROV). Hydroacoustics 2005, 8, 255–264.

24. Bell, K.L.C.; Brennan, M.L.; Raineault, N.A. New Frontiers in Ocean Exploration: The E/V Nautilus 2013 Gulf of Mexico and Caribbean Field Season. Oceanography 2014, 27, 52.

25. Agisoft Metashape. Available online: https://www.agisoft.com/ (accessed on 22 February 2024).

26. Mangeruga, M.; Bruno, F.; Cozza, M.; Agrafiotis, P.; Skarlatos, D. Guidelines for Underwater Image Enhancement Based on Benchmarking of Different Methods. Remote Sens. 2018, 10, 1652.

27. OpenCV. Available online: https://opencv.org/ (accessed on 2 April 2024).

28. Istenič, K.; Gracias, N.; Arnaubec, A.; Escartín, J.; Garcia, R. Scale Accuracy Evaluation of Image-Based 3D Reconstruction Strategies Using Laser Photogrammetry. Remote Sens. 2019, 11, 2093.

29. CloudCompare—Open Source Project. Available online: https://www.cloudcompare.org/ (accessed on 22 February 2024).

30. Schütz, M. Potree: Rendering Large Point Clouds in Web Browsers. Master’s Thesis, Technische Universität Wien, Vienna, Austria, 2015.

31. Buckley, S.J.; Ringdal, K.; Naumann, N.; Dolva, B.; Kurz, T.H.; Howell, J.A.; Dewez, T.J.B. LIME: Software for 3-D Visualization, Interpretation, and Communication of Virtual Geoscience Models. Geosphere 2019, 15, 222–235.

32. Needle, M.D.; Mooc, J.; Akers, J.F.; Crider, J.G. The Structural Geology Query Toolkit for Digital 3D Models: Design Custom Immersive Virtual Field Experiences. J. Struct. Geol. 2022, 163, 104710.

33. Akkaynak, D.; Treibitz, T. Sea-Thru: A Method for Removing Water From Underwater Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1682–1691.

34. Detmer, S.; ten Brink, U.S.; Flores, C.H.; Chaytor, J.D.; Coleman, D.F. High Resolution 3D Geological Mapping Using Structure-from-Motion Photogrammetry in the Deep Ocean Bathyal Zone. In Proceedings of the AGU Fall Meeting 2021, New Orleans, LA, USA, 15 December 2021.

35. Lucas, L.C.; ten Brink, U.S.; Flores, C.H.; Chaytor, J.D. Geological Mapping in the Deep Ocean Using Structure-from-Motion Photogrammetry. In Proceedings of the AGU Fall Meeting 2022, Chicago, IL, USA, 12 December 2022.

36. Paskevich, V.F.; Wong, F.L.; O’Malley, J.J.; Stevenson, A.J.; Gutmacher, C.E. GLORIA Sidescan-Sonar Imagery for Parts of the U.S. Exclusive Economic Zone and Adjacent Areas; U.S. Geological Survey: Reston, VA, USA, 2011.

37. Triezenberg, P.J.; Hart, P.J.; Childs, J.R. National Archive of Marine Seismic Surveys (NAMSS): A USGS Data Website of Marine Seismic Reflection Data within the U.S. Exclusive Economic Zone (EEZ). 2016. Available online: https://www.usgs.gov/data/national-archive-marine-seismic-surveys-namss-a-usgs-data-website-marine-seismic-reflection (accessed on 22 February 2024).

| Process (Step) | Software Used | Section | Process Summary |
|---|---|---|---|
| Image Generation and Preparation (A and B) | Metashape Professional v. 1.7 & 1.8 | Section 3.1 | Convert the video stream to images at one-second increments. Each file should have a unique name based on the video file name. |
| | Python v. 3.8.5 & 3.8.12 | Section 3.2 | Generate an initial navigation file using the same one-second time increment to assign a unique navigation point to each image. Enhance the images using OpenCV's CLAHE (contrast-limited adaptive histogram equalization). |
| | Metashape Professional v. 1.7 & 1.8 | Section 3.3 and Section 3.4 | Set up image groups and delete duplicate images and camera zoom-in sections. Mask unnecessary parts of images. Apply RGB color correction where needed. Set brightness to 120%. Find pseudo-GCPs and mark them in the images. Add laser point references if available in any of the images and mark them. |
| Alignment: Build Tie Point Cloud (C) | Metashape Professional v. 1.7 & 1.8 | Section 3.5 and Section 3.6 | Run the alignment. If the software aligns successfully, continue to tie point cloud optimization. If the software fails to complete the alignment, identify why the alignment of the image group failed and, based on that reason, guide the software manually to complete the alignment. |
| Georeference | Metashape Professional v. 1.7 & 1.8 | Section 3.7 | Synchronize the camera paths to the ROV navigation on a small section of the dive. |
| | Python v. 3.8.5 & 3.8.12 | Section 3.7 | Generate corrected camera navigation points for use in the rest of the dive. |
| | Microsoft Excel 2021 | Section 3.7 | Generate the pseudo-GCP X, Y, Z coordinates from the corrected camera navigation. |
| Tie Point Cloud Optimization | Metashape Professional v. 1.7 & 1.8 | Section 3.8 | Build hour-long camera sections from the smaller image groups. Remove outliers. Insert corrected camera navigation and pseudo-GCPs. Optimize camera paths based on navigation and pseudo-GCPs. |
| Build Dense Cloud (D) | Metashape Professional v. 1.7 & 1.8 | Section 3.9 | Remove outliers. Export and archive the dense cloud as .las or .laz files. |
| Build Products (E and F) | Metashape Professional v. 1.7 & 1.8 | Section 3.10 | Export and archive meshes as .obj files. Keep meshes to no more than 100,000 vertices so that file sizes remain manageable. |
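The table above lists a Python step that generates an initial navigation file with one navigation point per image at the same one-second increment. The authors' scripts are not reproduced here; the sketch below assumes a simple approach, linear interpolation of time-sorted (t, X, Y, Z) navigation fixes to each image time, and produces rows that could be written to a camera reference file. Raw USBL fixes are often noisy, so smoothing before interpolation may be needed; that step is not shown.

```python
from __future__ import annotations

from bisect import bisect_left


def interpolate_nav(nav: list[tuple[float, float, float, float]], t: float) -> tuple[float, ...]:
    """Linearly interpolate (x, y, z) at time t from (t_s, x, y, z) fixes sorted by time."""
    times = [row[0] for row in nav]  # rebuilt on each call; acceptable for a sketch
    if not times[0] <= t <= times[-1]:
        raise ValueError(f"time {t} is outside the navigation record")
    i = bisect_left(times, t)
    if times[i] == t:
        return tuple(nav[i][1:])
    t0, *p0 = nav[i - 1]
    t1, *p1 = nav[i]
    w = (t - t0) / (t1 - t0)  # fractional position between the two fixes
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


def navigation_rows(image_times: list[tuple[str, float]], nav) -> list[tuple]:
    """One (image_name, x, y, z) row per image, e.g., for a camera reference file."""
    return [(name, *interpolate_nav(nav, t)) for name, t in image_times]
```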

| | ROV Estimated Distance to Seafloor | Red | Green | Blue |
|---|---|---|---|---|
| (A) | Very close, inches to a couple of meters from the target. Sand has a tan color. | 100% | 100% | 100% |
| (B) | Close, ~2–4 m. Sand is a little tan but has some blue bias. | 100% | 95% | 90% |
| (C) | Medium distance, ~4–8 m. All features in the image show a blue bias. | 100% | 90% | 85% |
| (D) | Far distance, ~8–14 m. Everything in the image is in various shades of blue. | 100% | 85–80% | 80–75% |
| (E) | Far away, >14 m. Everything in the image is dark blue. | 100% | 80% | 70% |
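The blue bias that grows with distance in the table above, and the distance-dependent color distortion noted under Lighting, can be illustrated with a simplified Beer–Lambert model. Assuming the light source sits next to the camera, so that light travels a distance $d$ to the object and $d$ back, and neglecting scattering, the intensity received in color channel $\lambda$ from an object of reflectance $\rho_\lambda$ is approximately

$$
I_\lambda(d) = I_{0,\lambda}\,\rho_\lambda\,e^{-2 c_\lambda d},
\qquad
\frac{I_R(d)}{I_B(d)} = \frac{I_{0,R}\,\rho_R}{I_{0,B}\,\rho_B}\,e^{-2 (c_R - c_B)\, d},
$$

where $c_\lambda$ is the attenuation coefficient of seawater for that channel. Because red light is absorbed much more strongly than blue in clear ocean water ($c_R > c_B$), the red-to-blue ratio decays exponentially with distance. Geometric spreading reduces all channels equally and cancels in the ratio. With the lights mounted on a second vehicle above the camera, the path lengths are not simply $2d$, so this model is only qualitative.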
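The table above gives red, green, and blue percentages for five ranges of ROV-to-seafloor distance. Assuming each percentage is a multiplicative gain applied to the corresponding 8-bit channel (our interpretation; the table does not state how the values are applied in the software), a per-pixel version can be sketched as follows. The class boundaries follow the approximate ranges in the table, and the linear change across class (D) is an assumption used to read its 85–80% and 80–75% ranges.

```python
from __future__ import annotations


def channel_gains(distance_m: float) -> tuple[float, float, float]:
    """(R, G, B) gains for an estimated ROV-to-seafloor distance, per the table."""
    if distance_m < 2.0:
        return 1.00, 1.00, 1.00  # (A) very close
    if distance_m < 4.0:
        return 1.00, 0.95, 0.90  # (B) close
    if distance_m < 8.0:
        return 1.00, 0.90, 0.85  # (C) medium distance
    if distance_m <= 14.0:
        # (D) the table gives ranges; assume a linear change from 8 m to 14 m.
        w = (distance_m - 8.0) / 6.0
        return 1.00, 0.85 - 0.05 * w, 0.80 - 0.05 * w
    return 1.00, 0.80, 0.70      # (E) far away


def correct_pixel(rgb: tuple[int, int, int], distance_m: float) -> tuple[int, ...]:
    """Scale an 8-bit (R, G, B) pixel by the distance-dependent gains, clamped to 255."""
    gains = channel_gains(distance_m)
    return tuple(min(255, round(c * g)) for c, g in zip(rgb, gains))
```

Since every gain is at most 100%, the correction lowers green and blue relative to red rather than boosting red, which avoids saturating the already weak red channel.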

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Flores, C.H.; ten Brink, U.S. Photogrammetry of the Deep Seafloor from Archived Unmanned Submersible Exploration Dives. J. Mar. Sci. Eng. 2024, 12, 1250. https://doi.org/10.3390/jmse12081250

Flores CH, ten Brink US. Photogrammetry of the Deep Seafloor from Archived Unmanned Submersible Exploration Dives. Journal of Marine Science and Engineering. 2024; 12(8):1250. https://doi.org/10.3390/jmse12081250

Chicago/Turabian Style: Flores, Claudia H., and Uri S. ten Brink. 2024. "Photogrammetry of the Deep Seafloor from Archived Unmanned Submersible Exploration Dives" Journal of Marine Science and Engineering 12, no. 8: 1250. https://doi.org/10.3390/jmse12081250