Ultra-High-Resolution Mapping of Posidonia oceanica (L.) Delile Meadows through Acoustic, Optical Data and Object-based Image Classification

Abstract

1. Introduction

1.1. Seagrass Habitat, Importance and Knowledge

1.2. Application of Multispectral Satellite Systems in Seagrass Habitat Mapping

1.3. Application of Multibeam Systems in Seagrass Habitat Mapping

1.4. Application of Unmanned Aerial Vehicles (UAVs) and Autonomous Surface Vehicles (ASVs) Systems in Seagrass Habitat Mapping

1.5. OBIA Classification Algorithms in Seagrass Habitat Mapping

2. Materials and Methods

2.1. Study Sites and Geomorphological Characterization

2.2. Remote Sensing

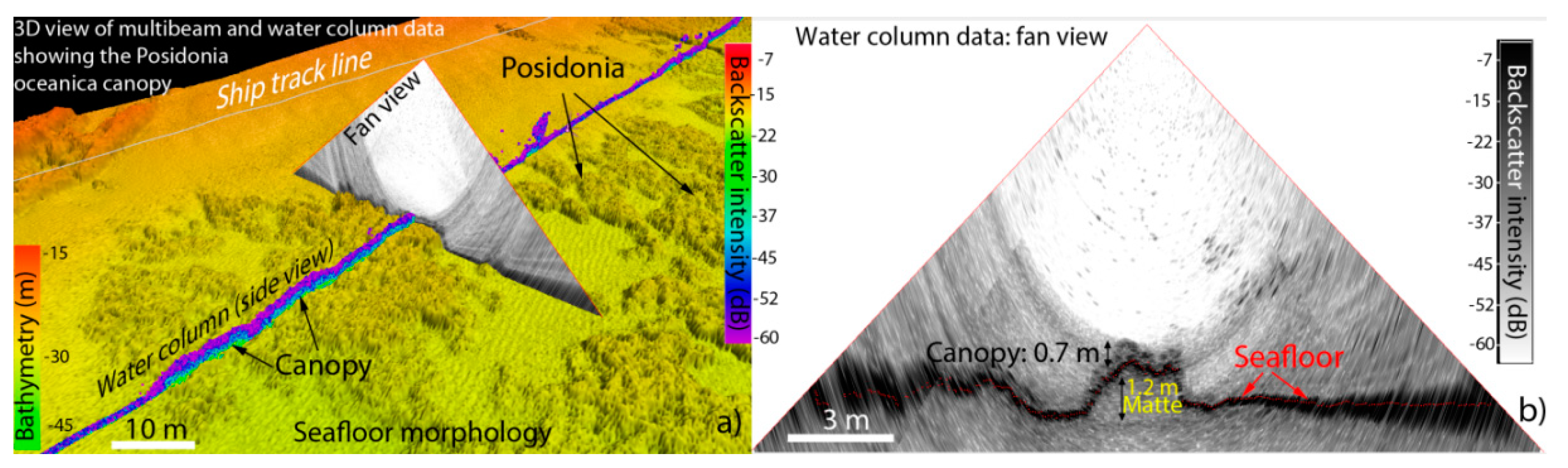

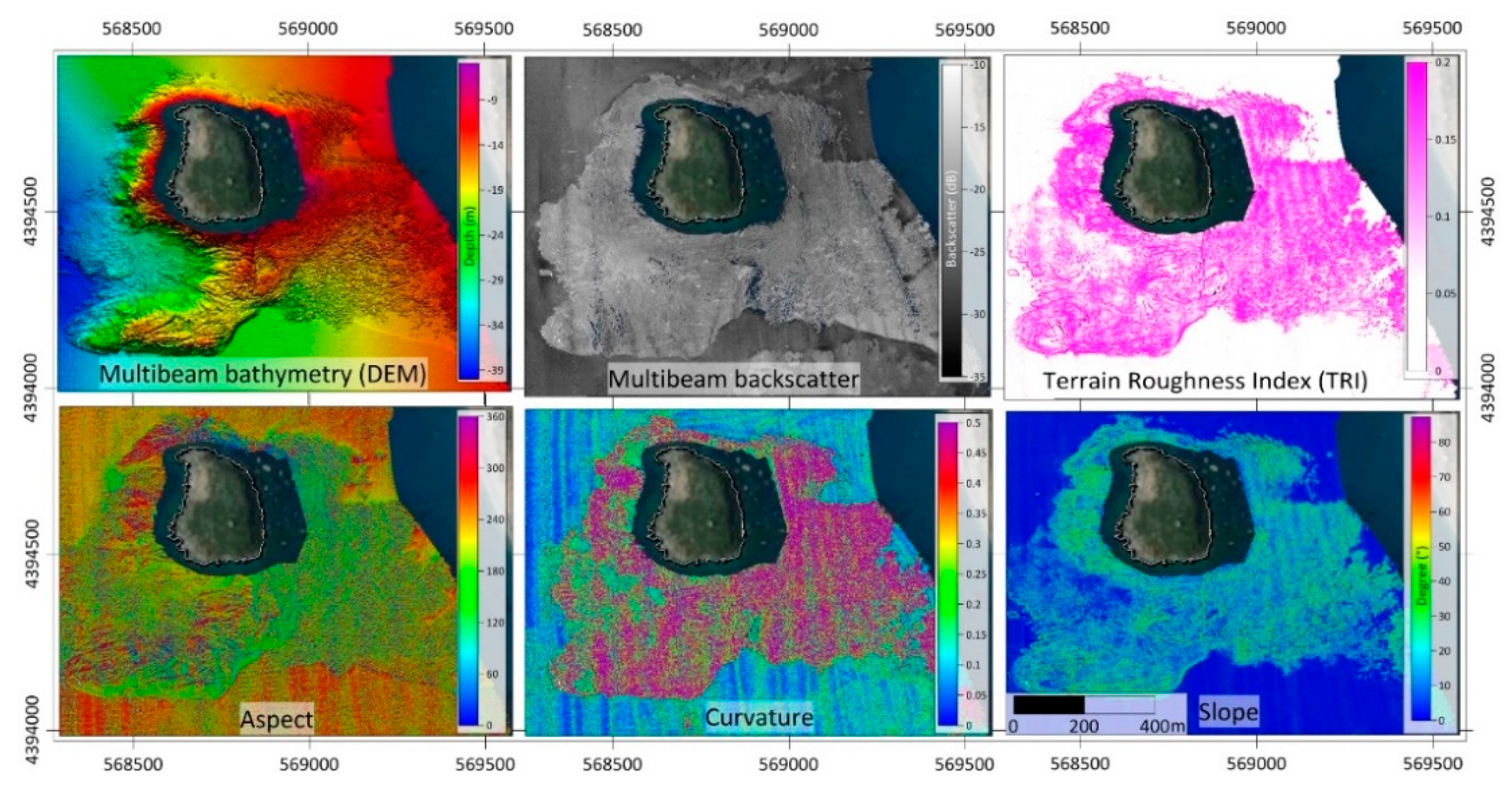

2.3. Multibeam Bathymetry

2.4. UAV Survey and Processing for Digital Terrain and Marine Model Generation

2.5. Image Ground-Truth Data

2.6. OBIA Segmentation and Classification

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Class | MBES | UTCS | ASV | UAV |
|---|---|---|---|---|
| P. oceanica (P) | 214 | 41 | 26 | 13 |
| Rock (R) | 95 | – | 6 | 50 |
| Mobile Fine sediment (FS) | 197 | 13 | – | – |
| Coarse sediment (CS) | 211 | 5 | – | – |
| Cystoseira (Cy) | – | – | – | 49 |
| Total | 717 | 59 | 32 | 112 |

| Source | Features | Resolution | Software | Area |
|---|---|---|---|---|
| Multibeam EM2040 | Backscatter/Bathymetry | 0.3 m | Caris HIPS and SIPS | shallow |
| Bathymetry | Curvature General | 0.5 m | SAGA-GIS | shallow |
| Bathymetry | Curvature Total | 0.5 m | SAGA-GIS | shallow |
| Bathymetry | Slope | 0.5 m | SAGA-GIS | shallow |
| Bathymetry | Aspect | 0.5 m | SAGA-GIS | shallow |
| Bathymetry | Northness | 0.5 m | SAGA-GIS | shallow |
| Bathymetry | Eastness | 0.5 m | SAGA-GIS | shallow |
| Bathymetry | Terrain Ruggedness Index (TRI) | 0.5 m | SAGA-GIS | shallow |
| Pléiades | Satellite image | 2 m | ERDAS IMAGINE | shallow |
| UTCS | Image Truth data | 0.001 m | AGISOFT METASHAPE | shallow |
| Parrot Anafi Work | Orthophoto | 0.02 m | PIX4D Mapper | very shallow |
| ASVs (DEVSS) | Image Truth data | 0.001 m | AGISOFT METASHAPE | very shallow |
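
The bathymetric derivatives listed in the table above were produced in SAGA-GIS. As a rough illustration of what these layers represent, the NumPy sketch below derives slope, aspect, northness, eastness and a terrain ruggedness index from a gridded depth array. The function name `terrain_features`, the aspect convention (downslope direction, clockwise from north, with rows running north to south) and the TRI definition (square root of the summed squared differences to the eight neighbours) are assumptions made for this example and may differ from the SAGA-GIS implementation; curvature is left out.

```python
import numpy as np


def terrain_features(z, cell_size):
    """Derive simple terrain layers from a 2D elevation/depth grid.

    Assumptions (illustrative only): row index increases southward,
    column index increases eastward, and cell_size is in the same
    units as z.
    """
    z = np.asarray(z, dtype=float)

    # Finite-difference gradients along rows (southward) and columns (eastward).
    dz_drow, dz_dcol = np.gradient(z, cell_size)

    # Slope: angle of the steepest gradient, in degrees.
    slope_deg = np.degrees(np.arctan(np.hypot(dz_dcol, dz_drow)))

    # Aspect: compass bearing of the downslope direction.
    # Downslope vector = -(dz/d_east, dz/d_north), with dz/d_north = -dz/d_row.
    # Aspect is not meaningful on perfectly flat cells.
    aspect_rad = np.arctan2(-dz_dcol, dz_drow)
    aspect_deg = np.degrees(aspect_rad) % 360.0

    # Northness / eastness remove the 0/360 degree discontinuity of aspect.
    northness = np.cos(aspect_rad)
    eastness = np.sin(aspect_rad)

    # TRI: root of the summed squared differences to the 8 neighbours.
    rows, cols = z.shape
    padded = np.pad(z, 1, mode="edge")  # repeat edge values at the border
    squared_sum = np.zeros_like(z)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue
            neighbour = padded[1 + dr:1 + dr + rows, 1 + dc:1 + dc + cols]
            squared_sum += (neighbour - z) ** 2
    tri = np.sqrt(squared_sum)

    return {
        "slope_deg": slope_deg,
        "aspect_deg": aspect_deg,
        "northness": northness,
        "eastness": eastness,
        "tri": tri,
    }


# Usage on a synthetic plane that rises toward the east (not survey data).
plane = np.tile(np.arange(5.0), (5, 1))
features = terrain_features(plane, cell_size=1.0)
print(features["slope_deg"][2, 2], features["aspect_deg"][2, 2])
```

Splitting aspect into northness and eastness is a common way to feed a circular variable to a classifier, since 1° and 359° would otherwise appear far apart.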

| Class | Shallow Water: Training Set | Shallow Water: Validation Set | Very Shallow Water: Training Set | Very Shallow Water: Validation Set |
|---|---|---|---|---|
| P. oceanica (P) | 123 | 122 | 23 | 26 |
| Rock (R) | 80 | 15 | 35 | 21 |
| Fine sediment (FS) | 207 | 170 | – | – |
| Coarse sediment (CS) | 44 | 5 | – | – |
| Cystoseira (Cy) | – | – | 31 | 18 |
| Total | 454 | 312 | 89 | 65 |

| Combinations (Data Source) | Metric | Decision Tree (DT) | Random Tree (RT) | k-NN |
|---|---|---|---|---|
| A: Pléiades image | Overall accuracy | 67.83% | 66.78% | 71.33% |
| A: Pléiades image | K | 0.48 | 0.47 | 0.48 |
| B: Pléiades, Backscatter, Bathymetry | Overall accuracy | 83.61% | 91.80% | 82.38% |
| B: Pléiades, Backscatter, Bathymetry | K | 0.73 | 0.85 | 0.69 |
| C: Pléiades, Backscatter, Bathymetry, Secondary features | Overall accuracy | 88.57% | 99.63% | 86.94% |
| C: Pléiades, Backscatter, Bathymetry, Secondary features | K | 0.80 | 0.99 | 0.77 |

| Combination | Class | DT: User’s accuracy | DT: Producer’s accuracy | RT: User’s accuracy | RT: Producer’s accuracy | k-NN: User’s accuracy | k-NN: Producer’s accuracy |
|---|---|---|---|---|---|---|---|
| A | (P) | 75.36% | 85.25% | 72.67% | 89.34% | 70.31% | 73.77% |
| A | (R) | 83.33% | 33.33% | 83.33% | 33.33% | 100.00% | 33.33% |
| A | (FS) | 84.21% | 55.56% | 87.80% | 50.00% | 75.35% | 74.31% |
| A | (CS) | 10.64% | 100.00% | 10.42% | 100.00% | 18.18% | 40.00% |
| B | (P) | 95.45% | 77.78% | 96.97% | 88.89% | 90.22% | 76.85% |
| B | (R) | 28.57% | 80.00% | 42.11% | 80.00% | 42.11% | 80.00% |
| B | (FS) | 100.00% | 88.43% | 100.00% | 95.04% | 89.92% | 88.43% |
| B | (CS) | 23.81% | 100.00% | 45.45% | 100.00% | 21.43% | 60.00% |
| C | (P) | 94.95% | 86.24% | 100.00% | 99.07% | 94.95% | 86.24% |
| C | (R) | 43.75% | 70.00% | 100.00% | 100.00% | 43.75% | 70.00% |
| C | (FS) | 94.74% | 89.26% | 99.31% | 100.00% | 94.74% | 89.26% |
| C | (CS) | 25.00% | 80.00% | 100.00% | 100.00% | 25.00% | 80.00% |
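
The comparison above evaluates three classifiers on the same training and validation objects. A minimal sketch of such a comparison loop is given below, using scikit-learn estimators as stand-ins: `DecisionTreeClassifier` for DT, `RandomForestClassifier` for RT and `KNeighborsClassifier` for k-NN. The choice of scikit-learn, the helper name `compare_classifiers`, the hyperparameters and the synthetic feature matrix are all assumptions for illustration; they are not the software, settings or data behind the reported figures.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, cohen_kappa_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier


def compare_classifiers(X_train, y_train, X_val, y_val, seed=0):
    """Fit three classifiers and score each on the validation set."""
    # Hypothetical settings; tune them for real data.
    models = {
        "DT": DecisionTreeClassifier(random_state=seed),
        "RT": RandomForestClassifier(n_estimators=100, random_state=seed),
        "k-NN": KNeighborsClassifier(n_neighbors=5),
    }
    results = {}
    for name, model in models.items():
        predicted = model.fit(X_train, y_train).predict(X_val)
        results[name] = {
            "overall_accuracy": accuracy_score(y_val, predicted),
            "kappa": cohen_kappa_score(y_val, predicted),
        }
    return results


# Synthetic stand-in for per-object features (e.g. spectral bands,
# backscatter, depth, terrain derivatives) with four classes.
X, y = make_classification(
    n_samples=400,
    n_features=8,
    n_informative=5,
    n_classes=4,
    random_state=0,
)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.4, stratify=y, random_state=0
)

for name, scores in compare_classifiers(X_train, y_train, X_val, y_val).items():
    print(f"{name}: OA={scores['overall_accuracy']:.2%}  K={scores['kappa']:.2f}")
```

To mimic the A, B and C combinations, the same function could be called on progressively wider feature matrices (imagery only, then imagery plus acoustic layers, then all layers plus secondary features).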

| Metric | Decision Tree (DT) | Random Tree (RT) | k-NN |
|---|---|---|---|
| Overall Accuracy | 74.6% | 77.78% | 95.24% |
| K | 0.61 | 0.65 | 0.92 |

| Class | DT: User’s accuracy | DT: Producer’s accuracy | RT: User’s accuracy | RT: Producer’s accuracy | k-NN: User’s accuracy | k-NN: Producer’s accuracy |
|---|---|---|---|---|---|---|
| (P) | 71% | 65.38% | 66.70% | 100% | 100.00% | 100.00% |
| (R) | 62.50% | 75% | 88% | 35.00% | 87.00% | 100.00% |
| (Cy) | 100.00% | 88.24% | 100% | 94.12% | 100.00% | 82% |
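
The overall accuracy, K (Cohen's kappa), user's accuracy and producer's accuracy reported in the tables are all derived from a confusion matrix of classified versus reference samples. The sketch below shows one way to compute them with NumPy. The function name `accuracy_report`, the orientation (rows are classified labels, columns are reference labels) and the 2×2 example matrix are assumptions for illustration; the example counts are hypothetical and are not taken from the study.

```python
import numpy as np


def accuracy_report(confusion):
    """Compute accuracy metrics from a square confusion matrix.

    Assumed layout: rows = classified (map) class, columns = reference
    class. Every class is assumed to have at least one classified and
    one reference sample, so no division by zero occurs.
    """
    cm = np.asarray(confusion, dtype=float)
    n = cm.sum()
    correct = np.diag(cm)
    row_totals = cm.sum(axis=1)  # samples assigned to each class
    col_totals = cm.sum(axis=0)  # reference samples in each class

    overall = correct.sum() / n
    # Agreement expected by chance, from the marginal totals.
    expected = (row_totals * col_totals).sum() / n ** 2
    kappa = (overall - expected) / (1.0 - expected)

    return {
        "overall_accuracy": overall,
        "kappa": kappa,
        # User's accuracy: share of each mapped class that is correct.
        "users_accuracy": correct / row_totals,
        # Producer's accuracy: share of each reference class that was found.
        "producers_accuracy": correct / col_totals,
    }


# Hypothetical two-class matrix (not data from the study).
example = [[40, 10],
           [5, 45]]
report = accuracy_report(example)
print(f"OA={report['overall_accuracy']:.2%}  K={report['kappa']:.2f}")
```

A class can combine a high producer's accuracy with a low user's accuracy when it has few reference samples but many objects are assigned to it, which is the pattern visible for coarse sediment (CS) in several of the shallow-water results.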

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rende, S.F.; Bosman, A.; Di Mento, R.; Bruno, F.; Lagudi, A.; Irving, A.D.; Dattola, L.; Giambattista, L.D.; Lanera, P.; Proietti, R.; et al. Ultra-High-Resolution Mapping of Posidonia oceanica (L.) Delile Meadows through Acoustic, Optical Data and Object-based Image Classification. J. Mar. Sci. Eng. 2020, 8, 647. https://doi.org/10.3390/jmse8090647

Rende, S. F., Bosman, A., Di Mento, R., Bruno, F., Lagudi, A., Irving, A. D., Dattola, L., Giambattista, L. D., Lanera, P., Proietti, R., Parlagreco, L., Stroobant, M., & Cellini, E. (2020). Ultra-High-Resolution Mapping of Posidonia oceanica (L.) Delile Meadows through Acoustic, Optical Data and Object-based Image Classification. Journal of Marine Science and Engineering, 8(9), 647. https://doi.org/10.3390/jmse8090647