1. Introduction

Currently, more people than ever have access to the Internet. Social changes have transformed both the way people live and how the world works. There has been a significant increase in the growth of the mobile market and advances in cloud computing technology are generating a huge amount of data, thereby implying unprecedented demands on energy consumption. This digital universe corresponds to 500 billion gigabytes of data [

1], and since 25% of this is from on-line data, this value may therefore increase greatly over time.

Data center power consumption has increased significantly over recent years influenced by the increasing demand for storage capacity and data processing [

2]. In 2013, data centers in the US consumed 91 billion kilowatt-hours of electricity [

3], which is expected to continue rising. Moreover, critical elements in the performance of daily tasks, such as social networks, e-commerce and data storage, also contribute to the rise in energy consumption across these systems.

Due to the growing awareness of issues such as climate change, pollution, and environmental degradation, the scientific and industrial communities have paid increasing attention to the impact of human activity. The environmental impact caused by the production of some energy sources is massive. For example, the carbon dioxide (CO

2) released by the use of coal, petroleum, natural gas and other similar energy sources contributes significantly to global warming. According to estimates, CO

2 emissions may increase between 9% and 27% by 2030, depending on which policies are enacted [

4].

The main focus of this paper is to propose an integrated strategy to evaluate the operational costs and estimate the environmental impacts (CO2 emissions) of data centers.

In this strategy, an artificial neural network (ANN) is applied to the energy flow model (EFM) metrics, which forecasts the values in the future, based on the consumption of data center electrical architectures and by considering different energy sources. To demonstrate the applicability of the proposed strategy, a case study compared costs and environmental impacts, according to the Brazilian, Chinese German and US energy mixes adopted. Autoregressive integrated moving average (ARIMA) was used to validate perceptron predictions. The results demonstrate that RNA predictions were within the 90% confidence interval of ARIMA.

The cost used in this work was computed according to the time of operation of the data center, how much energy was consumed in this period and its financial cost. The calculation of the environmental impact considered the amount and source of energy consumed by the environment.

Section 6 details these relationship.

A substantial amount of CO

2 emissions originates from the production of energy. In China, burning coal generates 65% of the electricity consumed [

5], which is a very high level of non-green power generation when compared with Brazil, where the level is 3%. In Germany, 14.7% of the energy produced comes from nuclear fission [

6], whereas in China this figure is only 1% [

7]. Many countries now require that polluting energy sources should be replaced by cleaner alternatives, such as solar, wind or hydro plants.

Data center infrastructures entail more redundant components that consume more and more electricity, influenced by the increasing demand for storage capacity and data processing. The concept of green data center is related to electricity consumption and CO

2 emissions, which depends on the utility power source adopted. For example, in Brazil, 73% of electrical power is derived from clean electricity generation [

8], whereas in USA 82.1% of generated electricity comes from petroleum, coal or gas [

9].

The main contributions of this work are as follows: considering the energy mix of the data centers to estimate the emission of carbon dioxide in the atmosphere through the energy consumption of the centers mentioned above; cost evaluation, availability and sustainability for the electric infrastructures of the data centers; and use of an artificial neural network (ANN) along with the energy flow model (EFM), to predict the consumption of energy in the next few months, based on the environment history.

In the present work, the power subsystem electrical flow is represented by an energy flow model (EFM) [

8,

10]. Both the proposed strategy and the EFM are supported by the Mercury tool [

11]. In addition to the EFM, Mercury offers support models for reliability block diagrams (RBD) [

12], Markov chains and stochastic Petri nets (SPN) [

13].

The paper is organized as follows:

Section 2 presents studies along the same line of research, highlighting the key differences.

Section 3 introduces the basic concepts of sustainability, ANN and ARIMA.

Section 4 describes the adopted methodology.

Section 5 describes the EFM.

Section 6 illustrates how to consider different energy mixes in the EFM.

Section 7 presents the artificial neural networks in EFM.

Section 8 presents a case study.

Section 9 concludes the paper.

2. Related Works

Over the last few years, considerable research has been conducted into energy consumption in data centers. This section presents studies related to this research field. Some papers have used neural networks and others have adopted strategies to reduce the energy consumption of data centers. To demonstrate the importance of the present study, we analyzed the environmental impact caused by the energy consumption of data centers and have proposed neural networks to forecast the impact over the following months based on information from previous data.

Zeng [

14] proposed the use of a hybrid model of ANN of back propagations with an evolutionary algorithm adapted to predict the consumption of natural gas. Zeng applied the evolutionary algorithm to find initial values of the synaptic weights and the hit values (limits) of the activation functions of the neural network to improve the prediction performance. The simulation results prove that the weight and bias optimization technique improves the accuracy of the prediction. Our study is different from Zeng because it uniquely considered the electric consumption history to establish the prediction, whereas Zeng considered multiple variables as the input of the prediction model, such as gross domestic product, population and import and export data.

Wang [

15] suggested using an effective and stable model of consumption prediction based on echo state network (ESN) by using a differential evolutionary algorithm to optimize the three essential parameters of an ESN, such as scale of the reservoir (N), connectivity rate (a) and spectral radius (p). In an ESN, the concealed layers are replaced by a dynamic reservoir of neurons. Besides, the prediction model of the electric power consumption is simulated in three situations: monthly consumption in the northeast of China between the period of January 2004 and April 2009, the yearly consumption of Taiwan from 1945 to 2003 and the monthly consumption in Zhengzhou from January 2012 to February 2017. The results of the experiments show that the proposed prediction model obtains superior results to the other models compared. Different from our proposal, this one includes the electric consumption of cities and not data centers, and it does not compare the environmental impact caused by energy consumption.

Wang [

16] combined a novel sparse adaboost with echo state network (ESN) and fruit fly optimization algorithm (FOA to enhance the forecasting accuracy of mid-term electricity consumption demand in China. The sparse adaboost (adaboostsp) is designed to overcome the instability and limited generalization ability of individual ESN, and reduce the computational complexity of adaboost through its sparse ensemble strategy. According to Wang [

16], the proposed adaboostsp-ESN approach presents high performance in two IEC forecasting applications in China. The ensemble computation cost is slashed by the well-designed sparse adaboost structure. Time lag effects of influence factors on IEC are explored. Different from our proposal, this work does not relate energy consumption of data centers with environmental impact.

Yaoyao [

17] presented a prediction method of consumption that combines the LASSO regressions with the Quantile Regression Neural Networks (LASSO-QRNN). The LASSO regression is used to produces high-quality attributes and to reduce the data dimensionality effectively. The suggested method is compared to state-of-the-art methods, including Radial Bias Function (RBF), Back-propagation (BP), QR and NLQR. Case studies are carried out using electricity consumption data from the Guangdong, China and California, USA. From the analysis of the results, the suggested method shows superior accuracy to the other methods and reduced data dimensionality. This work also refers neither to data centers nor issues related to sustainability.

The next works are related to the issues of sustainability and energy consumption in data centers, however they also do not fill the gaps mentioned above.

The goal is to provide an integrated workload management system for data centers that takes advantage of the efficiency gains possible by shifting demand in a way that exploits time variations in electricity price, the availability of renewable energy, and the efficiency of cooling. However, the focus is on the low level of the environment (load schedulers). Our proposed method enables conducting a global analysis of the energy consumption.

Reddy [

18] presented various metrics relating to a data center and classification based on the different core dimensions of data center operations. They defined the core dimensions of data center operations as follows: energy efficiency, cooling, greenness, performance, thermal and air management, network, security, storage, and financial impact. They presented a taxonomy of state-of-the-art metrics used in the data center industry, which is useful for the researchers and practitioners working on monitoring and improving the energy efficiency of data centers. Our proposal method uses one of the metrics pointed out by Reddy (efficiency of energy use) and proposes a new metric, considering an integrated analysis of the energy cost and CO

2 emissions.

Dandres [

19] affirmed that cloud computing technology enables real-time load migration to a data center in the region where the greenhouse gas (GHG) emissions per kWh are the lowest. He proposed a novel approach to minimize GHG emissions cloud computing relying on distributed data centers. In the case of the GJG, the GHG emission factor for the electric grid is the same as that of the electric grid. Results show that load migrations make it possible to minimize marginal GHG emissions from the cloud computing service. Our proposed method may be used as support for decision making regarding the need for service migration, thus complementing this research.

5. Energy Flow Model (EFM)

The EFM represents the energy flow between the components of a cooling or power architecture, considering the respective efficiency and energy that each component is able to support (cooling) or provide (power). The EFM is represented by a directed acyclic graph in which components of the architecture are modeled as vertices and the respective connections correspond to edges [

8].

The following defines the EFM: , where:

represents the set of nodes (i.e., the components), in which is the set of source nodes, is the set of target nodes and denotes the set of internal nodes, .

() ∪ () ∪ () = {(a,b) ∣ a ≠ b} denotes the set of edges (i.e., the component connections).

is a function that assigns weights to the edges (the value assigned to the edge (j and k) is adopted for distributing the energy assigned to the node, j, to the node, k, according to the ratio, w(j,k)/ w(j, i), where is the set of output nodes of j).

is a function that assigns to each node the heat to be extracted (considering cooling models) or the energy to be supplied (regarding power models).

is a function that assigns each node with the respective maximum energy capacity.

is a function that assigns each node (a node represents a component) with its retail price.

is a function that assigns each node with the energetic efficiency.

Mercury engine provides support for EFM and an example is depicted in

Figure 2. The rounded rectangles are the type of equipment, and the labels represent each item. The edges have weights that are used to direct the energy that flows through the components. For the sake of simplicity, the graphical representation of EFM hides the default weight 1.

TargetPoint1 and SourcePoint1 represent the IT power demanded and the power supply, respectively. The weights present on the edges, (0.7 and 0.3) are used to direct the energy flow through the components. In other words, UPS1 (Uninterrupted Power Supply) is responsible for providing 70% and UPS2 for 30% of the energy demanded by the IT system.

The EFM is employed to compute the overall energy required to provide the necessary energy at the target point. Assuming 100 kW as the demanded energy for the data center computer room, this value is thus associated to the TargetPoint1. Considering the efficiency of STS1 (Static Transfer Switch) is 95%, the electrical power that the STS component receives is 105.26 kW.

A similar strategy is adopted for components UPS1 and UPS2, however, now, dividing the flow accord to the associated edge weights, 70% (73.68 kW) for UPS1 and 30% (31.27 kW) for UPS2. Thus, the UPS1 needs 77.55 kW, considering 95% efficiency, and UPS2 needs 34.74, considering 90% electrical efficiency. The Source Point 1 accumulates the total flow (112.29 kW).

It is important to stress that the edge weights are defined by the model designer, and there is no guarantee that designers allocate the best values for the distribution, and the outcome may increase power consumption.

For more details about EFM, the reader is redirected to [

27].

6. Considering Energy Mix in the EFM

Nowadays, there are different energy sources, such as solar, geothermal, thermoelectric, biomass, hydrogen fuel, tidal, ethanol, blue or melanin. However, in this study, we only considered the most frequently used: wind, coal, hydroelectric, nuclear and oil. Furthermore, this study also considered the amount of emissions according to the energy source used.

The inclusion of the energy mix in the EFM is proposed for a more detailed analysis of the operational costs and the estimation of emissions in the atmosphere, according to the energy consumed. By considering the energy mix in the EFM, it is possible to represent more details of the electrical infrastructures of a real-world data center. Data center designers may consider more than one energy source, which represents the energy mix of the utility. Additionally, this EFM extension allows operational costs and the environmental impacts of the electricity consumption to be calculated, as well as the emissions from the adopted energy mix.

Table 1 presents the relation between the source used to produce the energy and the amount of

emitted by each. These values were obtained from [

8]. This new feature is supported by the Mercury tool, in which the designer only needs to inform the amount of energy consumed by each source and the corresponding

emissions in the atmosphere will be calculated.

To compute the amount of

emissions, the percentage of each energy source is multiplied by its factor of aggression (see

Table 1). This factor shows the amount of

that is provided by each energy source. This process is described by the following equation:

where

i is the energy source (wind, coal, hydroelectric, nuclear or oil),

is the percentage of energy source and

is the factor of aggression to the environment.

In this study, the operational cost was calculated based on the data center operation period, the energy consumed, the cost of energy and the data center availability. Equation (

4) denotes the operational cost:

where

i is the energy source (wind, coal, hydroelectric, nuclear or oil),

is the percentage of power supply for the source i,

is the energy cost of power energy unit, T is the considered time period, and A is the system availability.

is the energy percentage that continues to be consumed when the system fails.

7. Applying ANNs to the EFM

Several forecasting methods have been developed over the last decades. Various methods, e.g., regression models, neural networks, fuzzy logic, expert systems, and statistical learning algorithms, are commonly used for forecasting [

28]. The development, improvement, and investigation of appropriate tools have led to the development of more accurate forecasting techniques. In this work, we integrated an ANN into the energy flow model.

An EFM with artificial neural networks (ANN) could expand the horizons of the modeling strategy previously adopted in [

8,

10,

27]. In addition to computing exergy consumption, operational costs, input power and PUE, this new approach enables the values of these metrics to be forecast, based on a historical series. The multi-layer perceptron (MLP) [

29] is adopted in this method. The MLP basically consists of a layer of nodes (input sources), one or more layers of hidden processor or computational nodes (neurons) and an output layer also composed of computational nodes. The layer composed of hidden neurons is called the hidden layer, as no access to the input or output of this layer may be reached.

In

Figure 3, it is possible to identify three basic elements of the neural model:

A set of synapses, each characterized by a weigh. Specifically, a signal in the input of the synapse j connected to the neuron k is multiplied by the synaptic weigh . It is important to notice the way how the indexes of the synaptic weigh are written. The first index refers to the neuron under analysis and the second one refers to the terminal input of the synapse which the weight refers to.

An adder to add two input signals, weighed by the respective synapses of the neuron. These operations were implemented in the Mercury tool and constitute a linear combiner.

An activation function to limit the output range of a neuron. Typically, the normalized interval of the output range of a neuron is written as a closed unitary interval [0, 1] or alternatively [−1, 1].

The bias, represented by , has the effect of increasing or reducing the input value of the activation function, depending if it is positive or negative, respectively.

In terms of mathematical concepts, it is possible to describe a neuron k by writing the following pair of equations:

where

,

, …,

are the input signals;

,

, …,

are the synaptic weighs of the neuron k;

(not shown in

Figure 3) is the output of the linear combiner due to input signals;

is the bias, which has the effect of increasing or reducing the polarization in the activation function;

(.) is the activation function of the neuron; and

is the output signal of the neuron. The use of the bias

has the function of applying some transformation to the output

of the linear combiner, as shown in:

The activation function, represented by

, defines the output of a neuron in terms of the induced local field

. There are three basic types of activation functions: threshold function, linear part function and sigmoid function [

30]. In this study, a sigmoidal function was used, being the most common in the artificial neural network construction. Its graph is similar to the letter s. It is defined as a strictly rising function, which displays an adequate balance between linear and non-linear behavior. The sigmoidal function implemented in the Mercury was the logistics, defined by Equation (

8).

where

is the inclination parameter of the sigmoidal function.

Figure 4 represents the sigmoidal function with a variation of

.

The number of neurons, layers, degree of connectivity and the presence or absence of retro propagation connections define the topology of an artificial neural network [

29]. This step is very important because it directly defines the processing power of the network. In light of modern knowledge, it is not possible to determine the exact number of neurons and the number of layers for general problems. It is imperative that the network does not suffer overfitting or underfitting, depending on the excess or lack of layers/neurons [

29]. Thus, the number of neurons and layers must be extensively tested, with several configurations of neural networks, containing the same training tables. The final values used are defined in the validation phase. For example, a network is created and trained with a set of parameters. If the results are good, it is used as the final model, otherwise the network is trained again with other values.

ANN was implemented in the Mercury tool and is composed of five phases: load and normalize data, create an ANN, ANN training, forecasting and graph.

Figure 5 presents the user view, where it is possible to create, train, forecast and visualize ANN in the Mercury tool.

Load and normalize data: Mercury has been configured to accept spreadsheets in odt, xls and csv formats, with three columns (year, month and power consumption). Thus, users may upload a file with the monthly levels of power consumption from the previous years of a data center. These data are read and stored by the engine to use during the following phases.

Create an artificial neural network: In this phase, the basic parameters for creating the artificial neural network are set (e.g., number of neurons in the input layer, number of neurons in the first hidden layer and the number of neurons in the output layer). Empirical testing with the MLP backpropagation neural network [

31] does not demonstrate a significant advantage in the use of two hidden layers rather than one for small problems. Therefore, most problems consider only one hidden layer.

ANN training: The most important property of neural networks is the ability to learn in their own environment, and thereby to improve their performance. This is done through an iterative process of adjustments applied to their weights, and training. The backpropagation training algorithm is the most popular algorithm for training multi-layer ANNs. The algorithm consists of two steps: propagation and backpropagation. In the first step, an input vector is applied to the input layer and its effect propagates across the network producing a set of outputs. The response obtained by the network is subtracted from the desired response to produce an error signal. The second step propagates this error signal in the opposite direction to the synaptic connections, adjusting them in order to approximate to the network outputs. Additionally, using the EFM with RNA, it is possible to set the training stop criteria for a specific error rate or a fixed number of iterations.

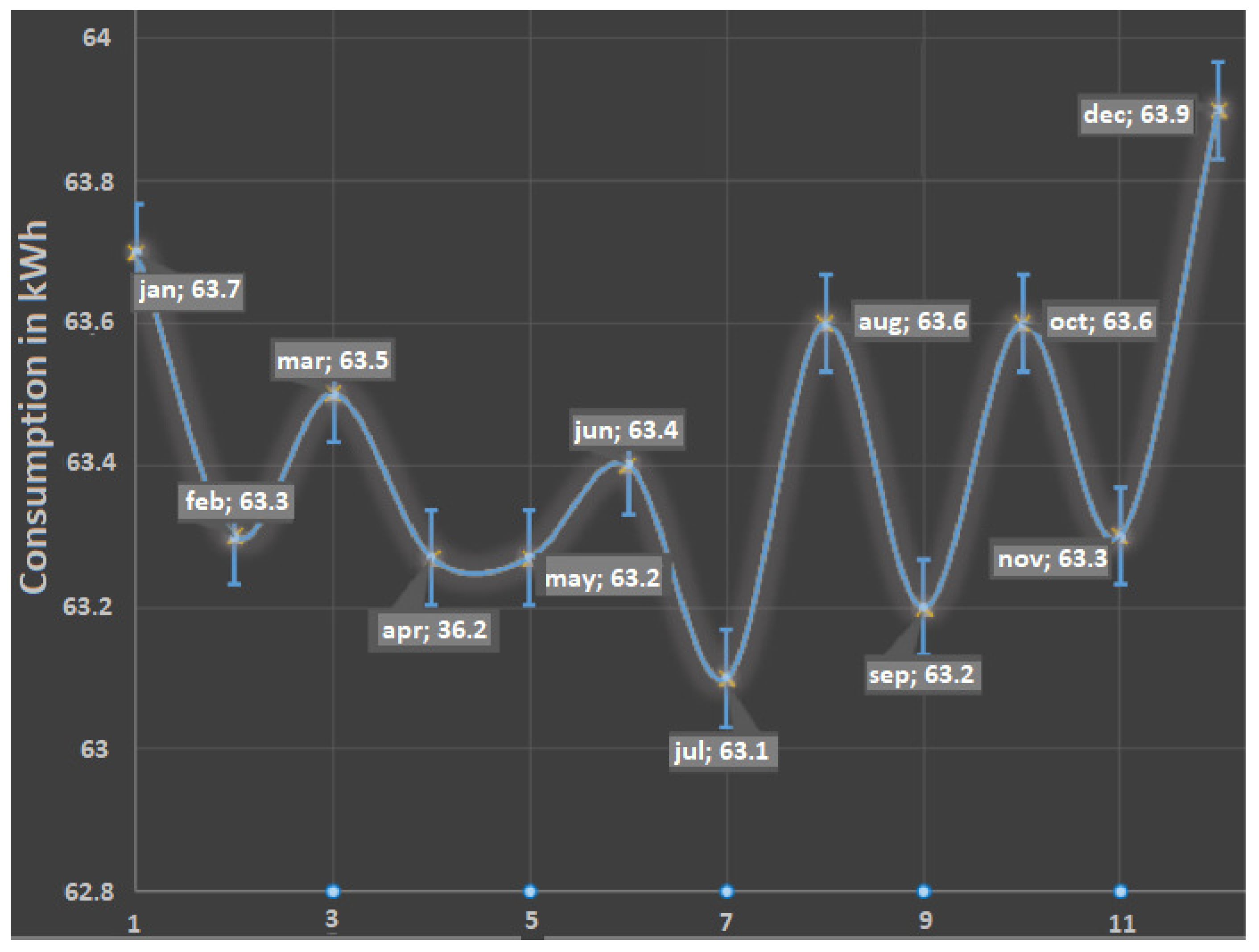

Prediction: This option produces forecasts related to the energy consumption of the environment over the next twelve months. At the end of the forecasts, the mean absolute percentage error is displayed.

Graph: This button graphically displays a comparison between the measurements, with a blue line for the actual data and a red line for the expected monthly consumption values.