With the growth of computer applications, matrix inversion has become a basic operation that is widely used in various industries. For example, online video service providers (such as YouTube) use various types of matrices to store user and item information [1]; in satellite navigation and positioning, matrix inversion is used to solve positioning equations [2]; triangular matrix inversion is used in the fast algorithm for radar pulse compression (based on reiterative minimum mean-square error); and matrices are also used in multivariate public key encryption [3]. Next, the application of matrices in cryptography is described in detail. In some existing cryptographic algorithms, a matrix is used to directly encrypt a message; reversing this process shows that the original message can be recovered by computing the inverse of the encryption matrix. Additionally, some algorithms can be transformed into a system of linear equations, represented as $Ax = b$, where $A$ is an $n \times n$ matrix and $x$ and $b$ are $n \times 1$ vectors. The system can then be solved by computing the inverse of the matrix $A$, denoted by $A^{-1}$, to obtain $x = A^{-1}b$.

For general matrices, several common inversion algorithms exist, such as Gaussian elimination, Gauss-Jordan elimination [4], Cholesky decomposition [5], QR decomposition [6], and LU decomposition [6]. Other algorithms focus on special types of matrices, such as positive definite matrices [7], tridiagonal matrices [8], adjacent pentadiagonal matrices [9], and triangular matrices [10]. However, the algorithms for general matrices are computationally intensive: they require $O(n^3)$ operations for an $n \times n$ matrix. Hence, many studies have sought to reduce this complexity. In 1969, Strassen [11] presented a method that reduces the complexity from $O(n^3)$ to $O(n^{\log_2 7}) \approx O(n^{2.807})$, where $n$ denotes the order of the matrix. In 1978, Pan et al. [12] proved that the exponent can be reduced below Strassen's $\log_2 7$. Coppersmith and Winograd [13] then reduced the exponent of $n$ further. Despite these optimizations, no method has brought the exponent of $n$ down to 2. Moreover, as matrix dimensions increase and data volumes grow exponentially, these serial methods and their optimizations alone can no longer handle the problem described above. With the rapid development of parallel computing, designing efficient algorithms for large-scale distributed matrix inversion has attracted significant attention from researchers.
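As a concrete baseline for the cubic-cost methods listed above, and for the finite-field setting assumed in this paper, the following minimal Python sketch inverts an $n \times n$ matrix over GF($p$) by Gauss-Jordan elimination and then recovers $x = A^{-1}b$. It assumes $p$ is prime and $A$ is invertible modulo $p$; the function names (`inv_mod_p`, `mat_vec`, `solve_via_inverse`) are hypothetical, and this is not the method proposed in this paper. The nested loops over $n$ pivot columns, $n$ rows, and $2n$ augmented entries make the $O(n^3)$ cost visible.

```python
def inv_mod_p(A, p):
    """Invert a square matrix A over GF(p) by Gauss-Jordan elimination.

    Assumes p is prime; raises ValueError if A is singular modulo p.
    """
    n = len(A)
    # Build the augmented matrix [A | I], with A reduced modulo p.
    M = [[a % p for a in row] + [int(i == j) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero pivot.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular modulo p")
        M[col], M[pivot] = M[pivot], M[col]
        # Scale the pivot row so the pivot becomes 1 (pow(x, -1, p) needs Python 3.8+).
        inv = pow(M[col][col], -1, p)
        M[col] = [v * inv % p for v in M[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [(vr - f * vc) % p for vr, vc in zip(M[r], M[col])]
    # The augmented matrix is now [I | A^{-1}]; return the right half.
    return [row[n:] for row in M]


def mat_vec(A, v, p):
    """Matrix-vector product over GF(p)."""
    return [sum(a * x for a, x in zip(row, v)) % p for row in A]


def solve_via_inverse(A, b, p):
    """Solve Ax = b over GF(p) as x = A^{-1} b."""
    return mat_vec(inv_mod_p(A, p), b, p)
```

For example, with $p = 7$, $A = \begin{pmatrix}2 & 3\\ 1 & 4\end{pmatrix}$ and $b = (1, 2)^T$, `inv_mod_p(A, 7)` returns `[[5, 5], [4, 6]]` and `solve_via_inverse(A, [1, 2], 7)` returns `[1, 2]`.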

Message Passing Interface (MPI) is a programming model that can effectively support parallel matrix inversion. Apart from MPI, plenty of new distributed computing technologies have emerged as platforms for large data processing tasks in recent years; the most popular ones are MapReduce and Spark, which exhibit outstanding scalability and fault-tolerance capabilitys. Xiang et al. [

14] proposed a scalable matrix inversion method that was implemented on MapReduce and discussed some optimizations. Liu et al. [

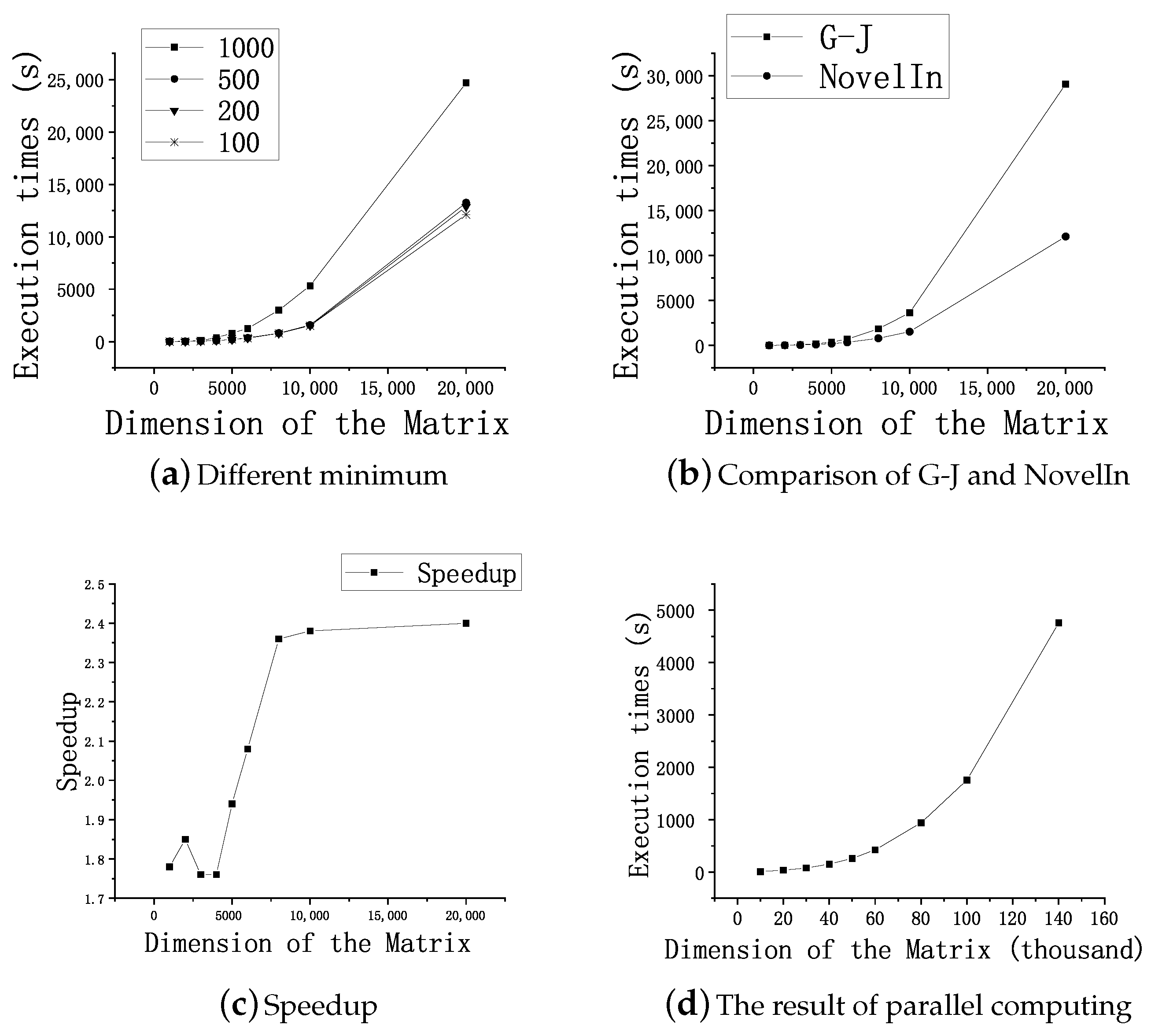

15] described an inversion algorithm using LU decomposition on Spark. In this paper, we propose a novel distributed matrix inversion algorithm for a large-scale matrix that is based on Strassen’s original serial inversion scheme and implement it on the MPI. Essentially, it is a block-recursive method to decompose the original matrix into a series of small ones that can be processed on a single server. We present a detailed derivation and the detailed steps of the algorithm and then show the experimental results on the MPI. However, it is noteworthy that the primary aim of this paper is not to compare the performance of the MPI, Spark, and MapReduce. In summary:

Note that all operations for matrix inversion in this paper are performed over finite fields and apply only to square matrices.
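The block recursion at the heart of Strassen's serial inversion scheme, on which the proposed algorithm builds, can be sketched on a single machine as follows. Partition $A$ into blocks $A_{11}, A_{12}, A_{21}, A_{22}$ and let $S = A_{22} - A_{21}A_{11}^{-1}A_{12}$ be the Schur complement; then $C_{22} = S^{-1}$ and the other blocks of $A^{-1}$ follow from block products. This minimal sketch is not the distributed MPI algorithm of this paper. It assumes a prime modulus $p$ and, because the scheme does not pivot, that $A_{11}$ and the Schur complement are invertible modulo $p$ at every level of recursion. The step names I to VII follow Strassen's presentation; all helper names are hypothetical, and the plain $O(n^3)$ product stands in for a fast multiplication routine.

```python
def mat_mul(X, Y, p):
    # Naive matrix product over GF(p); a fast (e.g. Strassen) product could be swapped in.
    return [[sum(a * b for a, b in zip(row, col)) % p for col in zip(*Y)] for row in X]


def mat_sub(X, Y, p):
    return [[(a - b) % p for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


def mat_neg(X, p):
    return [[(-a) % p for a in row] for row in X]


def split(A, h):
    # Return the four blocks A11 (h x h), A12, A21, A22.
    top, bottom = A[:h], A[h:]
    return ([r[:h] for r in top], [r[h:] for r in top],
            [r[:h] for r in bottom], [r[h:] for r in bottom])


def join(C11, C12, C21, C22):
    return [r1 + r2 for r1, r2 in zip(C11, C12)] + [r1 + r2 for r1, r2 in zip(C21, C22)]


def strassen_inverse(A, p):
    """Block-recursive inverse over GF(p); assumes the leading blocks stay invertible."""
    n = len(A)
    if n == 1:
        if A[0][0] % p == 0:
            raise ValueError("zero pivot: a leading block is singular modulo p")
        return [[pow(A[0][0], -1, p)]]  # requires Python 3.8+
    h = n // 2
    A11, A12, A21, A22 = split(A, h)
    I = strassen_inverse(A11, p)     # I   = A11^{-1}
    II = mat_mul(A21, I, p)          # II  = A21 * I
    III = mat_mul(I, A12, p)         # III = I * A12
    IV = mat_mul(A21, III, p)        # IV  = A21 * III
    V = mat_sub(IV, A22, p)          # V   = IV - A22 = -S
    VI = strassen_inverse(V, p)      # VI  = V^{-1} = -S^{-1}
    C12 = mat_mul(III, VI, p)        # C12 = III * VI
    C21 = mat_mul(VI, II, p)         # C21 = VI * II
    VII = mat_mul(III, C21, p)       # VII = III * C21
    C11 = mat_sub(I, VII, p)         # C11 = I - VII
    C22 = mat_neg(VI, p)             # C22 = -VI = S^{-1}
    return join(C11, C12, C21, C22)
```

Each level performs two half-size inversions and six half-size multiplications, so substituting Strassen's fast multiplication for `mat_mul` yields the $O(n^{\log_2 7})$ bound, while the naive product used here keeps the sketch cubic. Because every step is a block product or subtraction, each can in turn be split into independent sub-block operations.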