Design of a 3D Platform for Immersive Neurocognitive Rehabilitation

Abstract

1. Introduction

- Unlike the current state of the art, the tool allows us to create 3D environments both automatically (i.e., from a floor plan) and manually (i.e., built ad hoc by a therapist). In this way, it is possible to recreate an environment similar to the patient’s home, thus reducing the stress induced by the rehabilitation sessions;

- Another novelty with respect to the literature is that the tool allows us to create serious games with increasing levels of difficulty, thus allowing both patients to familiarize themselves with the exercises and therapists to adapt game parameters according to each patient’s status;

- A final novelty, uncommon in the literature, is a randomization mechanism inside each serious game that, for example, places the objects in the rooms in different positions at every session. This increases the longevity of the rehabilitative exercises and, at the same time, limits patients’ habituation, which is well known to be a crucial aspect of neurocognitive rehabilitation.
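The difficulty levels and the random placement mechanism described above could be combined roughly as follows. This is a minimal Python sketch under stated assumptions, not the tool's actual implementation: the `Slot` type, the `place_objects` function, the rule that the number of placed objects grows with the difficulty level, and the check that redraws a layout identical to the previous session's are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Slot:
    """A hypothetical location in a room where an object can be placed."""
    room: str
    spot: str


def place_objects(
    objects: List[str],
    slots: List[Slot],
    level: int,
    previous: Optional[Dict[str, Slot]] = None,
    rng: Optional[random.Random] = None,
    max_tries: int = 100,
) -> Dict[str, Slot]:
    """Randomly assign objects to distinct slots for one game session.

    Hypothetical rules: the difficulty `level` (1, 2, 3, ...) sets how many
    objects are placed, and a layout identical to the `previous` session's
    one is redrawn, up to `max_tries` times.
    """
    rng = rng if rng is not None else random.Random()
    # Assumed difficulty rule: more objects to handle at higher levels.
    count = min(len(objects), len(slots), 2 + level)
    chosen = objects[:count]
    layout: Dict[str, Slot] = {}
    for _ in range(max_tries):
        # rng.sample draws `count` distinct slots in random order.
        layout = dict(zip(chosen, rng.sample(slots, count)))
        if layout != previous:
            break
    return layout
```

Passing a seeded `random.Random` reproduces a session's layout, which could help a therapist review it afterwards; leaving `rng` unset yields a fresh layout each time.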

2. Related Work

2.1. Non-Immersive Neurocognitive Rehabilitation

2.2. Immersive Neurocognitive Rehabilitation

3. Proposed Tool

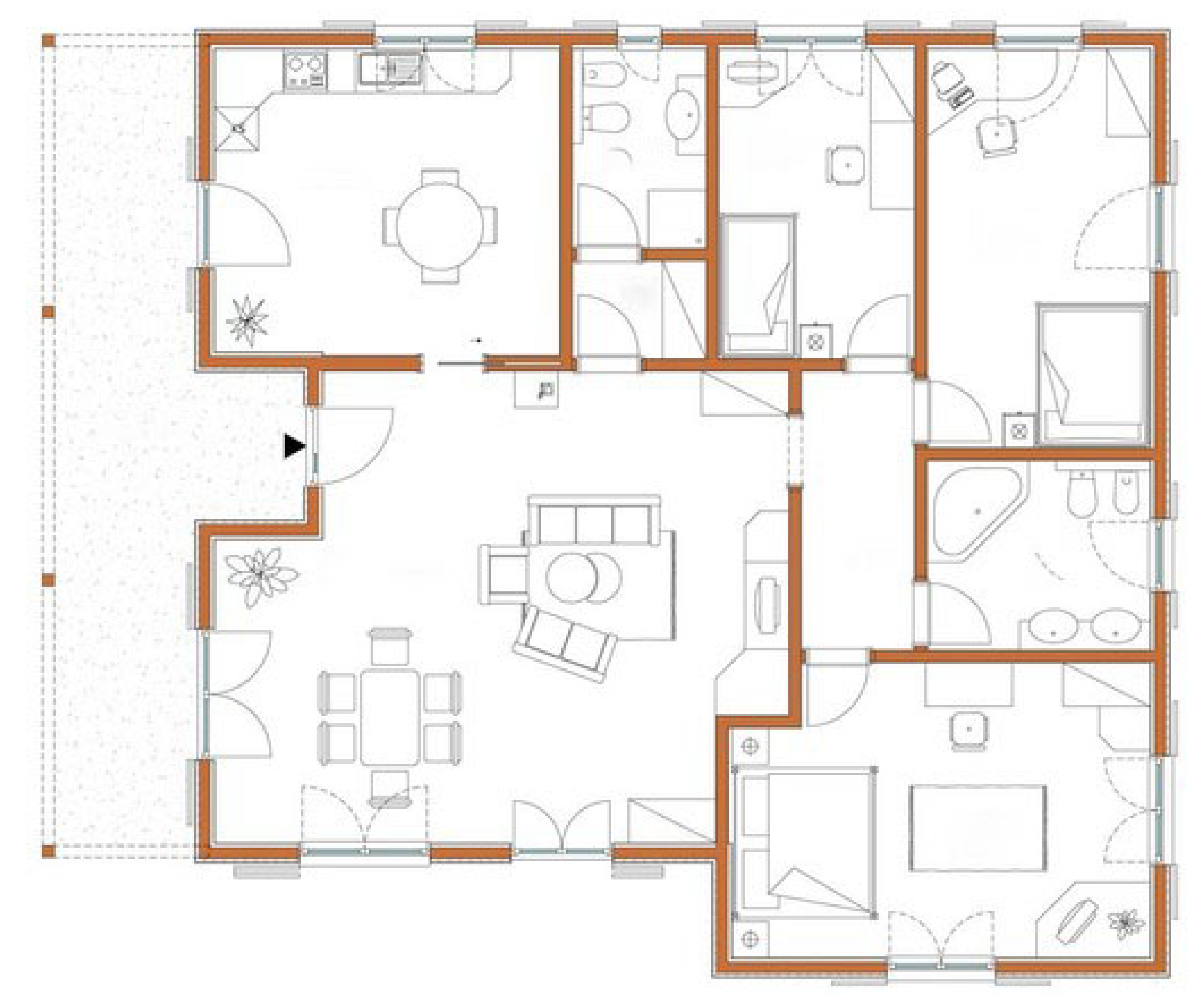

- The patient starts the rehabilitation from the bedroom, in which the first exercise is performed;

- From the bedroom, the patient goes to the kitchen, where the second exercise is performed;

- From the kitchen, the patient moves to the living room, in which the third exercise is performed;

- From the living room, the patient goes to the bathroom to perform the fourth exercise;

- Subsequently, the patient goes to the small bedroom to perform the fifth exercise;

- Finally, the patient goes to the office to perform the last exercise.
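The fixed route through the house can be modelled as an ordered list of (room, exercise) pairs that unlocks one room at a time. The sketch below is a hypothetical illustration: the `ROUTE` list follows the order given above, while the `Session` class and the rule that a room is unlocked only after the previous exercise is completed are assumptions, not details stated by the authors.

```python
from typing import List, Optional, Tuple

# Room order and exercise numbers as listed above.
ROUTE: List[Tuple[str, int]] = [
    ("bedroom", 1),
    ("kitchen", 2),
    ("living room", 3),
    ("bathroom", 4),
    ("small bedroom", 5),
    ("office", 6),
]


class Session:
    """Hypothetical tracker that unlocks the next room only after the
    exercise in the current room has been completed."""

    def __init__(self, route: List[Tuple[str, int]] = ROUTE) -> None:
        self.route = list(route)
        self.index = 0

    @property
    def current(self) -> Optional[Tuple[str, int]]:
        """The (room, exercise) pair in progress, or None when finished."""
        if self.index < len(self.route):
            return self.route[self.index]
        return None

    def complete_exercise(self) -> Optional[str]:
        """Mark the current exercise as done and return the next room, if any."""
        if self.current is None:
            raise RuntimeError("all exercises have already been completed")
        self.index += 1
        upcoming = self.current
        return upcoming[0] if upcoming is not None else None
```

For example, a fresh `Session()` starts in the bedroom, and calling `complete_exercise()` there returns `"kitchen"`.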

3.1. Exercise 1

- To approach the drawer;

- To bend down in order to open the drawer;

- To open the drawer by pulling it towards their body;

- To take the key;

- To go to the closed door and open it as they would a real door.
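Because the steps of Exercise 1 must follow a fixed order, a game could track progress by matching incoming interaction events against the expected sequence. This Python sketch is hypothetical: the event names, the `check_sequence` function, and the scoring rule (an out-of-order step counts as an error, unrelated events are ignored) are assumptions made for illustration.

```python
from typing import Iterable, List, Tuple

# Hypothetical event names for the five steps listed above.
EXERCISE_1_STEPS: List[str] = [
    "approach_drawer",
    "bend_down",
    "open_drawer",
    "take_key",
    "open_door",
]


def check_sequence(events: Iterable[str], steps: List[str] = EXERCISE_1_STEPS) -> Tuple[bool, int]:
    """Return (all steps completed in order, number of out-of-order steps).

    An event equal to the next expected step advances progress; any other
    event that belongs to the step list counts as an error; events outside
    the step list are ignored.
    """
    done = 0
    errors = 0
    for event in events:
        if done < len(steps) and event == steps[done]:
            done += 1
        elif event in steps:
            errors += 1
    return done == len(steps), errors
```

For instance, a patient who tries the door before bending down to the drawer, and then completes every step in order, yields `(True, 1)`.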

3.2. Exercise 2

3.3. Exercise 3

- The patient enters the living room and observes all the objects present;

- Subsequently, the patient goes to the garden next to the living room. As soon as the patient reaches the garden, a randomly chosen object is moved from its position to the centre of the table;

- Next, the patient returns to the living room and has to move the object from the centre of the table back to its original position.
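The random displacement in Exercise 3 and the check on the patient's answer could be sketched as below. The representation of positions as (x, y, z) tuples, the function names, and the 0.2-unit tolerance for deciding whether an object has been put back are hypothetical choices, not values given in the paper.

```python
import math
import random
from typing import Dict, Tuple

Position = Tuple[float, float, float]


def displace_random_object(
    positions: Dict[str, Position], table_centre: Position, rng: random.Random
) -> Tuple[str, Position]:
    """Move one randomly chosen object to the table centre.

    Returns the object's name and its original position so the placement
    can be checked later.
    """
    name = rng.choice(sorted(positions))
    original = positions[name]
    positions[name] = table_centre
    return name, original


def restoration_error(positions: Dict[str, Position], name: str, original: Position) -> float:
    """Euclidean distance between the object's current and original positions."""
    return math.dist(positions[name], original)


def is_restored(
    positions: Dict[str, Position], name: str, original: Position, tolerance: float = 0.2
) -> bool:
    # The tolerance (in scene units) is an assumption, not a value from the paper.
    return restoration_error(positions, name, original) <= tolerance
```

Returning a continuous distance rather than only a yes/no result leaves room for grading partially correct placements.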

3.4. Exercise 4

3.5. Exercise 5

3.6. Exercise 6

- The first number is obtained by counting all the chairs in the house;

- The second number is the difference between the number of chairs in the garden and the number of chairs in the living room; if the result is negative, its absolute value is taken;

- The last two digits are obtained by dividing the total number of chair legs by two.

- The first number is 9, obtained by summing the chairs in all the rooms;

- The second number is 2, obtained as the absolute difference between the chairs in the garden and those in the living room;

- The last two digits are 18, obtained by dividing 36, i.e., the total number of chair legs, by 2.
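The arithmetic behind the code in Exercise 6 can be written as a short function. In this sketch, the per-room chair counts used in the example are hypothetical values chosen only to be consistent with the totals stated above (9 chairs, a difference of 2, 36 legs); the paper's actual room-by-room counts are not reproduced here. The function also assumes every chair has the same number of legs, which matches 36 legs for 9 chairs.

```python
from typing import Dict, Tuple


def exercise_6_numbers(chairs: Dict[str, int], legs_per_chair: int = 4) -> Tuple[int, int, int]:
    """Compute the numbers of the Exercise 6 code from per-room chair counts.

    Assumes all chairs have `legs_per_chair` legs; rooms missing from
    `chairs` are treated as having no chairs.
    """
    first = sum(chairs.values())  # all chairs in the house
    second = abs(chairs.get("garden", 0) - chairs.get("living room", 0))
    total_legs = first * legs_per_chair
    last = total_legs // 2  # total number of chair legs divided by two
    return first, second, last


# Hypothetical layout consistent with the example totals.
numbers = exercise_6_numbers({"living room": 2, "garden": 4, "kitchen": 3})
code = "".join(str(n) for n in numbers)  # assumes the numbers are entered in sequence
```

With this layout, `numbers` is `(9, 2, 18)` and `code` is `"9218"`.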

4. Experiments and Discussion

4.1. Healthy Subjects Pre-Test

4.2. Test on Real Patients

4.2.1. Exercise 1

4.2.2. Exercise 2

4.2.3. Exercise 3

4.2.4. Exercise 4

4.2.5. Exercise 5

4.2.6. Exercise 6

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Subject | Sex | Age | Videogame Experience |
|---|---|---|---|
| 1 | F | 24 | No |
| 2 | M | 23 | Yes |
| 3 | M | 52 | No |
| 4 | F | 53 | No |
| 5 | M | 26 | Yes, with VR experience |
| 6 | M | 29 | Yes, with little VR experience |
| 7 | M | 45 | Yes, with VR experience |

| Subject | Exercise 1 | Exercise 2 | Exercise 3 | Exercise 4 | Exercise 5 | Exercise 6 |
|---|---|---|---|---|---|---|
| 1 | 4.56 | 16 | 0.45 | 10 | 9 | 10 |
| 2 | 1.48 | 14 | 0.26 | 7 | 8 | 8 |
| 3 | 5.10 | 20 | 0.53 | 10 | 12 | 12 |
| 4 | 6.37 | 20 | 0.41 | 12 | 10 | 11 |
| 5 | 0.39 | 12 | 0.19 | 8 | 8 | 9 |
| 6 | 2.13 | 15 | 0.33 | 9 | 10 | 10 |
| 7 | 0.42 | 17 | 0.18 | 11 | 10 | 11 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Avola, D.; Cinque, L.; Pannone, D. Design of a 3D Platform for Immersive Neurocognitive Rehabilitation. Information 2020, 11, 134. https://doi.org/10.3390/info11030134
