Preliminary Study on the Knowledge Graph Construction of Chinese Ancient History and Culture

Abstract

1. Introduction

- This paper proposes a model for constructing a knowledge graph of ancient Chinese history and culture.

- It describes in detail how the knowledge graph is constructed and the main step of the construction process, namely entity recognition.

- Finally, the constructed knowledge graph of ancient Chinese history and culture is displayed visually, making it easier for the public to understand ancient history and culture.

2. Related Work

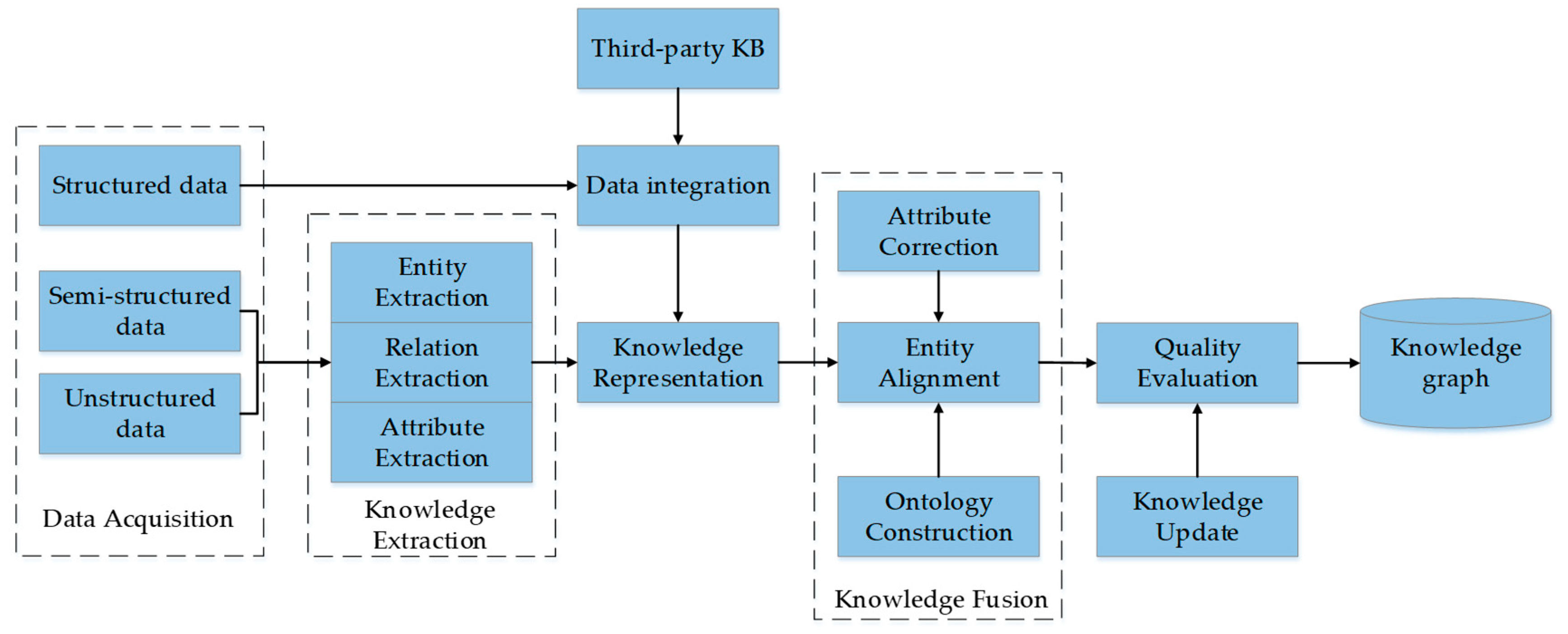

2.1. Knowledge Graph Construction

2.2. Named Entity Recognition

3. Knowledge Graph Construction Process

3.1. Data Acquisition

3.2. Knowledge Extraction

- Named entity recognition: Also known as entity extraction, this is the automatic identification of named entities in text, and it is the most critical part of information extraction. The quality of entity extraction directly affects the subsequent relationship extraction and knowledge fusion. This paper uses deep learning for named entity recognition. Several methods are compared on a custom data set, with the conditional random field (CRF) as the baseline: BiLSTM, BiLSTM-CRF, and BiLSTM-CNN-CRF. The BiLSTM-CNN-CRF model is then used to extract the entities from the text. The named entity recognition experiments are explained in detail in the fourth part of the paper.

- Relationship extraction: After the entities are obtained, the relationships between them need to be extracted from the relevant corpus. These relationships connect the initially unconnected entities into a knowledge network. This paper uses DeepKE, a Chinese relation extraction tool developed by Zhejiang University, to extract the relationships between entities.

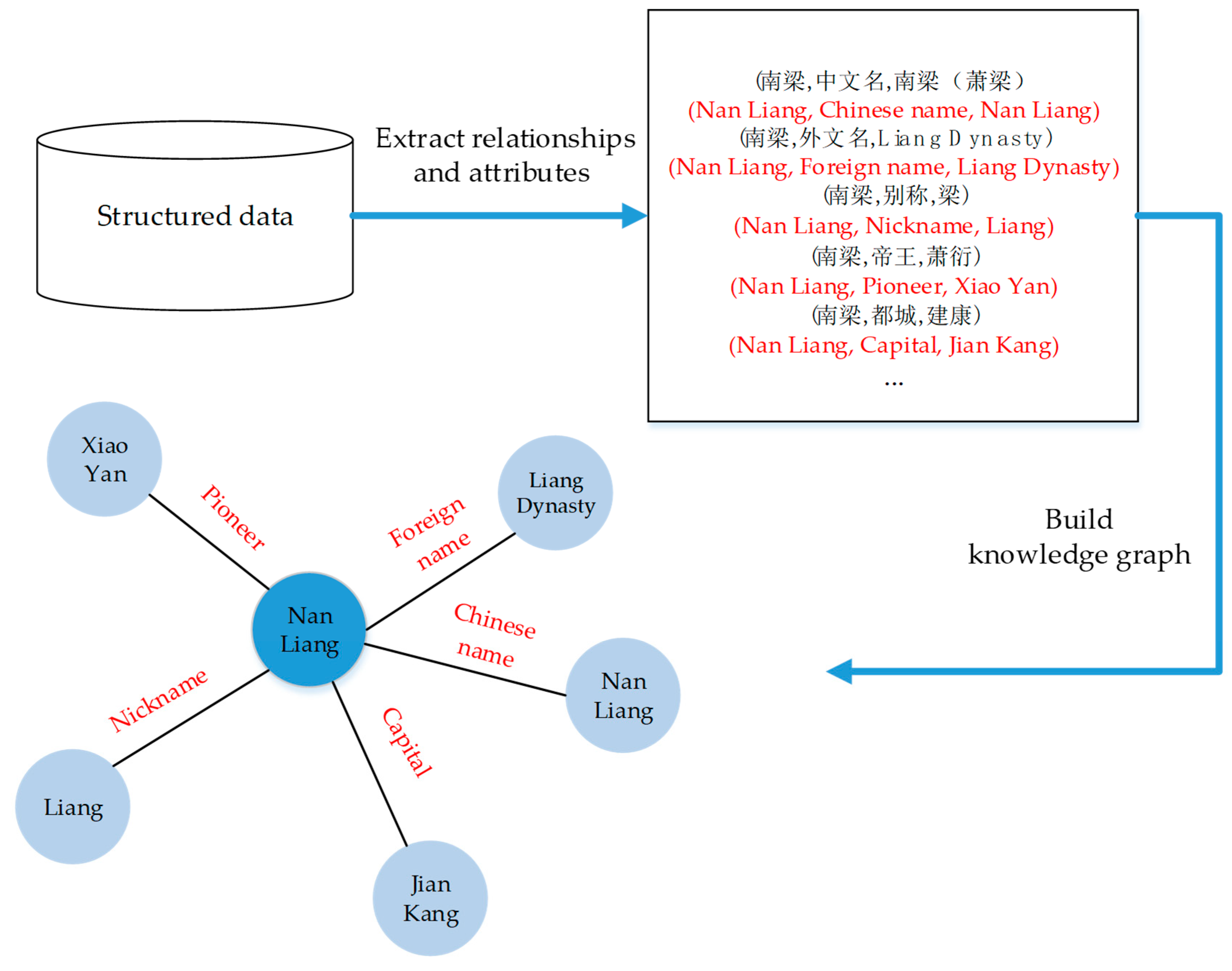

- Attribute extraction: Attribute extraction aggregates the information about the same entity from various data sources to give a complete outline of the entity's attributes. The attributes in this paper come mainly from the semi-structured data in the information box (InfoBox) of Baidu Encyclopedia. This part is implemented in Python, using an XPath selector to extract the contents of the InfoBox from each saved web page. The contents of “basicInfo-item name” and “basicInfo-item value” in the InfoBox are saved to a txt file, with the $ symbol separating each “basicInfo-item name” from its “basicInfo-item value”. The information extracted from each web page is saved to a separate txt file. The txt files extracted from multiple web pages are then combined and finally stored as triples. Figure 3 shows the results after processing.
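The attribute extraction step can be illustrated with a minimal sketch. It is not the authors' code: the sample markup, the `dt`/`dd` element names, and the function names are assumptions made for illustration, and the standard-library XML parser stands in for a real HTML parser (saved Baidu Encyclopedia pages are full HTML and would likely need a library such as lxml). Only the class names “basicInfo-item name” and “basicInfo-item value” and the `$` separator come from the text.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified InfoBox fragment used only for this sketch.
SAMPLE_INFOBOX = """
<div class="basic-info">
  <dl>
    <dt class="basicInfo-item name">中文名</dt>
    <dd class="basicInfo-item value">李渊</dd>
    <dt class="basicInfo-item name">朝代</dt>
    <dd class="basicInfo-item value">唐朝</dd>
  </dl>
</div>
"""


def extract_infobox_lines(markup):
    """Return one 'name$value' line per InfoBox item, in document order."""
    root = ET.fromstring(markup.strip())
    # ElementTree supports a limited XPath subset, including attribute tests.
    names = root.findall(".//dt[@class='basicInfo-item name']")
    values = root.findall(".//dd[@class='basicInfo-item value']")
    lines = []
    for name_el, value_el in zip(names, values):
        name = "".join(name_el.itertext()).strip()
        value = "".join(value_el.itertext()).strip()
        lines.append(name + "$" + value)
    return lines


def lines_to_triples(entity, lines):
    """Turn 'name$value' lines into (entity, attribute, value) triples."""
    triples = []
    for line in lines:
        name, sep, value = line.partition("$")
        # Skip malformed lines that lack the separator or either side.
        if sep and name and value:
            triples.append((entity, name, value))
    return triples


if __name__ == "__main__":
    infobox_lines = extract_infobox_lines(SAMPLE_INFOBOX)
    for triple in lines_to_triples("李渊", infobox_lines):
        print(triple)
```

Splitting on the first `$` only (via `partition`) keeps any later `$` characters inside the attribute value, which seems the safer reading of the file format described above.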

3.3. Knowledge Fusion

3.4. Graph Construction

- The type of each node is represented by its identifier. If the identifier is “dynasty”, the type of node created is “dynasty”.

- In the entity-relation table, the storage format of the triples data is “Entity1-Relation-Entity2” or “Entity1-Attribute-Attribute Value”.
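As a rough sketch of the two rules above, the following code builds an in-memory graph from an identifier table and hyphen-separated triples. The sample entities, the "person" identifier, the relation names, and the function names are hypothetical, and the sketch assumes that hyphens never occur inside entity or relation names. It does not reflect any particular graph database.

```python
def parse_triple(line):
    """Split 'Entity1-Relation-Entity2' (or 'Entity1-Attribute-Value') into 3 parts."""
    parts = line.split("-", 2)
    if len(parts) != 3:
        raise ValueError("not a triple: " + line)
    return tuple(part.strip() for part in parts)


def build_graph(entity_types, triple_lines):
    """Create typed nodes, then attach relations and attributes from the triples.

    entity_types maps each entity name to its identifier, which becomes the
    node type. A triple whose third element is a known entity is stored as an
    edge; otherwise it is stored as an attribute of the first entity.
    """
    nodes = {
        name: {"type": identifier, "attributes": {}}
        for name, identifier in entity_types.items()
    }
    edges = []
    for line in triple_lines:
        head, predicate, tail = parse_triple(line)
        if head not in nodes:
            raise KeyError("unknown entity: " + head)
        if tail in nodes:
            edges.append((head, predicate, tail))
        else:
            nodes[head]["attributes"][predicate] = tail
    return nodes, edges


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    types = {"Tang": "dynasty", "Li Yuan": "person"}
    lines = ["Li Yuan-belongs to-Tang", "Tang-start year-618"]
    nodes, edges = build_graph(types, lines)
    print(nodes)
    print(edges)
```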

4. Acquisition of Chinese Ancient Historical and Cultural Knowledge

4.1. Overall Framework of the Model

4.2. Feature Representation

4.3. CNN Model

4.4. BiLSTM Model

4.4.1. LSTM Unit

4.4.2. BiLSTM Unit

4.5. CRF Model

5. Experimental Results and Analysis

5.1. Data Preparation

5.2. Data Annotations

5.3. Evaluation Metrics

5.4. Experimental Environment

5.5. Parameter Setting

5.6. Experimental Results and Analysis

6. Visual Display of Knowledge Graph

- Query module for the knowledge graph of ancient Chinese history and culture. A dynasty is used as the central entity, which expands to display the historical figures of that dynasty, its start and end times, and the names of its emperors. The knowledge graph supports zooming in, zooming out, and panning. Clicking an entity highlights the entities related to it, while unrelated entities are displayed in grayscale, as shown in Figure 13.

- Entity attribute knowledge query module. This module is displayed in two parts. The first part shows the attribute knowledge of the searched entity as a force-directed graph. The second part shows pictures of the entity and some introductory information from the encyclopedia, as shown in Figure 14.

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Type | Start Tag | Middle Tag | End Tag |
|---|---|---|---|
| Time | B-TIME | M-TIME | E-TIME |
| Name | B-NAME | M-NAME | E-NAME |
| Location | B-LOC | M-LOC | E-LOC |
| Dynasty | B-DYN | M-DYN | E-DYN |
| Designation | B-CH | M-CH | E-CH |
| … | … | … | … |
| Non-entity tag | O | O | O |

| English | Li Yuan Starts His Army in Jinyang | | | | | | |
|---|---|---|---|---|---|---|---|
| Text | 李 | 渊 | 于 | 晋 | 阳 | 起 | 兵 |
| Tag | B-NAME | E-NAME | O | B-LOC | E-LOC | O | O |
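The tagging scheme in the two tables above can be sketched as a small function that turns character spans into B/M/E/O tags. The span format (start, exclusive end, label) and the function name are assumptions made for illustration. The tables do not say how a single-character entity is tagged, so this sketch gives it only a B- tag.

```python
def tag_characters(text, entities):
    """Assign one tag per character using the B-/M-/E-/O scheme.

    entities is a list of (start, end, label) spans with an exclusive end.
    Assumption: a one-character entity receives only a B- tag, because the
    scheme shown above does not define a separate single-character tag.
    """
    tags = ["O"] * len(text)
    for start, end, label in entities:
        if not 0 <= start < end <= len(text):
            raise ValueError("span out of range: " + repr((start, end, label)))
        tags[start] = "B-" + label
        # Characters strictly between the first and last get the middle tag.
        for i in range(start + 1, end - 1):
            tags[i] = "M-" + label
        if end - start > 1:
            tags[end - 1] = "E-" + label
    return tags


if __name__ == "__main__":
    sentence = "李渊于晋阳起兵"
    spans = [(0, 2, "NAME"), (3, 5, "LOC")]
    for char, tag in zip(sentence, tag_characters(sentence, spans)):
        print(char, tag)
```

Run on the example sentence, this reproduces the tag row of the table above.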

| Item | Environment |
|---|---|
| Operating system | Ubuntu 16.04 |
| GPU | NVIDIA Quadro K1200 |
| Hard disk | 500 GB |
| Memory | 8 GB |
| Python version | 3.6 |

| Parameter Name | Parameter Value |
|---|---|
| Word vector dimension | 300 |
| Learning rate | 0.001 |
| Epoch | 100 |
| Dropout | 0.5 |

| Method | Precision/% | Recall/% | F1 score/% |
|---|---|---|---|
| LSTM | 76.53 | 73.26 | 74.85 |
| BiLSTM | 78.87 | 74.12 | 76.42 |

| Method | Precision/% | Recall/% | F1 score/% |
|---|---|---|---|
| BiLSTM | 78.87 | 74.12 | 76.42 |
| BiLSTM-CRF | 80.67 | 76.46 | 78.5 |

| Method | Precision/% | Recall/% | F1 score/% |
|---|---|---|---|
| BiLSTM-CRF | 80.67 | 76.46 | 78.5 |
| BiLSTM-CNN-CRF | 84.73 | 82.26 | 83.47 |

| Method | Precision/% | Recall/% | F1 score/% |
|---|---|---|---|
| CRF | 75.45 | 72.38 | 73.89 |
| LSTM | 76.53 | 73.26 | 74.85 |
| BiLSTM | 78.87 | 74.12 | 76.42 |
| BiLSTM-CRF | 80.67 | 76.46 | 78.5 |
| BiLSTM-CNN-CRF | 84.73 | 82.26 | 83.47 |
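As a quick consistency check on the table above, the sketch below recomputes F1 from the reported precision and recall, assuming the standard definition of F1 as their harmonic mean, 2PR/(P+R). The function and variable names are illustrative. Because the reported precision and recall are themselves rounded, small differences in the last digit are expected.

```python
# (precision, recall, reported F1) in percent, copied from the table above.
REPORTED = {
    "CRF": (75.45, 72.38, 73.89),
    "LSTM": (76.53, 73.26, 74.85),
    "BiLSTM": (78.87, 74.12, 76.42),
    "BiLSTM-CRF": (80.67, 76.46, 78.5),
    "BiLSTM-CNN-CRF": (84.73, 82.26, 83.47),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same unit)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def max_f1_gap(rows):
    """Largest absolute difference between recomputed and reported F1."""
    return max(abs(f1_score(p, r) - f1) for p, r, f1 in rows.values())


if __name__ == "__main__":
    for method, (p, r, f1) in REPORTED.items():
        print("{:<15} reported {:.2f}  recomputed {:.2f}".format(method, f1, f1_score(p, r)))
    print("largest gap: {:.3f}".format(max_f1_gap(REPORTED)))
```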

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, S.; Yang, H.; Li, J.; Kolmanič, S. Preliminary Study on the Knowledge Graph Construction of Chinese Ancient History and Culture. Information 2020, 11, 186. https://doi.org/10.3390/info11040186
