Efficient Paradigm to Measure Street-Crossing Onset Time of Pedestrians in Video-Based Interactions with Vehicles

Abstract

1. Introduction

1.1. Previously Applied Research Methods to Capture Pedestrian Crossing Decisions

1.2. Proposed Concept to Capture Street Crossing Onset Time (COT)

2. Materials and Methods

2.1. Participants

2.2. Independent Variable

- (1) Driverless SDV without eHMI: there is no indication whether the vehicle is in automated mode, i.e., self-driving, or in conventional mode, i.e., steered by a driver;

- (2) Driverless SDV with status eHMI: steadily lit blue-green lights on each fake Lidar sensor indicate that the vehicle is in automated mode. The design follows the recommended practice of the SAE [36];

- (3) Driverless SDV with status+intent eHMI: in addition to the “status” message, the “intent” signal was turned on when the approaching car started to brake, thus resembling the frontal brake light concept of previous eHMI studies [13,18,24,38]. To communicate the SDV’s intent to yield, a light above the windshield flashed at a frequency of 0.5 Hz, following a sinusoidal cycle from 30% to 100% light intensity. The design follows the recommendation of Faas et al. [37]. The video of the status+intent eHMI test condition is available at https://dl.acm.org/doi/fullHtml/10.1145/3313831.3376484.

- (4) SDV steered by a driver: yielding;

- (4b) SDV steered by a driver: non-yielding (filler test condition);

- (5) CV steered by a driver: yielding;

- (5b) CV steered by a driver: non-yielding (filler test condition).
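The intent signal of the status+intent condition is specified only by its frequency (0.5 Hz) and its intensity range (30% to 100%). A minimal Python sketch of such a sinusoidal intensity profile follows. The function name `intent_light_intensity` and the starting phase (the cycle begins at minimum intensity) are assumptions made for illustration; the source does not state the phase.

```python
import math

FLASH_HZ = 0.5         # flashing frequency stated in the text
MIN_INTENSITY = 0.30   # 30% light intensity
MAX_INTENSITY = 1.00   # 100% light intensity


def intent_light_intensity(t_seconds: float) -> float:
    """Relative light intensity (0..1) t seconds after the intent signal turns on.

    Assumption: the cycle starts at minimum intensity; the source only gives
    the frequency and the intensity range.
    """
    midpoint = (MAX_INTENSITY + MIN_INTENSITY) / 2
    amplitude = (MAX_INTENSITY - MIN_INTENSITY) / 2
    return midpoint - amplitude * math.cos(2 * math.pi * FLASH_HZ * t_seconds)


if __name__ == "__main__":
    # One full cycle lasts 1 / 0.5 Hz = 2 s.
    for t in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"t = {t:.1f} s -> {intent_light_intensity(t):.2f}")
```

At 0.5 Hz one full bright-dim cycle takes 2 s, so under the assumed phase the light reaches full intensity 1 s after the signal is switched on.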

2.3. Materials and Equipment

2.4. Real-World Video Clips

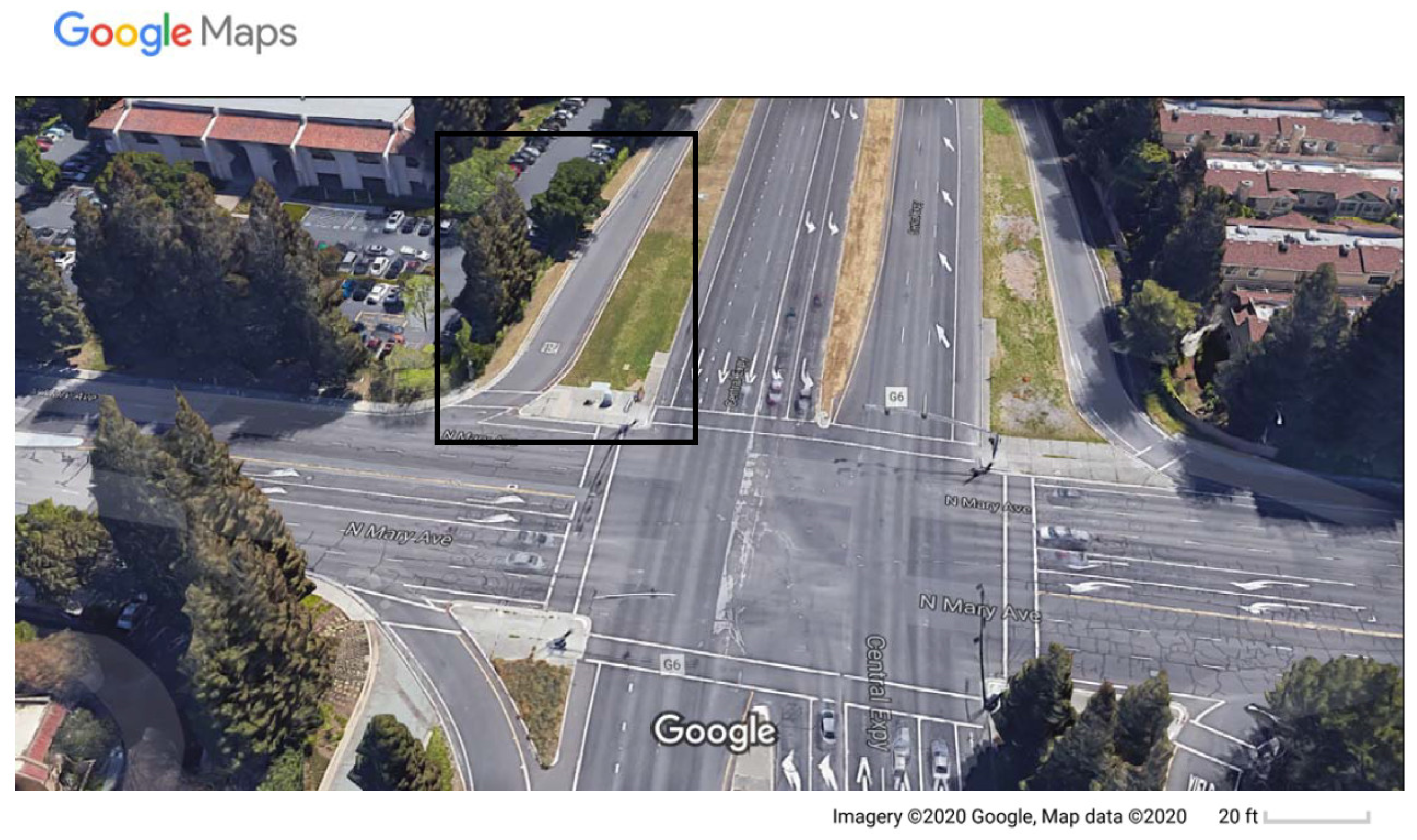

2.4.1. Real-World Crossing Scenario

2.4.2. Video Flow

2.5. Procedure and Participants’ Task

2.6. Dependent Variables

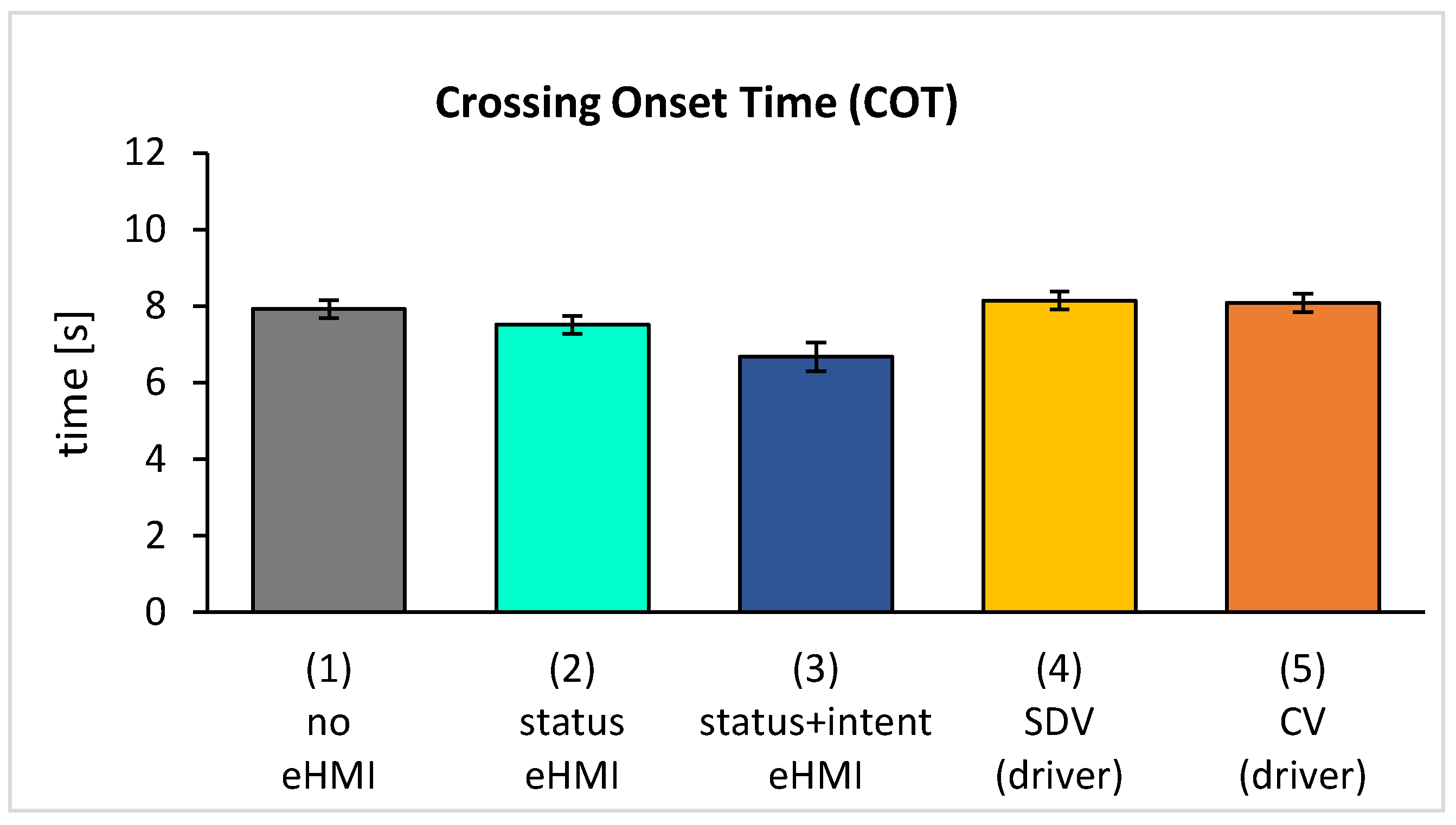

- Crossing Onset Time (COT): After each yielding vehicle trial (test conditions 1, 2, 3, 4, 5), we determined COT. COT is the time in seconds between the vehicle yielding and the pedestrian stepping off the “sidewalk”. To calculate COT, we took the time between the pedestrian entering and leaving the “sidewalk” and subtracted the time between the pedestrian entering the “sidewalk” and the vehicle yielding (3 s countdown + 3 s vehicle approaching at constant speed). We used COT as an index of traffic flow. Shorter times indicate an earlier crossing decision: the earlier pedestrians cross when it is safe to do so, the more efficiently traffic flows. We excluded extreme cases from data analysis, defined as values more than three times the interquartile range (IQR) above the upper quartile or below the lower quartile (2 values of N = 1 participant excluded);

- Perceived Safety: After each yielding vehicle trial (test conditions 1, 2, 3, 4, 5), participants reported their perceived safety on four semantic-differential items (based on [44]), each answered on a 7-point scale ranging from −3 to +3 (“anxious–relaxed”, “agitated–calm”, “unsafe–safe”, “timid–confident”). Reliability was excellent, with Cronbach’s α = 0.90 to 0.96;

- User Experience (UX) Qualities: After each driverless SDV trial (test conditions 1, 2, 3), participants completed the short version of the User Experience Questionnaire (UEQ-S) [45]. The scale consists of two dimensions: pragmatic quality (PQ) and hedonic quality (HQ). Participants reported their user experience with semantic differentials ranging from −3 (negative) to +3 (positive). The reliability of all subscales was good to excellent, with Cronbach’s α = 0.80 to 0.94;

- Naturalism: In the post-experiment interview, participants rated the items “How immersive was the study setup?” and “How natural was it to take a step forward to indicate that you would cross the street?” (based on [33]) on a scale from −3 (“not at all”) to +3 (“extremely”).
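The COT calculation and the extreme-case rule described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' analysis script: the function names, the timestamp arguments, and the quartile method (`"inclusive"`) are hypothetical choices, and statistics packages differ in how they compute quartiles.

```python
import statistics

COUNTDOWN_S = 3.0  # countdown after stepping on the "sidewalk" (from the text)
APPROACH_S = 3.0   # vehicle approaching at constant speed before yielding (from the text)


def crossing_onset_time(step_on_s: float, step_off_s: float) -> float:
    """COT in seconds: time on the "sidewalk" minus the 6 s before the vehicle yields.

    Both arguments are timestamps in seconds on a common clock (hypothetical inputs).
    """
    return (step_off_s - step_on_s) - (COUNTDOWN_S + APPROACH_S)


def exclude_extreme_cases(values: list[float], k: float = 3.0) -> list[float]:
    """Drop values more than k * IQR above the upper or below the lower quartile.

    Assumption: quartiles use the "inclusive" method of statistics.quantiles;
    the source does not say which quartile definition was applied.
    """
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if lower <= v <= upper]


if __name__ == "__main__":
    # Made-up timestamps for illustration only.
    print(crossing_onset_time(step_on_s=10.0, step_off_s=17.5))
    print(exclude_extreme_cases([1.0, 1.2, 1.4, 1.6, 1.8, 20.0]))
```

A negative COT under this definition would mean the participant stepped off before the vehicle yielded.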

2.7. Data Analysis

3. Results

3.1. Crossing Onset Time (COT)

3.2. Perceived Safety

3.3. User Experience

3.4. Comparison of Participants’ PQ Ratings in This Lab Study and a Test Track Study

3.5. Self-Reported Naturalism

4. Discussion

4.1. Validation

4.2. Benefits with Regard to Related Approaches

4.3. Limitations and Recommendations

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. SAE International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles (J3016); SAE International: Warrendale, PA, USA, 2018.

2. Sivak, M.; Schöttle, B. Road Safety with Self-Driving Vehicles: General Limitations and Road Sharing with Conventional Vehicles (Report No. UMTRI-2015-2). 2015. Available online: https://deepblue.lib.umich.edu/bitstream/handle/2027.42/111735/103187.pdf?sequence=1&isAllowed=y (accessed on 25 July 2019).

3. Ackermann, C.; Beggiato, M.; Bluhm, L.-F.; Löw, A.; Krems, J.F. Deceleration parameters and their applicability as informal communication signal between pedestrians and automated vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2019, 62, 757–768.

4. Petzoldt, T.; Schleinitz, K.; Banse, R. The potential safety effects of a frontal brake light for motor vehicles. IET Intell. Transp. Syst. 2018, 12, 449–453.

5. Šucha, M.; Dostal, D.; Risser, R. Pedestrian-driver communication and decision strategies at marked crossings. Accid. Anal. Prev. 2017, 102, 41–50.

6. Liu, Y.-C.; Tung, Y.-C. Risk analysis of pedestrians’ road-crossing decisions: Effects of age, time gap, time of day, and vehicle speed. Saf. Sci. 2014, 63, 77–82.

7. Rodríguez, P. Safety of Pedestrians and Cyclists When Interacting with Automated Vehicles: A Case Study of the Wepods. 2017. Available online: https://www.raddelft.nl/wp-content/uploads/2017/06/Paola-Rodriguez-Safety-of-Pedestrians-and-Cyclists-when-Interacting-with…pdf (accessed on 19 April 2019).

8. Li, Y.; Dikmen, M.; Hussein, T.; Wang, Y.; Burns, C. To Cross or Not to Cross: Urgency-Based External Warning Displays on Autonomous Vehicles to Improve Pedestrian Crossing Safety. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Toronto, ON, Canada, 23–25 September 2018; pp. 188–197.

9. Färber, B. Communication and Communication Problems between Autonomous Vehicles and Human Drivers. In Autonomous Driving, 1st ed.; Maurer, M., Gerdes, J.C., Lenz, B., Winner, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; pp. 125–144.

10. Schieben, A.; Wilbrink, M.; Kettwich, C.; Madigan, R.; Louw, T.; Merat, N. Designing the interaction of automated vehicles with other traffic participants: Design considerations based on human needs and expectations. Cogn. Technol. Work 2019, 21, 69–85.

11. Jayaraman, S.K.; Creech, C.; Tilbury, D.M.; Yang, X.J.; Pradhan, A.K.; Tsui, K.M.; Robert, L.P. Pedestrian trust in automated vehicles: Role of traffic signal and AV driving behavior. Front. Robot. AI 2019, 6, 14.

12. Faas, S.M.; Mathis, L.-A.; Baumann, M. External HMI for self-driving vehicles: Which information shall be displayed? Transp. Res. Part F Traffic Psychol. Behav. 2020, 68, 171–186.

13. De Clercq, K.; Dietrich, A.; Núñez Velasco, J.P.; de Winter, J.; Happee, R. External human-machine interfaces on automated vehicles: Effects on pedestrian crossing decisions. Hum. Factors 2019, 61, 1353–1370.

14. Rothenbücher, D.; Li, J.; Sirkin, D.; Mok, B.; Ju, W. Ghost Driver: A Field Study Investigating the Interaction between Pedestrians and Driverless Vehicles. In Proceedings of the 25th IEEE International Symposium on Robot and Human Interactive Communication (IEEE Ro-Man ’16), New York, NY, USA, 26–31 August 2016; pp. 795–802.

15. Song, Y.E.; Lehsing, C.; Fuest, T.; Bengler, K. External HMIs and Their Effect on the Interaction between Pedestrians and Automated Vehicles. In Proceedings of the 1st International Conference on Intelligent Human Systems Integration (IHSI ’18), Dubai, United Arab Emirates, 7–9 January 2018; pp. 13–18.

16. Böckle, M.-P.; Brenden, A.P.; Klingegård, M.; Habibovic, A.; Bout, M. SAV2P: Exploring the impact of an interface for shared automated vehicles on pedestrians’ experience. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’17), New York, NY, USA, 24–27 September 2017; pp. 136–140.

17. Chang, C.-M.; Toda, K.; Sakamoto, D.; Igarashi, T. Eyes on a Car: An Interface Design for Communication between an Autonomous Car and a Pedestrian. In Proceedings of the 9th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, New York, NY, USA, 24–27 September 2017; pp. 65–73.

18. Habibovic, A.; Andersson, J.; Malmsten Lundgren, V.; Klingegård, M.; Englund, C.; Larsson, S. External Vehicle Interfaces for Communication with Other Road Users? In Road Vehicle Automation 5; Meyer, G., Beiker, S., Eds.; Springer: Cham, Switzerland, 2019; pp. 91–102.

19. Hudson, C.R.; Deb, S.; Carruth, D.W.; McGinley, J.; Frey, D. Pedestrian Perception of Autonomous Vehicles with External Interacting Features. In Proceedings of the 9th International Conference on Applied Human Factors and Ergonomics (AHFE ’18), Orlando, FL, USA, 21–25 July 2018; pp. 33–39.

20. Ackermann, C.; Beggiato, M.; Schubert, S.; Krems, J.F. An experimental study to investigate design and assessment criteria: What is important for communication between pedestrians and automated vehicles? Appl. Ergon. 2019, 75, 272–282.

21. Kooijman, L.; Happee, R.; de Winter, J.C.F. How do eHMIs affect pedestrians’ crossing behavior? A study using a head-mounted display combined with a motion suit. Information 2019, 10, 386.

22. Mahadevan, K.; Sanoubari, E.; Somanath, S.; Young, J.E.; Sharlin, E. AV-Pedestrian Interaction Design Using a Pedestrian Mixed Traffic Simulator. In Proceedings of the 2019 on Designing Interactive Systems Conference (DIS ’19), San Diego, CA, USA, 23–28 June 2019; pp. 475–486.

23. Eisma, Y.B.; van Bergen, S.; ter Brake, S.M.; Hensen, M.T.T.; Tempelaar, W.J.; de Winter, J.C.F. External human–machine interfaces: The effect of display location on crossing intentions and eye movements. Information 2020, 11, 13.

24. Lagström, T.; Lundgren, V.M. Automated Vehicle’s Interaction with Pedestrians. 2015. Available online: http://publications.lib.chalmers.se/records/fulltext/238401/238401.pdf (accessed on 20 April 2019).

25. Texas A&M Transportation Institute. Variable Speed Limits. 2018. Available online: https://mobility.tamu.edu/mip/strategies-pdfs/active-traffic/technical-summary/Variable-Speed-Limit-4-Pg.pdf (accessed on 10 February 2020).

26. Walker, F.; Dey, D.; Martens, M.; Pfleging, B.; Eggen, B.; Terken, J. Feeling-of-Safety Slider: Measuring Pedestrian Willingness to Cross Roads in Field Interactions with Vehicles. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019.

27. Dey, D.; Walker, F.; Martens, M.; Terken, J. Gaze Patterns in Pedestrian Interaction with Vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’19), Utrecht, The Netherlands, 22–25 September 2019; pp. 369–378.

28. Dey, D.; Martens, M.; Eggen, B.; Terken, J. Pedestrian road-crossing willingness as a function of vehicle automation, external appearance, and driving behaviour. Transp. Res. Part F Traffic Psychol. Behav. 2019, 65, 191–205.

29. Bazilinskyy, P.; Dodou, D.; de Winter, J. Survey on eHMI concepts: The effect of text, color, and perspective. Transp. Res. Part F Traffic Psychol. Behav. 2019, 67, 175–194.

30. Fridman, L.; Mehler, B.; Xia, L.; Yang, Y.; Facusse, L.Y.; Reimer, B. To Walk or not to walk: Crowdsourced assessment of external vehicle-to-pedestrian displays. arXiv 2017, arXiv:1707.02698.

31. Fuest, T.; Michalowski, L.; Träris, L.; Bellem, H.; Bengler, K. Using the Driving Behavior of an Automated Vehicle to Communicate Intentions: A Wizard of Oz Study. In Proceedings of the 21st International Conference on Intelligent Transportation Systems (ITSC ’18), Maui, HI, USA, 4–7 November 2018; pp. 3596–3601.

32. Lee, Y.M.; Uttley, J.; Solernou, A.; Giles, O.; Romano, R.; Markkula, G.; Merat, N. Investigating Pedestrians’ Crossing Behaviour During Car Deceleration Using Wireless Head Mounted Display: An Application Towards the Evaluation of eHMI of Automated Vehicles. In Proceedings of the Tenth International Driving Symposium on Human Factors in Driver Assessment, Training and Vehicle Design, Santa Fe, NM, USA, 24–27 June 2019; pp. 252–258.

33. Palmeiro, A.R.; van der Kint, S.; Vissers, L.; Farah, H.; de Winter, J.C.F.; Hagenzieker, M. Interaction between pedestrians and automated vehicles: A Wizard of Oz experiment. Transp. Res. Part F Traffic Psychol. Behav. 2018, 58, 1005–1020.

34. Rietveld, E. Situated normativity: The normative aspect of embodied cognition in unreflective action. Mind 2008, 117, 973–1001.

35. Herbert, B.M.; Pollatos, O. The body in the mind: On the relationship between interoception and embodiment. Top. Cogn. Sci. 2012, 4, 692–704.

36. SAE International. Automated Driving System (ADS) Marker Lamp (J3134); SAE International: Warrendale, PA, USA, 2019.

37. Faas, S.M.; Baumann, M. Yielding Light Signal Evaluation for Self-Driving Vehicle and Pedestrian Interaction. In Proceedings of the 2nd International Conference on Human Systems Engineering and Design: Future Trends and Applications (IHSED ’19), Munich, Germany, 16–18 September 2019; pp. 189–194.

38. Mahadevan, K.; Somanath, S.; Sharlin, E. Communicating Awareness and Intent in Autonomous Vehicle-Pedestrian Interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’18), Montreal, QC, Canada, 21–27 April 2018; pp. 1–12.

39. Faas, S.M.; Kao, A.C.; Baumann, M. A longitudinal Video Study on Communicating Status and Intent for Self-Driving Vehicle–Pedestrian Interaction. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’20), Oahu, HI, USA, 25–30 April 2020.

40. Dahlbäck, N.; Jönsson, A.; Ahrenberg, L. Wizard of Oz Studies: Why and How. In Proceedings of the 1st International Conference on Intelligent User Interfaces (IUI ’93), Orlando, FL, USA, 4–7 January 1993; pp. 193–200.

41. Garsten, E. Mercedes-Benz, Bosch Launch Robocar Ride-Hailing Pilot in San Jose. 2019. Available online: https://www.forbes.com/sites/edgarsten/2019/12/09/mercedes-benz-bosch-launch-robocar-ride-hailing-pilot-in-san-jose/#441deb7e3c5b (accessed on 21 June 2020).

42. Randazzo, R. Waymo’s Driverless Cars on the Road: Cautious, Clunky, Impressive. 2019. Available online: https://eu.azcentral.com/story/money/business/tech/2018/12/05/phoenix-waymo-vans-how-self-driving-cars-operate-roads/2082664002/ (accessed on 21 June 2020).

43. Ackermans, S.; Dey, D.; Ruijten, P.; Cuijpers, R.H.; Pfleging, B. The Effects of Explicit Intention Communication, Conspicuous Sensors, and Pedestrian Attitude in Interactions with Automated Vehicles. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’20), Honolulu, HI, USA, 25–30 April 2020.

44. Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 71–81.

45. Schrepp, M.; Hinderks, A.; Thomaschewski, J. Design and evaluation of a short version of the user experience questionnaire (UEQ-S). IJIMAI 2017, 103–108.

46. Bortz, J.; Schuster, C. Statistik für Human- und Sozialwissenschaftler, 7th ed.; Springer: Berlin/Heidelberg, Germany, 2010.

47. Field, A. Discovering Statistics: Cluster Analysis. 2017. Available online: https://www.discoveringstatistics.com/2017/01/13/cluster-analysis/ (accessed on 21 June 2020).

48. Field, A. Discovering Statistics Using IBM SPSS Statistics, 5th ed.; SAGE Publications Ltd.: London, UK, 2018.

49. Hinderks, A.; Schrepp, M.; Thomaschewski, J. UEQ Data Analysis Tool. 2019. Available online: https://www.ueq-online.org/Material/Short_UEQ_Data_Analysis_Tool.xlsx (accessed on 22 June 2019).

50. Sun, D.J.; Elefteriadou, L. Lane-Changing Behavior on Urban Streets: An “In-Vehicle” Field Experiment-Based Study. Comput.-Aided Civ. Infrastruct. Eng. 2012, 27, 525–542.

51. Feldstein, I.T.; Lehsing, C.; Dietrich, A.; Bengler, K. Pedestrian simulators for traffic research: State of the art and future of a motion lab. Int. J. Hum. Factors Model. Simul. 2018, 6, 250–265.

52. Hettinger, L.J.; Riccio, G.E. Visually induced motion sickness in virtual environments. Presence Teleoperators Virtual Environ. 1992, 3, 306–310.

53. Weiß, T.; Petzoldt, T.; Bannert, M.; Krems, J.F. Einsatz von computergestuetzten Medien und Fahrsimulatoren in Fahrausbildung, Fahrerweiterbildung und Fahrerlaubnispruefung. Ber. Bundesanst. Straßenwesen Reihe M (Mensch Sicherh.) 2009, 202. Available online: https://bast.opus.hbz-nrw.de/opus45-bast/frontdoor/deliver/index/docId/1/file/BASt_Schlussbericht_November_2007.pdf (accessed on 10 July 2020).

| Participants’ Task | Left Screen | Right Screen |
|---|---|---|
| Participant is ready for the next trial and asked to step on the “sidewalk”. | | |
| Participant steps on the footprint… | | |
| …which triggers the 3 s countdown… | | |
| …followed by the video of the approaching vehicle. | | |
| To indicate her/his crossing decision, the participant steps off the “sidewalk” to enter the “crosswalk”… | | |
| …which is a safe decision for yielding videos (test conditions 1, 2, 3, 4, 5), triggering a crossing video. | | |
| …which is a safe decision if letting the vehicle go first for non-yielding videos (test conditions 4b, 5b), triggering a crossing video. | | |
| …which is an unsafe decision if the vehicle is still approaching for non-yielding videos (test conditions 4b, 5b), triggering a visual warning and a passing car video. | | |

| Test Condition | This Lab Study ¹: M | This Lab Study ¹: SD | Test Track Study ²: M | Test Track Study ²: SD | df | t-Value | p-Value | r |
|---|---|---|---|---|---|---|---|---|
| (1) no eHMI | −0.49 | 1.30 | 0.31 | 1.74 | 62 | −2.10 | p = 0.040 * | 0.26 |
| (2) status eHMI | 1.03 | 1.37 | 1.56 | 1.05 | 62 | −1.71 | p = 0.092 | 0.21 |
| (3) status+intent eHMI | 1.93 | 0.86 | 1.98 | 0.85 | 62 | −0.23 | p = 0.822 | |
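The effect sizes r reported for conditions (1) and (2) are consistent with the common conversion from a t statistic and its degrees of freedom, r = sqrt(t² / (t² + df)). The sketch below reproduces them. That this is the formula the authors used is an assumption, and `effect_size_r` is a hypothetical helper name.

```python
import math


def effect_size_r(t_value: float, df: int) -> float:
    """Effect size r from a t statistic: r = sqrt(t^2 / (t^2 + df)).

    Assumption: this standard conversion matches the one behind the table;
    the source does not state the formula.
    """
    return math.sqrt(t_value ** 2 / (t_value ** 2 + df))


if __name__ == "__main__":
    # (t-value, df) pairs taken from the table rows that report r.
    for label, t_value, df in [("(1) no eHMI", -2.10, 62), ("(2) status eHMI", -1.71, 62)]:
        print(f"{label}: r = {effect_size_r(t_value, df):.2f}")
```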

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Faas, S.M.; Mattes, S.; Kao, A.C.; Baumann, M. Efficient Paradigm to Measure Street-Crossing Onset Time of Pedestrians in Video-Based Interactions with Vehicles. Information 2020, 11, 360. https://doi.org/10.3390/info11070360