A Framework for Generating Extractive Summary from Multiple Malayalam Documents

Abstract

1. Introduction

2. Related Works

3. System Architecture

3.1. Preprocessing

- Sentence segmentation: Each document $D$ in the input set is a collection of sentences. It is segmented as $D = \{S_1, S_2, \ldots, S_n\}$, where $S_i$ denotes the $i$th sentence in the document and $n$ is the number of sentences in the document. Language-specific rules are used for sentence boundary detection and for handling abbreviations.

- Tokenization: The terms in each sentence are tokenized as $S_i = \{t_1, t_2, \ldots, t_m\}$, where each $t_j$ denotes a distinct term occurring in sentence $S_i$ of $D$ and $m$ is the number of words in $S_i$.

- Stop word removal: Stop words are generally the most common words in a language. They are filtered out using a stop word list. Removing stop words simplifies the vectorization of the sentence.

- Stemming: Stemming is the process of reducing inflected words to their stems. It is essential because the same word can appear in a document in several grammatically inflected forms. This module takes a rule-based approach built on a set of suffix-stripping rules, which are applied iteratively to handle multiple levels of inflection. The stemmer used is similar to Indicstemmer [35].
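The four preprocessing steps above can be sketched as a small pipeline. This is a minimal illustration, not the authors' implementation: the function names, the punctuation set, and the toy stop word, suffix, and abbreviation lists are all assumptions, and a real system would supply Malayalam-specific lists and rules. Tokens are split on whitespace rather than with `\w`, because Python's `\w` does not match the combining vowel signs used in Malayalam script.

```python
import re

PUNCTUATION = ".,;:!?\"'()[]"  # assumed punctuation set, for illustration only


def split_sentences(text, abbreviations=frozenset()):
    """Split on ., ? or ! followed by whitespace, but not after a known abbreviation."""
    sentences, buffer = [], ""
    for part in re.split(r"(?<=[.?!])\s+", text.strip()):
        buffer = f"{buffer} {part}".strip()
        if not buffer:
            continue
        if buffer.split()[-1] in abbreviations:
            continue  # the period belongs to an abbreviation, keep accumulating
        sentences.append(buffer)
        buffer = ""
    if buffer:
        sentences.append(buffer)
    return sentences


def stem(word, suffixes, min_stem=2):
    """Iteratively strip the longest matching suffix until no rule applies."""
    ordered = sorted((s for s in suffixes if s), key=len, reverse=True)
    changed = True
    while changed:
        changed = False
        for suffix in ordered:
            if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
                word = word[: -len(suffix)]
                changed = True
                break
    return word


def preprocess(text, stop_words, suffixes, abbreviations=frozenset()):
    """Return one list of stemmed, stop-word-free terms per sentence."""
    result = []
    for sentence in split_sentences(text, abbreviations):
        tokens = [tok.strip(PUNCTUATION) for tok in sentence.split()]
        terms = [stem(tok, suffixes) for tok in tokens if tok and tok not in stop_words]
        result.append(terms)
    return result
```

Repeating the suffix pass until nothing changes is what lets a multiply inflected word lose one suffix per pass, which is the iterative behaviour the stemming step describes.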

3.2. Sentence Encoder

- TF-IDF representation: Term Frequency-Inverse Document Frequency (TF-IDF) is a vectorization technique commonly used in information retrieval. It measures how often a term occurs in a specific document (tf) and multiplies this by a value that discounts terms that are common across the complete document collection (idf), as in Equation (1). Terms with the highest tf-idf scores are those that are distinctively frequent in a document, that is, frequent in that document but rare in the rest of the collection. The sentence score is obtained by taking the average tf-idf of the terms in the sentence.

- Word2Vec representation: Words are mapped to vectors by methods such as the Continuous Bag-of-Words (CBOW) model and the Continuous Skip-Gram model [36]. These embeddings capture semantic and syntactic information about a word, and semantically similar words are mapped to nearby points in the vector space. In this work, the terms in the document are vectorized using the pretrained FastText word embedding model for Malayalam, trained on Common Crawl and Wikipedia. This FastText model follows the CBOW model with position weights, in dimension 300, with character n-grams of length 5. The final sentence-level representation is the average of the vectors corresponding to the words in the sentence.

- Smooth Inverse Frequency encoding: Like a word embedding, a sentence embedding maps a full sentence into a vector space, and it inherits features from the underlying word embeddings. A plain average of the word embeddings in a sentence tends to give too much weight to terms that are largely irrelevant. Arora et al. propose a simple unsupervised approach to sentence embedding construction, the smooth inverse frequency (SIF) embedding, which they put forward as a new baseline for sentence embeddings [37]. Their approach is summarized in Algorithm 1 [37].

  SIF takes the weighted average of the word embeddings in the sentence. Every word embedding is weighted by $a/(a + p(w))$, where $a$ is a parameter that is typically set to a small constant (on the order of $10^{-3}$ in [37]) and $p(w)$ is the estimated frequency of the word in a reference corpus. After all the weighted averages have been computed, the first principal component of the resulting sentence vectors is computed, and the projection of every sentence vector onto this component is removed. The authors claim that this common component removal reduces the amount of syntactic information contained in the sentence embedding, thereby allowing the semantic information to dominate the direction of the vector.

  In this work, the SIF model first computes the weighted average of the word embedding vectors as the initial embedding vector of each sentence in the document, with the word embeddings taken from the pretrained FastText model for Malayalam. These initial sentence embedding vectors are then modified using Principal Component Analysis to obtain the final embedding vector of each sentence.
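Returning to the first encoder in the list above, the TF-IDF sentence score can be sketched as follows. This is a minimal illustration under stated assumptions: the function name is hypothetical, tf is taken as the relative frequency of a term within its document, and idf is taken as $\log(N/\mathrm{df})$, which is one common variant and may differ from the exact form of Equation (1).

```python
import math
from collections import Counter


def tfidf_sentence_scores(documents):
    """Score each sentence by the average tf-idf of its terms.

    documents: list of documents, each a list of sentences,
               each sentence a list of preprocessed terms.
    Returns a list of per-document lists of sentence scores.
    """
    n_docs = len(documents)

    # Document frequency: in how many documents does each term occur?
    df = Counter()
    for doc in documents:
        df.update({term for sentence in doc for term in sentence})

    scores = []
    for doc in documents:
        tf = Counter(term for sentence in doc for term in sentence)
        total_terms = sum(tf.values())
        doc_scores = []
        for sentence in doc:
            if not sentence:
                doc_scores.append(0.0)
                continue
            # Assumed weighting: relative term frequency times log(N / df).
            weights = [
                (tf[term] / total_terms) * math.log(n_docs / df[term])
                for term in sentence
            ]
            doc_scores.append(sum(weights) / len(weights))
        scores.append(doc_scores)
    return scores
```

With this idf variant, a term that occurs in every document of the set contributes zero, so sentences built mostly from collection-wide vocabulary score low.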

Algorithm 1: SIF embedding algorithm by Arora et al. (2017) [37].
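The SIF procedure described in this section can be sketched in plain Python as below. It is an illustrative sketch rather than the authors' code: `sif_weight`, `sif_embeddings`, the default `a=1e-3`, and the use of power iteration to approximate the first principal direction are assumptions made here, and `word_vectors` and `word_prob` stand in for the FastText vectors and the corpus frequency estimates.

```python
import math


def sif_weight(p_w, a=1e-3):
    """SIF weight a / (a + p(w)) for a word with estimated frequency p(w)."""
    return a / (a + p_w)


def sif_embeddings(sentences, word_vectors, word_prob, a=1e-3, power_iters=100):
    """Weighted-average sentence vectors with the common component removed."""
    dim = len(next(iter(word_vectors.values())))

    # Step 1: weighted average of the word vectors in each sentence.
    embeddings = []
    for tokens in sentences:
        known = [t for t in tokens if t in word_vectors]
        vec = [0.0] * dim
        for t in known:
            w = sif_weight(word_prob.get(t, 0.0), a)
            for k, x in enumerate(word_vectors[t]):
                vec[k] += w * x
        if known:
            vec = [x / len(known) for x in vec]
        embeddings.append(vec)

    # Step 2: approximate the first principal direction u by power
    # iteration on X^T X, where X stacks the sentence vectors as rows.
    u = [1.0 / math.sqrt(dim)] * dim
    for _ in range(power_iters):
        proj = [sum(v[k] * u[k] for k in range(dim)) for v in embeddings]
        nxt = [sum(p * v[k] for p, v in zip(proj, embeddings)) for k in range(dim)]
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0.0:
            break
        u = [x / norm for x in nxt]

    # Step 3: remove each vector's projection onto u.
    result = []
    for v in embeddings:
        dot = sum(v[k] * u[k] for k in range(dim))
        result.append([v[k] - dot * u[k] for k in range(dim)])
    return result
```

A numerical library would normally compute the principal direction with a singular value decomposition; power iteration is used here only to keep the sketch free of dependencies.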

3.3. Sentence Selection

- TextRank: TextRank is a graph-based ranking algorithm for extractive single-document summarization that can be extended to multi-document summarization (MDS). Graph-based methods are frequently used in text summarization. TextRank ranks each vertex in a graph by its importance relative to the vertices connected to it. The ranks are updated recursively until they converge globally, as described in [18]. The idea is the same as in the PageRank algorithm introduced by Brin and Page (1998) [27]: the more incoming links a page has, the more important it is, and the more important a page is, the more important its outgoing links are.

  With text instead of web pages, the difference lies in how the links are formed. In TextRank the vertices are sentences rather than pages, and the links are determined by sentence-level similarity under a chosen metric [18]. For example, sentences can be represented by their TF-IDF vectors, and cosine similarity can be used to measure how close two sentences are. The resulting graph is undirected. PageRank is traditionally applied to directed graphs, but it can be applied to an undirected graph by treating the out-degree of each vertex as equal to its in-degree. As sentence scores are updated iteratively along the edges of the graph, the most important sentences attain higher scores at convergence. The vertices with the highest scores represent the most important sentences and are selected for the summary.

  The PageRank algorithm starts by initializing the rank of each node to $1/N$, where $N$ is the number of nodes in the graph, which equals the number of sentences in the document. The proposed work modifies the original PageRank algorithm by using the noun count of each sentence: the initial rank of each node is the number of nouns in the sentence instead of $1/N$.

  In the proposed model the graph is built from a document, and the links between its sentences carry weights. The ranking formula is modified so that it takes these edge weights into account when computing the score of a vertex, as in Equation (2), where $w_{ji}$ is the weight of the edge connecting the sentences $S_j$ and $S_i$, given by the cosine similarity between the two sentences, and $d$ is the damping factor, which is conventionally set to 0.85 in PageRank.

  To determine the initial rank of each sentence, its noun count has to be obtained. The nouns in each sentence are tagged using the POS tagger developed by CDAC [38].

  Consider a document with five sentences whose noun counts are listed in Table 2. The cosine similarities between sentences, which serve as edge weights, are recorded in Table 3. Table 4 records the new rank of each sentence after one iteration of Algorithm 2, which uses the modified PageRank algorithm. The calculation of the modified PageRank is as follows:

- Maximum Marginal Relevance: Maximum Marginal Relevance (MMR) is a classic unsupervised algorithm for sentence selection. It is used to maximize relevance and novelty in automatic summarization [39]. MMR was originally proposed for the information retrieval problem, where it measures the relevance between the user query $Q$ and sentences in the document. This measure is calculated by the formula

  $$\mathrm{MMR} = \arg\max_{D_i \in R \setminus A} \left[ \lambda \, \mathrm{Sim}_1(D_i, Q) - (1 - \lambda) \max_{D_j \in A} \mathrm{Sim}_2(D_i, D_j) \right] \qquad (4)$$

  where

  - $Q$ is the query;
  - $R$ is the set of documents related to the query;
  - $A$ is the subset of documents in $R$ already selected;
  - $R \setminus A$ is the set of unselected documents in $R$;
  - $\lambda$ is a constant in the range [0, 1], used to diversify the results;
  - $\mathrm{Sim}_1(D_i, Q)$ is the similarity between the sentence under consideration and $Q$;
  - $\mathrm{Sim}_2(D_i, D_j)$ is the similarity between the sentence under consideration and the sentences already in the summary.

  To apply MMR to document summarization, the following changes are made to Equation (4): $R$ is the set of sentences obtained from the previous process, i.e., TextRank; $D_i$ is an element of the unselected sentences in $R$; $D_j$ is an element of the existing summary list; and $Q$ is formed from the terms that best describe the input set $R$, namely those with high TF-IDF values. $\mathrm{Sim}_1$ and $\mathrm{Sim}_2$ are the similarities between sentences, calculated as the cosine similarity between their corresponding TF-IDF vectors.
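The MMR selection step described above can be sketched as a greedy loop: at each step, pick the unselected sentence that best trades relevance to the query against similarity to what is already in the summary. The function names and the default `lam=0.5` are illustrative assumptions, and the vectors stand in for the TF-IDF vectors of the sentences and of the query $Q$.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mmr_select(sentence_vectors, query_vector, k, lam=0.5):
    """Greedily select up to k sentence indices by Maximum Marginal Relevance."""
    selected = []
    remaining = list(range(len(sentence_vectors)))

    while remaining and len(selected) < k:

        def score(i):
            relevance = cosine(sentence_vectors[i], query_vector)
            # Redundancy is the highest similarity to any selected sentence.
            redundancy = max(
                (cosine(sentence_vectors[i], sentence_vectors[j]) for j in selected),
                default=0.0,
            )
            return lam * relevance - (1.0 - lam) * redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)

    return selected
```

For example, with sentence vectors `[[1, 0], [1, 0], [0.6, 0.8]]` and query `[1, 0]`, setting `lam=1.0` ranks purely by relevance and selects the two identical sentences, whereas `lam=0.3` penalizes the duplicate and selects the first and third sentences instead.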

Algorithm 2: TextRank algorithm with modified PageRank.
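A noun-count-initialized TextRank iteration can be sketched as below. Since Equation (2) is not reproduced here, the sketch assumes the standard weighted TextRank update of Mihalcea and Tarau [18], $WS(S_i) = (1 - d) + d \sum_{j} \frac{w_{ji}}{\sum_{k} w_{jk}} WS(S_j)$, and only changes the initial ranks to the noun counts. The function name, the tolerance, and the default `d=0.85` are assumptions, so the numbers it produces are not expected to match Table 4.

```python
def modified_textrank(weights, noun_counts, d=0.85, tol=1e-9, max_iter=1000):
    """Weighted TextRank whose initial ranks are the sentence noun counts.

    weights: symmetric matrix (list of lists) of edge weights, diagonal ignored.
    noun_counts: number of nouns in each sentence, used as the initial rank.
    """
    n = len(noun_counts)
    # Total weight on the edges leaving each vertex (self-loops excluded).
    out_weight = [
        sum(weights[j][k] for k in range(n) if k != j) for j in range(n)
    ]
    ranks = [float(c) for c in noun_counts]

    for _ in range(max_iter):
        new_ranks = []
        for i in range(n):
            incoming = sum(
                weights[j][i] / out_weight[j] * ranks[j]
                for j in range(n)
                if j != i and out_weight[j] > 0
            )
            new_ranks.append((1.0 - d) + d * incoming)
        converged = max(abs(a - b) for a, b in zip(new_ranks, ranks)) < tol
        ranks = new_ranks
        if converged:
            break
    return ranks
```

Under this particular update rule the iteration converges to the same fixed point whatever the starting ranks are, so the noun counts influence the early iterations and the speed of convergence rather than the final ordering.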

3.4. Summary Extraction

4. Description of Dataset

5. Performance Evaluation

5.1. Evaluation Metric

- Recall: Recall is the ratio of the number of valid sentences retrieved to the total number of valid sentences, both retrieved and non-retrieved.

- Precision: Precision is the ratio of the number of valid sentences retrieved to the total number of sentences retrieved in the summary, both valid and invalid.

- F-score: F-score is the harmonic mean of precision and recall, $F = 2PR/(P + R)$.
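The three definitions above translate directly into code. The sketch below works at the sentence level, treating a sentence in the system summary as valid when it also appears in the reference summary; the function names are illustrative, and ROUGE-N applies the same ratios to n-grams rather than to whole sentences.

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def precision_recall_f(system_sentences, reference_sentences):
    """Sentence-level precision, recall, and F-score of a system summary."""
    system = set(system_sentences)
    reference = set(reference_sentences)
    valid_retrieved = len(system & reference)

    precision = valid_retrieved / len(system) if system else 0.0
    recall = valid_retrieved / len(reference) if reference else 0.0
    return precision, recall, f_score(precision, recall)
```

For instance, a four-sentence system summary that shares two sentences with a three-sentence reference summary has precision 2/4, recall 2/3, and F-score 4/7.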

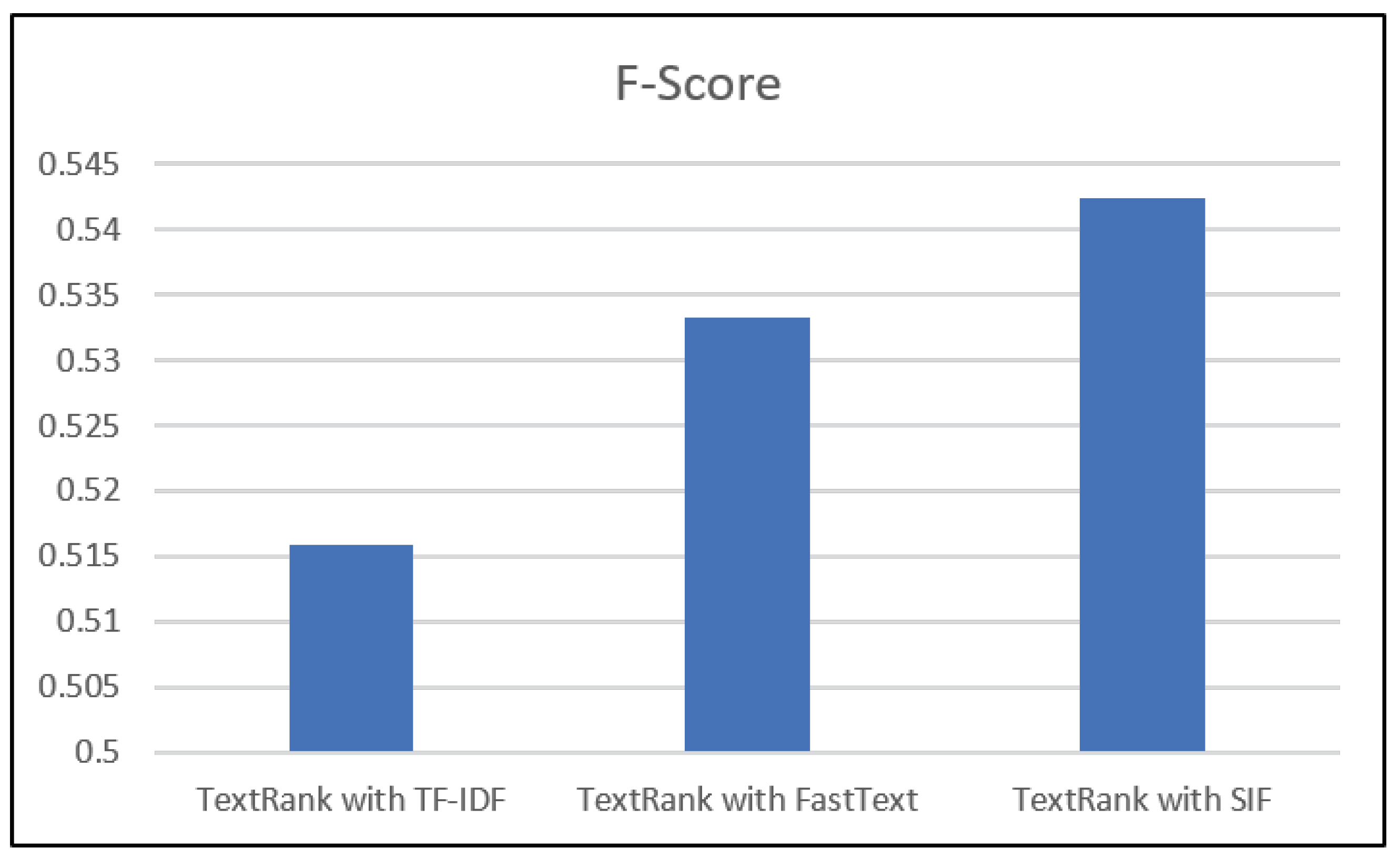

5.2. Experiments and Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Luhn, H.P. The Automatic Creation of Literature Abstracts. IBM J. Res. Dev. 1958, 2, 159–165.

2. Gong, Y.; Liu, X. Generic Text Summarization Using Relevance Measure and Latent Semantic Analysis; Association for Computing Machinery: New York, NY, USA, 2001.

3. Ouyang, Y.; Li, W.; Li, S.; Lu, Q. Applying regression models to query-focused multi-document summarization. Inf. Process. Manag. 2011, 47, 227–237.

4. Radev, D.; Blair-Goldensohn, S.; Zhang, Z. Experiments in Single and Multi-Document Summarization Using MEAD. In Proceedings of the First Document Understanding Conference, New Orleans, LA, USA, 13–14 September 2001.

5. Qiang, J.P.; Chen, P.; Ding, W.; Xie, F.; Wu, X. Multi-document summarization using closed patterns. Knowl.-Based Syst. 2016, 99, 28–38.

6. John, A.; Premjith, P.; Wilscy, M. Extractive multi-document summarization using population-based multicriteria optimization. Expert Syst. Appl. 2017, 86, 385–397.

7. Widjanarko, A.; Kusumaningrum, R.; Surarso, B. Multi document summarization for the Indonesian language based on latent dirichlet allocation and significance sentence. In Proceedings of the 2018 International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 6–7 March 2018; pp. 520–524.

8. Fang, C.; Mu, D.; Deng, Z.; Wu, Z. Word-sentence co-ranking for automatic extractive text summarization. Expert Syst. Appl. 2017, 72, 189–195.

9. Gambhir, M.; Gupta, V. Recent automatic text summarization techniques: A survey. Artif. Intell. Rev. 2017, 47, 1–66.

10. Krishnaprasad, P.; Sooryanarayanan, A.; Ramanujan, A. Malayalam text summarization: An extractive approach. In Proceedings of the 2016 International Conference on Next Generation Intelligent Systems (ICNGIS), Kottayam, India, 1–3 September 2016; pp. 1–4.

11. Kishore, K.; Gopal, G.N.; Neethu, P. Document Summarization in Malayalam with sentence framing. In Proceedings of the 2016 International Conference on Information Science (ICIS), Kochi, India, 12–13 August 2016; pp. 194–200.

12. Kabeer, R.; Idicula, S.M. Text summarization for Malayalam documents—An experience. In Proceedings of the 2014 International Conference on Data Science & Engineering (ICDSE), Kochi, India, 26–28 August 2014; pp. 145–150.

13. Ajmal, E.; Rosna, P. Summarization of Malayalam Document Using Relevance of Sentences. Int. J. Latest Res. Eng. Technol. 2015, 1, 8–13.

14. Raj, M.R.; Haroon, R.P.; Sobhana, N. A novel extractive text summarization system with self-organizing map clustering and entity recognition. Sādhanā 2020, 45, 32.

15. Manju, K.; David, P.S.; Idicula Sumam, M. An extractive multi-document summarization system for Malayalam news documents. In Proceedings of the 1st EAI International Conference on Computer Science and Engineering, Penang, Malaysia, 11–12 November 2016; p. 218.

16. Ko, Y.; Seo, J. An effective sentence-extraction technique using contextual information and statistical approaches for text summarization. Pattern Recognit. Lett. 2008, 29, 1366–1371.

17. Wu, Z.; Lei, L.; Li, G.; Huang, H.; Zheng, C.; Chen, E.; Xu, G. A Topic Modeling Based Approach to Novel Document Automatic Summarization. Expert Syst. Appl. 2017, 84, 12–23.

18. Mihalcea, R.; Tarau, P. Textrank: Bringing order into text. In Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing, Barcelona, Spain, 25–26 July 2004; pp. 404–411.

19. Elbarougy, R.; Behery, G.; El Khatib, A. Extractive Arabic Text Summarization Using Modified PageRank Algorithm. Egypt. Inform. J. 2020, 21, 73–81.

20. Goldstein, J.; Carbonell, J. Summarization: Using MMR for Diversity-Based Reranking and Evaluating Summaries; Technical Report; Language Technologies Institute at Carnegie Mellon University: Pittsburgh, PA, USA, 1998.

21. Verma, P.; Verma, A. Accountability of NLP Tools in Text Summarization for Indian Languages. J. Sci. Res. 2020, 64, 258–263.

22. Radev, D.R.; McKeown, K.R. Generating Natural Language Summaries from Multiple On-Line Sources. Comput. Linguist. 1998, 24, 469–500.

23. Erkan, G.; Radev, D. Lexpagerank: Prestige in multi-document text summarization. In Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing, Barcelona, Spain, 25–26 July 2004; pp. 365–371.

24. Barrera, A.; Verma, R. Combining Syntax and Semantics for Automatic Extractive Single-Document Summarization; Springer: Berlin/Heidelberg, Germany, 2012; pp. 366–377.

25. Uçkan, T.; Karcı, A. Extractive multi-document text summarization based on graph independent sets. Egypt. Inform. J. 2020, 21, 145–157.

26. De la Peña Sarracén, G.L.; Rosso, P. Automatic Text Summarization Based on Betweenness Centrality; Association for Computing Machinery: New York, NY, USA, 2018.

27. Page, L.; Brin, S.; Motwani, R.; Winograd, T. The PageRank Citation Ranking: Bringing Order to the Web; Technical Report; Stanford InfoLab: Stanford, CA, USA, 1999.

28. Hark, C.; Karcı, A. Karcı summarization: A simple and effective approach for automatic text summarization using Karcı entropy. Inf. Process. Manag. 2020, 57, 102187.

29. Nasar, Z.; Jaffry, S.W.; Malik, M.K. Textual keyword extraction and summarization: State-of-the-art. Inf. Process. Manag. 2019, 56, 102088.

30. Moratanch, N.; Chitrakala, S. A survey on extractive text summarization. In Proceedings of the 2017 International Conference on Computer, Communication and Signal Processing (ICCCSP), Chennai, India, 10–11 January 2017; pp. 1–6.

31. Nallapati, R.; Zhai, F.; Zhou, B. SummaRuNNer: A Recurrent Neural Network Based Sequence Model for Extractive Summarization of Documents. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, AAAI’17, San Francisco, CA, USA, 4–9 February 2017; pp. 3075–3081.

32. Al-Sabahi, K.; Zuping, Z.; Nadher, M. A Hierarchical Structured Self-Attentive Model for Extractive Document Summarization (HSSAS). IEEE Access 2018, 6, 24205–24212.

33. Dhanya, P.M.; Jathavedan, M. Article: Comparative Study of Text Summarization in Indian Languages. Int. J. Comput. Appl. 2013, 75, 17–21.

34. Sunitha, C.; Jaya, A.; Ganesh, A. A study on abstractive summarization techniques in indian languages. Procedia Comput. Sci. 2016, 87, 25–31.

35. Thottungal, S. Indic Stemmer. 2019. Available online: https://silpa.readthedocs.io/projects/indicstemmer (accessed on 12 March 2019).

36. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

37. Arora, S.; Liang, Y.; Ma, T. A simple but tough-to-beat baseline for sentence embeddings. In Proceedings of the ICLR 2017, Toulon, France, 24–26 April 2017.

38. Natural Language Processing at KBCS, CDAC Mumbai. Available online: http://kbcs.in/tools.php (accessed on 18 May 2019).

39. Verma, P.; Om, H. A novel approach for text summarization using optimal combination of sentence scoring methods. Sādhanā 2019, 44, 110.

40. Jones, K.S.; Galliers, J.R. Evaluating Natural Language Processing Systems: An Analysis and Review; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1995; Volume 1083.

41. Ibrahim Altmami, N.; El Bachir Menai, M. Automatic summarization of scientific articles: A survey. J. King Saud Univ. Comput. Inf. Sci. 2020.

42. Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Workshop on Text Summarization Branches Out (WAS 2004), Barcelona, Spain, 25–26 July 2004; pp. 74–81.

43. Kumar, K.V.; Yadav, D. An improvised extractive approach to hindi text summarization. In Information Systems Design and Intelligent Applications; Springer: Berlin/Heidelberg, Germany, 2015; pp. 291–300.

44. Gupta, V.; Kaur, N. A novel hybrid text summarization system for Punjabi text. Cogn. Comput. 2016, 8, 261–277.

45. Rathod, Y.V. Extractive Text Summarization of Marathi News Articles. Int. Res. J. Eng. Technol. 2018, 5, 1204–1210.

46. Banu, M.; Karthika, C.; Sudarmani, P.; Geetha, T. Tamil document summarization using semantic graph method. In Proceedings of the International Conference on Computational Intelligence and Multimedia Applications (ICCIMA 2007), Sivakasi, India, 13–15 December 2007; Volume 2, pp. 128–134.

| Authors, Publication Year | Extractive/Abstractive | Single/Multi | Method Used | Evaluation |
|---|---|---|---|---|
| [10] KrishnaPrasad et al., 2016 | Extractive | Single | Heuristic | Automatic, ROUGE score; ROUGE1: 0.57, ROUGE2: 0.53 |
| [11] Kishore et al., 2016 | Abstractive | Single | Semantic representation and sentence framing | Manual, F-score: 0.48 on news articles |
| [12] Kabeer et al., 2014 | Extractive, Abstractive | Single | Statistical scoring, Semantic graph | Automatic, ROUGE score (avg); ROUGE1: 0.533 (extract), ROUGE1: 0.40 (abstract) |
| [13] Ajmal et al., 2015 | Extractive | Single | MMR | Metric not used |
| [14] Rahulraj et al., 2020 | Extractive | Single | Semantic role labelling, self-organizing maps | Manual, F-score: 0.75 for a small data set |
| [15] Manju et al., 2016 | Extractive | Multidocument | Statistical score | Manual, F-score: 0.45 |

| Sentence # | S_1 | S_2 | S_3 | S_4 | S_5 |
|---|---|---|---|---|---|
| Initial Rank (Noun Count) | 5 | 3 | 2 | 1 | 2 |

| | S_1 | S_2 | S_3 | S_4 | S_5 |
|---|---|---|---|---|---|
| S_1 | - | 3 | 5 | 1 | 3 |
| S_2 | 3 | - | 2 | 4 | 1 |
| S_3 | 5 | 2 | - | 1 | 2 |
| S_4 | 1 | 4 | 1 | - | 3 |
| S_5 | 3 | 1 | 2 | 3 | - |

| Sentence # | S_1 | S_2 | S_3 | S_4 | S_5 |
|---|---|---|---|---|---|
| New Rank (after one iteration) | 5.78 | 5.56 | 7.9 | 5.56 | 5.56 |

| Dataset Parameter | Value |
|---|---|
| Number of sets of documents | 100 |
| Number of documents in each set | 3 |
| Average number of sentences per document | 21.7 |
| Maximum number of sentences per document | 70 |
| Minimum number of sentences per document | 10 |
| Summary length (%) | 40 |

| Model | Metric | ROUGE1 | ROUGE2 |
|---|---|---|---|
| Statistical with TF-IDF | Recall | 0.480132 | 0.438446 |
| Statistical with TF-IDF | Precision | 0.441331 | 0.417328 |
| Statistical with TF-IDF | F-score | 0.459915 | 0.427626 |
| Modified TextRank with TF-IDF | Recall | 0.536102 | 0.506133 |
| Modified TextRank with TF-IDF | Precision | 0.497013 | 0.472813 |
| Modified TextRank with TF-IDF | F-score | 0.515818 | 0.488906 |
| Modified TextRank with FastText | Recall | 0.553261 | 0.500123 |
| Modified TextRank with FastText | Precision | 0.514732 | 0.491721 |
| Modified TextRank with FastText | F-score | 0.533302 | 0.495886 |
| Modified TextRank with SIF | Recall | 0.568312 | 0.512782 |
| Modified TextRank with SIF | Precision | 0.518761 | 0.481371 |
| Modified TextRank with SIF | F-score | 0.542407 | 0.49658 |
| Modified TextRank with SIF+MMR | Recall | 0.588137 | 0.543281 |
| Modified TextRank with SIF+MMR | Precision | 0.56211 | 0.507652 |
| Modified TextRank with SIF+MMR | F-score | 0.574829 | 0.524863 |

| Methods | Language | Precision | Recall | F-Score |
|---|---|---|---|---|
| Kumar et al. (2015) [43] | Hindi | 0.44 | 0.32 | 0.37 |
| Gupta and Kaur (2016) [44] | Punjabi | 0.45 | 0.21 | 0.29 |
| Rathod (2018) [45] | Marathi | 0.43 | 0.27 | 0.33 |
| Banu et al. (2007) [46] | Tamil | 0.42 | 0.31 | 0.35 |
| TextRank with SIF + MMR (Proposed Model) | Malayalam | 0.59 | 0.56 | 0.57 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Manju, K.; David Peter, S.; Idicula, S.M. A Framework for Generating Extractive Summary from Multiple Malayalam Documents. Information 2021, 12, 41. https://doi.org/10.3390/info12010041