WebPGA: An Educational Technology That Supports Learning by Reviewing Paper-Based Programming Assessments

Abstract

1. Introduction

2. Literature Review

2.1. Assessments in Higher Education

2.2. Role of Feedback in Learning

2.3. Technology Support in Feedback Generation

2.4. Behavioral Analytics in Programming Learning

2.5. Personalized Guidance in Learning

3. Web-based Programming Grading Assistant (WebPGA)

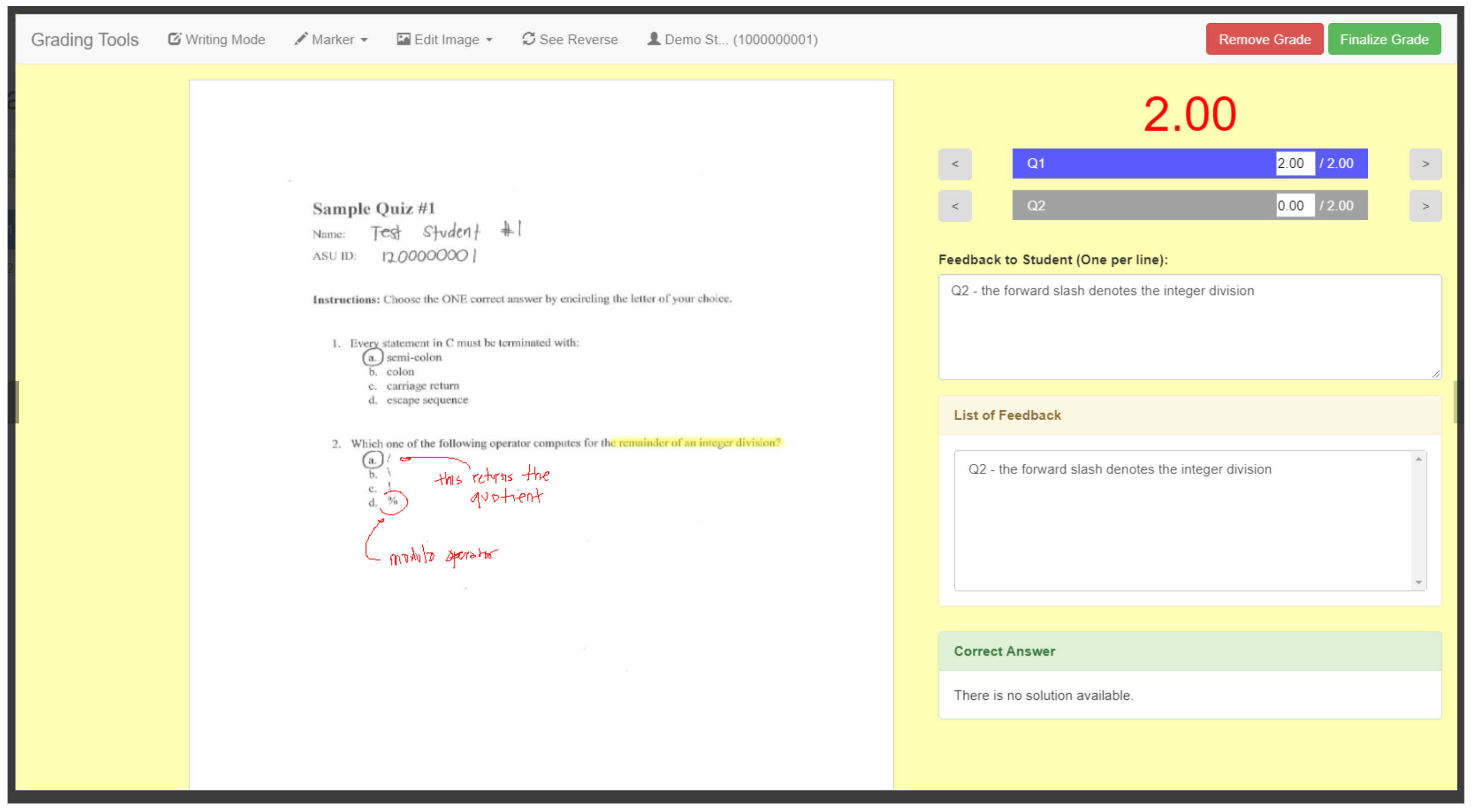

3.1. Grading Interface

3.1.1. Image Annotation

3.1.2. Grading Rubrics

3.1.3. Free-Form Feedback

3.2. Reviewing Interface

3.2.1. Dashboard

3.2.2. Assessment Overview

3.2.3. Question Overview

3.2.4. Personalization

Algorithm 1 Assessment and Question Listing in the Reminders Panel

Algorithm 2 Recommended Sequence

4. Methods

4.1. Data Collection

4.2. Data Processing

4.2.1. Overall Academic Performance

4.2.2. Performance Trajectory

4.2.3. Reviewing Behavior

4.2.4. Personalized Guidance

4.3. Data Analysis

5. Results and Discussion

5.1. The Learning Effects of Reviewing Behaviors

5.1.1. Impact of Assessment Types: Quizzes and Examinations

5.1.2. High Achievers Focused on Reviewing Their Mistakes

5.1.3. Improving Students Reviewed Most of their Mistakes

5.1.4. Spending More Time Reviewing Mistakes is Associated with Improved Performance

5.1.5. Reviewing Promptly is Associated with Academic Performance

5.2. Personalized Guidance Effects: Students Reviewed More Mistakes

5.3. Subjective Evaluation

5.3.1. Ease of Use

5.3.2. Usefulness of Features

5.3.3. Future Improvement

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


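| Category | Period | Group | No. of Students |
|---|---|---|---|
| Academic Performance | | High-achieving | 86 |
| Academic Performance | | Low-achieving | 71 |
| Performance Trajectory | Exam1-Exam2 | Improving | 53 |
| Performance Trajectory | Exam1-Exam2 | Dropping | 102 |
| Performance Trajectory | Exam1-Exam2 | Retaining | 2 |
| Performance Trajectory | Exam2-Exam3 | Improving | 79 |
| Performance Trajectory | Exam2-Exam3 | Dropping | 77 |
| Performance Trajectory | Exam2-Exam3 | Retaining | 1 |
| Personalized Guidance | | Guided | 46 |
| Personalized Guidance | | Not Guided | 111 |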

| Exam | Avg. Score | No. of Students Who Reviewed | Avg. Reviewing Delay (Days) | Std. Dev. (Days) |
|---|---|---|---|---|
| 1 | 83.3% | 142 (89.87%) | 4.7 | 14.4 |
| 2 | 78.6% | 131 (82.91%) | 2.4 | 6.8 |
| 3 | 79.6% | 100 (63.29%) | 0.9 | 2.2 |

| Group | Review Count | Time Spent Reviewing Assessments (mins) | Examination Review Count | Time Spent Reviewing Examinations * (mins) | Correct (mins) | Mistakes * (mins) | Review Coverage * |
|---|---|---|---|---|---|---|---|
| High | 48.10 | 23.38 | 24.95 | 8.42 | 6.51 | 1.62 | 0.73 |
| Low | 47.73 | 25.42 | 29.39 | 12.64 | 7.51 | 4.73 | 0.65 |

| Period | Group | r_correct | r_mistake |
|---|---|---|---|
| Exam1-Exam2 | Improving * | 0.39 | 0.63 |
| Exam1-Exam2 | Dropping * | 0.42 | 0.64 |
| Exam2-Exam3 | Improving * | 0.15 | 0.32 |
| Exam2-Exam3 | Dropping | 0.26 | 0.31 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Paredes, Y.V.; Hsiao, I.-H. WebPGA: An Educational Technology That Supports Learning by Reviewing Paper-Based Programming Assessments. Information 2021, 12, 450. https://doi.org/10.3390/info12110450
