The Evolution of Language Models Applied to Emotion Analysis of Arabic Tweets

Abstract

1. Introduction

- Studying the evolution of language models for Arabic, a low-resource language.

- Developing classification models for an under-resourced NLP task: emotion analysis.

- Analyzing different BERT models for Arabic.

2. Background and Related Work

3. Materials and Methods

3.1. Dataset

3.2. Modeling Study

3.2.1. First Study

- Emotion classification using SVM with TF-IDF features (one experiment).

- Emotion classification using SVM with different versions of word2vec as features (four experiments).

- Emotion classification by fine-tuning different monolingual BERT models for Arabic (five experiments).
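The two classical feature sets of the first study can be sketched in plain Python. This is a minimal illustration, not the author's pipeline. It assumes whitespace tokenization and uses the smoothed IDF and L2 normalization that scikit-learn's `TfidfVectorizer` applies by default. It also assumes that each tweet is represented by the average of its word vectors (for example, AraVec vectors loaded into a dict), because this section does not say how word2vec vectors were combined per tweet. `tfidf_vectors` and `mean_embedding` are hypothetical helper names.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """TF-IDF weights for whitespace-tokenized documents (illustrative sketch).

    TF is the raw term count, IDF is smoothed as ln((1 + n) / (1 + df)) + 1,
    and each document vector is L2-normalized.
    Returns (sorted vocabulary, list of {term: weight} dicts).
    """
    tokenized = [doc.split() for doc in docs]
    n = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}
    vectors = []
    for tokens in tokenized:
        weights = {term: tf * idf[term] for term, tf in Counter(tokens).items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({term: w / norm for term, w in weights.items()})
    return sorted(df), vectors


def mean_embedding(tokens, embeddings, dim):
    """Average the vectors of in-vocabulary tokens (zeros if none are known).

    Assumption: tweets are represented by averaging word2vec vectors.
    """
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        return [0.0] * dim
    return [sum(column) / len(known) for column in zip(*known)]
```

Either representation could then be passed to a linear SVM, for example scikit-learn's `LinearSVC`. The BERT experiments differ in that the pretrained encoder itself is fine-tuned, typically with a small classification layer on top, rather than being used as a fixed feature extractor.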

- tp is the number of tweets correctly classified as emotion e (true positives),

- fp is the number of tweets incorrectly classified as emotion e (false positives),

- and fn is the number of tweets that belong to emotion e but were classified as another emotion (false negatives).
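From these counts, the standard definitions give per-emotion precision tp / (tp + fp), recall tp / (tp + fn), and F1 as their harmonic mean. Macro-F1 averages the per-emotion F1 scores, while micro-F1 pools tp, fp, and fn over all emotions before computing F1. The sketch below illustrates these definitions for single-label predictions (one emotion per tweet). `f1_scores` is a hypothetical helper, not the evaluation code used in the paper.

```python
from collections import Counter


def f1_scores(gold, pred, labels):
    """Per-emotion F1, macro-F1 and micro-F1 for single-label predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1  # correctly classified as emotion g
        else:
            fp[p] += 1  # wrongly assigned to emotion p
            fn[g] += 1  # a tweet of emotion g was missed

    def f1(t, false_pos, false_neg):
        precision = t / (t + false_pos) if t + false_pos else 0.0
        recall = t / (t + false_neg) if t + false_neg else 0.0
        if precision + recall == 0:
            return 0.0
        return 2 * precision * recall / (precision + recall)

    per_emotion = {e: f1(tp[e], fp[e], fn[e]) for e in labels}
    macro = sum(per_emotion.values()) / len(labels)
    # Micro-F1 pools the counts of all emotions before computing F1.
    micro = f1(sum(tp[e] for e in labels),
               sum(fp[e] for e in labels),
               sum(fn[e] for e in labels))
    return per_emotion, macro, micro


gold = ["anger", "anger", "joy", "fear"]
pred = ["anger", "joy", "joy", "fear"]
per_emotion, macro, micro = f1_scores(gold, pred, ["anger", "fear", "joy"])
# micro == 0.75 (3 of 4 tweets correct)
```

When every tweet has exactly one gold label and one predicted label, each error adds one fp and one fn, so micro-F1 equals accuracy. Macro-F1, by contrast, weights every emotion equally regardless of how many tweets it has.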

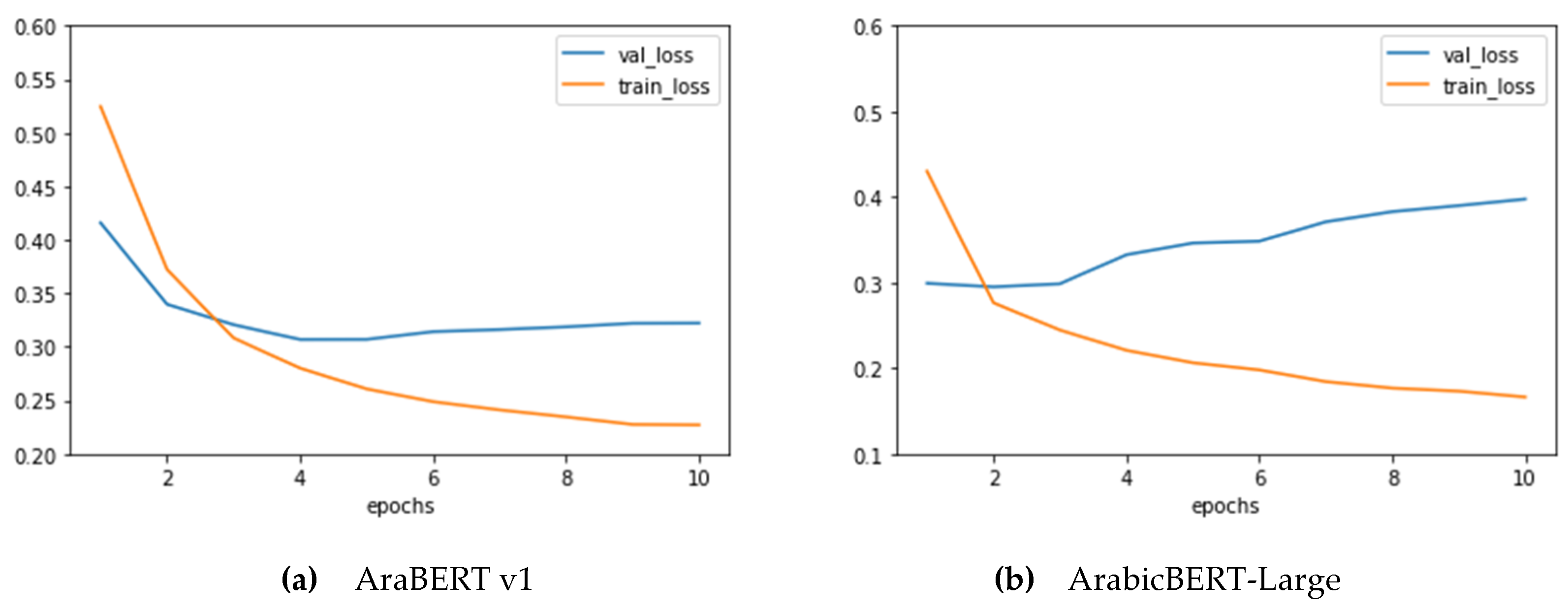

3.2.2. Second Study

4. Results and Discussion

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed Representations of Words and Phrases and Their Compositionality. In Proceedings of the Advances in Neural Information Processing Systems 26, Lake Tahoe, NV, USA, 5–10 December 2013; p. 9.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; pp. 5998–6008.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training (2018). Available online: https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 31 December 2020).

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 5753–5763.

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-Supervised Learning of Language Representations. arXiv 2020, arXiv:1909.11942.

- Clark, K.; Luong, M.-T.; Le, Q.V.; Manning, C.D. ELECTRA: Pre-Training Text Encoders as Discriminators Rather Than Generators. In Proceedings of the ICLR, Addis Ababa, Ethiopia, 26–30 April 2020.

- Qiu, X.; Sun, T.; Xu, Y.; Shao, Y.; Dai, N.; Huang, X. Pre-Trained Models for Natural Language Processing: A Survey. arXiv 2020, arXiv:2003.08271.

- Mohammad, S.; Kiritchenko, S. Understanding Emotions: A Dataset of Tweets to Study Interactions between Affect Categories. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018.

- Ekman, P. An Argument for Basic Emotions. Cogn. Emot. 1992, 6, 169–200.

- Plutchik, R. A general psychoevolutionary theory of emotion. In Theories of Emotion; Elsevier: San Leandro, CA, USA, 1980; pp. 3–33.

- Cherry, C.; Mohammad, S.M.; Bruijn, B.D. Binary Classifiers and Latent Sequence Models for Emotion Detection in Suicide Notes. Biomed. Inform. Insights 2012.

- Jabreel, M.; Moreno, A.; Huertas, A. Do local residents and visitors express the same sentiments on destinations through social media? In Information and Communication Technologies in Tourism; Springer: Cham, Switzerland, 2017; pp. 655–668.

- Mohammad, S.M.; Zhu, X.; Kiritchenko, S.; Martin, J. Sentiment, Emotion, Purpose, and Style in Electoral Tweets. Inf. Process. Manag. 2015, 51, 480–499.

- Cambria, E. Affective Computing and Sentiment Analysis. IEEE Intell. Syst. 2016, 31, 102–107.

- Mohammad, S.; Bravo-Marquez, F.; Salameh, M.; Kiritchenko, S. SemEval-2018 Task 1: Affect in Tweets. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 23 April 2018; pp. 1–17.

- Al-Khatib, A.; El-Beltagy, S.R. Emotional Tone Detection in Arabic Tweets. In Proceedings of the Computational Linguistics and Intelligent Text Processing, Hanoi, Vietnam, 18–24 March 2018; pp. 105–114.

- Abdul-Mageed, M.; AlHuzli, H.; Abu Elhija, D.; Diab, M. DINA: A Multi-Dialect Dataset for Arabic Emotion Analysis. In Proceedings of the 2nd Workshop on Arabic Corpora and Processing Tools 2016 Theme: Social Media held in conjunction with the 10th International Conference on Language Resources and Evaluation (LREC2016), Portorož, Slovenia, 23–28 May 2016.

- Alhuzali, H.; Abdul-Mageed, M.; Ungar, L. Enabling Deep Learning of Emotion with First-Person Seed Expressions. In Proceedings of the Second Workshop on Computational Modeling of People’s Opinions, Personality, and Emotions in Social Media, New Orleans, LA, USA, 5–6 June 2018; pp. 25–35.

- Antoun, W.; Baly, F.; Hajj, H. AraBERT: Transformer-Based Model for Arabic Language Understanding. In Proceedings of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools, with a Shared Task on Offensive Language Detection, Marseille, France, 12 May 2020; pp. 9–15.

- Safaya, A.; Abdullatif, M.; Yuret, D. KUISAIL at SemEval-2020 Task 12: BERT-CNN for Offensive Speech Identification in Social Media. In Proceedings of the Fourteenth Workshop on Semantic Evaluation; International Committee for Computational Linguistics: Barcelona, Spain (online), 12–13 December 2020; pp. 2054–2059.

- Talafha, B.; Ali, M.; Za’ter, M.E.; Seelawi, H.; Tuffaha, I.; Samir, M.; Farhan, W.; Al-Natsheh, H.T. Multi-Dialect Arabic BERT for Country-Level Dialect Identification. In Proceedings of the Fifth Arabic Natural Language Processing Workshop (WANLP2020), Barcelona, Spain, 12 December 2020.

- Soliman, A.B.; Eissa, K.; El-Beltagy, S.R. AraVec: A Set of Arabic Word Embedding Models for Use in Arabic NLP. Procedia Comput. Sci. 2017, 117, 256–265.

- Abdelali, A.; Darwish, K.; Durrani, N.; Mubarak, H. Farasa: A Fast and Furious Segmenter for Arabic. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Demonstrations, San Diego, CA, USA, 12–17 June 2016; pp. 11–16.

- Zampieri, M.; Nakov, P.; Rosenthal, S.; Atanasova, P.; Karadzhov, G.; Mubarak, H.; Derczynski, L.; Pitenis, Z.; Çöltekin, Ç. SemEval-2020 Task 12: Multilingual Offensive Language Identification in Social Media (OffensEval 2020). In Proceedings of the Fourteenth Workshop on Semantic Evaluation; International Committee for Computational Linguistics, Barcelona, Spain, 12–13 December 2020; pp. 1425–1447.

- Abdul-Mageed, M.; Zhang, C.; Bouamor, H.; Habash, N. NADI 2020: The First Nuanced Arabic Dialect Identification Shared Task. In Proceedings of the Fifth Arabic Natural Language Processing Workshop; Association for Computational Linguistics, Barcelona, Spain, 12 December 2020; pp. 97–110.

- Badaro, G.; El Jundi, O.; Khaddaj, A.; Maarouf, A.; Kain, R.; Hajj, H.; El-Hajj, W. EMA at SemEval-2018 Task 1: Emotion Mining for Arabic. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 236–244.

- Mulki, H.; Bechikh Ali, C.; Haddad, H.; Babaoğlu, I. Tw-StAR at SemEval-2018 Task 1: Preprocessing Impact on Multi-Label Emotion Classification. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 167–171.

- Abdullah, M.; Shaikh, S. TeamUNCC at SemEval-2018 Task 1: Emotion Detection in English and Arabic Tweets Using Deep Learning. In Proceedings of the 12th International Workshop on Semantic Evaluation, New Orleans, LA, USA, 5–6 June 2018; pp. 350–357.

- Jabreel, M.; Moreno, A. A Deep Learning-Based Approach for Multi-Label Emotion Classification in Tweets. Appl. Sci. 2019, 9, 1123.

- Mao, X.; Chang, S.; Shi, J.; Li, F.; Shi, R. Sentiment-Aware Word Embedding for Emotion Classification. Appl. Sci. 2019, 9, 1334.

- Erenel, Z.; Adegboye, O.R.; Kusetogullari, H. A New Feature Selection Scheme for Emotion Recognition from Text. Appl. Sci. 2020, 10, 5351.

- Al-A’abed, M.; Al-Ayyoub, M. A Lexicon-Based Approach for Emotion Analysis of Arabic Social Media Content. In Proceedings of the International Computer Sciences and Informatics Conference (ICSIC), Amman, Jordan, 12–13 January 2016.

- Hussien, W.A.; Tashtoush, Y.M.; Al-Ayyoub, M.; Al-Kabi, M.N. Are Emoticons Good Enough to Train Emotion Classifiers of Arabic Tweets? In Proceedings of the 2016 7th International Conference on Computer Science and Information Technology (CSIT), Amman, Jordan, 13–14 July 2016; IEEE: New York, NY, USA, 2016. ISBN 978-1-4673-8913-6.

- Rabie, O.; Sturm, C. Feel the Heat: Emotion Detection in Arabic Social Media Content. In Proceedings of the International Conference on Data Mining, Internet Computing, and Big Data (BigData2014), Kuala Lumpur, Malaysia, 17–19 November 2014; pp. 37–49.

- Abdullah, M.; Hadzikadic, M.; Shaikh, S. SEDAT: Sentiment and Emotion Detection in Arabic Text Using CNN-LSTM Deep Learning. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 835–840.

- Abdul-Mageed, M.; Zhang, C.; Hashemi, A.; Nagoudi, E.M.B. AraNet: A Deep Learning Toolkit for Arabic Social Media. In Proceedings of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools, with a Shared Task on Offensive Language Detection, Marseille, France, 12 May 2020; pp. 16–23.

- Alswaidan, N.; Menai, M.E.B. Hybrid Feature Model for Emotion Recognition in Arabic Text. IEEE Access 2020, 8, 37843–37854.

- Almahdawi, A.J.; Teahan, W.J. A New Arabic Dataset for Emotion Recognition. In Intelligent Computing; Arai, K., Bhatia, R., Kapoor, S., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 200–216.

| | AraBert | ArabicBERT Mini | ArabicBERT Medium | ArabicBERT Base | ArabicBERT Large |
|---|---|---|---|---|---|
| Hidden layers | 12 | 4 | 8 | 12 | 24 |
| Attention heads | 12 | 4 | 8 | 12 | 16 |
| Hidden size | 768 | 256 | 512 | 768 | 1024 |
| Parameters | 110 M | 11 M | 42 M | 110 M | 340 M |
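The parameter counts in the table follow largely from the number of layers and the hidden size. The sketch below estimates the size of a standard BERT encoder, assuming the original BERT layout: feed-forward size 4 × hidden, 512 learned positions, two token types, and a pooler layer. The vocabulary size is an assumption. A 32,000-token vocabulary is used here for the ArabicBERT variants. Because the embedding matrix grows linearly with vocabulary size, a model with a larger vocabulary has more parameters at the same depth and width. `bert_param_count` is a hypothetical helper.

```python
def bert_param_count(vocab_size, hidden, layers, max_positions=512, type_vocab=2):
    """Estimate the parameters of a BERT encoder plus pooler.

    Assumes the original BERT layout: feed-forward size 4 * hidden,
    learned position and token-type embeddings, biases on every dense layer.
    """
    # Word, position and token-type embeddings, plus one LayerNorm (gain and bias).
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + 2 * hidden
    # Query, key, value and output projections, plus the attention LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + 2 * hidden
    # Two feed-forward projections (hidden -> 4*hidden -> hidden), plus LayerNorm.
    ffn = (hidden * 4 * hidden + 4 * hidden) + (4 * hidden * hidden + hidden) + 2 * hidden
    # Dense pooler applied to the [CLS] position.
    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + ffn) + pooler


# Layer counts and hidden sizes from the table; a 32,000-token vocabulary is assumed.
for name, hidden, layers in [("Mini", 256, 4), ("Medium", 512, 8), ("Base", 768, 12), ("Large", 1024, 24)]:
    print(f"ArabicBERT {name}: {bert_param_count(32_000, hidden, layers) / 1e6:.1f} M")
# ArabicBERT Mini: 11.5 M
# ArabicBERT Medium: 42.1 M
# ArabicBERT Base: 110.6 M
# ArabicBERT Large: 336.7 M
```

With the English BERT-Base vocabulary of 30,522 word pieces, the same formula gives 109,482,240 parameters, the familiar "110 M". The Large estimate of about 337 M is close to the nominal 340 M usually quoted for BERT-Large.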

| Emotion | Train | Test | Total |
|---|---|---|---|
| anger | 827 | 210 | 1037 |
| fear | 715 | 181 | 896 |
| joy | 953 | 237 | 1190 |
| sadness | 674 | 165 | 839 |
| Total | 3169 | 793 | 3962 |
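The totals in the dataset table can be checked with a few lines of arithmetic. About 20% of the tweets are held out for testing, and each emotion keeps roughly the same train/test ratio. This section does not say whether the split was stratified. The snippet only recomputes proportions from the table.

```python
# Train/test counts per emotion, copied from the table above.
counts = {
    "anger": (827, 210),
    "fear": (715, 181),
    "joy": (953, 237),
    "sadness": (674, 165),
}

train_total = sum(train for train, _ in counts.values())
test_total = sum(test for _, test in counts.values())
grand_total = train_total + test_total
print(f"train={train_total} test={test_total} total={grand_total}")
# train=3169 test=793 total=3962
print(f"test share: {test_total / grand_total:.1%}")
# test share: 20.0%

# Class balance and per-emotion held-out fraction.
for emotion, (train, test) in counts.items():
    total = train + test
    print(f"{emotion:8s} {total / grand_total:.1%} of tweets, {test / total:.1%} held out")
```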

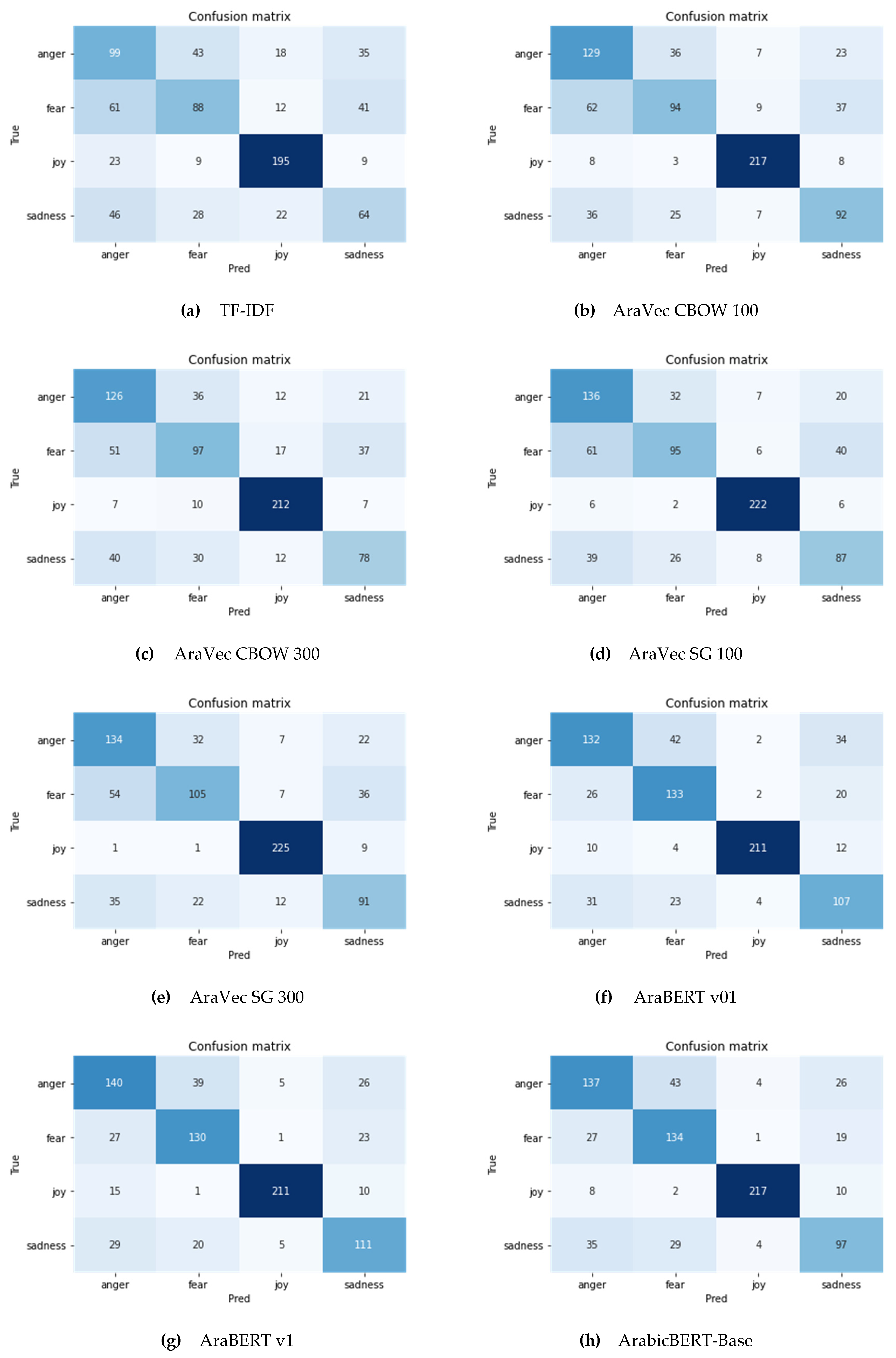

| Method | Anger-F1 | Fear-F1 | Sad-F1 | Joy-F1 | Macro F1 | Micro F1 |
|---|---|---|---|---|---|---|
| TF-IDF | 0.47 | 0.48 | 0.41 | 0.81 | 0.54 | 0.56 |
| AraVecCBOW100 | 0.60 | 0.52 | 0.57 | 0.91 | 0.65 | 0.67 |
| AraVecSG100 | 0.62 | 0.53 | 0.56 | 0.93 | 0.66 | 0.68 |
| AraVecCBOW300 | 0.60 | 0.53 | 0.52 | 0.87 | 0.63 | 0.65 |
| AraVecSG300 | 0.64 | 0.58 | 0.57 | 0.92 | 0.68 | 0.70 |
| AraBertv01 | 0.66 | 0.64 | 0.68 | 0.92 | 0.73 | 0.74 |
| AraBertv1 | 0.67 | 0.65 | 0.68 | 0.91 | 0.73 | 0.74 |
| ArabicBertBase | 0.66 | 0.60 | 0.66 | 0.92 | 0.71 | 0.73 |
| ArabicBertLarge | 0.69 | 0.67 | 0.71 | 0.95 | 0.76 | 0.77 |
| Multi-DialectBert | 0.64 | 0.64 | 0.68 | 0.92 | 0.72 | 0.73 |
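Macro-F1 is the unweighted mean of the four per-emotion F1 scores, so the Macro F1 column of the results table can be recomputed from the other columns. Because the per-emotion values are themselves rounded to two decimals, agreement within about 0.01 is the most one can expect. The snippet below checks every row.

```python
# (anger, fear, sadness, joy) F1 and reported macro-F1, copied from the table above.
rows = {
    "TF-IDF": ((0.47, 0.48, 0.41, 0.81), 0.54),
    "AraVecCBOW100": ((0.60, 0.52, 0.57, 0.91), 0.65),
    "AraVecSG100": ((0.62, 0.53, 0.56, 0.93), 0.66),
    "AraVecCBOW300": ((0.60, 0.53, 0.52, 0.87), 0.63),
    "AraVecSG300": ((0.64, 0.58, 0.57, 0.92), 0.68),
    "AraBertv01": ((0.66, 0.64, 0.68, 0.92), 0.73),
    "AraBertv1": ((0.67, 0.65, 0.68, 0.91), 0.73),
    "ArabicBertBase": ((0.66, 0.60, 0.66, 0.92), 0.71),
    "ArabicBertLarge": ((0.69, 0.67, 0.71, 0.95), 0.76),
    "Multi-DialectBert": ((0.64, 0.64, 0.68, 0.92), 0.72),
}

max_gap = 0.0
for method, (per_emotion, reported) in rows.items():
    # Macro-F1: plain average over emotions, ignoring class sizes.
    recomputed = sum(per_emotion) / len(per_emotion)
    max_gap = max(max_gap, abs(recomputed - reported))
    print(f"{method:18s} recomputed {recomputed:.4f}  reported {reported:.2f}")
print(f"largest gap: {max_gap:.4f}")
```

The largest gap is about 0.005, for rows whose mean falls exactly on a rounding boundary, which is consistent with the reported values being rounded macro averages.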

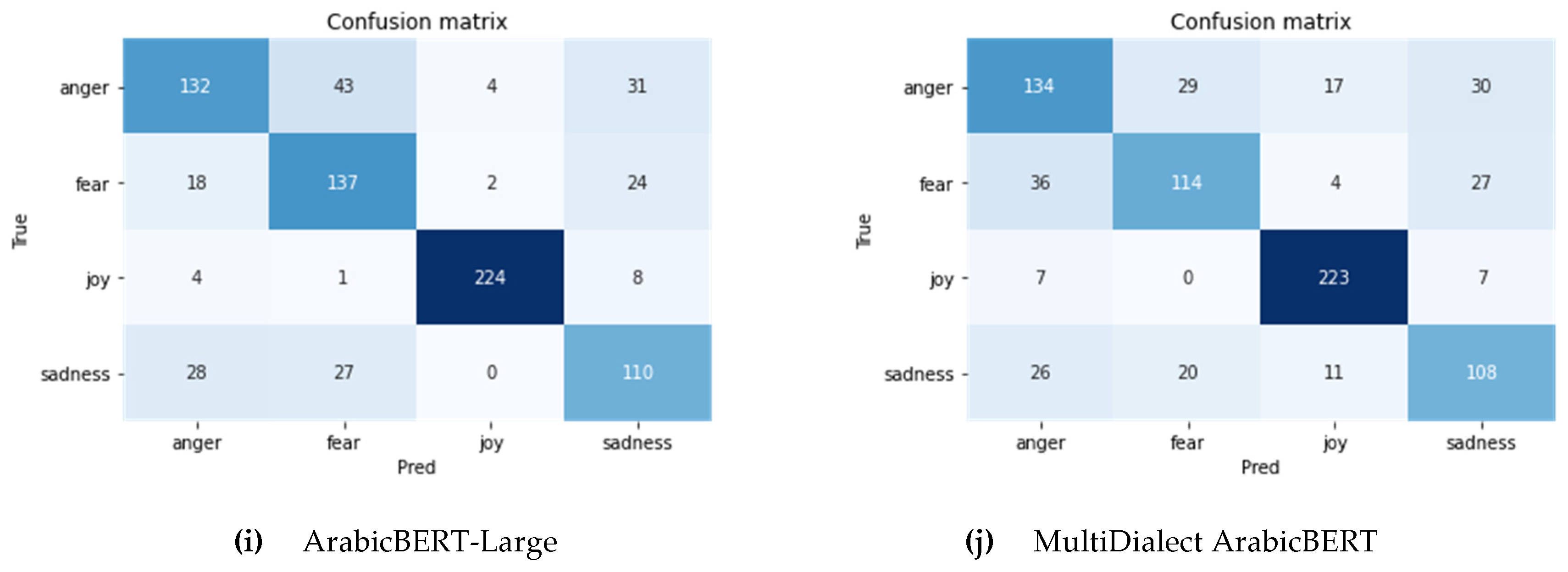

| Method | Macro F1 | Micro F1 | Confidence Interval |
|---|---|---|---|
| AraBertv01 | 0.73 | 0.74 | 0.74 (± 0.03) |
| AraBertv1 | 0.72 | 0.73 | 0.73 (± 0.02) |
| ArabicBertBase | 0.70 | 0.72 | 0.72 (± 0.04) |
| ArabicBertLarge | 0.72 | 0.74 | 0.74 (± 0.04) |
| Multi-DialectBert | 0.70 | 0.71 | 0.71 (± 0.02) |
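In every row, the central value in the Confidence Interval column matches the Micro F1 column, followed by a spread. This section does not describe how the spread was obtained. Assuming the scores come from several runs or cross-validation folds, one common convention is to report the mean plus or minus twice the standard deviation of the per-fold scores. The hypothetical helpers below compute and format that quantity.

```python
import statistics


def mean_plus_minus(scores, k=2.0):
    """Return (mean, k * standard deviation) of per-fold or per-run scores.

    Uses the population standard deviation (NumPy's default for ndarray.std).
    Mean +/- 2 std describes the spread of individual fold scores; it is
    not a formal confidence interval for the mean.
    """
    mean = statistics.mean(scores)
    spread = k * statistics.pstdev(scores)
    return mean, spread


def format_interval(scores, k=2.0):
    """Format in the style of the table, e.g. '0.74 (± 0.03)'."""
    mean, spread = mean_plus_minus(scores, k)
    return f"{mean:.2f} (± {spread:.2f})"
```

A formal confidence interval for the mean would instead scale the sample standard deviation by 1/√n and by a t-quantile, so the two conventions should not be compared directly.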

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Al-Twairesh, N. The Evolution of Language Models Applied to Emotion Analysis of Arabic Tweets. Information 2021, 12, 84. https://doi.org/10.3390/info12020084
