RDAMS: An Efficient Run-Time Approach for Memory Fault and Hardware Trojans Detection

Abstract

1. Introduction

- (i) Extend the March C- algorithm and present a diagnosis process for it, which enables the detection of both run-time memory faults and HT attacks.

- (ii) Design a low-latency detection control scheme that handles memory access collisions between regular bus operations and security detection.

- (iii) Propose a block-based memory detection approach and implement four modes with different detection granularity. Users can configure the most suitable memory detection mode online according to their detection requirements.

2. Fault and Attack Models

2.1. Fault Models

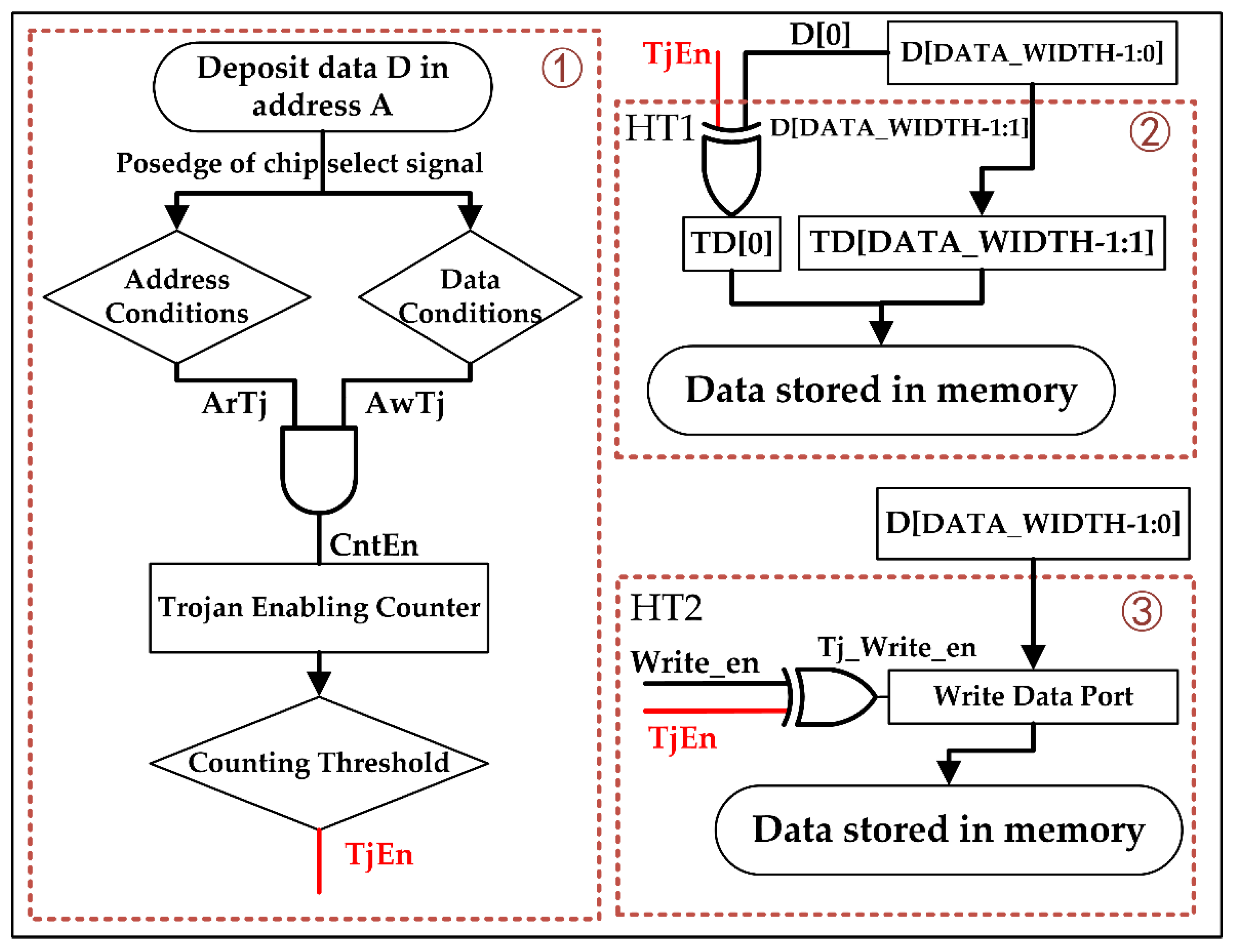

2.2. Attack Models

3. Design of RDAMS

3.1. Detection Algorithm

3.2. Diagnosis Process

- (a) We limit the potential HT types in memory to functional integrity destruction and availability disruption only (HT1 and HT2). These cover the most typical types of run-time attack; others involving information leakage can be prevented by designing a side-channel-resistant circuit [33].

- (b) The memory faults that RDAMS can detect and identify are limited to the ten types of faults in Table 1. RDAMS can also detect some other faults, but it cannot identify them.

- (c) Each potential fault and HT1 in memory affects only one bit in each cell (32 bits); HT2 affects all 32 bits in each cell. The case in which one cell has multiple faulty bits is not considered.

- (d) Faults and HTs will not appear in the same cell at the same time.

- (e) HT attacks are episodic, so an HT will not be triggered at the same cell in two consecutive detections.
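The assumptions above suggest a simple decision rule, sketched here in Python. This is an illustration only, not the authors' implementation: the function name `diagnose`, the use of one 32-bit mismatch mask per detection round, and the returned labels are all hypothetical. The rule itself is inferred from the assumptions: a mismatch that repeats in two consecutive rounds is treated as a memory fault, while one that disappears in the second round is treated as an episodic HT (one corrupted bit for HT1, all 32 bits for HT2).

```python
WORD_BITS = 32
FULL_MASK = (1 << WORD_BITS) - 1  # all 32 bits of a cell


def diagnose(round1_mask: int, round2_mask: int) -> str:
    """Classify one cell from the mismatch masks of two consecutive rounds.

    Each mask has a 1 at every bit position where the read-back value
    differed from the expected value (hypothetical representation).
    """
    if round1_mask == 0:
        return "no threat"
    if round2_mask == round1_mask:
        # The same bits fail again: consistent with a permanent memory fault.
        return "memory fault"
    if round2_mask == 0:
        # The mismatch vanished: consistent with an episodic HT.
        if bin(round1_mask).count("1") == 1:
            return "HT1"  # one corrupted bit
        if round1_mask == FULL_MASK:
            return "HT2"  # all 32 bits corrupted
    # Anything else falls outside the stated assumptions.
    return "unclassified"


print(diagnose(0x00000004, 0x00000004))
print(diagnose(0x00000004, 0x00000000))
print(diagnose(FULL_MASK, 0x00000000))
```

Cases that violate the assumptions, such as several but not all bits failing once, are deliberately reported as unclassified rather than guessed.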

3.3. Detection Architecture

3.3.1. MDM

- (1)

- IDLE: Waiting for enabling detection;

- (2)

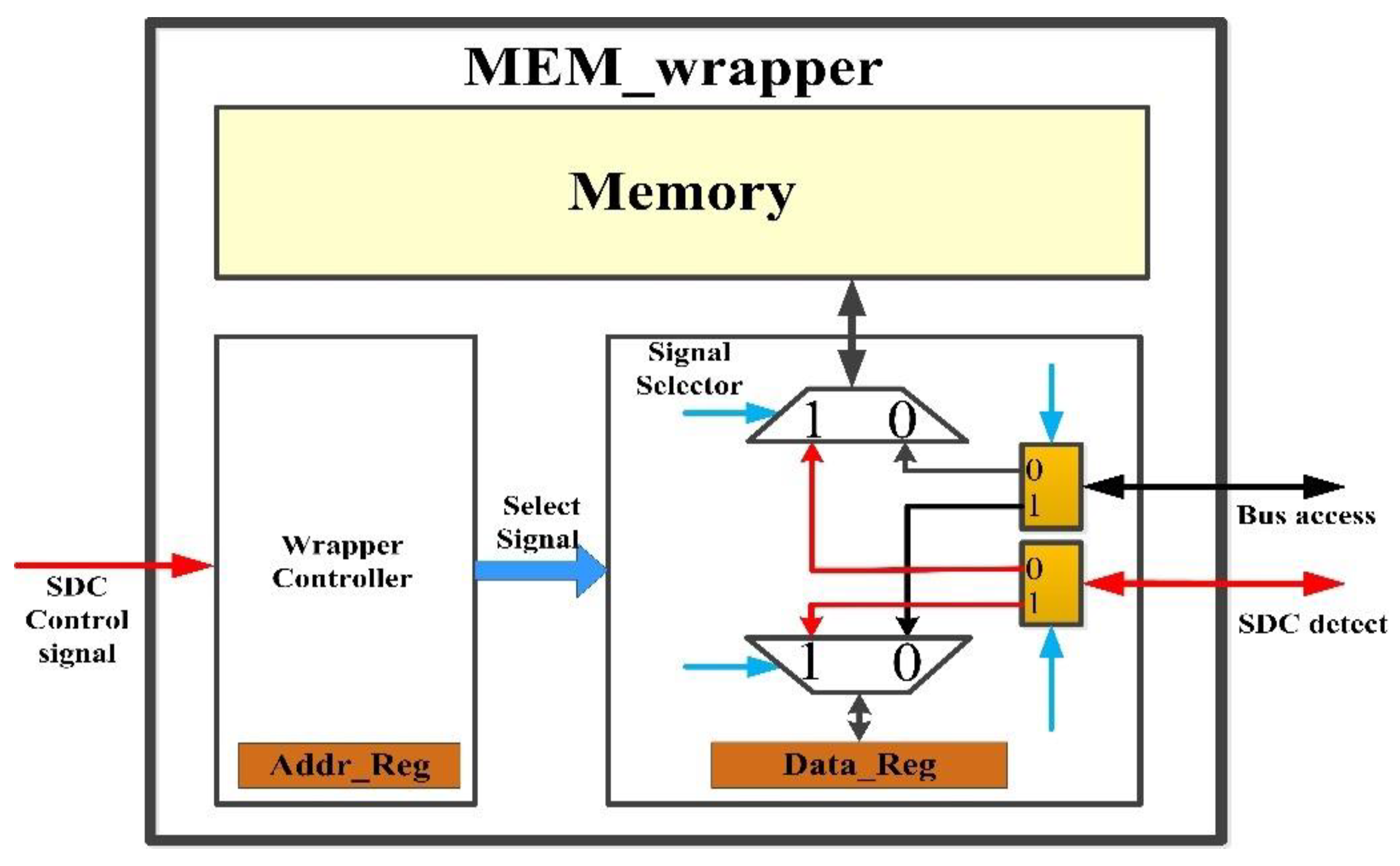

- COPY_MEM & WRITE_REG: Copy the memory cells under detection into MEM_wrapper’s back up registers;

- (3)

- UP_W0→DOWN_R0: From UP_W0 to DOWN_R0, Executing the March C- algorithm to test memory;

- (4)

- RECOPY_REG &WRITE_MEM: Write the value of MEM_wrapper’s register back to the detected cell.

- (5)

- ERROR_LOG: Write the detection results to Error Log Reg.
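The state sequence listed above can be sketched as a small state machine in Python. This is a hedged model, not the RTL: the class name `MdmState`, the `step` function, the collapsing of the UP_W0 to DOWN_R0 states into one `MARCH_TEST` state, and the return from ERROR_LOG to IDLE are assumptions made for illustration.

```python
from enum import Enum, auto


class MdmState(Enum):
    IDLE = auto()
    COPY_MEM_WRITE_REG = auto()    # back up the cells under detection
    MARCH_TEST = auto()            # stands in for UP_W0 ... DOWN_R0
    RECOPY_REG_WRITE_MEM = auto()  # restore the backed-up values
    ERROR_LOG = auto()             # write results to the Error Log Reg


# Unconditional transitions; returning to IDLE after ERROR_LOG is assumed.
NEXT_STATE = {
    MdmState.COPY_MEM_WRITE_REG: MdmState.MARCH_TEST,
    MdmState.MARCH_TEST: MdmState.RECOPY_REG_WRITE_MEM,
    MdmState.RECOPY_REG_WRITE_MEM: MdmState.ERROR_LOG,
    MdmState.ERROR_LOG: MdmState.IDLE,
}


def step(state: MdmState, detect_enable: bool) -> MdmState:
    """Advance the model by one transition."""
    if state is MdmState.IDLE:
        # Leave IDLE only when detection is enabled.
        return MdmState.COPY_MEM_WRITE_REG if detect_enable else MdmState.IDLE
    return NEXT_STATE[state]


state = MdmState.IDLE
for _ in range(5):
    state = step(state, detect_enable=True)
    print(state.name)
```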

3.3.2. MEM_Wrapper

4. Optimization of RDAMS

4.1. Processing of Access Collision

Algorithm 1. Processing of Access Collision.

- Backup phase:
  - If the bus accesses memory, the access is processed in the testing phase.
- Testing phase:
  - If the bus accesses memory:
    - If axi_addr_i = Addr_Reg[i], the bus accesses Data_Reg[i] and testing continues.
    - Otherwise, the testing status is recorded, testing pauses, and the bus accesses memory. When the bus access is over, testing continues.
  - Otherwise, testing continues.
- Write Back phase:
  - If the bus accesses memory, the access is processed in the Error Log phase.
- Error Log phase:
  - The detection result is logged and the bus access is processed.
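The branching in Algorithm 1 can be modelled in a few lines of Python. This is a behavioural sketch under stated assumptions, not the hardware design: the `Decision` record, the phase strings, and the function name `handle_bus_access` are hypothetical, and timing (TS and TE) is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    served_in: str       # phase in which the bus access is served
    target: str          # "memory" or the backup register that is hit
    pause_testing: bool  # whether the March test must pause


def handle_bus_access(phase: str, axi_addr: int, addr_regs: list) -> Decision:
    """Decide how a bus access arriving in `phase` is handled."""
    # Accesses arriving in the backup or write-back phase are deferred
    # to the next phase, as in Algorithm 1.
    if phase == "backup":
        phase = "testing"
    elif phase == "write_back":
        phase = "error_log"

    if phase == "testing":
        if axi_addr in addr_regs:
            # The cell is under test: serve the access from its backup copy.
            i = addr_regs.index(axi_addr)
            return Decision("testing", f"Data_Reg[{i}]", False)
        # Another cell: record status, pause testing, let the bus through.
        return Decision("testing", "memory", True)
    if phase == "error_log":
        return Decision("error_log", "memory", False)
    raise ValueError(f"unknown phase: {phase}")


under_test = [0x40000004, 0x40000010]
print(handle_bus_access("testing", 0x40000010, under_test))
print(handle_bus_access("testing", 0x40000020, under_test))
print(handle_bus_access("write_back", 0x40000004, under_test))
```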

4.2. Multi-Granularity Detection Mode

4.2.1. The Meaning of Multi-Granularity Detection

- Mode 1: Randomly select one cell in each group for detection. If no security threat is reported in the result, all eight cells are considered secure.

- Mode 2: Randomly select two cells in each group for detection. If no security threat is reported in the result, all eight cells are considered secure.

- Mode 3: Randomly select four cells in each group for detection. If no security threat is reported in the result, all eight cells are considered secure.

- Mode 4: Detect all eight cells in each group. If no security threat is reported in the result, all eight cells are considered secure.
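The four modes differ only in how many of the eight cells in a group are sampled, which can be sketched as follows. The function name `select_cells`, the group base address, and the 4-byte address stride per 32-bit cell are assumptions for illustration; the hardware's actual random source is not described here, so Python's `random.Random` stands in for it.

```python
import random

CELLS_PER_GROUP = 8
BYTES_PER_CELL = 4  # assumed stride for 32-bit cells

# Number of cells sampled per group in each mode, as listed above.
SAMPLES_PER_MODE = {1: 1, 2: 2, 3: 4, 4: 8}


def select_cells(group_base: int, mode: int, rng: random.Random) -> list:
    """Return the sorted addresses of the cells to detect in one group."""
    offsets = rng.sample(range(CELLS_PER_GROUP), SAMPLES_PER_MODE[mode])
    return sorted(group_base + BYTES_PER_CELL * off for off in offsets)


rng = random.Random(2021)  # seeded only to make the sketch repeatable
for mode in (1, 2, 3, 4):
    cells = select_cells(0x40000000, mode, rng)
    print(f"Mode {mode}:", [hex(a) for a in cells])
```

Sampling without replacement guarantees that Mode 4 covers every cell in the group, while the lower modes trade coverage for detection time.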

4.2.2. Cost Analysis for Multi-Granularity Detection

5. Results and Discussion

5.1. Fault Detection Results

5.2. Attack Detection Results

5.3. Verification on FPGA

5.4. Verification of Access Collision Processing

5.5. Cost of Detection Architecture

6. Conclusions

- (i) RDAMS can correctly detect and identify all ten types of memory faults listed in Table 1 and two types of HTs triggered at run time: HT1 (targeting functional integrity) and HT2 (targeting availability). The diagnosis process ensures that the architecture can detect faults after one round of detection and diagnose HT attacks after two rounds of detection.

- (ii) RDAMS is designed with a collision handling mechanism that enables a low-latency response to bus access requests during detection (for read requests, TS = 1 clock cycle and TE = 2 clock cycles; for write requests, TS = 0 clock cycles and TE = 2 clock cycles).

- (iii) The block-based sampling approach and the DPR-based implementation make the architecture scalable to different memories, allow the detection mode to be reconfigured online, and reduce resource occupation.

References

1. Dahlqvist, F.; Patel, M.; Rajko, A.; Shulman, J. Growing Opportunities in the Internet of Things. Available online: https://www.mckinsey.com/industries/private-equity-and-principal-investors/our-insights/growing-opportunities-in-the-internet-of-things (accessed on 2 March 2021).

2. SEMICO Research Corporation. Available online: http://www.semico.com (accessed on 18 February 2021).

3. Saeidi, R.; Garakani, H.G. SRAM hardware Trojan. In Proceedings of the 2016 8th International Symposium on Telecommunications (IST), Tehran, Iran, 27–28 September 2016; pp. 719–722.

4. Suwansrikham, P.; Kun, S.; Hayat, S.; Jackson, J. Dew Computing and Asymmetric Security Framework for Big Data File Sharing. Information 2020, 11, 303.

5. Hamad, M.; Prevelakis, V. SAVTA: A Hybrid Vehicular Threat Model: Overview and Case Study. Information 2020, 11, 273.

6. Kim, L.; Villasenor, J.D. Dynamic Function Verification for System on Chip Security Against Hardware-Based Attacks. IEEE Trans. Reliab. 2015, 64, 1229–1242.

7. Das, B.; Das, N.; Sikdar, B.K. Effect of Trojans on Write Data Access in Memory. In Proceedings of the 2019 9th International Symposium on Embedded Computing and System Design (ISED), Kollam, India, 13–14 December 2019; pp. 1–5.

8. Khan, M.N.I.; De, A.; Ghosh, S. Cache-Out: Leaking Cache Memory Using Hardware Trojan. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2020, 28, 1461–1470.

9. Jianfeng, Z.; Gang, S. A Survey on the studies of Hardware Trojan. J. Cyber Secur. 2017, 2, 74–90.

10. Koshy, T.; Arun, C.S. Diagnostic data detection of faults in RAM using different march algorithms with BIST scheme. In Proceedings of the 2016 International Conference on Emerging Technological Trends (ICETT), Kollam, India, 21–22 October 2016; pp. 1–6.

11. Die, H.; Junmin, W. A Parallel Memory Fault Detection Scheme Combined with Hardware Characteristic. Comput. Eng. 2014, 40, 310–314.

12. Tong, Z.; Benmao, C.; Xiaofeng, Z. Design of BIST Testing SRAM Based on MARCH Algorithm. Comput. Mod. 2013, 1, 99–101.

13. Jun, X.; Lan, W.; Jinlong, G.; Dan, C.; Zenghua, F. Design of memory detection for raising reliability of vital computer. Railw. Comput. Appl. 2014, 23, 47–52.

14. Ailing, R.; Ming, L.; Guanglin, W.; Rui, L. An Efficient Diagnosis Algorithm for the Test of Embedded SRAM. J. Appl. Sci. 2005, 2, 178–182.

15. Zhenkun, T. An Online Self-Healing Research Based on ECC and BISR for Embedded RAM. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2020.

16. De, A.; Nasim Imtiaz Khan, M.; Nagarajan, K.; Ghosh, S. HarTBleed: Using Hardware Trojans for Data Leakage Exploits. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2020, 28, 968–979.

17. Bolat, A.; Cassano, L.; Reviriego, P.; Ergin, O.; Ottavi, M. A Microprocessor Protection Architecture against Hardware Trojans in Memories. In Proceedings of the 2020 15th Design & Technology of Integrated Systems in Nanoscale Era (DTIS), Marrakech, Morocco, 1–3 April 2020; pp. 1–6.

18. Xiongwei, L.; Xiaohan, W.; Yang, Z.; Kaiyan, C.; Lu, X. Survey on the Hardware Trojan Protection. J. Ordnance Eng. Coll. 2015, 27, 40–50.

19. Hoque, T.; Wang, X.; Basak, A.; Karam, R.; Bhunia, S. Hardware Trojan attacks in embedded memory. In Proceedings of the 2018 IEEE 36th VLSI Test Symposium (VTS), San Francisco, CA, USA, 22–25 April 2018; pp. 1–6.

20. Palmiero, C.; Guglielmo, G.D.; Lavagno, L.; Carloni, L.P. Design and Implementation of a Dynamic Information Flow Tracking Architecture to Secure a RISC-V Core for IoT Applications. In Proceedings of the 2018 IEEE High Performance extreme Computing Conference (HPEC), Greater Boston, MA, USA, 25–27 September 2018; pp. 1–7.

21. Huayu, Y.; Lan, C.; Xin, T.; Ying, L. Design of SoC Security Check Architecture for Internet of Things Applications. Comput. Eng. 2021, 47, 152–159.

22. Basak, A.; Bhunia, S.; Ray, S. A flexible architecture for systematic implementation of SoC security policies. In Proceedings of the 2015 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Austin, TX, USA, 2–6 November 2015; pp. 536–543.

23. Libo, Z.; Wei, T.; Wei, Y.; Junling, L. Full-Speed Test Design Based on Memory Built-in Self-Test. Microelectron. Comput. 2018, 35, 43–46.

24. Dongming, C.; Jianbing, C.; Zhikuang, C. MBIST Design for the 40 nm SoC Chip. Chin. J. Electron Devices 2017, 40, 813–818.

25. Basak, A.; Bhunia, S.; Ray, S. Exploiting design-for-debug for flexible SoC security architecture. In Proceedings of the 2016 53rd ACM/EDAC/IEEE Design Automation Conference (DAC), Austin, TX, USA, 5–9 June 2016; pp. 1–6.

26. Xilinx. Vivado Design Suite User Guide: Partial Reconfiguration. Available online: https://www.xilinx.com/support/documentation/sw_manuals/xilinx2018_2/ug909-vivado-partial-reconfiguration.pdf (accessed on 20 May 2020).

27. Xilinx. Partial Reconfiguration Controller v1.3: LogiCORE IP Product Guide. Available online: https://www.xilinx.com/support/documentation/ip_documentation/prc/v1_0/pg193-partial-reconfiguration-controller.pdf (accessed on 26 May 2020).

28. Nath, A.P.D.; Bhunia, S.; Ray, S. ArtiFact: Architecture and CAD Flow for Efficient Formal Verification of SoC Security Policies. In Proceedings of the 2018 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Hong Kong, China, 8–11 July 2018; pp. 411–416.

29. Nath, A.P.D.; Ray, S.; Basak, A.; Bhunia, S. System-on-chip security architecture and CAD framework for hardware patch. In Proceedings of the 2018 23rd Asia and South Pacific Design Automation Conference (ASP-DAC), Jeju Island, Korea, 22–25 January 2018; pp. 733–738.

30. Zhe, H. Design and Detection of Hardware Trojan Horse. Master’s Thesis, South China University of Technology, Guangzhou, China, 2016.

31. Huang, Z.; Yao, R.; Luo, F. Trigger Circuit of Hardware Trojan Based on Up/Down Counter. IEICE Trans. Electron. 2015, 98, 279–282.

32. Kan, S.; Dworak, J. Triggering Trojans in SRAM circuits with X-propagation. In Proceedings of the 2014 IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems (DFT), Amsterdam, The Netherlands, 1–3 October 2014; pp. 1–8.

33. Fang, X.; Luo, P.; Fei, Y.; Leeser, M. Balance power leakage to fight against side-channel analysis at gate level in FPGAs. In Proceedings of the 2015 IEEE 26th International Conference on Application-specific Systems, Architectures and Processors (ASAP), Jeju, Korea, 27–29 July 2015; pp. 154–155.

34. Crockett, L.H.; Elliot, R.; Enderwitz, M.; Stewart, R. The Zynq Book: Embedded Processing with the ARM Cortex-A9 on the Xilinx Zynq-7000 All Programmable SoC; Strathclyde Academic Media: Glasgow, Scotland, 2014.

Table 1. Fault models.

| Type | Name | Behavior |
|---|---|---|
| Stuck-at Fault (SAF) | SAF 0 | The value of the cell is stuck at 0 |
| | SAF 1 | The value of the cell is stuck at 1 |
| Transition Fault (TF) | TF 0→1 | The value of the cell cannot transition from 0 to 1 |
| | TF 1→0 | The value of the cell cannot transition from 1 to 0 |
| Inversion Coupling Fault (CFin) | CFin(↑, ↕) | When writing a cell, the value of another cell is inverted |
| | CFin(↓, ↕) | When writing a cell, the value of another cell is inverted |
| Idempotent Coupling Fault (CFid) | CFid(↑, 0) | When writing a cell, the value of another cell is fixed at 0 |
| | CFid(↓, 0) | When writing a cell, the value of another cell is fixed at 0 |
| | CFid(↑, 1) | When writing a cell, the value of another cell is fixed at 1 |
| | CFid(↓, 1) | When writing a cell, the value of another cell is fixed at 1 |

| Algorithm | Ω(n) | SAF | TF | CFin | CFid |
|---|---|---|---|---|---|
| MATS | 4n | N | N | N | N |
| MATS+ | 5n | A | N | N | N |
| MATS++ | 6n | A | A | N | N |
| March A | 15n | A | A | A | A |
| March C- | 10n | A | A | A | A |
| MSCAN | 4n | A | N | N | N |
| GALPAT | 4 | A | A | A | A |

| Fault | ↑(w0) | ↑(r0,w1) | ↑(r1,w0) | ↓(r0,w1) | ↓(r1,w0) | ↓(r0) | Results |
|---|---|---|---|---|---|---|---|
| SAF0 | 0 | 0 | 1 | 0 | 1 | 0 | 01010 |
| SAF1 | 0 | 1 | 0 | 1 | 0 | 1 | 10101 |
| TF 0→1 | 0 | 0 | 1 | 0 | 1 | 0 | 01010 |
| TF 1→0 | 0 | 0 | 0 | 1 | 0 | 1 | 00101 |
| CFin(↑,↕) | 0 | 1 | 1 | 0 | 1 | 0 | 11010 |
| CFin(↓,↕) | 0 | 1 | 1 | 0 | 1 | 1 | 11011 |
| CFid(↑,0) | 0 | 0 | 1 | 0 | 1 | 0 | 01010 |
| CFid(↑,1) | 0 | 1 | 0 | 1 | 0 | 0 | 10100 |
| CFid(↓,0) | 0 | 0 | 1 | 0 | 1 | 0 | 01010 |
| CFid(↓,1) | 0 | 1 | 0 | 0 | 0 | 1 | 10001 |
| No Fault | 0 | 0 | 0 | 0 | 0 | 0 | 00000 |
| Some Other Fault | 0 | 1/0 | 1/0 | 1/0 | 1/0 | 1/0 | Other |
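The single-cell rows of the table above can be reproduced with a small simulation of the six March C- elements named in its header. This is a simplified sketch, not the RDAMS hardware: the `Cell` class, the fault labels `TF01` and `TF10`, and the assumption that a cell holds 0 before the test are hypothetical, each cell is modelled as one bit, and coupling faults (which involve a second cell) are not modelled.

```python
# The six March C- elements from the table header: (direction, operations).
MARCH_C_MINUS = [
    ("up", ["w0"]),
    ("up", ["r0", "w1"]),
    ("up", ["r1", "w0"]),
    ("down", ["r0", "w1"]),
    ("down", ["r1", "w0"]),
    ("down", ["r0"]),
]


class Cell:
    """One-bit cell with an optional single-cell fault."""

    def __init__(self, fault=None, value=0):
        self.fault = fault
        self.value = value  # assumed initial content

    def write(self, bit):
        if self.fault == "TF01" and self.value == 0 and bit == 1:
            return  # cannot transition from 0 to 1
        if self.fault == "TF10" and self.value == 1 and bit == 0:
            return  # cannot transition from 1 to 0
        self.value = bit

    def read(self):
        if self.fault == "SAF0":
            return 0
        if self.fault == "SAF1":
            return 1
        return self.value


def signature(cell):
    """Run March C- on one cell; return one mismatch bit per read."""
    bits = []
    # Address order is irrelevant for a single cell, so it is ignored here.
    for _direction, ops in MARCH_C_MINUS:
        for op in ops:
            expected = int(op[1])
            if op[0] == "w":
                cell.write(expected)
            else:
                bits.append("1" if cell.read() != expected else "0")
    return "".join(bits)


for fault in (None, "SAF0", "SAF1", "TF01", "TF10"):
    print(fault, signature(Cell(fault)))
```

Under these assumptions the five printed signatures agree with the No Fault, SAF0, SAF1, TF 0→1 and TF 1→0 rows of the table.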

| Fault Model | Address | Depth |
|---|---|---|
| SAF0 | 32′H40000004 | 1 |
| SAF1 | 32′H40000010 | 4 |
| TF 0→1 | 32′H40000028 | 10 |
| TF 1→0 | 32′H40000034 | 13 |
| CFin(↓,↕) | 32′H40000050 | 20 |
| CFid(↓,1) | 32′H40000058 | 22 |

| Collision (Yes/No) | Request (W/R) | TS (clock cycles at 100 MHz) | TE (clock cycles at 100 MHz) |
|---|---|---|---|
| Yes | W | 0 | 2 |
| Yes | R | 1 | 2 |

| Mode | Resource Cost: Slices | Resource Cost: LUT | Resource Cost: FF | Time (clock cycles at 100 MHz) |
|---|---|---|---|---|
| Mode 1 | 147 | 448 | 164 | 243 |
| Mode 2 | 174 | 532 | 208 | 453 |
| Mode 3 | 222 | 624 | 292 | 869 |
| Mode 4 | 281 | 776 | 440 | 1545 |
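As a worked reading of the time column in the table above, the cycle counts can be converted to microseconds at the stated 100 MHz clock and compared against Mode 1. The helper names below are hypothetical, and the sketch makes no assumption about which unit of work (cell or group) each cycle count covers.

```python
CLOCK_HZ = 100_000_000  # 100 MHz, as stated in the table header

# Detection time in clock cycles per mode, copied from the table above.
CYCLES_PER_MODE = {1: 243, 2: 453, 3: 869, 4: 1545}


def detection_time_us(mode: int) -> float:
    """Convert the cycle count of a mode to microseconds."""
    return CYCLES_PER_MODE[mode] * 1_000_000 / CLOCK_HZ


def ratio_to_mode1(mode: int) -> float:
    """Detection time of a mode relative to Mode 1."""
    return CYCLES_PER_MODE[mode] / CYCLES_PER_MODE[1]


for mode in sorted(CYCLES_PER_MODE):
    print(f"Mode {mode}: {detection_time_us(mode):.2f} us, "
          f"{ratio_to_mode1(mode):.2f}x Mode 1")
```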

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, J.; Li, Y. RDAMS: An Efficient Run-Time Approach for Memory Fault and Hardware Trojans Detection. Information 2021, 12, 169. https://doi.org/10.3390/info12040169
