Why Does the Automation Say One Thing but Does Something Else? Effect of the Feedback Consistency and the Timing of Error on Trust in Automated Driving

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Apparatus

2.3. Procedure

2.4. Data Analysis

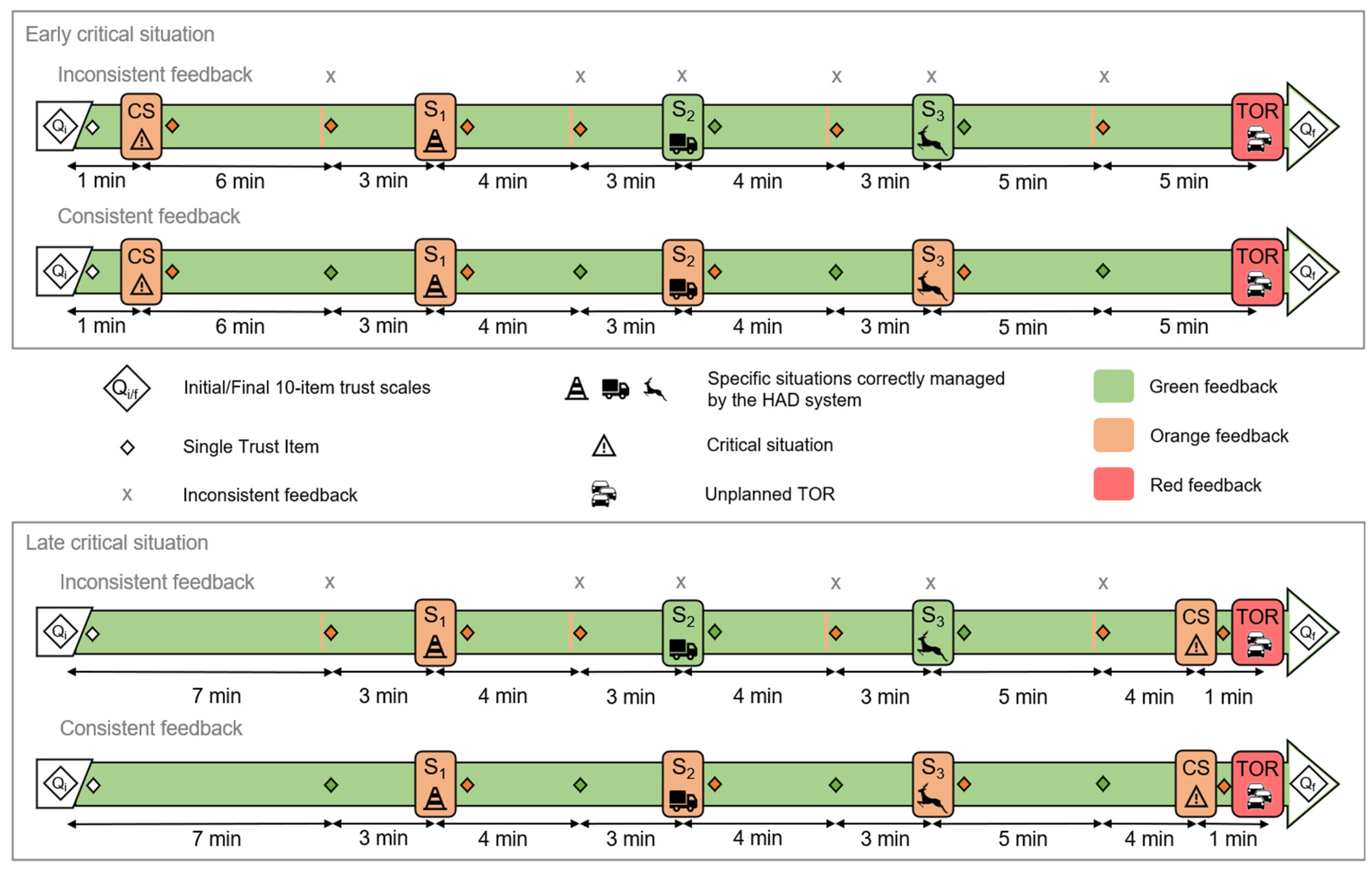

2.5. Design

3. Results

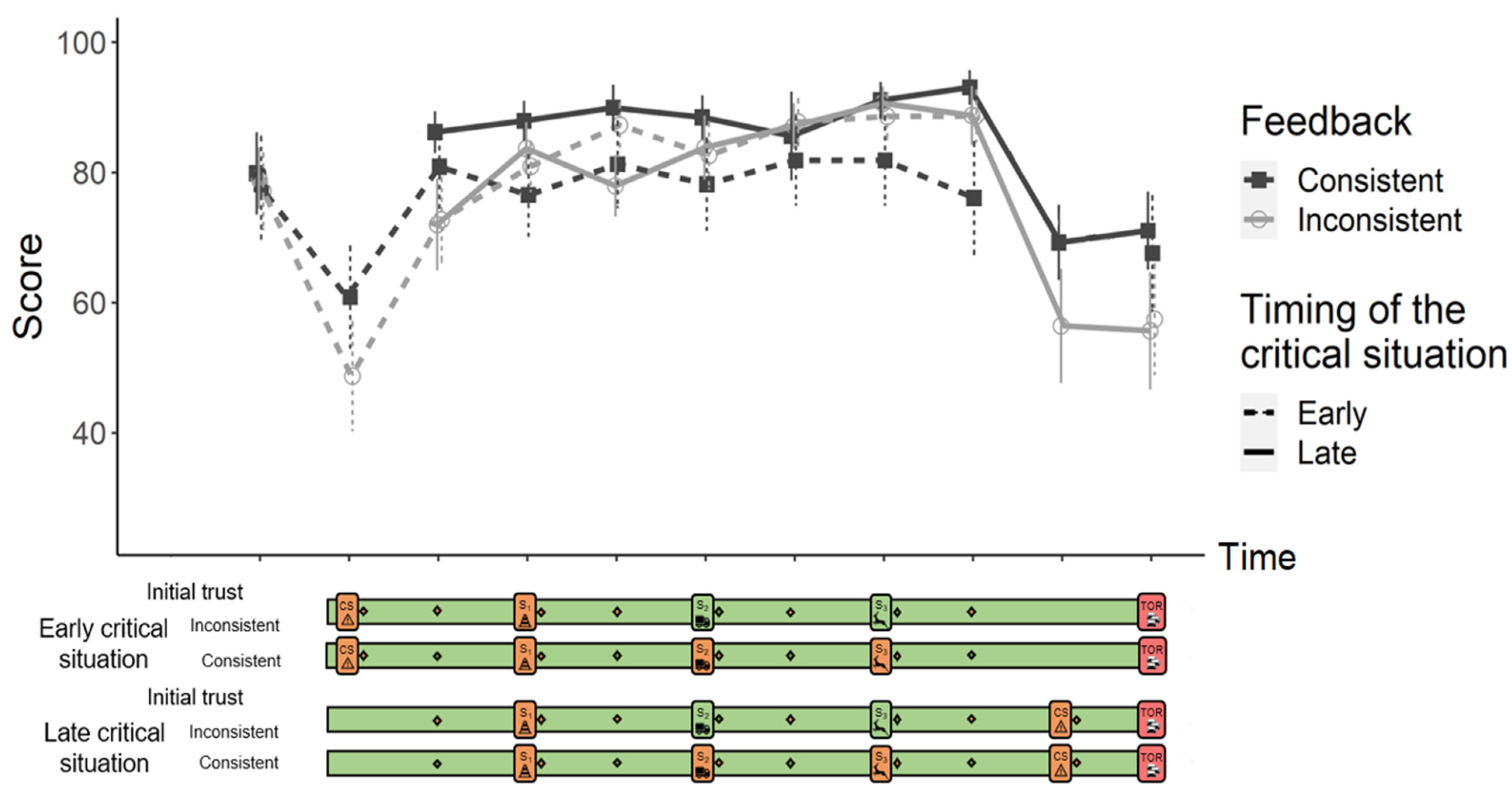

3.1. Trust and Perceived Understanding Questionnaires

3.2. Visual Behaviour

4. Discussion

4.1. Effect of the Timing of a Critical Situation

4.2. Effect of the Feedback Consistency

4.3. Effect of the Initial Learned Level of Trust

4.4. Limitations and Perspectives

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Society of Automotive Engineers (SAE) International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; SAE International: Warrendale, PA, USA, 2021.

- Navarro, J. A state of science on highly automated driving. Theor. Issues Ergon. Sci. 2019, 20, 366–396.

- Dzindolet, M.T.; Peterson, S.A.; Pomranky, R.A.; Pierce, L.G.; Beck, H.P. The role of trust in automation reliance. Int. J. Hum. Comput. Stud. 2003, 58, 697–718.

- Lee, J.; Moray, N. Trust, control strategies and allocation of function in human-machine systems. Ergonomics 1992, 35, 1243–1270.

- Lee, J.D.; Moray, N. Trust, self-confidence, and operators’ adaptation to automation. Int. J. Hum. Comput. Stud. 1994, 40, 153–184.

- Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.

- Manchon, J.B.; Bueno, M.; Navarro, J. From manual to automated driving: How does trust evolve? Theor. Issues Ergon. Sci. 2020, 22, 528–554.

- Hoff, K.A.; Bashir, M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 2015, 57, 407–434.

- Liu, P.; Yang, R.; Xu, Z. Public acceptance of fully automated driving: Effects of social trust and risk/benefit perceptions. Risk Anal. 2019, 39, 326–341.

- Molnar, L.J.; Ryan, L.H.; Pradhan, A.K.; Eby, D.W.; St. Louis, R.M.; Zakrajsek, J.S. Understanding trust and acceptance of automated vehicles: An exploratory simulator study of transfer of control between automated and manual driving. Transp. Res. Part F Traffic Psychol. Behav. 2018, 58, 319–328.

- Kundinger, T.; Wintersberger, P.; Riener, A. (Over)Trust in Automated Driving: The sleeping Pill of Tomorrow? In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, UK, 4–9 May 2019; pp. 1–6.

- Kraus, J.; Scholz, D.; Stiegemeier, D.; Baumann, M. The more you know: Trust dynamics and calibration in highly automated driving and the effects of take-overs, system malfunction, and system transparency. Hum. Factors 2020, 62, 718–736.

- Manchon, J.B.; Bueno, M.; Navarro, J. Calibration of Trust in Automated Driving: A matter of initial level of trust and automated driving style? Hum. Factors 2021.

- Manchon, J.B.; Bueno, M.; Navarro, J. How the Initial Level of Trust in Automated Driving Impacts Drivers’ Behaviour and Early Trust Construction. Transp. Res. Part F Traffic Psychol. Behav. 2022, 86, 281–291.

- Manzey, D.; Reichenbach, J.; Onnasch, L. Human Performance Consequences of Automated Decision Aids: The Impact of Degree of Automation and System Experience. J. Cogn. Eng. Decis. Mak. 2012, 6, 57–87.

- Sanchez, J. Factors That Affect Trust and Reliance on an Automated Aid. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2006. Available online: https://core.ac.uk/display/4682674 (accessed on 2 March 2022).

- Seong, Y.; Bisantz, A.M. The impact of cognitive feedback on judgment performance and trust with decision aids. Int. J. Ind. Ergon. 2008, 38, 608–625.

- Beller, J.; Heesen, M.; Vollrath, M. Improving the driver–automation interaction: An approach using automation uncertainty. Hum. Factors 2013, 55, 1130–1141.

- Helldin, T.; Falkman, G.; Riveiro, M.; Davidsson, S. Presenting system uncertainty in automotive UIs for supporting trust calibration in autonomous driving. In Proceedings of the 5th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’13), Eindhoven, The Netherlands, 27–30 October 2013; pp. 210–217.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2017; Available online: https://www.R-project.org/ (accessed on 12 January 2022).

- Wickham, H. ggplot2: Elegant Graphics for Data Analysis; Springer: New York, NY, USA, 2016; Available online: http://ggplot2.tidyverse.org (accessed on 12 January 2022).

- Forster, Y.; Naujoks, F.; Neukum, A. Increasing Anthropomorphism and Trust in Automated Driving Functions by Adding Speech Output. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 365–372.

- Häuslschmid, R.; von Buelow, M.; Pfleging, B.; Butz, A. Supporting Trust in Autonomous Driving. In Proceedings of the 22nd International Conference on Intelligent User Interfaces (IUI ’17), Limassol, Cyprus, 13–16 March 2017; pp. 319–329.

- Niu, D.; Terken, J.; Eggen, B. Anthropomorphizing information to enhance trust in autonomous vehicles. Hum. Factors Ergon. Manuf. Serv. Ind. 2018, 28, 352–359.

- Koo, J.; Kwac, J.; Ju, W.; Steinert, M.; Leifer, L.; Nass, C. Why did my car just do that? Explaining semi-autonomous driving actions to improve driver understanding, trust, and performance. Int. J. Interact. Des. Manuf. IJIDeM 2015, 9, 269–275.

- Norman, D.A.; Broadbent, D.E.; Baddeley, A.D.; Reason, J. The ‘problem’ with automation: Inappropriate feedback and interaction, not ‘over-automation’. Philos. Trans. R. Soc. London. B Biol. Sci. 1990, 327, 585–593.

- Wagenaar, W.A.; Keren, G.B. Does The Expert Know? The Reliability of Predictions and Confidence Ratings of Experts. In Intelligent Decision Support in Process Environments; Springer: Berlin/Heidelberg, Germany, 1986; pp. 87–103.

- Kraus, J.M.; Forster, Y.; Hergeth, S.; Baumann, M. Two routes to trust calibration: Effects of reliability and brand information on Trust in Automation. Int. J. Mob. Hum. Comput. Interact. 2019, 11, 17.

- Navarro, J.; Deniel, J.; Yousfi, E.; Jallais, C.; Bueno, M.; Fort, A. Does False and Missed Lane Departure Warnings Impact Driving Performances Differently? Int. J. Hum. Comput. Interact. 2019, 35, 1292–1302.

- Navarro, J.; Deniel, J.; Yousfi, E.; Jallais, C.; Bueno, M.; Fort, A. Influence of lane departure warnings onset and reliability on car drivers’ behaviors. Appl. Ergon. 2017, 59, 123–131.

- Hartwich, F.; Hollander, C.; Johannmeyer, D.; Krems, J.F. Improving passenger experience and Trust in Automated Vehicles through user-adaptive HMIs: “The more the better” does not apply to everyone. Front. Hum. Dyn. 2021, 3, 669030.

| Item | Statement |
|---|---|
| 1 | I would feel safe in an automated vehicle. |
| 2 | The automated driving system provides me with more safety compared to manual driving. |
| 3 * | I would rather keep manual control of my vehicle than delegate it to the automated driving system on every occasion. |
| 4 | I would trust the automated driving system decisions. |
| 5 | I would trust the automated driving system capacities to manage complex driving situations. |
| 6 | If the weather conditions were bad (e.g., fog, glare, rain), I would delegate the driving task to the automated driving system. |
| 7 | Rather than monitoring the driving environment, I could focus on other activities confidently. |
| 8 | If driving was boring for me, I would rather delegate it to the automated driving system than do it myself. |
| 9 | I would delegate the driving to the automated driving system if I was tired. |
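
Item 3 is worded in the opposite direction to the other eight: agreeing with it expresses a preference for manual control, i.e., low trust. The asterisk most plausibly flags this reverse-worded item, in which case its response must be mirrored before the items are combined. The Python sketch below illustrates that step only. The 0 to 100 response range, the mean-of-items aggregation, and the names `SCALE_MIN`, `SCALE_MAX`, `REVERSED_ITEMS`, and `score_learned_trust` are hypothetical choices made for illustration; the table itself specifies neither the response format nor the scoring rule.

```python
# Hypothetical scoring sketch for the nine-item learned-trust questionnaire.
# ASSUMPTIONS (not stated in the table): responses use a 0-100 scale,
# item 3 (marked *) is reverse-worded, and the score is the mean of the items.

SCALE_MIN = 0          # assumed lowest possible response
SCALE_MAX = 100        # assumed highest possible response
REVERSED_ITEMS = {3}   # item(s) marked with an asterisk in the table
ITEMS = range(1, 10)   # items 1 to 9


def score_learned_trust(responses):
    """Mean item score after mirroring reverse-worded items.

    `responses` maps each item number (1-9) to its raw response.
    """
    if set(responses) != set(ITEMS):
        raise ValueError("expected one response for each of items 1 to 9")
    scored = []
    for item in ITEMS:
        value = responses[item]
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"item {item}: {value} is outside the response scale")
        if item in REVERSED_ITEMS:
            # Preferring manual control signals LOW trust, so mirror the response.
            value = SCALE_MIN + SCALE_MAX - value
        scored.append(value)
    return sum(scored) / len(scored)


# Hypothetical respondent: 80 on every item except 20 on item 3.
answers = {item: 80 for item in ITEMS}
answers[3] = 20
print(score_learned_trust(answers))  # 80.0
```

Mirroring with `SCALE_MIN + SCALE_MAX - value` keeps the reversed item on the same range as the others, so all nine responses can be averaged directly.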

| Initial Level of Learned Trust | Feedback Consistency | Timing of Error | Trust Measurement | N | Age (Mean) | Trust Mean | Trust Min. | Trust Q1 | Trust Median | Trust Q3 | Trust Max. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Trustful | Consistent | Early | Initial | 8 | 45.2 | 69.8 | 60 | 67.5 | 69 | 71 | 82 |
| Trustful | Consistent | Early | Final | | | 81.2 | 50 | 75.5 | 82 | 91.5 | 100 |
| Trustful | Consistent | Late | Initial | 8 | 41.1 | 75 | 58 | 71.5 | 73 | 80 | 90 |
| Trustful | Consistent | Late | Final | | | 94 | 72 | 80 | 87 | 90.5 | 100 |
| Trustful | Inconsistent | Early | Initial | 8 | 36.5 | 74.5 | 58 | 68.5 | 74 | 82.5 | 90 |
| Trustful | Inconsistent | Early | Final | | | 80.2 | 66 | 71 | 83 | 87 | 94 |
| Trustful | Inconsistent | Late | Initial | 9 | 43.3 | 72.9 | 58 | 66 | 70 | 82 | 90 |
| Trustful | Inconsistent | Late | Final | | | 80 | 54 | 68 | 82 | 86 | 100 |
| Distrustful | Consistent | Early | Initial | 7 | 40.7 | 41.4 | 10 | 42 | 44 | 50 | 52 |
| Distrustful | Consistent | Early | Final | | | 52 | 30 | 34 | 54 | 68 | 76 |
| Distrustful | Consistent | Late | Initial | 7 | 42.6 | 45.1 | 30 | 45 | 46 | 49 | 52 |
| Distrustful | Consistent | Late | Final | | | 69.7 | 52 | 61 | 64 | 75 | 100 |
| Distrustful | Inconsistent | Early | Initial | 7 | 41.6 | 47.1 | 36 | 41 | 46 | 55 | 56 |
| Distrustful | Inconsistent | Early | Final | | | 65.1 | 46 | 49 | 58 | 80 | 94 |
| Distrustful | Inconsistent | Late | Initial | 7 | 44.7 | 39.1 | 24 | 31 | 38 | 47 | 56 |
| Distrustful | Inconsistent | Late | Final | | | 69.4 | 38 | 57 | 72 | 74 | 100 |

| Feedback Consistency | Timing of Error | Initial Level of Learned Trust (Groups Compared) | Bartlett’s df | Bartlett’s K² | Bartlett’s p |
|---|---|---|---|---|---|
| Consistent | Early | Trustful vs. Distrustful | 1 | 3.79 | 0.052 |
| Consistent | Late | Trustful vs. Distrustful | 1 | 0.595 | 0.441 |
| Inconsistent | Early | Trustful vs. Distrustful | 1 | 0.339 | 0.560 |
| Inconsistent | Late | Trustful vs. Distrustful | 1 | 0.002 | 0.967 |
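
Each row compares two groups (trustful vs. distrustful drivers) within one feedback × timing cell, which is why df = 1: Bartlett’s statistic is approximately chi-square distributed with k − 1 degrees of freedom for k groups. The standard-library Python sketch below implements the textbook Bartlett K² formula and recomputes the p-values from the reported statistics as a consistency check. The function names are illustrative, the variable tested is not specified in this table, and the raw scores are not available here, so the samples in the final usage line are hypothetical.

```python
import math
from statistics import variance


def bartlett_k2(*groups):
    """Bartlett's K^2 statistic for equality of variances across k >= 2 groups.

    K^2 = [(N - k) ln Sp^2 - sum (n_i - 1) ln S_i^2] / C, where Sp^2 is the pooled
    variance and C = 1 + (sum 1/(n_i - 1) - 1/(N - k)) / (3 (k - 1)).
    """
    k = len(groups)
    sizes = [len(g) for g in groups]
    n_total = sum(sizes)
    variances = [variance(g) for g in groups]  # sample variances (n - 1 denominator)
    pooled = sum((n - 1) * v for n, v in zip(sizes, variances)) / (n_total - k)
    numerator = (n_total - k) * math.log(pooled) - sum(
        (n - 1) * math.log(v) for n, v in zip(sizes, variances)
    )
    correction = 1 + (sum(1 / (n - 1) for n in sizes) - 1 / (n_total - k)) / (3 * (k - 1))
    return numerator / correction


def chi2_sf_df1(x):
    """Upper-tail p-value of a chi-square statistic with 1 df: P(Z^2 > x) = erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(x / 2))


# Statistics and p-values as reported in the table (two groups per cell, so df = 1).
REPORTED = {
    ("Consistent", "Early"): (3.79, 0.052),
    ("Consistent", "Late"): (0.595, 0.441),
    ("Inconsistent", "Early"): (0.339, 0.560),
    ("Inconsistent", "Late"): (0.002, 0.967),
}

for cell, (k2, p_reported) in REPORTED.items():
    # Recomputed p-values agree with the reported ones up to the rounding of K^2.
    print(cell, f"recomputed p = {chi2_sf_df1(k2):.3f}", f"reported p = {p_reported}")

# Usage on hypothetical samples (NOT the study's data): equal spread gives K^2 = 0.
print(bartlett_k2([1, 2, 3], [4, 5, 6]))  # 0.0
```

For two groups the chi-square tail reduces to the complementary error function, so no statistics package is needed; with three or more groups a general chi-square survival function would be required instead.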

| Predictor | Trust | Trust Sig. | Perceived Understanding | Perceived Understanding Sig. |
|---|---|---|---|---|
| (Intercept) | 40.50 | *** | 51.57 | *** |
| Timing of Error and Feedback Consistency | | | | |
| Early critical situation and consistent feedback | Ref. | | Ref. | |
| Early critical situation and inconsistent feedback | −4.50 | | | ns |
| Late critical situation and consistent feedback | 7.65 | * | | ns |
| Late critical situation and inconsistent feedback | −1.24 | | | ns |
| Feedback Color and Timing of Error | | | | |
| Green/HAD | Ref. | | Ref. | |
| Orange/HAD and early critical situation | 8.56 | | −16.47 | *** |
| Green/Correct situations and early critical situation | 9.23 | | −2.23 | |
| Orange/Correct situations and early critical situation | 1.39 | | −0.67 | |
| Orange/Critical situation and early critical situation | −17.33 | ** | −21.00 | *** |
| Red/TOR and early critical situation | −20.22 | *** | −18.43 | *** |
| Orange/HAD and late critical situation | 2.11 | | −24.92 | *** |
| Green/Correct situations and late critical situation | 7.05 | | 0.31 | |
| Orange/Correct situations and late critical situation | 2.41 | | 1.73 | |
| Orange/Critical situation and late critical situation | −25.87 | *** | −21.75 | *** |
| Red/TOR and late critical situation | −25.71 | *** | −20.88 | *** |
| Initial learned level of trust | 0.46 | *** | 0.40 | *** |
| Time (in minutes) | 0.33 | * | 0.10 | |
| Age | −0.00 | | 0.08 | |
| Male | −3.43 | * | −4.34 | * |
| Adjusted R² | 0.2998 | | | |
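
Read as an additive linear model with dummy (treatment) coding, the "Ref." levels are absorbed into the intercept, so a predicted trust rating is the intercept, plus the coefficient of each active categorical level, plus slope × value for each continuous predictor. The Python sketch below makes that arithmetic explicit for the Trust column only. The coding of the predictors (initial learned trust as a continuous score, time in minutes, Male as a 0/1 indicator against a female reference), the function name `predict_trust`, and the participant values are assumptions for illustration, not values from the study; if the fitted model contained terms not listed in the table, this sketch would not reproduce its predictions, and the rounded coefficients make any prediction approximate.

```python
# Sketch: combine the Trust-model coefficients from the table into a prediction.
# ASSUMPTIONS: additive linear model, dummy coding with "Ref." levels = 0,
# initial learned trust entered as a continuous score, time in minutes,
# Male as a 0/1 indicator (female = reference). Participant values are hypothetical.

INTERCEPT = 40.50

# Timing of error x feedback consistency (early/consistent is the reference).
CONDITION = {
    ("early", "consistent"): 0.0,
    ("early", "inconsistent"): -4.50,
    ("late", "consistent"): 7.65,
    ("late", "inconsistent"): -1.24,
}

# Feedback colour/state x timing of error (Green/HAD is the reference).
FEEDBACK = {
    ("Green/HAD", "early"): 0.0,
    ("Green/HAD", "late"): 0.0,
    ("Orange/HAD", "early"): 8.56,
    ("Green/Correct situations", "early"): 9.23,
    ("Orange/Correct situations", "early"): 1.39,
    ("Orange/Critical situation", "early"): -17.33,
    ("Red/TOR", "early"): -20.22,
    ("Orange/HAD", "late"): 2.11,
    ("Green/Correct situations", "late"): 7.05,
    ("Orange/Correct situations", "late"): 2.41,
    ("Orange/Critical situation", "late"): -25.87,
    ("Red/TOR", "late"): -25.71,
}

# Slopes for the continuous and binary covariates.
B_INITIAL_TRUST = 0.46
B_TIME_MIN = 0.33
B_AGE = -0.00  # reported as -0.00, i.e. negligible after rounding
B_MALE = -3.43


def predict_trust(timing, consistency, feedback, initial_trust, minutes, age, male):
    """Linear predictor: intercept + active dummy coefficients + slope * value."""
    return (
        INTERCEPT
        + CONDITION[(timing, consistency)]
        + FEEDBACK[(feedback, timing)]
        + B_INITIAL_TRUST * initial_trust
        + B_TIME_MIN * minutes
        + B_AGE * age
        + B_MALE * int(male)
    )


# Hypothetical driver: late error, consistent feedback, initial learned trust 70,
# 20 minutes into the drive, aged 40, not male (the assumed reference category).
calm = predict_trust("late", "consistent", "Green/HAD", 70, 20, 40, False)
critical = predict_trust("late", "consistent", "Orange/Critical situation", 70, 20, 40, False)
print(f"{calm:.2f}")      # 86.95
print(f"{critical:.2f}")  # 61.08
```

The two predictions differ only by the Orange/Critical situation coefficient for the late condition (−25.87), which is how each feedback-state row of the table should be read: as a shift relative to Green/HAD with all other predictors held fixed.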

| Feedback State | Compared With | χ² | df | n | p | Sig. |
|---|---|---|---|---|---|---|
| Green/HAD | Orange/HAD | 32.23 | 3 | 61 | <0.001 | *** |
| Green/HAD | Green/Correct Situations | 40.36 | 3 | 61 | <0.001 | *** |
| Green/HAD | Orange/Correct Situations | 40.82 | 3 | 61 | <0.001 | *** |
| Green/HAD | Orange/Critical Situation | 50.53 | 3 | 61 | <0.001 | *** |
| Green/HAD | Red/TOR | 31.97 | 3 | 61 | <0.001 | *** |
| Orange/HAD | Green/Correct Situations | 1.48 | 3 | 61 | >0.1 | |
| Orange/HAD | Orange/Correct Situations | 4.62 | 3 | 61 | >0.1 | |
| Orange/HAD | Orange/Critical Situation | 8.34 | 3 | 61 | <0.05 | * |
| Orange/HAD | Red/TOR | 0.902 | 3 | 61 | >0.1 | |
| Green/Correct Situations | Orange/Correct Situations | 1.87 | 3 | 61 | >0.1 | |
| Green/Correct Situations | Orange/Critical Situation | 5.06 | 3 | 61 | >0.1 | |
| Green/Correct Situations | Red/TOR | 3.5 | 3 | 61 | >0.1 | |
| Orange/Correct Situations | Orange/Critical Situation | 10.23 | 3 | 61 | <0.05 | * |
| Orange/Correct Situations | Red/TOR | 8.85 | 3 | 61 | <0.05 | * |
| Orange/Critical Situation | Red/TOR | 7.67 | 3 | 61 | >0.1 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite This Article

MDPI and ACS Style: Manchon, J.B.; Beaufort, R.; Bueno, M.; Navarro, J. Why Does the Automation Say One Thing but Does Something Else? Effect of the Feedback Consistency and the Timing of Error on Trust in Automated Driving. Information 2022, 13, 480. https://doi.org/10.3390/info13100480

AMA Style: Manchon JB, Beaufort R, Bueno M, Navarro J. Why Does the Automation Say One Thing but Does Something Else? Effect of the Feedback Consistency and the Timing of Error on Trust in Automated Driving. Information. 2022; 13(10):480. https://doi.org/10.3390/info13100480

Chicago/Turabian Style: Manchon, J. B., Romane Beaufort, Mercedes Bueno, and Jordan Navarro. 2022. "Why Does the Automation Say One Thing but Does Something Else? Effect of the Feedback Consistency and the Timing of Error on Trust in Automated Driving" Information 13, no. 10: 480. https://doi.org/10.3390/info13100480

APA Style: Manchon, J. B., Beaufort, R., Bueno, M., & Navarro, J. (2022). Why Does the Automation Say One Thing but Does Something Else? Effect of the Feedback Consistency and the Timing of Error on Trust in Automated Driving. Information, 13(10), 480. https://doi.org/10.3390/info13100480