Deep Learning with Word Embedding Improves Kazakh Named-Entity Recognition

Abstract

:1. Introduction

- We take the word-based and stem-based embedding pre-trained as an input, rather than only using word embedding. Additionally, we first consider the named-entity gazetteers with a graph structure for Kazakh NER.

- We design a novel WSGGA model for Kazakh NER, which integrates word–stem-based embeddings, gazetteer graph structures, GGNN, and an attention mechanism.

- We comprehensively analyze the structural characteristics of named entities in the Kazakh tourism domain, and effectively combine these specifics with neural networks. In addition, we evaluate our model on a benchmark dataset, with a considerable improvement over most state-of-the-art (SOTA) methods.

2. Related Works

3. Description of the Problem

3.1. The Complex Morphological Features of Kazakh

3.2. Specific Issues in Kazakh Tourism NER

- Some scenic spots are named too long, and the naming conventions are arbitrary. The length of most scenic areas is between 1 and 13 words. For example: “بانفاڭگۋ اۋىلدىق وسى زامانعى اۋىل-شارۋاشىلىق عىلىم تەحنيكاسىنان ۇلگى كورسەتۋ باقشاسى” (Banfanggou Township Modern Agricultural Technology Demonstration Park). According to statistics, 87.84% of the entities consist of more than two words, 55.12% of which are composed of two words, and in which the last word is the same to some extent. For example: “سايرام كولى” (Sayram Lake), “قاناس كولى” (Hanas Lake), “ۇلىڭگىر كولى” (Ulungur Lake), “حۋالين باقشاسى” (Hualin Park), “حۇڭشان باقشاسى” (Hongshan Park), and “اققايىڭ باقشاسى” (White Birch Forest Park).

- Some names of peoples, places, and nationalities are often nested. For example, “ىلە قازاق اۆتونوميالى وبلىسى” (Ili Kazakh Autonomous Prefecture), “گەنارال تاۋى ورمان باقشاسى” (Jiangjunshan Forest Park), or “قۇربان تۇلىم ەسكەرتكىش سارايى” (Kurban Tulum Memorial Hall).

- Multiple scenic spots may have the same name, or one scenic spot may have several names. For example, “نارات” (Narat), “ىلە نارات” (Ili Narat), “نارات ساحاراسى” (Narat Prairie), “نارات جايلاۋى” (Narat grasslands), “نارات كورىنس رايونى” (Narat scenic area), “نارات ساياحات وڭىرى” (Narat tourist spot), etc., are actually one entity.

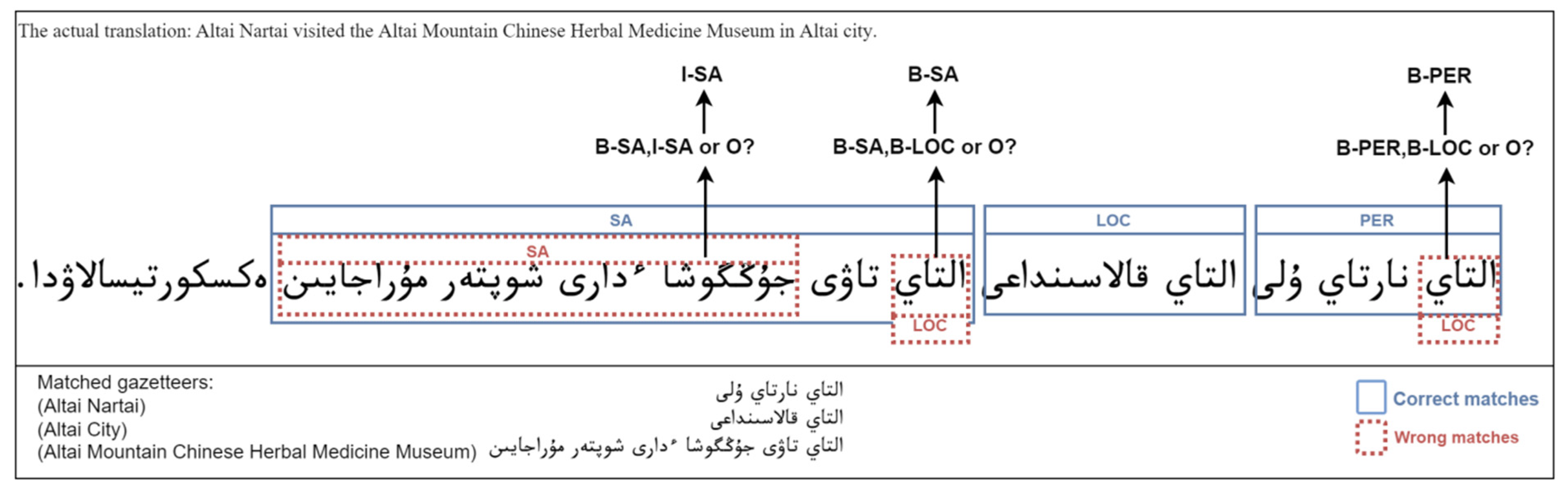

- Several entities lack clear boundaries and nest, and those types of entities are diverse, as shown in Figure 1.

4. The Proposed Approach

4.1. Model Architecture

4.2. Feature Representation Layer

4.2.1. Pre-Training Word–Stem Vectors

4.2.2. Training Word-Based and Stem-Based Embeddings

4.2.3. Constructing a Graph Structure

4.3. Graph-Gated Neural Network

4.4. Attention Mechanism

4.5. CRF Layer

5. Experiment

5.1. Dataset

5.2. Parameter Settings

5.3. Experimental Results and Analysis

5.3.1. Comparison of Word Embeddings

5.3.2. Experiment for Kazakh NER in Different Model

5.4. Ablation Experiment

5.5. Case Study

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Kuwanto, G.; Akyürek, A.F.; Tourni, I.C.; Li, S.; Jones, A.G.; Wijaya, D. Low-Resource Machine Translation for Low-Resource Languages: Leveraging Comparable Data, Code-Switching and Compute Resources. Arxiv 2021, arXiv:2103.13272. [Google Scholar]

- Li, X.; Li, Z.; Sheng, J.; Slamu, W. Low-Resource Text Classification via Cross-Lingual Language Model Fine-Tuning; Springer: Cham, Switzerland, 2020. [Google Scholar]

- Kumar, A.; Irsoy, O.; Ondruska, P.; Iyyer, M.; Bradbury, J.; Gulrajani, I.; Zhong, V.; Paulus, R.; Socher, R. Ask me anything: Dynamic memory networks for natural language processing. In Proceedings of the 33rd International Conference on Machine Learning, PMLR, New York, NY, USA, 19–24 June 2016. [Google Scholar]

- Ekbal, A.; Bandyopadhyay, S. Named entity recognition using support vector machine: A language independent approach. Int. J. Electr. Comput. Syst. Eng. 2010, 4, 155–170. [Google Scholar]

- Saito, K.; Nagata, M. Multi-language named-entity recognition system based on HMM. In Proceedings of the ACL 2003 Workshop on Multilingual and Mixed-Language Named Entity Recognition, Sapporo, Japan, 12 July 2003; pp. 41–48. [Google Scholar]

- Lafferty, J.; McCallum, A.; Pereira, F.C. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the 18th International Conference on Machine Learning 2001 (ICML 2001), Oslo, Norway, 22–25 August 2001. [Google Scholar]

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. Arxiv 2013, arXiv:1301.3781. [Google Scholar]

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543. [Google Scholar]

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146. [Google Scholar] [CrossRef] [Green Version]

- Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. arXiv 2018, arXiv:1802.05365. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Lample, G.; Ballesteros, M.; Subramanian, S.; Kawakami, K.; Dyer, C. Neural architectures for named entity recognition. arXiv 2016, arXiv:1603.01360. [Google Scholar]

- Chiu, J.P.C.; Nichols, E. Named entity recognition with bidirectional LSTM-CNNs. Trans. Assoc. Comput. Linguist. 2016, 4, 357–370. [Google Scholar] [CrossRef]

- Straková, J.; Straka, M.; Hajič, J. Neural architectures for nested NER through linearization. arXiv 2019, arXiv:1908.06926. [Google Scholar]

- Liu, L.; Shang, J.; Ren, X.; Xu, F.; Gui, H.; Peng, J.; Han, J. Empower sequence labeling with task-aware neural language model. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Lample, G.; Conneau, A. Cross-lingual language model pretraining. arXiv 2019, arXiv:1901.07291. [Google Scholar]

- Wang, S.; Chen, Z.; Ni, J.; Yu, X.; Li, Z.; Chen, H.; Yu, P.S. Adversarial defense framework for graph neural network. arXiv 2019, arXiv:1905.03679. [Google Scholar]

- Peng, D.L.; Wang, Y.R.; Liu, C.; Chen, Z. TL-NER: A transfer learning model for Chinese named entity recognition. Inf. Syst. Front. 2019, 22, 1291–1304. [Google Scholar] [CrossRef]

- Ding, R.; Xie, P.; Zhang, X.; Lu, W.; Li, L.; Si, L. A neural multi-digraph model for Chinese NER with gazetteers. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 1462–1467. [Google Scholar]

- Zhang, J.; Hao, K.; Tang, X.; Cai, X.; Xiao, Y.; Wang, T. A multi-feature fusion model for Chinese relation extraction with entity sense. Knowl.-Based Syst. 2020, 206, 106348. [Google Scholar] [CrossRef]

- Altenbek, G.; Abilhayer, D.; Niyazbek, M. A Study of Word Tagging Corpus for the Modern Kazakh Language. J. Xinjiang Univ. (Nat. Sci. Ed.) 2009, 4. Available online: https://xueshu.baidu.com/usercenter/paper/show?paperid=a3871a5f9467444c61107b80ce2cf989&site=xueshu_se (accessed on 1 March 2022). (In Chinese).

- Feng, J. Research on Kazakh Entity Name Recognition Method Based on N-gram Model; Xinjiang University: Ürümqi, China, 2010. [Google Scholar]

- Altenbek, G.; Wang, X.; Haisha, G. Identification of basic phrases for kazakh language using maximum entropy model. In Proceedings of the COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, Dublin, Ireland, 23–29 August 2014; pp. 1007–1014. [Google Scholar]

- Wu, H.; Altenbek, G. Improved Joint Kazakh POS Tagging and Chunking. In Chinese Computational Linguistics and Natural Language Processing Based on Naturally Annotated Big Data; Springer: Cham, Switzerland, 2016; pp. 114–124. [Google Scholar]

- Gulmira, T.; Alymzhan, T.; Zheng, X. Named Entity Recognition for Kazakh Using Conditional Random Fields. In Proceedings of the 4-th International Conference on Computer Processing of Turkic Languages “TurkLang 2016”, Bishkek, Kyrgyzstan, 23–25 August 2016. [Google Scholar]

- Tolegen, G.; Toleu, A.; Mamyrbayev, O.; Mussabayev, R. Neural named entity recognition for Kazakh. arXiv 2020, arXiv:2007.13626. [Google Scholar]

- Akhmed-Zaki, D.; Mansurova, M.; Barakhnin, V.; Kubis, M.; Chikibayeva, D.; Kyrgyzbayeva, M. Development of Kazakh Named Entity Recognition Models. In Computational Collective Intelligence; International Conference on Computational Collective Intelligence; Springer: Cham, Switzerland, 2020; pp. 697–708. [Google Scholar]

- Abduhaier, D.; Altenbek, G. Research and implementation of Kazakh lexical analyzer. Comput. Eng. Appl. 2008, 44, 4. [Google Scholar]

- Altenbek, G.; Wang, X.L. Kazakh segmentation system of inflectional affixes. In Proceedings of the CIPS-SIGHAN Joint Conference on Chinese Language Processing, Beijing, China, 28–29 August 2010. [Google Scholar]

- Gilmer, J.; Schoenholz, S.S.; Riley, P.F.; Vinyals, O.; Dahl, G.E. Message passing neural networks. In Machine Learning Meets Quantum Physics; Springer: Cham, Switzerland, 2020; pp. 199–214. [Google Scholar]

- Cho, K.; Van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv 2014, arXiv:1409.1259. [Google Scholar]

- Li, Y.; Tarlow, D.; Brockschmidt, M.; Zemel, R. Gated Graph Sequence Neural Networks. arXiv 2015, arXiv:1511.05493. [Google Scholar]

| Data | Sentence | SA | LOC | ORG | PER | SC | NA | CU |

|---|---|---|---|---|---|---|---|---|

| Train | 4800 | 4456 | 5064 | 510 | 728 | 914 | 241 | 629 |

| Dev | 600 | 360 | 475 | 73 | 64 | 70 | 35 | 101 |

| Test | 600 | 486 | 534 | 62 | 47 | 89 | 22 | 46 |

| Gazetteers’ size | -- | 2000 | 2350 | 1650 | 5000 | 2300 | 56 | 1630 |

| Parameters | Parameter Size |

|---|---|

| Training batch size | 10 |

| Word vector dimension | 200 |

| Stem vector dimension | 200 |

| Word2vec window size | 8 |

| Word2vec min_count | 3 |

| GGNN_hidden_size | 200 |

| Dropout | 0.5 |

| Learning rate | 0.001 |

| Model optimizer | SGD |

| Attention heads | 8 |

| Epochs | 50 |

| Text Size | Original Words | Words (Remove Duplicates) | Stems (Remove Duplicates) | Stem–Word Ratio |

|---|---|---|---|---|

| 1.3 MB | 108,589 | 20,137 | 12,627 | 62.71% |

| 2.5 MB | 217,815 | 34,615 | 22,764 | 65.76% |

| 6.1 MB | 617,396 | 57,112 | 36,694 | 64.25% |

| 16.3 MB | 1,737,125 | 106,885 | 71,787 | 67.16% |

| Words | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|

| جۇڭگو | جۇڭگودا | جۇڭگوشا | جۇڭگونىڭ | جۇڭگوعا | جۇڭگودان |

| (China) | (In China) | (Chinese style) | (China’s) | (To China) | (In China) |

| Cos distance | 0.9706 | 0.9606 | 0.9549 | 0.9400 | 0.9391 |

| قىستاق | قىستاقتا | قىستاققا | قىستاقتان | قىستاقتار | قىستاعى |

| (Village) | (In village) | (To the village) | (From village) | (Village) | (Their village) |

| Cos distance | 09657 | 0.9616 | 0.9502 | 0.9433 | 0.9399 |

| ساياحات | ساياحاتى | ساياحاتتا | ساياحاتشى | ساياحاتقا | ساياحاتتاي |

| (Tourism) | (Tourism) | (Travel) | (Traveler) | (On travel) | (Trip) |

| Cos distance | 0.9022 | 0.8536 | 0.82.69 | 0.8161 | 0.8133 |

| ساۋدا | ساۋدادا | ساۋدانى | ساۋداگەر | ساۋداسى | ساۋداعا |

| (Trade) | (In the trade) | (Trade on) | (Trader) | (Business) | (To business) |

| Cos distance | 0.9701 | 0.9686 | 0.9499 | 0.9318 | 0.9221 |

| Stems | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|

| جۇڭگو | جۇڭحۋا | امەريكا | جاپونيا | روسسيا | اۆسترليا |

| (China) | (In China) | (America) | (Japan) | (Russia) | (Australia) |

| Cos distance | 0.9352 | 0.9115 | 0.9070 | 0.9066 | 0.9038 |

| قىستاق | اۋىل | قىستاۋ | قالاشىق | اۋدان | ايماق |

| (Village) | (village) | (Rural) | (City) | (Town) | (Area) |

| Cos distance | 0.9561 | 0.9283 | 0.8805 | 0.8632 | 0.8450 |

| ساياحات | جولاۋشى | ساۋىق | سالتسانا | جول | كورىنس |

| (Tourism) | (Traveler) | (Pleasure) | (Culture) | (Journey) | (Scenic) |

| Cos distance | 0.8272 | 0.8265 | 0.8112 | 0.8049 | 0.7986 |

| ساۋدا | ساتتىق | اينالىم | تاۋار | كاسىپ | باعا |

| (Trade) | (Purchase) | (Trade) | (Goods) | (Business) | (Price) |

| Cos distance | 0.9666 | 0.9453 | 0.9224 | 0.9180 | 0.9076 |

| Word Embedding | P (%) | R (%) | F1 (%) |

|---|---|---|---|

| Glove | 87.27 | 87.10 | 87.18 |

| FastText | 87.62 | 87.74 | 87.67 |

| Word2vec | 87.95 | 88.14 | 88.04 |

| Models | P (%) | R (%) | F1 (%) |

|---|---|---|---|

| HMM | 64.01 | 60.99 | 62.46 |

| CRF | 76.88 | 62.23 | 68.78 |

| BiLSTM+CRF | 79.41 | 74.27 | 76.75 |

| BERT+BiLSTM+CRF | 84.91 | 83.19 | 84.04 |

| WSGGA(ours) | 87.95 | 88.14 | 88.04 |

| Different Features | P (%) | R (%) | F1 (%) |

|---|---|---|---|

| WSGGA(complete model) | 87.95 | 88.14 | 88.04 |

| W/O (stem embedding) | 86.70 | 87.55 | 87.12 |

| W/O attention | 85.26 | 86.89 | 86.07 |

| W/O gazetteers | 84.33 | 83.92 | 84.12 |

|

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Haisa, G.; Altenbek, G. Deep Learning with Word Embedding Improves Kazakh Named-Entity Recognition. Information 2022, 13, 180. https://doi.org/10.3390/info13040180

Haisa G, Altenbek G. Deep Learning with Word Embedding Improves Kazakh Named-Entity Recognition. Information. 2022; 13(4):180. https://doi.org/10.3390/info13040180

Chicago/Turabian StyleHaisa, Gulizada, and Gulila Altenbek. 2022. "Deep Learning with Word Embedding Improves Kazakh Named-Entity Recognition" Information 13, no. 4: 180. https://doi.org/10.3390/info13040180