Instruments and Tools to Identify Radical Textual Content

Abstract

:1. Introduction

- Internet and social media represent an ideal opportunity for self-expression and communication.

- Internet and social media can reach millions of addressees around the world in a very short time.

- Internet and social media offer an ideal opportunity for networking with like-minded people.

- On Internet and social media, social control, not only by the security authorities but also by the social environment is made considerably more difficult.

- The banning of a site by state authorities is an indicator of a high degree of radicalisation.

- If a site calls for violence against people and/or objects, this is an indicator of a high degree of radicalisation.

- If a propaganda site explicitly calls on people to join extremist/terrorist groups, this is an indicator of a high degree of radicalisation.

- If a propaganda site calls for sympathy with persons or groups who have been involved in politically motivated (violent) acts in the past, this can be considered as a high degree of radicalisation.

- The first series of tools we developed relies on a lexicon built thanks to key-phrase extraction. Recent related work has developed new algorithms for key-phrase extraction [21,22,23]. In the literature, these key phrases are used for information retrieval purposes. Here, we develop an original use of the key phrases, which is to rank documents, not according to a query, but rather according to the lexicon itself. Because the lexicon is representative of a sub-domain an LEA is interested in (e.g., religious extremism), it is then possible to order the texts according to their inner interest for the user.

- Document ordering is based on two means. One relies on expert knowledge of the importance of some criteria. In this, our models are more task-oriented than the usual models.

- Current search engines do not explain to the user the results that they retrieve. As opposed to that, here, the link between the lexicon and the text is highlighted so that the user can understand the reason for the document order (and can agree/disagree with the results).

- Visual tools complement the underlying representation of texts and help the user understand what the texts are about by an overview of the main important terms.

- One fundamental point of PREVISION is that it integrates all the elementary tools into a complete processing chain that is directly usable by LEAs; such a platform does not exist where the user keeps control of the system results.

2. Related Work

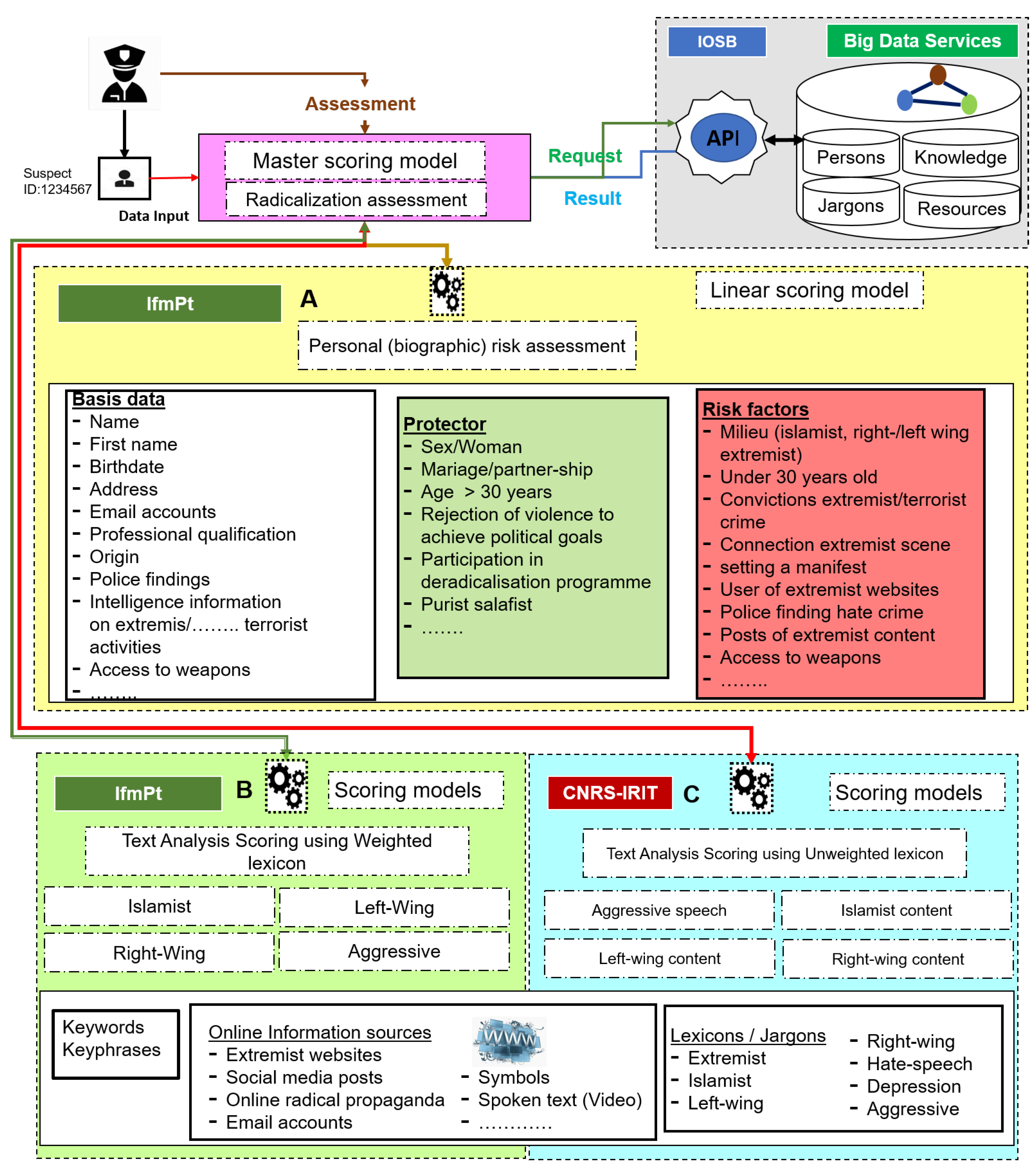

3. Overview of Methods and Tools for Identification of Radical Content

- Detection of the main key-phrases of the domain to build a domain-oriented lexicon;

- Scoring texts according to a lexicon considering the matching between individual texts and a chosen lexicon or set of key-phrases;

- Evaluating the risk of radicalisation of a suspect based on the texts written;

- Visualisation of the results in a way that the user can understand the results provided by the algorithms and tools

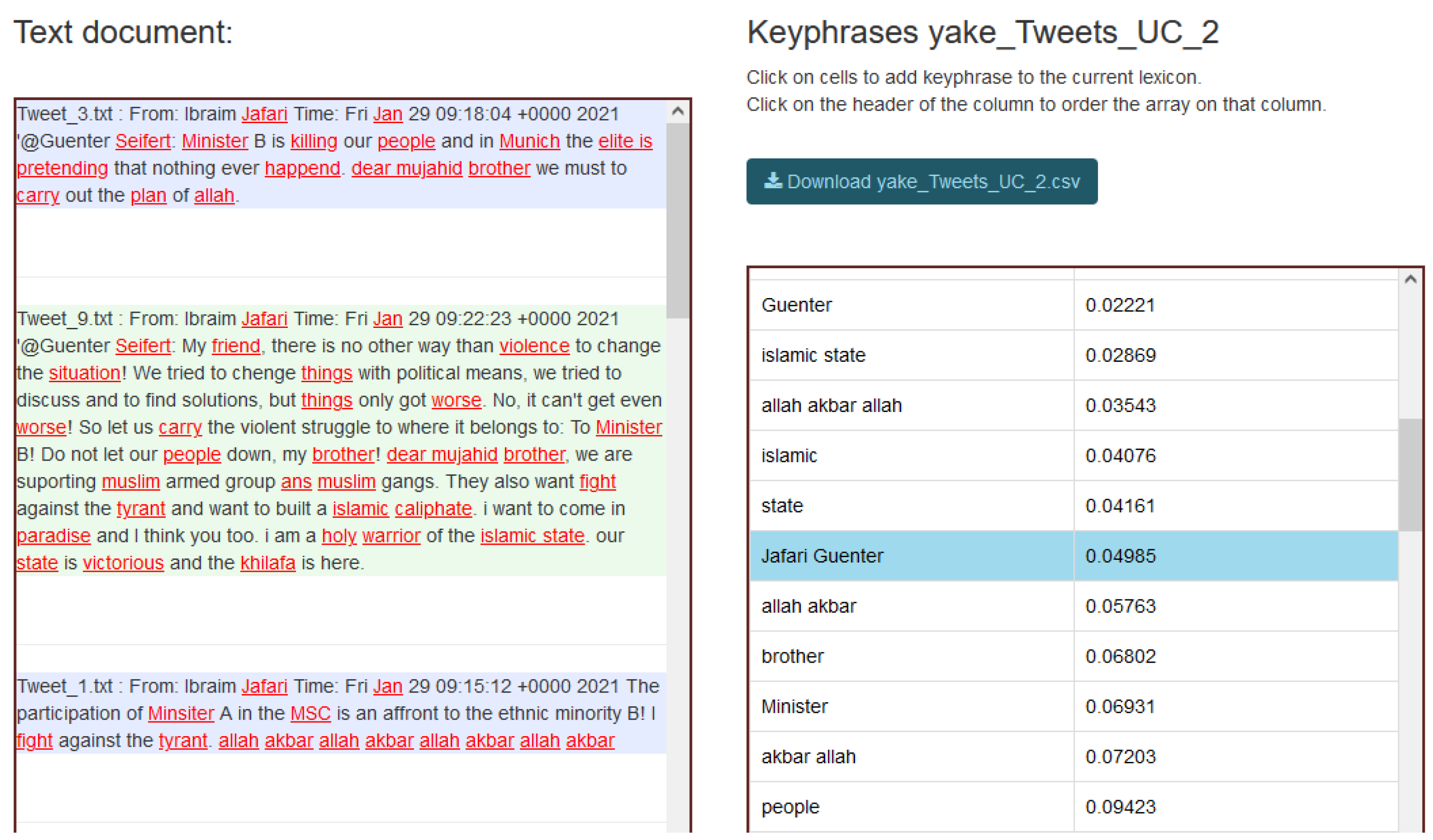

3.1. Jargon Detection

3.2. Scoring Texts and Language Contents

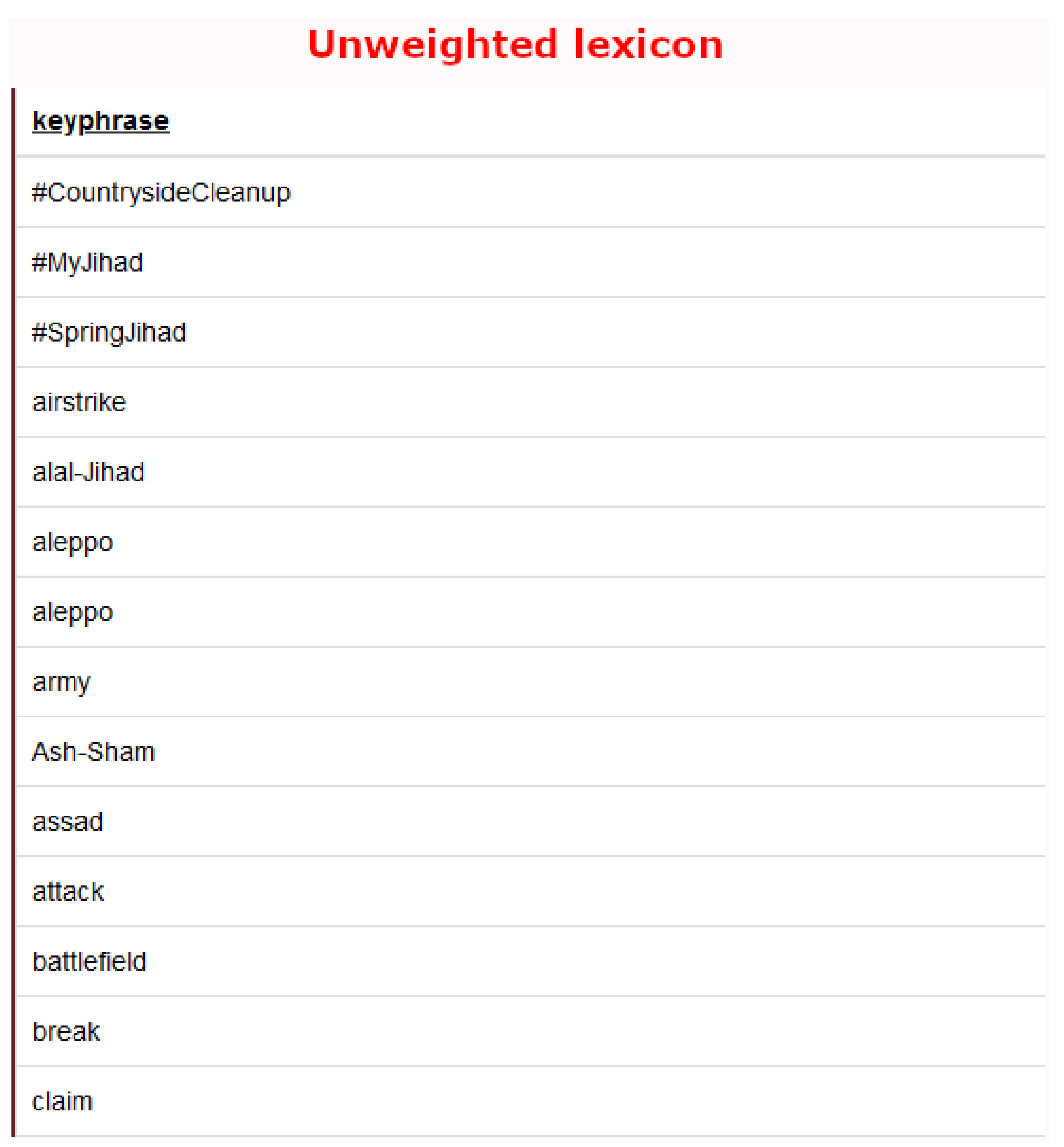

3.2.1. Scoring with an Unweighted Lexicon

3.2.2. Scoring with a Weighted Lexicon

- Manually: in this case, some abnormal text has been identified and automated classification is targeted.

- Crawler searches the web for content to select the relevant texts that include the so-called “Main Trigger”. Only main triggers start an automated analysis.

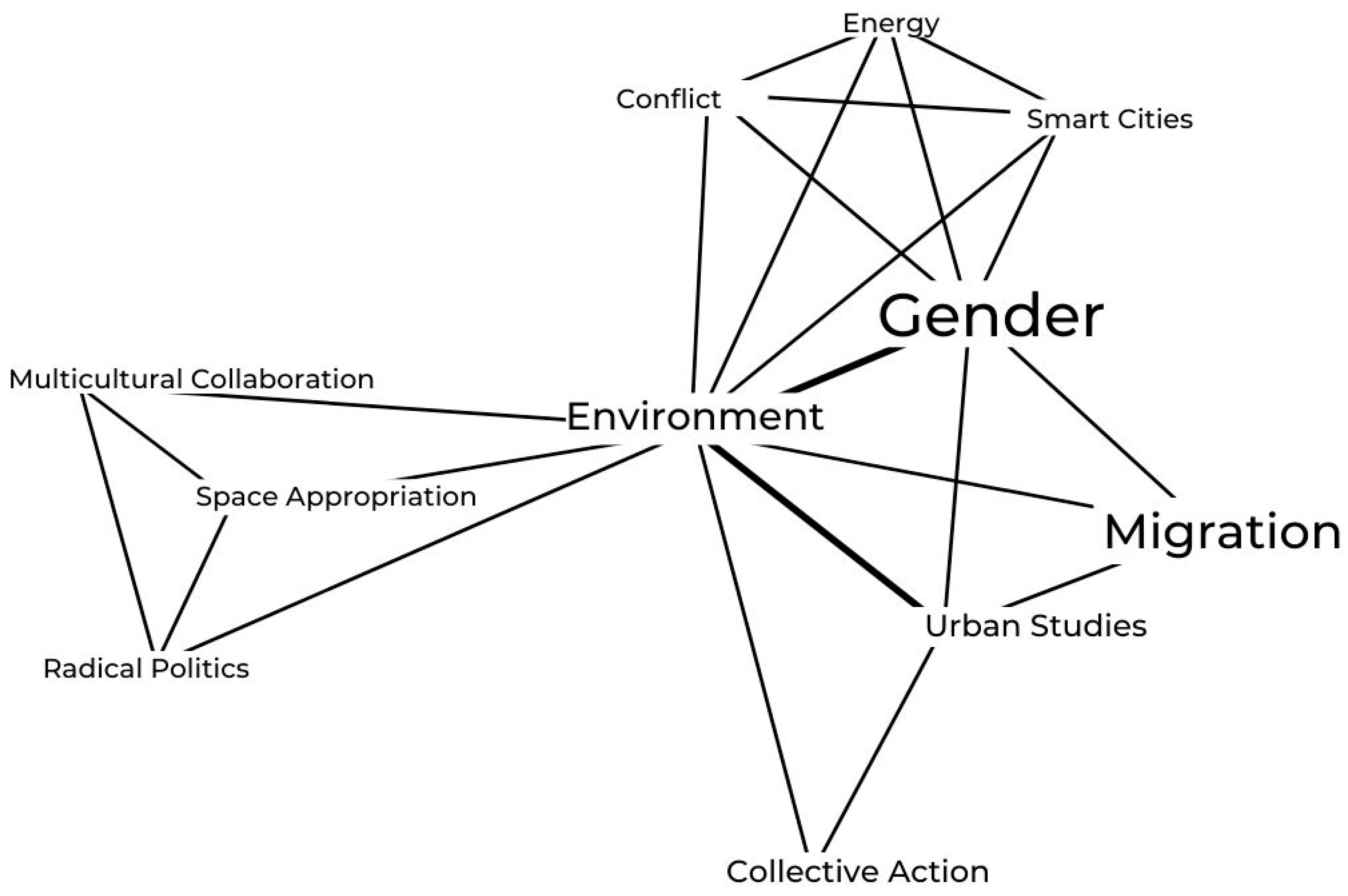

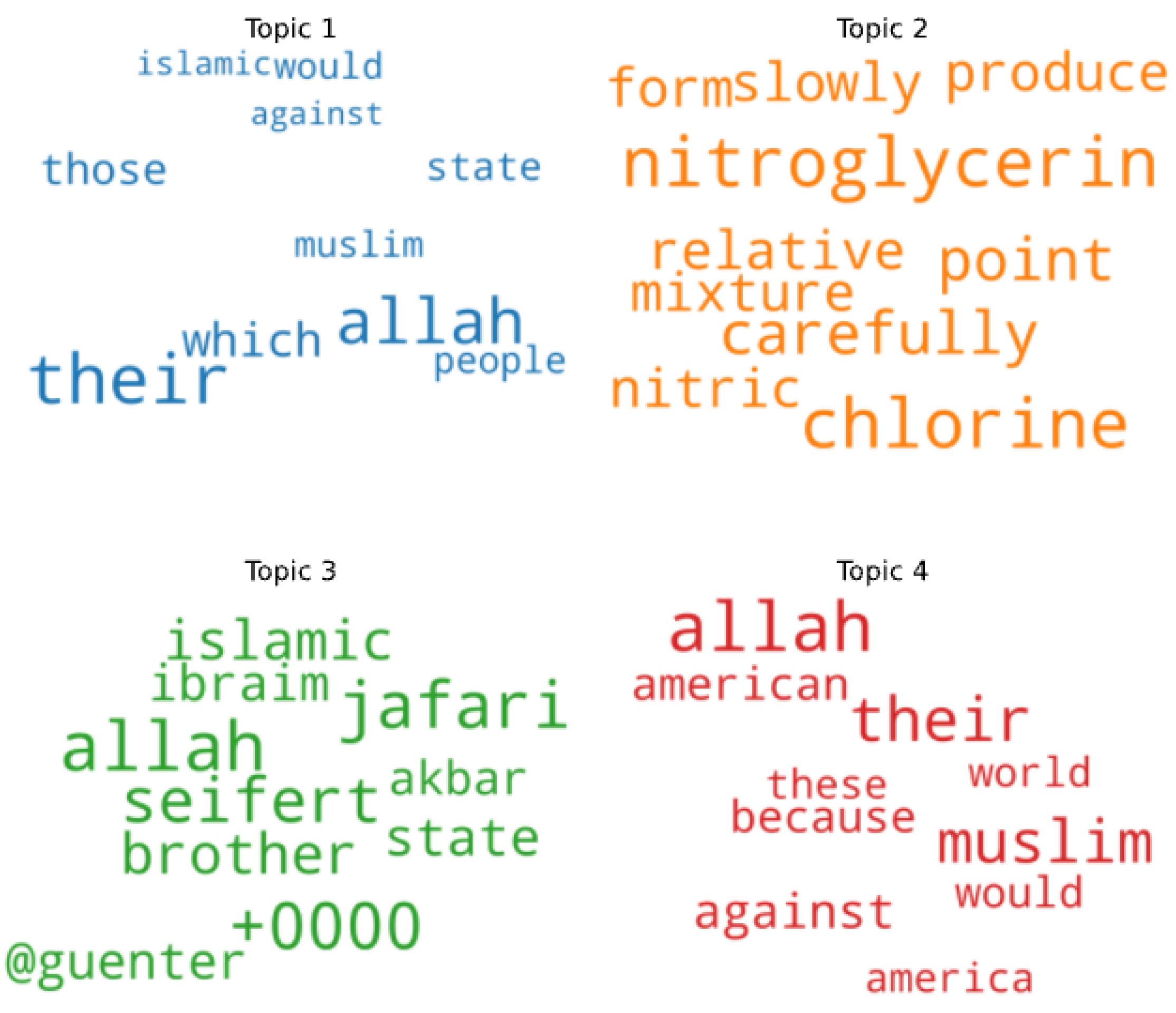

3.3. Text Visualisation Tool

- It is assumed that there are k topics across all of the documents in the corpus.

- These k topics are distributed across document m by assigning each word a topic.

- Word w in the document m is probabilistically assigned a topic based on two things:

- what topics are in document m

- how many times word w has been assigned a particular topic across all of the document.

4. Methodology

4.1. Dataset and Example Use Case

“From: Ibraim Jafari

Time: Fri. 29 Jan. 09:22:23 +0000 2021

@Guenter Seifert: My friend, there is no other way than violence to change the situation! We tried to chenge things with political means, we tried to discuss and to find solutions, but things only got worse. No, it can’t get even worse! So let us carry the violent struggle to where it belongs to: To Minister B! Do not let our people down, my brother! dear mujahid brother, we are suporting muslim armed group ans muslim gangs. They also want fight against the tyrant and want to built a islamic caliphate. i want to come in paradise and I think you too. i am a holy warrior of the islamic state. our state is victorious and the khilafa is here.”

4.2. Methods

5. Results on the Radicalisation Use Case

5.1. Jargon Detection and Text Highlighting

5.2. Scoring Texts with Unweighted Lexicon on Radicalisation

- publicly available websites,

- social media (WhatsApp, Twitter, Facebook, etc.).

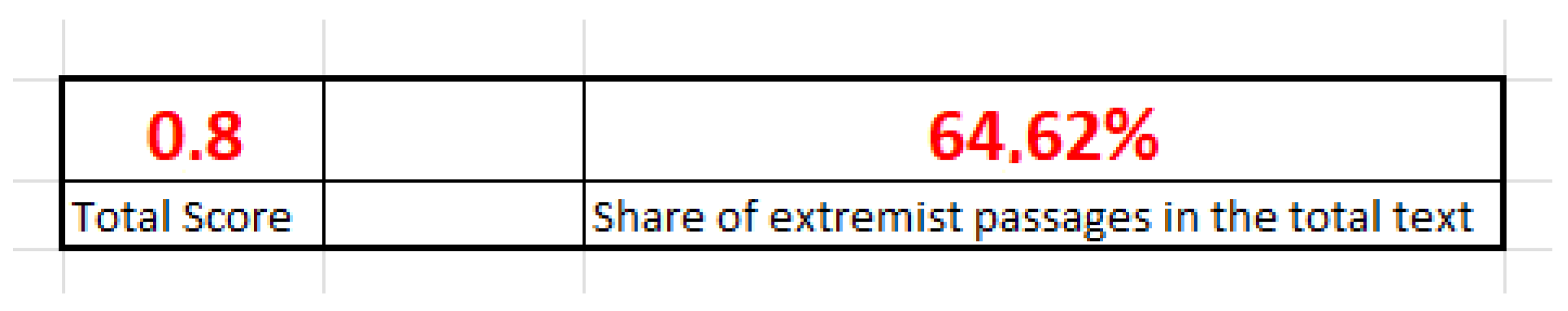

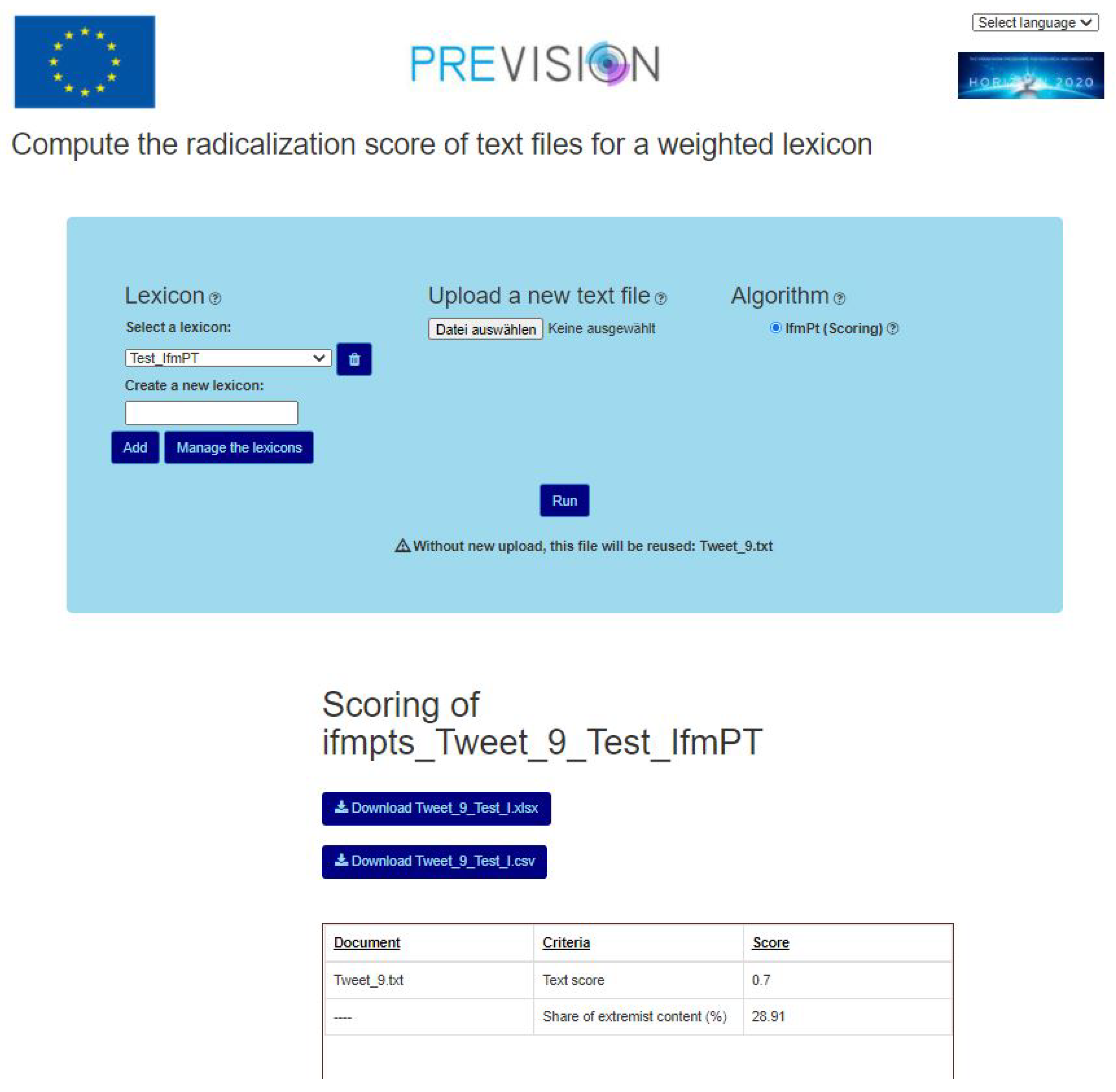

5.3. Scoring with a Weighted Lexicon on Radicalisation

| ⇒ “i am a holy warrior of the islamic state” |

| ⇒ “i will be a mujahid” |

| ⇒ “mock the messenger” |

| ⇒ “our islamic caliphate” |

| ⇒ “allah” |

| ⇒ “with our death”=Trigger |

| ⇒ “allah” |

| ⇒ “until we fall in battle (0.8) |

| ⇒ “forth (0.3)” |

| ⇒ “black flag” = MAIN TRIGGER |

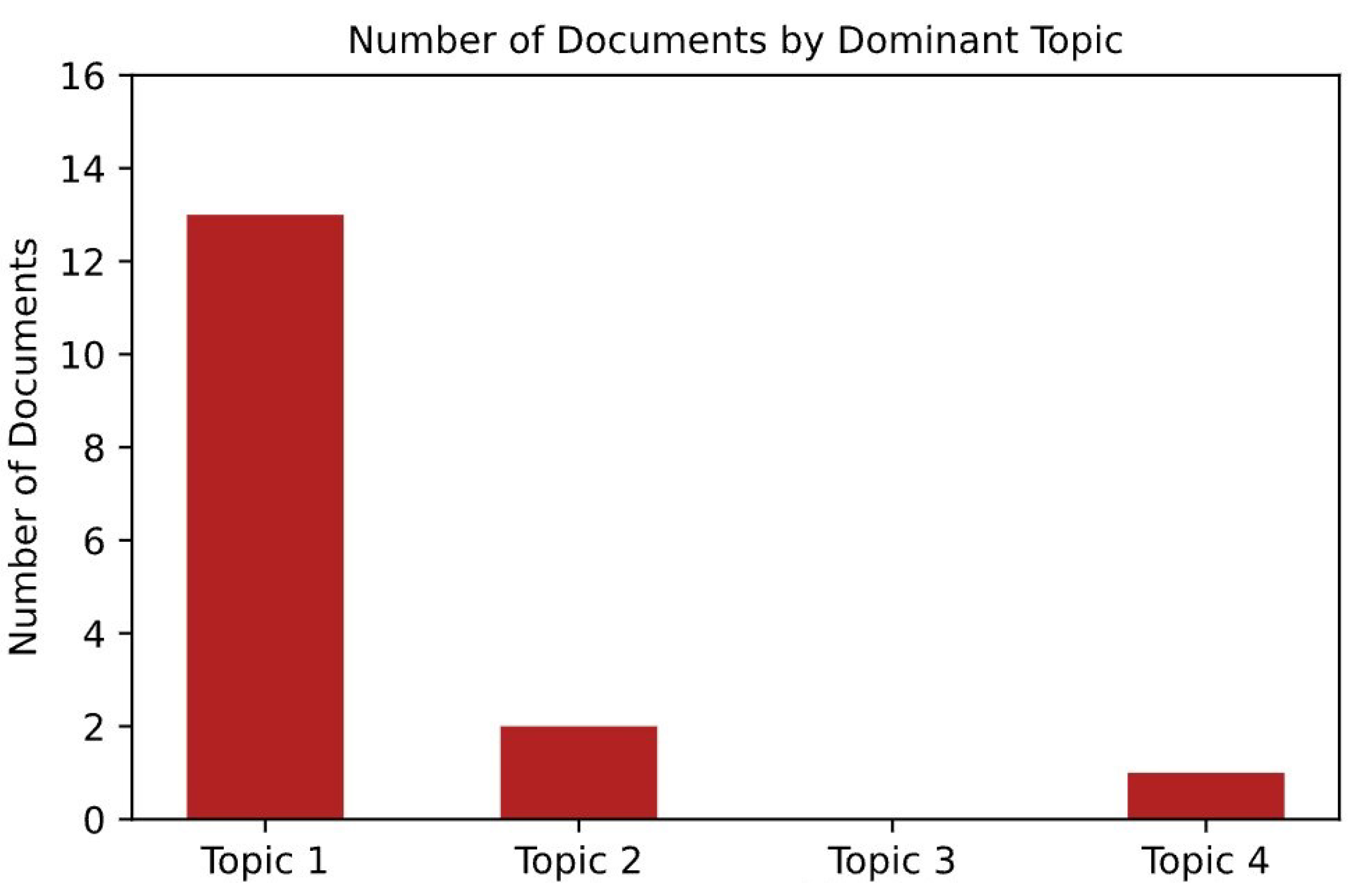

5.4. Text Visualisation Tool—Application of Visual Analytics for Analysis of Radicalised Content

5.5. Machine Learning Model to Predict the Risk of Radicalisation

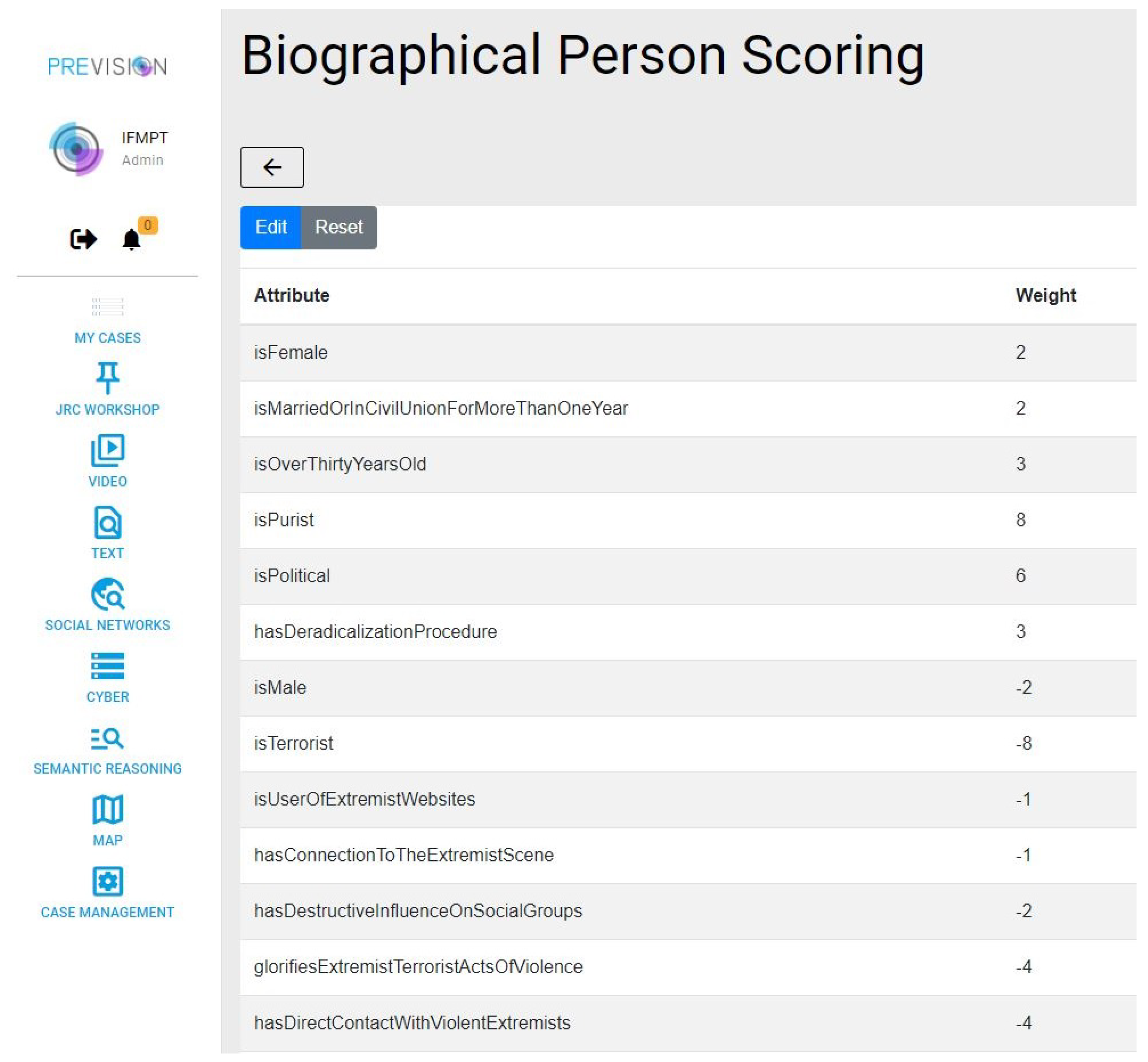

5.5.1. Biographical Scoring from Basic Data

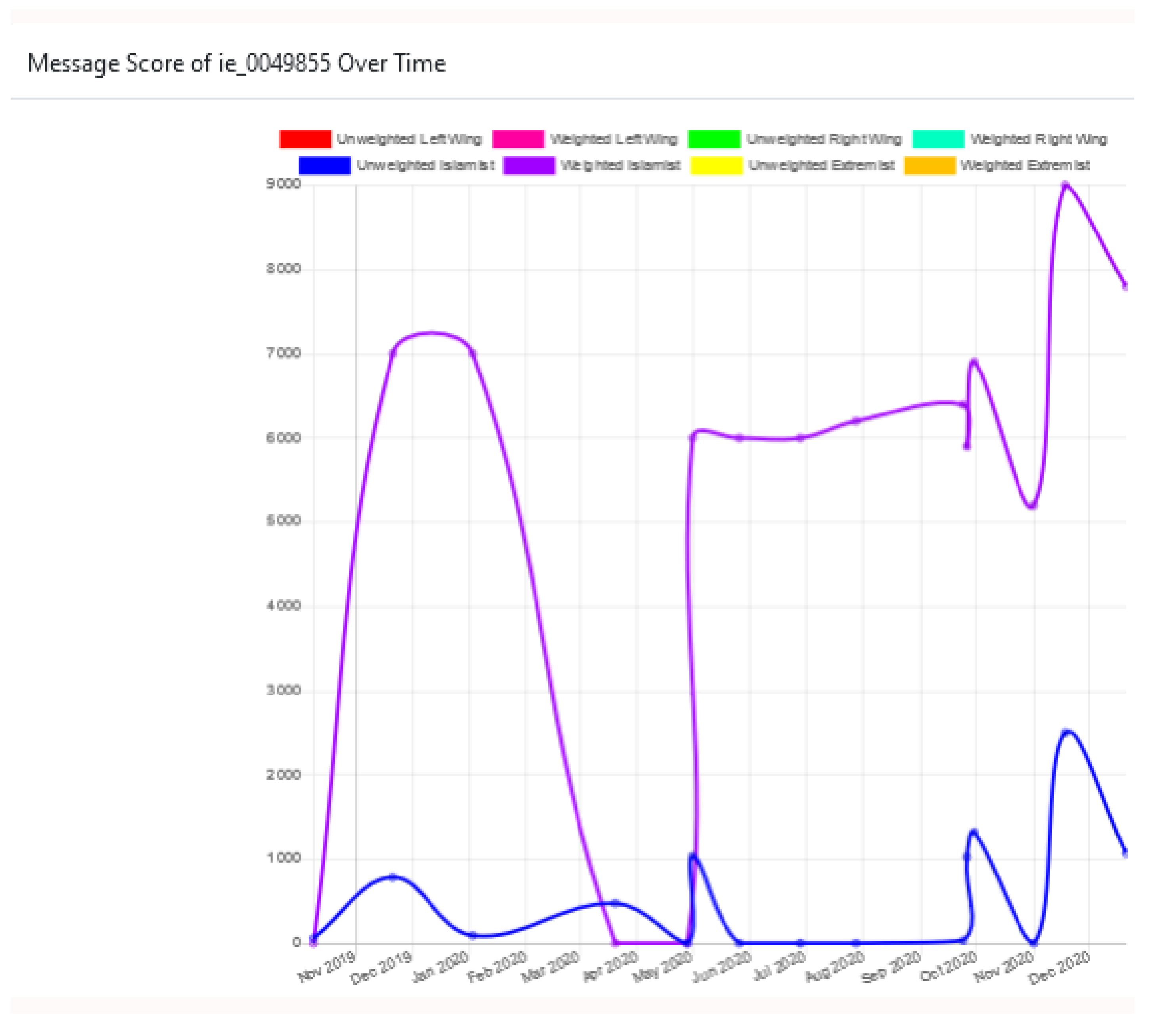

5.5.2. Linear/Non-Linear Scoring Models from Online Information

5.5.3. Knowledge Base

5.5.4. Master Scoring Model (LEAs)

6. Discussion, Implication, and Conclusions

- We used two types of data sources: the sources used to train word embeddings which are unknown (since we used pre-trained systems) and the sources used to extract the jargon, which are provided by the user or collected by the user and thus known. To address the lack of transparency on the first type of data sources, the tool shows both the Jargon that is extracted, the position of the jargon in the users’ texts and provides the explanation of the score calculation for the scoring tools to the user. The data description is thus available.

- To address the risk of bias (describing the data types, features types, dictionaries, external resources used during training the tool): text data type was used across all tools, no pre-defined dictionary was used in the tools developed although the users can start from their own dictionaries. To model the pattern matching in the scoring tools, the Spacy language model was used, which code is available.

- To address the lack of transparency as to how the tool works (explaining the data representation, mathematical logic and rules underlying the models, exploring the features and the data that has an impact on the classification score): the tools tips have been added in the interface so the user knows how the tool works. The detailed explanation and mathematical calculations and rules underlying the models are also available.

- To further address the lack of transparency -explaining the confidence score of the model and the features that contribute to this confidence score. The explanation of the calculation of scoring tools is presented as a pop-up to the user.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kalisch, M.; Stotz, P. Wer Liest Das Eigentlich? Die GELäufigsten Corona-Verschwörungstheorien und Die Akteure Dahinter. Available online: https://www.spiegel.de/netzwelt/web/corona-verschwoerungstheorien-und-die-akteure-dahinter-bill-gates-impfzwang-und-co-a-2e9a0e78-4375-4dbd-815f-54571750d32d (accessed on 7 November 2021).

- Reinecke, S. Konjunktur der Verschwörungstheorien: Die Nervöse Republik. Available online: https://taz.de/Konjunktur-der-Verschwoerungstheorien/!5681544/ (accessed on 7 November 2021).

- Berlin, B. Antisemitische Verschwörungstheorien Haben Während Corona Konjunktur. Available online: https://www.bz-berlin.de/berlin/antisemitische-verschwoerungstheorien-haben-waehrend-corona-konjunktur (accessed on 7 November 2021).

- Fielitz, M.; Ebner, J.; Guhl, J.; Quent, M. Hassliebe: Muslimfeindlichkeit, Islamismus und Die Spirale Gesellschaftlicher Polarisierung; Amadeu Antonio Stiftung: Berlin, Germany, 2018; Volume 1. [Google Scholar]

- Chen, H. Dark Web: Exploring and Data Mining the Dark Side of the Web; Springer Science & Business Media: Berlin, Germany, 2011; Volume 30. [Google Scholar]

- Akinboro, S.; Adebusoye, O.; Onamade, A. A Review on the Detection of Offensive Content in Social Media Platforms. FUOYE J. Eng. Technol. 2021, 6. [Google Scholar] [CrossRef]

- Neumann, P.R. Der Terror ist unter uns: Dschihadismus, Radikalisierung und Terrorismus in Europa; Ullstein eBooks: Berlin, Germany, 2016. [Google Scholar]

- Scruton, R. The Palgrave Macmillan Dictionary of Political Thought; Springer: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- Ali, F.; Ali, A.; Imran, M.; Naqvi, R.A.; Siddiqi, M.H.; Kwak, K.S. Traffic accident detection and condition analysis based on social networking data. Accid. Anal. Prev. 2021, 151, 105973. [Google Scholar] [CrossRef] [PubMed]

- Types de Radicalisation. Available online: https://info-radical.org/fr/types-de-radicalisation/ (accessed on 7 November 2021).

- MDR.DE. Wie das Internet zur Radikalisierung Beiträgt|MDR.DE. Available online: https://www.mdr.de/wissen/bildung/extremismus-internet-online-radikalisierung-100.html (accessed on 7 November 2021).

- Holbrook, D. A critical analysis of the role of the internet in the preparation and planning of acts of terrorism. Dyn. Asymmetric Confl. 2015, 8, 121–133. [Google Scholar] [CrossRef] [Green Version]

- Kahl, M. Was wir über Radikalisierung im Internet wissen. Forschungsansätze und Kontroversen. Demokr. Gegen Menschenfeindlichkeit 2018, 3, 11–25. [Google Scholar] [CrossRef]

- Reicher, S.D.; Spears, R.; Postmes, T. A social identity model of deindividuation phenomena. Eur. Rev. Soc. Psychol. 1995, 6, 161–198. [Google Scholar] [CrossRef]

- Spears, R.; Lea, M. Panacea or panopticon? The hidden power in computer-mediated communication. Commun. Res. 1994, 21, 427–459. [Google Scholar] [CrossRef]

- Tajfel, H.; Turner, J.C. The Social Identity Theory of Intergroup Behavior. In Political Psychology: Key Readings; Psychology Press/Taylor & Francis: London, UK, 2004; pp. 276–293. [Google Scholar]

- Boehnke, K.; Odağ, Ö.; Leiser, A. Neue Medien und politischer Extremismus im Jugendalter: Die Bedeutung von Internet und Social Media für jugendliche Hinwendungs-und Radikalisierungsprozesse. In Stand der Forschung und Zentrale Erkenntnisse Themenrelevanter Forschungsdisziplinen aus Ausgewählten Ländern. Expertise im Auftrag des Deutschen Jugendinstituts (DJI); DJI München Deutsches Jugendinstitut e.V.: Munich, Germany, 2015. [Google Scholar]

- Kimmerle, J. SIDE-Modell im Dorsch Lexikon der Psychologie. Available online: https://dorsch.hogrefe.com/stichwort/side-modell (accessed on 7 November 2021).

- Skrobanek, J. Regionale Identifikation, Negative Stereotypisierung und Eigengruppenbevorzugung; Das Beispiel Sachsen; VS Verlag für Sozialwissenschaften: Wiesbaden, Germany, 2004. [Google Scholar]

- Knipping-Sorokin, R. Radikalisierung Jugendlicher über das Internet?: Ein Literaturüberblick, DIVSI Report. Available online: https://www.divsi.de/wp-content/uploads/2016/11/Radikalisierung-Jugendlicher-ueber-das-Internet.pdf (accessed on 23 November 2021).

- Mothe, J.; Ramiandrisoa, F.; Rasolomanana, M. Automatic keyphrase extraction using graph-based methods. In Proceedings of the 33rd Annual ACM Symposium on Applied Computing, Pau, France, 9–13 April 2018; pp. 728–730. [Google Scholar]

- Campos, R.; Mangaravite, V.; Pasquali, A.; Jorge, A.; Nunes, C.; Jatowt, A. YAKE! Keyword extraction from single documents using multiple local features. Inf. Sci. 2020, 509, 257–289. [Google Scholar] [CrossRef]

- Rose, S.; Engel, D.; Cramer, N.; Cowley, W. Automatic keyword extraction from individual documents. Text Mining Appl. Theory 2010, 1, 1–20. [Google Scholar]

- Ashcroft, M.; Fisher, A.; Kaati, L.; Omer, E.; Prucha, N. Detecting jihadist messages on twitter. In Proceedings of the 2015 European Intelligence and Security Informatics Conference, Manchester, UK, 7–9 September 2015; pp. 161–164. [Google Scholar]

- Rowe, M.; Saif, H. Mining pro-ISIS radicalisation signals from social media users. In Proceedings of the Tenth International AAAI Conference on Web and Social Media, Cologne, Germany, 17–20 May 2016. [Google Scholar]

- Nouh, M.; Nurse, J.R.; Goldsmith, M. Understanding the radical mind: Identifying signals to detect extremist content on twitter. In Proceedings of the 2019 IEEE International Conference on Intelligence and Security Informatics (ISI), Shenzhen, China, 1–3 July 2019; pp. 98–103. [Google Scholar]

- Gaikwad, M.; Ahirrao, S.; Phansalkar, S.; Kotecha, K. Online extremism detection: A systematic literature review with emphasis on datasets, classification techniques, validation methods, and tools. IEEE Access 2021, 9, 48364–48404. [Google Scholar] [CrossRef]

- Alatawi, H.S.; Alhothali, A.M.; Moria, K.M. Detecting white supremacist hate speech using domain specific word embedding with deep learning and BERT. IEEE Access 2021, 9, 106363–106374. [Google Scholar] [CrossRef]

- Cohen, K.; Johansson, F.; Kaati, L.; Mork, J.C. Detecting linguistic markers for radical violence in social media. Terror. Political Violence 2014, 26, 246–256. [Google Scholar] [CrossRef]

- Chalothorn, T.; Ellman, J. Affect analysis of radical contents on web forums using SentiWordNet. Int. J. Innov. Manag. Technol. 2013, 4, 122. [Google Scholar]

- Jurek, A.; Mulvenna, M.D.; Bi, Y. Improved lexicon-based sentiment analysis for social media analytics. Secur. Inform. 2015, 4, 9. [Google Scholar] [CrossRef] [Green Version]

- Fernandez, M.; Asif, M.; Alani, H. Understanding the roots of radicalisation on twitter. In Proceedings of the 10th ACM Conference on Web Science, Amsterdam, The Netherlands, 27–30 May 2018; pp. 1–10. [Google Scholar]

- Araque, O.; Iglesias, C.A. An approach for radicalization detection based on emotion signals and semantic similarity. IEEE Access 2020, 8, 17877–17891. [Google Scholar] [CrossRef]

- Mothe, J.; Chrisment, C.; Dkaki, T.; Dousset, B.; Karouach, S. Combining mining and visualization tools to discover the geographic structure of a domain. Comput. Environ. Urban Syst. 2006, 30, 460–484. [Google Scholar] [CrossRef] [Green Version]

- Dousset, B.; Mothe, J. Getting Insights from a Large Corpus of Scientific Papers on Specialisted Comprehensive Topics-the Case of COVID-19. Procedia Comput. Sci. 2020, 176, 2287–2296. [Google Scholar] [CrossRef]

- Leavy, S.; Meaney, G.; Wade, K.; Greene, D. Curatr: A platform for semantic analysis and curation of historical literary texts. In Proceedings of the Research Conference on Metadata and Semantics Research, Rome, Italy, 28–31 October 2019; pp. 354–366. [Google Scholar]

- Paranyushkin, D. InfraNodus: Generating Insight Using Text Network Analysis. In Proceedings of the World Wide Web Conference, WWW’19, San Francisco, CA, USA, 13–17 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 3584–3589. [Google Scholar] [CrossRef]

- Hasan, K.S.; Ng, V. Automatic Keyphrase Extraction: A Survey of the State of the Art. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, ACL 2014, Baltimore, MD, USA, 22–27 June 2014; pp. 1262–1273. [Google Scholar]

- Mahata, D.; Shah, R.R.; Kuriakose, J.; Zimmermann, R.; Talburt, J.R. Theme-Weighted Ranking of Keywords from Text Documents Using Phrase Embeddings. In Proceedings of the IEEE 1st Conference on Multimedia Information Processing and Retrieval, MIPR 2018, Miami, FL, USA, 10–12 April 2018; pp. 184–189. [Google Scholar] [CrossRef] [Green Version]

- El-Beltagy, S.R.; Rafea, A.A. KP-Miner: Participation in SemEval-2. In Proceedings of the 5th International Workshop on Semantic Evaluation, SemEval@ACL 2010, Uppsala, Sweden, 15–16 July 2010; pp. 190–193. [Google Scholar]

- Kim, S.N.; Medelyan, O.; Kan, M.; Baldwin, T. SemEval-2010 Task 5: Automatic Keyphrase Extraction from Scientific Articles. In Proceedings of the 5th International Workshop on Semantic Evaluation, SemEval@ACL 2010, Uppsala, Sweden, 15–16 July 2010; pp. 21–26. [Google Scholar]

- Litvak, M.; Last, M. Graph-based keyword extraction for single-document summarization. In Proceedings of the Coling 2008: Proceedings of the workshop Multi-source Multilingual Information Extraction and Summarization, COLING, Manchester, UK, 23 August 2008; pp. 17–24. [Google Scholar]

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the Advances in Neural Information Processing Systems 26 (NIPS 2013), Lake Tahoe, NV, USA, 5–10 December 2013; pp. 3111–3119. [Google Scholar]

- Bail, C.A. Combining natural language processing and network analysis to examine how advocacy organizations stimulate conversation on social media. Proc. Natl. Acad. Sci. USA 2016, 113, 11823–11828. [Google Scholar] [CrossRef] [Green Version]

- Rule, A.; Cointet, J.P.; Bearman, P.S. Lexical shifts, substantive changes, and continuity in State of the Union discourse, 1790–2014. Proc. Natl. Acad. Sci. USA 2015, 112, 10837–10844. [Google Scholar] [CrossRef] [Green Version]

- Fabo, P.R.; Plancq, C.; Poibeau, T. More than Word Cooccurrence: Exploring Support and Opposition in International Climate Negotiations with Semantic Parsing. In Proceedings of the LREC: The 10th Language Resources and Evaluation Conference, Portorož, Slovenia, 23–28 May 2016. [Google Scholar]

- Cambria, E.; Das, D.; Bandyopadhyay, S.; Feraco, A. A Practical Guide to Sentiment Analysis; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Liu, L.; Tang, L.; Dong, W.; Yao, S.; Zhou, W. An overview of topic modeling and its current applications in bioinformatics. SpringerPlus 2016, 5, 1608. [Google Scholar] [CrossRef] [Green Version]

- Murtagh, F.; Taskaya, T.; Contreras, P.; Mothe, J.; Englmeier, K. Interactive visual user interfaces: A survey. Artif. Intell. Rev. 2003, 19, 263–283. [Google Scholar] [CrossRef]

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022. [Google Scholar]

- Roberts, M.E.; Stewart, B.M.; Tingley, D. Stm: An R package for structural topic models. J. Stat. Softw. 2019, 91, 1–40. [Google Scholar] [CrossRef] [Green Version]

- Arlacchi, P. Mafia von Innen. Das Leben des Don Antonio Corleone; FISCHER: Taschenbuch, Germany, 1995. [Google Scholar]

- Galliani, C. Mein Leben für Die Mafia: Der Lebensbericht Eines Ehrbaren Anonymen Sizilianers; Rowohlt: Hamburg, Germany, 1989. [Google Scholar]

- Lara-Cabrera, R.; Gonzalez-Pardo, A.; Camacho, D. Statistical analysis of risk assessment factors and metrics to evaluate radicalisation in Twitter. Future Gener. Comput. Syst. 2019, 93, 971–978. [Google Scholar] [CrossRef]

- Gilpérez-López, I.; Torregrosa, J.; Barhamgi, M.; Camacho, D. An initial study on radicalization risk factors: Towards an assessment software tool. In Proceedings of the 2017 28th International Workshop on Database and Expert Systems Applications (DEXA), Lyon, France, 28–31 August 2017; pp. 11–16. [Google Scholar]

- Van Brunt, B.; Murphy, A.; Zedginidze, A. An exploration of the risk, protective, and mobilization factors related to violent extremism in college populations. Violence Gend. 2017, 4, 81–101. [Google Scholar] [CrossRef]

- Knight, S.; Woodward, K.; Lancaster, G.L. Violent versus nonviolent actors: An empirical study of different types of extremism. J. Threat Assess. Manag. 2017, 4, 230. [Google Scholar] [CrossRef]

- Gebru, T.; Morgenstern, J.; Vecchione, B.; Vaughan, J.W.; Wallach, H.; Daumé, H., III; Crawford, K. Datasheets for datasets. arXiv 2018, arXiv:1803.09010. [Google Scholar] [CrossRef]

- Hovy, D.; Prabhumoye, S. Five sources of bias in natural language processing. Lang. Linguist. Compass 2021, 15, e12432. [Google Scholar] [CrossRef]

- Bolukbasi, T.; Chang, K.W.; Zou, J.Y.; Saligrama, V.; Kalai, A.T. Man is to computer programmer as woman is to homemaker? debiasing word embeddings. Adv. Neural Inf. Process. Syst. 2016, 29, 4349–4357. [Google Scholar]

- Bender, E.; Friedman, B. Data Statements for NLP: Toward Mitigating System Bias and Enabling Better Science. 2019. Available online: https://aclanthology.org/Q18-1041/ (accessed on 23 November 2021).

- Mitchell, M.; Wu, S.; Zaldivar, A.; Barnes, P.; Vasserman, L.; Hutchinson, B.; Spitzer, E.; Raji, I.D.; Gebru, T. Model cards for model reporting. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; pp. 220–229. [Google Scholar]

- Crimmins, F.; Smeaton, A.F.; Dkaki, T.; Mothe, J. TetraFusion: Information discovery on the Internet. IEEE Intell. Syst. Their Appl. 1999, 14, 55–62. [Google Scholar] [CrossRef]

| Document | Criteria | Score | Keyphrase | Frequency | Text Score |

|---|---|---|---|---|---|

| Tweet_3.txt | Text score | 0.05 | killing | 1 | 0.02564 |

| … | Share of lexicon | 0.02 | brother | 1 | 0.02564 |

| Tweet_9.txt | Text score | 0.05 | fight | 1 | 0.00781 |

| … | Share of lexicon | 0.04 | paradise | 1 | 0.00781 |

| brother | 2 | 0.01562 | |||

| armed | 1 | 0.00781 | |||

| islamic state | 1 | 0.01562 | |||

| Islam_test.txt | Text score | 0.03 | army | 18 | 0.00098 |

| Share of lexicon | 0.27 | attack | 11 | 0.0006 | |

| battlefield | 1 | 0.00005 | |||

| claim | 10 | 0.00054 | |||

| extreme | 1 | 0.00005 | |||

| fight | 9 | 0.00049 | |||

| kafir | 3 | 0.00016 | |||

| libya | 1 | 0.00005 | |||

| mosul | 2 | 0.00011 | |||

| soldier | 4 | 0.00022 | |||

| support | 12 | 0.00065 | |||

| ummah | 12 | 0.00065 | |||

| victory | 6 | 0.00033 | |||

| war | 20 | 0.00108 |

| Key Words and Phrases | Category | Score | Main Trigger | Number of Matches | Total Score | Number of Words |

|---|---|---|---|---|---|---|

| In the name of allah | Neutral | |||||

| In the name of allah, the merciful, the gracious | Neutral | |||||

| there is only one god | Neutral | |||||

| achieve martyrdom | Trigger | 0.8 | ||||

| against kuffar and murtaddin | Trigger | 0.8 | ||||

| allah | Neutral | |||||

| allah akbar | Neutral | |||||

| allah willing | Neutral | |||||

| apostates of Islam | Trigger | 0.7 | ||||

| banner of the khilafah | Trigger | 0.7 | ||||

| become a martyr | Trigger | 0.8 | ||||

| black flag | Trigger | 0.8 | Main Trigger | 1 | 0.8 | 2 |

| brothers it’s time to rise | Trigger | 0.7 | ||||

| brothers rise up | Trigger | 0.7 | 2 | 1.4 | 6 | |

| caliphate | Trigger | 0.7 | ||||

| call of allah | Neutral | |||||

| call of allah and his messenger | Neutral | |||||

| claim your victory | Trigger | 0.7 | 2 | 1.4 | 6 | |

| contradict the sharia | Trigger | |||||

| dear mujahid brother | Trigger | 0.6 | ||||

| death for paradise | Trigger | 0.8 | ||||

| demolish | Trigger | 0.6 | ||||

| fight against the tyrant | Trigger | 0.7 | ||||

| forth | Trigger | 0.3 | 2 | 0.6 | 2 |

| Document | Criteria | Score | Keyphrase | Category | Weight | Frequency | Total Words | Text Score | %-of-Share |

|---|---|---|---|---|---|---|---|---|---|

| Tweet_3.txt | Text score | 0.6 | dear mujahid brother | Trigger | 0.6 | 1 | 3 | 0.6 | 7.69 |

| Share of extremist content (%) | 7.69 | ||||||||

| Tweet_9.txt | Text score | 0.7 | dear mujahid brother | Trigger | 0.6 | 1 | 3 | 0.6 | 2.34 |

| Share of extremist content (%) | 28.91 | muslim armed group | Trigger | 0.6 | 1 | 3 | 0.6 | 2.34 | |

| muslim gangs | Trigger | 0.6 | 1 | 2 | 0.6 | 1.56 | |||

| fight against the tyrant | Trigger | 0.7 | 1 | 4 | 0.7 | 3.12 | |||

| i want to come in paradise | Trigger | 0.7 | 1 | 6 | 0.7 | 4.69 | |||

| islamic caliphate | Trigger | 0.7 | 1 | 2 | 0.7 | 1.56 | |||

| i am a holy warrior of the islamic state | Trigger | 0.8 | 1 | 9 | 0.8 | 7.03 | |||

| our state is victorious | Trigger | 0.8 | 1 | 4 | 0.8 | 3.12 | |||

| the khilafa is here | Trigger | 0.8 | 1 | 4 | 0.8 | 3.12 | |||

| islam_test.txt | Text score | 0.64 | forth | Trigger | 0.3 | 7 | 7 | 2.1 | 0.04 |

| Share of extremist content (%) | 1.29 | jihad | Trigger | 0.6 | 29 | 29 | 17.4 | 0.16 | |

| jihad for allah | Trigger | 0.6 | 1 | 3 | 0.6 | 0.02 | |||

| mujahidin | Trigger | 0.6 | 38 | 38 | 22.8 | 0.21 | |||

| murtaddin | Trigger | 0.6 | 16 | 16 | 9.6 | 0.09 | |||

| islamic state | Trigger | 0.7 | 52 | 104 | 36.4 | 0.56 | |||

| kafir | Trigger | 0.7 | 3 | 3 | 2.10 | 0.02 | |||

| kuffar | Trigger | 0.7 | 8 | 8 | 5.6 | 0.04 | |||

| kufr | Trigger | 0.7 | 14 | 14 | 9.80 | 0.08 | |||

| taghut | Trigger | 0.7 | 16 | 16 | 11.2 | 0.09 | |||

| Post_Example2.txt | Text score | 0.61 | strong explosive | Trigger | 0.2 | 1 | 2 | 0.2 | 0.3 |

| Share of extremist content (%) | 7.14 | chlorix | Trigger | 0.3 | 1 | 1 | 0.3 | 0.15 | |

| chlorine | Trigger | 0.6 | 7 | 7 | 4.2 | 1.04 | |||

| nitroglycerin | Trigger | 0.6 | 7 | 7 | 4.2 | 1.04 | |||

| nitroglycerin | Trigger | 0.6 | 7 | 7 | 4.2 | 1.04 |

| Doc. Number | Dominant Topic | Topic Perc Contrib | Keywords | Text |

|---|---|---|---|---|

| 0 | 0 | 99.99% | their, allah, which, state, those, people, isl… | [allah, expel, disbeliever, among, people, scr… |

| 1 | 0 | 97.75% | their, allah, which, state, those, people, isl… | [ibraim, jafari, 09:20:46, +0000, @guenter, se… |

| 2 | 0 | 96.34% | their, allah, which, state, those, people, isl… | [guenter, seifert, 09:22:15, +0000, @ibraim, j… |

| 3 | 0 | 93.15% | their, allah, which, state, those, people, isl… | [ibraim, jafari, 09:18:35, +0000, @guenter, se… |

| 4 | 0 | 96.36% | their, allah, which, state, those, people, isl… | [ibraim, jafari, 09:15:12, +0000, participatio… |

| 5 | 0 | 96.12% | their, allah, which, state, those, people, isl… | [guenter, seifert, 09:19:45, +0000, @ibraim, j… |

| 6 | 1 | 99.72% | allah, their, which, muslim, those, would, peo… | [production, chlorine, chlorine, already, worl… |

| 7 | 0 | 98.55% | their, allah, which, state, those, people, isl… | [ibraim, jafari, 09:22:23, +0000, @guenter, se… |

| 8 | 0 | 96.73% | their, allah, which, state, those, people, isl… | [ibraim, jafari, 09:22:12, +0000, @guenter, se… |

| 9 | 0 | 92.36% | their, allah, which, state, those, people, isl… | [guenter, seifert, 09:16:12, +0000, @ibraim, j… |

| 10 | 0 | 95.67% | their, allah, which, state, those, people, isl… | [09:22:41, +0000, @ibraim, jafari, @guenter, s… |

| 11 | 3 | 99.99% | allah, their, muslim, against, american, becau… | [jihad, fight, against, tyrant, supporting, as… |

| 12 | 0 | 98.82% | their, allah, which, state, those, people, isl… | [ibraim, jafari, 09:22:52, +0000, @guenter, se… |

| 13 | 1 | 99.98% | allah, their, which, muslim, those, would, peo… | [guenter, against, kuffar, murtaddin, fighting… |

| 14 | 0 | 96.00% | their, allah, which, state, those, people, isl… | [ibraim, jafari, 09:18:04, +0000, @guenter, se… |

| 15 | 0 | 97.37% | their, allah, which, state, those, people, isl… | [guenter, seifert, 09:22:30, +0000, @ibraim, j… |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mothe, J.; Ullah, M.Z.; Okon, G.; Schweer, T.; Juršėnas, A.; Mandravickaitė, J. Instruments and Tools to Identify Radical Textual Content. Information 2022, 13, 193. https://doi.org/10.3390/info13040193

Mothe J, Ullah MZ, Okon G, Schweer T, Juršėnas A, Mandravickaitė J. Instruments and Tools to Identify Radical Textual Content. Information. 2022; 13(4):193. https://doi.org/10.3390/info13040193

Chicago/Turabian StyleMothe, Josiane, Md Zia Ullah, Guenter Okon, Thomas Schweer, Alfonsas Juršėnas, and Justina Mandravickaitė. 2022. "Instruments and Tools to Identify Radical Textual Content" Information 13, no. 4: 193. https://doi.org/10.3390/info13040193

APA StyleMothe, J., Ullah, M. Z., Okon, G., Schweer, T., Juršėnas, A., & Mandravickaitė, J. (2022). Instruments and Tools to Identify Radical Textual Content. Information, 13(4), 193. https://doi.org/10.3390/info13040193