Abstract

Automated vehicles can perceive their environment and control themselves, but how to effectively transfer the information perceived by the vehicles to human drivers through interfaces, or share the awareness of the situation, is a problem to be solved in human–machine co-driving. The four elements of the shared situation awareness (SSA) interface, namely human–machine state, context, current task status, and plan, were analyzed and proposed through an abstraction hierarchy design method to guide the output of the corresponding interface design elements. The four elements were introduced to visualize the interface elements and design the interface prototype in the scenario of “a vehicle overtaking with a dangerous intention from the left rear”, and the design schemes were experimentally evaluated. The results showed that the design with the four elements of an SSA interface could effectively improve the usability of the human–machine interface, increase the levels of human drivers’ situational awareness and prediction of dangerous intentions, and boost trust in the automatic systems, thereby providing ideas for the design of human–machine collaborative interfaces that enhance shared situational awareness in similar scenarios.

1. Introduction

As intelligent driving technologies develop, advanced automated systems acquire driving abilities that coexist with those of human drivers, and both are capable of independently controlling the vehicle to various extents [1]. Progress in artificial intelligence and cutting-edge automation technologies has also made it increasingly important to consider the awareness of both human and non-human agents [2]. SAE International (formerly the Society of Automotive Engineers) defines six levels (L0–L5) of driving automation. L2 automated driving systems can control the vehicle both laterally (steering) and longitudinally (acceleration and braking), but the whole process still requires human drivers to closely supervise the vehicle’s behavior and the surrounding environment [3]. As control of the vehicle is gradually transferred to intelligent systems, the demands on cooperation between human drivers and these systems increase. Walch et al. argue that human–machine collaborative interfaces have four basic requirements: mutual predictability, directability, shared situation representation, and trust and calibrated reliance on the system [4]. Among these, shared situation representation is the key difficulty for human–machine cooperation in intelligent cockpits: intelligent systems can obtain information about the driver by recognizing the driver’s intentions and predicting their driving behavior, but the information the driver acquires from the automated system presents a paradox of heavy burden yet little information [1]. In some cases, vehicles with a driving assistance function have been involved in traffic accidents after the function was enabled because people overtrusted an immature automated driving system [5]. In other cases, passengers in automated vehicles are confused by sudden changes of direction or deceleration. This may be because the vehicle does not inform the human, either in advance or afterward, of the road information it has obtained, leaving the human unable to understand and predict the vehicle’s behavior [6].

Therefore, research on human–machine shared situation representation in intelligent driving, especially on how automated systems can effectively share their situation awareness with human drivers, is of vital significance for the safety, credibility, and cooperative efficiency of future human–machine co-driving. However, research to guide the design of shared situation awareness (SSA) interfaces in human–machine co-driving is lacking. This paper aims to enhance the SSA of drivers and intelligent systems in driving scenarios. Using abstraction hierarchy analysis to decompose the design goal layer by layer, we propose generic element types for shared situation representation interfaces to guide the design of human–machine collaborative interfaces, and we apply them to a design case.

2. Related Work

2.1. Situation Awareness

Early research on situation awareness (SA) focused mainly on individuals [7]. SA is an essential concept in ergonomic studies of individual tasks and is defined as the ability to perceive, understand, and predict elements in the environment within a given time and space [8]. Many studies have demonstrated the importance of SA in individual task operations and its high correlation with performance [9,10,11].

During automated driving, drivers are likely to take their eyes off the road and are often unable to monitor the driving situation [12]. They need to actively scan relevant areas of the driving scenarios to maintain SA acquisition and an adequate SA level [13,14].

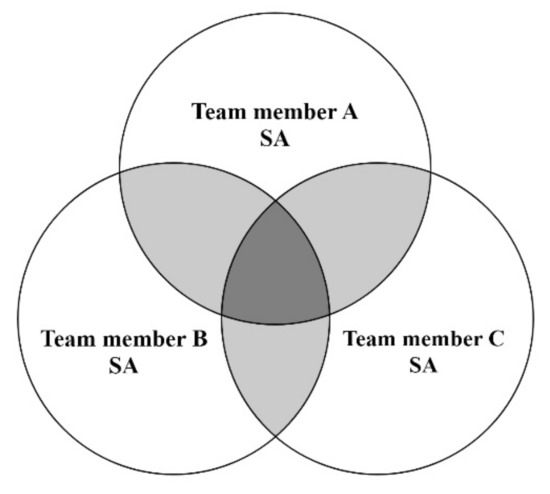

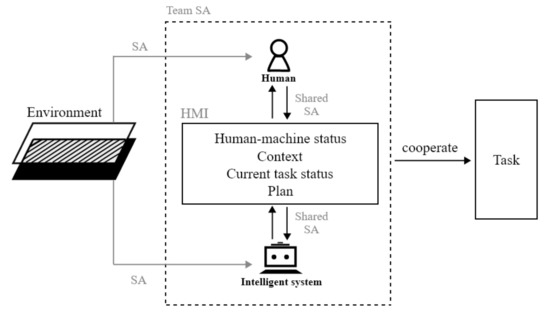

As SA research has deepened, it has been extended from the individual level to the team level, where it refers to the shared understanding of a situation by team members at a given point in time [15] (Figure 1). Team SA is regarded as an important factor that influences team output and can be used both to evaluate and to improve team performance [16].

Figure 1.

Team situation awareness model from Endsley.

2.2. Shared Situation Awareness

SSA is critical for teams to cooperate efficiently and adapt to dynamic challenges when team members are working together to complete a task, and this is true in various fields that require cooperation to complete activities, such as railway operations, nuclear power plant management, military teamwork, and crisis management [17].

SSA reflects the extent to which all team members accurately understand the information required to achieve the goals and sub-goals of their joint mission. Studies have shown that drivers engaged in non-driving-related tasks during automated driving need at least seven seconds to acquire sufficient SA [18]. Once the system issues an alarm, the driver must take a series of actions: shifting attention from non-driving-related tasks to the traffic environment, acquiring SA, making a decision, and acting. Some of these actions must be handled simultaneously, which significantly increases the driver’s burden [19]. As automation capabilities grow, the automated system can perform a wider range of driving tasks, and drivers naturally pay less attention to driving. The SA they obtain is then insufficient, leaving them unable to monitor the vehicle and traffic environment or to deal with emergencies properly. Their ability to understand the driving situation is weakened, which affects driving safety [20,21]. Therefore, sharing SA between the driver and the intelligent system, especially sharing the system’s SA with the human, is of great importance: it helps the driver understand the vehicle’s decisions, predict its behavior, and make early judgments [22].

2.3. Interfaces Design of Human–Machine Co-Driving

It has been established that situation awareness shared between humans and machines is crucial for safe driving, especially in potentially dangerous emergency scenarios. However, what SA the vehicle or intelligent driving system should share with human drivers, and through which channel or method, remains an open question. Kirti Mahajan reported that a warning sound issued by the system could help drivers realize the danger and respond to a takeover request more quickly [23]. Cristy Ho et al. demonstrated that tactile vibration prompts help redirect the driver’s attention in the right direction [24]. It has also been argued that each stage of automated driving should have an appropriate interface to ensure that the driver can establish accurate SA [25]. A multi-channel design combining vision, hearing, and touch can therefore help the driver obtain the SA of the intelligent driving system. Nevertheless, vision remains the main channel through which drivers obtain information in driving scenarios [26], so it is crucial to study how the human–machine interface can help human drivers obtain the SA of an intelligent vehicle.

Chao Wang’s research showed that human–system cooperation in interface design and the presentation of predictive information could effectively improve the experience and comfort of automated driving [27]. Guo et al. formulated three principles for Human–Machine Interface (HMI) design to improve cooperation between drivers and the system: “displaying the driving environment”, “displaying the intention and available alternatives”, and “providing users with a way to select alternatives” [28]. Zimmermann et al. proposed that in human–machine cooperation, the human–machine interface should display and infer the intentions of users and machines and convey dynamic task allocation within the system [29]. Kraft et al. proposed four principles and applied them to the design of a human–machine interface for collaborative driving: “displaying the driving environment”, “explaining the intentions of the partners”, “suggesting actions”, and “successful feedback” [30]. Many studies have examined how to promote human–machine cooperation, but studies on how to improve HMI design for shared situation awareness between humans and intelligent systems during driving are still lacking.

Based on the above SSA and HMI design theories, we put forward some design factors of shared situation awareness HMI in automated driving of SAE L2–L3 and provide direct suggestions for rapid HMI design practice in related scenarios.

3. Design Methods

3.1. Abstraction Hierarchy Analysis

The Abstraction Hierarchy (AH) is an analysis tool used to decompose work domains and reveal their hierarchical structure. It is the main method of work domain analysis and is widely applied in the design of complex interfaces in human–machine interaction. The AH describes a work domain at five levels of abstraction (Table 1): functional purposes, values and priority measures, purpose-related functions, object-related processes, and physical objects. Adjacent levels are linked by means–ends relationships. The purpose of the AH is to decompose a system into subsystems, each subsystem into functional units, each unit into subsets, and each subset into components, so as to analyze the system’s concrete functional architecture and physical form. AH analysis draws on aspects such as the environment, technology, and human capabilities, and relies on experienced domain experts [31].
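To make the level structure concrete, the sketch below represents an AH as nodes on the five levels named above, joined by means–ends links between adjacent levels: following a link downward answers “how” a node is achieved, and following it upward answers “why” the node exists. It is a minimal illustrative data structure under our own assumptions; the names `Node`, `link`, `how`, and `why` are hypothetical and do not belong to any AH tool.

```python
from dataclasses import dataclass, field

# The five AH levels, from most abstract (index 0) to most concrete (index 4).
LEVELS = (
    "functional purposes",
    "values and priority measures",
    "purpose-related functions",
    "object-related processes",
    "physical objects",
)


@dataclass(eq=False)  # identity equality avoids recursing through the links
class Node:
    name: str
    level: int  # index into LEVELS
    means: list = field(default_factory=list)  # linked nodes one level down ("how")
    ends: list = field(default_factory=list)   # linked nodes one level up ("why")

    def __post_init__(self):
        if not 0 <= self.level < len(LEVELS):
            raise ValueError("level must index one of the five AH levels")


def link(end: Node, mean: Node) -> None:
    """Add a means-ends link; `mean` must sit exactly one level below `end`."""
    if mean.level != end.level + 1:
        raise ValueError("means-ends links join adjacent levels only")
    end.means.append(mean)
    mean.ends.append(end)


def how(node: Node) -> list:
    """Names of the nodes that realize `node` (one level down)."""
    return [n.name for n in node.means]


def why(node: Node) -> list:
    """Names of the nodes that `node` serves (one level up)."""
    return [n.name for n in node.ends]


# Example nodes paraphrasing the SSA analysis of Section 3.2 (labels are illustrative).
purpose = Node("harmonious human-machine co-driving", 0)
value = Node("enhance driver SA", 1)
link(purpose, value)
print(how(purpose), why(value))
```

Restricting links to adjacent levels is a deliberate simplification that mirrors the layer-by-layer decomposition described above; a richer model could allow several ends per means, which the lists already support.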

Table 1.

Descriptions of abstraction hierarchy.

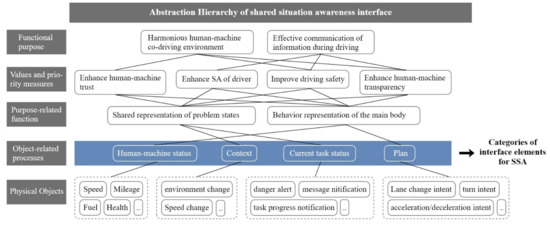

3.2. The AH Analysis of SSA Human–Machine Interaction Interfaces

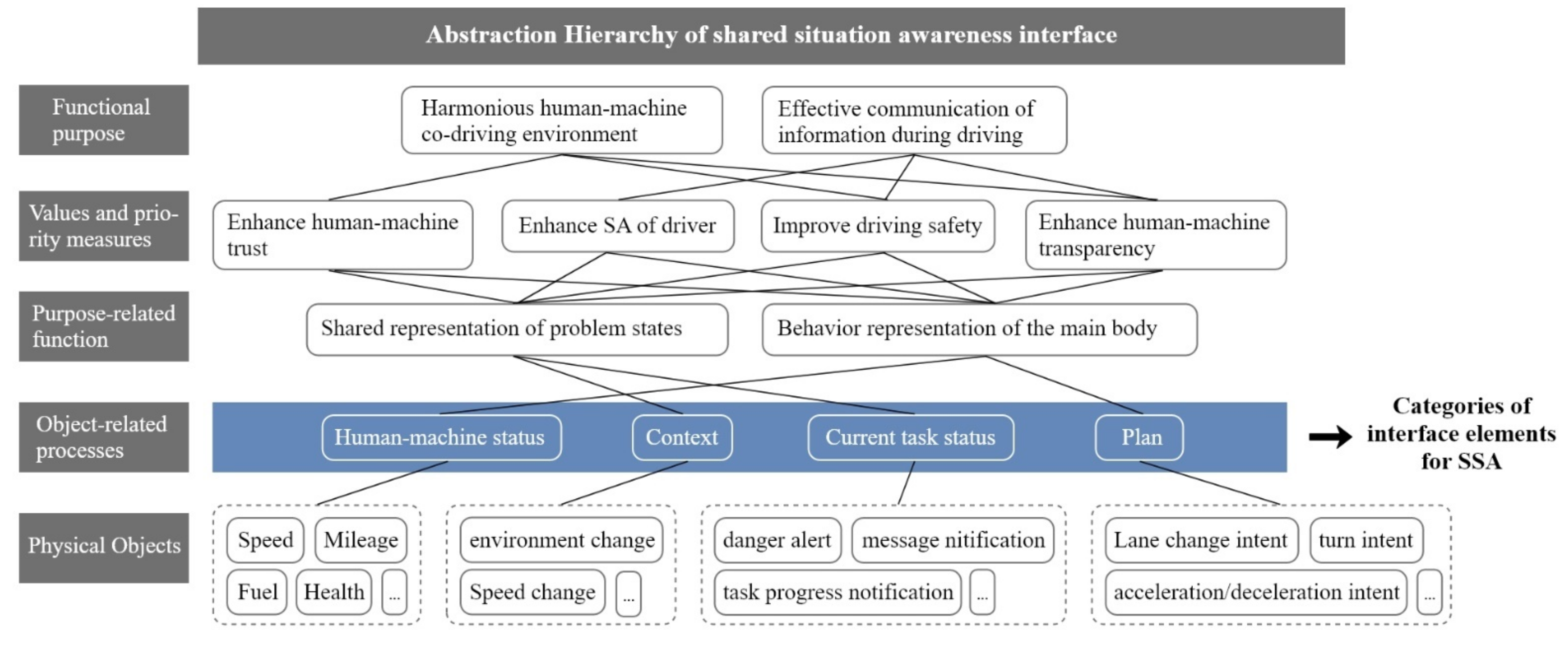

The AH approach has been widely used in designing complex systems in fields such as aviation and shipbuilding [31] but is less commonly applied to interface design for increasingly complex intelligent cars. Using the AH method, this paper proposes four types of information that an SSA interface should include (Figure 2) to facilitate more efficient communication and cooperation between human drivers and intelligent vehicles. The whole AH analysis was conducted jointly by five domain experts and scholars, who discussed extensively how to organize the five AH levels when the design goal is to enhance shared situation awareness. After reasoning, analysis, and induction, the AH results were summarized as shown in Figure 2. The first level (functional purposes) is to achieve an effective communication mechanism and a harmonious human–machine co-driving environment. The second level (values and priority measures) comprises enhancing the driver’s SA of the environment and the transparency of the information passed from the system to the driver, thereby boosting trust in human–machine cooperation [32] and improving driving safety [33]. The third level describes purpose-related functions (i.e., general functions) and realizes the values of the previous level through shared representation of the problem or task state and of the activities of the actors in the cooperative team. The fourth level describes object-related processes, obtained by further reasoning about, analyzing, and generalizing the general functions of the previous level. This yields four types of information (human–machine state, context, current task status, and plan), which help the system convey its view of the situation to the driver and help the driver understand the rationale behind the system’s decisions. The final level contains specific representation elements, such as speed, fuel level, environmental changes, hazard warnings, and lane change intentions. Which of these elements are presented on the interface must be analyzed and selected according to the specific needs of each scenario.

Figure 2.

Abstraction Hierarchy of shared situation awareness interface.

3.3. Four Factors of SSA Interface

Through the above analysis, this paper proposes four generic types of interface elements for shared situation representation in human–machine collaborative interfaces: human–machine state, context, current task status, and plan (or motive). The human–machine state describes the current level, attributes, or operating state of one or both parties to SSA, such as whether the car is in automated or manual driving mode, its fuel level, its speed, and other driving states. The context has both temporal and spatial dimensions: the temporal context describes changes in the in-vehicle intelligent system over a period of time, and the spatial context describes the relationship between the car and the surrounding vehicles. The current task status relates to specific tasks and shows their progress; for example, displaying “searching” while the search function runs makes the system’s operation transparent to the user. The plan shows why the system is about to make the vehicle perform a specific behavior, enhancing the transparency of information in human–machine communication.
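The four factor types can be treated as tags on concrete interface elements, which makes it easy to check which elements a given interface variant would display. The sketch below is illustrative only: the enum and function names are hypothetical, and the example elements are paraphrased from the descriptions above rather than taken from a published element catalogue.

```python
from enum import Enum


class SSAFactor(Enum):
    HUMAN_MACHINE_STATE = "human-machine state"
    CONTEXT = "context"
    CURRENT_TASK_STATUS = "current task status"
    PLAN = "plan"


# Example elements from the text, each tagged with the factor it illustrates.
ELEMENTS = {
    "automated/manual driving mode": SSAFactor.HUMAN_MACHINE_STATE,
    "fuel level": SSAFactor.HUMAN_MACHINE_STATE,
    "speed": SSAFactor.HUMAN_MACHINE_STATE,
    "recent changes in the in-vehicle system (temporal context)": SSAFactor.CONTEXT,
    "positions of surrounding vehicles (spatial context)": SSAFactor.CONTEXT,
    '"searching" progress indicator': SSAFactor.CURRENT_TASK_STATUS,
    "reason for an upcoming maneuver": SSAFactor.PLAN,
}


def elements_for(factors):
    """Return, in table order, the example elements whose factor is in `factors`."""
    wanted = set(factors)
    return [name for name, factor in ELEMENTS.items() if factor in wanted]


print(elements_for({SSAFactor.HUMAN_MACHINE_STATE, SSAFactor.CONTEXT}))
```

Passing only the state and context factors returns the five state and context elements, which corresponds to an interface limited to those two factor types.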

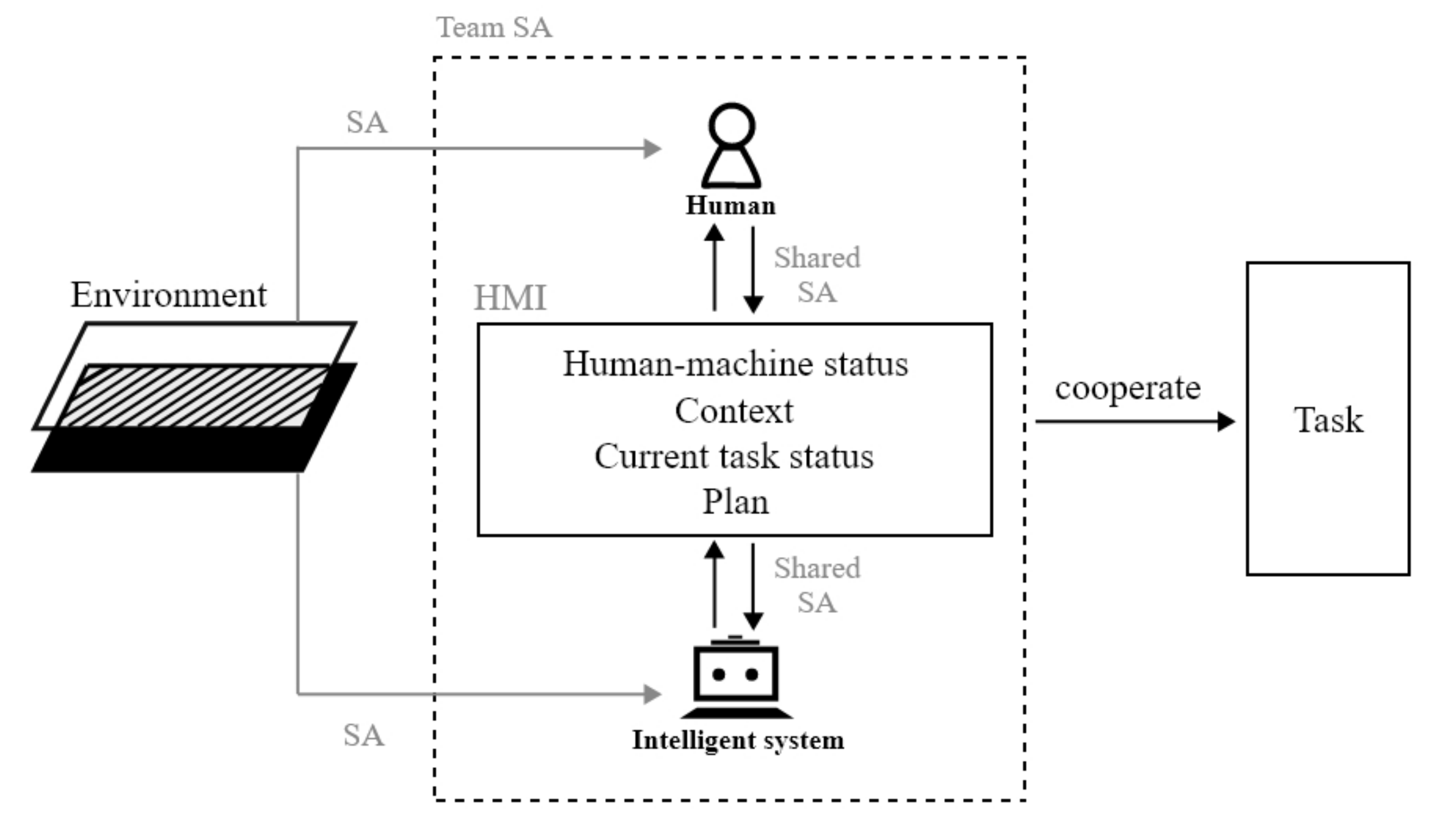

A driving scenario contains four modules: human, intelligent system, environment, and task. The human and the intelligent system communicate through the human–machine interface, which contains the four factors of SSA when completing a task (Figure 3).

Figure 3.

Abstraction Hierarchy of shared situation awareness interface.

4. Simulation Experiment

4.1. Experimental Subjects

A total of 16 subjects (8 males and 8 females) aged from 25 to 40 were selected (mean = 32.1, SD = 4.61), all of whom had 3–13 years of driving experience (mean = 7.3, SD = 3.11), with normal visual acuity.

4.2. Experimental Design

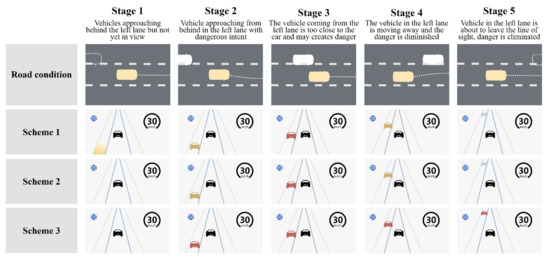

A within-subjects design was adopted. The independent variable was the number of types of SSA human–machine collaborative interface elements included in the interface, giving three schemes (Table 2). Scheme 1 contained all four element types; Scheme 2 contained three (human–machine state, context, and current task status); and Scheme 3 contained only two (human–machine state and context).
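The three schemes are nested sets of factors, and in a within-subjects design every subject sees all of them. The sketch below encodes the schemes as listed above and draws an independent random presentation order for each subject, in line with the random ordering mentioned in Section 4.5. The per-subject shuffle, the fixed seed, and the function name are our assumptions; the exact randomization procedure is not reported.

```python
import random

# Factors shown in each scheme, as listed in the text (Table 2).
SCHEMES = {
    1: {"human-machine state", "context", "current task status", "plan"},
    2: {"human-machine state", "context", "current task status"},
    3: {"human-machine state", "context"},
}


def presentation_orders(n_subjects, seed=0):
    """Within-subjects: every subject gets all three schemes, each in a random order."""
    rng = random.Random(seed)  # seeded so the assignment can be reproduced
    orders = []
    for _ in range(n_subjects):
        order = sorted(SCHEMES)
        rng.shuffle(order)
        orders.append(order)
    return orders


for subject, order in enumerate(presentation_orders(16), start=1):
    print(subject, order)
```

A simple shuffle does not guarantee that each of the six possible orders occurs equally often; counterbalancing schemes such as Latin squares are a common alternative when order effects are a concern.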

Table 2.

Comparison of experimental schemes.

4.3. Selection of Experimental Scenarios and Requirement Analysis of SSA Information

The design and experiment were carried out by selecting typical driving scenarios to verify the effectiveness of the HMI design using the four elements of an SSA interface proposed above.

A potentially dangerous scenario of a vehicle coming from the rear was selected in this experiment. In this scenario, the system needs to share the dangerous intentions of the vehicle from the rear that it senses and the safety status of the vehicle with the driver in time to help the driver react correctly to avoid risks and improve driving safety.

According to the aforementioned four types of interface elements for shared situation representation and taking into account the driving environment, road characteristics, and driving habits of the scenario with a vehicle approaching from the rear, the information requirements for the scenario were obtained, as shown in Table 3.

Table 3.

Descriptions of Levels of Abstraction.

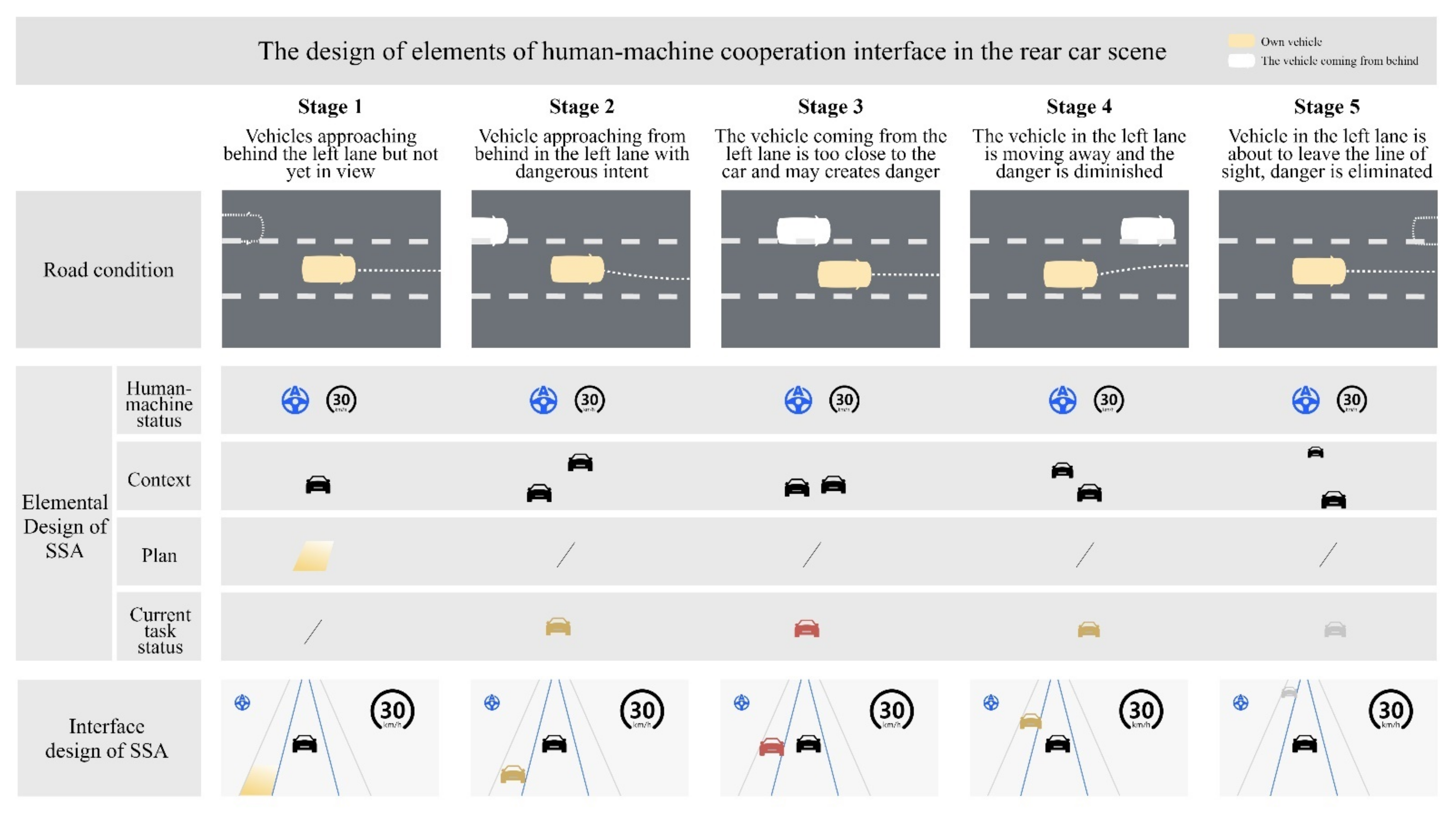

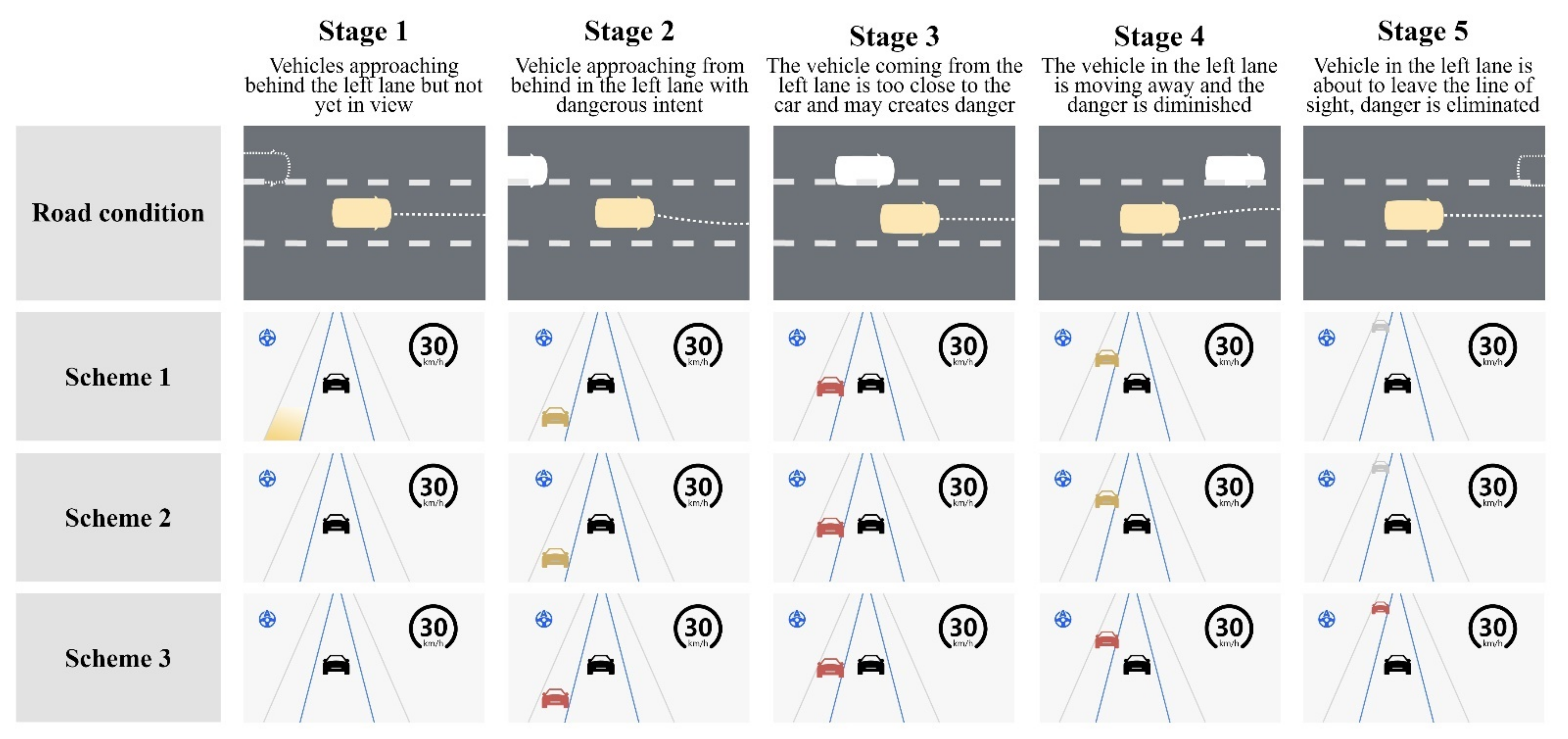

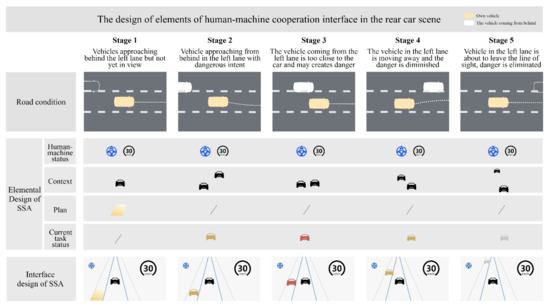

The overtaking process comprises five stages in chronological order (Figure 4): (1) the vehicle approaching from the rear in the left lane is about to enter the field of view; (2) the vehicle with a dangerous intention approaches from the rear in the left lane; (3) the vehicle in the left lane is abreast of the subject’s vehicle; (4) the vehicle in the left lane overtakes the subject’s vehicle and gradually drives away; and (5) the vehicle in the left lane is about to leave the field of view. Interface elements were designed and visualized for each of the five stages based on the information requirements (not every stage includes all four types of information).

Figure 4.

The design of elements of human–machine cooperation interface in the rear car scene.

- Human–machine state: the automated driving status is shown by a steering-wheel icon (blue means the automated driving function is on); the current speed is shown by a conventional circular icon containing a number that changes with the actual speed;

- Context: the car approaching from the rear is shown by two car icons whose relative positions are synchronized with the experiment;

- Plan (first stage only): a potentially dangerous vehicle about to appear from the left rear is indicated by a gradient color block in the left rear lane on the interface;

- Current task status: the driver’s current level of danger is shown by the color of the other vehicle on the interface, with gray, yellow, and red indicating no danger, mild danger, and high danger, respectively.
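The display rules in the list above can be written as a small lookup, which is one way to keep the color coding of the current task status and the stage-1-only plan cue consistent across stages. This is a minimal sketch with hypothetical names; only the rules stated in the list are encoded, and because the danger level of each stage is not fully specified here, it is left as an input.

```python
from enum import IntEnum


class Danger(IntEnum):
    NONE = 0
    MILD = 1
    HIGH = 2


# Current task status: color of the other vehicle's icon for each danger level.
DANGER_COLOR = {Danger.NONE: "gray", Danger.MILD: "yellow", Danger.HIGH: "red"}

N_STAGES = 5  # five chronological stages of the overtaking scenario


def other_vehicle_color(level: Danger) -> str:
    return DANGER_COLOR[Danger(level)]


def show_plan_cue(stage: int) -> bool:
    """Plan: the gradient block in the left-rear lane is shown in stage 1 only."""
    if not 1 <= stage <= N_STAGES:
        raise ValueError(f"stage must be between 1 and {N_STAGES}")
    return stage == 1


def stage_elements(stage: int, level: Danger) -> dict:
    """Stage-dependent elements; the caller supplies the danger level for the stage."""
    return {"plan_cue": show_plan_cue(stage), "other_vehicle_color": other_vehicle_color(level)}


print(stage_elements(1, Danger.NONE))
```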

Combining these design elements in a reasonable layout yields a prototype interface for each stage. A prototype interface was designed for each scheme (Figure 5) for use in the subsequent evaluation experiment.

Figure 5.

Low-fidelity prototype of the testing interface.

4.4. Experimental Environment

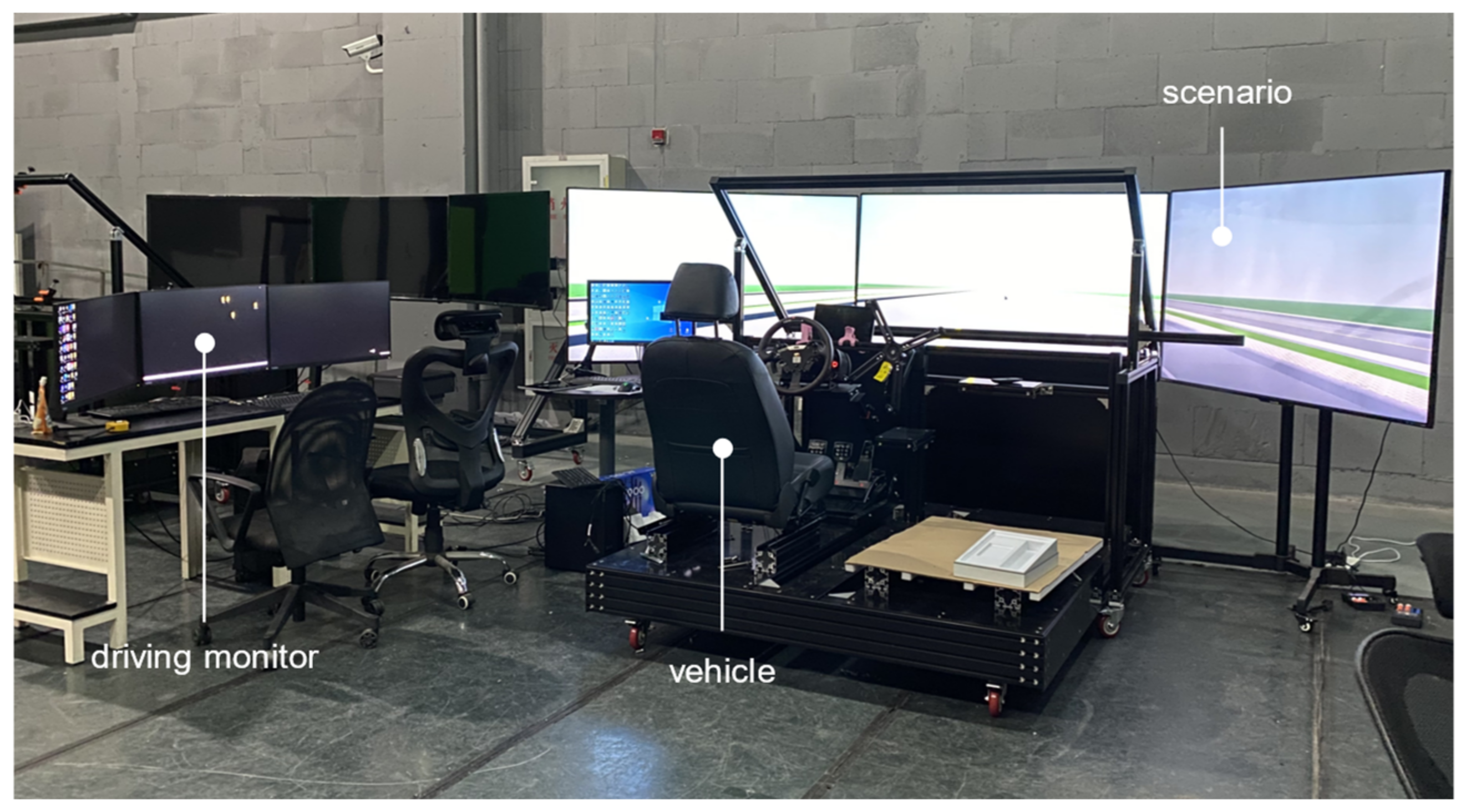

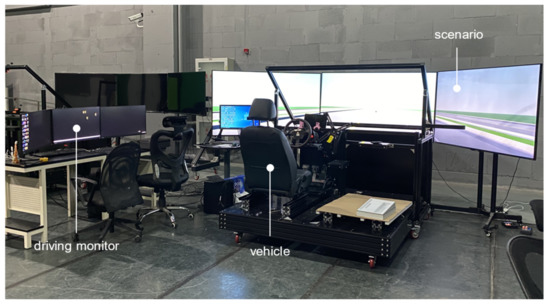

The experiment used a self-developed driving simulation system with three modules: vehicle, scenario, and driving monitoring and analysis (Figure 6). The vehicle module is the driving test bench, consisting of accelerator and brake pedals, a seat, a steering wheel, and a rearview mirror. The scenario module builds simulation scenarios in Unity to simulate factors of the real driving environment such as weather, road conditions, and surrounding vehicles. The driving monitoring and analysis module monitors the user’s head and foot movements and records driving data. Eye trackers were used to capture dynamic eye movement data.

Figure 6.

Experimental site.

4.5. Experimental Process

Before the experiment, the subjects completed a basic information form and an informed consent form and were briefed on the purpose and task of the experiment and on simulator driving. Each subject then drove safely at 30 km/h for 2 min to adapt to the simulator and to practice driving safely without a task. The subjects then put on the eye tracker and completed calibration, and the experimenter announced the tasks. After a beep, automated driving mode was engaged while the subject was driving smoothly in the middle lane. The subjects observed the vehicle approaching from the rear in the left lane through the rearview mirror and the central control interface (i.e., the interfaces designed for the three experimental schemes, presented in random order) and answered the experimenter’s questions during the drive. The task ended when the rear vehicle had overtaken the subject’s car and driven out of the field of view; the subjects were then guided to complete the subjective evaluation scales and were interviewed.

4.6. Experimental Evaluation Method

The subjective evaluation scales of the subjects and objective data analysis were adopted in the experiment, coupled with post-experiment interviews. The subjective scales included the Situation Awareness Global Assessment Technique (SAGAT) [34] scale used to test the level of SA and the After-Scenario Questionnaire (ASQ) [35] used to assess the usability of a scheme. Objective data included task response time and task accuracy, which were used to test task performance.

4.6.1. SAGAT

The SAGAT evaluates the level of SA in three dimensions: perception, comprehension, and prediction [34]. Subjects answered SA questions with objectively correct answers, such as how many times the color of the car in the adjacent lane on the screen had changed during the preceding drive. Each correct answer earned one point; incorrect answers earned none.
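The one-point-per-correct-answer rule leads naturally to normalized scores. The sketch below assumes that normalization means the proportion of correct answers, computed per dimension and overall, so every score lies between 0 and 1; the paper does not state its exact normalization, so this is an assumption, and the function name is hypothetical.

```python
def sagat_scores(answers):
    """answers: list of (dimension, is_correct) pairs for one subject.

    Assumption: a normalized score is the share of correct answers (0 to 1).
    Returns per-dimension scores plus an "overall" score across all questions.
    """
    totals, correct = {}, {}
    for dimension, is_correct in answers:
        totals[dimension] = totals.get(dimension, 0) + 1
        correct[dimension] = correct.get(dimension, 0) + int(is_correct)  # 1 point if correct
    scores = {d: correct[d] / totals[d] for d in totals}
    scores["overall"] = sum(correct.values()) / sum(totals.values())
    return scores


print(sagat_scores([("perception", True), ("perception", False), ("prediction", True)]))
```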

4.6.2. After-Scenario Questionnaire

The ASQ is administered after each task and can be completed quickly [36]. Its three questions address three dimensions of the usability of the central control interface: ease of task completion, time required to complete tasks, and satisfaction with support information. Each was rated on a 7-point graphic scale from 1 to 7, with higher scores indicating better performance.
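A common way to summarize the ASQ is to average its three item ratings. The sketch below assumes that convention together with the orientation used in this study (higher is better) and rejects ratings outside the 1 to 7 range; the function name and item labels are hypothetical shorthand for the three dimensions above.

```python
from statistics import mean

ASQ_ITEMS = (
    "ease of task completion",
    "time required to complete tasks",
    "satisfaction with support information",
)


def asq_score(ratings):
    """ratings: dict mapping each ASQ item to an integer from 1 to 7 (higher = better)."""
    if set(ratings) != set(ASQ_ITEMS):
        raise ValueError("expected exactly the three ASQ items")
    for item, rating in ratings.items():
        if not (isinstance(rating, int) and 1 <= rating <= 7):
            raise ValueError(f"{item}: rating must be an integer from 1 to 7")
    return mean(ratings[item] for item in ASQ_ITEMS)  # overall score = item average


print(asq_score(dict(zip(ASQ_ITEMS, (6, 7, 5)))))
```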

4.6.3. Task Response Time and Task Accuracy

Task response time is the time from the start of the task until the subject first notices the car approaching from behind, recorded when the subject pressed a button on the steering wheel. Task accuracy refers to whether the subject correctly judged, in stage 2, whether the vehicle was in potential danger and at what danger level (low, medium, or high). Accuracy data were collected with a think-aloud procedure: when the task reached stage 2, the experimenter asked the subject questions, and the subject answered immediately. In stage 2, the vehicle is in fact in potential danger, but not at the highest level. A subject who correctly judged the stage-2 danger situation was scored as 100% accurate for the task; otherwise, accuracy was 0.

4.6.4. Quick Interview

After all tasks, the subjects were briefly interviewed. They were asked whether they had noticed differences between the schemes, how they viewed the three schemes, and which scheme they thought was best and why. They were also asked how much they subjectively trusted the information prompts on the interface. The interview aimed to capture subjective impressions beyond the scale assessments, as a supplement to and possible explanation of those assessments.

5. Results

A one-way analysis of variance (ANOVA) with post hoc pairwise comparisons (Tukey’s Honestly Significant Difference) was conducted (alpha = 0.05, effect size f = 0.46), with scheme as the within-subject factor. The results are presented in the following subsections.
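To show where the degrees of freedom reported below come from, the sketch computes a one-way ANOVA F statistic from first principles: F is the between-groups mean square divided by the within-groups mean square, with k − 1 and N − k degrees of freedom for k groups and N observations. With three schemes and 16 observations per scheme (N = 48), this gives df = (2, 45). These df are those of a between-groups analysis; a repeated-measures ANOVA on 16 subjects and 3 schemes would instead use (k − 1)(n − 1) = 30 error degrees of freedom. The function is a hypothetical helper for illustration, not the software used in the study.

```python
def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on independent groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups and more observations than groups")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-groups sum of squares: spread of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups sum of squares: spread of observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


print(one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

The p value then follows from the F distribution with these degrees of freedom, for example via `scipy.stats.f.sf(F, df_between, df_within)`; `scipy.stats.f_oneway` performs the whole between-groups test in one call.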

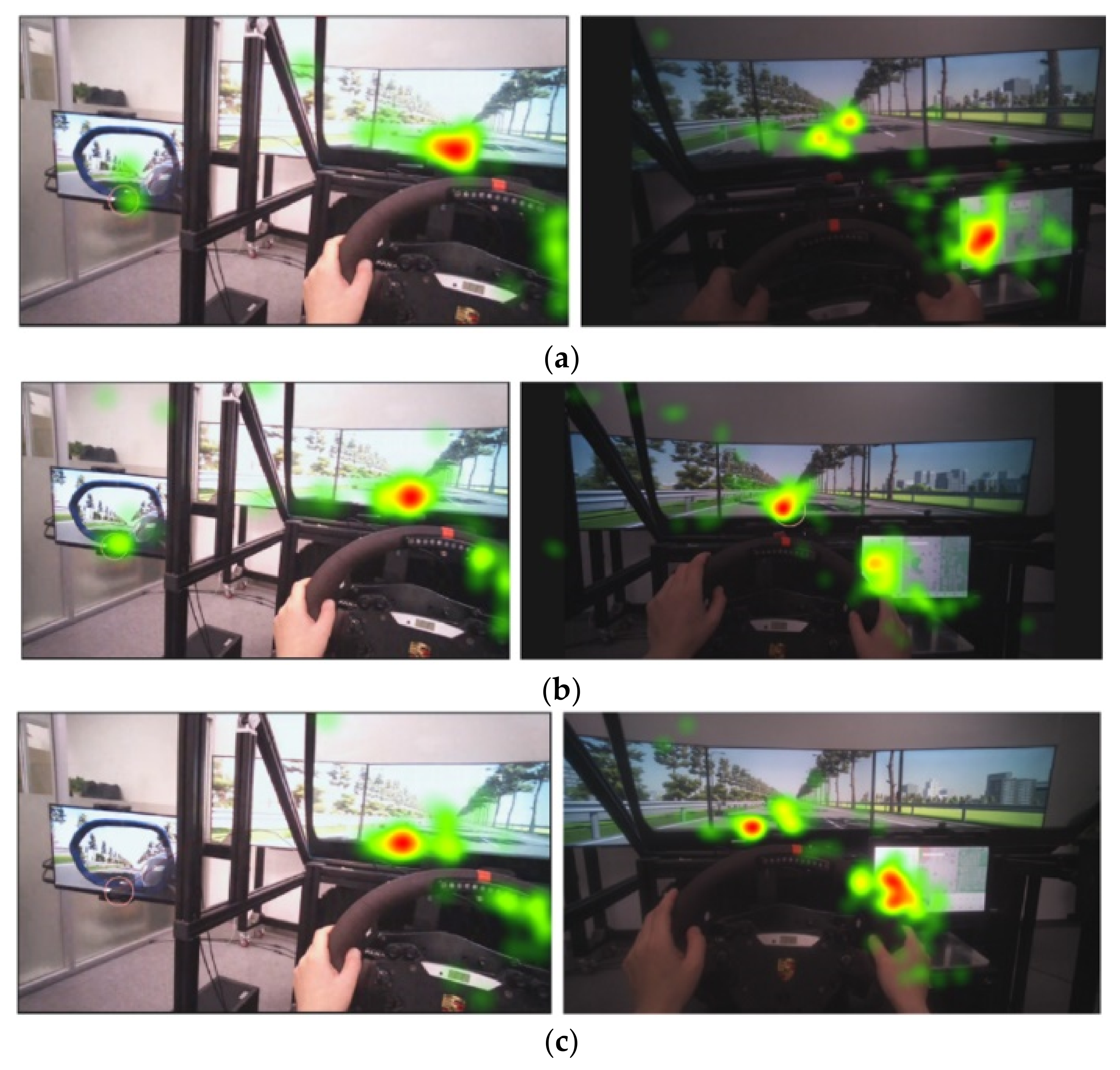

5.1. Eye Movement

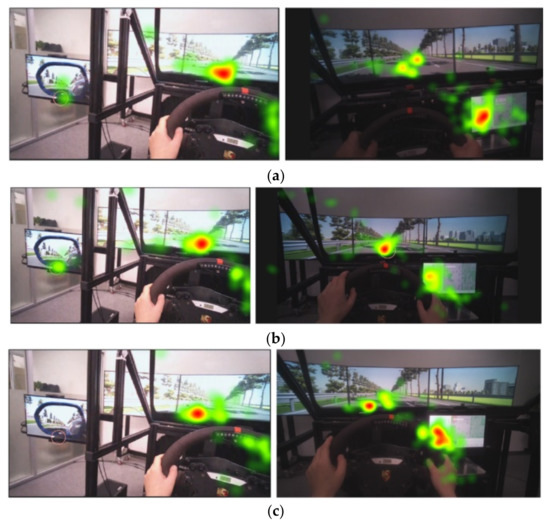

The eye movements of all subjects during the task were superimposed. The eye-movement heat maps of the three schemes (Figure 7) show that gaze in the central control area was more concentrated for Scheme 3 than for Scheme 1, and that gaze dwelled longer in this area for both of these schemes than for Scheme 2. Specifically, Scheme 1 produced several scattered weak hot zones near the central control area; the hot zones near the central control area in Scheme 2 were too weak to form a concentrated red area; and the hot zones in Scheme 3 were concentrated where the car appeared on the screen, indicating that the red car on the Scheme 3 interface attracted the subjects’ attention most strongly. In the rearview mirror area, Schemes 1 and 2 produced hot zones while Scheme 3 did not, suggesting that subjects using Schemes 1 and 2 observed the vehicles approaching from the rear in the real environment more comprehensively and perceived the environment relatively better.
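Superimposing eye movements across subjects amounts to accumulating fixation data over a common image plane. The sketch below sums fixation durations into fixed-size grid cells. It assumes that fixations are available as (x, y, duration) in one shared pixel frame, which is our assumption, and it omits the smoothing (often Gaussian) that eye-tracking software commonly applies before coloring a heat map. The function names are hypothetical.

```python
def gaze_heatmap(fixations, width, height, cell):
    """Sum fixation durations from all subjects into a grid of cell x cell pixel bins.

    fixations: iterable of (x, y, duration_ms) in one shared pixel frame.
    Returns a list of rows; grid[r][c] is the total fixation duration in that bin.
    """
    cols = -(-width // cell)   # ceiling division keeps partial bins at the edges
    rows = -(-height // cell)
    grid = [[0.0] * cols for _ in range(rows)]
    for x, y, duration in fixations:
        if 0 <= x < width and 0 <= y < height:  # ignore gaze outside the frame
            grid[int(y) // cell][int(x) // cell] += duration
    return grid


def hottest_cell(grid):
    """(row, col) of the bin with the largest accumulated duration."""
    cells = ((r, c) for r in range(len(grid)) for c in range(len(grid[0])))
    return max(cells, key=lambda rc: grid[rc[0]][rc[1]])
```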

Figure 7.

Superimposed schematic diagram of the subject’s eye-movement heat zone: (a) Scheme 1; (b) Scheme 2; (c) Scheme 3.

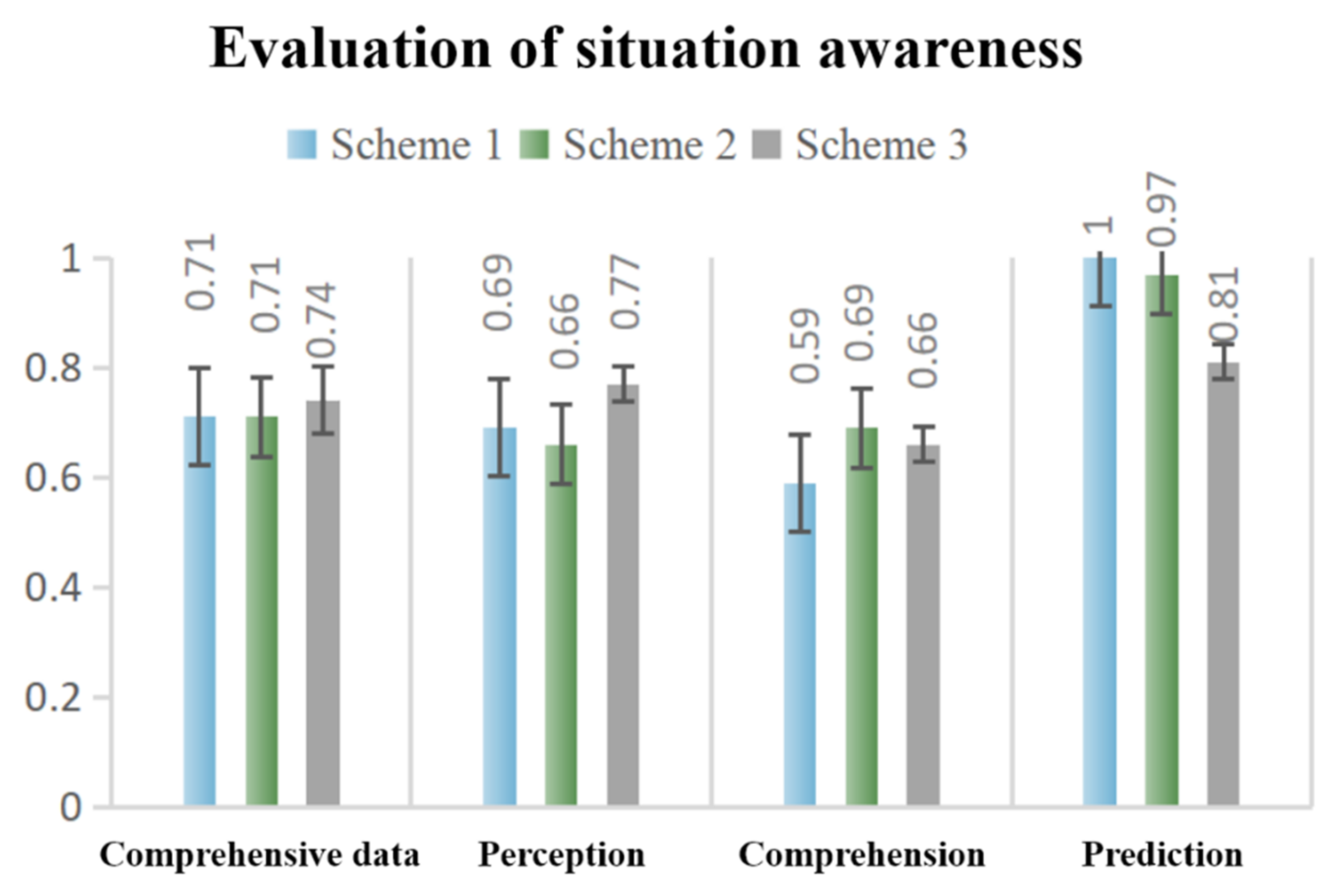

5.2. Situation Awareness

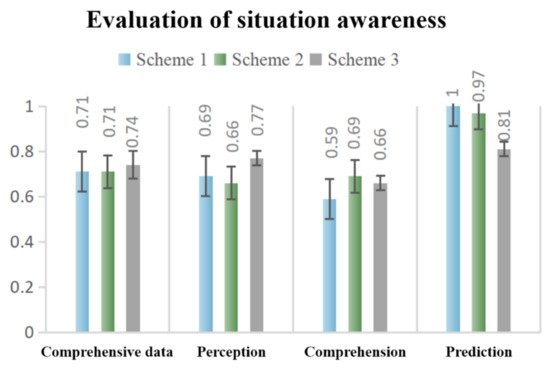

The normalized SAGAT scores (Figure 8) showed that Schemes 1 and 2 had the same overall SA score, 0.03 points lower than that of Scheme 3 (0.74 ± 0.06). In prediction, however, Scheme 1 scored 0.19 points higher than Scheme 3, and Scheme 2 scored 0.16 points higher than Scheme 3, indicating that subjects predicted the danger behind them better when using Scheme 1; prediction has a major impact on driving safety in dangerous situations. Scheme 1 nonetheless leaves room for improvement in perception and comprehension, possibly because of the large number of elements and color changes on the interface.

Figure 8.

Situation awareness assessment results.

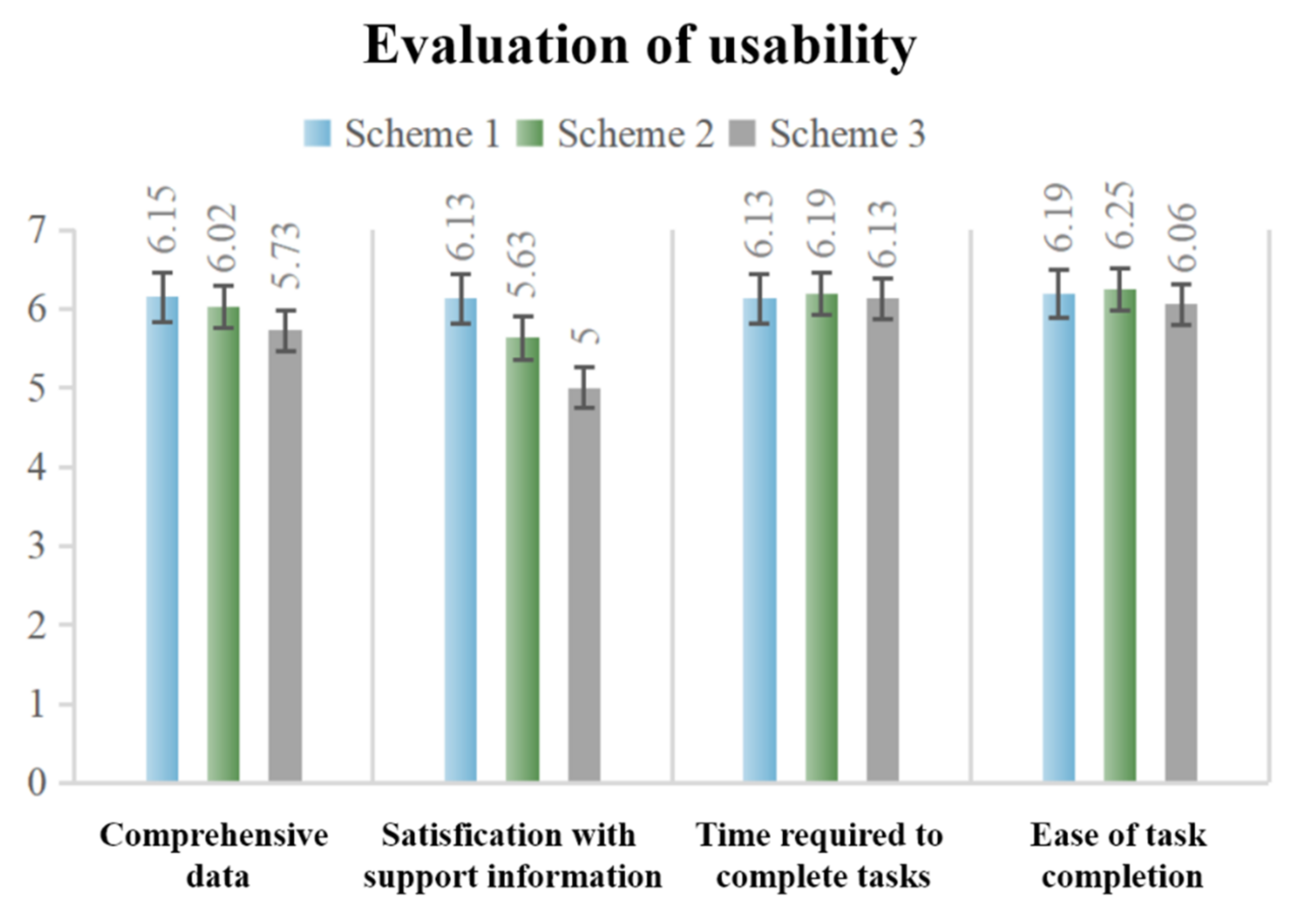

5.3. Usability

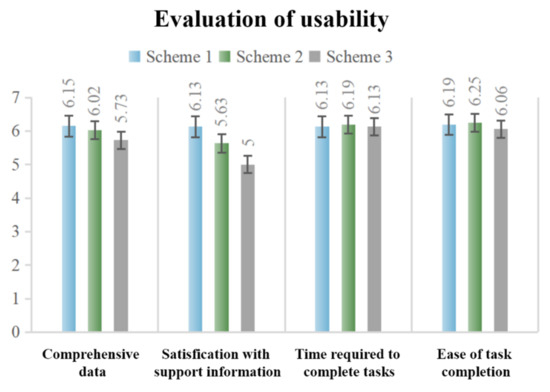

ASQ scores reflect the usability of the design schemes. As shown in Figure 9, Scheme 1 achieved the highest overall ASQ score, followed by Scheme 2 and then Scheme 3. Scores for satisfaction with support information varied considerably across the three schemes, with a largest gap of 1.13 points. Overall, Scheme 1 scored evenly across the indicators, with an overall score of 6.15 ± 0.31 (out of 7), a relatively high level, indicating that Scheme 1 outperforms the other two schemes in usability. In the one-way ANOVA, only the dimension “Satisfaction with support information” differed significantly among the three schemes (F(2, 45) = 4.442, p = 0.017 < 0.05, effect size f = 0.46). Post hoc tests (Tukey’s Honestly Significant Difference) revealed a significant difference between Scheme 1 and Scheme 3 (p = 0.013 < 0.05) and no significant difference between the other pairs of schemes.

Figure 9.

Usability Assessment Results.

Correlation analysis was conducted between the SAGAT and ASQ data (including each subdimension). The results (Table 4) showed a moderate positive correlation (r = 0.488, p < 0.001) between “Prediction” in SA and “Satisfaction with support information” in usability, which suggests that well-designed support information may improve human drivers’ situation awareness and prediction. No other subdimensions of the SAGAT and ASQ were correlated.
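For reference, Pearson’s r between two paired samples is their covariance divided by the product of their standard deviations, and the test of r = 0 uses t = r√(n − 2)/√(1 − r²) with n − 2 degrees of freedom. The helpers below compute both directly; they are illustrative sketches with hypothetical names and not necessarily the tool used to produce Table 4.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least two values")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))  # co-variation
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for testing r = 0 with n paired observations (df = n - 2)."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)
```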

Table 4.

The results of the analysis of Correlation.

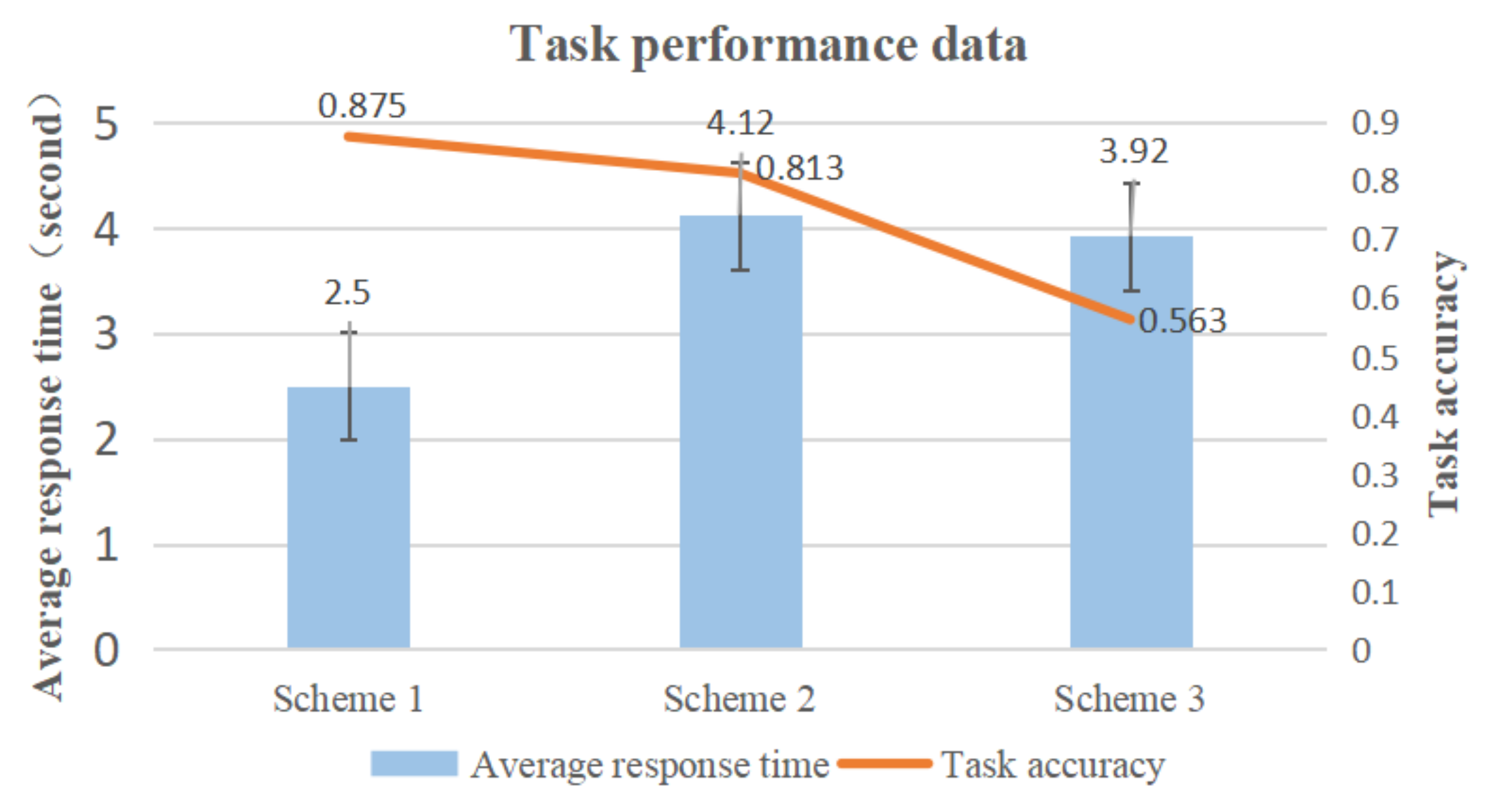

5.4. Task Response Time and Task Accuracy

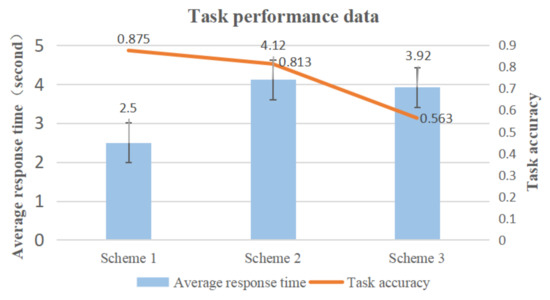

Task response time and the accuracy of identifying dangerous conditions are important factors reflecting task performance, while task performance, to some extent, reflects the effects of the HMI design. According to the statistics (Figure 10), the values of task accuracy of the three schemes were 87.5% (Scheme 1), 81.3% (Scheme 2), and 56.3% (Scheme 3), respectively, indicating that the subjects in Scheme 1 had the highest accuracy in identifying potentially dangerous conditions. In Scheme 3, most of the subjects thought that the danger happened earlier, and it was found, from the follow-up interview, that the subjects could not accurately perceive the change in hazard because the color of the icon of a car representing the current task status on the interface was always red.
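Because each subject’s stage-2 judgment was scored as 0 or 1, task accuracy for a scheme is the percentage of the 16 subjects who scored 1. The sketch below computes it with round-half-up to one decimal place. The reported values are consistent with 14, 13, and 9 correct judgments out of 16 (87.5%, 81.25% ≈ 81.3%, and 56.25% ≈ 56.3%); these counts are inferred from the percentages rather than reported directly. The function name is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP


def accuracy_percent(scores):
    """scores: one 0/1 stage-2 judgment per subject. Returns % rounded half-up to 0.1."""
    pct = Decimal(100 * sum(scores)) / Decimal(len(scores))
    return float(pct.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP))


for n_correct in (14, 13, 9):
    print(n_correct, accuracy_percent([1] * n_correct + [0] * (16 - n_correct)))
```

`Decimal` is used because Python’s built-in `round` rounds exact halves to even, so `round(81.25, 1)` gives 81.2 rather than the 81.3 reported in the text.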

Figure 10.

Average reaction time and correct rate results.

The average task response time was 2.5 s for Scheme 1, 4.12 s for Scheme 2, and 3.91 s for Scheme 3, so subjects responded faster with Scheme 1 than with Schemes 2 and 3. In other words, subjects using Scheme 1 made more rapid and effective judgments about approaching vehicles based on their observations. A one-way ANOVA on task response time (Table 5) showed a significant difference among the three schemes (F(2, 45) = 24.217, p < 0.001, effect size f = 0.46). Post hoc tests (Tukey’s Honestly Significant Difference) revealed that the response time of Scheme 1 was significantly shorter than those of Schemes 2 and 3 (p < 0.001), with no significant difference between Schemes 2 and 3 (p = 0.694 > 0.05).

Table 5.

The results of the analysis of variance.

5.5. Interview

The interview responses were coded, analyzed, and summarized by two researchers, with an inter-rater agreement of 82.5%, which meets the agreement requirement [37]. The main conclusions are shown in Table 6, together with the proportion of all subjects who mentioned each point. All subjects indicated that they noticed the differences between the schemes, and most (12/16) expressed a stronger subjective preference for Scheme 1. They believed that the yellow block element (Plan) helped them anticipate cars coming from behind, and that the changing color of the other car (Current task status) made them more aware of their current danger level. In addition, 12/16 of the subjects believed that the system was more reliable when Scheme 1 was presented, resulting in higher subjective trust. Meanwhile, 3/16 of the subjects said that during automated driving they observed traffic through the rearview mirror instead of paying attention to the HMI, so they may have missed some information during the experiment. Such long-standing driving habits are not easy to change.
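The paper reports inter-rater agreement as a percentage without giving the formula. Assuming simple percent agreement, it is the share of coded segments to which both researchers assigned the same code. The sketch below computes that percentage and, for comparison, Cohen’s kappa, which corrects agreement for chance; both functions are hypothetical helpers, and the sample codes in the usage are illustrative, not the study’s data.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Percentage of segments on which the two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of segments")
    agree = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * agree / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement: (p_observed - p_expected) / (1 - p_expected)."""
    n = len(coder_a)
    p_observed = percent_agreement(coder_a, coder_b) / 100
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both coders use a single identical code")
    return (p_observed - p_expected) / (1 - p_expected)


a = ["prefers S1", "prefers S1", "mirror habit", "mirror habit"]
b = ["prefers S1", "mirror habit", "mirror habit", "mirror habit"]
print(percent_agreement(a, b), cohens_kappa(a, b))
```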

Table 6.

The results of interviews.

6. Discussion

The main purpose of this paper was to study how the interface design of vehicles with automated driving functions can better share SA with human drivers. We proposed four factors of the SSA interface by applying the AH analytical approach and drawing on expert experience, and we evaluated the resulting designs experimentally in terms of situation awareness, usability, and task performance. Overall, Scheme 1, containing all four SSA interface factors (human–machine state, context, plan, and current task status), performed best, followed by Scheme 2 with three factors, while Scheme 3, with only two factors, performed worst.

In terms of situation awareness, the total scores of the three schemes differed little, with Scheme 3 scoring slightly higher than the other two. However, the sub-dimension scores show that Scheme 3’s higher total came mainly from the “Perception” dimension; it did not perform better in the “Comprehension” and “Prediction” dimensions. In the interviews, the subjects reported that colors on the screen attracted their attention, and red in particular made them more likely to notice changes. Scheme 3 happened to be the scheme in which the red element appeared for the longest time, which may have affected perception. In addition, Scheme 1 received full marks in the “Prediction” dimension, meaning that all subjects correctly interpreted the yellow color block representing “Plan”. This suggests that in future interface design, a transparent presentation of the “Plan” element could help drivers better predict driving activity.

For usability, Scheme 1 generally scored higher than Schemes 2 and 3, especially on the “Satisfaction with support information” dimension. On the “Time required to complete tasks” and “Ease of task completion” dimensions, the scores of the three schemes were very close; although Scheme 2 scored slightly higher than Scheme 1, the difference was not significant. From an experimental-design perspective, this may be because the task was too simple to create time pressure or differences in perceived difficulty.
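The three usability dimensions named above correspond to the three items of Lewis’s After-Scenario Questionnaire (ASQ) [35], whose score is the mean of its item ratings. The sketch below is a minimal illustration under stated assumptions: each item is rated on a 7-point scale and coded so that higher values mean greater satisfaction, matching the text’s reading of higher scores as better. The original ASQ anchors may run in the opposite direction, so the coding should be checked before reusing this. The ratings are hypothetical.

```python
from statistics import mean

ASQ_ITEMS = (
    "Ease of task completion",
    "Time required to complete tasks",
    "Satisfaction with support information",
)


def asq_score(ratings):
    """Mean of the three ASQ item ratings (1-7; higher = better under the assumed coding)."""
    for item in ASQ_ITEMS:
        if item not in ratings:
            raise ValueError(f"missing rating for {item!r}")
        if not 1 <= ratings[item] <= 7:
            raise ValueError(f"rating for {item!r} is outside 1-7")
    return mean(ratings[item] for item in ASQ_ITEMS)


# Hypothetical ratings from one subject for one scheme.
example = {
    "Ease of task completion": 6,
    "Time required to complete tasks": 5,
    "Satisfaction with support information": 7,
}
print(asq_score(example))  # 6
```

A per-scheme usability score would then be the mean of these per-subject scores, while an item-level comparison like the one in the text looks at each item’s mean separately.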

In terms of task performance, task reaction time and accuracy measure whether subjects could capture important prompt information quickly and accurately, and thus indicate how much each design scheme helped. The task reaction time for Scheme 1 was significantly shorter than for the other two schemes, possibly because the subjects observed the interface, understood the meaning of the yellow block element (Plan), and correctly predicted the approaching car. This suggests that presenting the “Plan” factor for potential hazards early on the interface may help drivers react quickly to driving situations. Task accuracy was highest for Scheme 1, followed by Scheme 2 and then Scheme 3. Scheme 1, which displayed two more of the four factors than Scheme 3, achieved the highest task accuracy. This suggests that adding the “Current task status” and “Plan” factors to the interface could help drivers make more accurate judgments about current driving activities, such as the future safety status of the vehicle.
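Task reaction time and accuracy can be summarized per scheme as in the sketch below. The `Trial` record, the assumption that reaction time runs from the hazard cue to the subject’s response, and the sample trials are all hypothetical; the section does not describe the exact timing events or the statistical test behind the reported significant difference, so this computes descriptive statistics only.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    scheme: str
    reaction_time_s: float  # assumed: seconds from hazard cue to the subject's response
    correct: bool           # whether the subject's judgment was correct


def summarize(trials):
    """Mean reaction time and accuracy (share of correct trials) for each scheme."""
    by_scheme = {}
    for t in trials:
        by_scheme.setdefault(t.scheme, []).append(t)
    return {
        scheme: {
            "mean_rt_s": mean(t.reaction_time_s for t in ts),
            "accuracy": sum(t.correct for t in ts) / len(ts),
        }
        for scheme, ts in by_scheme.items()
    }


# Invented trials for two schemes.
trials = [
    Trial("Scheme 1", 1.25, True),
    Trial("Scheme 1", 1.75, True),
    Trial("Scheme 3", 2.0, True),
    Trial("Scheme 3", 2.5, False),
]
print(summarize(trials))
# {'Scheme 1': {'mean_rt_s': 1.5, 'accuracy': 1.0}, 'Scheme 3': {'mean_rt_s': 2.25, 'accuracy': 0.5}}
```

Whether a difference in mean reaction time is significant would still require an appropriate inferential test on per-subject values, which this sketch deliberately leaves out.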

Interviews and eye-tracking heat maps were used to help interpret the overall experimental results. The interviews captured some of the subjects’ subjective impressions. Most participants agreed that Scheme 1 gave them a better prompt about the car approaching from behind. However, some mentioned that, out of long-standing driving habit, they checked the rearview mirror and may have missed prompt information on the center control interface. Some subjects also found the yellow block not salient enough. This reminds us that designers should consider users’ past experience and, when displaying important information, make appropriate use of color, shape, motion effects, and similar cues so that it is more easily perceived, for example by using more eye-catching colors or animating icons on the interface, while limiting the duration of such effects to avoid meaningless visual occupancy. The eye-movement heat maps showed the main areas on which subjects’ gaze concentrated. Subjects in Scheme 1 not only attended fully to the central control interface but also checked road conditions in the rearview mirror, which was closer to the observation pattern expected in the experiment.
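Heat maps visualize where fixations accumulate; a related quantitative summary is the share of fixation time falling in each area of interest (AOI), such as the central control interface and the rearview mirror. The sketch below is a hypothetical illustration of that summary, not the authors’ analysis: the AOI names, the rectangle coordinates, and the fixations are invented, and when AOIs overlap the first one that contains a fixation claims it.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float            # gaze position in screen pixels
    y: float
    duration_ms: float


# Hypothetical AOIs as (x_min, y_min, x_max, y_max); the first match wins on overlap.
AOIS = {
    "center_console": (800, 600, 1100, 800),
    "rearview_mirror": (850, 50, 1050, 150),
    "road": (0, 150, 1920, 600),
}


def dwell_shares(fixations, aois=AOIS):
    """Share of total fixation time spent in each AOI, plus 'other'."""
    totals = {name: 0.0 for name in aois}
    totals["other"] = 0.0
    for f in fixations:
        for name, (x0, y0, x1, y1) in aois.items():
            if x0 <= f.x <= x1 and y0 <= f.y <= y1:
                totals[name] += f.duration_ms
                break
        else:  # no AOI contained the fixation
            totals["other"] += f.duration_ms
    grand_total = sum(totals.values())
    return {name: (t / grand_total if grand_total else 0.0) for name, t in totals.items()}


fixations = [Fixation(950, 700, 400), Fixation(900, 100, 200), Fixation(500, 300, 400)]
print(dwell_shares(fixations))
# {'center_console': 0.4, 'rearview_mirror': 0.2, 'road': 0.4, 'other': 0.0}
```

A non-zero share for both the console and the mirror AOIs is the kind of pattern the text describes for Scheme 1, although the real comparison would depend on the study’s own AOI definitions.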

This study was conducted entirely on a driving simulator, which is easy to operate, safe, and time-efficient. However, the three front screens that simulated the external road environment were not large enough to cover the subjects’ full visual field, which reduced the realism of driving. In the future, we will consider increasing the screen size or using a wraparound screen to create a more immersive driving experience. In addition, the “Plan” factor appeared only in Stage 1 of the experimental design and was not visually salient, which may have affected the evaluation results for Scheme 1. In the future, we will consider presenting the “Plan” factor in more stages where appropriate, for example by showing the vehicle’s predicted route on the interface from Stage 2 to Stage 4. We will also consider extending the study to other driving scenarios, such as lane changing and overtaking, to evaluate the design value of the four SSA interface factors in more typical driving situations.

7. Conclusions

Based on the SSA theoretical background and the AH analysis method, we proposed four interface design factors to enhance human–machine SSA. The experiment showed that the “Plan” and “Current task status” factors contribute to the prediction level of SA, usability, and task performance in the human–machine co-driving scenario of automated driving. In addition, the interview statements suggested that the interface containing all four factors had a positive effect on subjects’ subjective trust in the automated system.

For future interface design in SAE L2–L3 automated driving scenarios, we first recommend dividing each scenario into phases, such as pre-activity, in-activity, and post-activity (or finer-grained phases), in order to analyze the information needs of the interface during each phase. Next, the interface should contain the “human–machine state” and “context” factors, such as vehicle speed, the assisted-driving status icon, and the positions of the vehicles ahead and behind, because this is basic information for driving. More importantly, we recommend including the “Plan” and “Current task status” factors in the interface, which will help the driver better predict the driving situation. However, not every phase needs all four factors; they should be planned and laid out according to the information needs of the actual scenario. For scenarios with potential hazards, such as lane changing, overtaking, and intersections, we recommend displaying the “Plan” factor on the interface early in the scenario, which will help the driver become aware of the hazardous situation sooner and avoid the risks.
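The phase-based recommendation above can be expressed as a simple display plan that maps each scenario phase to the factors shown, plus a check for two of the rules stated in the text: the basic “human–machine state” and “context” factors appear in the interface, and “Plan” is shown in the earliest phase of a hazardous scenario. The `Factor` enum, the `check_plan` helper, and the lane-change plan are hypothetical illustrations, not a specification from this study.

```python
from enum import Enum


class Factor(Enum):
    HUMAN_MACHINE_STATE = "human-machine state"
    CONTEXT = "context"
    CURRENT_TASK_STATUS = "current task status"
    PLAN = "plan"


PHASES = ("pre-activity", "in-activity", "post-activity")

# Hypothetical plan for a lane-change scenario; the factor-to-phase assignment is illustrative.
lane_change_plan = {
    "pre-activity": {Factor.HUMAN_MACHINE_STATE, Factor.CONTEXT, Factor.PLAN},
    "in-activity": {Factor.HUMAN_MACHINE_STATE, Factor.CONTEXT, Factor.CURRENT_TASK_STATUS},
    "post-activity": {Factor.HUMAN_MACHINE_STATE, Factor.CONTEXT},
}


def check_plan(plan, hazardous):
    """Return a list of rule violations for a phase-to-factor display plan."""
    problems = []
    shown_anywhere = set().union(*plan.values())
    for basic in (Factor.HUMAN_MACHINE_STATE, Factor.CONTEXT):
        if basic not in shown_anywhere:
            problems.append(f"basic factor '{basic.value}' is never shown")
    # Hazardous scenarios should reveal the plan in the earliest phase.
    if hazardous and Factor.PLAN not in plan.get(PHASES[0], set()):
        problems.append("hazardous scenario: 'plan' is not shown in the earliest phase")
    return problems


print(check_plan(lane_change_plan, hazardous=True))  # []
```

Not requiring every factor in every phase mirrors the text’s point that the four factors should be laid out according to each phase’s information needs rather than shown all the time.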

Author Contributions

Conceptualization, F.Y. and X.Y.; methodology, X.Y.; software, W.C.; validation, X.Y., J.Z. and W.C.; formal analysis, X.Y. and J.Z.; investigation, X.Y.; writing—original draft preparation, X.Y.; writing—review and editing, W.C.; visualization, X.Y.; supervision, F.Y.; project administration, F.Y.; funding acquisition, F.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by CES-Kingfar Excellent Young Scholar Joint Research Funding (No. 202002JG26); Association of Fundamental Computing Education in Chinese Universities (2020-AFCEC-087); Association of Fundamental Computing Education in Chinese Universities (2020-AFCEC-088); China Scholarship Council Foundation (2020-1509); Tongji University Excellent Experimental Teaching Program (0600104096); Shenzhen Collaborative Innovation Project: International Science and Technology Cooperation (No. GHZ20190823164803756); Shenzhen Basic Research Program for Shenzhen Virtual University Park (2021Szvup175). Intelligent cockpit from the 2022 Intelligent New Energy Vehicle Collaborative Innovation Center, Tongji University.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zong, C.F.; Dai, C.H.; Zhang, D. Human-machine Interaction Technology of Intelligent Vehicles: Current Development Trends and Future Directions. China J. Highw. Transp. 2021, 34, 214–237. [Google Scholar] [CrossRef]

- Hancock, P.A. Imposing limits on autonomous systems. Ergonomics 2017, 60, 284–291. [Google Scholar] [CrossRef] [PubMed]

- J3016_202104; Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. SAE International: Warrendale, PA, USA, 2021.

- Dixon, L. Autonowashing: The Greenwashing of Vehicle Automation. Transp. Res. Interdiscip. Perspect. 2020, 5, 100113. [Google Scholar] [CrossRef]

- Pokam, R.; Debernard, S.; Chauvin, C.; Langlois, S. Principles of transparency for autonomous vehicles: First results of an experiment with an augmented reality human–machine interface. Cognit. Technol. Work 2019, 21, 643–656. [Google Scholar] [CrossRef]

- Walch, M.; Mühl, K.; Kraus, J.; Stoll, T.; Baumann, M.; Weber, M. From Car-Driver-Handovers to Cooperative Interfaces: Visions for Driver–Vehicle Interaction in Automated Driving. In Automotive User Interfaces; Human–Computer Interaction Series; Meixner, G., Müller, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Endsley, M.R. Situation Awareness Global Assessment Technique (SAGAT). In Proceedings of the National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 23–27 May 1988; pp. 789–795. [Google Scholar]

- Endsley, M.R. Toward a theory of situation awareness in dynamic systems. Hum. Fact. J. Hum. Fact. Ergon. Soc. 1995, 37, 32–64. [Google Scholar] [CrossRef]

- Shi, Y.S.; Huang, W.F.; Tian, Z.Q. Team Situation Awareness: The Concept, Models and Measurements. Space Med. Med. Eng. 2017, 30, 463–468. [Google Scholar]

- Ma, R.; Kaber, D.B. Situation awareness and driving performance in a simulated navigation task. Ergonomics 2007, 50, 1351–1364. [Google Scholar] [CrossRef]

- Burke, J.L.; Murphy, R.R. Situation Awareness and Task Performance in Robot-Assisted Technical Search: Bujold Goes to Bridgeport; University of South Florida: Tampa, FL, USA, 2007. [Google Scholar]

- Merat, N.; Seppelt, B.; Louw, T.; Engström, J.; Lee, J.D.; Johansson, E.; Green, C.A.; Katazaki, S.; Monk, C.; Itoh, M.; et al. The “Out-of-the-Loop” concept in automated driving: Proposed definition, measures and implications. Cognit. Technol. Work 2019, 21, 87–98. [Google Scholar] [CrossRef]

- Louw, T.; Madigan, R.; Carsten, O.M.J.; Merat, N. Were they in the loop during automated driving? Links between visual attention and crash potential. Inj. Prev. 2017, 23, 281–286. [Google Scholar] [CrossRef]

- Louw, T.; Merat, N. Are you in the loop? Using gaze dispersion to understand driver visual attention during vehicle automation. Transp. Res. Part C Emerg. Technol. 2017, 76, 35–50. [Google Scholar] [CrossRef]

- Endsley, M.R.; Jones, W.M. A model of inter and intra team situation awareness: Implications for design, training and measurement. New trends in cooperative activities: Understanding system dynamics in complex environments. Hum. Fact. Ergon. Soc. 2001, 7, 46–47. [Google Scholar]

- Salas, E.; Prince, C.; Baker, D.P.; Shrestha, L. Situation Awareness in Team Performance: Implications for Measurement and Training. Hum. Fact. 1995, 37, 1123–1136. [Google Scholar] [CrossRef]

- Valaker, S.; Hærem, T.; Bakken, B.T. Connecting the dots in counterterrorism: The consequences of communication setting for shared situation awareness and team performance. J. Conting. Crisis Manag. 2018, 26, 425–439. [Google Scholar] [CrossRef]

- Lu, Z.; Coster, X.; de Winter, J. How much time do drivers need to obtain situation awareness? A laboratory-based study of automated driving. Appl. Ergon. 2017, 60, 293–304. [Google Scholar] [CrossRef]

- McDonald, A.D.; Alambeigi, H.; Engström, J.; Markkula, G.; Vogelpohl, T.; Dunne, J.; Yuma, N. Toward computational simulations of behavior during automated driving takeovers: A review of the empirical and modeling literatures. Hum. Fact. 2019, 61, 642–688. [Google Scholar] [CrossRef]

- Baumann, M.; Krems, J.F. Situation Awareness and Driving: A Cognitive Model. In Modelling Driver Behaviour in Automotive Environments; Cacciabue, P.C., Ed.; Springer: London, UK, 2007. [Google Scholar]

- Fisher, D.L.; Horrey, W.J.; Lee, J.D.; Regan, M.A. (Eds.) Handbook of Human Factors for Automated, Connected, and Intelligent Vehicles, 1st ed.; CRC Press: Boca Raton, FL, USA, 2020; Chapter 7. [Google Scholar]

- Park, D.; Yoon, W.C.; Lee, U. Cognitive States Matter: Design Guidelines for Driving Situation Awareness in Smart Vehicles. Sensors 2020, 20, 2978. [Google Scholar] [CrossRef]

- Mahajan, K.; Large, D.R.; Burnett, G.; Velaga, N.R. Exploring the benefits of conversing with a digital voice assistant during automated driving: A parametric duration model of takeover time. Transp. Res. Part F Traffic Psychol. Behav. 2021, 80, 104–126. [Google Scholar] [CrossRef]

- Ho, C.; Spence, C. The Multisensory Driver: Implications for Ergonomic Car Interface Design, 1st ed.; CRC Press: Boca Raton, FL, USA, 2008. [Google Scholar]

- Debernard, S.; Chauvin, C.; Pokam, R.; Langlois, S. Designing Human-Machine Interface for Autonomous Vehicles. IFAC-PapersOnLine 2016, 49, 609–614. [Google Scholar] [CrossRef]

- Xue, J.; Wang, F.Y. Research on the Relationship between Drivers’ Visual Characteristics and Driving Safety. In Proceedings of the 4th China Intelligent Transportation Conference, Beijing, China, 12–15 October 2008; pp. 488–493. [Google Scholar]

- Wang, C.; Weisswange, T.H.; Krüger, M. Designing for Prediction-Level Collaboration Between a Human Driver and an Automated Driving System. In AutomotiveUI ’21: Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Leeds, UK, 9–14 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 213–216. [Google Scholar]

- Guo, C.; Sentouh, C.; Popieul, J.-C.; Haué, J.-B.; Langlois, S.; Loeillet, J.-J.; Soualmi, B.; That, T.N. Cooperation between driver and automated driving system: Implementation and evaluation. Transp. Res. Part F Traffic Psychol. Behav. 2019, 61, 314–325. [Google Scholar] [CrossRef]

- Zimmermann, M.; Bengler, K. A multimodal interaction concept for cooperative driving. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast, QLD, Australia, 23–26 June 2013; pp. 1285–1290. [Google Scholar]

- Kraft, A.K.; Maag, C.; Baumann, M. Comparing dynamic and static illustration of an HMI for cooperative driving. Accid. Anal. Prev. 2020, 144, 105682. [Google Scholar] [CrossRef]

- Stanton, N.A.; Salmon, P.M.; Walker, G.H.; Jenkins, D.P. Cognitive Work Analysis: Applications, Extensions and Future Directions; CRC Press: Boca Raton, FL, USA, 2017; pp. 8–19. [Google Scholar]

- Khurana, A.; Alamzadeh, P.; Chilana, P.K. ChatrEx: Designing Explainable Chatbot Interfaces for Enhancing Usefulness, Transparency, and Trust. In Proceedings of the 2021 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), St. Louis, MO, USA, 10–13 October 2021; pp. 1–11. [Google Scholar]

- Schneider, T.; Hois, J.; Rosenstein, A.; Ghellal, S.; Theofanou-Fülbier, D.; Gerlicher, A.R.S. ExplAIn Yourself! Transparency for Positive UX in Autonomous Driving. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ’21), Yokohama, Japan, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021; Article 161, pp. 1–12. [Google Scholar]

- Endsley, M.R. Direct Measurement of Situation Awareness: Validity and Use of SAGAT. In Situation Awareness Analysis and Measurement; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 2000; pp. 147–173. [Google Scholar]

- Lewis, J.R. Psychometric evaluation of an after-scenario questionnaire for computer usability studies. ACM SIGCHI Bull. 1991, 23, 78–81. [Google Scholar] [CrossRef]

- Sauro, J.; Lewis, J.R. Chapter 8—Standardized Usability Questionnaires, Quantifying the User Experience, 2nd ed.; Morgan Kaufmann: Burlington, MA, USA, 2016; pp. 185–248. [Google Scholar]

- Cohen, J. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).